How we built an autopilot for a service station

Hi, Habr! I work at a small Berlin startup that develops autopilots for cars. We are finishing a project for the service stations of a major German automaker, and I would like to tell you about it: how we did it, what difficulties we ran into and what we learned. In this part I will cover the perception module and say a little about the architecture of the solution as a whole. We may cover the remaining modules in later parts. I would be very glad to get feedback and an outside view of our approach.

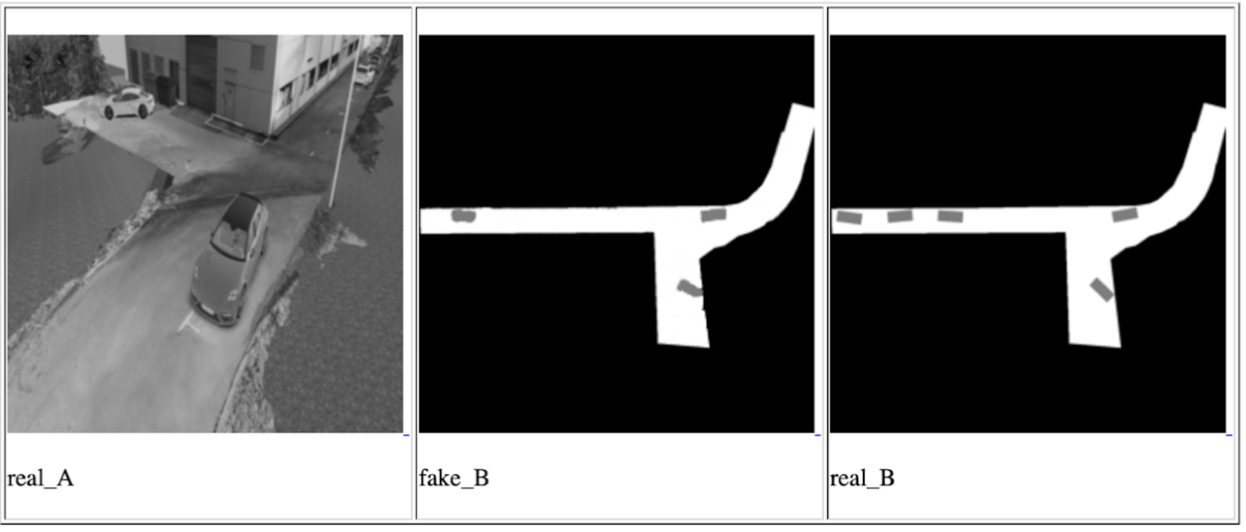

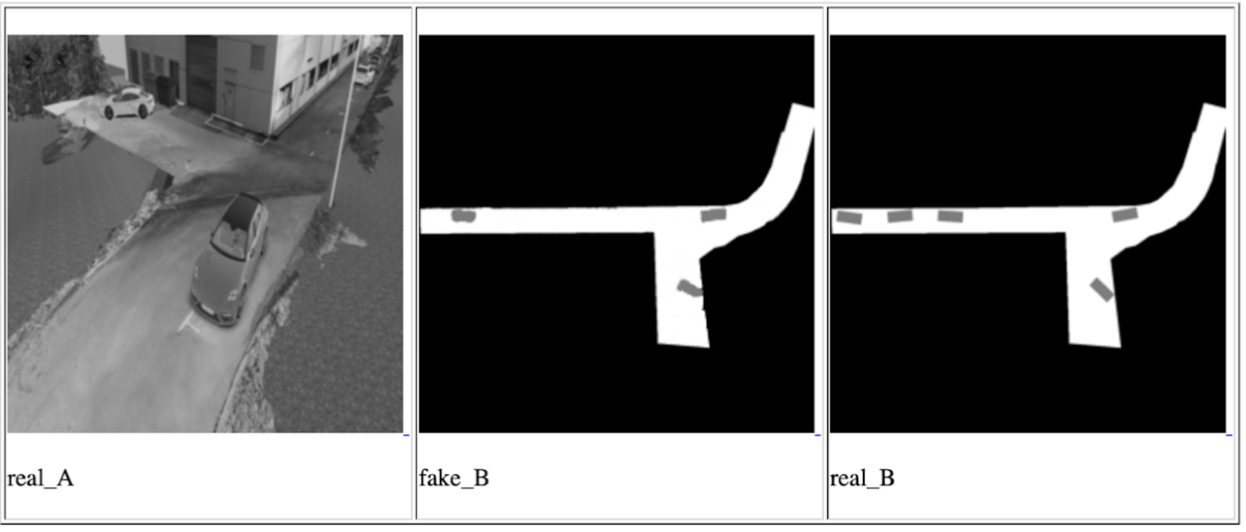

The customer's press release about the project can be found here.

To begin with, I will explain why the automaker turned to us instead of doing the project in-house. Large German corporations find it hard to change their processes, and the way cars are developed rarely suits software: iterations are long and require careful planning. It seems to me that German automakers understand this, which is why you see startups that were founded by them but operate as independent companies (for example, AID from Audi and Zenuity from Volvo). Other automakers organize events such as Startup Autobahn, where they look for potential contractors and new ideas. They can order a product or a prototype and get a finished result in a short time. This can turn out faster than trying to do the same thing themselves, and it costs no more than in-house development. How hard it is to change processes is well illustrated by the number of approvals needed before you can start testing an autopilot-driven car with customers: consent to film people (even though we do not store the data, and the video stream is used only anonymously, without identifying specific people), consent to film the premises, the consent of the trade union and the works council to test these technologies, the consent of the security department, the consent of the IT department, and that is not the whole list.

Task

In the current project, the customer wants to find out whether vehicles in service centers can be controlled with the help of “AI”. The user scenario is as follows:

- A technician wants to start working on a car that is parked somewhere in the lot outside the test area.

- He picks the car on a tablet, selects a service bay, and clicks “Call in”.

- The car drives in and stops at the end point (a lift, a ramp, or something else).

- When the technician finishes working on the car, he presses a button on the tablet; the car drives out and parks in a free space outside.

Constraints: not all cars have cameras, and where they do, we have no access to them. The only on-board data we can access is sonar and odometry.

Sonars and odometry

Sonars are distance sensors mounted around the car; they often look like small round dots. They let you estimate the distance to an object, but only at close range and with low accuracy. Odometry is data on the car's actual speed and direction of travel. Knowing this data and the initial position, you can determine the car's current position quite accurately.
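To illustrate how a position can be recovered from odometry and a known starting point, here is a minimal dead-reckoning sketch. It is not the project's code: the `Pose` type, the `dead_reckon` function and the assumption that odometry arrives as (speed, yaw rate) samples at a fixed time step are all hypothetical.

```python
import math
from dataclasses import dataclass


@dataclass
class Pose:
    x: float        # metres, in a fixed service-area frame (assumed)
    y: float        # metres
    heading: float  # radians, 0 = along +x, counter-clockwise positive


def dead_reckon(start: Pose, samples, dt: float) -> Pose:
    """Integrate (speed m/s, yaw rate rad/s) samples taken every dt seconds."""
    x, y, heading = start.x, start.y, start.heading
    for speed, yaw_rate in samples:
        # Move along the current heading, then update the heading.
        x += speed * math.cos(heading) * dt
        y += speed * math.sin(heading) * dt
        heading += yaw_rate * dt
    return Pose(x, y, heading)


# Ten samples of driving straight at 1 m/s for 0.1 s each: about 1 m along +x.
pose = dead_reckon(Pose(0.0, 0.0, 0.0), [(1.0, 0.0)] * 10, dt=0.1)
```

Errors in such an estimate accumulate over time, which is one reason an external source of position is still needed.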

Thus, the car has to be controlled using external sensors installed in the service area.

Solution

The architecture of the final product is as follows:

- In the service area we install external cameras; lidars and other such things are not needed (hello, Tesla).

- The camera data goes to Jetson TX2 boards (three cameras per board), which handle finding the car and preprocessing the camera images.

- Next, the camera data arrives at the central server, proudly called the Control Tower, where it is fed into the perception, tracking and path-planning modules. Based on this analysis, a decision is made about where the car should move next, and it is sent to the car.

- At this stage of the project, another Jetson TX2 is placed in the car. Using our driver, it connects to the Vector, which decodes the vehicle data and sends commands. This TX2 receives control commands from the central server and passes them on to the vehicle.

ROS is used at the infrastructure level.

Here is what happens after the technician selects a car and clicks “Call in”:

- The system looks for the car: we send the car a command to flash its hazard lights, which lets us determine which of the cars in the parking lot the technician selected. Early in development we also considered identifying the car by its license plate, but in some spots the plate of a parked car may not be visible. In addition, recognizing plates would require a much higher image resolution, which would hurt performance, and we use the same image both to find the car and to control it. This step happens once and is repeated only if tracking loses the car for some reason.

- As soon as the car is found, we pass the images from the cameras that see the car to the perception module, which segments the space and outputs the coordinates, orientation and size of all objects. This process runs continuously at about 30 frames per second. The subsequent processes are also continuous and run until the car arrives at the end point.

- The tracking module takes input from perception, sonar and odometry, keeps all detected objects in memory, merges them, refines their location, and predicts their position and speed.

- Next comes the path planner, which is split into two parts: a global path planner for the overall route and a local path planner for the local one (responsible for avoiding obstacles). It builds the path, decides where our car should go, and sends the command.

- The Jetson in the car receives the command and relays it to the car.

Driving out works the same way as driving in.
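The article does not say how the flashing car is actually recognized in the images. As one possible illustration of the first step, the sketch below assumes that each parked car already has a cropped image region per frame and that the mean brightness of that region has been recorded over a short window; the car whose brightness fluctuates most is taken to be the one flashing. The function name and the input format are hypothetical.

```python
from statistics import pstdev


def pick_blinking_car(brightness_by_candidate: dict) -> str:
    """Return the id of the candidate whose brightness varies most over time.

    brightness_by_candidate maps a candidate id to a list of mean brightness
    values, one per frame (an assumed, simplified input).
    """
    if not brightness_by_candidate:
        raise ValueError("no candidates to choose from")
    return max(
        brightness_by_candidate,
        key=lambda cid: pstdev(brightness_by_candidate[cid]),
    )


# Hypothetical measurements: car_b alternates between dark and bright frames.
series = {
    "car_a": [52, 51, 53, 52, 52, 51],
    "car_b": [50, 180, 49, 182, 51, 179],
}
selected = pick_blinking_car(series)
```

A real system would also have to cope with changing daylight, reflections and other cars' indicators, which this sketch ignores.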

Perception

One of the main and, in my opinion, most interesting modules is perception. It describes the sensor data in a form that allows an accurate decision about movement. In our project, it outputs the coordinates, orientation and dimensions of all objects in the cameras' field of view. When designing this module, we decided to start with algorithms that analyze the image in a single pass. We tried:

- Disentangled VAE. A small modification of β-VAE allowed us to train the network so that the latent vectors stored the image information as a schematic top-down view.

- Conditional GAN (the best-known implementation is pix2pix). This network can be used to build maps. We also used it to build a schematic top-down view, feeding it data from one camera or from all cameras at once and expecting a schematic top-down view at the output.

One of the Conditional GAN iterations for a single camera, from left to right: input image, network prediction, expected result
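For readers unfamiliar with β-VAE, mentioned in the list above, here is a minimal sketch of the standard β-VAE objective for a single sample: a reconstruction term plus a KL term weighted by β. This is the textbook form, not the modified version the team used (the article does not describe the modification); the squared-error reconstruction term and the value `beta=4.0` are assumptions chosen for illustration.

```python
import math


def beta_vae_loss(x, x_recon, mu, log_var, beta=4.0):
    """Standard beta-VAE loss for one sample with a diagonal Gaussian posterior.

    x, x_recon  : flat lists of input and reconstructed values
    mu, log_var : mean and log-variance of q(z|x), one entry per latent dim
    """
    # Reconstruction error (squared error is an assumed choice).
    recon = sum((a - b) ** 2 for a, b in zip(x, x_recon))
    # Closed-form KL divergence between N(mu, sigma^2) and N(0, 1).
    kl = -0.5 * sum(
        1.0 + lv - m * m - math.exp(lv) for m, lv in zip(mu, log_var)
    )
    return recon + beta * kl
```

With β greater than 1, the KL term pushes the latent dimensions toward independence, which is what makes the representation "disentangled".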

The idea behind these approaches is that the final network can work out the location and orientation of all cars and other moving objects in view of the camera by looking at the input image once. The data about the objects is then stored in the latent vectors. The network was trained on data from a simulator that is an exact copy of the site where the demonstration will take place. We did get some results, but we decided not to use these methods for several reasons:

- In the time available, we did not manage to learn how to use the data from the latent vectors to describe the scene. The network output was always a picture: a top-down view with a schematic arrangement of objects. This is less accurate, and we feared the accuracy would not be enough to drive a car.

- The solution does not scale: for every new installation, and whenever the direction of some cameras has to be changed, you need to reconfigure the simulator and repeat the full training.

Nevertheless, it was interesting to explore what these approaches can do, and we will keep them in mind for future tasks.

After that, we approached the task from the other side: conventional object detection plus a network that determines the spatial position of the detected objects (for example, such or such). This option seemed the most accurate to us. Its only drawback is that it is slower than the approaches above, but it fits within our latency budget, since a vehicle's speed in the service area is no more than 5 km/h. The most interesting work on predicting the 3D position of an object seemed to us to be this one, which shows quite good results on KITTI. We built a similar network with some changes and wrote our own algorithm for determining the bounding box, or, to be more precise, for estimating the coordinates of the center of the object's projection onto the ground: to decide on the direction of motion we do not need the height of the objects. The network takes the image of the object and its type (car, pedestrian, ...) as input and outputs its size and orientation in space. The module then estimates the center of the projection and outputs, for every object, the center coordinates, the orientation and the dimensions (width and length).
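The per-object output described above (center, orientation, width and length) is enough to reconstruct an object's rectangular footprint on the ground. The sketch below shows one way to do that; the `GroundObject` type, its field names and the convention that the yaw angle is measured counter-clockwise from the x axis are assumptions, not the project's actual data format.

```python
import math
from dataclasses import dataclass


@dataclass
class GroundObject:
    kind: str      # e.g. "car" or "pedestrian"
    cx: float      # center of the ground projection, metres
    cy: float
    yaw: float     # orientation in radians, counter-clockwise from +x (assumed)
    length: float  # extent along the object's heading, metres
    width: float   # extent across the heading, metres


def footprint_corners(obj: GroundObject):
    """Return the four corners of the object's footprint in ground coordinates."""
    c, s = math.cos(obj.yaw), math.sin(obj.yaw)
    hl, hw = obj.length / 2.0, obj.width / 2.0
    # Corners in the object's own frame: front-left, front-right, rear-right, rear-left.
    local = [(hl, hw), (hl, -hw), (-hl, -hw), (-hl, hw)]
    # Rotate by yaw, then translate to the center.
    return [(obj.cx + lx * c - ly * s, obj.cy + lx * s + ly * c) for lx, ly in local]


car = GroundObject("car", cx=0.0, cy=0.0, yaw=0.0, length=4.0, width=2.0)
corners = footprint_corners(car)
```

A planner can then treat each footprint as a polygonal obstacle without needing the object's height.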

In the final product, each image is first run through the object detection network, then all detected objects are sent to the 3D network to predict orientation and size, after which we estimate the center of each object's projection and pass it on together with the orientation and size. A peculiarity of this method is that it depends very strongly on how accurate the bounding boxes from the object detection network are. For this reason, networks like YOLO did not suit us. RetinaNet seemed to us to offer the best balance of performance and bounding-box accuracy.
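The article does not state how bounding-box accuracy was measured. A common way to quantify how well a predicted box matches a reference box is intersection over union (IoU), sketched below for axis-aligned boxes given as `(x1, y1, x2, y2)` pixel coordinates; the function name and box format are assumptions.

```python
def iou(box_a, box_b):
    """Intersection over union of two axis-aligned boxes (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap; zero if the boxes do not intersect.
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


# A detection shifted by half its width overlaps the reference by one third.
score = iou((0, 0, 2, 2), (1, 0, 3, 2))
```

Because the 3D stage works from the 2D box, even a box that is loose by a few pixels can noticeably shift the estimated ground position.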

It is worth mentioning one piece of luck on this project: the ground is flat. Well, not as flat as in a certain well-known community, but there are no slopes on our site. This lets us use fixed monocular cameras to project objects into ground-plane coordinates without knowing the distance to the object. Future plans include introducing monocular depth prediction. There is a lot of work on this topic, for example one of the latest and very interesting ones, which we are currently trying out for future projects. Depth prediction would let us work not only on flat ground; it should also potentially improve the accuracy of obstacle detection, simplify the setup of new cameras and remove the need to label each object: it does not matter to us what kind of object it is if it is an obstacle.
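The flat-ground projection mentioned above can be sketched as a ray-plane intersection: a pixel defines a ray from the camera, and the point where that ray meets the plane z = 0 is the ground position. This is a minimal sketch assuming an ideal pinhole camera without lens distortion and a known, fixed camera pose; the function name and parameters are hypothetical and do not describe the project's calibration procedure.

```python
def pixel_to_ground(u, v, fx, fy, cx, cy, cam_to_world, cam_pos):
    """Project pixel (u, v) onto the ground plane z = 0.

    fx, fy, cx, cy : pinhole intrinsics in pixels
    cam_to_world   : 3x3 rotation (list of rows) from camera to world axes
    cam_pos        : camera position (x, y, z) in world coordinates, z > 0
    """
    # Direction of the viewing ray in camera coordinates.
    ray_cam = ((u - cx) / fx, (v - cy) / fy, 1.0)
    # Rotate the ray into world coordinates.
    ray_world = [
        sum(cam_to_world[i][j] * ray_cam[j] for j in range(3)) for i in range(3)
    ]
    if ray_world[2] >= 0:
        raise ValueError("the ray does not point toward the ground")
    # Scale the ray so that it reaches z = 0.
    t = -cam_pos[2] / ray_world[2]
    return (cam_pos[0] + t * ray_world[0], cam_pos[1] + t * ray_world[1])


# Hypothetical camera 5 m above the ground, looking straight down.
looking_down = [[1, 0, 0], [0, -1, 0], [0, 0, -1]]
point = pixel_to_ground(1640, 360, 1000, 1000, 640, 360, looking_down, (10, 20, 5))
```

The same calculation breaks down on slopes, where the plane z = 0 no longer matches the real surface; that is the limitation depth prediction is meant to remove.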

That's all. Thank you for reading; I will be happy to answer questions. As a bonus, I want to tell you about an unexpected negative effect: the autopilot does not care about the car's orientation; it makes no difference to it whether it drives forwards or backwards. The main thing is to drive optimally and not hit anyone. As a result, there is a high chance that the car will cover part of the route in reverse, especially in tight areas where high maneuverability is required. People, however, are used to cars mostly moving forwards and often expect the same from an autopilot. If a business person sees a car driving backwards instead of forwards, he may conclude that the product is not ready and contains bugs.

P.S. I am sorry that there are no images or videos from real testing, but I cannot publish them for legal reasons.

Source: https://habr.com/ru/post/457500/
