Automatic task assignment in Jira using ML

Hi, Habr! My name is Sasha and I am a backend developer. In my free time I study ML and have fun with the data of hh.ru.


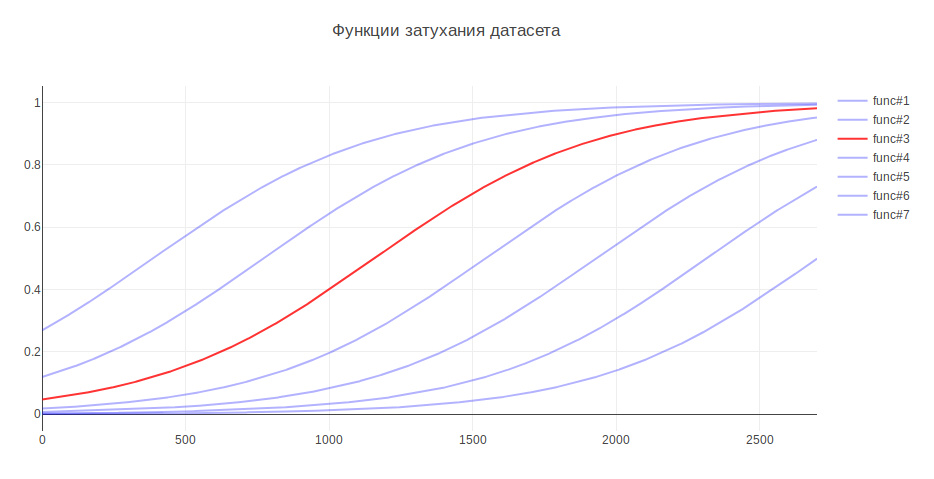

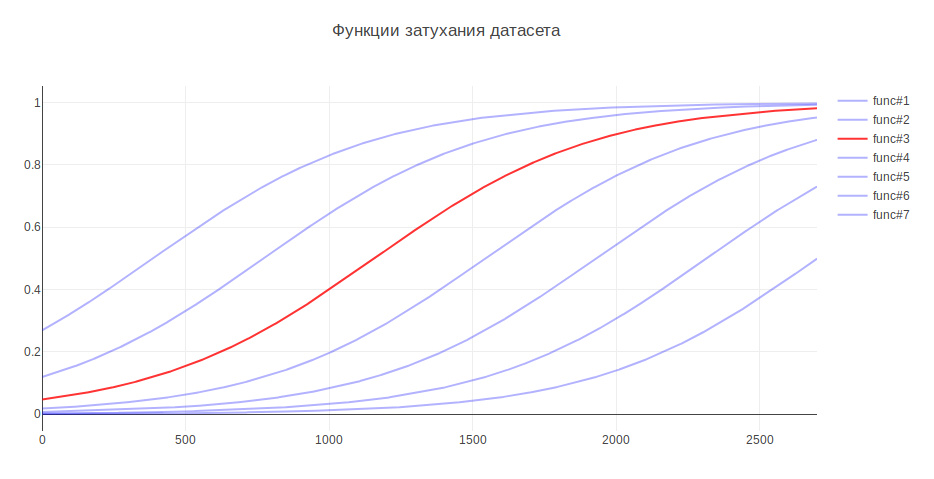


The features roughly reflect what the teams do.

That completes the model, and a program can now be built on top of it.

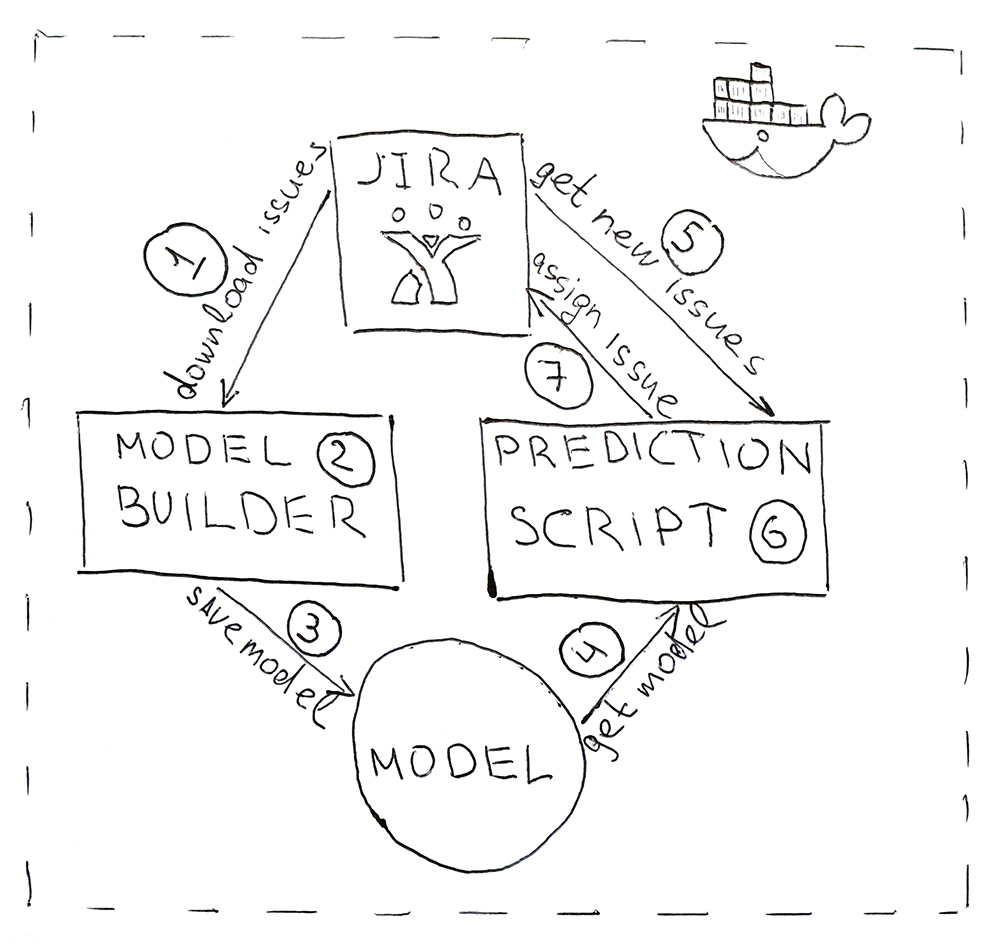

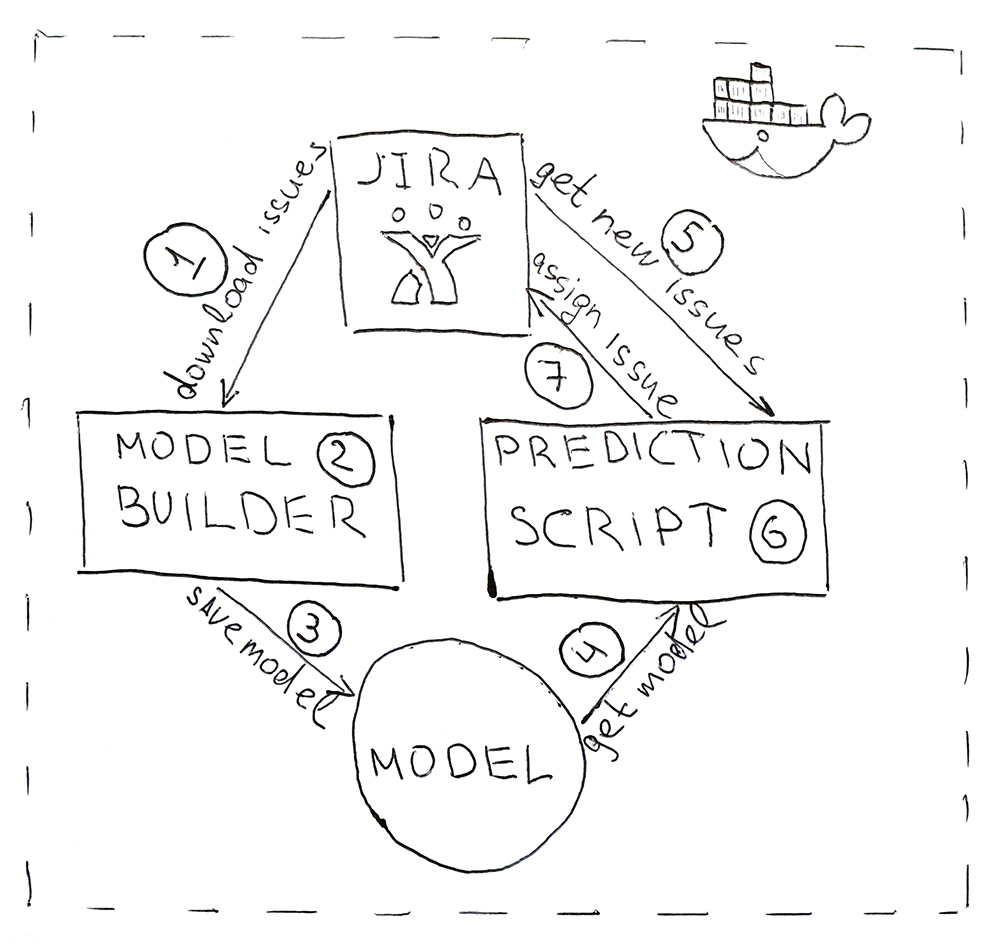

The program consists of two Python scripts. The first builds the model, and the second makes the predictions.

To make the program easy to deploy with the same library versions as in development, the scripts are packaged in a Docker container.

As a result, we automated a routine process. An accuracy of 76% is not very high, but in this case misses are not critical. Every task finds its owner, and most importantly, nobody has to be pulled away several times a day to dig into tasks and hunt for the responsible team. Everything works automatically! Hooray!

This article is about how we automated the routine process of assigning tasks to testers using machine learning.

At hh.ru there is an internal service for which tasks are created in Jira (inside the company they are called HHS) whenever something does not work or works incorrectly. These tasks are then triaged by hand by the QA team lead, Alexey, who assigns each one to the team responsible for the faulty area. Lesha knows that boring work should be done by robots, so he asked me for help with the ML side.


The chart below shows the number of HHS tasks per month. The company is growing, and so is the number of tasks. They are mostly created during working hours, a few per day, and each one pulls someone away from their other work.

So we need to learn from historical data to determine which development team an HHS task belongs to. This is a multi-class classification problem.

Data

In machine learning, the most important thing is data quality, because the outcome depends on it. So any machine learning project should begin with examining the data. Since the beginning of 2015 we have accumulated about 7,000 tasks that contain the following useful information:

- Summary - title, short description

- Description - full description of the problem

- Labels - a list of tags associated with the problem

- Reporter - the name of the person who created the HHS task. This feature is useful because each person works with a limited set of functionality.

- Created - date of creation

- Assignee - the one to whom the task is assigned. From this feature, the target variable will be generated.

Let's start with the target variable. Each team has its own areas of responsibility; sometimes they overlap, and sometimes one team's development work overlaps with another's. The solution rests on the assumption that the assignee left on the task at the moment it was closed is the person responsible for resolving it. But we need to predict a team, not a specific person. Fortunately, Jira stores the composition of every team, so people can be mapped to teams. Determining the team from the person raises several problems, though:

- Not all HHS tasks are about technical problems, and we only care about tasks that can be assigned to a development team. So tasks whose assignee is not from the technical department have to be thrown out.

- Sometimes teams cease to exist. Their tasks are also removed from the training set.

- Unfortunately, people do not stay at the company forever, and sometimes they move from team to team. Fortunately, we managed to get the history of changes in the composition of every team. Given the HHS creation date and the assignee, we can find out which team was handling the task at that time.
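The last point, resolving a person to a team as of a given date, can be sketched roughly as follows. This is only an illustration: the `MEMBERSHIP` table, its layout, and the names in it are hypothetical, since the article does not show how the team history is stored.

```python
from datetime import date

# Hypothetical membership history: (person, team, first day, last day or None if current).
MEMBERSHIP = [
    ("alice", "Billing", date(2015, 1, 1), date(2016, 6, 30)),
    ("alice", "Search", date(2016, 7, 1), None),
    ("bob", "Android", date(2015, 3, 1), None),
]


def team_at(person, created):
    """Return the team the person belonged to on the given date, or None if unknown."""
    for name, team, start, end in MEMBERSHIP:
        if name == person and start <= created and (end is None or created <= end):
            return team
    return None
```

Tasks for which such a lookup returns nothing (for example, an assignee outside the technical department) would simply be dropped from the training set.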

After filtering out irrelevant data, the training sample shrank to about 4,900 tasks.

Let's look at the distribution of tasks between teams:

The tasks have to be distributed among 22 teams.

Features:

Summary and Description are text fields.

First, they need to be cleaned of unnecessary characters. For some problems it makes sense to keep symbols that carry information, for example + and # to tell C++ from C#, but in this case I decided to keep only letters and digits, since I did not find any place where other characters would be useful.
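A minimal sketch of this cleaning step, assuming a regular-expression approach; the function name and the lowercasing are my own assumptions, not something the article specifies.

```python
import re

# One or more characters that are neither letters nor digits.
# \W is Unicode-aware in Python 3, so Cyrillic letters are kept.
NOT_ALNUM = re.compile(r"[\W_]+")


def clean(text):
    """Lowercase the text and replace every non-alphanumeric run with one space."""
    return NOT_ALNUM.sub(" ", text.lower()).strip()
```

With this rule, "C++" and "C#" both collapse to "c", which is exactly the trade-off described above.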

Words need to be lemmatized. Lemmatization reduces a word to its lemma, the normal (dictionary) form, for example cats → cat. I also tried stemming, but quality was slightly higher with lemmatization. Stemming finds the stem of a word; the stem is determined by the algorithm (implementations differ) and is not necessarily a dictionary word. The point of both is to map different forms of the same word to each other. I used the Python wrapper for Yandex Mystem.

Then the text should be cleared of stop words, which carry no meaning of their own, for example "was", "me", "more". I usually take stop words from NLTK.

Another approach I try in text problems is splitting words into character n-grams. Take the Russian word "поиск" (search). Split into overlapping 3-character pieces, it yields "пои", "оис", "иск". This helps to capture additional relationships. Suppose the text also contains the word "искать" (to search). Lemmatization does not reduce "поиск" and "искать" to a common form, but the 3-character split exposes their common part, "иск".
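The split itself is a one-liner. The sketch below assumes overlapping windows and uses the Russian words поиск (search) and искать (to search) as the illustration; the function name is hypothetical.

```python
def char_ngrams(word, n=3):
    """Return all overlapping n-character substrings of the word."""
    return [word[i:i + n] for i in range(len(word) - n + 1)]


# The two words share no lemma, but they do share one trigram.
shared = set(char_ngrams("поиск")) & set(char_ngrams("искать"))
```

Words shorter than three characters produce no tokens at all, which may or may not be desirable depending on the data.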

I wrote two tokenizers. A tokenizer is a function that takes text as input and returns the list of tokens that make up the text. The first one extracts lemmatized words and numbers. The second extracts only lemmatized words and splits them into 3-character pieces, so its output is a list of three-character tokens.

The tokenizers are used in TfidfVectorizer, which converts text (and not only text) into a vector representation based on tf-idf. Its input is a list of strings, and its output is an M-by-N matrix, where M is the number of strings and N is the number of features. Each feature is a frequency statistic for a word in a document, where the frequency is penalized if the word occurs in many documents. With TfidfVectorizer's ngram_range parameter I also added bigrams and trigrams as features.
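To make the penalty concrete, here is a simplified pure-Python tf-idf. It is a sketch, not the library's implementation: it uses the smoothed idf ln((1 + n) / (1 + df)) + 1, which to my knowledge is scikit-learn's default, and it omits the row normalization the library applies afterwards. The toy documents are made up.

```python
import math
from collections import Counter


def tfidf(docs):
    """docs is a list of token lists; returns one {token: weight} dict per document."""
    n = len(docs)
    # Document frequency: in how many documents each token appears.
    df = Counter(token for doc in docs for token in set(doc))
    idf = {t: math.log((1 + n) / (1 + d)) + 1 for t, d in df.items()}
    return [{t: count * idf[t] for t, count in Counter(doc).items()} for doc in docs]


docs = [["site", "error"], ["site", "billing"], ["site", "billing", "billing"]]
weights = tfidf(docs)
```

Here "site" occurs in every document and gets the smallest possible idf, while the rarer "error" is weighted higher within the same document.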

I also tried word embeddings obtained with Word2vec as additional features. An embedding is a vector representation of a word. For each text I averaged the embeddings of all its words. This gave no improvement, so I dropped these features.

For Labels I used CountVectorizer. Its input is a string of tags per task, and its output is a matrix whose rows correspond to tasks and whose columns correspond to tags. Each cell holds the number of occurrences of the tag in the task; in my case that is 1 or 0.
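The shape of that output can be illustrated without the library. The sketch below assumes tags are separated by whitespace and uses made-up tag strings; it mimics the idea, not CountVectorizer's actual tokenization rules.

```python
def count_matrix(tag_strings):
    """Build a task-by-tag count matrix from whitespace-separated tag strings."""
    vocab = sorted({tag for s in tag_strings for tag in s.split()})
    index = {tag: j for j, tag in enumerate(vocab)}
    rows = []
    for s in tag_strings:
        row = [0] * len(vocab)
        for tag in s.split():
            row[index[tag]] += 1
        rows.append(row)
    return vocab, rows


vocab, matrix = count_matrix(["billing site", "android", "site"])
```

The returned `vocab` plays the role of the column names, so column j of the matrix always refers to `vocab[j]`.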

LabelBinarizer was a good fit for Reporter. It binarizes labels in a one-vs-all fashion. Each task has exactly one creator. LabelBinarizer takes the list of task creators as input and outputs a matrix whose rows are tasks and whose columns correspond to creator names. Each row thus has a 1 in the column of its creator and 0 everywhere else.

For Created, I compute the difference in days between the task's creation date and the current date.
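The same encoding written out by hand, as a rough illustration of what the binarizer produces; the reporter names are placeholders.

```python
def one_hot(values):
    """Return the sorted class list and one indicator row per input value."""
    classes = sorted(set(values))
    return classes, [[1 if value == c else 0 for c in classes] for value in values]


classes, matrix = one_hot(["name_2", "name_1", "name_2"])
```

Because every task has exactly one creator, every row sums to 1.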

The result was the following set of features:

- tf-idf for Summary in words and numbers (4855, 4593)

- tf-idf for Summary on three-character partitions (4855, 15518)

- tf-idf for Description in words and numbers (4855, 33297)

- tf-idf for Description on three-character partitions (4855, 75359)

- occurrence counts for Labels (4855, 505)

- binary features for Reporter (4855, 205)

- task lifetime (4855, 1)

All these features are combined into one large matrix of shape (4855, 129478), which is used for training.
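The final shape is easy to verify: stacking the blocks side by side keeps the row count and sums the column counts. The dictionary keys below are just descriptive labels I chose; the shapes are the ones listed above.

```python
# Shapes of the individual feature blocks, as listed in the article.
blocks = {
    "summary_words": (4855, 4593),
    "summary_trigrams": (4855, 15518),
    "description_words": (4855, 33297),
    "description_trigrams": (4855, 75359),
    "labels": (4855, 505),
    "reporter": (4855, 205),
    "task_lifetime": (4855, 1),
}

# Horizontal stacking requires every block to have the same number of rows.
row_counts = {rows for rows, _ in blocks.values()}
assert len(row_counts) == 1

total_shape = (row_counts.pop(), sum(cols for _, cols in blocks.values()))
```

The result is (4855, 129478), so there are roughly 27 features per training example.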

The names of the features deserve a separate mention. Some machine learning models can point out the features that matter most for recognizing a class, and it is worth taking advantage of that. TfidfVectorizer and CountVectorizer provide a get_feature_names method (get_feature_names_out in recent scikit-learn versions), and LabelBinarizer exposes a classes_ attribute; each returns the names in the same order as the columns of the corresponding matrix.

Prediction model selection

XGBoost very often gives good results, so I started with it. But I had generated a huge number of features, far more than the number of training examples, and in that situation XGBoost is likely to overfit. The result was mediocre. LogisticRegression copes well with high dimensionality, and it showed higher quality.

As an exercise, I also tried building a neural network model in TensorFlow, following an excellent tutorial, but it turned out worse than logistic regression.

Hyperparameter selection

I also experimented with XGBoost and TensorFlow hyperparameters, but I leave that outside this post, since neither surpassed the logistic regression result. For logistic regression I turned every knob available. In the end all parameters stayed at their defaults except two: solver='liblinear' and C=3.0.

Another parameter that can affect the result is the size of the training sample. I am dealing with historical data, and over a few years the history can change substantially, for example when responsibility for some area moves to another team. So recent data can be more useful, while old data can even lower quality. For this I came up with a heuristic: the older the data, the less it should contribute to training the model. Depending on its age, each data point is multiplied by a coefficient taken from a decay function. I generated several such functions and used the one that gave the biggest gain in testing.

This improved classification quality by 3%.
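The article does not say which decay function won, so the following is only one plausible candidate: an exponential decay with a hypothetical half-life of one year. Both the functional form and the `half_life_days` parameter are assumptions made for illustration.

```python
def age_weight(age_days, half_life_days=365.0):
    """Weight of a task that is age_days old; it halves every half_life_days."""
    return 0.5 ** (age_days / half_life_days)


# Weights for tasks created today, one year ago, and two years ago.
weights = [age_weight(age) for age in (0, 365, 730)]
```

Other candidates (linear, stepwise, and so on) can be compared in the same way, by evaluating each on the most recent data and keeping the best one.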

Quality control

In classification problems you need to decide what matters more: precision or recall. In my case a wrong prediction is no disaster: knowledge is shared well between teams, so the task will be passed on to the right team or to the QA lead. Besides, the algorithm does not err at random; it picks a team that is close to the problem. So I decided to go for 100% recall. As the quality metric I chose accuracy, the proportion of correct answers, which was 76% for the final model.

For validation I first used cross-validation: the sample is split into N parts, the model is trained on all but one part and evaluated on the remaining one, and this is repeated N times until every part has served as the test set. The results are then averaged. But this approach did not fit my case, because it shuffles the order of the data, and as we already know, quality depends on how fresh the data is. So I always trained on old data and validated on recent data.
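A time-ordered split of this kind can be sketched as below. The 20% test share and the task dictionary layout are assumptions for the example; the article does not give the actual proportions.

```python
from datetime import date


def time_split(tasks, test_fraction=0.2):
    """Sort tasks by creation date and hold out the most recent ones for validation."""
    ordered = sorted(tasks, key=lambda task: task["created"])
    n_test = max(1, round(len(ordered) * test_fraction))
    return ordered[:-n_test], ordered[-n_test:]


# Ten toy tasks, deliberately listed newest first.
tasks = [{"id": i, "created": date(2018, 1, 10 - i)} for i in range(10)]
train, test = time_split(tasks)
```

Every task in `test` is newer than every task in `train`, so the model is never evaluated on data older than what it was trained on.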

Let's see which teams the algorithm confuses most often:

Marketing and Pandora are in first place. This is not surprising, since the second team grew out of the first and took responsibility for a lot of functionality with it. If you look at the other teams, the reasons are likewise tied to the company's internal workings.

For comparison, let's look at naive baselines. If you assign the responsible team at random, the quality is about 5%; if you always pick the most common class, it is 29%.
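Both baselines follow from simple counting. The sketch assumes the random baseline picks uniformly among the 22 teams, which gives 1/22, about 4.5%; the label list is a toy example, not the real data.

```python
from collections import Counter


def uniform_random_accuracy(n_classes):
    """Expected accuracy when a class is picked uniformly at random."""
    return 1 / n_classes


def majority_accuracy(labels):
    """Accuracy of always predicting the most frequent class."""
    return Counter(labels).most_common(1)[0][1] / len(labels)


random_baseline = uniform_random_accuracy(22)
toy_majority = majority_accuracy(["Billing", "Billing", "Search", "Android"])
```

Against these numbers, 76% accuracy is a large improvement over both guessing strategies.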

The most significant signs

LogisticRegression returns a coefficient for every feature for each class. The larger the value, the more that feature contributes to that class.
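Given one class's row of coefficients and the matching feature names, picking the top features is a sort. The helper name and the numbers in the usage line are illustrative only.

```python
def top_features(coefs, names, k=3):
    """Return the k (name, coefficient) pairs with the largest coefficients."""
    ranked = sorted(zip(coefs, names), reverse=True)
    return [(name, weight) for weight, name in ranked[:k]]


# Toy coefficients for a single class.
top = top_features(
    [0.2, 3.55, -1.0, 1.77],
    ["sum_site", "lab_android", "rep_name_x", "sum_android"],
    k=2,
)
```

This only works if the name list is in the same order as the matrix columns, which is why the order of feature names matters.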

The top features are listed below under the spoiler. The prefixes indicate where each feature came from:

- sum - tf-idf for Summary in words and numbers

- sum2 - tf-idf for Summary on three-character splits

- desc - tf-idf for Description in words and numbers

- desc2 - tf-idf for Description on three-character splits

- lab - Labels field

- rep - Reporter field

Top features

A-Team: sum_site (1.28), lab_reviews_and_inviting (1.37), lab_rejects to the employer (1.07), lab_web (1.03), sum_work (1.04), sum_work_site (1.59), lab_hhs (1.19), lab_feedback (1.06, a repentant (1.59), lab_hhs (1.19), lab_feedback (1.06,) (1.16), sum_window (1.13), sum_to break (1.04), rep_name_1 (1.22), lab_otkliki_coeker (1.0), lab_site (0.92)

API: lab_accounting__account (1.12), sum_comment_surface (0.94), rep_name_sword (0.9), rep_name_3 (0.83), rep_name_4 (0.91) ), sum_view (0.91), desc_comment (1.02), rep_name_6 (0.85), desc_ summary (0.86), sum_api (1.01)

Android: sum_android (1.77), lab_ios (1.66), sum_app (2.9), sum_hr_mobile (1.4), lab_android (3.55), sum_hr (1.36), lab_mobile_app (3.33), sum_mobile (1.4), rep_name_2 (1.34), sum2_ril (1.27), sum_app_android (1.28), sum2_pri_ril_lo (1.19), sum2_pri_ril (1.27), sum2_ilo_lozh (1.19), sum2_ilo_lozh_ozh (1.19)

Billing: rep_name_7 (3.88), desc_count (3.23), rep_name_8 (3.15), lab_billing_wtf (2.46), rep_name_9 (4.51), rep_name_10 (2.88), sum_count (3.16), lab_billing (2.41), rep_name_11 (2.27), lab_illing red ), sum_service (2.33), lab_payment_services (1.92), sum_act (2.26), rep_name_12 (1.92), rep_name_13 (2.4)

Brandy: lab_totals_ of talents (2.17), rep_name_14 (1.87), rep_name_15 (3.36), lab_clickme (1.72), rep_name_16 (1.44), rep_name_17 (1.63), rep_name_18 (1.29), sum_page (1.24), sum_brand (1.JF),), rep, name_18 (1.29), sum_page (1.24), rep_name_17 (1.63), rep_name_18 (1.29) ), constructor sum (1.59), lab_brand.page (1.33), sum_description (1.23), sum_description_company (1.17), lab_article (1.15)

Clickme: desc_act (0.73), sum_adv_hh (0.65), sum_adv_hh_en (0.65), sum_hh (0.77), lab_hhs (1.27), lab_bs (1.91), rep_name_19 (1.17), rep_name_20 (1.29), rep_name_21 (rep), rep_name_19 (1.17), rep_name_20 (1.29), rep_name_21 (1.9), rep_name_19 (1.17) ), sum_advertising (0.67), sum_deposition (0.65), sum_adv (0.65), sum_hh_ua (0.64), sum_click_31 (0.64)

Marketing: lab_region (0.9), lab_tormozit_sayt (1.23), sum_rassylka (1.32), lab_menedzhery_vakansy (0.93), sum_kalendar (0.93), rep_name_22 (1.33), lab_oprosy (1.25), rep_name_6 (1.53), lab_proizvodstvennyy_kalendar (1.55), rep_name_23 (0.86), sum_andex (1.26), sum_distribution_vacancy (0.85), sum_distribution (0.85), sum_category (0.85), sum_error_transition (0.83)

Mercury: lab_services (1.76), sum_capcha (2.02), lab_siterer_services (1.89), lab_lawyers (2.1), lab_autorization_counter (1.68), lab_production (2.53), lab_reply_sum (2.21), reps, 1.77, i, rep, name_ rep, (rep. Name), rep, name, rep, name, resume (2.21), rep, name, rep, name_24_; ), sum_user (1.57), rep_name_26 (1.43), lab_moderation_ of vacancies (1.58), desc_password (1.39), rep_name_27 (1.36)

Mobile_site: sum_mobile_version (1.32), sum_version_site (1.26), lab_application (1.51), lab_statistics (1.32), sum_mobilny_version_say_site (1.25), lab_mobile_version (5.1), sum_version (1.41), rep_name_28 (cf.pdf), aus.eek. ), lab_jtb (1.07), rep_name_16 (1.12), rep_name_29 (1.05), sum_site (0.95), rep_name_30 (0.92)

TMS: rep_name_31 (1.39), lab_talantix (4.28), rep_name_32 (1.55), rep_name_33 (2.59), sum_vacancy_talantix (0.74), lab_search (0.57), lab_search (0.63), rep_name_34 (0.64), lab_lender (0.56), lab_search (0.63), rep_name_34 (0.64), lab_lender (0.56), lab_search (0.63), lab_search (0.63), rep_name_34 (0.64), 0.66) ), lab_tms (0.74), sum_otklik_hh (0.57), lab_mailing (0.64), sum_talantix (0.6), sum2_mpo (0.56)

Talantix: sum_system (0.86), rep_name_16 (1.37), sum_talantix (1.16), lab_mail (0.94), lab_xor (0.8), lab_talantix (3.19), rep_name_35 (1.07), rep_name_18 (1.33), lab_personal_dates (96), rep_name_35 (1.07), rep_name_18 (1.33), lab_personal_dates (96), rep_name_35 (1.07), rep_name_18 (1.33) ), sum_alantix (0.89), sum_because (0.78), lab_mail (0.77), sum_elect_set_view (0.73), rep_name_6 (0.72)

WebServices: sum_vacancy (1.36), desc_pattern (1.32), sum_archive (1.3), lab_writing_ templates (1.39), sum_phone_number_phone (1.44), rep_name_36 (1.28), lab_lawyers (2.1), lab_inviting (1.27), lab_ing_accord_lawers (1.27), lab_lawyers (2.1), lab_lawyers (2.17), lab_lawyers (2.1), lab_lawyers (2.13) ), lab_eleased_sum (1.2), lab_keye_wab, (1.22), sum_to find (1.18), sum_telephone (1.16), sum_folder (1.17)

iOS: sum_application (1.41), desc_application (1.13), lab_andriod (1.73), rep_name_37 (1.05), sum_ios (1.14), lab_mobile_app (1.88), lab_ios (4.55), rep_name_6 (1.41), rep_name_38 (1.35), mo_mobile_ ), sum_ mobile (0.98), rep_name_39 (0.74), sum_ summary_open (0.88), rep_name_40 (0.81), lab_duplication_vacancy (0.76)

Architecture: sum_statistics_otklik (1.1), rep_name_41 (1.4), lab_graphic_setting_displaces, o and_otclik_vakanii (1.04), lab_developing_vacancy (1.16), lab_quot (1.0), sum_special offer (1.02), rep_name_42 (1.33), rep_name, 1.0_, sum_special proposal (1.02), rep_name_42 (1.33), rep_name, 1.0_, sum_special proposal (1.02), rep_name_42 (1.33), rep_name, 1.0_, sum_special proposal (1.02), rep_name_42 (1.33), rep_name, 1.0_, sum_special proposal (1.02), rep_name_42 (1.33), rep_name, 1.0, _ ), rep_name_43 (1.09), sum_zavisat (0.83), sum_statistika (0.83), lab_otkliki_ employer (0.76), sum_500ka (0.74)

Bank Salary: lab_500 (1.18), lab_authorization (0.79), sum_500 (1.04), rep_name_44 (0.85), sum_500_site (1.03), lab_site (1.08), lab_visibility_subject (1.54), lab_specification (1.26), lab_specification_subject_subject (1.54), lab_profile (1.26), lab_setting_vizability_subject_ 1.9 sum_error (0.79), lab_post_ orders (1.33), rep_name_43 (0.74), sum_ie_11 (0.69), sum_500_error (0.66), sum2_say_ite (0.65)

Mobile products: lab_mobile_application (1.69), lab_otklik (1.65), sum_hr_mobile (0.81), lab_applicant (0.88), lab_employer (0.84), sum_mobile (0.81), rep_name_45 (1.2), desc_d0 (0.87), rep_name_46 (4.46 (46), rep_name_45 (1.2), desc_d0 (0.84). 0.79), sum_wrong_work_search (0.61), desc_app (0.71), rep_name_47 (0.69), rep_name_28 (0.61), sum_work_search (0.59)

Pandora: sum_to come (2.68), desc_to come (1.72), lab_sms (1.59), sum_statu (2.75), sum_education_otklik (1.38), sum_disable (2.96), lab_esto_recovery_password (1.52), lab_path_tasks (1.31),). ), lab_list (1.72), lab_list (3.37), desc_list (1.69), desc_mail (1.47), rep_name_6 (1.32)

Peppers: lab_sohranenie_rezyume (1.43), sum_rezyume (2.02), sum_voronka (1.57), sum_voronka_vakansiya (1.66), desc_rezyume (1.19), lab_rezyume (1.39), sum_kod (1.2), lab_applicant (1.34), sum_indeks (1.47), sum_indeks_vezhlivost (1.47), lab_creation_summer (1.28), rep_name_45 (1.82), sum_weekness (1.47), sum_save_suminal (1.18), lab_index_fariness (1.13)

Search 1 (1.62), sum_synonym (1.71), sum_sample (1.62), sum2_isk (1.58), sum2_tois_isk (1.57), lab_automatic update_sum (1.57)

Search 2: rep_name_48 (1.13), desc_d1 (1.1), lab_premium_v_poiske (1.02), lab_prosmotry_vakansii (1.4), sum_poisk (1.4), desc_d0 (1.2), lab_pokazat_kontakty (1.17), rep_name_49 (1.12), lab_13 (1.09), rep_name_50 (1.05), lab_search_vocancies (1.62), lab_otkliki_ and_inviting (1.61), sum_otklik (1.09), lab_selected_subject (1.37), lab_filter_v__critical (1.08)

SuperProducts: lab_contact_information (1.78), desc_address (1.46), rep_name_4es (1.84), sum_address (1.74), lab_socon__ vacancies (1.68), lab_assigned_sum (1.45), lab_otcli__worker (1.29), lab_otclik_worker (1.29), lab_otclik_worker (1.45), lab_otclik_worker (1.29), sum_right (1.164), lab_responses_ employer (1.29), sum_right (1.164), 45 (5.9), lab_otclik_worker (1.29), sum_right (1.4), (5.4), 45 (45), (1.45), lab_otcli__customer (1.29), sum_right (1.4), (5.4), 45 (45), lab_truck__custom (1.28), lab__call_reference (1.45), lab__response_ (0.93) ), sum_fault (1.33), rep_name_42 (1.32), sum_quot (1.14), desc_address_ofis (1.14), rep_name_51 (1.09)

API: lab_accounting__account (1.12), sum_comment_surface (0.94), rep_name_sword (0.9), rep_name_3 (0.83), rep_name_4 (0.91) ), sum_view (0.91), desc_comment (1.02), rep_name_6 (0.85), desc_ summary (0.86), sum_api (1.01)

Android: sum_android (1.77), lab_ios (1.66), sum_app (2.9), sum_hr_mobile (1.4), lab_android (3.55), sum_hr (1.36), lab_mobile_app (3.33), sum_mobile (1.4), rep_name_2 (1.34), sum2_ril (1.27 ), sum_app_android (1.28), sum2_pri_ril_lo (1.19), sum2_pri_ril (1.27), sum2_ilo_lozh (1.19), sum2_ilo_lozh_ozh (1.19)

Billing: rep_name_7 (3.88), desc_count (3.23), rep_name_8 (3.15), lab_billing_wtf (2.46), rep_name_9 (4.51), rep_name_10 (2.88), sum_count (3.16), lab_billing (2.41), rep_name_11 (2.27), lab_illing red ), sum_service (2.33), lab_payment_services (1.92), sum_act (2.26), rep_name_12 (1.92), rep_name_13 (2.4)

Brandy: lab_totals_ of talents (2.17), rep_name_14 (1.87), rep_name_15 (3.36), lab_clickme (1.72), rep_name_16 (1.44), rep_name_17 (1.63), rep_name_18 (1.29), sum_page (1.24), sum_brand (1.JF),), rep, name_18 (1.29), sum_page (1.24), rep_name_17 (1.63), rep_name_18 (1.29) ), constructor sum (1.59), lab_brand.page (1.33), sum_description (1.23), sum_description_company (1.17), lab_article (1.15)

Clickme: desc_act (0.73), sum_adv_hh (0.65), sum_adv_hh_en (0.65), sum_hh (0.77), lab_hhs (1.27), lab_bs (1.91), rep_name_19 (1.17), rep_name_20 (1.29), rep_name_21 (rep), rep_name_19 (1.17), rep_name_20 (1.29), rep_name_21 (1.9), rep_name_19 (1.17) ), sum_advertising (0.67), sum_deposition (0.65), sum_adv (0.65), sum_hh_ua (0.64), sum_click_31 (0.64)

Marketing: lab_region (0.9) lab_tormozit_sayt (1.23) sum_rassylka (1.32) lab_menedzhery_vakansy (0.93) sum_kalendar (0.93), rep_name_22 (1.33), lab_oprosy (1.25), rep_name_6 (1.53), lab_proizvodstvennyy_kalendar (1.55), rep_name_23 (0.86 ), sum_andex (1.26), sum_distribution_vacancy (0.85), sum_distribution (0.85), sum_category (0.85), sum_error_transition (0.83)

Mercury: lab_services (1.76), sum_capcha (2.02), lab_siterer_services (1.89), lab_lawyers (2.1), lab_autorization_counter (1.68), lab_production (2.53), lab_reply_sum (2.21), reps, 1.77, i, rep, name_ rep, (rep. Name), rep, name, rep, name, resume (2.21), rep, name, rep, name_24_; ), sum_user (1.57), rep_name_26 (1.43), lab_moderation_ of vacancies (1.58), desc_password (1.39), rep_name_27 (1.36)

Mobile_site: sum_mobile_version (1.32), sum_version_site (1.26), lab_application (1.51), lab_statistics (1.32), sum_mobilny_version_say_site (1.25), lab_mobile_version (5.1), sum_version (1.41), rep_name_28 (cf.pdf), aus.eek. ), lab_jtb (1.07), rep_name_16 (1.12), rep_name_29 (1.05), sum_site (0.95), rep_name_30 (0.92)

TMS: rep_name_31 (1.39), lab_talantix (4.28), rep_name_32 (1.55), rep_name_33 (2.59), sum_vacancy_talantix (0.74), lab_search (0.57), lab_search (0.63), rep_name_34 (0.64), lab_lender (0.56), lab_search (0.63), rep_name_34 (0.64), lab_lender (0.56), lab_search (0.63), lab_search (0.63), rep_name_34 (0.64), 0.66) ), lab_tms (0.74), sum_otklik_hh (0.57), lab_mailing (0.64), sum_talantix (0.6), sum2_mpo (0.56)

Talantix: sum_system (0.86), rep_name_16 (1.37), sum_talantix (1.16), lab_mail (0.94), lab_xor (0.8), lab_talantix (3.19), rep_name_35 (1.07), rep_name_18 (1.33), lab_personal_dates (96), sum_alantix (0.89), sum_because (0.78), lab_mail (0.77), sum_elect_set_view (0.73), rep_name_6 (0.72)

WebServices: sum_vacancy (1.36), desc_pattern (1.32), sum_archive (1.3), lab_writing_ templates (1.39), sum_phone_number_phone (1.44), rep_name_36 (1.28), lab_lawyers (2.1), lab_inviting (1.27), lab_ing_accord_lawers (1.27), lab_lawyers (2.1), lab_lawyers (2.17), lab_lawyers (2.1), lab_lawyers (2.13) ), lab_eleased_sum (1.2), lab_keye_wab, (1.22), sum_to find (1.18), sum_telephone (1.16), sum_folder (1.17)

iOS: sum_application (1.41), desc_application (1.13), lab_andriod (1.73), rep_name_37 (1.05), sum_ios (1.14), lab_mobile_app (1.88), lab_ios (4.55), rep_name_6 (1.41), rep_name_38 (1.35), mo_mobile_ ), sum_ mobile (0.98), rep_name_39 (0.74), sum_ summary_open (0.88), rep_name_40 (0.81), lab_duplication_vacancy (0.76)

Architecture: sum_statistics_otklik (1.1), rep_name_41 (1.4), lab_graphic_setting_displaces, o and_otclik_vakanii (1.04), lab_developing_vacancy (1.16), lab_quot (1.0), sum_special offer (1.02), rep_name_42 (1.33), rep_name_43 (1.09), sum_zavisat (0.83), sum_statistika (0.83), lab_otkliki_ employer (0.76), sum_500ka (0.74)

Bank Salary: lab_500 (1.18), lab_authorization (0.79), sum_500 (1.04), rep_name_44 (0.85), sum_500_site (1.03), lab_site (1.08), lab_visibility_subject (1.54), lab_specification (1.26), lab_specification_subject_subject (1.54), lab_profile (1.26), lab_setting_vizability_subject_ 1.9 sum_error (0.79), lab_post_ orders (1.33), rep_name_43 (0.74), sum_ie_11 (0.69), sum_500_error (0.66), sum2_say_ite (0.65)

Mobile products: lab_mobile_application (1.69), lab_otklik (1.65), sum_hr_mobile (0.81), lab_applicant (0.88), lab_employer (0.84), sum_mobile (0.81), rep_name_45 (1.2), desc_d0 (0.87), rep_name_46 (4.46 (46), rep_name_45 (1.2), desc_d0 (0.84). 0.79), sum_wrong_work_search (0.61), desc_app (0.71), rep_name_47 (0.69), rep_name_28 (0.61), sum_work_search (0.59)

Pandora: sum_to come (2.68), desc_to come (1.72), lab_sms (1.59), sum_statu (2.75), sum_education_otklik (1.38), sum_disable (2.96), lab_esto_recovery_password (1.52), lab_path_tasks (1.31), lab_list (1.72), lab_list (3.37), desc_list (1.69), desc_mail (1.47), rep_name_6 (1.32)

Peppers: lab_sohranenie_rezyume (1.43), sum_rezyume (2.02), sum_voronka (1.57), sum_voronka_vakansiya (1.66), desc_rezyume (1.19), lab_rezyume (1.39), sum_kod (1.2), lab_applicant (1.34), sum_indeks (1.47), sum_indeks_vezhlivost (1.47), lab_creation_summer (1.28), rep_name_45 (1.82), sum_weekness (1.47), sum_save_suminal (1.18), lab_index_fariness (1.13)

Search 1 (1.62), sum_synonym (1.71), sum_sample (1.62), sum2_isk (1.58), sum2_tois_isk (1.57), lab_automatic update_sum (1.57)

Search 2: rep_name_48 (1.13), desc_d1 (1.1), lab_premium_v_poiske (1.02), lab_prosmotry_vakansii (1.4), sum_poisk (1.4), desc_d0 (1.2), lab_pokazat_kontakty (1.17), rep_name_49 (1.12), lab_13 (1.09), rep_name_50 (1.05), lab_search_vocancies (1.62), lab_otkliki_ and_inviting (1.61), sum_otklik (1.09), lab_selected_subject (1.37), lab_filter_v__critical (1.08)

SuperProducts: lab_contact_information (1.78), desc_address (1.46), rep_name_4es (1.84), sum_address (1.74), lab_socon__ vacancies (1.68), lab_assigned_sum (1.45), lab_otcli__worker (1.29), lab_otclik_worker (1.29), lab_otclik_worker (1.45), lab_otclik_worker (1.29), sum_right (1.164), lab_responses_ employer (1.29), sum_right (1.164), 45 (5.9), lab_otclik_worker (1.29), sum_right (1.4), (5.4), 45 (45), (1.45), lab_otcli__customer (1.29), sum_right (1.4), (5.4), 45 (45), lab_truck__custom (1.28), lab__call_reference (1.45), lab__response_ (0.93) ), sum_fault (1.33), rep_name_42 (1.32), sum_quot (1.14), desc_address_ofis (1.14), rep_name_51 (1.09)

The top features roughly reflect what each team works on.

Using the model

That completes the model, and we can now build a program around it.

The program consists of two Python scripts. The first builds the model, and the second makes the predictions.

- Jira provides an API through which you can download already resolved tasks (HHS). Once a day the first script runs and downloads them.

- The downloaded data is converted into features. First, the data is split into training and test sets and the model is validated on the test set, to make sure that quality does not start to degrade from run to run. Then the model is trained a second time, on all the data. The whole process takes about 10 minutes.

- The trained model is saved to disk. I used the dill library to serialize objects. Besides the model itself, you must also save every object that was used to build the features, so that features for new HHS end up in the same feature space.

- Using the same dill, the model is loaded in the prediction script, which runs every 5 minutes.

- The script queries Jira for new HHS.

- We compute the features and pass them to the model, which returns a class name for each HHS: the name of the team.

- For the team we find the person in charge and assign the task to them through the Jira API. This is the team's tester or, if the team has no tester, the team lead.
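The steps above can be sketched roughly as follows. This is an illustration under stated assumptions, not the production code: the toy tickets, the `TEAM_CONTACTS` table, the helper names, and the choice of `TfidfVectorizer` with `LogisticRegression` are invented for the example, and the Jira calls are left out entirely. The point it shows is that the feature extractor is serialized together with the classifier, so new HHS are mapped into the same feature space.

```python
import os
import tempfile

try:
    import dill as serializer  # the article uses dill
except ImportError:  # assumption: fall back to the stdlib if dill is missing
    import pickle as serializer

from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.pipeline import make_pipeline

# Toy stand-in for resolved HHS downloaded from Jira (hypothetical data).
texts = [
    "ios app crashes on launch", "iphone app freezes on resume screen",
    "push notifications missing in ios app", "ios app shows blank screen",
    "iphone app login button does nothing", "ios app cannot upload photo",
    "iphone app crashes on vacancy screen", "ios app update fails",
    "search returns no vacancies", "synonyms ignored in search results",
    "search filter by salary is wrong", "search index is not updated",
    "search sorting by date is broken", "search results show duplicates",
    "region filter in search is empty", "search suggestions are wrong",
]
teams = ["iOS"] * 8 + ["Search 1"] * 8

# Hypothetical table of responsible people per team.
TEAM_CONTACTS = {
    "iOS": {"tester": "ios.tester", "lead": "ios.lead"},
    "Search 1": {"tester": None, "lead": "search.lead"},
}


def build_model():
    # Vectorizer and classifier live in one object and are saved together.
    return make_pipeline(TfidfVectorizer(), LogisticRegression(max_iter=1000))


def train(texts, teams):
    # Pass 1: hold-out validation, to notice quality drops between runs.
    x_tr, x_te, y_tr, y_te = train_test_split(
        texts, teams, test_size=0.25, random_state=0, stratify=teams
    )
    holdout_accuracy = build_model().fit(x_tr, y_tr).score(x_te, y_te)
    # Pass 2: retrain on all the data for actual use.
    return build_model().fit(texts, teams), holdout_accuracy


def pick_assignee(team):
    # The tester if the team has one, otherwise the team lead.
    contacts = TEAM_CONTACTS[team]
    return contacts["tester"] or contacts["lead"]


# Script 1: train and save.
model, holdout_accuracy = train(texts, teams)
with tempfile.TemporaryDirectory() as tmp:
    path = os.path.join(tmp, "model.dill")
    with open(path, "wb") as f:
        serializer.dump(model, f)
    # Script 2: load and predict.
    with open(path, "rb") as f:
        loaded = serializer.load(f)

new_hhs = ["iphone app crashes after update", "search shows wrong vacancies"]
predicted_teams = [str(t) for t in loaded.predict(new_hhs)]
assignees = [pick_assignee(t) for t in predicted_teams]
print(holdout_accuracy, list(zip(new_hhs, predicted_teams, assignees)))
```

In a real deployment the two halves would live in separate scripts on different schedules, with the saved file as the only thing they share.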

To make the program easy to deploy and to keep the same library versions as during development, the scripts are packaged in a Docker container.

As a result, we have automated a routine process. An accuracy of 76% is not very high, but in this case misses are not critical. Every task still finds its assignee, and most importantly, nobody has to be distracted several times a day to dig into each task and look for the people responsible. Everything works automatically! Hooray!

Source: https://habr.com/ru/post/457418/
