Failover cluster PostgreSQL + Patroni. Implementation experience

In the article I will tell you how we approached the issue of PostgreSQL fault tolerance, why it became important for us and what happened in the end.

We have a high-loaded service: 2.5 million users worldwide, 50K + active users every day. The servers are located in Amazone in one region of Ireland: there are always 100+ different servers in operation, of which almost 50 are with databases.

The entire backend is a large monolithic stateful Java application that keeps a constant websocket connection with the client. With simultaneous work of several users on one board, they all see changes in real time, because we record every change in the database. We have about 10K requests per second to our databases. At peak load in Redis, we write 80-100K requests per second.

')

Initially, our service worked with Redis, a key-value repository that stores all data in the server’s RAM.

Redis pros:

Cons Redis for us:

We took into account the disadvantages and realized that we need to move to something more convenient, with normal transactions and less dependent on latency. We conducted a study, analyzed a variety of options and chose PostgreSQL.

We have been moving to the new database for 1.5 years and have transported only a small part of the data, so now we are working simultaneously with Redis and PostgreSQL. More information about the stages of moving and switching data between the database is written in the article of my colleague .

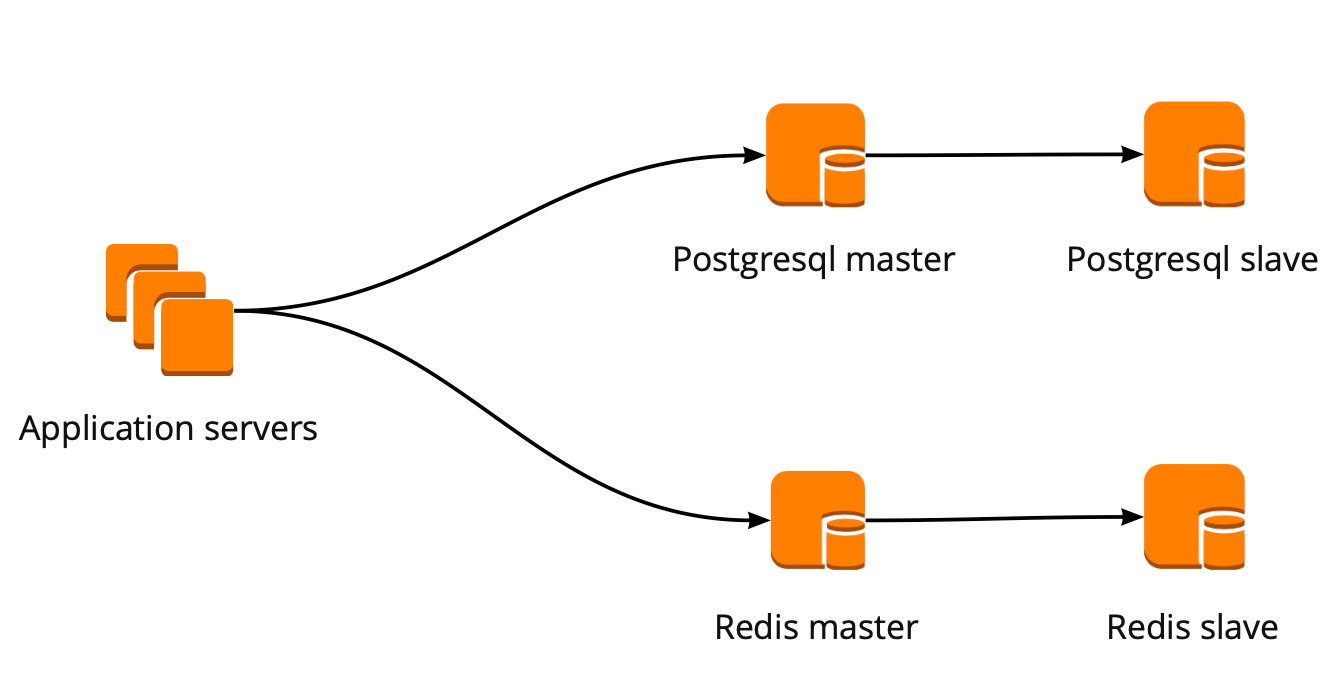

When we started to move, our application worked directly from the database and addressed the Redis and PostgreSQL wizard. The PostgreSQL cluster consisted of a master and a replica with asynchronous replication. So the scheme of work with bases looked:

While we were moving, the product was also developing: the number of users and the number of servers that worked with PostgreSQL increased, and we began to lack connections. PostgreSQL creates a separate process for each connection and consumes resources. You can increase the number of connections until a certain point, otherwise there is a chance to get a non-optimal database operation. The ideal option in such a situation would be the choice of the connection manager, who will face the base.

We had two options for the connection manager: Pgpool and PgBouncer. But the first one does not support transactional operation with the base, so we chose PgBouncer.

We set up the following operation scheme: our application refers to one PgBouncer, followed by PostgreSQL masters, and for each master, one replica with asynchronous replication.

At the same time, we could not store the entire amount of data in PostgreSQL and the speed of working with the database was important for us, so we started to sharpen PostgreSQL at the application level. The scheme described above is relatively convenient for this: when adding a new shard to PostgreSQL, it is enough to update the PgBouncer configuration and the application can immediately work with the new shard.

This scheme worked until the only instance of the PgBouncer died. We are in AWS, where all instances are running on hardware that periodically dies. In such cases, the instance simply moves to a new hardware and works again. It happened with PgBouncer, however, it became unavailable. The result of this fall was the inaccessibility of our service for 25 minutes. AWS for such situations recommends the use of redundancy on the user's side, which was not implemented by us at that time.

After that, we seriously thought about the resiliency of PgBouncer and PostgreSQL clusters, because this situation could be repeated with any instance in our AWS account.

We built the PgBouncer fault tolerance scheme as follows: all application servers access the Network Load Balancer, which is supported by two PgBouncer. Each PgBouncer looks at the same PostgreSQL master of each shard. In case of a repetition of the situation with the AWS instance crash, all traffic is redirected through another PgBouncer. Fault tolerance Network Load Balancer provides AWS.

This scheme allows you to easily add new PgBouncer servers.

In solving this problem, we considered various options: self-signed failover, repmgr, AWS RDS, Patroni.

They can monitor the wizard and, in the event of a crash, push the cue to the wizard and update the PgBouncer configuration.

The advantages of this approach are maximum simplicity, because you write the scripts yourself and understand exactly how they work.

Minuses:

Handwritten failover looks very difficult and requires nontrivial support. With a single PostgreSQL cluster, this will be the easiest option, but it doesn’t scale, so it’s not suitable for us.

Replication Manager for PostgreSQL clusters that can manage the operation of a PostgreSQL cluster. In this case, there is no automatic failover “out of the box”, so for the work you will need to write your “wrapper” over the finished solution. So everything can turn out even more complicated than with samopisny scripts, so we didn’t even try Repmgr.

It supports everything you need for us, can make backups and maintains a pool of connections. It has automatic switching: when the master dies, the replica becomes the new master, and AWS changes the dns entry to the new master, and the replicas can be located in different AZ.

The disadvantages are the lack of fine-tuning. As an example of fine-tuning: on our instances there are restrictions for tcp connections, which, unfortunately, cannot be done in RDS:

In addition, at AWS RDS, the price is almost twice as expensive as the normal price of the instance, which was the main reason for rejecting this decision.

This is a python template for managing PostgreSQL with good documentation, automatic failover and source code on github.

Advantages of Patroni:

Minuses:

As a result, we chose Patroni to create a failover cluster.

Before Patroni, we had 12 PostgreSQL shards in the configuration of one master and one replica with asynchronous replication. The application servers accessed the databases through the Network Load Balancer, which was preceded by two instances with the PgBouncer, and all PostgreSQL servers were behind them.

To implement Patroni, we needed to choose a distributed storage cluster configuration. Patroni works with distributed configuration storage systems such as etcd, Zookeeper, onsul. We just have a full-fledged Consul cluster, which works in conjunction with Vault and we don’t use it anymore. An excellent reason to start using Consul for its intended purpose.

We have a Consul cluster, which consists of three nodes and a Patroni cluster, which consists of a leader and a replica (in Patroni, the master is called the cluster leader, and the slaves are replicas). Each Patroni cluster instance constantly sends information about the cluster status to the Consul. Therefore, from onsul you can always find out the current configuration of the Patroni cluster and who is the leader at the moment.

To connect Patroni to onsul, it is enough to study the official documentation, in which it is written that it is necessary to specify a host in the format of http or https, depending on how we work with Consul, and the connection scheme, optionally:

It looks easy, but then the pitfalls begin. With onsul we work on a secure connection via https and our connection config will look like this:

But that doesn't work. At startup, Patroni cannot connect to onsul, because it tries to go via http anyway.

The Patroni source code helped to deal with the problem. Good thing it is written in python. It turns out the host parameter does not parse, and the protocol must be specified in the scheme. This is what a working configuration unit for working with Consul looks like with us:

So, we selected storage for a configuration. Now you need to understand how PgBouncer will switch its configuration when the leader changes in the Patroni cluster. In the documentation for this question there is no answer, because There, in principle, work with PgBouncer is not described.

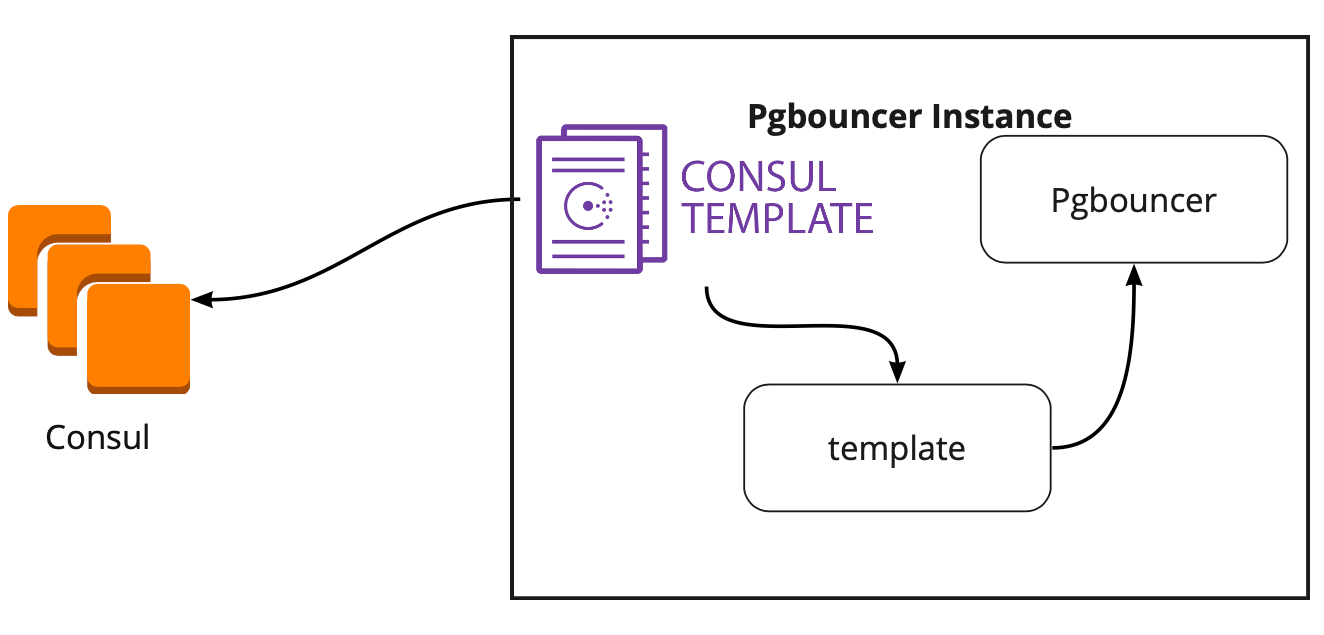

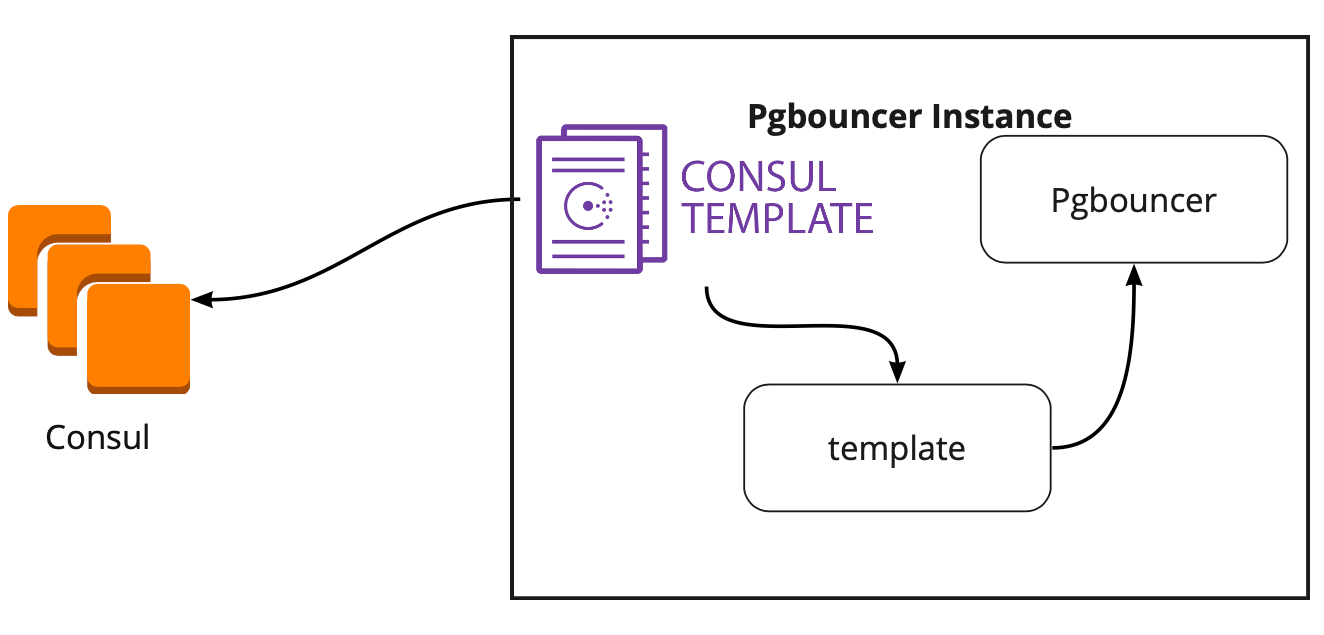

In search of a solution, we found an article (the name, unfortunately, I don’t remember), where it was written that the Consul-template helped a lot with PgBouncer and Patroni. This prompted us to research the work of the Consul-template.

It turned out that the Consul-template constantly monitors the configuration of the PostgreSQL cluster in the Consul. When changing the leader, it updates the PgBouncer configuration and sends the command to reload it.

A big plus of the template is that it is stored as a code, so when adding a new shard, it is enough to make a new commit and update the template automatically, supporting the principle of Infrastructure as code.

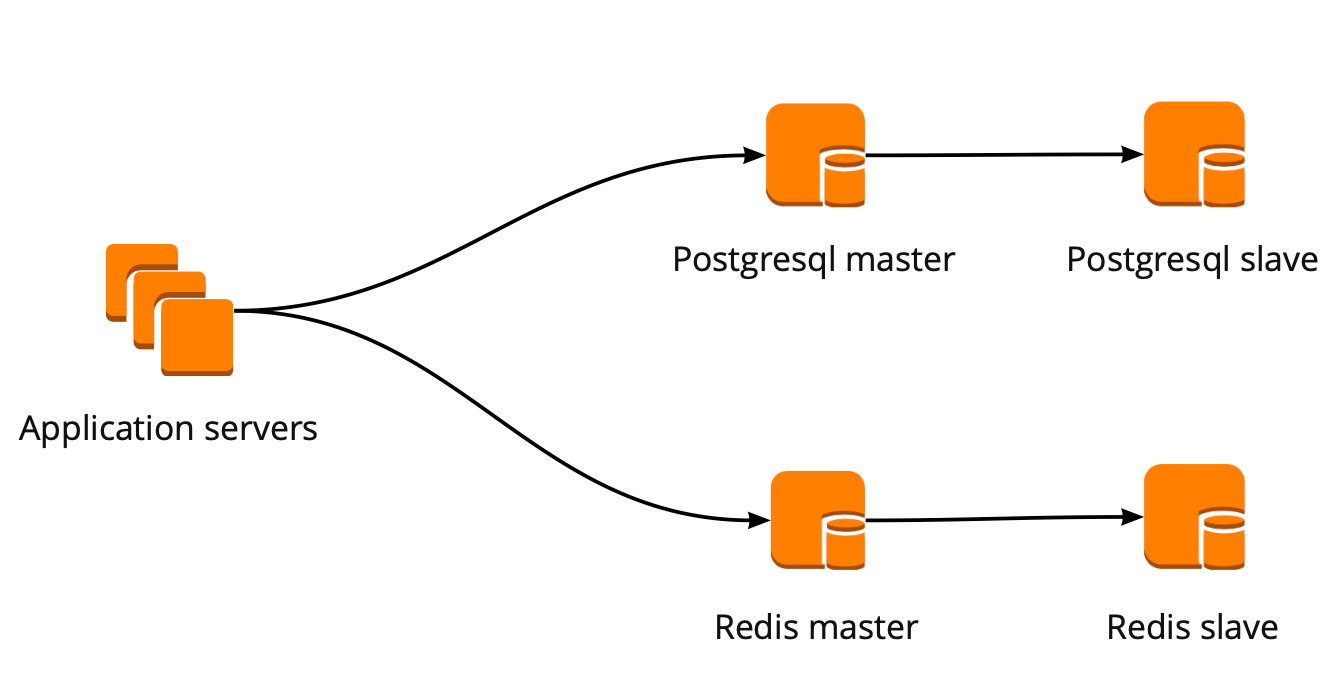

As a result, we got the following work scheme:

All application servers access the balancer → there are two instances of PgBouncer behind it. On each instance, a Consul-template is running, which monitors the status of each Patroni cluster and monitors the relevance of the PgBouncer config, which sends requests to the current leader of each cluster.

Before launching the prod, we launched this scheme on a small test environment and checked the operation of automatic switching. They opened the board, moved the sticker and at that moment “killed” the cluster leader. In AWS, all you have to do is turn off the instance through the console.

The sticker for 10-20 seconds came back, and then again began to move normally. This means that the Patroni cluster worked correctly: changed the leader, sent the information to onsul, and onsul-template immediately picked up this information, replaced the PgBouncer configuration and sent the command to reload.

Everything works perfectly! But new questions appear: How does this work under high loads? How to quickly and safely roll out everything in production?

The test environment in which we conduct load testing helps us to answer the first question. It is completely identical to production by architecture and has generated test data that is approximately equal in volume to production. We decide to just “kill” one of the PostgreSQL masters during the test and see what happens. But before that, it is important to check the automatic rolling, because in this environment we have several PostgreSQL shards, so we will get excellent testing of the configuration scripts before selling.

Both tasks are ambitious, but we have PostgreSQL 9.6. Can we immediately upgrade to 11.2?

We decide to do it in 2 stages: first upgrade to version 11.2, then launch Patroni.

To quickly update the PostgreSQL version, you need to use the -k option, in which you create hard links on the disk and do not need to copy your data. On bases of 300-400 GB, the update takes 1 second.

We have a lot of shards, so the update needs to be done automatically. To do this, we wrote Ansible playbook, which performs the whole update process for us:

It is important to note here that before launching the upgrade, it is necessary to execute it with the --check parameter in order to be sure of the possibility of an upgrade. Also, our script makes configs substitution at the time of the upgrade. The script was completed in 30 seconds, this is an excellent result.

To solve the second problem, just look at the configuration of Patroni. The official repository has an example configuration with initdb, which is responsible for initializing the new database when Patroni is first launched. But since we have a ready base, we simply removed this section from the configuration.

When we started to install Patroni on a ready-made PostgreSQL cluster and launch it, we faced a new problem: both servers were launched as leader. Patroni knows nothing about the early state of the cluster and tries to start both servers as two separate clusters with the same name. To solve this problem, you need to delete the data directory on the slave:

This must be done only on the slave!

When a clean replica is connected, Patroni makes a basebackup leader and restores it to a replica, and then catches up with the current state of the wal-logs.

Another difficulty we encountered is that all PostgreSQL clusters are called main by default. When each cluster knows nothing about the other - this is normal. But when you want to use Patroni, all clusters must have a unique name. The solution is to change the cluster name in the PostgreSQL configuration.

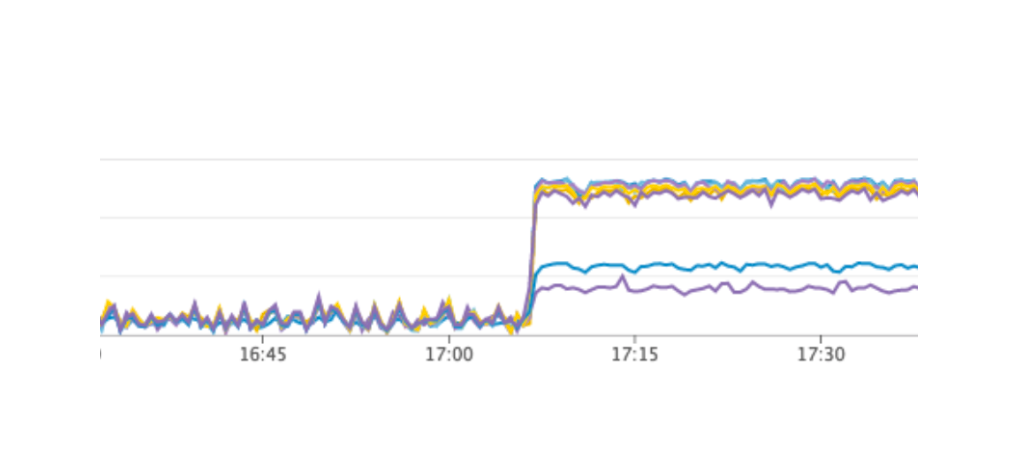

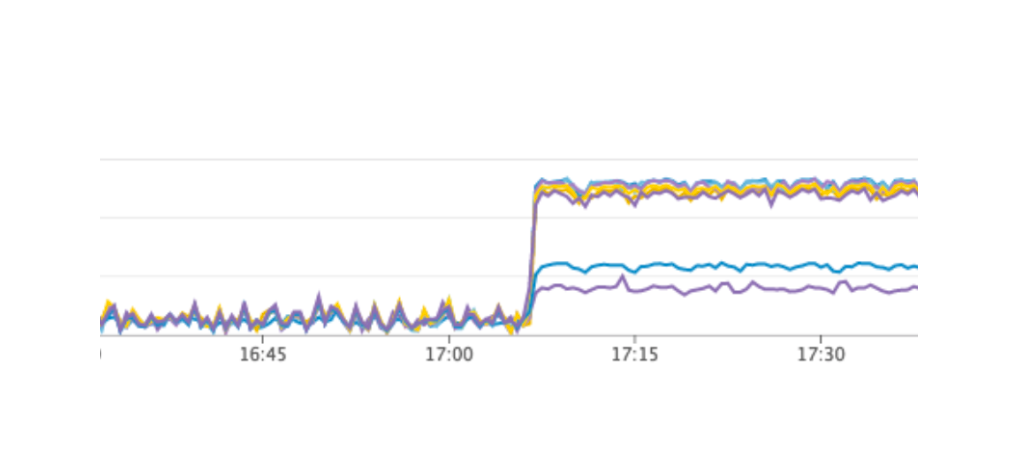

We have launched a test that simulates the work of users on the boards. When the load reached our daily average, we repeated the exact same test, we turned off one instance with the leader PostgreSQL. The automatic failover worked as we expected: Patroni changed the leader, the Consul-template updated the PgBouncer configuration and sent the command to reload. According to our graphs in Grafana it was clear that there are delays for 20-30 seconds and a small amount of errors from the servers associated with the connection to the database. This is a normal situation, such values are valid for our failover and definitely better than the downtime of the service.

As a result, we got the following plan:

At the same time, our scheme allows you to make the first item at almost any time; we can remove each PgBouncer from work in turn and execute it with deploy and launch the consul-template. So we did.

For fast rolling, we used Ansible, since we already checked the entire playbook on the test environment, and the execution time of the full script was from 1.5 to 2 minutes for each shard. We could roll everything one by one on each shard without stopping our service, but we would have to shut down every PostgreSQL for a few minutes. In this case, users whose data is on this shard, could not fully work at this time, and this is unacceptable for us.

The way out of this situation was planned maintenance, which runs with us every 3 months. This is the window for scheduled work when we completely turn off our service and update the database instances. One week remained until the next window, and we decided to just wait and prepare further. During the wait, we additionally secured ourselves: for each shard, PostgreSQL was picked up in a spare replica in case of failure, to save the latest data, and added a new instance for each shard, which should become a new replica in the Patroni cluster, so as not to execute the command to delete data . All this helped to minimize the risk of error.

We restarted our service, everything worked as it should, users continued to work, but on the charts we noticed an abnormally high load on the Consul server.

Why we did not see it on the test environment? This problem illustrates very well that it is necessary to follow the principle of Infrastructure as code and refine the entire infrastructure, starting with test environments and ending with production. Otherwise, it is very easy to get such a problem that we got. What happened? Sonsul first appeared in production, and then in test environments, and as a result, Consul's version was higher in test environments than in production. Just in one of the releases, a CPU leak was solved when working with the consul-template. Therefore, we simply updated Consul, thus solving the problem.

However, we received a new problem, which was not even suspected. When updating Consul, we simply remove the Consul node from the cluster using the consul leave command → Patroni connects to another Consul server → everything works. But when we reached the last instance of the Consul cluster and sent the consul leave command to it, all Patroni clusters just restarted, and we saw the following error in the logs:

The cluster Patroni was unable to obtain information about its cluster and restarted.

To find a solution, we turned to the authors of Patroni through an issue on github. They suggested improvements to our configuration files:

We were able to repeat the problem on the test environment and tested these parameters there, but, unfortunately, they did not work.

The problem still remains unresolved. We plan to try the following solutions:

We understand the location of the error: the problem is probably in the use of default timeout, which is not overridden by the configuration file. When the last onsul server is removed from the cluster, the entire Consul cluster hangs, which lasts longer than a second, because of this Patroni cannot get the cluster status and completely restarts the entire cluster.

Fortunately, no more errors, we have not met.

After the successful launch of Patroni, we added an additional replica in each cluster. Now in each cluster there is a quorum similarity: one leader and two replicas, - for safety in case of split-brain when switching.

On production Patroni works more than three months. During this time he has already managed to help us out. Recently, the leader of one of the clusters died in AWS, automatic failover worked and users continued to work. Patroni completed his main task.

A small summary of the use of Patroni:

We have a high-loaded service: 2.5 million users worldwide, 50K + active users every day. The servers are located in Amazone in one region of Ireland: there are always 100+ different servers in operation, of which almost 50 are with databases.

The entire backend is a large monolithic stateful Java application that keeps a constant websocket connection with the client. With simultaneous work of several users on one board, they all see changes in real time, because we record every change in the database. We have about 10K requests per second to our databases. At peak load in Redis, we write 80-100K requests per second.

')

Why we switched from Redis to PostgreSQL

Initially, our service worked with Redis, a key-value repository that stores all data in the server’s RAM.

Redis pros:

- High response rate, because everything is stored in memory;

- Convenience of backup and replication.

Cons Redis for us:

- There are no real transactions. We tried to imitate them at the level of our application. Unfortunately, this did not always work well and required the writing of very complex code.

- The amount of data is limited by the amount of memory. As the amount of data increases, memory will grow, and eventually we will rest on the characteristics of the selected instance, which in AWS requires stopping our service to change the type of instance.

- It is necessary to constantly maintain a low latency level, since we have a very large number of requests. The optimal delay level for us is 17-20 ms. At a level of 30-40 ms, we receive long responses to requests from our application and degradation of the service. Unfortunately, this happened in September 2018, when for some reason one of the instances with Redis received latency 2 times more than usual. To solve the problem, we stopped the service in the middle of the working day for unscheduled maintenance and replaced the problem instance Redis.

- It is easy to obtain inconsistency of data even with minor errors in the code and then spend a lot of time writing code to correct this data.

We took into account the disadvantages and realized that we need to move to something more convenient, with normal transactions and less dependent on latency. We conducted a study, analyzed a variety of options and chose PostgreSQL.

We have been moving to the new database for 1.5 years and have transported only a small part of the data, so now we are working simultaneously with Redis and PostgreSQL. More information about the stages of moving and switching data between the database is written in the article of my colleague .

When we started to move, our application worked directly from the database and addressed the Redis and PostgreSQL wizard. The PostgreSQL cluster consisted of a master and a replica with asynchronous replication. So the scheme of work with bases looked:

PgBouncer implementation

While we were moving, the product was also developing: the number of users and the number of servers that worked with PostgreSQL increased, and we began to lack connections. PostgreSQL creates a separate process for each connection and consumes resources. You can increase the number of connections until a certain point, otherwise there is a chance to get a non-optimal database operation. The ideal option in such a situation would be the choice of the connection manager, who will face the base.

We had two options for the connection manager: Pgpool and PgBouncer. But the first one does not support transactional operation with the base, so we chose PgBouncer.

We set up the following operation scheme: our application refers to one PgBouncer, followed by PostgreSQL masters, and for each master, one replica with asynchronous replication.

At the same time, we could not store the entire amount of data in PostgreSQL and the speed of working with the database was important for us, so we started to sharpen PostgreSQL at the application level. The scheme described above is relatively convenient for this: when adding a new shard to PostgreSQL, it is enough to update the PgBouncer configuration and the application can immediately work with the new shard.

Fault tolerance PgBouncer

This scheme worked until the only instance of the PgBouncer died. We are in AWS, where all instances are running on hardware that periodically dies. In such cases, the instance simply moves to a new hardware and works again. It happened with PgBouncer, however, it became unavailable. The result of this fall was the inaccessibility of our service for 25 minutes. AWS for such situations recommends the use of redundancy on the user's side, which was not implemented by us at that time.

After that, we seriously thought about the resiliency of PgBouncer and PostgreSQL clusters, because this situation could be repeated with any instance in our AWS account.

We built the PgBouncer fault tolerance scheme as follows: all application servers access the Network Load Balancer, which is supported by two PgBouncer. Each PgBouncer looks at the same PostgreSQL master of each shard. In case of a repetition of the situation with the AWS instance crash, all traffic is redirected through another PgBouncer. Fault tolerance Network Load Balancer provides AWS.

This scheme allows you to easily add new PgBouncer servers.

Creating a PostgreSQL Failover Cluster

In solving this problem, we considered various options: self-signed failover, repmgr, AWS RDS, Patroni.

Custom scripts

They can monitor the wizard and, in the event of a crash, push the cue to the wizard and update the PgBouncer configuration.

The advantages of this approach are maximum simplicity, because you write the scripts yourself and understand exactly how they work.

Minuses:

- The master could not die, a network failure could occur instead. Failover, without knowing it, will advance the cue to the master, and the old master will continue to work. As a result, we will receive two servers in the master role and will not know which of them contains the latest actual data. This situation is also called split-brain;

- We were left without a replica. In our configuration, the master and one replica, after switching the replica moves to the master and we no longer have replicas, so we have to manually add a new replica;

- We need additional monitoring of work failover, while we have 12 PostgreSQL shards, which means we need to monitor 12 clusters. If you increase the number of shards, you must still remember to update failover.

Handwritten failover looks very difficult and requires nontrivial support. With a single PostgreSQL cluster, this will be the easiest option, but it doesn’t scale, so it’s not suitable for us.

Repmgr

Replication Manager for PostgreSQL clusters that can manage the operation of a PostgreSQL cluster. In this case, there is no automatic failover “out of the box”, so for the work you will need to write your “wrapper” over the finished solution. So everything can turn out even more complicated than with samopisny scripts, so we didn’t even try Repmgr.

AWS RDS

It supports everything you need for us, can make backups and maintains a pool of connections. It has automatic switching: when the master dies, the replica becomes the new master, and AWS changes the dns entry to the new master, and the replicas can be located in different AZ.

The disadvantages are the lack of fine-tuning. As an example of fine-tuning: on our instances there are restrictions for tcp connections, which, unfortunately, cannot be done in RDS:

net.ipv4.tcp_keepalive_time=10 net.ipv4.tcp_keepalive_intvl=1 net.ipv4.tcp_keepalive_probes=5 net.ipv4.tcp_retries2=3 In addition, at AWS RDS, the price is almost twice as expensive as the normal price of the instance, which was the main reason for rejecting this decision.

Patroni

This is a python template for managing PostgreSQL with good documentation, automatic failover and source code on github.

Advantages of Patroni:

- Each configuration parameter is listed, it is clear how what works;

- Automatic failover works out of the box;

- It is written in python, and since we write a lot in python ourselves, it will be easier for us to deal with problems and, possibly, even help the development of the project;

- Completely controls PostgreSQL, allows you to change the configuration at once on all the nodes of the cluster, and if the cluster is restarted to apply the new configuration, this can be done again using Patroni.

Minuses:

- From the documentation it is not clear how to work with PgBouncer correctly. Although it is difficult to call it a minus, because the task of Patroni is to manage PostgreSQL, and how connections to Patroni will go is our problem;

- There are few examples of the implementation of Patroni on large volumes, with many examples of implementation from scratch.

As a result, we chose Patroni to create a failover cluster.

Patroni implementation process

Before Patroni, we had 12 PostgreSQL shards in the configuration of one master and one replica with asynchronous replication. The application servers accessed the databases through the Network Load Balancer, which was preceded by two instances with the PgBouncer, and all PostgreSQL servers were behind them.

To implement Patroni, we needed to choose a distributed storage cluster configuration. Patroni works with distributed configuration storage systems such as etcd, Zookeeper, onsul. We just have a full-fledged Consul cluster, which works in conjunction with Vault and we don’t use it anymore. An excellent reason to start using Consul for its intended purpose.

How Patroni works with Consul

We have a Consul cluster, which consists of three nodes and a Patroni cluster, which consists of a leader and a replica (in Patroni, the master is called the cluster leader, and the slaves are replicas). Each Patroni cluster instance constantly sends information about the cluster status to the Consul. Therefore, from onsul you can always find out the current configuration of the Patroni cluster and who is the leader at the moment.

To connect Patroni to onsul, it is enough to study the official documentation, in which it is written that it is necessary to specify a host in the format of http or https, depending on how we work with Consul, and the connection scheme, optionally:

host: the host:port for the Consul endpoint, in format: http(s)://host:port scheme: (optional) http or https, defaults to http It looks easy, but then the pitfalls begin. With onsul we work on a secure connection via https and our connection config will look like this:

consul: host: https://server.production.consul:8080 verify: true cacert: {{ consul_cacert }} cert: {{ consul_cert }} key: {{ consul_key }} But that doesn't work. At startup, Patroni cannot connect to onsul, because it tries to go via http anyway.

The Patroni source code helped to deal with the problem. Good thing it is written in python. It turns out the host parameter does not parse, and the protocol must be specified in the scheme. This is what a working configuration unit for working with Consul looks like with us:

consul: host: server.production.consul:8080 scheme: https verify: true cacert: {{ consul_cacert }} cert: {{ consul_cert }} key: {{ consul_key }} Consul-template

So, we selected storage for a configuration. Now you need to understand how PgBouncer will switch its configuration when the leader changes in the Patroni cluster. In the documentation for this question there is no answer, because There, in principle, work with PgBouncer is not described.

In search of a solution, we found an article (the name, unfortunately, I don’t remember), where it was written that the Consul-template helped a lot with PgBouncer and Patroni. This prompted us to research the work of the Consul-template.

It turned out that the Consul-template constantly monitors the configuration of the PostgreSQL cluster in the Consul. When changing the leader, it updates the PgBouncer configuration and sends the command to reload it.

A big plus of the template is that it is stored as a code, so when adding a new shard, it is enough to make a new commit and update the template automatically, supporting the principle of Infrastructure as code.

New architecture with Patroni

As a result, we got the following work scheme:

All application servers access the balancer → there are two instances of PgBouncer behind it. On each instance, a Consul-template is running, which monitors the status of each Patroni cluster and monitors the relevance of the PgBouncer config, which sends requests to the current leader of each cluster.

Manual testing

Before launching the prod, we launched this scheme on a small test environment and checked the operation of automatic switching. They opened the board, moved the sticker and at that moment “killed” the cluster leader. In AWS, all you have to do is turn off the instance through the console.

The sticker for 10-20 seconds came back, and then again began to move normally. This means that the Patroni cluster worked correctly: changed the leader, sent the information to onsul, and onsul-template immediately picked up this information, replaced the PgBouncer configuration and sent the command to reload.

How to survive under high load and keep the minimum downtime?

Everything works perfectly! But new questions appear: How does this work under high loads? How to quickly and safely roll out everything in production?

The test environment in which we conduct load testing helps us to answer the first question. It is completely identical to production by architecture and has generated test data that is approximately equal in volume to production. We decide to just “kill” one of the PostgreSQL masters during the test and see what happens. But before that, it is important to check the automatic rolling, because in this environment we have several PostgreSQL shards, so we will get excellent testing of the configuration scripts before selling.

Both tasks are ambitious, but we have PostgreSQL 9.6. Can we immediately upgrade to 11.2?

We decide to do it in 2 stages: first upgrade to version 11.2, then launch Patroni.

PostgreSQL update

To quickly update the PostgreSQL version, you need to use the -k option, in which you create hard links on the disk and do not need to copy your data. On bases of 300-400 GB, the update takes 1 second.

We have a lot of shards, so the update needs to be done automatically. To do this, we wrote Ansible playbook, which performs the whole update process for us:

/usr/lib/postgresql/11/bin/pg_upgrade \ <b>--link \</b> --old-datadir='' --new-datadir='' \ --old-bindir='' --new-bindir='' \ --old-options=' -c config_file=' \ --new-options=' -c config_file=' It is important to note here that before launching the upgrade, it is necessary to execute it with the --check parameter in order to be sure of the possibility of an upgrade. Also, our script makes configs substitution at the time of the upgrade. The script was completed in 30 seconds, this is an excellent result.

Running patroni

To solve the second problem, just look at the configuration of Patroni. The official repository has an example configuration with initdb, which is responsible for initializing the new database when Patroni is first launched. But since we have a ready base, we simply removed this section from the configuration.

When we started to install Patroni on a ready-made PostgreSQL cluster and launch it, we faced a new problem: both servers were launched as leader. Patroni knows nothing about the early state of the cluster and tries to start both servers as two separate clusters with the same name. To solve this problem, you need to delete the data directory on the slave:

rm -rf /var/lib/postgresql/ This must be done only on the slave!

When a clean replica is connected, Patroni makes a basebackup leader and restores it to a replica, and then catches up with the current state of the wal-logs.

Another difficulty we encountered is that all PostgreSQL clusters are called main by default. When each cluster knows nothing about the other - this is normal. But when you want to use Patroni, all clusters must have a unique name. The solution is to change the cluster name in the PostgreSQL configuration.

Load test

We have launched a test that simulates the work of users on the boards. When the load reached our daily average, we repeated the exact same test, we turned off one instance with the leader PostgreSQL. The automatic failover worked as we expected: Patroni changed the leader, the Consul-template updated the PgBouncer configuration and sent the command to reload. According to our graphs in Grafana it was clear that there are delays for 20-30 seconds and a small amount of errors from the servers associated with the connection to the database. This is a normal situation, such values are valid for our failover and definitely better than the downtime of the service.

Patroni output to production

As a result, we got the following plan:

- Deploy the Consul-template to the PgBouncer server and launch;

- PostgreSQL updates to version 11.2;

- Cluster name change;

- Launch Patroni Cluster.

At the same time, our scheme allows you to make the first item at almost any time; we can remove each PgBouncer from work in turn and execute it with deploy and launch the consul-template. So we did.

For fast rolling, we used Ansible, since we already checked the entire playbook on the test environment, and the execution time of the full script was from 1.5 to 2 minutes for each shard. We could roll everything one by one on each shard without stopping our service, but we would have to shut down every PostgreSQL for a few minutes. In this case, users whose data is on this shard, could not fully work at this time, and this is unacceptable for us.

The way out of this situation was planned maintenance, which runs with us every 3 months. This is the window for scheduled work when we completely turn off our service and update the database instances. One week remained until the next window, and we decided to just wait and prepare further. During the wait, we additionally secured ourselves: for each shard, PostgreSQL was picked up in a spare replica in case of failure, to save the latest data, and added a new instance for each shard, which should become a new replica in the Patroni cluster, so as not to execute the command to delete data . All this helped to minimize the risk of error.

We restarted our service, everything worked as it should, users continued to work, but on the charts we noticed an abnormally high load on the Consul server.

Why we did not see it on the test environment? This problem illustrates very well that it is necessary to follow the principle of Infrastructure as code and refine the entire infrastructure, starting with test environments and ending with production. Otherwise, it is very easy to get such a problem that we got. What happened? Sonsul first appeared in production, and then in test environments, and as a result, Consul's version was higher in test environments than in production. Just in one of the releases, a CPU leak was solved when working with the consul-template. Therefore, we simply updated Consul, thus solving the problem.

Restart Patroni cluster

However, we received a new problem, which was not even suspected. When updating Consul, we simply remove the Consul node from the cluster using the consul leave command → Patroni connects to another Consul server → everything works. But when we reached the last instance of the Consul cluster and sent the consul leave command to it, all Patroni clusters just restarted, and we saw the following error in the logs:

ERROR: get_cluster Traceback (most recent call last): ... RetryFailedError: 'Exceeded retry deadline' ERROR: Error communicating with DCS <b>LOG: database system is shut down</b> The cluster Patroni was unable to obtain information about its cluster and restarted.

To find a solution, we turned to the authors of Patroni through an issue on github. They suggested improvements to our configuration files:

consul: consul.checks: [] bootstrap: dcs: retry_timeout: 8 We were able to repeat the problem on the test environment and tested these parameters there, but, unfortunately, they did not work.

The problem still remains unresolved. We plan to try the following solutions:

- Use the Consul-agent on each instance of the Patroni cluster;

- Fix the problem in the code.

We understand the location of the error: the problem is probably in the use of default timeout, which is not overridden by the configuration file. When the last onsul server is removed from the cluster, the entire Consul cluster hangs, which lasts longer than a second, because of this Patroni cannot get the cluster status and completely restarts the entire cluster.

Fortunately, no more errors, we have not met.

Results of using Patroni

After the successful launch of Patroni, we added an additional replica in each cluster. Now in each cluster there is a quorum similarity: one leader and two replicas, - for safety in case of split-brain when switching.

On production Patroni works more than three months. During this time he has already managed to help us out. Recently, the leader of one of the clusters died in AWS, automatic failover worked and users continued to work. Patroni completed his main task.

A small summary of the use of Patroni:

- Convenience configuration changes. It is enough to change the configuration on one instance and it will pull up on the entire cluster. If a reboot is required to apply the new configuration, Patroni will report it. Patroni can restart the entire cluster with a single command, which is also very convenient.

- Automatic failover works and has already managed to help us out.

- PostgreSQL update without downtime application. You must first upgrade the replicas to the new version, then change the leader in the Patroni cluster and update the old leader. When this happens, the necessary testing of automatic failover occurs.

Source: https://habr.com/ru/post/457326/

All Articles