Dynamic creation of robots.txt for ASP.NET Core sites

I'm currently moving parts of my site's old WebForms code, which still runs on bare-metal hardware, to ASP.NET Core and Azure App Services. Along the way, I realized I want to make sure my staging and development sites aren't indexed by Google, Yandex, Bing and other search engines.

I already have a robots.txt file, but I want one that is served only in production and another for development. I thought about several ways to solve this. I could keep a static robots.txt file plus a robots-staging.txt file and conditionally copy one over the other in my Azure DevOps CI/CD pipeline.

Then I realized the simplest thing would be to make robots.txt dynamic. I considered writing my own middleware, but that seemed like a hassle and a lot of code. I wanted to see how simple it could be.

- You could implement it as inline middleware: just a lambda, a func and some LINQ in one line

- You could write your own middleware with lots of options, then enable it with env.IsStaging() or a similar check

- You could create a single Razor Page with the Environment TagHelper
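For comparison, the first option, inline middleware, could look roughly like the sketch below. It is not the approach this post ends up using. It assumes ASP.NET Core 3.0 or later (on 2.x, `IHostingEnvironment` replaces `IWebHostEnvironment`), and the `RobotsFor` helper and its Disallow rules are hypothetical placeholders.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.AspNetCore.Hosting;
using Microsoft.AspNetCore.Http;
using Microsoft.Extensions.Hosting;

public class Startup
{
    // Hypothetical helper: returns the robots.txt body for the current environment.
    // The production rule is a placeholder; list your own private paths here.
    public static string RobotsFor(bool isProduction) =>
        isProduction
            ? "User-agent: *\nDisallow: /blog/private\n"
            : "User-agent: *\nDisallow: /\n";

    public void Configure(IApplicationBuilder app, IWebHostEnvironment env)
    {
        // Branch the pipeline for /robots.txt and answer it with plain text.
        app.Map("/robots.txt", robots => robots.Run(async context =>
        {
            context.Response.ContentType = "text/plain";
            await context.Response.WriteAsync(RobotsFor(env.IsProduction()));
        }));

        // ...the rest of the pipeline (static files, routing, Razor Pages) follows.
    }
}
```

It is compact, but the rules now live in compiled C#, so changing them means a rebuild.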

The last option seemed the simplest and meant I could change the cshtml without a full recompile, so I created a single Razor Page, RobotsTxt.cshtml. Then I used the built-in Environment TagHelper to conditionally render parts of the file. Note also that I explicitly set the MIME type to text/plain and don't use a Layout page, since the page has to be standalone.

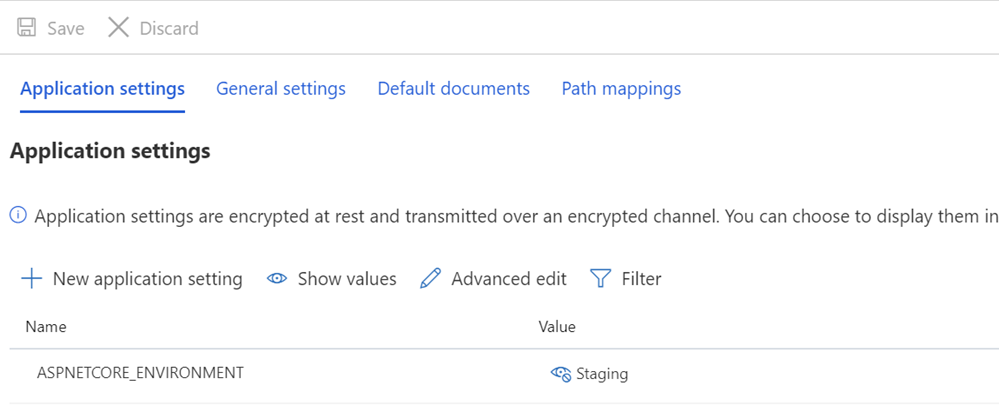

```cshtml
@page
@{
    // No layout: the output must be nothing but the robots.txt text.
    Layout = null;
    // Serve as plain text, not HTML.
    this.Response.ContentType = "text/plain";
}
# /robots.txt file for http://www.hanselman.com/
User-agent: *
<environment include="Development,Staging">Disallow: /</environment>
<environment include="Production">Disallow: /blog/private
Disallow: /blog/secret
Disallow: /blog/somethingelse</environment>
```

Then I make sure the ASPNETCORE_ENVIRONMENT variable is set correctly on my staging and/or production systems.
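To make the per-environment selection concrete, here is a plain C# model of what the page renders. It is a simplification, not the TagHelper's actual implementation: the hypothetical `RobotsModel` class only models the case-insensitive match of the current environment name against the comma-separated `include` list (as the Environment TagHelper documentation describes), and it ignores whitespace handling and the comment line.

```csharp
using System;
using System.Linq;

public static class RobotsModel
{
    // Simplified model of <environment include="...">: content renders when the
    // current environment name appears in the comma-separated list (case-insensitive).
    public static bool Includes(string includeList, string environmentName) =>
        includeList
            .Split(new[] { ',' }, StringSplitOptions.RemoveEmptyEntries)
            .Select(name => name.Trim())
            .Any(name => string.Equals(name, environmentName, StringComparison.OrdinalIgnoreCase));

    // Mirrors the two <environment> blocks of RobotsTxt.cshtml (comment line omitted).
    public static string Render(string environmentName)
    {
        var output = "User-agent: *\n";
        if (Includes("Development,Staging", environmentName))
            output += "Disallow: /\n";
        if (Includes("Production", environmentName))
            output += "Disallow: /blog/private\nDisallow: /blog/secret\nDisallow: /blog/somethingelse\n";
        return output;
    }
}
```

Two things fall out of this model. An environment listed in neither `include`, such as a hypothetical "QA", gets a bare `User-agent: *` with no Disallow at all, so crawlers are allowed everywhere. And because ASP.NET Core treats an unset ASPNETCORE_ENVIRONMENT as Production, a staging server missing that variable would serve the production rules, which is why checking it matters.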

I also want to point out how odd the spacing may look and how some of the text sits right up against the TagHelpers. Remember that a TagHelper's tag "disappears" (is removed) once it has done its job, but the whitespace around it remains. I want User-agent: * on its own line, with Disallow appearing immediately on the next line. The source might look prettier if each tag started on its own line, but then the generated file would be wrong, and I want the output to be correct. In Development or Staging, the relevant lines of the output look like this:

```text
User-agent: *
Disallow: /
```

This gives me a robots.txt at /robotstxt, but not at /robots.txt. See the problem? robots.txt is a (fake) file, so I need to map a route for the /robots.txt request to the Razor Page named RobotsTxt.cshtml.

Here I add RazorPagesOptions in my Startup.cs with a custom PageRoute that maps /robots.txt to /robotstxt. (I've always found this API annoying, because in my opinion the parameters should be (from, to), but the page name comes first and the route second. Watch out for that so you don't waste ten extra minutes like I just did.)

```csharp
public void ConfigureServices(IServiceCollection services)
{
    services.AddMvc()
        .AddRazorPagesOptions(options =>
        {
            // Page name first, route second: serve the /robotstxt page at /Robots.Txt.
            options.Conventions.AddPageRoute("/robotstxt", "/Robots.Txt");
        });
}
```

And that's it! Simple and clear.
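The snippet above uses the `AddMvc()` style of ASP.NET Core 2.x. On ASP.NET Core 3.0 or later, an equivalent registration might look like this sketch, which assumes the page lives at Pages/RobotsTxt.cshtml and that the app uses endpoint routing.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.Extensions.DependencyInjection;

public class Startup
{
    public void ConfigureServices(IServiceCollection services)
    {
        // AddPageRoute(pageName, route): the page comes first, the extra route second.
        services.AddRazorPages(options =>
            options.Conventions.AddPageRoute("/RobotsTxt", "/robots.txt"));
    }

    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();
        // Maps all Razor Pages, including the extra /robots.txt route added above.
        app.UseEndpoints(endpoints => endpoints.MapRazorPages());
    }
}
```

One caveat, assuming the old static file is still around: if wwwroot/robots.txt exists and `UseStaticFiles()` runs before routing, the static file middleware serves that file and the Razor Page never runs, so delete or rename the static copy.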

You could also add caching if you like, either in a larger middleware or even in the cshtml page, for example:

```csharp
context.Response.Headers.Add("Cache-Control", $"max-age=SOMELARGENUMBEROFSECONDS");
```

I'll leave that small optimization as an exercise for you, though.
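If you do want to take up that exercise, one possible sketch uses ASP.NET Core's typed header API instead of a hand-built header string. The `RobotsCaching` class and the one-day lifetime are hypothetical choices for illustration.

```csharp
using System;
using Microsoft.AspNetCore.Http;
using Microsoft.Net.Http.Headers;

public static class RobotsCaching
{
    // Hypothetical lifetime; choose one that matches how often your rules change.
    public static readonly TimeSpan MaxAge = TimeSpan.FromDays(1);

    // Sets a Cache-Control header marked public with max-age=86400 (one day),
    // letting the framework format the header value.
    public static void Apply(HttpResponse response)
    {
        response.GetTypedHeaders().CacheControl = new CacheControlHeaderValue
        {
            Public = true,
            MaxAge = MaxAge
        };
    }
}
```

From the Razor Page you could call `RobotsCaching.Apply(this.Response);` inside the `@{ }` block next to the ContentType line; in middleware, pass `context.Response` before writing the body.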

UPDATE: After I finished, I found this robots.txt middleware and NuGet package on GitHub. I'm still happy with my code and don't mind not having an external dependency (external dependencies aren't a big deal to me either way), but it's nice to keep in mind for future, more complex work and projects.


Source: https://habr.com/ru/post/457184/
