How a frame is rendered in A Plague Tale: Innocence

Foreword

As in my other studies, let's start with an introduction. Today we will look at the latest game from the French developer Asobo Studio. I first saw this game last year, when a colleague shared a 16-minute gameplay trailer with me. The “rats versus light” mechanic caught my attention, but I was not particularly eager to play the game. After its release, however, many people started saying that it looks as if it were made with Unreal Engine, although it is not. I was curious to see how its rendering works and how much the developers were inspired by Unreal in general. I was also interested in how the swarm of rats is rendered, because it looks very convincing in the game and is one of the key gameplay elements.

When I started trying to capture the game, I thought I would have to give up, because nothing worked. Although the game uses DX11, which almost all analysis tools now support, I could not get any of them to work. When I tried RenderDoc, the game crashed on launch, and the same happened with PIX. I still don’t know why this happens, but fortunately I managed to take several captures with NSight Graphics. As usual, I set all the settings to maximum and started looking for suitable frames to analyze.

Frame breakdown

Having taken a couple of captures, I decided to use one from the very beginning of the game for the frame analysis. There is not much difference between the captures, and this way I can also avoid spoilers.

As usual, let's start with the final frame:


The first thing I noticed was that the balance of rendering events in this game is completely different from what I had seen in other games. There are many draw calls, which is normal, but surprisingly few of them are used for post-processing. In other games, after the color is rendered, the frame passes through many stages to reach the final result, but in A Plague Tale: Innocence the post-processing stack is very small and optimized down to just a few draw / compute events.

The game starts building the frame by rendering a GBuffer with six render targets. Interestingly, all of these render targets (with one exception) use a 32-bit unsigned integer format instead of RGBA8 colors or other data-specific formats. This made things difficult, because I had to decode each channel manually using the NSight Custom Shader feature. I spent a lot of time figuring out which values are encoded in the 32-bit targets, but it is possible that I still missed something.
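To give an idea of what decoding such a target by hand involves, here is a minimal Python sketch of pulling bit fields out of a 32-bit unsigned texel. The helper names and the channel order (red in the lowest byte) are assumptions made for illustration; the actual bit layout used by the game is not known.

```python
def extract_bits(packed: int, offset: int, width: int) -> int:
    """Return `width` bits of `packed`, starting at bit `offset` (0 = lowest bit)."""
    return (packed >> offset) & ((1 << width) - 1)


def unpack_rgba8(packed: int) -> tuple:
    """Decode a 32-bit texel as four normalized 8-bit channels.

    Assumption: red is stored in the lowest byte and alpha in the highest.
    """
    return tuple(extract_bits(packed, shift, 8) / 255.0 for shift in (0, 8, 16, 24))
```

The same helper would cover a target that packs one 24-bit value and one 8-bit value, for example `extract_bits(texel, 0, 24)` and `extract_bits(texel, 24, 8)`, with the bit positions again being guesses.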

GBuffer 0

The first target contains some shading values in 24 bits, and some other values for hair in 8 bits.

GBuffer 1

The second target looks like a traditional RGBA8 target with a different material control value in each channel. As far as I can tell, the red channel is metalness (it is not quite clear why some leaves are marked with it), the green channel looks like roughness, and the blue channel is a mask of the main character. The alpha channel was not used in any of my captures.

GBuffer 2

The third target also looks like RGBA8, with albedo in the RGB channels. The alpha channel was completely white in every capture I made, so I don’t quite understand what this data is for.

GBuffer 3

The fourth target is curious, because in all my captures it is almost completely black. The values look like a mask of some of the vegetation and all hair / fur. Perhaps this is somehow related to translucency.

GBuffer 4

The fifth target is probably some kind of normal encoding, because I haven't seen normals anywhere else, and the shader seems to sample normal maps and then write to this target. That said, I did not figure out how to visualize them properly.

Depth of GBuffer 5

Mask from GBuffer 5

The last target is the exception, because it uses a 32-bit floating-point format. The reason is that it contains the linear depth of the image, while the sign bit encodes another mask that again covers hair and part of the vegetation.
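Packing a one-bit mask into the sign of a depth value works because linear depth is never negative, so the sign bit is otherwise unused. The following Python sketch shows the idea on raw 32-bit patterns. The function names and the convention that a set sign bit means "masked" are assumptions; the capture only shows that the sign carries a mask.

```python
import struct


def decode_depth_and_mask(raw_bits: int) -> tuple:
    """Decode a 32-bit float texel, given as its raw bit pattern.

    Returns (linear_depth, mask). Assumption: a set sign bit means the
    pixel belongs to the mask, and the magnitude is the linear depth.
    """
    mask = bool((raw_bits >> 31) & 1)
    # Clear the sign bit, then reinterpret the remaining bits as a float.
    magnitude_bits = raw_bits & 0x7FFFFFFF
    (depth,) = struct.unpack("<f", struct.pack("<I", magnitude_bits))
    return depth, mask


def encode_depth_and_mask(depth: float, mask: bool) -> int:
    """Inverse of decode_depth_and_mask, useful for round-trip checks."""
    (bits,) = struct.unpack("<I", struct.pack("<f", abs(depth)))
    return bits | (0x80000000 if mask else 0)
```

In a shader the same split would typically be a sign test plus `abs()`, which costs almost nothing compared with a separate mask target.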

Once the GBuffer is complete, the depth map is downsampled in a compute shader, and then the shadow maps are rendered (directional cascaded shadow maps from the sun and cubemap depth maps from point lights).
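As a rough illustration of the depth downsampling step, here is a Python sketch that halves a depth map by reducing each 2x2 block to a single texel. Keeping the farthest depth in each block is an assumption: the capture does not reveal which filter the game's compute shader uses, and taking the minimum would be just as plausible.

```python
def downsample_depth(depth: list) -> list:
    """Halve a depth map by reducing each 2x2 block to one texel.

    Assumption: the farthest (largest) linear depth of each block is kept.
    Width and height must both be even.
    """
    height, width = len(depth), len(depth[0])
    if height % 2 or width % 2:
        raise ValueError("expected even dimensions")
    return [
        [
            max(depth[y][x], depth[y][x + 1], depth[y + 1][x], depth[y + 1][x + 1])
            for x in range(0, width, 2)
        ]
        for y in range(0, height, 2)
    ]
```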

God rays

Once the shadow maps are done, the lighting can be computed, but first the god rays are rendered into a separate target.

SSAO

During the lighting stage, a compute shader is run to calculate SSAO.

Illuminated opaque geometry

Lighting from cubemaps and local light sources is then added. All these light sources, combined with the render targets described above, produce a lit HDR image.

Forward-rendered elements

Forward-rendered elements are added on top of the lit opaque geometry, but in this scene they are not very noticeable.

With all the color accumulated, we are almost done: only a few post-processing operations and the UI remain.

The color is downsampled in a compute shader and then upsampled again to create a very nice, soft bloom effect.
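The downsample-then-upsample idea behind this kind of bloom can be sketched in a few lines of Python on a single-channel image. Everything specific here is an assumption for illustration: the brightness threshold, the number of levels, the box filter, the nearest-neighbor upsampling and the intensity are not taken from the game.

```python
def downsample(img: list) -> list:
    """Average each 2x2 block (dimensions must be even)."""
    return [
        [
            (img[y][x] + img[y][x + 1] + img[y + 1][x] + img[y + 1][x + 1]) / 4.0
            for x in range(0, len(img[0]), 2)
        ]
        for y in range(0, len(img), 2)
    ]


def upsample(img: list) -> list:
    """Double the resolution by repeating each texel (nearest neighbor)."""
    out = []
    for row in img:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def bloom(hdr: list, threshold: float = 1.0, levels: int = 2,
          intensity: float = 0.5) -> list:
    """Add a blurred copy of the bright parts of `hdr` back onto the image.

    Width and height must be divisible by 2 ** levels.
    """
    # Keep only the energy above the (assumed) brightness threshold.
    blurred = [[max(v - threshold, 0.0) for v in row] for row in hdr]
    for _ in range(levels):
        blurred = downsample(blurred)
    for _ in range(levels):
        blurred = upsample(blurred)
    return [
        [h + intensity * b for h, b in zip(hdr_row, blur_row)]
        for hdr_row, blur_row in zip(hdr, blurred)
    ]
```

Going down and back up the resolution chain spreads a bright pixel over a wide area at a fraction of the cost of a large blur kernel at full resolution, which fits the small number of events seen in the capture.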

After compositing all the previous results and adding camera dirt, color grading and finally tone mapping, we get the scene colors. Overlaying the UI gives us the image from the beginning of the article.
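For readers unfamiliar with the last step, tone mapping compresses unbounded HDR values into the displayable range. The Python sketch below uses the simple Reinhard curve purely as a stand-in; which operator the game actually applies is not known from the capture.

```python
def reinhard(hdr_value: float) -> float:
    """Map a non-negative HDR value into [0, 1) with the curve x / (1 + x)."""
    if hdr_value < 0.0:
        raise ValueError("expected a non-negative HDR value")
    return hdr_value / (1.0 + hdr_value)


def tonemap_pixel(rgb: tuple) -> tuple:
    """Apply the stand-in tone mapping curve to each channel of one pixel."""
    return tuple(reinhard(channel) for channel in rgb)
```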

A couple of interesting things about rendering are worth mentioning:

- Instancing is used only for certain meshes (seemingly only for vegetation). All other objects are rendered in separate draw calls.

- Objects seem to be roughly sorted from front to back, with some exceptions.

- It seems that the developers made no effort to batch draw calls by material parameters.
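The front-to-back ordering mentioned above can be sketched as a plain sort by distance from the camera. The `DrawCall` type and its fields are hypothetical; the sketch only shows the ordering idea, not how the engine implements it.

```python
from dataclasses import dataclass


@dataclass
class DrawCall:
    name: str          # hypothetical label for the object being drawn
    view_depth: float  # distance from the camera along the view direction


def sort_front_to_back(draws: list) -> list:
    """Order opaque draw calls nearest first.

    Drawing near objects first lets the depth test reject hidden pixels
    of later objects before they are shaded.
    """
    return sorted(draws, key=lambda draw: draw.view_depth)
```

Sorting purely by depth tends to interleave materials, which would be consistent with the lack of material batching observed in the capture.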

Rats

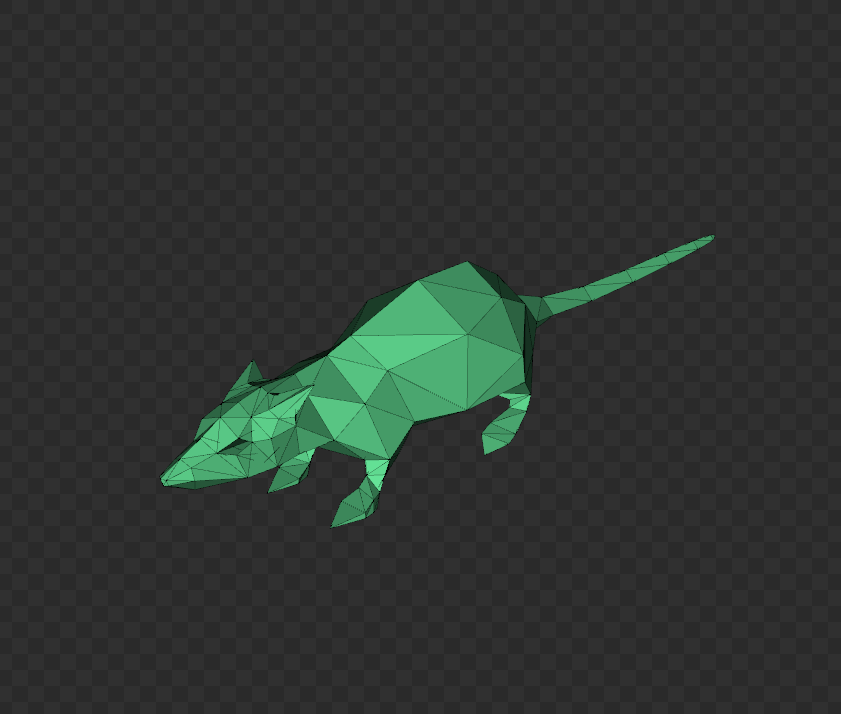

As I said at the beginning of the article, one of the reasons I wanted to study this game was the way the swarm of rats is rendered. The solution disappointed me somewhat: it seems to be done by brute force. Here I use screenshots from another scene in the game, but I hope they contain no spoilers.

As with other objects, there seems to be no instancing for the rats, unless they reach the distance at which they switch to the last level of detail (LOD). Let's see how it works.

LOD0

LOD1

LOD2

LOD3

The rats have 4 LODs. Interestingly, at the third level the tail is folded against the body, while at the fourth there is no tail at all. This probably means that animations are active only for the first two levels. Unfortunately, NSight Graphics does not seem to have the tools to verify this.

Rats without instancing.

Rats with instancing.

In the scene captured above, the following numbers of rats are rendered:

- LOD0 - 200

- LOD1 - 200

- LOD2 - 1258

- LOD3 - 3500 (with instancing)

This suggests that there is a hard limit on the number of rats that can be rendered at the first two LODs.
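To put these counts in perspective, the short Python sketch below adds them up. It assumes that every rat at a non-instanced LOD costs exactly one draw call, which matches the observation that non-instanced objects are drawn separately but was not verified call by call.

```python
# Rat counts per LOD, taken from the capture described above.
RATS_PER_LOD = {"LOD0": 200, "LOD1": 200, "LOD2": 1258, "LOD3": 3500}
INSTANCED_LODS = {"LOD3"}  # only the last LOD is drawn with instancing


def summarize(rats_per_lod: dict, instanced_lods: set) -> tuple:
    """Return (total rats, rats drawn individually, rats drawn instanced).

    Assumption: each individually drawn rat corresponds to one draw call.
    """
    total = sum(rats_per_lod.values())
    individual = sum(
        count for lod, count in rats_per_lod.items() if lod not in instanced_lods
    )
    return total, individual, total - individual
```

Under that assumption, `summarize(RATS_PER_LOD, INSTANCED_LODS)` gives 5158 rats in total, of which 1658 are drawn one by one and 3500 are instanced.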

In the capture I took, I could not identify any logic that assigns rats to particular LODs. Sometimes rats close to the camera are low-detail, and sometimes barely visible rats are highly detailed.

Conclusion

A Plague Tale: Innocence is very interesting in terms of rendering. Its results certainly impressed me, and they serve the gameplay very well. As with any proprietary engine, it would be great to hear a more detailed analysis from the developers themselves, especially since I was not able to confirm some of my theories. I hope my article eventually reaches someone at Asobo Studio and they see that people are interested in this.

Source: https://habr.com/ru/post/456614/
