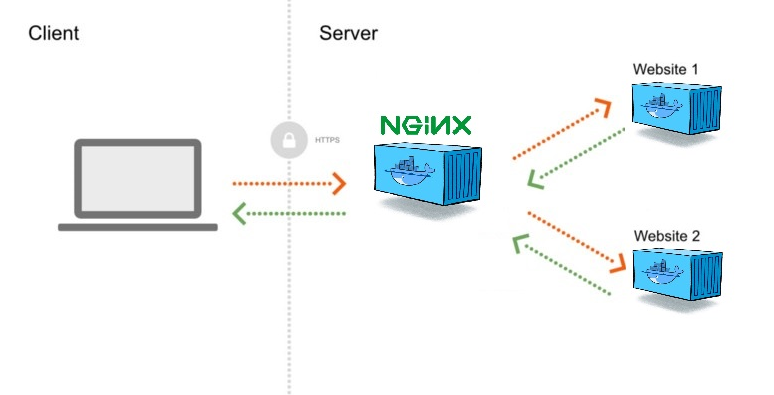

One of the hundreds of ways to publish multiple production projects on one server

When sites become a little more than one, and the resources of one server are more than enough, the question arises how not to overpay and pack everything into one virtual machine of the most attractive service , given that someday our applications will grow into a large-scale distributed network - we must lay a highload seed.

Described below will most likely be useful to those who are just starting to walk with confident steps in their craft.

From the preview it can be seen, the entire current architecture, it is quite simple, based on the docker, which includes

- Nginx container that looks outside and proxies all requests

- Lots and lots of our applications, respectively enclosed in containers

- Manage the whole process

Set up the environment

sudo apt update sudo apt install docker.io mkdir /home/$USER/app Let's deploy our applications

I will add a small blot, for those who are still not familiar with docker, all application containers will be started without access to the external environment, this is very convenient - it greatly increases security, access to them will be done only by proxying traffic through nginx. You can also launch individual hosts for your needs, for example, with MariaDB or Mongo and access them via local IP.

The first will be on nodejs with ssl connected

mkdir /home/$USER/app/web-one.oyeooo.com mkdir /home/$USER/app/web-one.oyeooo.com/ssl nano /home/$USER/app/web-one.oyeooo.com/index.js nano /home/$USER/app/web-one.oyeooo.com/package.json const fs = require("fs"), https = require("https"), express = require("express"), app = express(), port = 443 let options = { key: fs.readFileSync("ssl/web-one.oyeooo.com.key"), cert: fs.readFileSync("ssl/web-one.oyeooo.com.crt") } https.createServer(options, app).listen(port, function(){ console.log("Express server listening on port " + port); }) app.get("/", function (req, res) { res.writeHead(200) res.end("Oyeooo") }) { "name": "oyeooo", "version": "1.0.0", "description": "", "main": "index.js", "directories": { "lib": "lib" }, "scripts": { "start": "node index.js" }, "author": "", "license": "ISC", "dependencies": { "express": "^4.17.1" } } We get SLL and put the certificates in the folder /home/app/web-one.oyeooo.com/ssl

The second is a simple apache static application.

mkdir /home/$USER/app/web-two.oyeooo.com nano /home/$USER/app/web-two.oyeooo.com/index.html <!DOCTYPE html> <html> <head> <title>Welcome to me!</title> </head> <body> <h1>Oyeooo!</h1> <p><em>Thank you for using habr.</em></p> </body> </html> Create a network

docker network create --subnet=172.18.0.0/24 oyeooo Now run the containers

sudo docker run --net oyeooo --ip 172.18.0.2 --name web-one -v /home/$USER/app/web-one.oyeooo.com:/home/app -it node bash cd /home/app npm i npm start ctrl + q + p

sudo docker run --net oyeooo --ip 172.18.0.3 --name web-two -d -v /home/$USER/app/web-two.oyeooo.com:/usr/local/apache2/htdocs httpd ctrl + q + p

Go to nginx-proxy

mkdir /home/$USER/app/nginx mkdir /home/$USER/app/nginx/conf mkdir /home/$USER/app/nginx/ssl mkdir /home/$USER/app/nginx/logs mkdir /home/$USER/app/nginx/logs/web-one.oyeooo.com mkdir /home/$USER/app/nginx/logs/web-two.oyeooo.com nano /home/$USER/app/nginx/conf/web-one.oyeooo.com.conf nano /home/$USER/app/nginx/conf/web-two.oyeooo.com.conf server { listen 80; server_name web-one.oyeooo.com; access_log /var/log/nginx/web-one.oyeooo.com/http-access.log; error_log /var/log/nginx/web-one.oyeooo.com/http-error.log; return 301 https://$host$request_uri; } server { listen 443 ssl; server_name web-one.oyeooo.com.conf; access_log /var/log/nginx/web-one.oyeooo.com/https-access.log; error_log /var/log/nginx/web-one.oyeooo.com/https-error.log; ssl_certificate /etc/nginx/ssl/web-one.oyeooo.com/web-one.oyeooo.com.crt; ssl_certificate_key /etc/nginx/ssl/web-one.oyeooo.com/web-one.oyeooo.com.key; ssl_session_timeout 5m; ssl_protocols TLSv1 TLSv1.1 TLSv1.2; location / { proxy_pass https://172.18.0.2/; proxy_http_version 1.1; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection 'upgrade'; proxy_set_header Host $host; proxy_cache_bypass $http_upgrade; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-NginX-Proxy true; client_max_body_size 512M; } } server { listen 80; server_name web-two.oyeooo.com; access_log /var/log/nginx/web-two.oyeooo.com/http-access.log; error_log /var/log/nginx/web-two.oyeooo.com/http-error.log; location / { proxy_pass http://172.18.0.3/; proxy_http_version 1.1; proxy_set_header Upgrade $http_upgrade; proxy_set_header Connection 'upgrade'; proxy_set_header Host $host; proxy_cache_bypass $http_upgrade; proxy_set_header X-Real-IP $remote_addr; proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for; proxy_set_header X-NginX-Proxy true; client_max_body_size 512M; } } Expand nginx:

sudo docker run --net oyeooo --ip 172.18.0.4 --name nginx -it -v /home/$USER/app/nginx/logs:/var/log/nginx -v /home/$USER/app/nginx/ssl:/etc/nginx/ssl -v /home/$USER/app/nginx/conf:/etc/nginx/conf.d -p 80:80 -p 443:443 nginx bash # conf ssl ls /etc/nginx/conf.d ls /etc/nginx/ssl # nginx service nginx start Environment deployed. Thank!

You can adjust our routes with conf files — create or delete and reload:

sudo docker exec -it nginx nginx -s reload If the article comes out of the sandbox - write crm to control the logic.

')

Source: https://habr.com/ru/post/456612/

All Articles