What is information?

The How Much Information study, conducted in 2009, showed that the amount of information a person consumes per week had grown fivefold since 1986: from 250 thousand words per week to 1.25 million! Since then the figure has grown considerably. The numbers that follow are even more striking: in 2018 there were 4.021 billion Internet users and 3.196 billion social network users. A modern person analyzes an incredible amount of information every day, using various schemes and strategies to process it and to make decisions that benefit him. Humanity has generated 90% of the world's information over the past two years. Rounding up, we now produce about 2.5 quintillion bytes (2.5 * 10 ^ 18 bytes) of new information per day. Divide that by the number of people alive today, and on average one person creates about 0.3 gigabytes of information per day.
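
The per-person figure is easy to check. The sketch below assumes a world population of about 7.6 billion (a round 2018 figure that the text does not state) and decimal gigabytes; the names are illustrative only.

```python
# Rough check of the per-person estimate quoted above.
BYTES_PER_DAY = 2.5e18       # new information per day, from the text
WORLD_POPULATION = 7.6e9     # assumption: approximate 2018 population
BYTES_PER_GB = 1e9           # decimal gigabyte

def gigabytes_per_person(total_bytes: float, people: float) -> float:
    """Average amount of data created per person, in gigabytes."""
    return total_bytes / people / BYTES_PER_GB

per_person = gigabytes_per_person(BYTES_PER_DAY, WORLD_POPULATION)
print(f"{per_person:.2f} GB per person per day")
```

With a slightly different population estimate the result shifts a little, but it stays around a third of a gigabyte.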

How much information does Homo sapiens (hereinafter Homo) take up? To keep things simple, computer science came up with a unit called the bit. A bit is the smallest unit of information. The file with this article takes up several kilobytes. Fifty years ago such a document would have filled the entire memory of the most powerful computer. An average book in digital form takes up a thousand times more space, which is already a megabyte. A high-quality photo from a good camera is about 20 megabytes. A single optical disc holds 40 times more. The interesting scales begin with gigabytes. Human DNA, all the information about us, is about 1.5 gigabytes. Multiply that by seven billion and you get 1.05 x 10 ^ 19 bytes. At today's rate of 2.5 * 10 ^ 18 bytes per day, we produce that much information in about four days. That many bytes would describe every person living now. And this is only data about the people themselves, without the interactions between them, without their interactions with nature and with the culture that humans created for themselves. How much would the figure grow if we added the variables and uncertainties of the future? Chaos is the right word.
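
The same kind of back-of-the-envelope check works for the DNA estimate. This sketch takes the figures in the text at face value (1.5 GB per genome, seven billion people, 2.5 * 10 ^ 18 bytes of new data per day) and should be read as an order-of-magnitude estimate, not a measurement.

```python
import math

DNA_BYTES_PER_PERSON = 1.5e9      # from the text: about 1.5 gigabytes
PEOPLE = 7e9                      # from the text: seven billion
BYTES_PRODUCED_PER_DAY = 2.5e18   # from the text: daily production of new data

total_dna_bytes = DNA_BYTES_PER_PERSON * PEOPLE
days_to_produce = total_dna_bytes / BYTES_PRODUCED_PER_DAY

print(f"All human genomes: {total_dna_bytes:.3g} bytes")
print(f"Produced in about {math.ceil(days_to_produce)} to {math.floor(days_to_produce)} days "
      f"({days_to_produce:.1f})")
```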


Information has an amazing property: even when it is absent, it is present. An example is needed here. There is a famous experiment in behavioral biology. Two cages stand opposite each other. In the first is a high-ranking monkey, the alpha male. In the second is a monkey of lower status, a beta male. Both monkeys can see each other. Now add a stimulus to the experiment: a banana is placed between the two cages. The beta male never dares to take the banana if he knows that the alpha male has seen it too, because he immediately senses the alpha male's aggression. Next, change the initial conditions slightly. The alpha male's cage is covered with an opaque cloth that blocks his view. Repeat everything as before, and the picture is completely different: the beta male walks up and takes the banana without the slightest remorse.

It all comes down to his ability to analyze: he knows that the alpha male did not see the banana being placed, so for the alpha male the banana simply does not exist. The beta male registered the absence of a signal (the alpha male got no sign that a banana had appeared) and took advantage of the situation. A doctor usually arrives at a specific diagnosis when the patient shows certain symptoms, but the sheer number of diseases, viruses and bacteria can confuse even an experienced physician. How can he reach an exact diagnosis without wasting time that may be vital for the patient? It's simple. He analyzes not only the symptoms the patient has but also those he does not have, which cuts the search time tenfold. If something fails to give a particular signal, that too carries information, usually of a negative kind, but not always. Analyze not only the signals that are present but also those that are absent.
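
The doctor's reasoning can be sketched as a filter over candidate diagnoses. The disease table below is a made-up toy, not medical data, and the function name is hypothetical. The only point is that listing an absent symptom shrinks the candidate set just as a present symptom does.

```python
# Hypothetical toy knowledge base: disease -> symptoms it typically causes.
DISEASES = {
    "flu": {"fever", "cough", "muscle ache"},
    "cold": {"cough", "runny nose"},
    "measles": {"fever", "rash", "cough"},
    "allergy": {"runny nose", "itchy eyes"},
}

def candidates(present: set, absent: set) -> list:
    """Keep diseases that explain every present symptom and predict none of the absent ones."""
    return sorted(
        name
        for name, symptoms in DISEASES.items()
        if present <= symptoms and not (absent & symptoms)
    )

only_present = candidates({"cough"}, set())        # uses present symptoms only
with_absent = candidates({"cough"}, {"fever"})     # the missing fever is a signal too

print(only_present)
print(with_absent)
```

In this toy table, knowing that there is no fever narrows three candidates down to one.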

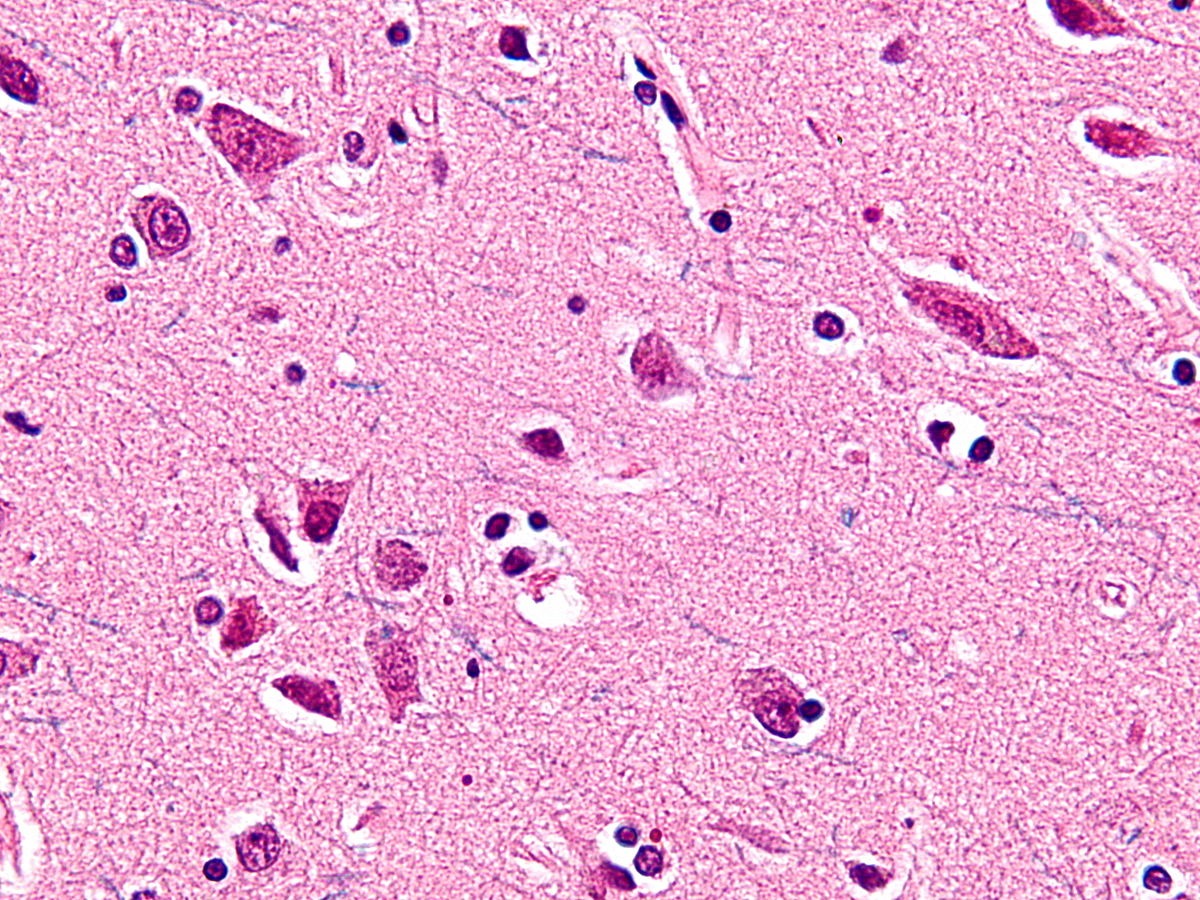

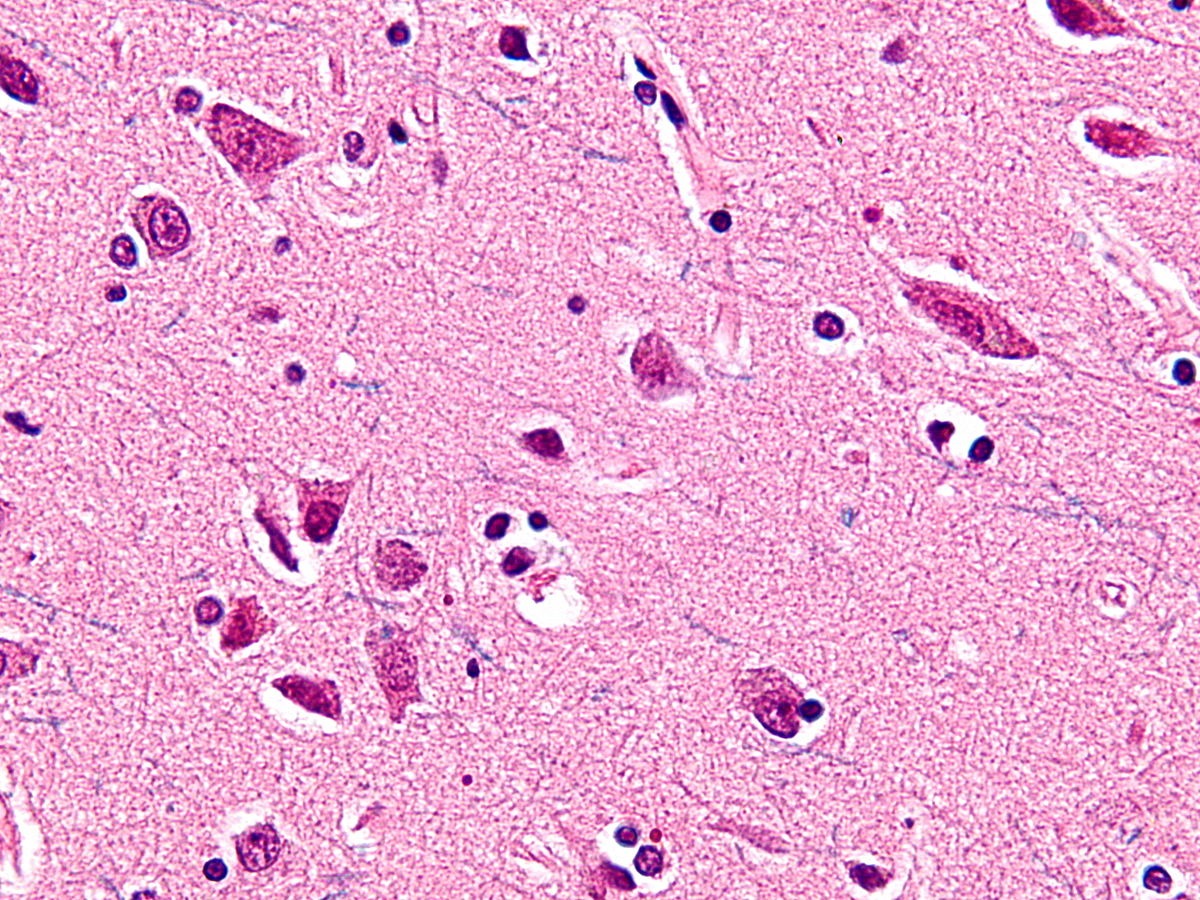

These figures raise a number of questions. How did we manage this? Can an organism or a society function normally under such conditions? How does information affect biological, economic and other kinds of systems? The amount of information we take in during 2019 will seem meager to our descendants in 2050. The species is already creating new schemes and patterns for working with information and studying its properties and effects. The phrase “in one year I lived a million years” is no longer a joke or an absurdity but reality. The amount of information a person creates affects social, economic, cultural and even biological life.

In the 1980s people dreamed of building a quantum computer to increase computing power. It was a dream of the species, and the discovery that promised this invention was supposed to usher in a new era. In January 2019 IBM unveiled what it called the first commercial quantum computer, and almost no one noticed. The news was discussed by an incredibly small number of people. It simply drowned in the informational abundance in which we now live.

The main focus of research in recent years has been neuroscience, algorithms, mathematical models and artificial intelligence, which on the whole points to a search for ways to function normally in an information-rich environment: identifying patterns in the transfer of information, the factors that influence it, its properties and capabilities. In 1929 von Economo neurons were described; they are found only in highly social animals. Group size correlates directly with brain size: the larger the group, the larger the brain relative to the body. It is not surprising that von Economo neurons are found only in cetaceans, elephants and primates. They are responsible for transmitting large amounts of information in the brain.


This type of neuron is an obligatory neural adaptation in very large brains: it allows information to be processed and transmitted quickly along highly specific projections, and it evolved in connection with new social behaviors. The apparent presence of these specialized neurons only in highly intelligent mammals may be an example of convergent evolution. New information always generates new, qualitatively different patterns and relationships, and patterns are established only on the basis of information. A primate strikes the bone of a dead buffalo with a stone. One blow, and the bone breaks in two. Another blow, another crack. A third blow, and a few more fragments. The pattern is clear: a blow to the bone yields at least one new fragment. But are primates really that good at recognizing patterns? Take repeated intercourse and a birth that follows nine months later.

How long did it take to link these two events? For a long time childbirth was not associated with sexual intercourse between a man and a woman at all. In most cultures and religions the gods were responsible for the birth of new life. The exact date when this pattern was discovered has, unfortunately, not been established. It is worth noting, though, that there are still isolated hunter-gatherer societies that do not connect the two, and in which special rituals performed by a shaman are held responsible for births. Until the 1920s the main cause of infant mortality in childbirth was dirty hands. Unwashed hands and a dead child are another example of a non-obvious pattern.

Here is one more pattern that long remained hidden: blood types. Karl Landsteiner described blood groups in 1901 and received the Nobel Prize for the discovery in 1930. Before that, it was not understood that a person can only be given blood of a type compatible with the donor's. There are billions of such examples. The search for patterns is what the species does all the time: from the businessman who spots a pattern in people's behavior or needs and then earns on it for many years, to serious scientific research that makes it possible to predict climate change, human migration, the location of mineral deposits, the periodicity of comets, embryo development, the evolution of viruses and even the behavior of neurons in the brain. Of course, everything can be explained by the structure of the universe we live in and by the second law of thermodynamics, which says that entropy constantly increases, but that level is not suitable for practical purposes. We should choose a level closer to life: the level of biology and computer science.

What is information? In the popular view, information is knowledge about something regardless of the form in which it is presented, or whatever resolves uncertainty. In physics, information is a measure of the order in a system. In information theory the term is defined as follows: information is data, bits, facts or concepts, a set of values. All these definitions are blurry and imprecise, and what is more, wrong.

As proof, we put forward a thesis: information by itself is meaningless. What is the number “3”? Or the letter “A”? Just a symbol with no value assigned. But what is the number “3” in the blood type field? It is a value that will save a life. It already affects behavioral strategy. Here is an example taken to the point of absurdity that nevertheless keeps its force. Douglas Adams wrote “The Hitchhiker's Guide to the Galaxy”. In the book, a purpose-built supercomputer had to answer the Ultimate Question of Life, the Universe, and Everything. The answer arrived after seven and a half million years of continuous computation. Having checked the value for correctness many times, the computer concluded that the answer was “42”. These examples make it clear that information means nothing without the external environment in which it sits (the context). The number “2” can mean a number of monetary units, of people sick with Ebola, of happy children, or a grade for a person's knowledge of a subject. For further proof, let us turn to biology: plant leaves very often have a semicircular shape. At first they rise upward, widening, but past a certain inflection point they stretch downward, tapering. In DNA, the main carrier of information or values, there is no gene that encodes this downward pull after a certain point. A leaf bends downward because of gravity.
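
The dependence on context is easy to see on a computer, where the same bytes mean different things depending on how the reader is told to interpret them. The sketch below is only an illustration of that point; the three "contexts" (ASCII text, 32-bit integer, 32-bit float) are chosen arbitrarily.

```python
import struct

raw = bytes([0x33, 0x00, 0x00, 0x00])    # four bytes with no context attached

as_text = raw[:1].decode("ascii")         # context: ASCII text, first byte only
as_int = struct.unpack("<I", raw)[0]      # context: little-endian unsigned 32-bit integer
as_float = struct.unpack("<f", raw)[0]    # context: little-endian 32-bit float

print(repr(as_text), as_int, as_float)
```

Read as text the first byte is the character "3", read as an integer the four bytes are 51, and read as a float they are a vanishingly small positive number. Nothing in the bytes themselves says which reading is the right one.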

DNA itself, whether in plants, mammals or the already mentioned Homo sapiens, carries little information, if any. After all, DNA is a set of values in a particular environment. DNA mostly encodes transcription factors, things that have to be activated by a certain external environment. Place plant or human DNA in an environment with a different atmosphere or gravity, and the output will be a completely different product. That is why handing our DNA over to alien life forms for research would be a rather pointless exercise. It is quite possible that in their environment human DNA would grow into something even more terrifying than a two-legged, upright primate with an opposable thumb and ideas about equality.

Information is values / data / bits / matter in any form in continuous connection with an environment, system or context. Information does not exist without the factors of environment, system or context. Only in close connection with these conditions can information convey meaning. In the language of mathematics or biology, information does not exist without the external environment or system whose variables it affects. Information is always an appendage of the circumstances in which it moves. This article discusses the basic ideas of information theory and the work of Claude Shannon and Richard Feynman.

A distinctive feature of the species is the ability to create abstractions and build patterns, to represent some phenomena through others. We encode. Photons hitting the retina become images; vibrations of the air become sounds. We associate a certain sound with a certain picture. We interpret a chemical in the air, picked up by receptors in the nose, as a smell. Through drawings, pictures, hieroglyphs and sounds we can connect events and transmit information.


Such coding and abstraction should not be underestimated; it is enough to recall how strongly it affects people. Encodings can override biological programs: for the sake of an idea (a picture in the head that determines behavioral strategy) a person may refuse to pass copies of his genes on. Or recall the full power of the physical formulas that let the species send one of its own into space, of the chemical equations that help heal people, and so on. Moreover, we can encode what is already encoded. The simplest example is translation from one language to another: one code is represented in the form of another. The ease of transformation, the main factor in the success of this process, means it can be continued indefinitely. You can translate an expression from Japanese into Russian, from Russian into Spanish, from Spanish into binary, from binary into Morse code, then render it in Braille, then as computer code, and then send it as electrical impulses directly to the brain, which decodes the message. Quite recently the reverse was done as well: brain activity was decoded into speech.
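
A short chain of such re-encodings can be sketched in a few lines. The example below passes a message through Morse code and then through a string of bits, and decodes it back in reverse order. The Morse table is deliberately tiny and the helper names are hypothetical; it is a sketch of the idea, not a complete codec.

```python
# Deliberately tiny Morse table, enough for the demo message.
MORSE = {"S": "...", "O": "---", "E": ".", "T": "-"}
FROM_MORSE = {code: letter for letter, code in MORSE.items()}

def to_morse(text: str) -> str:
    return " ".join(MORSE[ch] for ch in text)

def from_morse(morse: str) -> str:
    return "".join(FROM_MORSE[code] for code in morse.split(" "))

def to_bits(text: str) -> str:
    """Represent the UTF-8 bytes of the text as a string of 0s and 1s."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))

def from_bits(bits: str) -> str:
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")

message = "SOS"
morse = to_morse(message)                # first encoding
bits = to_bits(morse)                    # encoding of the encoding
restored = from_morse(from_bits(bits))   # decode in reverse order

print(morse)
print(bits)
print(restored)
```

Each layer only works because sender and receiver share the same table, which is the same point the text makes about shared conventions.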

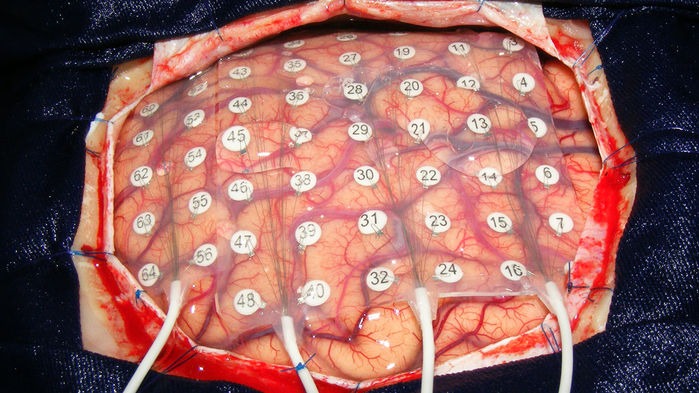

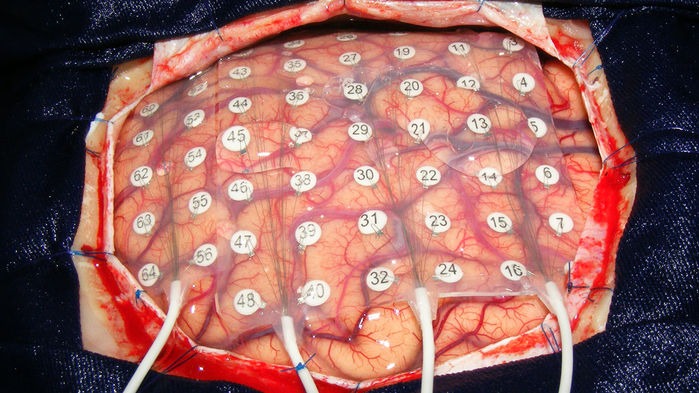

In the picture above, electrodes were slapped onto the head and read out all of your uniqueness.

Between forty and twenty thousand years ago, primitive people began to actively encode information in speech, gesture codes and rock art. Modern people looking at the first cave paintings try to identify (decode) their meaning; the search for meaning is another distinctive feature of the species. By recreating the context of particular markers or remnants of information, modern anthropologists try to understand the life of primitive people. The coding process found its quintessence in writing. Writing solved the problem of information being lost in transmission, not only across space but also across time. Hieroglyphs and numerals make it possible to encode calculations, words, objects and so on. But while the problem of loss was solved more or less effectively (provided, of course, that both participants in the communication use the same conventions for interpreting and decoding the same characters), handwritten and printed text did not cope with the time and speed of transmission. Radio and telecommunication systems were invented to solve the problem of speed. Two ideas can be considered the key stage in the development of information transfer. The first is digital communication channels, and the second is the development of the mathematical apparatus. Digital channels solved the problem of the speed of information transfer, and the mathematical apparatus solved the problem of its accuracy.

Any channel has a certain level of noise and interference, because of which information arrives distorted (the set of values and symbols is corrupted, the context is lost) or does not arrive at all. As technology developed, the amount of noise in digital communication channels decreased but never dropped to zero, and it grew with distance. The key problem of information loss in digital channels was identified and solved by Claude Shannon in 1948, in the same work that introduced the term “bit”. It reads as follows: “Let a source of messages have entropy H per second, and let the channel have capacity C. If H ≤ C, then there exists a coding of the information such that the source data can be transmitted through the channel with an arbitrarily small number of errors.”
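
Both quantities in the theorem can be computed for simple cases. The sketch below estimates the entropy of a source from symbol frequencies in a sample message and computes the capacity of a binary symmetric channel, a standard textbook channel model that is assumed here for illustration and is not mentioned in the text. The function names are hypothetical.

```python
import math
from collections import Counter

def entropy_bits_per_symbol(message: str) -> float:
    """Shannon entropy H = -sum(p * log2(p)) over the symbol frequencies of the message."""
    counts = Counter(message)
    total = len(message)
    return -sum((n / total) * math.log2(n / total) for n in counts.values())

def bsc_capacity(p_flip: float) -> float:
    """Capacity, in bits per channel use, of a channel that flips each bit with probability p_flip."""
    if p_flip in (0.0, 1.0):
        return 1.0
    h2 = -p_flip * math.log2(p_flip) - (1 - p_flip) * math.log2(1 - p_flip)
    return 1.0 - h2

def reliable_coding_exists(h: float, c: float) -> bool:
    """The condition of the theorem: source entropy must not exceed channel capacity."""
    return h <= c

h = entropy_bits_per_symbol("aabb")   # two equally likely symbols
c = bsc_capacity(0.11)                # about half a bit per use survives the noise
print(h, round(c, 3), reliable_coding_exists(h, c))
```

For the comparison to be meaningful, H and C have to be expressed in the same units, for example bits per second.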


This formulation of the problem is what drove the rapid development of the science called “information theory”. The main problems it solves, and tries to solve, come down to the fact that digital channels, as mentioned above, are noisy, or, put differently: “no channel is absolutely reliable in transmitting information”. That is, information may be lost, distorted or filled with errors because of the environment's influence on the transmission channel. Claude Shannon put forward a number of theorems from which it follows that information can be transmitted practically without loss, that is, with an arbitrarily small probability of error, over most noisy channels. In effect, he allowed Homo sapiens to stop spending all its effort on improving the channels themselves. Instead, he proposed developing more efficient coding and decoding schemes and representing information as 0s and 1s. The idea can be extended to mathematical abstractions or to encoding in language.

An example shows how effective these ideas are. A scientist observes the behavior of quarks at the Large Hadron Collider. He enters his data into a table and analyzes it, expresses the pattern as formulas, and writes down the main trends as equations or mathematical models, together with the factors that influence the quarks' behavior. He needs to pass this data on without loss, and he faces a series of questions. Should he use a digital communication channel, send it through his assistant, or call and tell everything personally? Time is critically short and the information is needed urgently, so e-mail is ruled out. The assistant is an utterly unreliable communication channel, with a probability of noise close to one. He chooses a phone call as the channel.
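
The simplest way to see coding beat noise is a repetition code: send every bit three times and let the receiver take a majority vote. It is far less efficient than the codes Shannon's results promise, and it is used here only as a minimal illustration. The bit positions that the "noise" flips are chosen by hand so that the example is reproducible.

```python
def encode(bits: list, n: int = 3) -> list:
    """Repeat every bit n times."""
    return [b for b in bits for _ in range(n)]

def decode(received: list, n: int = 3) -> list:
    """Majority vote over each block of n received bits."""
    return [
        1 if sum(received[i:i + n]) > n // 2 else 0
        for i in range(0, len(received), n)
    ]

def flip(bits: list, positions: set) -> list:
    """Simulate channel noise by inverting the bits at the given positions."""
    return [b ^ 1 if i in positions else b for i, b in enumerate(bits)]

message = [1, 0, 1, 1]
sent = encode(message)
noisy = flip(sent, {1, 5, 9})     # one corrupted bit in three different blocks
decoded = decode(noisy)

print(sent)
print(noisy)
print(decoded == message)
```

One error per block is corrected, but two errors in the same block defeat the vote, which is why practical systems use stronger codes.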

How accurately can he reproduce the data table over the phone? If the table has one row and two columns, quite accurately. But what if it has ten thousand rows and fifty columns? Instead, he transmits the pattern, encoded as a formula. If he could transfer the table without loss, was sure that the other participant would arrive at the same laws, and time was not a factor, the question would be moot. But a law expressed as a formula takes less time to decode and is less prone to distortion and noise in transmission. Examples of such encodings will come up many times in the course of this work. A communication channel can be a disk, a person, paper, a satellite dish, a telephone, a cable through which signals flow, and so on.

Encoding addresses not only the problem of information loss but also the problem of its volume. With coding you can reduce dimensionality and reduce the amount of information. After reading a book, the probability of retelling it without loss of information tends to zero, unless you have savant syndrome. By encoding, or formulating, the main idea of the book as a definite statement, we give a brief summary of it. The main task of coding is to shorten the original signal without losing information, so that it can be transmitted over a long distance and through time to another participant in such a way that the participant can decode it effectively. A web page, a formula, an equation, a text file, a digital image, digitized music and video are all vivid examples of encodings.
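
The scientist's choice can be illustrated with a toy table. Below, ten thousand hypothetical measurements that follow the law y = 3x + 2 exactly are replaced by the two coefficients of a fitted line, and the receiver rebuilds the table from them. Real measurements are noisy, so in practice the formula would be an approximation; the exact law and the JSON size comparison are assumptions made for the sake of the example.

```python
import json

def make_table(rows: int) -> list:
    """Hypothetical measurements that follow y = 3x + 2 exactly."""
    return [(x, 3 * x + 2) for x in range(rows)]

def fit_line(table: list) -> tuple:
    """Ordinary least squares fit of y = a*x + b."""
    n = len(table)
    sx = sum(x for x, _ in table)
    sy = sum(y for _, y in table)
    sxx = sum(x * x for x, _ in table)
    sxy = sum(x * y for x, y in table)
    a = (n * sxy - sx * sy) / (n * sxx - sx * sx)
    b = (sy - a * sx) / n
    return a, b

table = make_table(10_000)
a, b = fit_line(table)

table_size = len(json.dumps(table))                  # characters needed to send the table
formula_size = len(json.dumps({"a": a, "b": b}))     # characters needed to send the law
rebuilt = [(x, round(a * x + b)) for x in range(len(table))]

print(table_size, formula_size, rebuilt == table)
```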

The problems of transmission accuracy, distance, time and the coding process were solved to one degree or another, and this made it possible to create many times more information than a person can take in, and to find patterns that would otherwise go unnoticed for a long time. That leaves a number of other problems. Where do we store this much information? How do we store it? Modern coding and the mathematical apparatus, as it turns out, do not entirely solve the storage problem. There is a limit to how far information can be shortened and encoded, beyond which the values can no longer be decoded. As mentioned above, a set of values without a context or external environment no longer carries information. You can, however, encode the information about the external environment and the set of values separately, then combine them in the form of indices and decode the indices; but you still need to store the original set of values and the description of the external environment somewhere. Excellent ideas have been proposed and are now used everywhere, but they will be discussed in another article.
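
The limit on shortening shows up as soon as you try to compress data that has no pattern. The sketch below uses Python's standard `zlib` module as a stand-in for "modern coding" (an assumption for illustration; the text names no particular method): highly regular data shrinks dramatically, while pseudo-random bytes do not shrink at all.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Size in bytes after lossless compression at the highest zlib level."""
    return len(zlib.compress(data, 9))

regular = b"ab" * 5_000                                     # 10,000 bytes with an obvious pattern
rng = random.Random(0)                                      # fixed seed for reproducibility
noise = bytes(rng.getrandbits(8) for _ in range(10_000))    # 10,000 bytes with no pattern

print(len(regular), compressed_size(regular))
print(len(noise), compressed_size(noise))
```

Lossless compression can only remove redundancy. Once none is left, any further shortening means the original values can no longer be recovered.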

Looking ahead, here is an example of the fact that it is not necessary to describe the entire external environment; the conditions of its existence can be formulated as laws and formulas. What is science? Science is the highest degree of mimicry of nature. Scientific achievements are abstract embodiments of real-life phenomena. One solution to the problem of storing information was elegantly described in Richard Feynman's charming article “There's Plenty of Room at the Bottom: An Invitation to Enter a New Field of Physics”. The article is sometimes considered the work that launched nanotechnology. In it, the physicist draws attention to the amazing properties of biological systems as stores of information. Tiny, miniature systems hold an incredible amount of data about behavior, and the way they store and use information inspires nothing but admiration. As for how much information biological systems can store, the journal Nature estimated that all the information, values, data and laws of the world could be recorded in DNA storage weighing up to one kilogram. That is our entire contribution to the universe: one kilogram of matter. DNA is an extremely efficient structure for storing information, able to hold and use sets of values in enormous volumes. For anyone interested, there is an article on how to record photos of cats, or any information at all, in DNA storage, even Scriptonite songs (an extremely stupid use of DNA).
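
The basic idea of writing data into DNA can be sketched by mapping every two bits to one of the four nucleotides. The mapping below (00 to A, 01 to C, 10 to G, 11 to T) is an arbitrary assumption for illustration. Real DNA storage schemes add error correction and avoid problematic sequences, none of which is modeled here.

```python
BASES = "ACGT"   # assumption: 00 -> A, 01 -> C, 10 -> G, 11 -> T

def bytes_to_dna(data: bytes) -> str:
    """Encode each byte as four nucleotides, two bits per base, most significant bits first."""
    return "".join(
        BASES[(byte >> shift) & 0b11]
        for byte in data
        for shift in (6, 4, 2, 0)
    )

def dna_to_bytes(strand: str) -> bytes:
    """Decode a strand produced by bytes_to_dna back into bytes."""
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)

strand = bytes_to_dna(b"cat")
print(strand)
print(dna_to_bytes(strand))
```

At two bits per base, one byte takes four nucleotides, which gives a feel for why so much data fits into so little matter.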


Feynman draws attention to how much information is encoded in biological systems, and to the fact that in the course of their existence they not only encode information but also change the structure of matter on that basis. If until then all the proposed ideas had been about encoding a set of values or information as such, after this article the question became one of encoding the external environment inside individual molecules: encoding and changing matter at the level of atoms, enclosing information in them, and so on. For example, he proposes making connecting wires a few atoms in diameter. That in turn would increase the number of components in a computer millions of times over, and such an increase would qualitatively improve the computing power of future intelligent machines. Feynman, as one of the creators of quantum electrodynamics and a participant in the development of the atomic bomb, understood very well that encoding matter is nothing fantastic but a normal process in observable reality.

He even stresses that physics does not forbid building objects atom by atom. In the article he also compares human and machine activity, noting that any member of the species easily recognizes human faces, whereas for the computers of the time the task was beyond their computing power. He asks a number of important questions, from “what prevents us from making an ultra-small copy of something?” to “does a computer differ from the human brain only in the number of its constituent elements?”, and describes the mechanisms and main problems of creating something of atomic size.

Modern researchers estimate the number of neurons in the brain at about 86 billion. Naturally, no computer of Feynman's time came anywhere near that number of elements, and as it turned out, it does not need to. Still, Richard Feynman's work pushed the idea of information downward, to where there is plenty of room. The article was published in 1960, after the appearance of Alan Turing's “Computing Machinery and Intelligence”, one of the most cited works of the species. Comparing human and computer activity was therefore in fashion, and this was reflected in Feynman's article.

Thanks to the physicist's direct contribution to physics, the cost of storing data falls every year, cloud technologies are developing at a crazy pace, a quantum computer has been built, and we write data to DNA storage and practice genetic engineering, which proves once again that matter can be changed and encoded. In the next article we will talk about chaos, entropy, quantum computers, spiders, ants, hidden Markov models and category theory. There will be more math, punk rock and DNA. To be continued.

How much information does Homo sapiens occupy? (hereinafter Homo). For simplicity in computer science came up with a term called bits. A bit is the minimum unit of information. The file with this work takes several kilobytes. Such a document fifty years ago would have occupied all the memory of the most powerful computer. The average book in the digital version takes up a thousand times more space and this is already a megabyte. High-quality photo on a powerful camera - 20 megabytes. One digital disk is 40 times larger. Interesting scales begin with gigabytes. Human DNA, all of the information about us is about 1.5 gigabytes. Multiply this by seven billion and get 1.05 x 10 ^ 19 bytes. In general, this amount of information in modern conditions we can produce in 10 days. This number of bits will describe all the people living now. And this is only data about the people themselves, without interactions between them, without interactions with nature and culture, which man himself created for himself. How much will this figure increase if you add variables and uncertainties of the future? Chaos will be the right word.

')

Information has an amazing property. Even when it is not, it is. And here you need to give an example. In behavioral biology is a famous experiment. Two cells stand opposite each other. In the 1st monkey of high rank. Alpha male. In the 2nd cage, the monkey is lower in status, beta male. Both monkeys can watch their counterpart. Add an influence factor to the experiment. Between the two cells put a banana. A beta male never dares to take a banana if he knows that the alpha male has also seen this banana. Because he immediately feels all the aggression of the alpha male. Next, slightly change the initial conditions of the experiment. The alpha male cell is covered with an opaque fabric to deprive it of its view. Repeating all that made before the picture becomes completely different. A beta male without any remorse comes up and takes a banana.

It all comes down to his ability to analyze. He knows that the alpha male did not see the banana being placed, so for the alpha male the banana simply does not exist. The beta male analyzed the absence of a signal about the banana in the alpha male and took advantage of the situation. A doctor usually arrives at a specific diagnosis when the patient shows certain symptoms. But the sheer number of diseases, viruses and bacteria can confuse even an experienced doctor. How can he reach an exact diagnosis without losing time that may be vital to the patient? It is simple. He analyzes not only the symptoms the patient has but also the ones the patient does not have, which cuts the search time tenfold. If something fails to give a particular signal, that also carries information: as a rule of a negative kind, but not always. Analyze not only the signals that are present but also the ones that are absent.
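The doctor's reasoning can be sketched in a few lines of Python. This is a toy illustration, not a diagnostic method: the condition names, the symptom sets and the `candidates` helper are all hypothetical and chosen only to show how an absent signal narrows the search.

```python
# Hypothetical knowledge base: each condition maps to the symptoms it always produces.
CONDITIONS = {
    "condition A": {"fever", "cough"},
    "condition B": {"fever", "rash"},
    "condition C": {"fever", "cough", "rash"},
}

def candidates(present, absent):
    """Return conditions consistent with the observed and the explicitly absent symptoms."""
    return sorted(
        name
        for name, symptoms in CONDITIONS.items()
        if present <= symptoms and not (absent & symptoms)
    )

# Using only the symptom that is present leaves every condition on the table.
only_present = candidates({"fever"}, set())

# Adding the knowledge that a rash is absent removes two of the three candidates.
with_absent = candidates({"fever"}, {"rash"})

print(only_present)
print(with_absent)
```

The second call uses no new positive observation, yet the list of candidates shrinks. That is the sense in which a missing signal is itself information.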

The figures and problems listed above raise a number of questions. How did we manage to get here? Can an organism or a society function normally under such conditions? How does information affect biological, economic and other kinds of systems? The amount of information we take in during 2019 will seem meager to our descendants in 2050. The species is already creating new schemes and patterns for working with information and studying its properties and effects. The phrase "I lived a million years in one year" is no longer a joke or an absurdity but reality. The amount of information a person creates affects social, economic, cultural and even biological life. In 1980, people dreamed of building a quantum computer to increase computing power. It was a dream of the species, an invention that was supposed to herald a new era. In early 2019, IBM presented the first commercial quantum computer, and almost no one noticed. The news was discussed by an incredibly small number of people. It simply drowned in the informational abundance in which we now live. In recent years research has focused on neuroscience, algorithms, mathematical models and artificial intelligence, which on the whole points to a search for ways to function normally in an information-rich environment. That search includes identifying the regularities of information transfer, the factors that shape its influence, and its properties and capabilities. In 1929, von Economo neurons were discovered, which are found only in highly social groups of animals. There is a direct correlation between group size and brain size: the larger the group of animals, the larger their brain relative to the body. It is not surprising that von Economo neurons are found only in cetaceans, elephants and primates. Von Economo neurons are responsible for transmitting large amounts of information within the brain.

Take a look at them while A. G. Lukashenko has not yet forbidden them to communicate

This type of neuron is an obligatory neural adaptation in very large brains. It allows information to be processed and transmitted quickly along very specific projections, and it evolved in connection with new social behaviors. The apparent presence of these specialized neurons only in highly intelligent mammals may be an example of convergent evolution. New information always generates new, qualitatively different patterns and relationships. Regularities are established only on the basis of information. A primate strikes the bone of a dead buffalo with a stone. One blow, and the bone breaks into two pieces. Another blow, and another crack. A third blow, and a few more fragments. The pattern is clear: a blow to the bone yields at least one new fragment. But are primates always so good at recognizing patterns? Consider repeated intercourse and a birth that arrives nine months later.

How long did it take to link these two events? For a long time, childbirth was not associated with sexual intercourse between a man and a woman at all. In most cultures and religions, the gods were responsible for the birth of new life. The exact date when this pattern was discovered has, unfortunately, not been established. It is worth noting, however, that there are still closed hunter-gatherer societies that do not connect these processes, and where special rituals performed by a shaman are held responsible for birth. Until 1920, the main cause of infant mortality during childbirth was dirty hands. Dirty hands and a dead child are another example of a non-obvious pattern. Here is one more pattern that remained implicit until the twentieth century: blood groups. In 1930, Landsteiner received the Nobel Prize for their discovery. Before it, nobody understood that a patient can only be given blood of a group that matches the donor's. There are billions of such examples. The search for patterns is what the species does constantly: from a businessman who finds a pattern in people's behavior or needs and then earns on it for many years, to serious scientific studies that make it possible to predict climate change, human migration, the location of mineral deposits, the periodicity of comets, the development of an embryo, the evolution of viruses and, at the very top, the behavior of neurons in the brain. Of course, everything can be explained by the structure of the universe we live in and by the second law of thermodynamics, according to which entropy constantly increases, but that level is not suitable for practical purposes. We should choose one closer to life: the level of biology and computer science.

What is information? According to the popular notion, information is any knowledge regardless of the form in which it is presented, or whatever resolves uncertainty. In physics, information is a measure of the orderliness of a system. In information theory, the term is defined as follows: information is data, bits, facts or concepts, a set of values. All these definitions are vague and imprecise and, moreover, wrong.

As proof, we put forward a thesis: information by itself is meaningless. What is the number "3"? What is the letter "A"? Just a symbol with no value assigned to it. But what is the number "3" in the blood group column? It is a value that will save a life. It already affects the strategy of behavior. Here is an example taken to the point of absurdity without losing its significance. Douglas Adams wrote "The Hitchhiker's Guide to the Galaxy". In that book, a computer was built to answer the ultimate question of life and the Universe: what is the meaning of life and the Universe? The answer arrived after seven and a half million years of continuous calculation. Having checked the value for correctness many times, the computer concluded that the answer was "42". These examples make it clear that information without the external environment in which it sits (the context) means nothing. The number "2" can mean a number of monetary units, of people sick with Ebola, of happy children, or a grade for a person's knowledge of a subject. For further proof, let us turn to the world of biology. The leaves of plants very often have the shape of an arc: at first they rise, widening, but after a certain inflection point they stretch downward, tapering. In DNA, the main carrier of information or values, there is no gene that encodes this downward pull after a certain point. The fact that a plant's leaf stretches downward is a trick of gravity.

DNA itself, in plants, in mammals and in the already mentioned Homo sapiens, carries little information, if any at all. After all, DNA is a set of values in a particular environment. For the most part DNA carries transcription factors, things that have to be activated by a particular external environment. Place plant or human DNA in an environment with a different atmosphere or gravity, and the output will be a completely different product. That is why handing our DNA to alien life forms for research purposes is a rather silly exercise. It is quite possible that in their environment human DNA would grow into something even more terrifying than an upright, two-legged primate with an opposable thumb and ideas about equality. Information is values, data, bits or matter in any form in continuous connection with an environment, system or context. Information does not exist without the factors of that environment, system or context. Only in close connection with these conditions is information capable of conveying meaning. In the language of mathematics or biology, information does not exist without the external environment or the systems whose variables it affects. Information is always an appendage of the circumstances in which it moves. This article discusses the basic ideas of information theory, the results of the intellectual work of Claude Shannon and Richard Feynman.

A distinctive feature of the species is the ability to create abstractions and build patterns, to represent some phenomena through others. We encode. Photons on the retina create images, and vibrations of the air are transformed into sounds. We associate a certain sound with a certain picture. We interpret a chemical compound in the air, detected by receptors in the nose, as a smell. Through drawings, pictures, hieroglyphs and sounds we can connect events and transmit information.

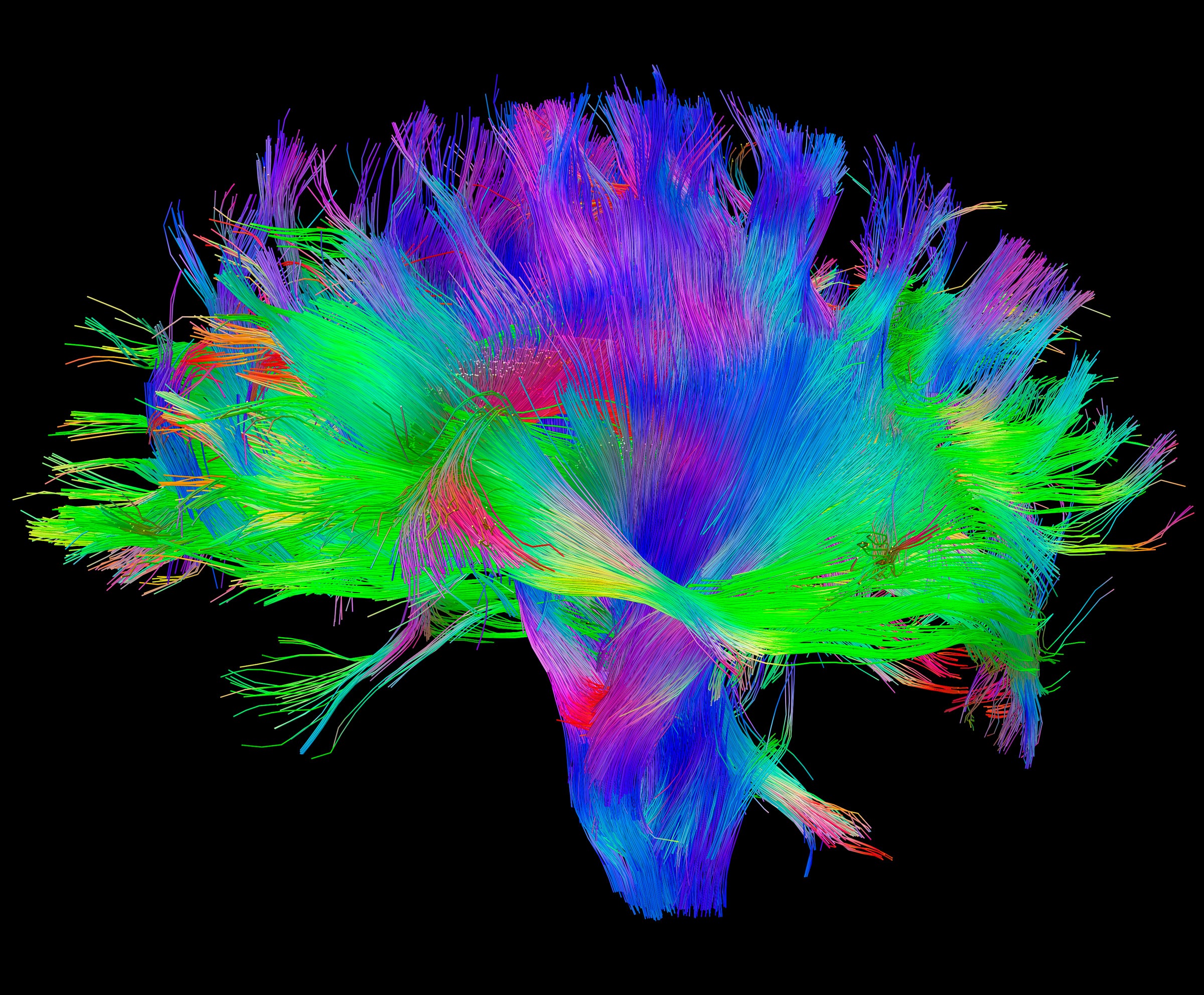

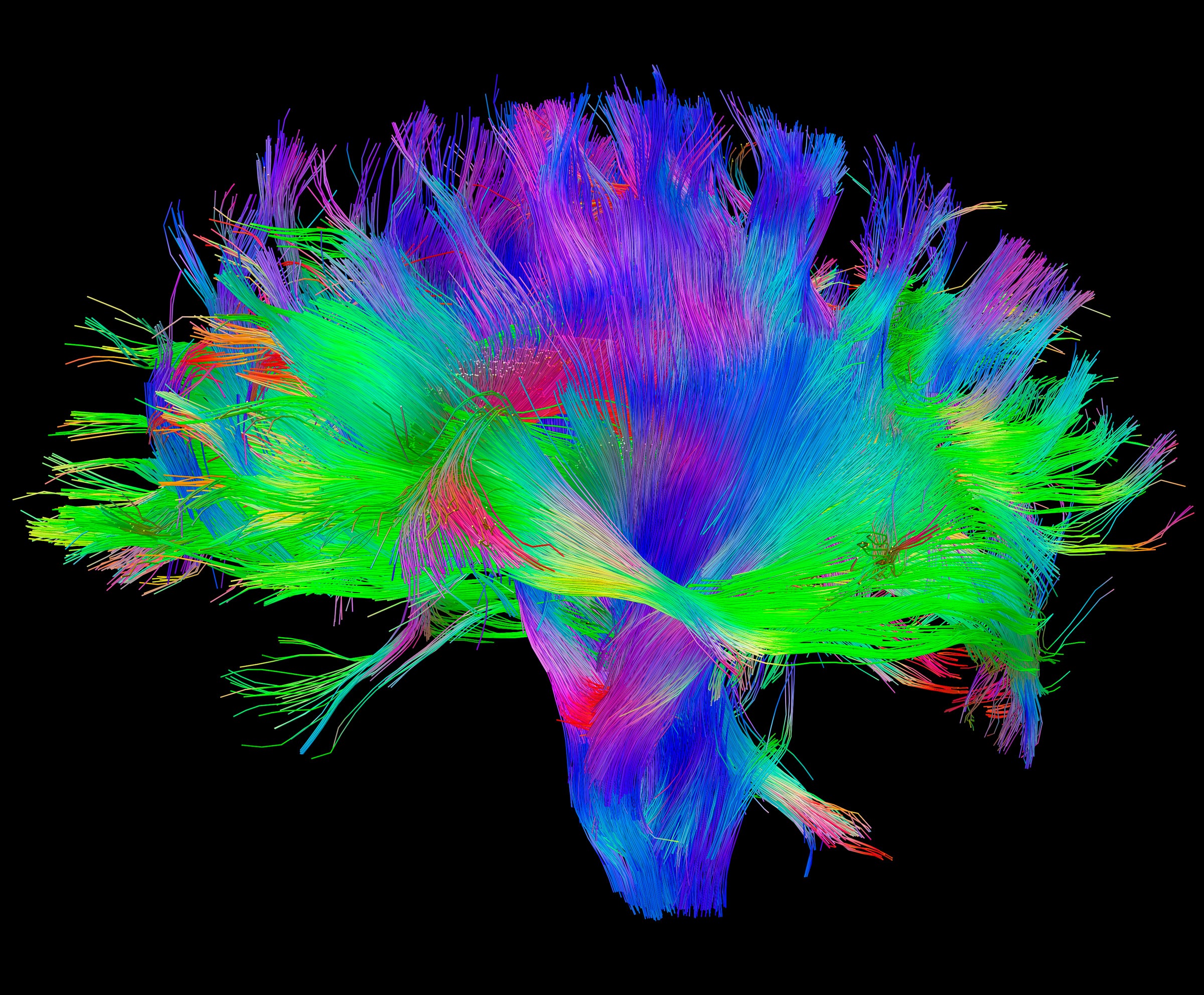

This is where your reality actually gets encoded

Such coding and abstraction should not be underestimated. It is enough to remember how strongly it affects people. Encodings can prevail over biological programs: for the sake of an idea (a picture in his head that determines his strategy of behavior), a person refuses to pass copies of his genes on. Or recall the full power of the physical formulas that allowed us to send a member of the species into space, the chemical equations that help heal people, and so on. Moreover, we can encode what is already encoded. The simplest example is translation from one language to another: one code is represented in the form of another. The simplicity of the transformation, the main factor in the success of this process, lets it go on indefinitely. You can translate an expression from Japanese into Russian, from Russian into Spanish, from Spanish into binary, from binary into Morse code, then present it in Braille, then as computer code, and then as electrical impulses sent directly to the brain, which decodes the message. Just recently the reverse was achieved: brain activity was decoded into speech.
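A small part of that chain, text to binary to Morse code and back, can be sketched in Python. The function names are illustrative assumptions. Only the Morse codes for the digits 0 and 1 are needed, because the Morse step encodes a string of binary digits.

```python
# International Morse code for the two digits used by a binary string.
MORSE_DIGITS = {"0": "-----", "1": ".----"}
FROM_MORSE = {code: digit for digit, code in MORSE_DIGITS.items()}

def text_to_bits(text):
    """Encode text as UTF-8 and write every byte as eight binary digits."""
    return "".join(f"{byte:08b}" for byte in text.encode("utf-8"))

def bits_to_morse(bits):
    """Re-encode a binary string as space-separated Morse digits."""
    return " ".join(MORSE_DIGITS[bit] for bit in bits)

def morse_to_bits(morse):
    """Decode space-separated Morse digits back into a binary string."""
    return "".join(FROM_MORSE[code] for code in morse.split(" "))

def bits_to_text(bits):
    """Group the binary digits into bytes and decode them as UTF-8."""
    data = bytes(int(bits[i:i + 8], 2) for i in range(0, len(bits), 8))
    return data.decode("utf-8")

original = "информация"
bits = text_to_bits(original)
morse = bits_to_morse(bits)
restored = bits_to_text(morse_to_bits(morse))
print(restored == original)
```

Each step changes the representation completely, yet nothing is lost, because both sides share the same conventions for every code in the chain.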

In the picture above they stuck in some electrodes and read out all your uniqueness

Between forty and twenty thousand years ago, primitive people began to actively encode information as speech, gesture codes and rock art. Modern people looking at the first cave paintings try to identify (decode) their meaning, and the search for meaning is another distinctive feature of the species. By recreating the context of particular markers or remnants of information, modern anthropologists try to understand the life of primitive people. The quintessence of the coding process is writing. Writing solved the problem of information loss during transmission not only in space but also in time. Characters and numerals make it possible to encode calculations, words, objects and so on. That problem was solved more or less effectively, provided, of course, that both participants in the communication use the same conventions for interpreting and decoding the same symbols. But written and printed text failed on the time and speed of transmission. Radio and telecommunication systems were invented to solve the speed problem. Two ideas can be considered the key stage in the development of information transfer. The first is digital communication channels, and the second is the development of the mathematical apparatus. Digital channels solved the problem of transmission speed, and the mathematical apparatus solved the problem of accuracy.

Any channel has a certain level of noise and interference, because of which information arrives distorted (the set of values and symbols is corrupted, the context is lost) or does not arrive at all. As technology developed, the amount of noise in digital communication channels decreased, but it was never reduced to zero, and it generally grew with distance. The key problem of information loss in digital communication channels was identified and solved by Claude Shannon in 1948, in the same work that introduced the term "bit". His theorem reads: "Let a source of messages have entropy H per second and let a channel have capacity C. If H ≤ C, then there exists a coding of the information such that the output of the source is transmitted through the channel with an arbitrarily small number of errors."
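The condition in Shannon's theorem can be checked numerically for a toy case. The sketch below assumes a hypothetical source that emits one of four symbols per second and a hypothetical binary symmetric channel used three times per second that flips 5% of its bits. The numbers and function names are illustrative and do not come from Shannon's paper.

```python
import math

def entropy_bits(probabilities):
    """Shannon entropy in bits: H = -sum(p * log2(p)) over outcomes with p > 0."""
    return -sum(p * math.log2(p) for p in probabilities if p > 0)

def bsc_capacity(flip_probability):
    """Capacity of a binary symmetric channel in bits per use: C = 1 - H(p)."""
    return 1.0 - entropy_bits([flip_probability, 1.0 - flip_probability])

# Assumed source: four symbols with unequal probabilities, one symbol per second.
source_entropy = entropy_bits([0.5, 0.25, 0.125, 0.125])

# Assumed channel: three uses per second, each bit flipped with probability 0.05.
capacity_per_second = 3 * bsc_capacity(0.05)

# The theorem promises a suitable code whenever H <= C.
reliable_coding_exists = source_entropy <= capacity_per_second

print(f"H = {source_entropy:.3f} bits/s, C = {capacity_per_second:.3f} bits/s")
print("reliable coding possible:", reliable_coding_exists)
```

The theorem only states that a good enough code exists. It does not say how to build it, which is why the later history of the field is largely a history of inventing concrete codes.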

And nobody invited you to play this game

This formulation of the problem triggered the rapid development of the science called "information theory". The main problems it solves, and tries to solve, come down to the fact that digital channels, as mentioned above, are noisy, or, put differently, "no channel is absolutely reliable when transmitting information". That is, information can be lost, distorted or filled with errors because of the environment's influence on the transmission channel. Claude Shannon put forward a number of theses from which it follows that information can be transmitted practically without loss or alteration, that is, with arbitrarily high accuracy, over most noisy channels. In effect, he allowed Homo sapiens to stop putting all its effort into improving the channels themselves. Instead, he proposed developing more efficient coding and decoding schemes and representing information as 0s and 1s. The idea can be extended to mathematical abstractions or to language coding. An example shows how effective it is. A scientist observes the behavior of quarks at the Large Hadron Collider. He enters his data into a table and analyzes it, expresses the pattern as formulas, and writes down the main trends as equations or as mathematical models of the factors influencing the quarks' behavior. He needs to pass this data on without loss, and he faces a series of questions. Should he use a digital communication channel, send the data through his assistant, or call and explain everything personally? Time is critically short and the information has to be transferred urgently, so e-mail is ruled out. The assistant is a thoroughly unreliable communication channel, with a probability of noise close to one. He chooses a phone call as the channel.

How accurately can he reproduce the data tables? If the table has one row and two columns, quite accurately. But what if it has ten thousand rows and fifty columns? Instead, he transmits the pattern encoded as a formula. If he could transfer the table without loss, were sure that the other participant in the communication would arrive at the same laws, and time were not a factor, the question would be meaningless. However, a law expressed as a formula takes less time to decode and is less exposed to transformations and noise during transmission. Examples of such encodings will be given many times in the course of this work. A communication channel can be a disk, a person, paper, a satellite dish, a telephone, a cable through which signals flow, and so on. Encoding addresses not only the problem of information loss but also the problem of its volume. With coding you can reduce the dimension and the amount of information. After reading a book, the probability of retelling it without losing information tends to zero, unless the reader has savant syndrome. By encoding, or formulating, the main idea of the book as a definite statement, we present a brief overview of it. The main task of coding is to shorten the formulation of the original signal without losing information, so that it can be transmitted over a long distance and across time to another participant in the communication in such a way that the participant can decode it effectively. A web page, a formula, an equation, a text file, a digital image, digitized music and a video are all vivid examples of encodings.
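The scientist's choice between dictating a table and dictating a formula can be made concrete. The sketch below assumes a hypothetical table of ten thousand integer measurements that happen to follow y = 3x + 7. The helper names and the JSON message format are illustrative assumptions.

```python
import json

def find_linear_law(table):
    """Return (slope, intercept) if every integer row obeys y = slope * x + intercept."""
    (x0, y0), (x1, y1) = table[0], table[1]
    slope = (y1 - y0) // (x1 - x0)
    intercept = y0 - slope * x0
    if all(y == slope * x + intercept for x, y in table):
        return slope, intercept
    return None  # no exact linear law: the table has to be sent as it is

def decode(message):
    """Rebuild the full table on the receiving side from the transmitted law."""
    return [
        (x, message["slope"] * x + message["intercept"])
        for x in range(message["rows"])
    ]

# Assumed observations: 10,000 rows produced by one underlying law.
table = [(x, 3 * x + 7) for x in range(10_000)]

slope, intercept = find_linear_law(table)
message = {"slope": slope, "intercept": intercept, "rows": len(table)}

raw_size = len(json.dumps(table))      # characters needed to send every row
law_size = len(json.dumps(message))    # characters needed to send the law
restored = decode(message)

print(raw_size, law_size, restored == table)
```

The message with the law is a few dozen characters long, while the raw table runs to well over a hundred thousand, and the receiver still rebuilds every row exactly. A shorter message also offers noise far fewer places to strike.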

The problems of transmission accuracy, distance, time and the coding process were solved to one degree or another. This made it possible to create many times more information than a person can perceive, and to find patterns that would otherwise have gone unnoticed for a long time. But other problems remain. Where should this amount of information be stored, and how? Modern coding and the mathematical apparatus, as it turned out, do not fully solve the storage problem. There is a limit to how far information can be shortened and encoded, beyond which the values can no longer be decoded. As mentioned above, a set of values without a context or external environment no longer carries information. You can, however, encode the information about the external environment and the set of values separately, combine them in the form of indices and later decode the indices. Even then, the original data about the set of values and the external environment still has to be stored somewhere. Great ideas have been proposed here and are now used everywhere, but they will be discussed in another article.
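The limit on shortening is easy to observe with a general-purpose compressor. The sketch below uses Python's standard `zlib` module on two assumed inputs of equal length: one made of an obvious repeating pattern and one made of random bytes.

```python
import os
import zlib

# Two inputs of the same length: a repeating pattern and patternless random bytes.
repetitive = b"ACGT" * 25_000
random_like = os.urandom(100_000)

packed_repetitive = zlib.compress(repetitive, 9)
packed_random = zlib.compress(random_like, 9)

print(len(repetitive), "->", len(packed_repetitive))
print(len(random_like), "->", len(packed_random))

# Lossless coding must always decode back to exactly the original bytes.
print(zlib.decompress(packed_repetitive) == repetitive)
print(zlib.decompress(packed_random) == random_like)
```

The patterned input shrinks to a tiny fraction of its size, while the random input does not shrink at all. Data with no regularities is already at its limit, and any scheme that made it shorter would make exact decoding impossible.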

Looking ahead, here is one example: it is not necessary to describe the entire external environment, because the conditions of its existence can be formulated as laws and formulas. What is science? Science is the highest degree of mimicry of nature. Scientific achievements are abstract embodiments of real phenomena. One solution to the problem of storing information was elegantly described in Richard Feynman's charming article "There's Plenty of Room at the Bottom: An Invitation to Enter a New Field of Physics". This article is sometimes considered the work that started the development of nanotechnology. In it, the physicist draws attention to the amazing features of biological systems as repositories of information. Tiny, miniature systems hold an incredible amount of data about behavior, and the way they store and use information can only inspire admiration. As for how much information biological systems can store, the journal Nature estimated that all the information, values, data and laws of the world could be recorded in DNA storage weighing up to one kilogram. That is the species' whole contribution to the universe: one kilogram of matter. DNA is an extremely efficient structure for storing information, one that allows huge sets of values to be stored and used. For anyone interested, there is an article that explains how to write photos of cats into DNA storage and, in general, any information, even songs by Skryptonite (an extremely stupid use of DNA).
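The basic idea of writing arbitrary data into DNA can be shown with a toy mapping of two bits to one base. This is only a sketch under simplifying assumptions: the A, C, G, T assignment below is arbitrary, and real DNA storage schemes add error correction and avoid problematic sequences such as long runs of one base, none of which is modeled here.

```python
# Assumed toy mapping: each pair of bits becomes one nucleotide.
BASES = "ACGT"  # 00 -> A, 01 -> C, 10 -> G, 11 -> T

def bytes_to_dna(data: bytes) -> str:
    """Encode every byte as four bases, most significant bit pair first."""
    bases = []
    for byte in data:
        for shift in (6, 4, 2, 0):
            bases.append(BASES[(byte >> shift) & 0b11])
    return "".join(bases)

def dna_to_bytes(strand: str) -> bytes:
    """Decode a strand produced by bytes_to_dna back into the original bytes."""
    if len(strand) % 4 != 0:
        raise ValueError("strand length must be a multiple of four bases")
    out = bytearray()
    for i in range(0, len(strand), 4):
        byte = 0
        for base in strand[i:i + 4]:
            byte = (byte << 2) | BASES.index(base)
        out.append(byte)
    return bytes(out)

strand = bytes_to_dna("cat".encode("utf-8"))
print(strand)
print(dna_to_bytes(strand).decode("utf-8"))
```

At two bits per base, one byte takes four bases, so the density of the medium comes from how small a base is, not from the cleverness of the code.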

Here is the code that proves you listen to garbage

Feynman draws attention to how much information is encoded in biological systems, and to the fact that in the course of their existence they not only encode information but also change the structure of matter on the basis of it. Until that point, all the proposed ideas had dealt only with encoding a set of values, or information as such. After this article, the question became one of encoding the external environment inside individual molecules: encoding and changing matter at the level of atoms, enclosing information in them, and so on. For example, he proposes creating connecting wires a few atoms in diameter. This in turn would increase the number of components in a computer millions of times, and such an increase would qualitatively improve the computing power of future intelligent machines. As one of the creators of quantum electrodynamics and a participant in the development of the atomic bomb, Feynman was well aware that encoding matter is not something fantastic but a normal process in observable reality.

He even emphasizes that physics does not prohibit building objects atom by atom. In the article he also compares human and machine activity, pointing out that any member of the species can easily recognize people's faces, whereas for the computers of that time this task was beyond their computing power. He asks a number of important questions, from "what prevents us from creating an ultra-small copy of something?" to "does a computer differ from the human brain only in the number of its constituent elements?". He also describes the mechanisms and the main problems involved in creating something of atomic size.

Modern estimates put the number of neurons in the brain at about 86 billion. Naturally, no computer then or now has come close to this value, and, as it turned out, it does not need to. Still, Richard Feynman's work started moving the idea of information downward, where there is plenty of room. The article was published in 1960, after the appearance of Alan Turing's "Computing Machinery and Intelligence", one of the most cited works of the species. Comparing human activity with that of a computer was therefore a trend, and it is reflected in Feynman's article.

Thanks to this physicist's direct contribution to physics, the cost of storing data falls every year, cloud technologies are developing at a crazy pace, a quantum computer has been created, and we are writing data into DNA storage and doing genetic engineering, which proves once again that matter can be changed and encoded. In the next article we will talk about chaos, entropy, quantum computers, spiders, ants, hidden Markov models and category theory. There will be more math, punk rock and DNA. The story continues in the next article.

Source: https://habr.com/ru/post/456276/
