KubeCon EU 2019: 10 key takeaways

The Datawire team and I recently returned from the excellent KubeCon and CloudNativeCon conference in Barcelona. We took part in six talks at KubeCon, handed out a bunch of cool (no false modesty) T-shirts at our booth, talked with dozens of people and attended some great sessions. There was so much of interest at KubeCon EU that I decided to write up my key takeaways.

Here are the conclusions I drew (in no particular order):

- Multi-platform and hybrid cloud are (still) popular.

- Technology bundling is gaining momentum.

- Service Mesh Interface (SMI) announcement: stay tuned.

- The (hazy?) future of Istio.

- Policy as code is moving up the stack.

- Cloud DevEx still has its problems.

- Companies are (still) in the early stages of adopting the technology.

- On-premises Kubernetes is real (but tricky).

- Treat clusters as cattle.

- The success of Kubernetes still depends on the community.

Multi-platform and hybrid cloud are (still) popular

There were several talks on multi-cloud at the conference (and on related concerns such as networking and security), and I also noticed that many of the introductory slides in end-user talks showed an infrastructure or architecture spanning at least two cloud providers. At the Datawire booth, we discussed the move to multi-cloud more often than at previous conferences.

The success of Kubernetes has greatly simplified multi-cloud strategy by providing an excellent abstraction for deployment and orchestration. Over the past two years, the functionality and API of Kubernetes have become much more stable, and many vendors have adopted the platform. Storage management and networking have improved, and there are plenty of open-source and commercial products and solutions in this space. In his talk “Debunking the Myth: Kubernetes Storage is Hard,” Saad Ali, a senior engineer at Google, gave an interesting overview of storage, and Eran Yanay from Twistlock presented a good overview of networking in “Kubernetes Networking: How to Write a CNI Plugin from Scratch.”

I especially enjoyed the various conversations about combining Azure with existing on-premises infrastructure. I recently wrote an InfoQ article about how a multi-platform architecture relates to application modernization. In it I described three approaches: extending the cloud into the data center, as with Azure Stack, AWS Outposts and GCP Anthos; providing a uniform deployment (orchestration) fabric across multiple vendors or clouds using a platform such as Kubernetes; and providing a uniform service (networking) fabric using an API gateway and service mesh, such as Ambassador and Consul.

We at Datawire are working hard on API gateways and, unsurprisingly, lean towards the flexible third approach. It lets you migrate gradually and safely from a traditional stack towards the cloud. Nick Jackson of HashiCorp and I gave a talk on this at KubeCon, “Securing Cloud Native Communication, From End User to Service.”

Technology bundling is gaining momentum

Many vendors now offer bundles of Kubernetes tooling and complementary technologies. The announcement of Rio MicroPaaS from Rancher Labs caught my attention; they have been releasing interesting things lately. I wrote InfoQ summaries of Submariner, which connects multiple clusters, and of k3s, a lightweight Kubernetes distribution. I also can't wait to explore the Supergiant Kubernetes Toolkit, “a suite of utilities for automating the deployment and management of Kubernetes in the cloud.”

In the enterprise space, bundling is extending to storage. A good example is VMware's Velero 1.0 (based on technology acquired with Heptio), which lets developers back up and migrate Kubernetes resources and persistent volumes.

Many other operators for storing and managing data in Kubernetes were on show at the conference, for example CockroachDB, ElasticCloud and StorageOS. Rob Szumski from Red Hat talked about the evolution of the Operator SDK and its community in his talk, and introduced Operator Hub. Operator support appears to be one of the main selling points of Red Hat's enterprise OpenShift bundle.

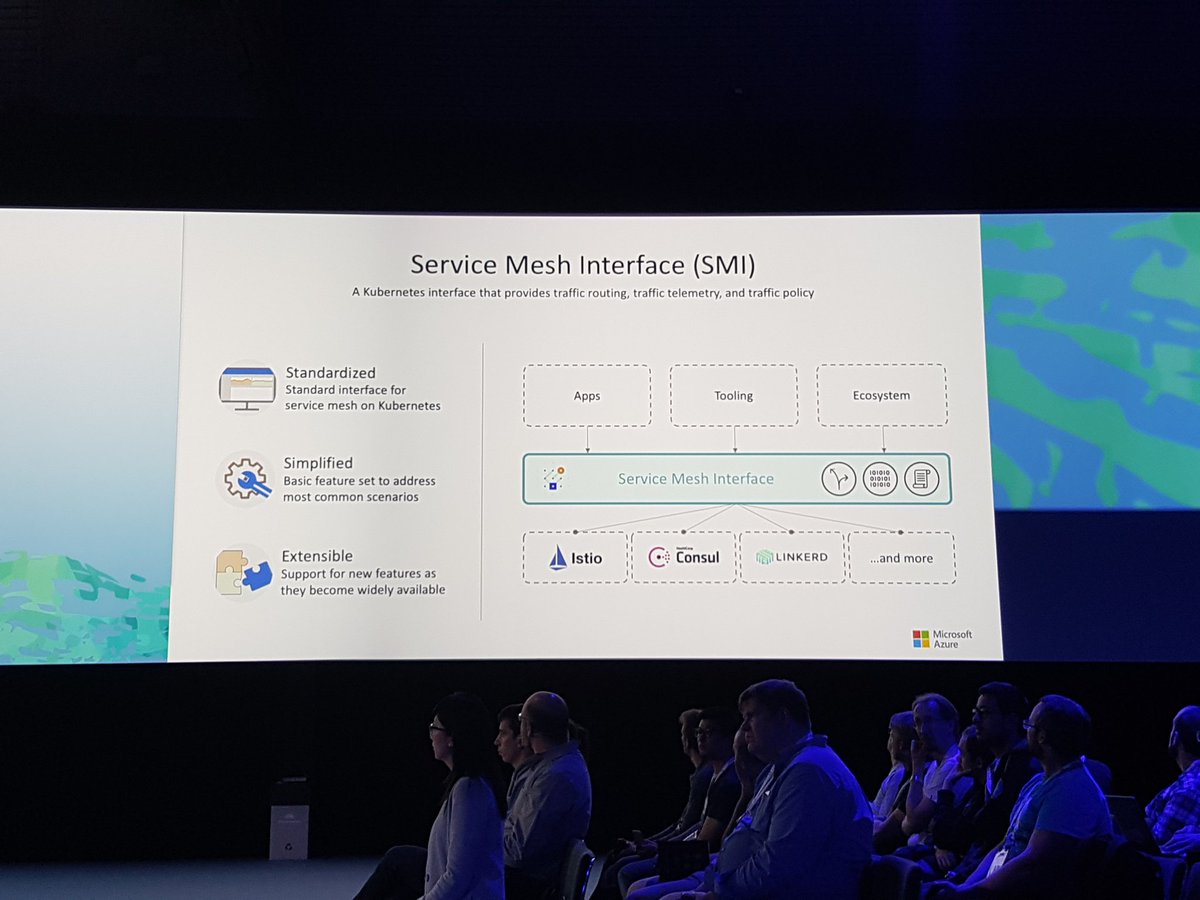

Service Mesh Interface (SMI) announcement: stay tuned

The announcement of the Service Mesh Interface (SMI) in a keynote by Gabe Monroy from Microsoft definitely made a lot of noise. Nobody would deny that service mesh has become very popular recently, and SMI aims to consolidate the core features into a standard interface and provide "a set of common portable APIs that will ensure the interoperability of different service mesh technologies, including Istio, Linkerd and Consul Connect."

In the demo, Gabe showed the main features: traffic policy, for applying policies such as authentication and encryption in transit between services (demonstrated with Consul and Intentions); traffic telemetry, for collecting key metrics such as error rates and latency between services (demonstrated with Linkerd and the SMI metrics server); and traffic management, for weighting and shifting traffic between different services (demonstrated with Istio and Weaveworks Flagger).
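To make the traffic management idea concrete, here is a minimal sketch of weighted traffic shifting between two versions of a service. It is only an illustration in plain Python, not the SMI API: the function `pick_backend` and the service names `reviews-v1` and `reviews-v2` are hypothetical and do not come from the keynote or the specification.

```python
import random
from collections import Counter


def pick_backend(weights, rng):
    """Pick one backend name with probability proportional to its weight."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


# Hypothetical canary rollout: roughly 90% of requests go to v1, 10% to v2.
split = {"reviews-v1": 90, "reviews-v2": 10}

rng = random.Random(42)  # seeded so repeated runs give the same distribution
counts = Counter(pick_backend(split, rng) for _ in range(10_000))
print(counts)
```

Shifting traffic then amounts to changing the weights over time (for example 90/10, then 50/50, then 0/100) while watching error and latency metrics, which is roughly the job a tool like Flagger automates.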

It is interesting to see an interface being defined in such a highly competitive space, but after visiting the SMI website and reading the specification, I suspect the abstraction could end up reduced to a lowest common denominator of functionality (this is always hard to avoid in such efforts, as I know from experience with the Java Community Process). The potential danger is that although everyone adopts the specification, vendors will deliver their most interesting features through custom extensions.

I discussed this with the Datawire team, and I think service mesh is a “winner takes all” market. In the end, SMI may draw some attention to itself, but another technology could simply appear and reap all the rewards (much as Kubernetes did with Mesos, Docker Swarm and others). Watch this space to see what happens.

The (hazy?) future of Istio

Although there was a lot of talk about service mesh, Istio (perhaps the best-known service mesh) provoked mixed feelings. Some people treat Istio and service mesh as synonyms (like Docker and containers) and see only the solution, not the problem; some like Istio's features; and some are not very happy with the technology.

There has been a lot of discussion about Istio benchmarking recently, and release 1.1 deliberately addressed some of the problems with the Mixer component. Interestingly, I spoke with several people who had been evaluating Istio for months (one team spent almost a year on it), and they say Istio is still very complex and resource-intensive. Some say that the availability of managed Istio on GKE has solved many of these problems, but not everyone can use GCP.

A Google representative was asked what will happen to Istio and whether it will become a CNCF project. The answer was polished but vague: Istio is open source today and anyone can work on it, and as for the CNCF, we will have to wait and see. So far, Linkerd is the only official service mesh project in the CNCF, although Envoy Proxy is also a CNCF project (and Istio, the Ambassador API Gateway and many other technologies are built on it). At our booth, many people asked about integrating Linkerd 2 with Ambassador (thanks to Oliver Gould, CTO of Buoyant, who mentioned Ambassador in his deep dive on Linkerd), and attendees clearly like how easy Linkerd is to use.

By the way, I was delighted when the Knative team showed in their talk “Extending Knative for Fun and Profit” that they had replaced Istio with Ambassador in Knative because Ambassador is easier to use (note their T-shirts in the photo, and read the related GitHub issue, "Removing Istio as a dependency").

Policy as code is moving up the stack

In our industry, everyone has become used to expressing policy as code for Identity and Access Management (IAM), iptables configuration, ACLs and security groups, but so far this has been done at a low level, close to the infrastructure. When I heard the Netflix team talk about using the Open Policy Agent (OPA) at KubeCon in Austin in 2017, I started thinking about how this project could be used to define policy as code.

At this KubeCon, I saw policy as code moving up through the layers, with more and more people discussing the use of OPA, for example in the talk by Rita Zhang and Max Smythe, and in more detail in Gareth Rushgrove's talk “Unit Testing Your Kubernetes Configuration with Open Policy Agent” (he has a knack for spotting projects with great potential).

I have long been following how HashiCorp's Sentinel defines policy at the infrastructure level, and the use of Intentions in the Consul service mesh now takes this approach even higher up the stack. With Intentions, policy can be defined at the service level: for example, service A may communicate with service B, but not with service C. When the Datawire team and I began working with HashiCorp on integrating Ambassador and Consul, we quickly saw the benefits of combining Intentions with mTLS (for service identity) and ACLs (to prevent spoofing and provide defense in depth).
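The service-level rule described above (A may talk to B, but not to C) can be sketched as data plus a small evaluation function. This is a simplified illustration, not Consul's API or its actual precedence rules: the `Intention` class, the `is_allowed` helper and the deny-by-default behaviour are all assumptions made for the example.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Intention:
    """A hypothetical service-to-service rule: may source call destination?"""
    source: str
    destination: str
    allow: bool


def is_allowed(intentions, source, destination, default_allow=False):
    """Return the decision of the first rule matching the pair, else the default."""
    for rule in intentions:
        if rule.source == source and rule.destination == destination:
            return rule.allow
    return default_allow  # assumption: deny when no rule matches


rules = [
    Intention("service-a", "service-b", allow=True),
    Intention("service-a", "service-c", allow=False),
]

print(is_allowed(rules, "service-a", "service-b"))  # True
print(is_allowed(rules, "service-a", "service-c"))  # False
```

Because the rules are plain data, they can be version-controlled, reviewed and unit-tested like any other code, which is the main appeal of policy as code.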

Cloud DevEx still has its problems

In his closing keynote, “Don't Stop Believin',” Bryan Liles, a senior engineer at VMware, spoke about the importance of the developer workflow (developer experience, DevEx), and the topic came up in several other talks. Kubernetes and its ecosystem are maturing well, but the inner development loop and the integration of delivery pipelines with Kubernetes definitely need improvement.

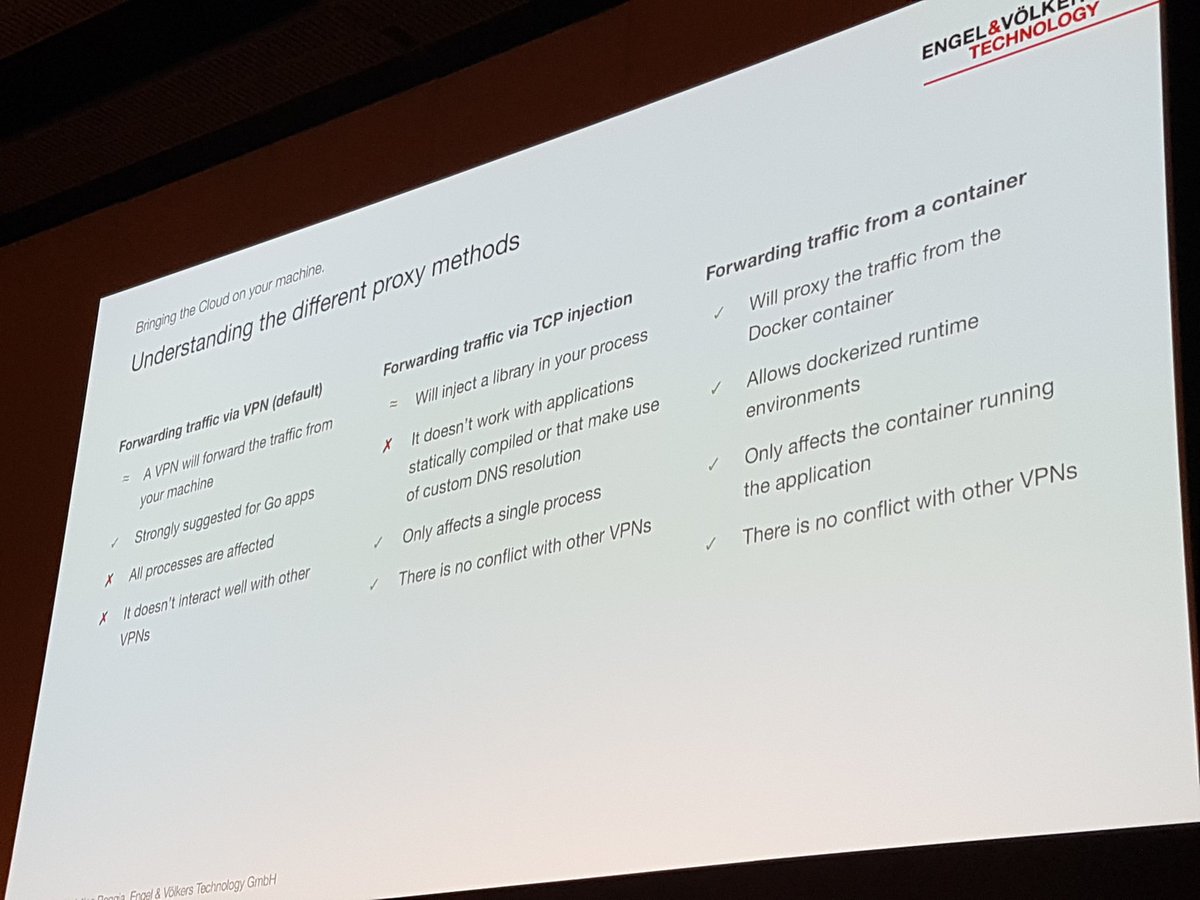

Christian Roggia covered this in his talk “Reproducible Development and Deployment with Bazel and Telepresence.” He explained how Engel & Volkers use Telepresence, a CNCF-hosted tool, in their inner development loop so that they do not have to build and push a container after every change.

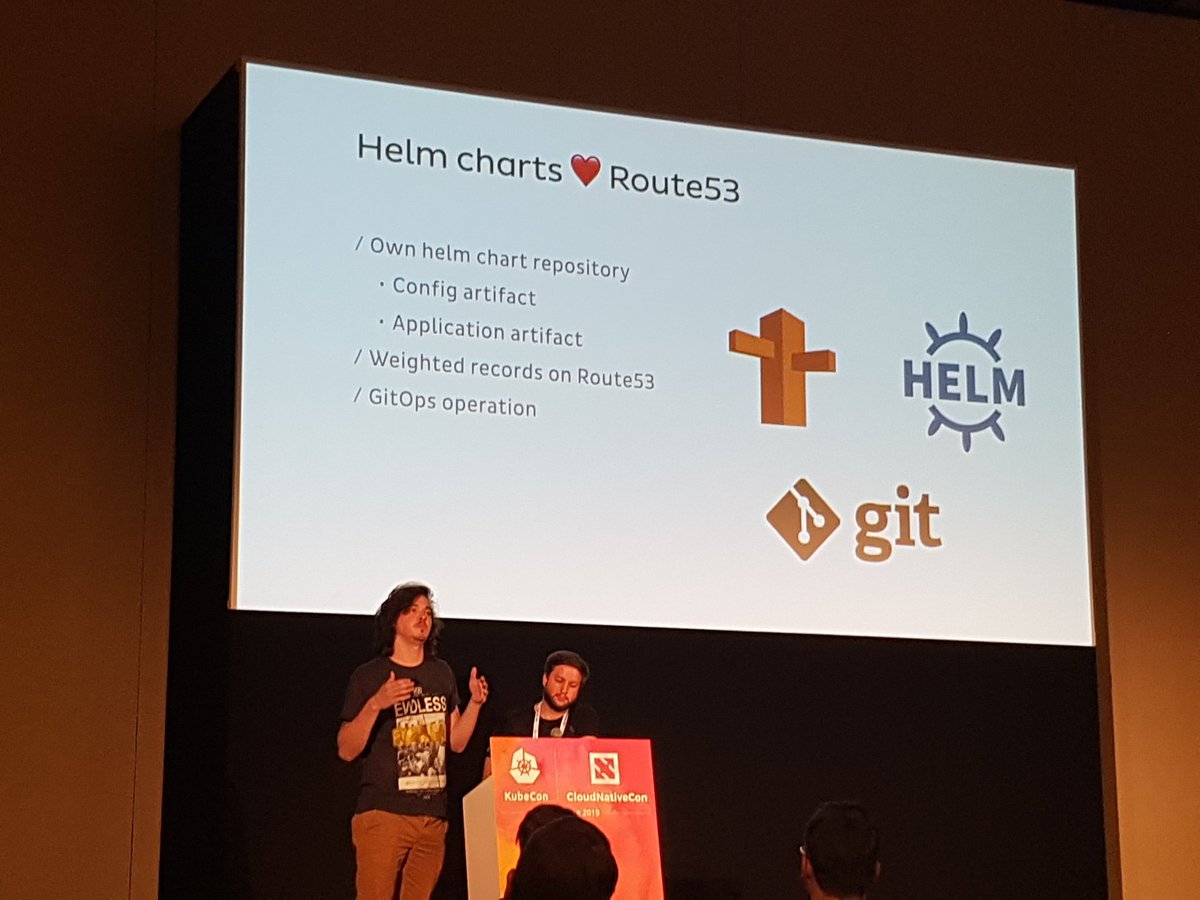

There was an interesting panel discussion with people from Weaveworks and CloudBees, “GitOps and Best Practices for Cloud Native CI/CD,” where continuous delivery was discussed in depth. GitOps is gaining real traction: both in my work at Datawire and at conferences, I often meet teams that use this approach for configuration and delivery. For example, Jonathan and Rodrigo mentioned it in their fascinating talk “Scaling Edge Operations at Onefootball with Ambassador: From 0 to 6,000 requests per second.”
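For readers new to GitOps, the core idea is that Git holds the desired state and an agent keeps reconciling the cluster towards it. The sketch below shows only the comparison step, in plain Python; it is not taken from any of the talks, and the function `plan_changes` and the example workloads are hypothetical.

```python
def plan_changes(desired, actual):
    """Compare desired state (from Git) with actual state (from the cluster)."""
    to_create = sorted(name for name in desired if name not in actual)
    to_update = sorted(
        name for name in desired
        if name in actual and desired[name] != actual[name]
    )
    to_delete = sorted(name for name in actual if name not in desired)
    return {"create": to_create, "update": to_update, "delete": to_delete}


# Hypothetical state: what is committed in Git versus what is running.
desired = {
    "api": {"image": "api:1.2", "replicas": 3},
    "web": {"image": "web:2.0", "replicas": 2},
}
actual = {
    "api": {"image": "api:1.1", "replicas": 3},
    "old-job": {"image": "job:0.9", "replicas": 1},
}

print(plan_changes(desired, actual))
# {'create': ['web'], 'update': ['api'], 'delete': ['old-job']}
```

A real GitOps operator would run this kind of comparison in a loop and apply the resulting changes, so that a merged pull request is the only way configuration reaches the cluster.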

Companies are (still) in the early stages of adopting the technology

This was the first KubeCon where, at the Datawire booth, I talked a lot with developers from large companies that are only just starting to explore cloud technology. Almost everyone had heard of Kubernetes or experimented with it, but many are still working out how to fit their legacy technology into the new world.

Cheryl Hung, the CNCF's director of ecosystem, hosted several panels, including “Transforming the Enterprise with Cloud Native,” and it was interesting to hear from early adopters such as Intuit. Laura Rehorst, in her talk “From COBOL to Kubernetes: A 250 Year Old Bank's Cloud-Native Journey,” described how ABN AMRO relied on planning and strategic resources.

We put the Ambassador API Gateway at the very center of our booth, so the questions we heard most often were about how a modern gateway for Kubernetes differs from existing full-lifecycle API management solutions. We are currently working on our next commercial product in this space, Ambassador Code, and it was interesting to discuss with developers their requirements and expectations around the new cloud paradigms.

On-premises Kubernetes is real (but tricky)

There were several announcements about on-premises Kubernetes installation, particularly for the enterprise, for example Kublr's VMware integration and kubeadm. Red Hat took part in all of the OpenShift discussions, and more than once I heard that people like the OpenShift abstractions, and that the potential lock-in is offset by the improved workflow and the SLAs that come with it.

But everyone repeated the same advice: do not install and maintain Kubernetes yourself unless it is absolutely necessary. And even if you think your company is special, think twice: almost any company that can use a public cloud can use Kubernetes as a service.

Treat clusters as cattle

In his interesting talk “How Spotify Accidentally Deleted All its Kube Clusters with No User Impact,” Spotify engineer David Xia described what they learned when they deleted several (!) production clusters. I won't recount the whole story (watch the video), but David's main message was to treat Kubernetes clusters as cattle. I think many of us have heard the phrase "Treat your servers as cattle, not pets." David argues that as the compute abstraction evolves (as we come to see the “Datacenter as a Computer”), we should apply the same principle and not get too attached to our infrastructure.

In their talk “The Co-Evolution of Kubernetes and GCP Networking,” Purvi Desai and Tim Hockin suggested that organizations should regularly destroy, recreate and migrate Kubernetes clusters so as not to become attached to them. The main argument: if you do not continually test your ability to rebuild clusters and migrate data, you may not manage it when a real problem arises. Think of it as chaos engineering for clusters.

The success of Kubernetes still depends on the community

In talks, over lunch and everywhere else, one of the key themes was the importance of community and diversity. On Thursday morning, Lucas Käldström and Nikhita Raghunath, in their keynote “Getting Started in the Kubernetes Community,” not only told two amazing stories but also demolished every excuse for not getting involved in open-source and CNCF projects.

Cheryl Hung, in her keynote “2.66 Million,” thanked the community for its huge contribution to the project and made a strong case for diversity and strong leadership. I was surprised to learn that more than 300 diversity scholarships had been funded by donations from various organizations within the CNCF.

KubeCon EU Conclusion

Thanks again to everyone we talked to in Barcelona. If you were unable to attend our presentations, here is a complete list:

- Building an Edge Control Plane with Kubernetes and Envoy: Flynn from Datawire talks about the evolution of the Ambassador API gateway and its architecture.

- Securing Cloud Native Communication, From End User to Service: Nick Jackson from HashiCorp and Daniel Bryant from Datawire discuss how to integrate the Ambassador API gateway with the Consul service mesh.

- Scaling Edge Operations at Onefootball with Ambassador: From 0 to 6,000 requests per second: Onefootball's SREs describe how they use Ambassador in production.

- Extending Knative for Fun and Profit: the Google Knative team talks about the Knative platform and shows how they used Ambassador.

- Telepresence: Fast Development Workflows for Kubernetes: a discussion of the past, present and future of Telepresence.

- Reproducible Development and Deployment with Bazel and Telepresence: Christian Roggia describes how Engel & Volkers use Telepresence to develop microservices.

We at Datawire are tracking these trends so that Ambassador continues to evolve and meet the changing needs of cloud developers. If you haven't used Ambassador lately, try our latest release, Ambassador 0.70, with built-in support for the Consul service mesh, support for custom resource definitions (CRDs), and many other features.

If you run into any problems with the upgrade, open an issue or find us on Slack. If you need a ready-to-go Ambassador installation with integrated authentication, rate limiting and support, try our commercial product, Ambassador Pro.

And if you enjoy working with Ambassador, tell us about it. Leave a comment under the article or tweet at @getambassadorio on Twitter.


Source: https://habr.com/ru/post/456144/
