How to create a great action for Google Assistant: life hacks from Just AI

The ecosystem around Google Assistant is growing incredibly fast. In April 2017 only 165 actions were available to users; today there are more than 4,500 in English alone. How diverse and interesting the Russian-speaking corner of the Google Assistant universe becomes depends on developers. Is there a formula for the "perfect action"? Why should code and content be kept separate from the script? What should you keep in mind when working on a conversational interface? We asked the team at Just AI, a developer of conversational AI technology, to share their life hacks for creating apps for Google Assistant. Several hundred actions have been built on Just AI's Aimylogic platform, and some of them are very popular: more than 140 thousand people have already played the game "Yes, my Lord". Dmitry Chechetkin, strategic project manager at Just AI, explains how to build the action of your dreams.

Shake, but don't mix: the roles of script, content and code

Any voice application consists of three components: a dialogue script, the content the action works with, and programmable logic, i.e. code.


The script is probably the most important part. It describes which phrases a user can say, how the action should react to them, which states it moves into and how it responds. I have been programming for 12 years, but when it comes to creating a conversational interface, I turn to visual tools.

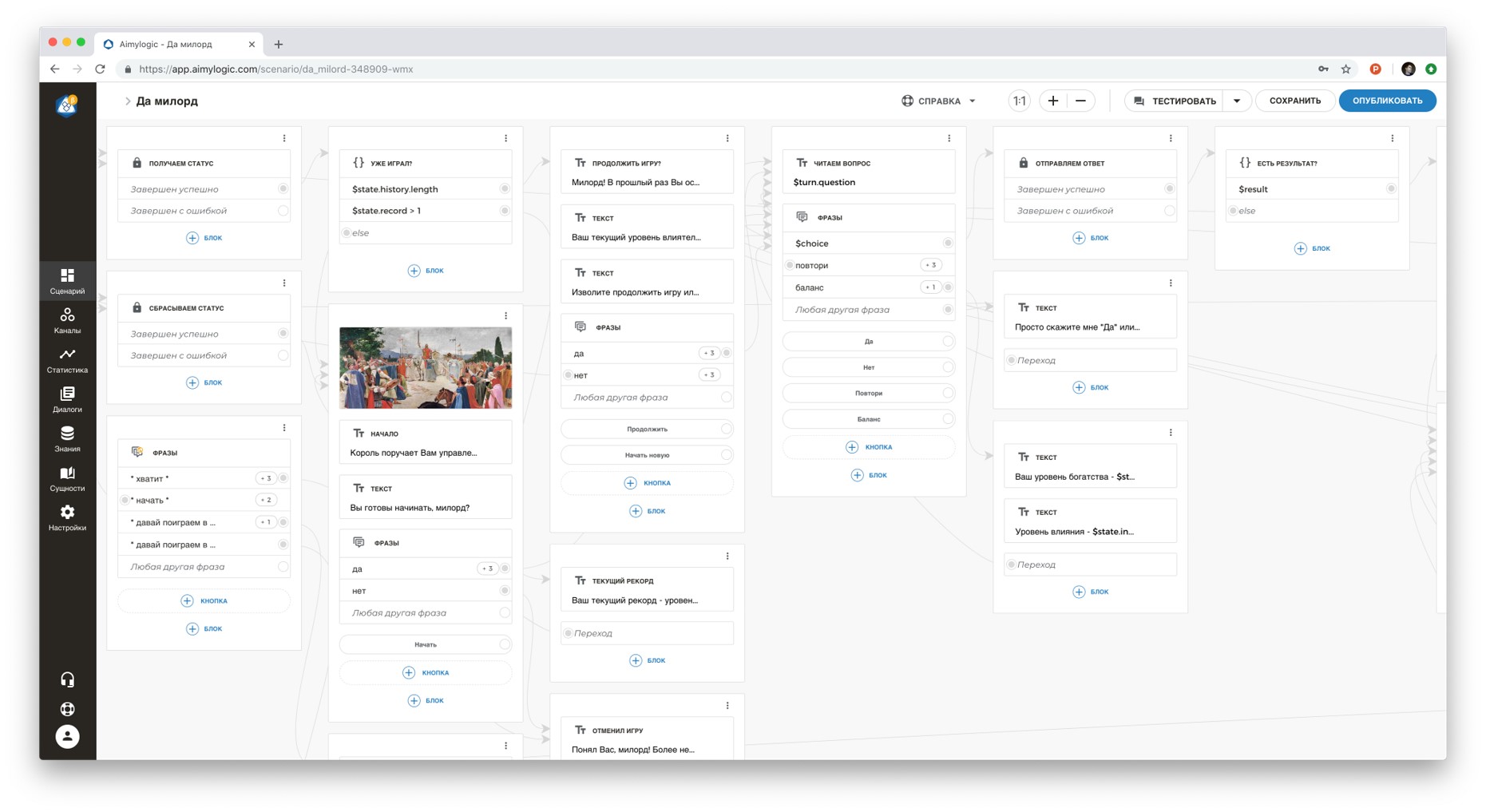

For a start, it doesn't hurt to sketch a simple outline of the script on paper. That way you decide what follows what in the dialogue. Then you can transfer the script into a tool that visualizes it. Google offers Dialogflow for building fully customized dialogues and, for the simplest and shortest scenarios that do not require broad language understanding, the Actions SDK. Another option is Aimylogic, a visual builder with NLU (see "How to create an action for Google Assistant in Aimylogic"), in which you can build a script without deep programming skills and test the action right away. I use Aimylogic to see how all the transitions in my dialogue will work, and to test and validate the hypothesis itself, the idea of what I want to build.

Programmable logic is often required. For example, your website may look great, but for it to actually do something, it has to call code on a server, and that code can calculate, save and return a result. The same goes for an action's script. The code should run reliably and, ideally, for free. Today there is no need to pay thousands of dollars to keep 50, 100 or 1,000 lines of code available to your action 24/7. I use several services for this: Google Cloud Functions, Heroku, Webtask.io and AWS Lambda. Google Cloud Platform's Free Tier covers a fairly wide range of services at no cost.

The script can call the code with the ordinary HTTP requests we are all used to. At the same time, the code and the dialogue script do not mix. That is a good thing: you can keep both components up to date and extend them as you like without complicating work on the action.
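To make the separation concrete, here is a minimal sketch of such a backend in Python using only the standard library. The JSON field names (`answer`, `expected`, `score`) and the quiz logic are hypothetical examples, not the request format of Aimylogic, Dialogflow or any hosting platform; each of those sends its own payload shape.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def handle_request(payload: dict) -> dict:
    """Hypothetical quiz logic kept out of the dialogue script: check an answer, update the score."""
    answer = str(payload.get("answer", "")).strip().lower()
    expected = str(payload.get("expected", "")).strip().lower()
    correct = bool(answer) and answer == expected
    score = int(payload.get("score", 0)) + (1 if correct else 0)
    return {"correct": correct, "score": score}


class WebhookHandler(BaseHTTPRequestHandler):
    """Accepts a JSON POST from the script and replies with JSON."""

    def do_POST(self) -> None:
        length = int(self.headers.get("Content-Length", 0))
        payload = json.loads(self.rfile.read(length) or b"{}")
        body = json.dumps(handle_request(payload)).encode("utf-8")
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def run(port: int = 8080) -> None:
    """Serve locally; on Cloud Functions, Heroku, etc. the platform's own entry point replaces this."""
    HTTPServer(("", port), WebhookHandler).serve_forever()
```

Keeping the logic in a plain function (`handle_request`) means it can be tested without a server and moved between hosting services while the script only needs to know the URL.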

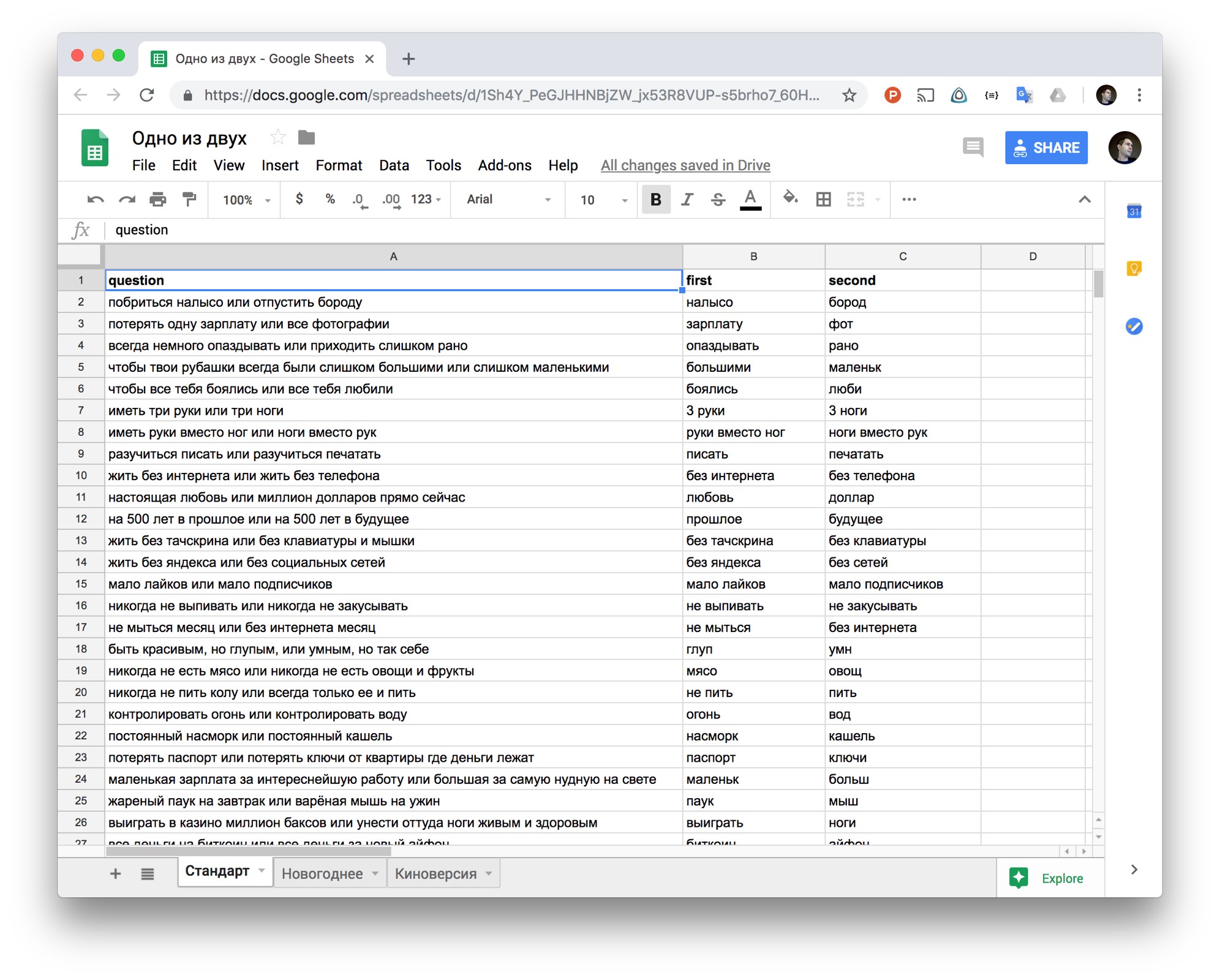

The third component is content: data that can change constantly without affecting the structure of the script itself, for example quiz questions or the episodes in our game "Yes, my Lord". If the content lived in the script or in the code, the script would become more and more cumbersome, and whatever tool you use to build the action, it would be inconvenient to work with. So I recommend storing content separately: in a database, in a file in cloud storage or in a spreadsheet, which the script can also access through an API to fetch data on the fly. By separating content from both the script and the code, you can bring other people into the project: they can add content independently of you. And developing content is very important, because users expect fresh and varied content from an action they return to again and again.

How do you use an ordinary cloud spreadsheet so that you don't have to store all the content in the script itself? For example, in the game "First or Second" we used a cloud Excel spreadsheet where any project participant could add new questions and answers for the action. The Aimylogic script reads this table with a single HTTP request through a special API. The script itself stays small, because it does not store the data from the table, which is updated every day. This way we separate the dialogue script from the content, which lets us work on the content independently and collectively keep the action supplied with fresh data. By the way, 50 thousand people have already played this game.
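A rough Python sketch of the same idea, under stated assumptions: the spreadsheet is published as CSV at some URL (`SHEET_CSV_URL` below is a placeholder) and has `question` and `answer` columns. This is not the Aimylogic API mentioned above; it only illustrates fetching content at request time instead of hard-coding it.

```python
from __future__ import annotations

import csv
import io
import random
import urllib.request

# Hypothetical placeholder: a cloud spreadsheet published as CSV.
SHEET_CSV_URL = "https://example.com/questions.csv"


def parse_questions(csv_text: str) -> list[dict[str, str]]:
    """Convert CSV rows with 'question' and 'answer' columns into dicts, skipping incomplete rows."""
    reader = csv.DictReader(io.StringIO(csv_text))
    return [
        {"question": row["question"].strip(), "answer": row["answer"].strip()}
        for row in reader
        if (row.get("question") or "").strip() and (row.get("answer") or "").strip()
    ]


def fetch_questions(url: str = SHEET_CSV_URL) -> list[dict[str, str]]:
    """Download the sheet on every call, so rows added by teammates appear without redeploying."""
    with urllib.request.urlopen(url, timeout=5) as resp:
        return parse_questions(resp.read().decode("utf-8"))


def pick_question(questions: list[dict[str, str]], rng: random.Random | None = None) -> dict[str, str]:
    """Choose one question at random; pass a seeded Random for reproducible tests."""
    return (rng or random).choice(questions)
```

Parsing is kept separate from downloading so the content format can be checked offline; in production you would probably add caching so every turn of the dialogue does not hit the sheet.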

Checklist: what to remember when creating a conversational interface

Any interface has components the user interacts with: lists, buttons, images and so on. A conversational interface follows the same laws, but the fundamental difference is that a person communicates with the program by voice. That is the starting point when creating your action.

The right action should not try to do everything. When people talk to a program, they cannot keep much information in their heads (remember listening to long-winded personal offers from a bank or a mobile operator over the phone). Discard everything superfluous and focus on a single function of your service: the most important one, and the one most conveniently performed by voice without touching the screen.

For example, suppose you run a ticket service. Don't expect customers to go through the whole familiar flow by voice: searching for a ticket by five or six criteria, choosing between carriers, comparing and paying. But an app that tells you the lowest price for a chosen route may well come in handy: it is a very quick operation, and it is convenient to do by voice, without opening the site and without repeating the form-filling routine (filling in fields and selecting filters) every time.

An action is about voice, not about the service as a whole. The user should not regret launching the action in the Assistant instead of, say, opening the app or the website. But how do you know that voice is truly needed? Start by trying the idea on yourself. If you can easily do the same thing without voice, there is no point. One of my first apps for the Assistant is Yoga for the Eyes, a virtual personal trainer that guides you through eye exercises. There is no doubt that voice is needed here: your eyes are busy with the exercises, and you are relaxed and focused on the spoken instructions. Peeking at a printed guide and breaking off from the workout would be inconvenient and ineffective.

Here, on the other hand, is an example of a poor scenario for a voice app. I often hear about yet another online store that wants to sell through a virtual assistant. But filling a shopping cart by voice is inconvenient and impractical, and the customer is unlikely to understand why they would need it. Repeating the last order by voice, or adding something to a shopping list on the go, is another matter.

Remember UX. The action must work in step with the user: accompany and guide them through the dialogue so that they easily understand what to say. If a person hits a dead end and starts thinking "And now what?", that is a failure. Don't count on users always turning to help. Monitor dead ends (for example, in the analytics in the Actions Console) and help users with leading questions or hints. In a voice action, predictability is not a vice. For example, in our game "Yes, my Lord", every phrase ends so that the player can answer either "yes" or "no". They don't have to invent anything on their own. That doesn't make the game simplistic; the rules are simply designed to be perfectly clear to the user.

"It speaks well!" The action "hears" well thanks to the Assistant and "speaks" well thanks to the script developer. A recent update gave Google Assistant new voices and more realistic pronunciation. That's great, but the developer still has to think through each phrase, its structure and its sound, so that the user understands everything the first time. Place stresses and use pauses to make the action's phrases sound human.

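Google Assistant accepts SSML, so pauses and stress can be marked up explicitly rather than left to the synthesizer. A minimal Python sketch; the helper name and the 400 ms default are arbitrary choices, while `<speak>`, `<break>` and `<emphasis>` are standard SSML elements.

```python
from __future__ import annotations

from xml.sax.saxutils import escape


def phrase_to_ssml(sentences: list[str], pause_ms: int = 400, emphasis_word: str | None = None) -> str:
    """Join sentences with explicit pauses and optionally stress one word."""
    chunks = []
    for sentence in sentences:
        text = escape(sentence)  # escape &, <, > so the SSML stays well-formed
        if emphasis_word:
            word = escape(emphasis_word)
            # Note: replaces every occurrence, including inside longer words.
            text = text.replace(word, f'<emphasis level="moderate">{word}</emphasis>')
        chunks.append(text)
    return "<speak>" + f'<break time="{pause_ms}ms"/>'.join(chunks) + "</speak>"
```

For example, `phrase_to_ssml(["Welcome back, my Lord.", "Shall we continue?"], 500, "Lord")` puts a half-second pause between the two sentences and stresses "Lord". Listening to the result in the simulator is still the only real test of whether it sounds natural.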
Don't overload the user. For actions that read out news feeds or tell fairy tales to children, this is not a problem. But listening to a voice assistant talk endlessly when you just want to order a pizza is tiring. Keep replies concise but not curt, and vary them (for example, prepare several versions of greetings, goodbyes and even the phrases used when the assistant misunderstands something). The dialogue should sound natural and friendly; to achieve this, you can add conversational elements, emotion and interjections to the phrases.
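Varied replies are easy to implement if each situation has a small pool of phrasings. A minimal Python sketch; the phrase bank is a hypothetical example, and the only rule added is that the same variant is never used twice in a row.

```python
import random

# Hypothetical phrase bank; in practice it could live with the rest of the content.
PHRASES = {
    "greeting": ["Hi! Ready to play?", "Welcome back! Shall we start?", "Hello again! Want a new round?"],
    "farewell": ["See you soon!", "Bye for now, come back anytime!", "Thanks for playing, goodbye!"],
    "misunderstood": ["Sorry, I didn't catch that.", "Hmm, could you say that another way?", "I missed that, please repeat."],
}


class PhrasePicker:
    """Picks a random variant per category, never repeating the previous one back to back."""

    def __init__(self, phrases, rng=None):
        self.phrases = phrases
        self.rng = rng or random.Random()
        self.last = {}  # category -> last phrase used

    def pick(self, category):
        options = self.phrases[category]
        # Exclude the last phrase; fall back to all options if only one exists.
        candidates = [p for p in options if p != self.last.get(category)] or options
        choice = self.rng.choice(candidates)
        self.last[category] = choice
        return choice
```

Avoiding immediate repeats matters more than true randomness here: users notice an identical reply twice in a row far more than an uneven distribution over a session.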

Users don't forgive nonsense. People often accuse voice assistants of being stupid, and this mostly happens when an assistant, or an app for it, cannot recognize different variations of the same phrase. Even if your action is as simple as setting an alarm, it is important that it understands synonyms and different word forms with the same meaning, and does not fail when the user responds unpredictably.
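In Dialogflow, entity synonyms cover much of this. If some matching happens in your own code, a minimal sketch could look like the one below; the synonym table is hypothetical, and it deliberately requires the whole (normalized) utterance to match, so that "I'm not sure" is not mistaken for "sure".

```python
from __future__ import annotations

import re

# Hypothetical synonym table mapping user wording to one canonical value.
SYNONYMS = {
    "yes": {"yes", "yeah", "yep", "sure", "of course", "okay", "ok"},
    "no": {"no", "nope", "nah", "no way", "not really"},
}


def normalize(utterance: str) -> str | None:
    """Map an utterance to a canonical answer, or None if it is not recognized."""
    text = re.sub(r"[^\w\s]", "", utterance.lower())  # drop punctuation
    text = re.sub(r"\s+", " ", text).strip()          # collapse whitespace
    for canonical, variants in SYNONYMS.items():
        if text in variants:
            return canonical
    return None
```

Returning `None` instead of guessing lets the caller route the turn to a fallback reply rather than acting on a misunderstanding.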

How do you handle situations where the action fails to understand? First, you can vary the responses in the Default Fallback Intent, adding your own custom responses to the standard ones. Second, you can train the Fallback Intent with all sorts of off-topic phrases unrelated to the game. This teaches the app not only to respond appropriately to irrelevant requests but also improves the accuracy with which it classifies other requests.
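One possible way to vary fallback replies from a webhook, offered as an assumption rather than a built-in Dialogflow feature, is to escalate: each consecutive miss gives more guidance, and the last one ends the conversation politely instead of looping. The reply texts and the three-step limit are hypothetical.

```python
from __future__ import annotations

# Hypothetical escalating fallback replies: each consecutive miss gives more guidance.
FALLBACKS = [
    "Sorry, I didn't get that.",
    "I still didn't catch it. You can say \"yes\" or \"no\".",
    "Let's try again later. Goodbye!",
]


def fallback_reply(consecutive_misses: int) -> tuple[str, bool]:
    """Return (reply, end_conversation) for the n-th consecutive misunderstanding (1-based)."""
    index = min(max(consecutive_misses, 1), len(FALLBACKS)) - 1
    end_conversation = index == len(FALLBACKS) - 1
    return FALLBACKS[index], end_conversation
```

The miss counter would be reset whenever an intent is matched successfully, so that a single misunderstanding later in the game starts again from the gentlest reply.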

And one more tip: never, ever turn your action into a button menu in an attempt to make the user's life easier. It is annoying, distracts from the dialogue and makes the user doubt the point of using voice.

Teach your action good manners. Even the coolest action has to end, ideally with a farewell that makes the user want to come back. Also remember that if the action does not ask a question but simply answers the user's request, it must "close the microphone" (otherwise the app will not pass review and will not be published). In Aimylogic, you just add the "End Script" block to the script.
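For actions built with Dialogflow rather than Aimylogic, closing the microphone is a flag in the webhook response. The sketch below assumes the Dialogflow (v2) fulfillment format with an Actions on Google payload, which was in use when this article was written; check the current documentation before relying on the exact field names.

```python
import json


def build_response(text: str, end_conversation: bool) -> dict:
    """Dialogflow v2 webhook response with an Actions on Google payload.

    expectUserResponse=False tells the Assistant to close the microphone
    after speaking, i.e. the conversation ends.
    """
    return {
        "fulfillmentText": text,
        "payload": {
            "google": {
                "expectUserResponse": not end_conversation,
                "richResponse": {
                    "items": [{"simpleResponse": {"textToSpeech": text}}]
                },
            }
        },
    }
```

A farewell such as `build_response("Goodbye, my Lord! Come back soon.", end_conversation=True)` both says goodbye and releases the microphone, which is exactly the combination reviewers look for.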

And if you are counting on retention, build other good manners into the script as well: the action should work in context, remember the user's name and gender, and not ask again about things that have already been clarified.
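A minimal Python sketch of "don't ask twice", assuming some per-user storage exists (the in-memory `ProfileStore` below is a hypothetical stand-in for a database or the platform's own user storage). The prompt only asks for what is still unknown and otherwise uses what the action already remembers.

```python
from __future__ import annotations

from dataclasses import dataclass, field


@dataclass
class UserProfile:
    name: str | None = None
    gender: str | None = None


@dataclass
class ProfileStore:
    """In-memory stand-in for persistent per-user storage (hypothetical; use a real DB in production)."""
    profiles: dict[str, UserProfile] = field(default_factory=dict)

    def get(self, user_id: str) -> UserProfile:
        return self.profiles.setdefault(user_id, UserProfile())


def next_prompt(store: ProfileStore, user_id: str) -> str:
    """Ask only for what is still unknown; otherwise greet the user in context."""
    profile = store.get(user_id)
    if profile.name is None:
        return "What should I call you?"
    address = "my Lady" if profile.gender == "female" else "my Lord"
    return f"Welcome back, {profile.name}! Shall we continue, {address}?"
```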

How to work with ratings and reviews

Google Assistant users can rate actions and thereby affect their ranking, so it is important to learn to make the rating system work for you. It might seem that you just need to give the user a link to your action's page and ask them to leave a review. But there are a few rules. For example, don't ask for a rating in the first message: the user must understand what they are rating. Wait until the app has actually done something useful or interesting for the user, and only then suggest leaving a review.

It is also better not to voice this request with speech synthesis: you will only waste the user's time. Moreover, instead of following the link, the user may just say "I give it five", which is not at all what you need here.

In the game "Yes, my Lord", we show the review link only after the user has finished another round. Even then we don't voice the request; we simply display the link on screen and offer to play again. Once again: offer the link when the user has definitely received some benefit or enjoyment. If you do it at the wrong time, when the action has misunderstood something or responded slowly, you may get negative feedback.
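The timing rule above can be written down as a small check. This Python sketch is a hypothetical illustration, not the game's actual code: the session fields, the response dictionary and `REVIEW_URL` are assumptions. The link is added to the on-screen part only, after a completed round with no misunderstandings, and at most once per session.

```python
from __future__ import annotations

from dataclasses import dataclass

REVIEW_URL = "https://example.com/your-action-page"  # hypothetical placeholder


@dataclass
class SessionState:
    rounds_completed: int = 0
    misunderstandings_this_round: int = 0
    review_link_shown: bool = False


def should_offer_review(state: SessionState) -> bool:
    """Offer the link only after a finished round that went smoothly, once per session."""
    return (
        state.rounds_completed >= 1
        and state.misunderstandings_this_round == 0
        and not state.review_link_shown
    )


def round_end_response(state: SessionState) -> dict:
    """Speech never mentions the review; the link is display-only."""
    speech = "Shall we play another round?"
    response = {"speech": speech, "display_text": speech}
    if should_offer_review(state):
        response["link"] = {"title": "Rate this game", "url": REVIEW_URL}
        state.review_link_shown = True
    return response
```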

Do try our own actions "Yoga for the Eyes", "First or Second" and "Yes, my Lord", created in Aimylogic (transactions are coming to Aimylogic soon, which will make it easier for my lord to preserve his power and wealth!). We also recently released the first voice quest for Google Assistant, "World of Lovecraft": an interactive drama in the mystical style of "Call of Cthulhu", where scenes are voiced by professional actors, the plot is controlled by voice, and you can also make in-game payments. This action was developed on the Just AI Conversational Platform, a professional enterprise solution.

Three secrets of Google Assistant

- Music. Among voice assistants that speak Russian, only Google Assistant lets you use music directly in an action's script. A musical soundtrack sounds great in game actions, and yoga set to music feels completely different.

- Payments inside the action. For purchases inside an action (in-app purchases), Google Assistant uses the Google Play platform. The terms for creators of game actions are the same as for mobile app developers: the developer receives 70% of each transaction.

- Moderation. To pass review, an action must have a personal data processing policy. You can publish it on sites.google.com; state the name of your action and an email address (the same one the developer uses in the developer console), and write that the app does not use user data. Review of an action without transactions takes 2-3 days, but review of an app with built-in payments can take 4-6 weeks. Read more about the review procedure.
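Music in an action is typically inserted through the SSML `<audio>` element, whose inner text is spoken only if the clip cannot be played. A minimal Python sketch; the helper name and the audio URL in the usage example are hypothetical placeholders, and the hosting requirements for audio files should be checked in the current Actions on Google documentation.

```python
from xml.sax.saxutils import escape, quoteattr


def intro_with_audio(audio_url: str, speech: str, fallback: str = "") -> str:
    """Play an audio clip, then speak; the text inside <audio> is read only if the clip fails."""
    return (
        "<speak>"
        f"<audio src={quoteattr(audio_url)}>{escape(fallback)}</audio>"
        f"{escape(speech)}"
        "</speak>"
    )
```

For example, `intro_with_audio("https://example.com/gong.ogg", "The court awaits, my Lord.", "Gong!")` plays a gong before the line, and says "Gong!" instead if the file is unavailable.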

More life hacks, case studies and instructive epic fails await developers at Conversations, the conference on conversational AI, to be held on June 27-28 in St. Petersburg. Andrey Lipattsev, Strategic Partner Development Manager at Google, will talk about international experience and the Russian specifics of Google Assistant. On Developers' Day, Tanya Lando, a lead linguist at Google, will discuss dialogue corpora, signals and methodologies with participants, and how to choose them for their tasks; developers themselves will share their experience creating voice apps for assistants, from a virtual secretary for Google Home to voice games and B2B actions that can work with a company's closed infrastructure.

And by the way, on June 28, as part of the conference, Google and Just AI will hold an open hackathon for professional and novice developers: you can work on actions for the Assistant, experiment with conversational UX, speech synthesis and NLU tools, and compete for cash prizes! Register now, as places are limited!

Source: https://habr.com/ru/post/455816/
