Automatically export Google Forms to Notion using IFTTT and Django

Good day to all! I think the article will be interesting to everyone who uses Notion, but for some reason could not move to it completely.

I am developing my project . On the landing page after entering the email, a link to a Google Forms based survey is issued. Answers are recorded in a tablet on Google Drive.

The problem is that Icarry everything I keep with myself in Notion. It is trite more convenient. Managed by manual copy-paste, while there were few reviews. Then there were more of them - and I had to think of something. Who cares what happened - welcome under cat.

Google Forms records responses only in a tablet - that is, there is no other recipe. Therefore, I had a plan: let's listen to the updates of the tablets through IFTTT, send new data to the webbook, somehow process them and upload them to Notion.

')

For those who are not familiar with IFTTT: this is a service that allows you to make chains of actions. Say, “the post has come to telegrams” - “we export it to VKontakte”.

The plan began to fail: Notion has no official API. But someone reversed it and made an unofficial API .

The final plan was:

The second problem appeared when it turned out that IFTTT broke integration with Google Sheets, and therefore the applet does not work.

Therefore, we had to change the plan: we extort csv'shka from Google Sheets, parse it on the server and throw everything new in Notion. IFTTT is used as a trigger for the whole process.

This part is probably the easiest. Open the table for viewing (so as not to have to mess with the cookies). Next, take and copy the link to export CSV. For this action, by lightly pressing the keyboard, type Ctrl + Shift + J (that is, open the developer console), go to the Network tab. Then click on File - Download - CSV. We see the request and copy the link.

Since we have Python library, we will write on Django.

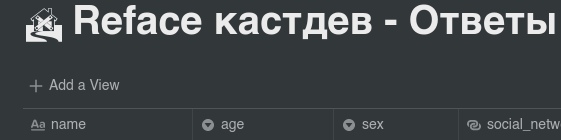

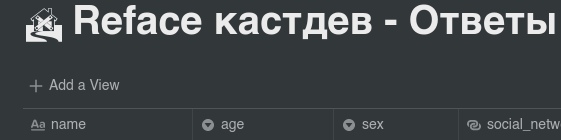

Now a little about the structure of my particular table. A table in Notion, unlike a table in Google Sheets, has a column “Reference”. This is a link to another table (in my case, a description of the features that users liked). The rest is generally clear: just columns with just data.

Go to Notion, with the usual Ctrl + Shift + J, open the console, go to Application - Cookies, copy token_v2 and call it TOKEN. Then we go to the necessary page with a tablet and copy the link to it. Call NOTION. If you also have a Relation, go to the page with Relation, copy the link and call, for example, NOTION_FUNCTION

Next, we write the following code (we import notion beforehand):

In it we connect NotionClient, say “Databases? Give two! “And we receive directly the data from these two plates (by default request, but it is possible also with sorting, in more detail - in documentation to library).

Then we have to do the following: request CSV from Google and parse it. We will do it pandas'om.

Then we have to go through all the data from this tablet and check whether they are added to Notion or not yet. For this we requested data from the plates.

We should also say about row.name, because an attentive reader will surely ask: what is that all about?

This is the name of the column in Notion (where the recording times are stored). I somehow did not manage to add Russian names, so I changed all the names to English and add them.

And now the code to check the data and add a line to the Notion table:

checkEmptiness is a function that checks whether a null piece has been passed to it. Notion somehow reluctantly worked when I fed him zero fields, so you should write.

We now turn to the analysis of Relation, because I have not seen it in the official documentation. To make a link to a line from another database, you need to take it (this line) and send it. Accordingly, if an array of references to rows from another table is implied, it is necessary to take an array of their IDs. I personally added Relation's by function name.

At the end, after creating the lines, we add an answer so that we know at the end that the request has arrived.

Tachemta with the most important server finished, go to IFTTT.

Go to the tab create applets . We select the trigger (in our case it is Date & time), set “every hour”. Select the triggered (that is, “that”) Webhook, specify our (for now) local address in order to test. Well, that's it. Test

You thought why we were busy with this trigger by the IFTTT - this is in order not to pay. Heroku offers a free rate for hosting our stuff. The main thing is that the service sleeps for at least 6 hours. And he will definitely sleep, because we call him to work every hour, not every minute.

Next, do the following. Go to heroku to create a new project . Next, install their client on your operating system. And then we do everything according to the instructions that appeared after creating the application.

Downloading everything to heroku, go to our applet and edit the URL to a new one.

Now every hour the list should be updated. Hypothetically, the IFTTT may give an error message that you have a long request, but this is not so important.

It turned out to be important. When the IFTTT catches constant errors, it starts skipping applets.

To solve this problem, simply launch a new thread for this whole thing, immediately giving an answer.

Another idea that I forgot to voice in the article is to check for nullity using the standard pandas method.

That is, your check will look something like this:

Prehistory

I am developing my project . On the landing page after entering the email, a link to a Google Forms based survey is issued. Answers are recorded in a tablet on Google Drive.

The problem is that I

Problem

Google Forms records responses only in a tablet - that is, there is no other recipe. Therefore, I had a plan: let's listen to the updates of the tablets through IFTTT, send new data to the webbook, somehow process them and upload them to Notion.

')

For those who are not familiar with IFTTT: this is a service that allows you to make chains of actions. Say, “the post has come to telegrams” - “we export it to VKontakte”.

The plan began to fail: Notion has no official API. But someone reversed it and made an unofficial API .

The final plan was:

- We make an applet in IFTTT: “A line has been added to the table - we send it to the server

- We make directly the server that receives the data and sends it to Notion

The second problem appeared when it turned out that IFTTT broke integration with Google Sheets, and therefore the applet does not work.

Therefore, we had to change the plan: we extort csv'shka from Google Sheets, parse it on the server and throw everything new in Notion. IFTTT is used as a trigger for the whole process.

Part 1. CSV with Google Sheets

This part is probably the easiest. Open the table for viewing (so as not to have to mess with the cookies). Next, take and copy the link to export CSV. For this action, by lightly pressing the keyboard, type Ctrl + Shift + J (that is, open the developer console), go to the Network tab. Then click on File - Download - CSV. We see the request and copy the link.

Part 2. We write the server

Since we have Python library, we will write on Django.

Now a little about the structure of my particular table. A table in Notion, unlike a table in Google Sheets, has a column “Reference”. This is a link to another table (in my case, a description of the features that users liked). The rest is generally clear: just columns with just data.

Go to Notion, with the usual Ctrl + Shift + J, open the console, go to Application - Cookies, copy token_v2 and call it TOKEN. Then we go to the necessary page with a tablet and copy the link to it. Call NOTION. If you also have a Relation, go to the page with Relation, copy the link and call, for example, NOTION_FUNCTION

Next, we write the following code (we import notion beforehand):

def index(request): if request.method == "POST": client = NotionClient(token_v2=TOKEN) database = client.get_collection_view(NOTION) current_rows = database.default_query().execute() database_functions = client.get_collection_view(NOTION_FUNCTIONS) current_rows_functions = database_functions.default_query().execute() In it we connect NotionClient, say “Databases? Give two! “And we receive directly the data from these two plates (by default request, but it is possible also with sorting, in more detail - in documentation to library).

Then we have to do the following: request CSV from Google and parse it. We will do it pandas'om.

result = requests.get(SHEET).content pandas_result = pd.read_csv(io.StringIO(result.decode('utf-8'))) timestamps = pandas_result[[" "]].values ages = pandas_result[[" "]].values sexes = pandas_result[[" "]].values cities = pandas_result[[" "]].values socials = pandas_result[[" ( )"]].values agreements = pandas_result[[" , - ."]].values control_usages = pandas_result[[" "]].values health_usages = pandas_result[[" "]].values prices = pandas_result[[" . :)"]].values mentions = pandas_result[[", , "]].values Then we have to go through all the data from this tablet and check whether they are added to Notion or not yet. For this we requested data from the plates.

def checkTimestamp(rows, timestamp): for i in range(0, len(rows)): row = rows[i] if row.name == timestamp: return True return False We should also say about row.name, because an attentive reader will surely ask: what is that all about?

This is the name of the column in Notion (where the recording times are stored). I somehow did not manage to add Russian names, so I changed all the names to English and add them.

And now the code to check the data and add a line to the Notion table:

for i in range(0, len(timestamps)): if not checkTimestamp(current_rows, timestamps[i]): row = database.collection.add_row() health_usage = health_usages[i][0] control_usage = control_usages[i][0] ticks = health_usage + "," + control_usage row.title = timestamps[i][0] row.age = ages[i][0] row.sex = sexes[i][0] row.social_network = checkEmptiness(socials[i][0]) row.can_we_write_you = checkEmptiness(agreements[i][0]) row.city = checkEmptiness(cities[i][0]) row.controlling_examples = checkEmptiness(control_usages[i][0]) row.health_examples = checkEmptiness(health_usages[i][0]) row.cost = checkEmptiness(prices[i][0]) row.noticements = checkEmptiness(mentions[i][0]) row.castdev_relation = findIds(current_rows_functions, ticks) checkEmptiness is a function that checks whether a null piece has been passed to it. Notion somehow reluctantly worked when I fed him zero fields, so you should write.

We now turn to the analysis of Relation, because I have not seen it in the official documentation. To make a link to a line from another database, you need to take it (this line) and send it. Accordingly, if an array of references to rows from another table is implied, it is necessary to take an array of their IDs. I personally added Relation's by function name.

def findIds(current_rows, titles): print("titles", titles) print("current rows", current_rows) array = [] for a in range(0, len(current_rows)): if current_rows[a].name in titles: array.append(current_rows[a].id) print("Ids", array) return array At the end, after creating the lines, we add an answer so that we know at the end that the request has arrived.

return HttpResponse("Hello, habr.") Tachemta with the most important server finished, go to IFTTT.

Part 3. IFTTT

Go to the tab create applets . We select the trigger (in our case it is Date & time), set “every hour”. Select the triggered (that is, “that”) Webhook, specify our (for now) local address in order to test. Well, that's it. Test

Part 4. Heroku

You thought why we were busy with this trigger by the IFTTT - this is in order not to pay. Heroku offers a free rate for hosting our stuff. The main thing is that the service sleeps for at least 6 hours. And he will definitely sleep, because we call him to work every hour, not every minute.

Next, do the following. Go to heroku to create a new project . Next, install their client on your operating system. And then we do everything according to the instructions that appeared after creating the application.

Downloading everything to heroku, go to our applet and edit the URL to a new one.

Now every hour the list should be updated. Hypothetically, the IFTTT may give an error message that you have a long request, but this is not so important.

Update

It turned out to be important. When the IFTTT catches constant errors, it starts skipping applets.

To solve this problem, simply launch a new thread for this whole thing, immediately giving an answer.

if request.method == "POST": thread = Thread(target=run_notion_import) thread.start() return HttpResponse("Hello, habr.") Another idea that I forgot to voice in the article is to check for nullity using the standard pandas method.

That is, your check will look something like this:

if not pd.isna(health_usages[i][0]): row.health_examples = health_usages[i][0] Source: https://habr.com/ru/post/455714/

All Articles