Has the machine learning bubble burst, or is this the start of a new dawn?

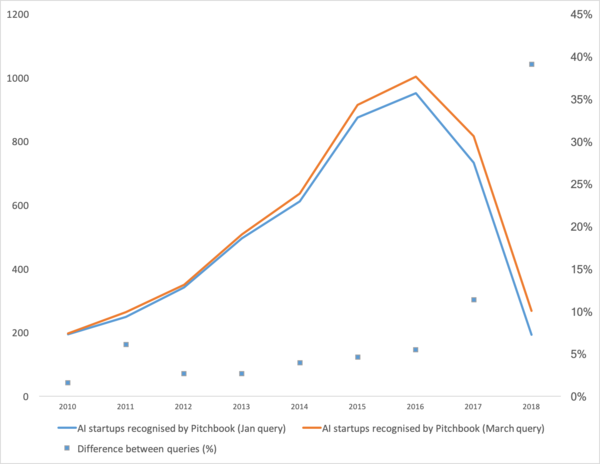

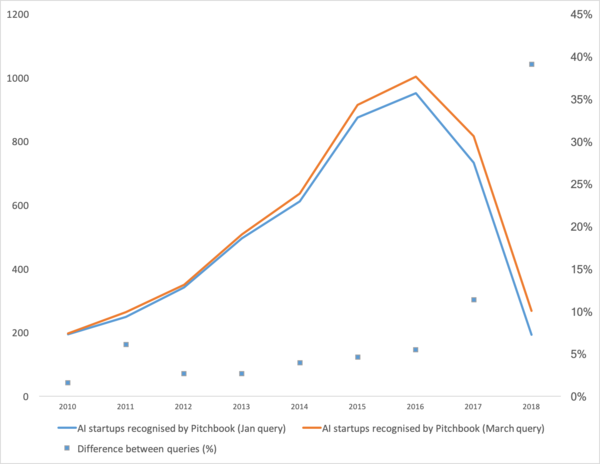

A recently published article shows the trend in machine learning over the past few years quite well. In short: the number of machine learning startups has plummeted in the last two years.

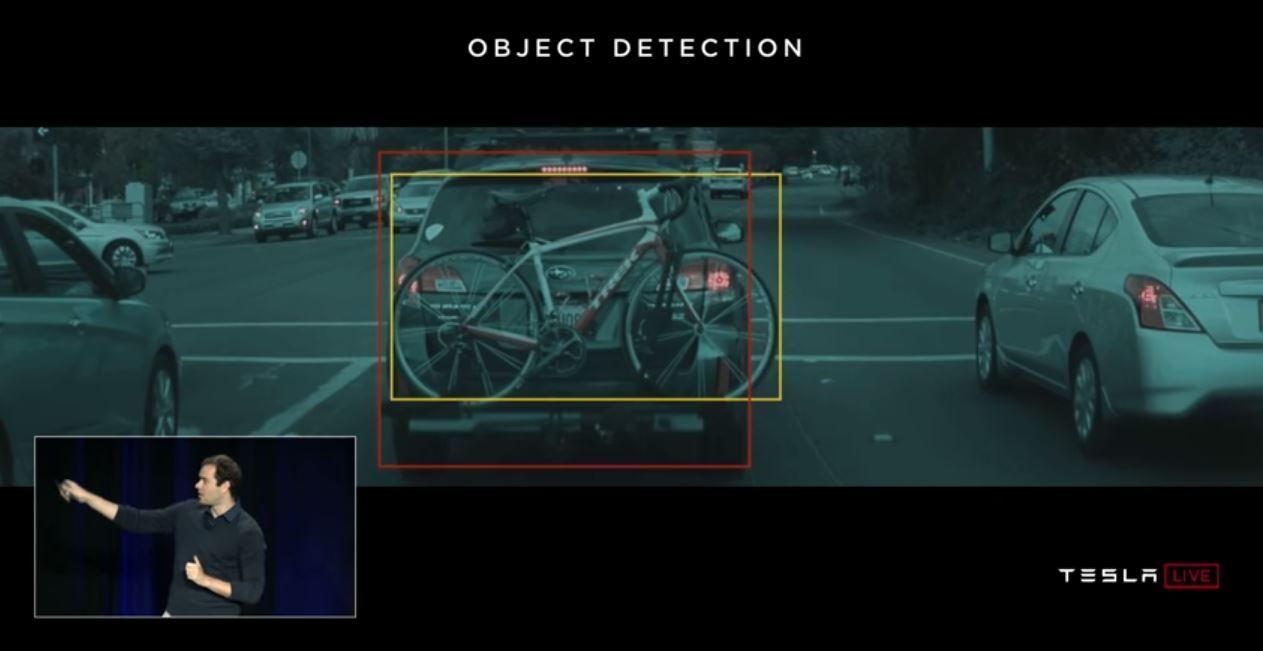

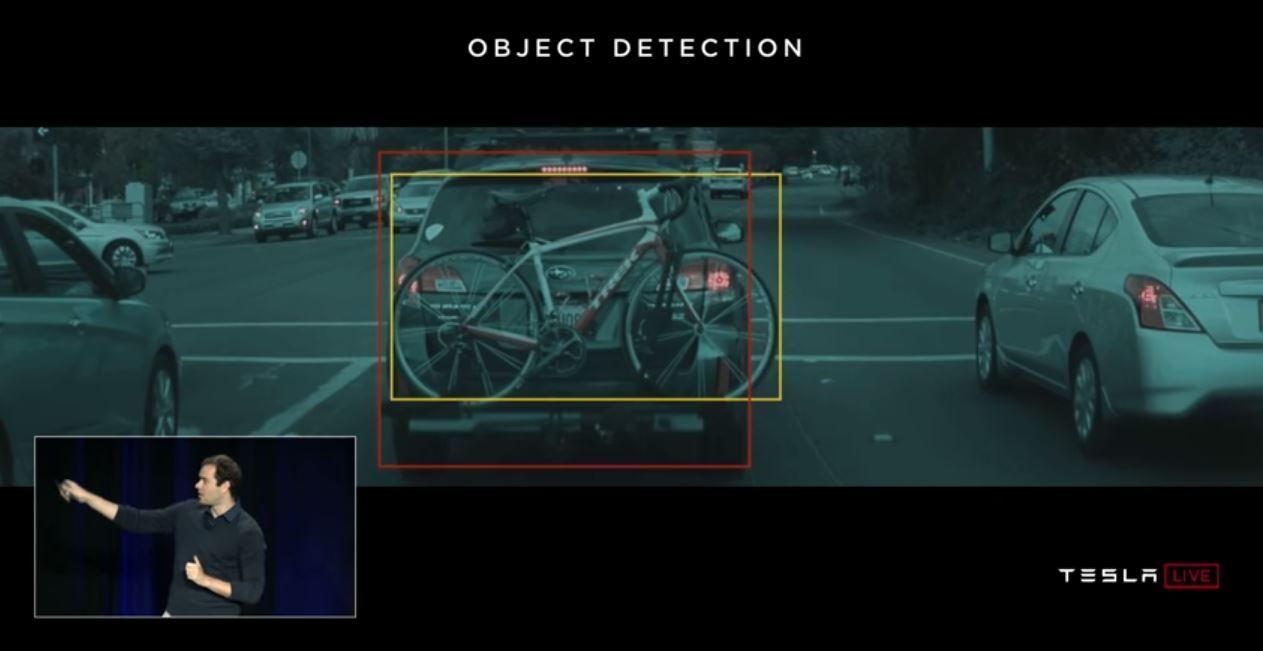

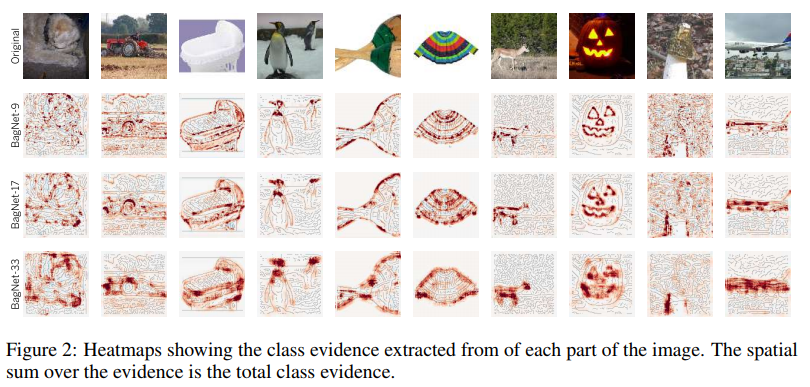

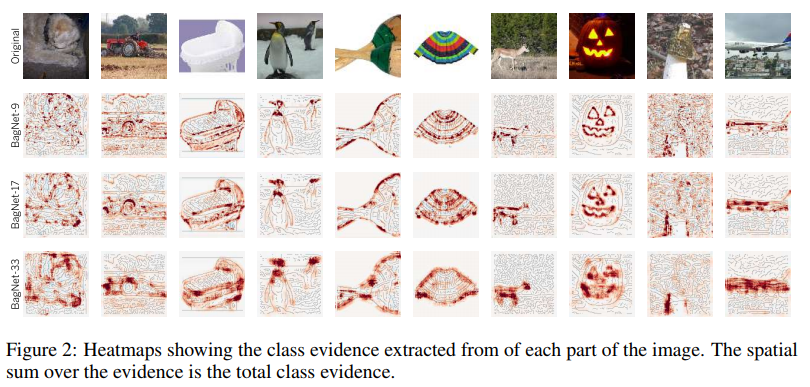

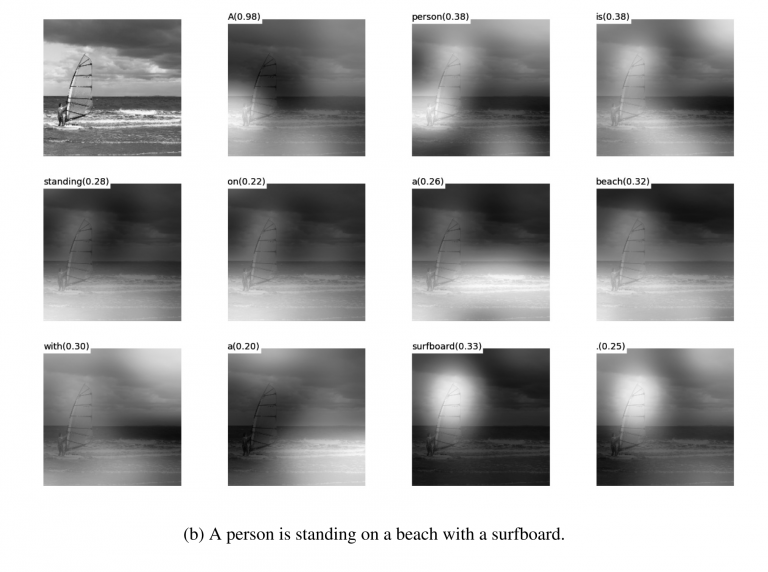

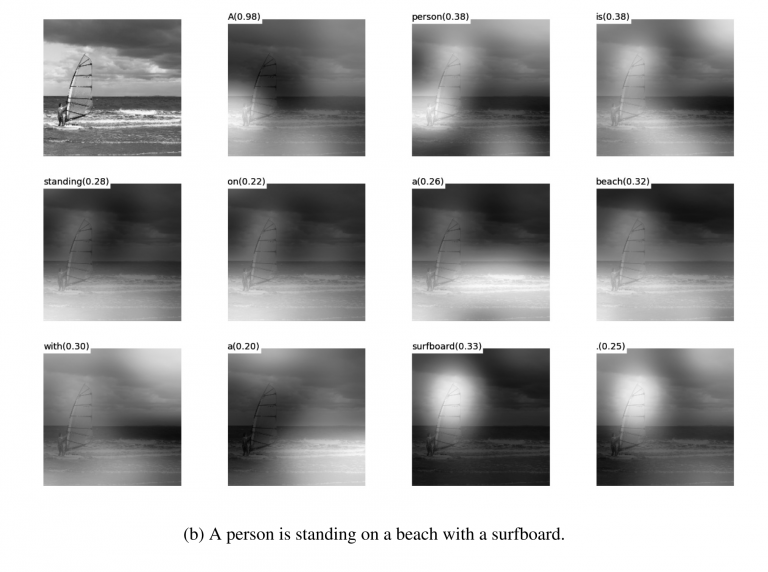

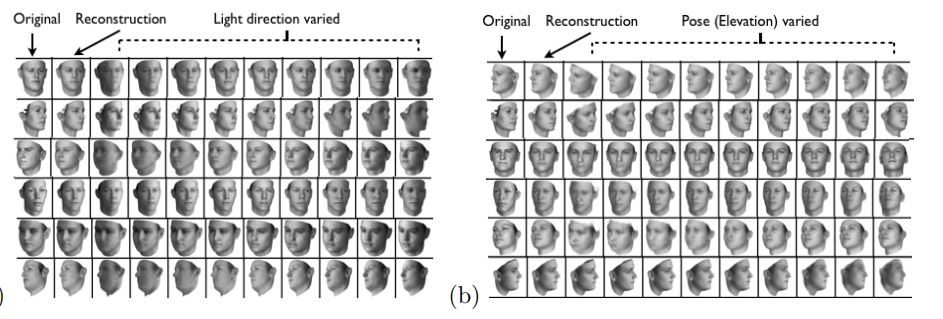

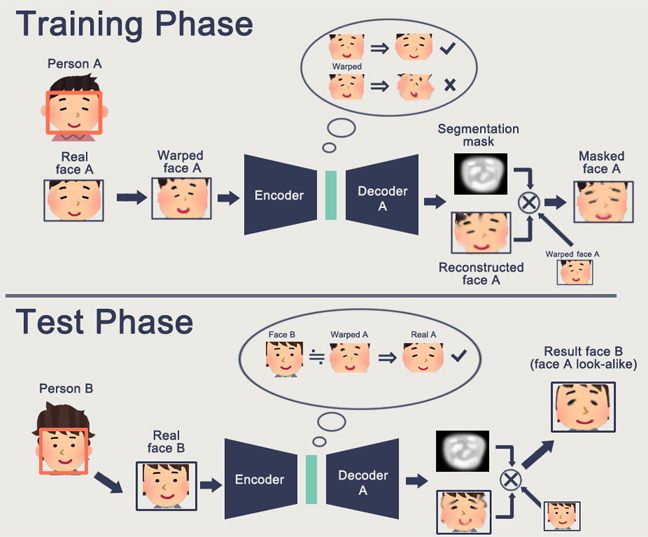

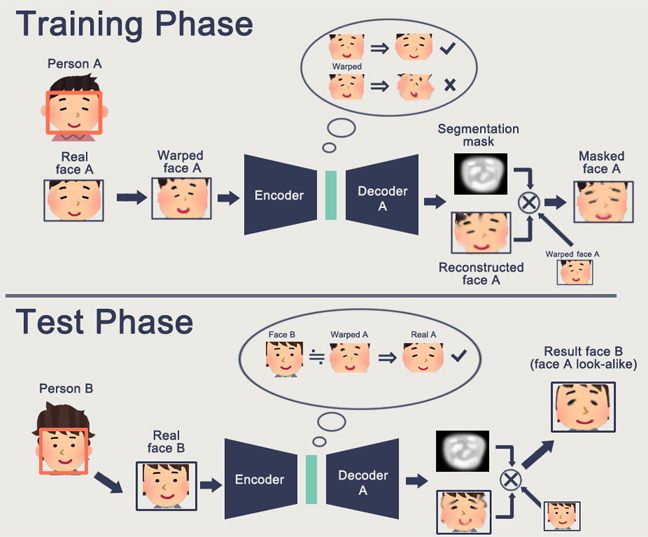

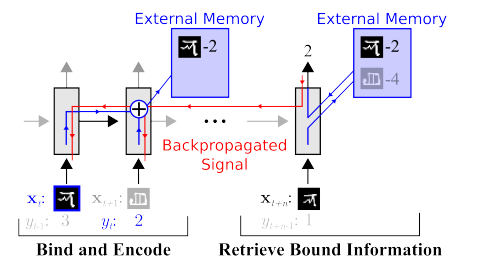

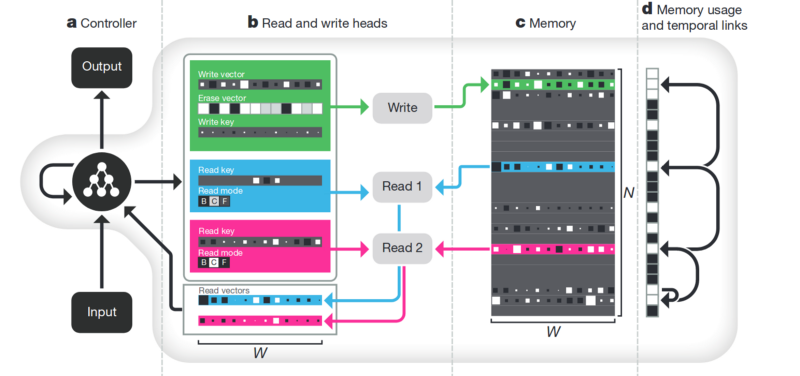

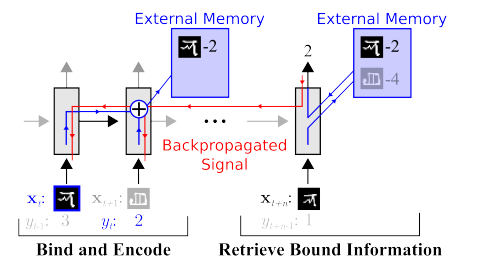

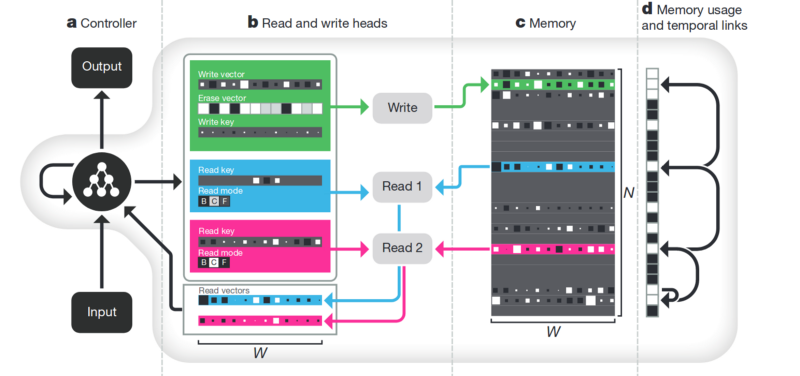

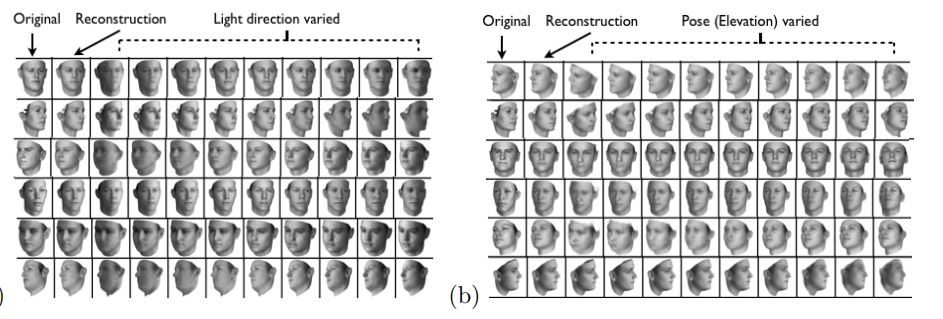

Well, let's work out whether the bubble has burst and how to go on living, and talk about where such a squiggle comes from in the first place.

First, what drove this curve upward? Where did it come from? Most people will probably recall the victory of machine learning at the 2012 ImageNet competition. Surely that was the first event of global significance! In reality it was not, and the curve starts to grow a little earlier. I would break it into several stages.

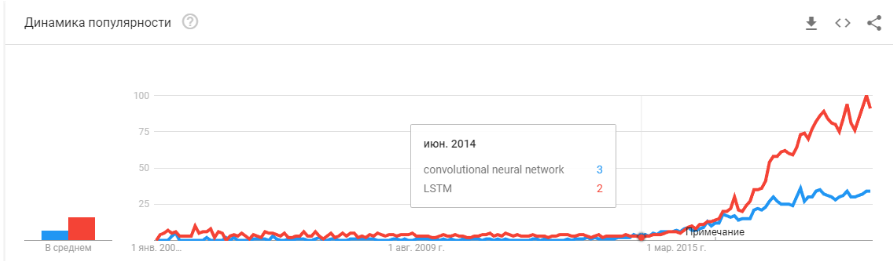

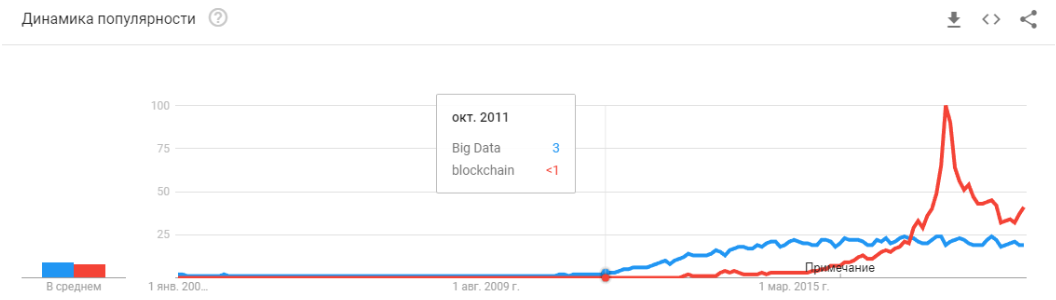

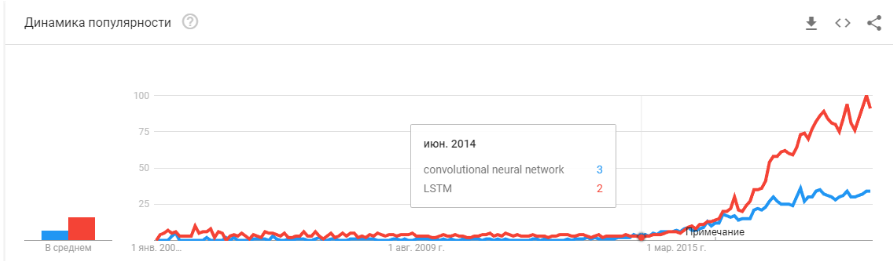

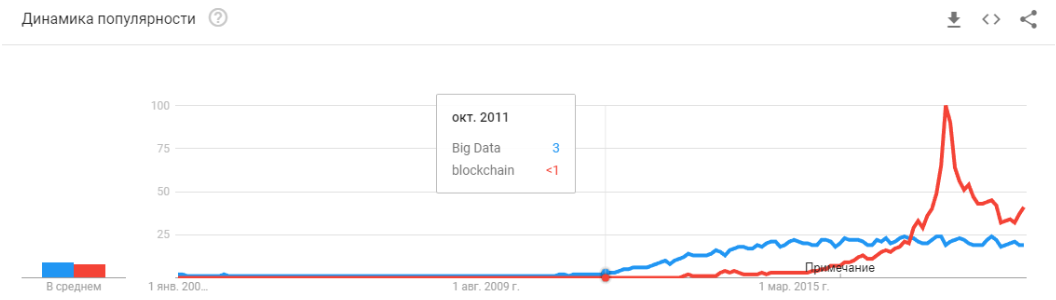

- 2008: the term “big data” emerges. Real products began to appear from 2010. Big data is directly tied to machine learning: without it, the algorithms that existed at the time could not work reliably. And those were not neural networks. Until 2012, neural networks were the province of a marginal minority. Instead, entirely different algorithms came into their own, ones that had existed for years or even decades: SVM (1963, 1993), Random Forest (1995), AdaBoost (1995), ... Startups of those years dealt primarily with the automatic processing of structured data: cash-register data, users, advertising, and much more.

  A by-product of this first wave is a family of frameworks such as XGBoost, CatBoost, LightGBM, etc.

- 2011-2012: convolutional neural networks win a series of image recognition contests. Their practical use came with some delay. I would say that meaningful startups and solutions began to appear en masse in 2014. It took two years to digest the fact that neural networks really do work, to build convenient frameworks that could be installed and run in reasonable time, and to develop methods that stabilize and speed up convergence.

  Convolutional networks made it possible to solve machine vision problems: classifying images and objects in an image, detecting objects, recognizing objects and people, enhancing images, and so on.

- 2015-2017: a boom in algorithms and projects built on recurrent networks and their analogues (LSTM, GRU, Transformer, etc.). Well-functioning speech-to-text algorithms and machine translation systems appeared. They rely partly on convolutional networks to extract basic features, and partly on the fact that people learned to collect really large, good datasets.

“Has the bubble burst? Is the hype overheated? Did it all die like blockchain?”

Oh, sure! Tomorrow Siri will stop working on your phone, and the day after that Tesla will no longer tell a turn from a kangaroo.

Neural networks already work. They run in dozens of devices. They really do let you make money and change the market and the world around you. Hype looks a little different:

Neural networks have simply stopped being something new. Yes, many people have inflated expectations. But a large number of companies have learned to apply neural networks and build products on them. Neural networks provide new functionality, cut jobs, and reduce the price of services:

- Manufacturing companies integrate algorithms that detect defects on the conveyor line.

- Livestock farms buy systems to monitor cows.

- Automated combine harvesters.

- Automated call centers.

- Filters in. (Well, at least something sensible!)

But the main point, and not the most obvious one: “There are no more new ideas, or they will not bring instant capital.” Neural networks have solved dozens of problems, and they will solve even more. Every obvious idea has already spawned many startups, and everything that lay on the surface has already been picked up. Over the past two years I have not come across a single new idea for applying neural networks. Not a single new approach (well, OK, there are a few things around GANs).

And every next startup is harder and harder. It no longer takes two guys training a network on open data. It takes programmers, a server, a team of annotators, complex support, and so on.

As a result, there are fewer startups but more production work. Need to bolt on license plate recognition? There are hundreds of specialists with relevant experience on the market. You can hire one, and in a couple of months your employee will build the system. Or buy a ready-made one. But start a new startup? Madness!

Need a visitor tracking system? Why pay for a bunch of licenses when you can build your own in 3-4 months and tailor it to your business?

Neural networks are now following the same path that dozens of other technologies have taken.

Remember how the notion of a “website developer” has changed since 1995? For now the market is not saturated with specialists; there are very few professionals. But I would bet that in 5-10 years there will be little difference between a Java programmer and a neural network developer. There will be plenty of both on the market.

There will simply be a class of problems that neural networks solve. A problem comes up, you hire a specialist.

“So what's next? Where is the promised artificial intelligence?”

And here there is a small but interesting catch :)

The technology stack that exists today will apparently not lead us to artificial intelligence. The ideas and their novelty have largely exhausted themselves. Let's talk about what is holding development at its current level.

Limitations

Let's start with self-driving cars. It seems clear that fully autonomous cars can be built with today's technology. How many years that will take is not clear. Tesla believes it will happen within a couple of years.

Many other specialists estimate 5-10 years.

Most likely, in my opinion, within 15 years urban infrastructure itself will change so that the arrival of autonomous cars becomes inevitable, a natural continuation of it. But that cannot be considered intelligence. A modern Tesla is a very complex pipeline for filtering data, searching it, and retraining. It is rules, rules, rules, data collection, and filters on top of them (I wrote a little more about this here, or see this timestamp).

First problem

And this is where we see the first fundamental problem: big data. It is exactly what gave rise to the current wave of neural networks and machine learning. Today, doing anything complex and automatic requires a lot of data. Not just a lot, but a great deal. We need automated algorithms for collecting, labeling, and using it. Want the car to see trucks against the sun? First collect enough examples of them. Want the car not to lose its mind over a bicycle bolted to a trunk? More samples.

And one example is not enough. Hundreds? Thousands?

Second problem

The second problem is visualizing what our neural network has understood. This is a very non-trivial task, and so far few people understand how to do it. The articles are very recent; here are just a few examples, some only loosely related:

Visualization of the fixation on textures. It shows well what a network tends to latch onto, and what it perceives as the starting information.

Attention visualization in translation. In practice, attention can often be used precisely to show what caused a given network response. I have seen this used both for debugging and in product solutions. There are many articles on the topic. But the more complex the data, the harder it is to understand how to get a robust visualization.

And yes, the good old “look at what the network has inside its filters.” These pictures were popular 3-4 years ago, but everyone quickly realized that they were pretty and not much use.

I have left out dozens of other gadgets, methods, hacks, and studies on how to display a network's insides. Do these tools work? Do they help you quickly understand what the problem is and debug the network? Squeeze out the last percent? Well, about as much as this:

You can look at any Kaggle competition and the write-ups of how people arrive at their final solutions. “We stacked 100-500-800 models and it worked!”

I am exaggerating, of course. But these approaches do not give quick, direct answers.
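To make the Kaggle remark concrete: in its simplest form, combining many models means averaging their predicted class probabilities and taking the most likely class. The sketch below is only an illustration under that assumption; the function names and the toy numbers are hypothetical, and real competition ensembles are usually more elaborate (weighted blends, stacking with a meta-model).

```python
def average_ensemble(per_model_probs):
    """Average class probabilities over several models.

    per_model_probs: one probability vector per model, all the same length.
    """
    n_models = len(per_model_probs)
    n_classes = len(per_model_probs[0])
    return [
        sum(probs[c] for probs in per_model_probs) / n_models
        for c in range(n_classes)
    ]


def predict(per_model_probs):
    """Return the index of the class with the highest averaged probability."""
    avg = average_ensemble(per_model_probs)
    return max(range(len(avg)), key=avg.__getitem__)


# Three hypothetical models scoring one sample over two classes.
probs = [[0.6, 0.4], [0.3, 0.7], [0.2, 0.8]]
print(average_ensemble(probs), predict(probs))
```

Note that nothing in the averaged output says why the ensemble decided as it did, which is exactly the complaint above.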

With enough experience, after trying various options, you can give a verdict on why your system made a particular decision. But correcting the system's behavior will be hard: add a workaround, shift a threshold, extend the dataset, swap in another backend network.

Third problem

The third fundamental problem is that networks learn statistics, not logic. Statistically, this is a face:

Logically, it does not look much like one. Neural networks do not learn anything complex unless they are forced to. They always learn the simplest features. Eyes, a nose, a head? Then it's a face! Otherwise, supply examples where eyes do not mean a face. And once again, that means millions of examples.

There's a lot of room at the bottom

I would say that these three global problems are what limit the development of neural networks and machine learning today. And wherever these problems are not a constraint, the technology is already in active use.

So is this the end? Have neural networks stalled?

Nobody knows. But of course everyone hopes not.

There are many approaches and directions for solving the fundamental problems I outlined above. But so far none of them has made it possible to do something fundamentally new, to solve something that had not been solved before. So far, all the fundamental projects are either built on stable approaches (Tesla) or remain test projects of institutes or corporations (Google Brain, OpenAI).

Roughly speaking, the main direction is creating some high-level representation of the input data. In a sense, a “memory”. The simplest example of such memory is the various embeddings, that is, representations of images. Take any face recognition system: the network learns to produce from a face a stable representation that does not depend on rotation, lighting, or resolution. In essence, the network minimizes a metric in which different faces are far apart and identical ones are close.
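One common way to express “same is close, different is far” is a contrastive loss over pairs of embeddings. The sketch below assumes that formulation; the text does not say which loss any particular face recognition system uses, and the `margin` value and the two-dimensional toy embeddings are purely illustrative.

```python
import math


def euclidean(a, b):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def contrastive_loss(emb_a, emb_b, same_person, margin=1.0):
    """Pairwise contrastive loss.

    Same identity: penalize any distance between the embeddings.
    Different identity: penalize only if they are closer than `margin`.
    """
    d = euclidean(emb_a, emb_b)
    if same_person:
        return d ** 2
    return max(0.0, margin - d) ** 2


# Hypothetical 2-D embeddings of three face crops.
anchor = [0.1, 0.9]
positive = [0.2, 0.8]   # same person, slightly different photo
negative = [0.9, 0.1]   # a different person

print(contrastive_loss(anchor, positive, same_person=True))
print(contrastive_loss(anchor, negative, same_person=False))
```

Training pushes the first value toward zero and keeps the second at zero by holding different identities at least `margin` apart.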

Such training needs tens or hundreds of thousands of examples. But the result carries some rudiments of one-shot learning: we no longer need hundreds of faces to remember a person. A single face is enough, and we will recognize them!
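Once a network produces stable embeddings, “remembering” a person from a single photo can be as simple as storing one vector and comparing new vectors against it. Here is a minimal sketch, assuming cosine similarity and a fixed acceptance threshold; the gallery contents, names, and the threshold of 0.8 are made up for illustration.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def identify(query, gallery, threshold=0.8):
    """Return the enrolled name closest to `query`, or None if nobody is close enough."""
    best_name, best_score = None, -1.0
    for name, embedding in gallery.items():
        score = cosine_similarity(query, embedding)
        if score > best_score:
            best_name, best_score = name, score
    return best_name if best_score >= threshold else None


# One enrolled embedding per person: the "one shot".
gallery = {"alice": [1.0, 0.0, 0.2], "bob": [0.0, 1.0, 0.1]}

print(identify([0.9, 0.1, 0.2], gallery))
print(identify([0.5, 0.5, 0.0], gallery))
```

The heavy lifting is all in the embedding network; the lookup itself needs no retraining when a new person is enrolled.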

Only there is a problem... A network can learn only fairly simple objects. When you try to distinguish not faces but, say, “people by their clothes” (the re-identification task), quality drops by many orders of magnitude, and the network can no longer learn fairly obvious changes of viewpoint.

And learning from millions of examples is not much fun either.

There is work on significantly reducing the training set. For example, one of the first papers on one-shot learning, from Google, immediately comes to mind:

There are many such papers, for example 1, 2, or 3.

There is one downside: training usually works well on simple, MNIST-like examples. Moving to complex tasks requires a large dataset, a model of the objects, or some kind of magic.

Overall, one-shot learning is a very interesting topic with plenty of ideas. But for the most part, the two problems I listed (pre-training on a huge dataset, and instability on complex data) seriously hamper training.

On the other hand, GANs (generative adversarial networks) also touch on the topic of embeddings. You have probably read a bunch of articles about them on Habr (1, 2, 3).

A distinctive feature of a GAN is that it forms some internal state space (essentially the same embedding) from which an image can be drawn. It may be a face, or it may be an action.
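For readers who have not looked inside a GAN: it trains two networks against each other, a generator that draws samples from that internal space and a discriminator that tries to tell generated samples from real ones. The sketch below shows only the two loss functions in their standard binary cross-entropy form (with the non-saturating generator loss); the networks themselves are omitted, and the probability values are invented inputs.

```python
import math


def discriminator_loss(d_real, d_fake, eps=1e-12):
    """Binary cross-entropy for the discriminator.

    d_real: probabilities of "real" that D assigned to real samples.
    d_fake: probabilities of "real" that D assigned to generated samples.
    """
    real_term = -sum(math.log(p + eps) for p in d_real) / len(d_real)
    fake_term = -sum(math.log(1.0 - p + eps) for p in d_fake) / len(d_fake)
    return real_term + fake_term


def generator_loss(d_fake, eps=1e-12):
    """Non-saturating generator loss: low when D believes the fakes are real."""
    return -sum(math.log(p + eps) for p in d_fake) / len(d_fake)


# A discriminator that separates real from fake well has a low loss...
print(discriminator_loss(d_real=[0.9, 0.8], d_fake=[0.1, 0.2]))
# ...and in that same situation the generator's loss is high.
print(generator_loss(d_fake=[0.1, 0.2]))
```

The two objectives pull in opposite directions, which is what makes training a balancing act.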

The problem with GANs: the more complex the generated object, the harder it is to describe in “generator-discriminator” logic. As a result, the only widely known real application of GANs is DeepFake, which, again, manipulates representations of faces (for which a huge dataset exists).

I have seen very few other useful applications. Usually it is some kind of bells and whistles involving drawing pictures.

And once again, nobody understands how this will let us move toward a bright future. Representing logic or space inside a neural network is good. But we need a huge number of examples, we do not understand how a network represents this internally, and we do not understand how to make a network memorize a really complex representation.

Reinforcement learning is an approach from a completely different direction. You surely remember how Google beat everyone at Go, and the recent victories in StarCraft and Dota. But here, too, things are far from rosy. The best account of RL and its difficulties is this article.

To summarize what the author wrote:

- Off-the-shelf models in most cases do not fit or work poorly.

- Practical tasks are easier to solve by other means. Boston Dynamics does not use RL because of its complexity, unpredictability, and computational cost.

- For RL to work, you need a complex function, and it is often hard to design and write.

- Models are hard to train. You have to spend a lot of time getting them going and out of local optima.

- As a consequence, models are hard to reproduce and are unstable under the slightest changes.

- Models often overfit to spurious patterns, right down to the random number generator.
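The function that most often has to be written by hand in RL is the reward. As a rough illustration of why that is hard, here is a sketch of a shaped reward for a hypothetical walking robot. Every term and every coefficient is an assumption invented for this example, not taken from the article being summarized.

```python
def shaped_reward(forward_velocity, torso_tilt, energy_used, fell_over,
                  tilt_penalty=0.5, energy_penalty=0.01, fall_penalty=100.0):
    """Hand-designed reward for one simulation step of a hypothetical walker.

    Each coefficient is a guess that has to be tuned by trial and error;
    a poor choice can teach the agent to exploit the reward instead of walking.
    """
    reward = forward_velocity                   # encourage moving forward
    reward -= tilt_penalty * abs(torso_tilt)    # discourage leaning
    reward -= energy_penalty * energy_used      # discourage wasted effort
    if fell_over:
        reward -= fall_penalty                  # strongly discourage falling
    return reward


print(shaped_reward(forward_velocity=2.0, torso_tilt=0.2,
                    energy_used=10.0, fell_over=False))
```

Even this toy version has three free coefficients, and the behavior an agent learns can change noticeably when any of them is adjusted.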

The key point is that RL is not yet in production. Google has some experiments (1, 2), but I have not seen a single production system.

Memory. The drawback of everything described above is its lack of structure. One approach to tidying all this up is to give the neural network access to a separate memory, so that it can write and overwrite the results of its steps there. The network's behavior can then be determined by the current state of the memory. This is very similar to classic processors and computers.
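A toy version of such a memory can be sketched as a table of slots that is read by similarity to a query key, so that the read is a soft, weighted mix of slots. This is a simplification under stated assumptions: the class name, the `sharpness` parameter, and the hard write by slot index are invented here, whereas published memory-augmented networks learn both reads and writes as differentiable operations.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def cosine(a, b):
    """Cosine similarity; an all-zero vector is treated as having norm 1."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a)) or 1.0
    norm_b = math.sqrt(sum(y * y for y in b)) or 1.0
    return dot / (norm_a * norm_b)


class ExternalMemory:
    """A fixed number of slots, read by content similarity."""

    def __init__(self, n_slots, width):
        self.slots = [[0.0] * width for _ in range(n_slots)]

    def write(self, slot_index, vector):
        # Simplification: overwrite one slot outright.
        self.slots[slot_index] = list(vector)

    def address(self, key, sharpness=10.0):
        # Higher sharpness concentrates the weights on the best-matching slot.
        return softmax([sharpness * cosine(key, slot) for slot in self.slots])

    def read(self, key):
        weights = self.address(key)
        width = len(self.slots[0])
        return [
            sum(w * slot[j] for w, slot in zip(weights, self.slots))
            for j in range(width)
        ]


memory = ExternalMemory(n_slots=3, width=2)
memory.write(0, [1.0, 0.0])
memory.write(1, [0.0, 1.0])
print(memory.read([1.0, 0.0]))
```

Reading with a key close to slot 0 returns a vector dominated by slot 0, with small contributions from the others.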

The most famous and popular article is from DeepMind:

It seems that here it is, the key to understanding intelligence? Probably not. The system still needs a huge amount of training data, and it works mostly with structured tabular data. Meanwhile, when Facebook solved a similar problem, it took the path of “forget the memory, just make the network more complex, add more examples, and it will learn on its own.”

Disentanglement. Another way to create a meaningful memory is to take the same embeddings but introduce additional criteria during training that make it possible to isolate “meanings” in them. For example, say we want to train a neural network to distinguish human behavior in a store. On the standard path we would have to build a dozen networks. One finds the person, a second determines what they are doing, a third their age, a fourth their gender. Separate logic looks at the part of the store where they are doing it (or is trained for that). Yet another determines their trajectory, and so on.

Or, given infinitely much data, one could train a single network for all possible outcomes (obviously, such a dataset cannot be collected).

The disentanglement approach says: let's train the network so that it can distinguish the concepts by itself. It would build an embedding from the video in which one region defines the action, another the position on the floor over time, another the person's height, and another their gender. At the same time, during training we would like to give the network almost no hints about these key concepts, so that it isolates and groups the regions on its own. There are quite a few such articles (some of them are 1, 2, 3), and on the whole they are fairly theoretical.

But this direction should, at least in theory, address the problems listed at the beginning.
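The goal described here can be pictured as an embedding whose coordinates are grouped into named regions, so that one factor can be read or swapped without touching the others. The sketch below only illustrates that target layout; the region names and sizes are hypothetical, and it says nothing about how a network would be trained to arrive at such a layout, which is the hard part.

```python
# Hypothetical layout of an 8-dimensional disentangled embedding.
FACTOR_SLICES = {
    "action": slice(0, 4),
    "floor_position": slice(4, 6),
    "height": slice(6, 7),
    "gender": slice(7, 8),
}


def split_factors(embedding):
    """Cut an embedding into its named regions."""
    return {name: embedding[s] for name, s in FACTOR_SLICES.items()}


def swap_factor(target, donor, name):
    """Copy one named region from `donor` into a copy of `target`."""
    region = FACTOR_SLICES[name]
    result = list(target)
    result[region] = donor[region]
    return result


person_a = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0]
person_b = [10.0, 11.0, 12.0, 13.0, 14.0, 15.0, 16.0, 17.0]

print(split_factors(person_a)["floor_position"])
print(swap_factor(person_a, person_b, "height"))
```

In a well-disentangled model, decoding the swapped vector would change only the person's height and leave the action and position as they were.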

Image decomposition by the parameters “wall color / floor color / object shape / object color / etc.”

Decomposition of a face by the parameters “size, eyebrows, orientation, skin color, etc.”

Other

There are many other, less global directions that let you somehow shrink the dataset, work with more heterogeneous data, and so on.

Attention. It probably does not make sense to single this out as a separate method; it is simply an approach that strengthens others. Many articles are devoted to it (1, 2, 3). The idea of attention is to strengthen the network's response to significant objects during training, often through some external target designation or a small external network.
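One widely used concrete form of this idea is scaled dot-product attention: each input gets a relevance score against a query, the scores become weights through a softmax, and the output is the weighted mix of the inputs. The sketch below assumes that particular form, which is only one of several attention variants, and uses made-up vectors.

```python
import math


def softmax(scores):
    """Numerically stable softmax."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attend(query, keys, values):
    """Scaled dot-product attention for a single query.

    Returns the attention weights and the weighted sum of `values`.
    """
    scale = math.sqrt(len(query))
    scores = [sum(q * k for q, k in zip(query, key)) / scale for key in keys]
    weights = softmax(scores)
    dim = len(values[0])
    context = [sum(w * v[j] for w, v in zip(weights, values)) for j in range(dim)]
    return weights, context


# Two candidate regions; the query matches the first one.
weights, context = attend(
    query=[1.0, 0.0],
    keys=[[5.0, 0.0], [0.0, 5.0]],
    values=[[1.0], [2.0]],
)
print(weights, context)
```

The weights themselves are what attention visualizations display: they show which inputs the network leaned on for a given output.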

3D simulation. A good 3D engine can often cover 90% of the training data (I have even seen a case where a good engine covered almost 99% of it). There are many ideas and hacks for making a network trained on a 3D engine work on real data (fine-tuning, style transfer, etc.). But building a good engine is often several orders of magnitude harder than collecting the data. Examples where engines were built:

Training robots (google, braingarden)

Training to recognize products in a store (although in the two projects we did, we managed fine without it).

Training at Tesla (again, the video mentioned above).

Conclusions

The whole article is, in a sense, one set of conclusions. Probably the main message I wanted to get across is “the free ride is over; neural networks no longer give simple solutions.” Now you either have to work hard building complex solutions, or work hard doing complex scientific research.

In general, the topic is debatable. Perhaps readers have more interesting examples?

Source: https://habr.com/ru/post/455676/
