Understanding Machine Learning in the Elastic Stack (aka Elasticsearch, aka ELK)

Recall that the Elastic Stack is based on the non-relational Elasticsearch database, the Kibana web interface and data collectors (the most well-known Logstash, various Beats, APM, and others). One of the nice additions to the entire listed product stack is data analysis using machine learning algorithms. In the article we understand what these algorithms are. We ask under the cat.

Machine learning is a paid shareware Elastic Stack feature and is included in the X-Pack. To start using it, it is enough to activate a 30-day trials after installation. After the expiration of the trial period, you can request support for its renewal or buy a subscription. The subscription price is calculated not on the amount of data, but on the number of nodes used. No, the amount of data affects, of course, the number of nodes required, but still this approach to licensing is more humane in relation to the company's budget. If there is no need for high performance - you can save.

ML in the Elastic Stack is written in C ++ and works outside the JVM, in which Elasticsearch itself is spinning. That is, the process (which, by the way, is called autodetect) consumes everything that it does not swallow the JVM. On a demo stand, this is not so critical, but in a productive environment it is important to single out separate nodes for ML tasks.

')

Machine learning algorithms are divided into two categories - with a teacher and without a teacher . In the Elastic Stack algorithm from the "no teacher" category. At this link you can see the mathematical apparatus of machine learning algorithms.

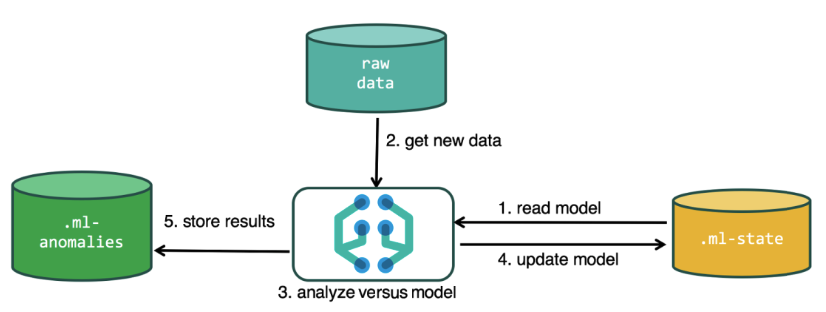

For analysis, the machine learning algorithm uses data stored in Elasticsearch indexes. You can create tasks for analysis both from the Kibana interface and through the API. If you do this through Kibana, then some things are not necessary to know. For example, additional indexes that the algorithm uses in the process.

Additional indexes used in the analysis process

.ml-state - information about statistical models (analysis settings);

.ml-anomalies- * - the results of the ML algorithms;

.ml-notifications - settings for alerts based on the analysis results.

.ml-anomalies- * - the results of the ML algorithms;

.ml-notifications - settings for alerts based on the analysis results.

The data structure in the Elasticsearch database consists of indexes and the documents stored in them. If you compare with a relational database, then the index can be compared with the database schema, and the document with the record in the table. This comparison is conditional and is given to simplify the understanding of further material for those who have only heard about Elasticsearch.

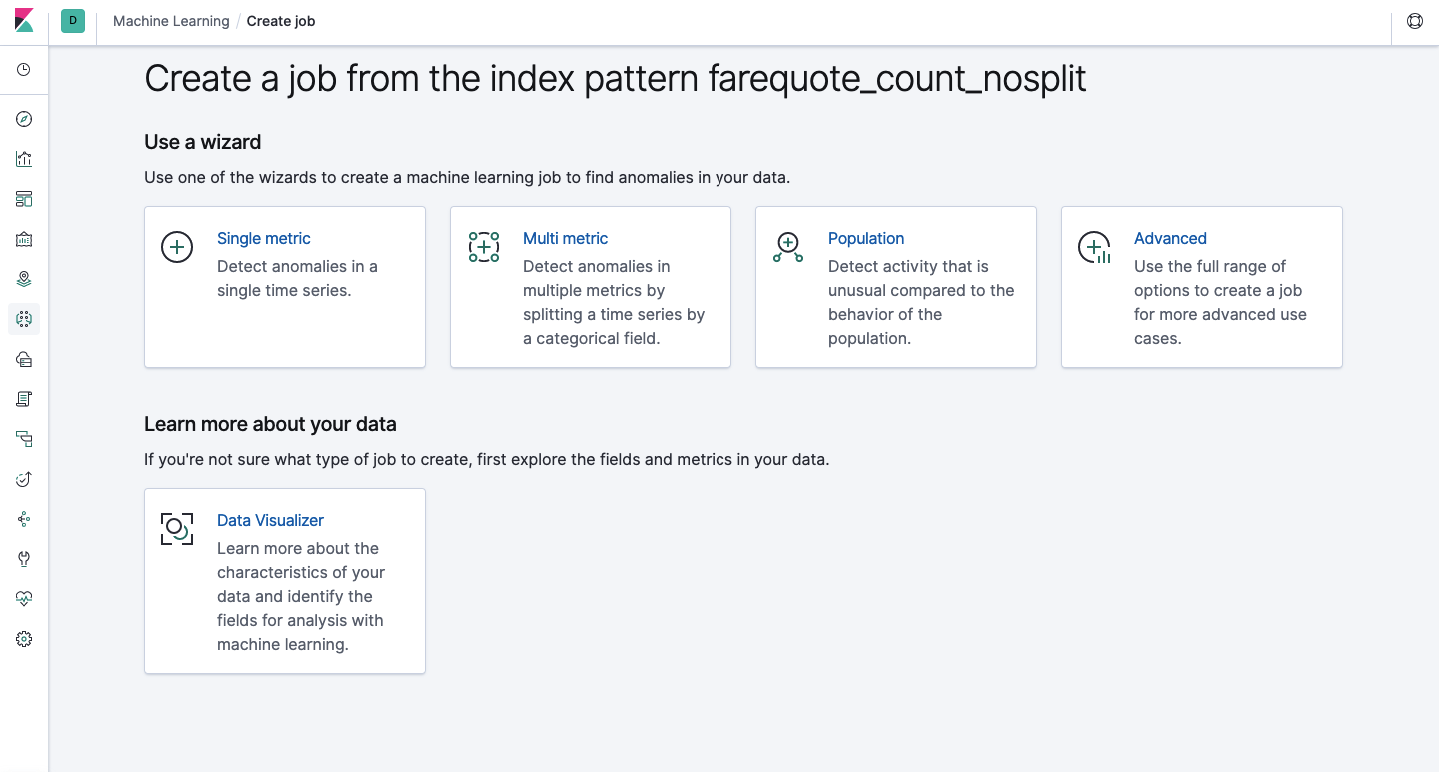

Through the API, the same functionality is available as through the web interface, so for clarity and understanding of the concepts we will show how to configure through Kibana. The menu on the left has a Machine Learning section, in which you can create a new job (Job). In the Kibana interface, it looks like the image below. Now we will analyze each type of task and show the types of analysis that can be constructed here.

Single Metric - analysis of one metric, Multi Metric - analysis of two or more metrics. In both cases, each metric is analyzed in an isolated environment, i.e. the algorithm does not take into account the behavior of concurrently analyzed metrics as it might seem in the case of Multi Metric. To carry out the calculation taking into account the correlation of various metrics, you can apply Population-analysis. And Advanced is a fine-tuning of algorithms with additional options for certain tasks.

Single metric

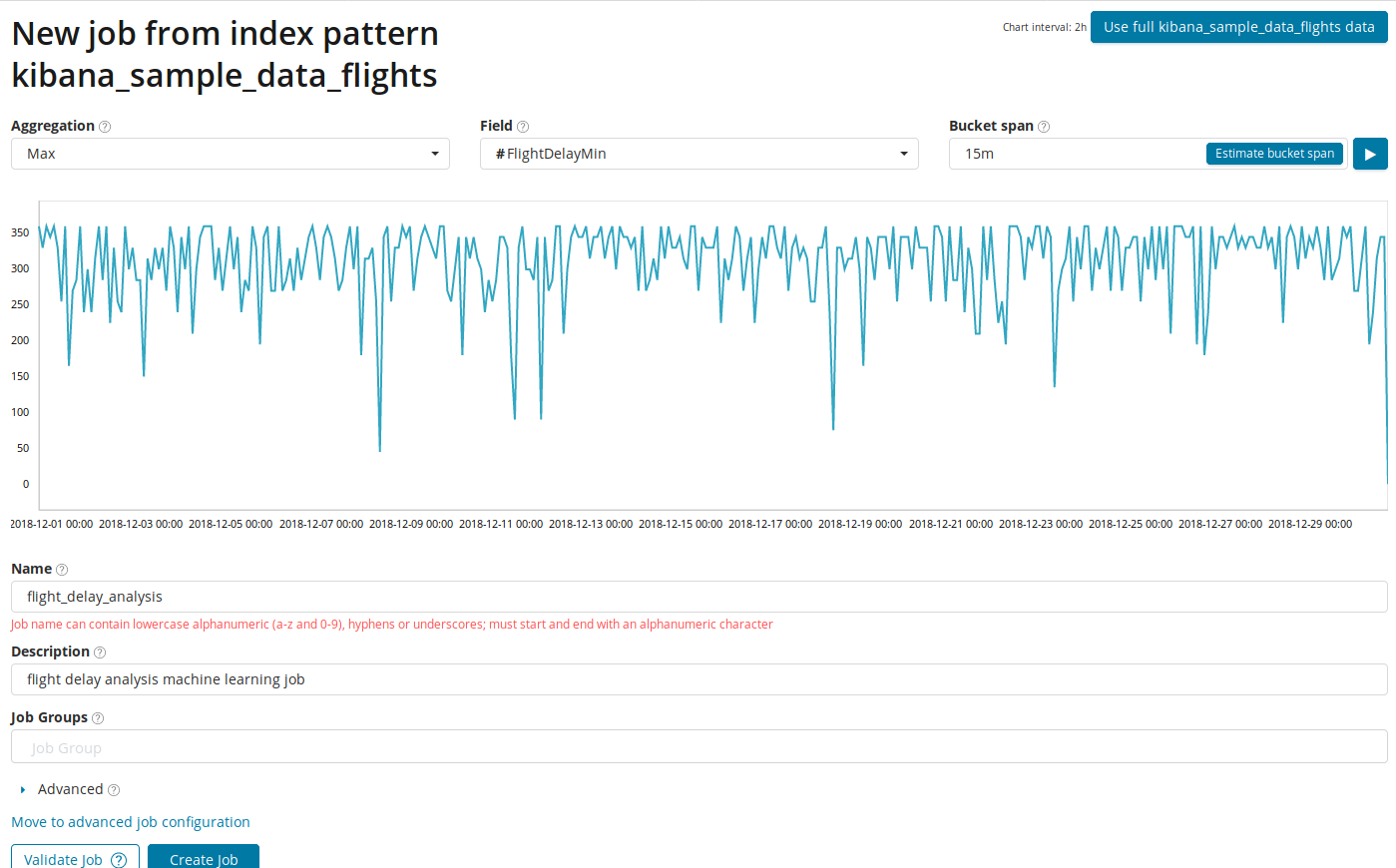

Analysis of changes to a single metric is the simplest thing you can do here. After clicking on Create Job, the algorithm will look for anomalies.

In the Aggregation field, you can choose the approach to search for anomalies. For example, at Min, values below typical will be considered abnormal. There are Max, Hign Mean, Low, Mean, Distinct and others. Description of all functions can be viewed at the link .

In the Field field indicates the numeric field in the document on which we will conduct the analysis.

In the Bucket span field - the granularity of the intervals on the timeline, which will be analyzed. You can trust the automation or choose manually. The image below shows an example of too low granularity - you can skip the anomaly. With this setting, you can change the sensitivity of the algorithm to anomalies.

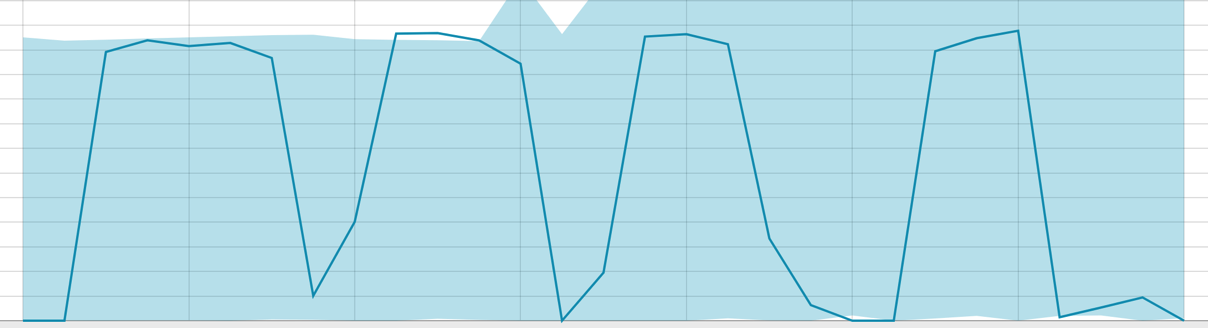

The duration of the data collected is the key thing that affects the efficiency of the analysis. In the analysis, the algorithm determines the repeated intervals, calculates the confidence interval (baselines) and identifies anomalies - atypical deviations from the usual behavior of the metric. Just for example:

Baselines with a small segment of data:

When the algorithm is on what to learn - the baseline looks like this:

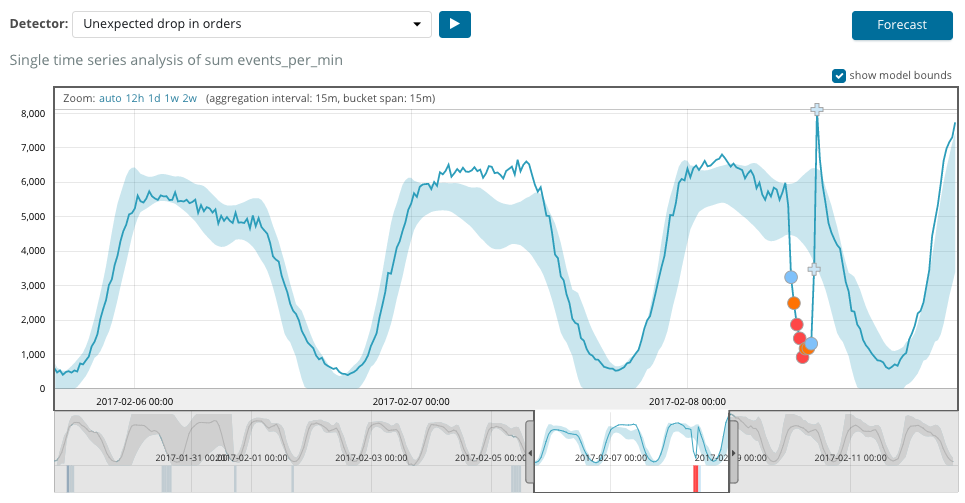

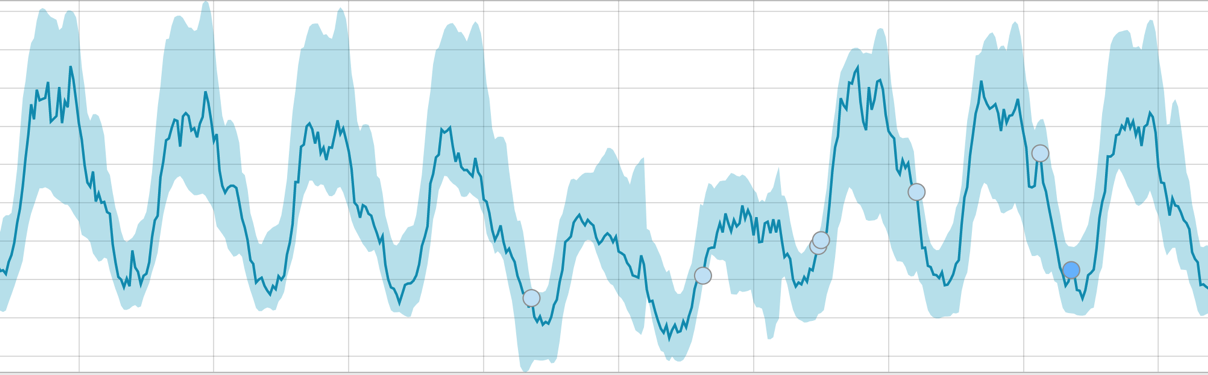

After starting the task, the algorithm determines the abnormal deviations from the norm and ranks them according to the probability of the anomaly (the color of the corresponding label is indicated in brackets):

Warning (blue): less than 25

Minor (yellow): 25-50

Major (orange): 50-75

Critical (red): 75-100

On the graph below an example with the anomalies found.

Here you can see the number 94, which indicates the probability of an anomaly. It is clear that if the value is close to 100, then we have an anomaly. The column below the graph indicates a disproportionately low probability of 0.000063634% of the appearance of the metric value there.

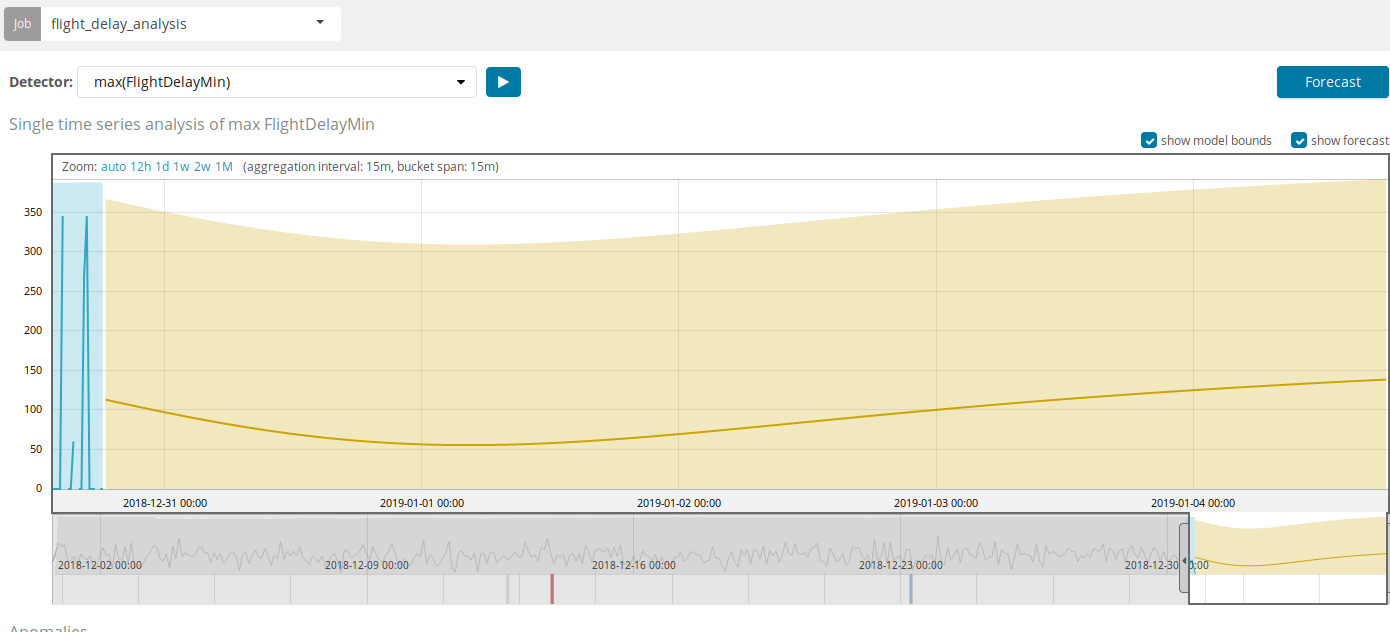

In addition to searching for anomalies in Kibana, you can run a prediction. This is done elementary and from the same presentation with anomalies - the Forecast button in the upper right corner.

The forecast is built a maximum of 8 weeks ahead. Even if you really want - you can no longer by design.

In some situations, the forecast will be very useful, for example, when the user load on the infrastructure is monitored.

Multi metric

Moving on to the next ML opportunity in Elastic Stack — analyzing several metrics with one bundle. But this does not mean that the dependence of one metric on another will be analyzed. This is the same as Single Metric with only multiple metrics on one screen for easy comparison of the effects of one on the other. About analyzing the dependence of one metric on another we will tell in the part of Population.

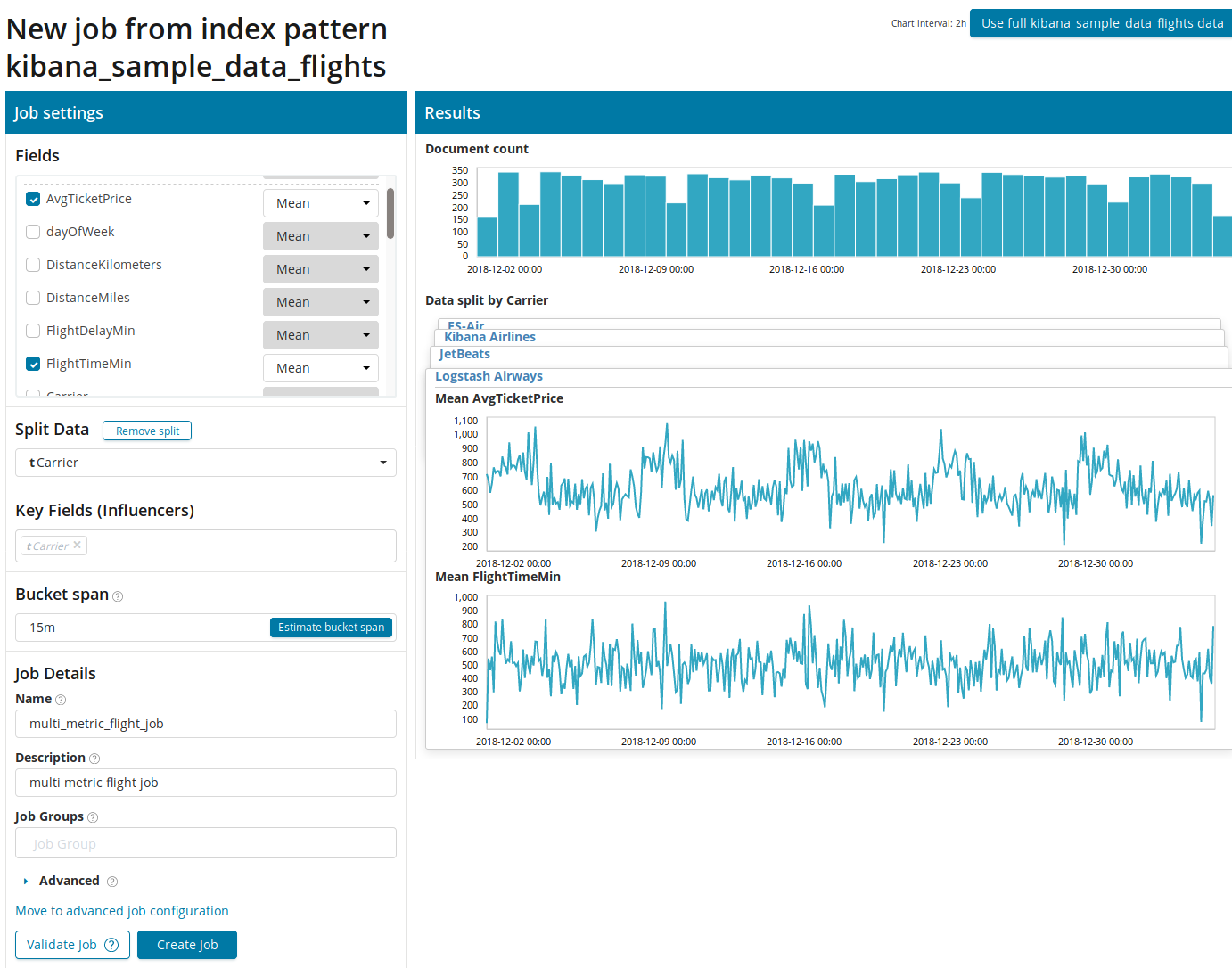

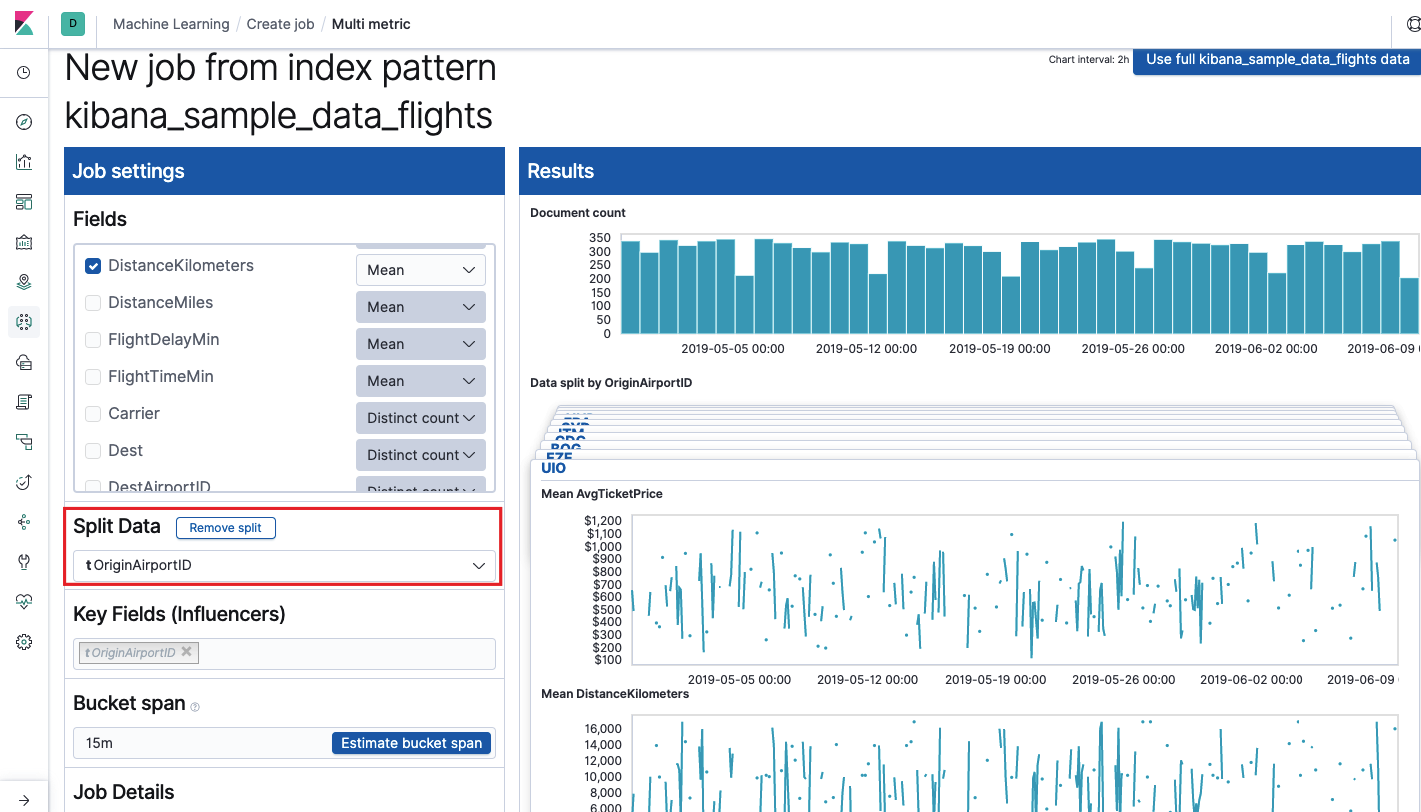

After clicking on the square with Multi Metric, a window with settings will appear. We dwell on them in more detail.

First you need to select the fields for analysis and data aggregation by them. The aggregation options are the same as for Single Metric ( Max, Hign Mean, Low, Mean, Distinct and others). Further, the data is optionally divided into one of the fields ( Split Data field). In the example, we did this in the OriginAirportID field. Note that the graph of the metrics on the right is now represented as a set of graphs.

The Key Fields (Influencers) field directly affects the anomalies found. By default, there will always be at least one value, and you can add additional ones. The algorithm will take into account the influence of these fields in the analysis and show the most "influential" values.

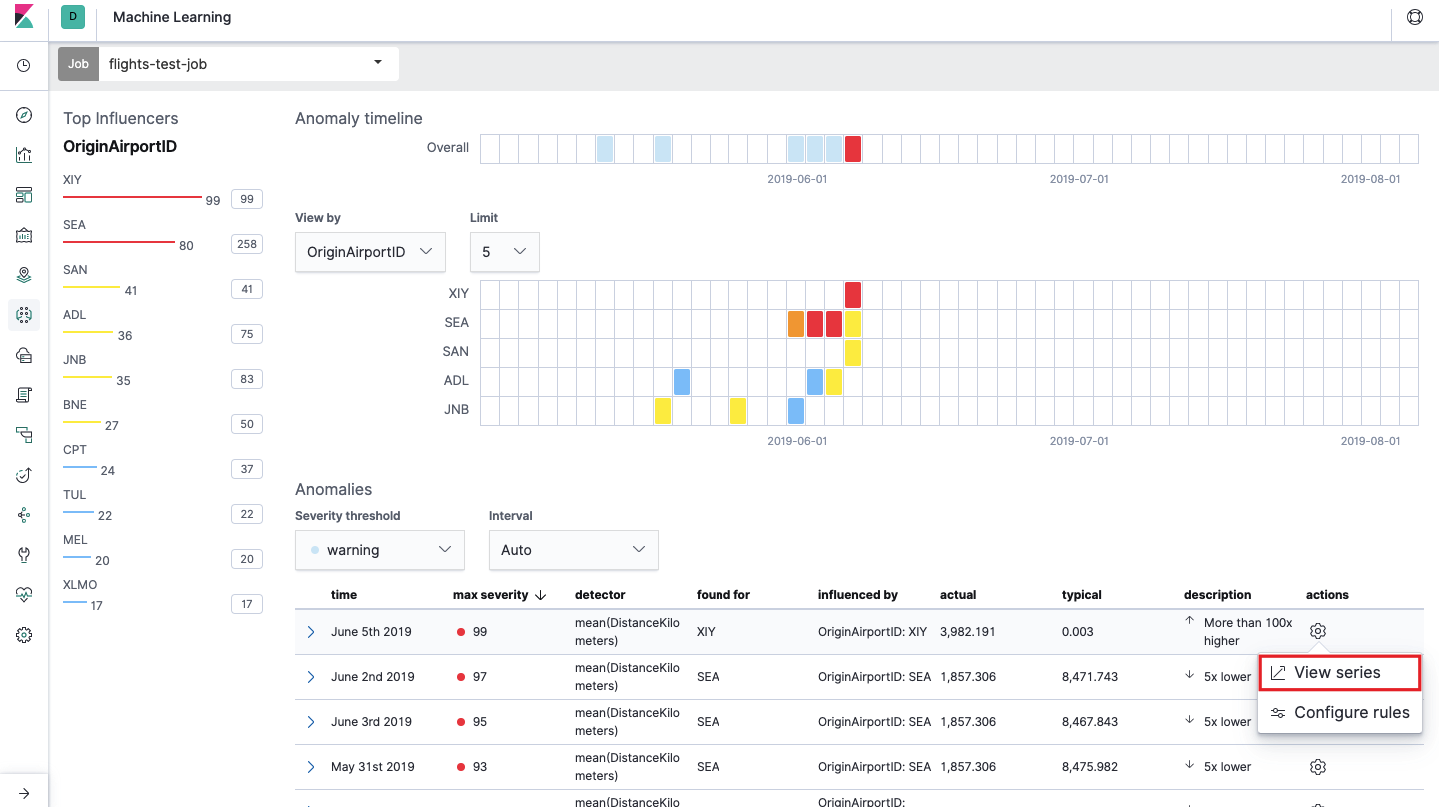

After launch, a similar picture will appear in the Kibana interface.

This is the so-called. heat map of anomalies for each value of the OriginAirportID field, which we indicated in Split Data . As in the case of Single Metric, the color indicates the level of abnormal deviation. A similar analysis is conveniently done, for example, on workstations to track those where there are many suspicious authorizations, etc. We have already written about suspicious events in EventLog Windows , which can also be collected and analyzed here.

Under the heat map a list of anomalies, from each you can go to the Single Metric view for detailed analysis.

Population

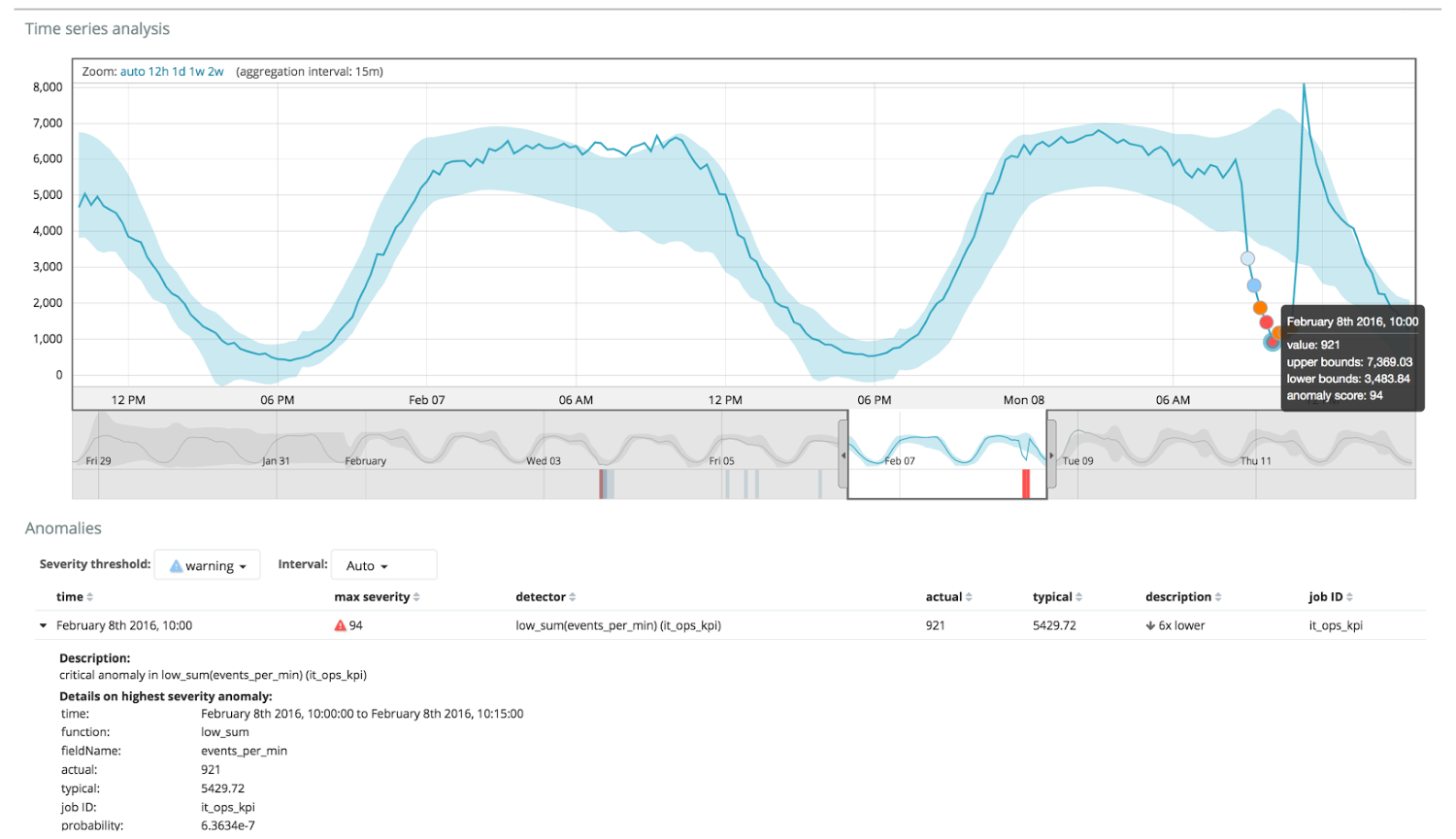

To look for anomalies among the correlations between different metrics in the Elastic Stack there is a specialized Population analysis. It is with the help of it that one can look for anomalous values in the performance of any server in comparison with the others with, for example, an increase in the number of requests to the target system.

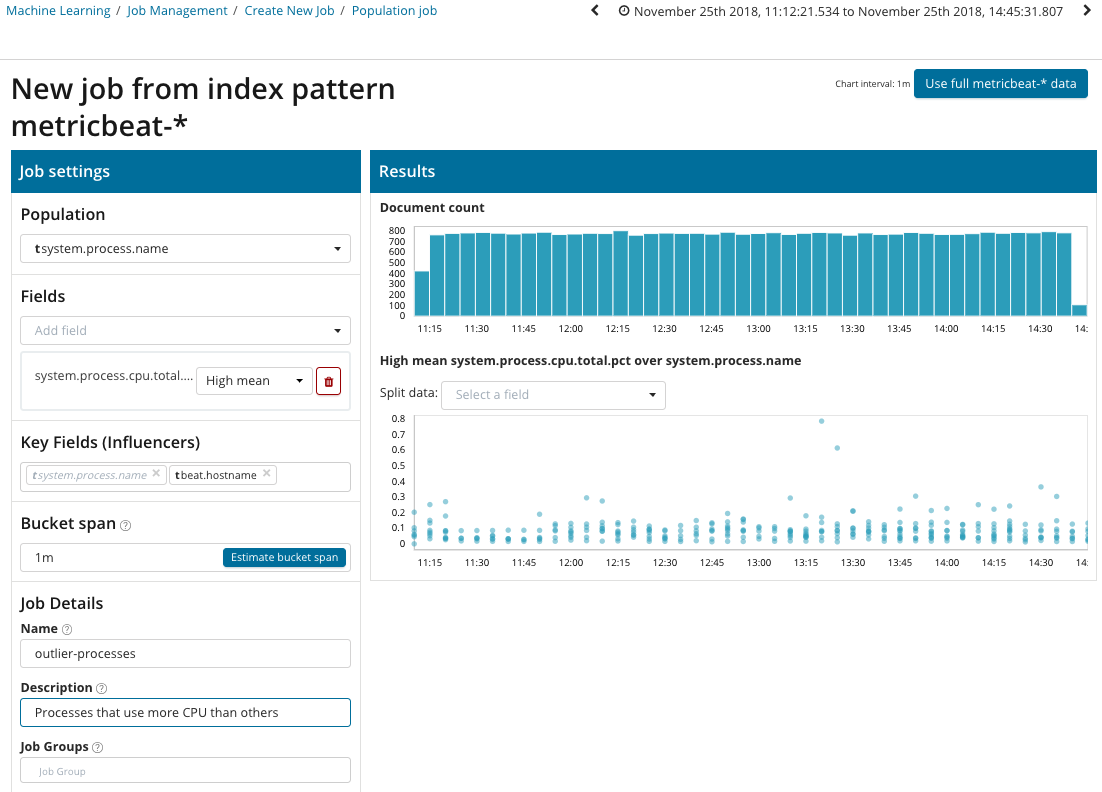

In this illustration, the Population field indicates the value to which the analyzed metrics will refer. In this is the name of the process. As a result, we will see how the processor load by each of the processes influenced each other.

Please note that the graph of the analyzed data differs from the cases with Single Metric and Multi Metric. This is done in Kibana by design for improved perception of the distribution of the values of the analyzed data.

From the graph it can be seen that the stress process (by the way, generated by a special utility) on the poipu server, which influenced (or turned out to be a fluencer) to the occurrence of this anomaly, behaved abnormally.

Advanced

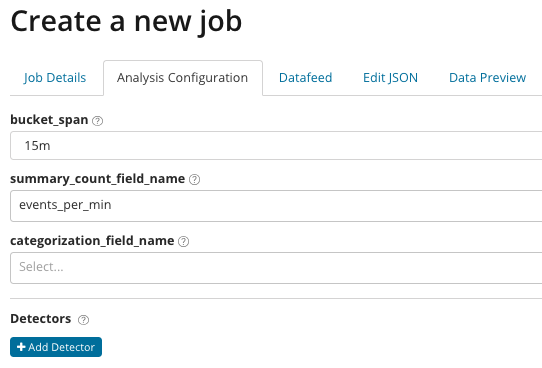

Analytics with fine tuning. With advanced analysis in Kibana additional settings appear. After clicking on the Create menu on the Advanced tile, this tab window appears. The Job Details tab was missed intentionally, there the basic settings are not directly related to the analysis settings.

In the summary_count_field_name optionally, you can specify the name of the field from documents containing aggregated values. In this example, the number of events per minute. The categorization_field_name specifies the name of the field value from the document that contains some variable value. According to the mask, this field can be used to break the analyzed data into subsets. Pay attention to the Add detector button in the previous illustration. Below is the result of clicking on this button.

Here is an additional block of settings for setting up the anomaly detector for a specific task. We will discuss the specific use cases (especially for security) in the following articles. For an example, look at one of the disassembled cases. It is associated with the search for rarely appearing values and is implemented by the rare function .

In the function field, you can select a specific function to search for anomalies. In addition to rare , there are a couple of interesting features - time_of_day and time_of_week . They bring out anomalies in the behavior of metrics throughout the day or week, respectively. The remaining analysis functions are in the documentation .

The field_name specifies the field of the document that will be analyzed. By_field_name can be used to separate the analysis results for each individual value of the document field specified here. If you fill in over_field_name, you get a population analysis, which we considered above. If you specify a value in partition_field_name , then this field of the document will calculate individual baselines for each value (values can be, for example, the name of the server or the process on the server). In exclude_frequent, you can select all or none , which will mean exclusion (or inclusion) of frequently encountered field values of documents.

In the article we tried to give an idea of the possibilities of machine learning in the Elastic Stack as concisely as possible, there are still a lot of details left overs. Tell us in the comments which cases you managed to solve with the help of the Elastic Stack and for what tasks you use it. To contact us you can use personal messages on Habré or a feedback form on the site .

Source: https://habr.com/ru/post/455387/

All Articles