Linux network application performance. Introduction

Web applications are now used everywhere, and among all transport protocols the lion's share is taken by HTTP. Studying the nuances of developing web applications, the majority devotes very little attention to the operating system, where these applications actually run. Separation of development (Dev) and operation (Ops) only worsened the situation. But with the spread of DevOps culture, developers are beginning to take responsibility for running their applications in the cloud, so it’s very useful for them to thoroughly get acquainted with the operating system backend. This is especially useful if you are trying to deploy a system for thousands or tens of thousands of simultaneous connections.

The limitations in web services are very similar to those in other applications. Whether they are load balancers or database servers, all of these applications have similar problems in a high-performance environment. Understanding these fundamental limitations and ways to overcome them in general will allow you to evaluate the performance and scalability of your web applications.

I am writing this series of articles in response to questions from young developers who want to become well-informed system architects. It is impossible to clearly understand the methods of optimizing Linux applications, not immersed in the basics, how they work at the operating system level. Although there are many types of applications, in this cycle I want to explore network applications, rather than desktop ones, such as a browser or text editor. This material is intended for developers and architects who want to understand how Linux or Unix programs work and how to structure them for high performance.

Linux is a server operating system, and most often your applications run on this OS. Although I say “Linux”, most of the time you can safely assume that all Unix-like operating systems are meant as a whole. However, I have not tested the accompanying code on other systems. So, if you are interested in FreeBSD or OpenBSD, the result may differ. When I try something Linux-specific, I point it out.

')

Although you can use this knowledge to create an application from scratch, and it will be perfectly optimized, but it is better not to do so. If you write a new C or C ++ web server for your organization’s business application, it may be your last day at work. However, knowledge of the structure of these applications will help in the selection of existing programs. You will be able to compare systems based on processes with systems based on threads as well as based on events. You will understand and appreciate why Nginx works better than Apache httpd, why a Tornado-based Python application can serve more users than a Django-based Python application.

ZeroHTTPd is a web server that I wrote from scratch in C as an educational tool. He has no external dependencies, including access to Redis. We run our own Redis routines. See below for details.

Although we could discuss the theory for a long time, there is nothing better than writing code, running it and comparing all the server architectures with each other. This is the most visual method. Therefore, we will write a simple ZeroHTTPd web server, applying each model: based on processes, threads and events. Let's check each of these servers and see how they work compared to each other. ZeroHTTPd is implemented in a single C file. The event-based server includes uthash , an excellent implementation of a hash table that is supplied in a single header file. In other cases, there are no dependencies, so as not to complicate the project.

The code has a lot of comments to help you figure it out. Being a simple web server in a few lines of code, ZeroHTTPd is also a minimal framework for web development. It has limited functionality, but it is capable of generating static files and very simple “dynamic” pages. I must say that ZeroHTTPd is well suited for learning how to create high-performance Linux applications. By and large, most web services are waiting for requests, check them and process them. This is exactly what ZeroHTTPd will do. This is a tool for learning, not for production. He is not good at handling errors and hardly boasts the best security practices (oh yeah, I used

The main page ZeroHTTPd. It can produce different types of files, including images.

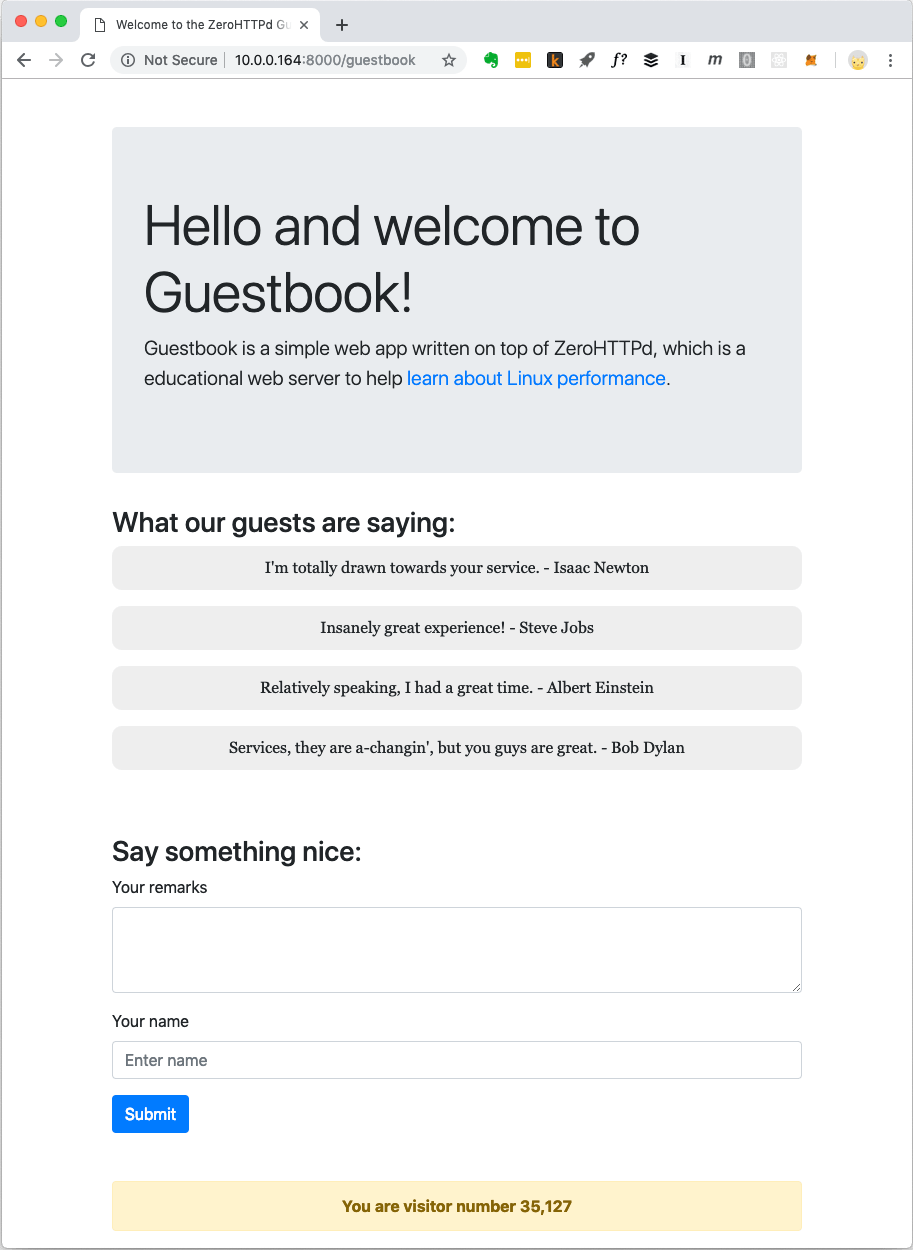

Modern web applications are usually not limited to static files. They have complex interactions with various databases, caches, etc. Therefore, we will create a simple web application called Guestbook, where visitors leave entries under their own names. In the guest book saved entries left earlier. There is also a visitor counter at the bottom of the page.

Guest book web application ZeroHTTPd

Visitor counters and guestbook entries are stored in Redis. Own procedures are implemented for communications with Redis, they do not depend on an external library. I'm not a big fan of rolling out homebrew code when there are generally available and well-tested solutions. But the goal of ZeroHTTPd is to study Linux performance and access to external services, while serving HTTP requests has a serious impact on performance. We must fully control the communication with Redis in each of our server architectures. In one architecture, we use blocking calls, in others, event-based procedures. Using the Redis external client library will not give such control. In addition, our little Redis client performs only a few functions (getting, setting and increasing the key; getting and adding to the array). In addition, the Redis protocol is extremely elegant and simple. He doesn’t even need to teach him. The fact that the protocol does all the work in about a hundred lines of code indicates how well-thought it is.

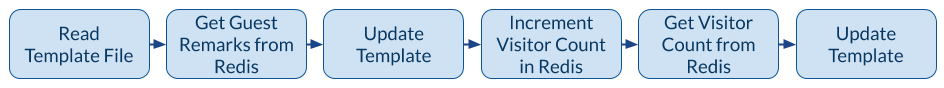

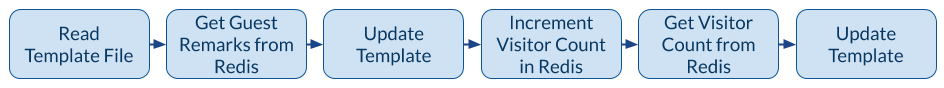

The following figure shows the actions of the application when the client (browser) requests

The mechanism of the guestbook application

When you need to issue a guestbook page, there is one call to the file system to read the template into memory and three network calls to Redis. The template file contains most of the HTML content for the page in the screenshot above. There are also special placeholders for the dynamic part of the content: records and visitor counters. We get them from Redis, paste them into the page and give the client the fully formed content. The third Redis call can be avoided because Redis returns the new key value when incremented. However, for our server with an asynchronous, event-based architecture, numerous network calls are a good test for training purposes. Thus, we discard the return value of Redis about the number of visitors and request it with a separate call.

We build seven versions of ZeroHTTPd with the same functionality, but different architectures:

We measure the performance of each architecture by loading the server with HTTP requests. But when comparing architectures with a high degree of parallelism, the number of queries increases. We test three times and consider the average.

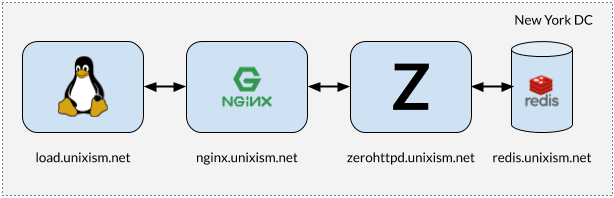

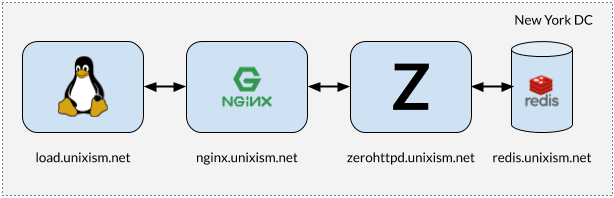

Installation for load testing ZeroHTTPd

It is important that when performing tests all components do not work on the same machine. In this case, the OS incurs additional planning overhead, as the components compete for the CPU. Measuring the operating system overhead for each of the selected server architectures is one of the most important goals of this exercise. Adding more variables will be detrimental to the process. Therefore, the setting in the figure above works best.

All servers run on the same processor core. The idea is to evaluate the maximum performance of each of the architectures. Since all server programs are tested on the same hardware, this is the basic level for comparing them. My test setup consists of virtual servers rented from Digital Ocean.

You can measure different indicators. We estimate the performance of each architecture in this configuration by loading servers with requests at different levels of parallelism: the load grows from 20 to 15,000 simultaneous users.

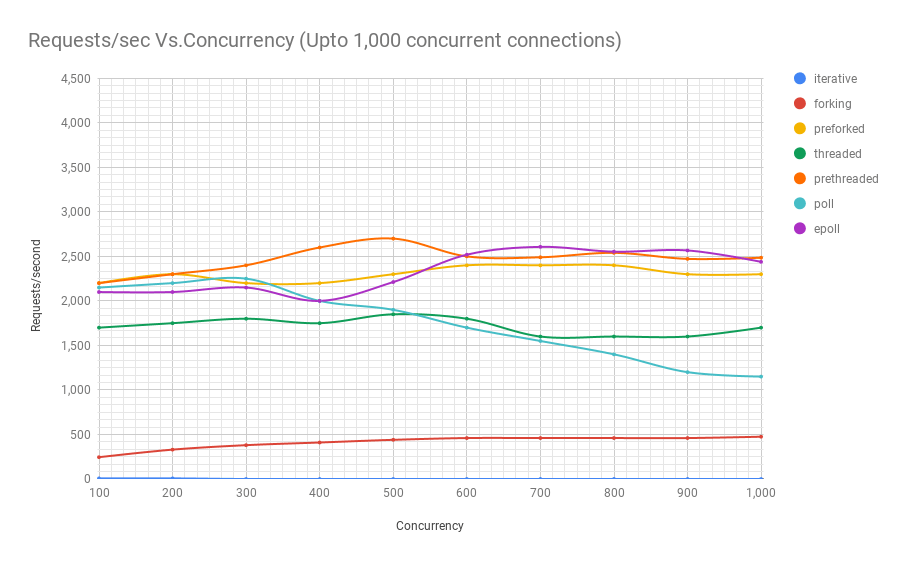

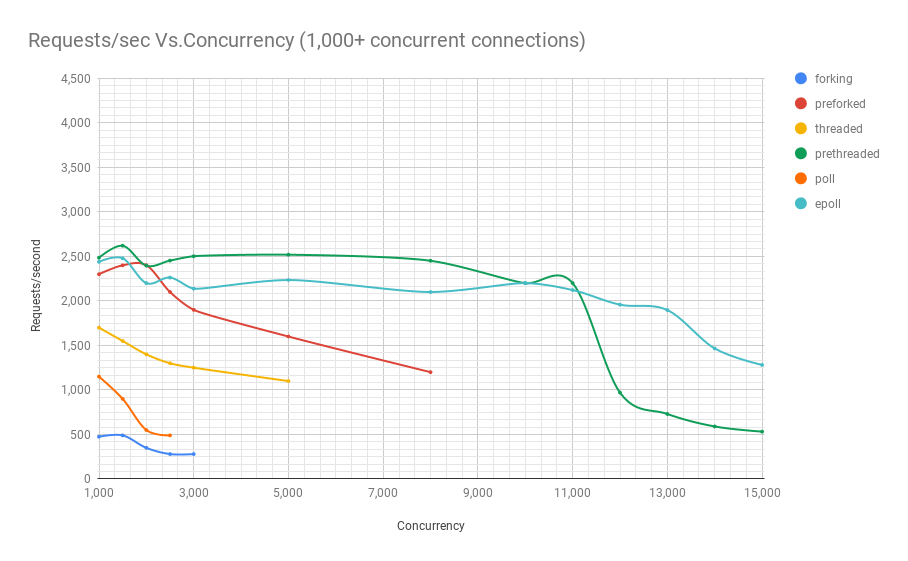

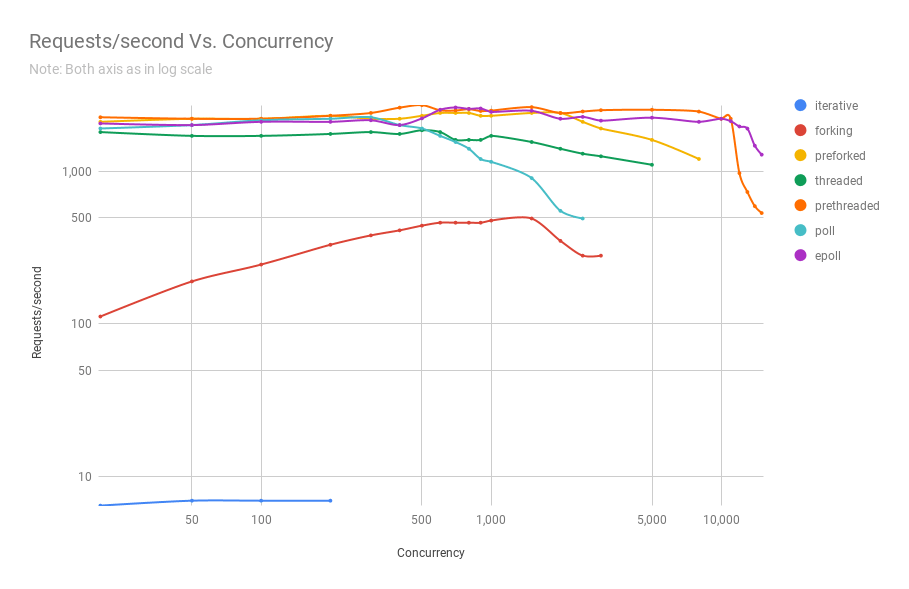

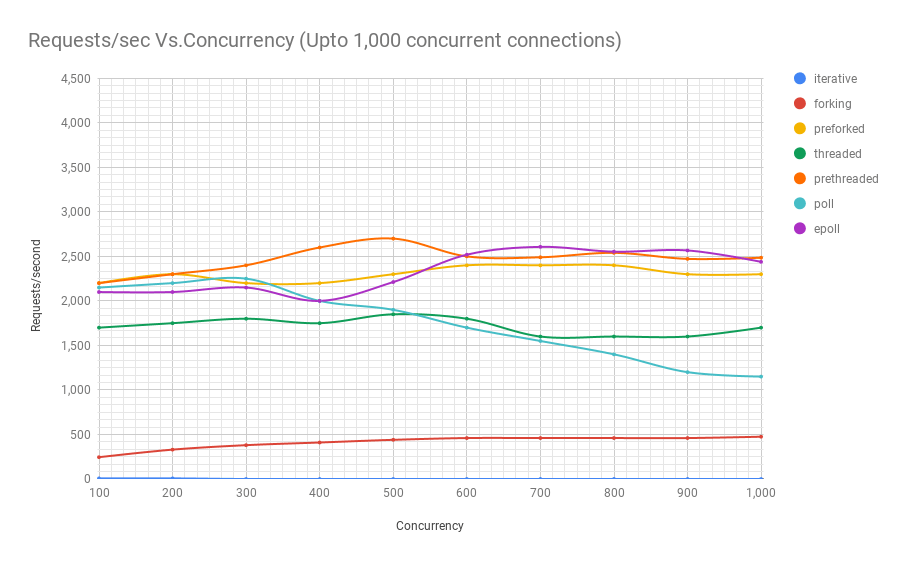

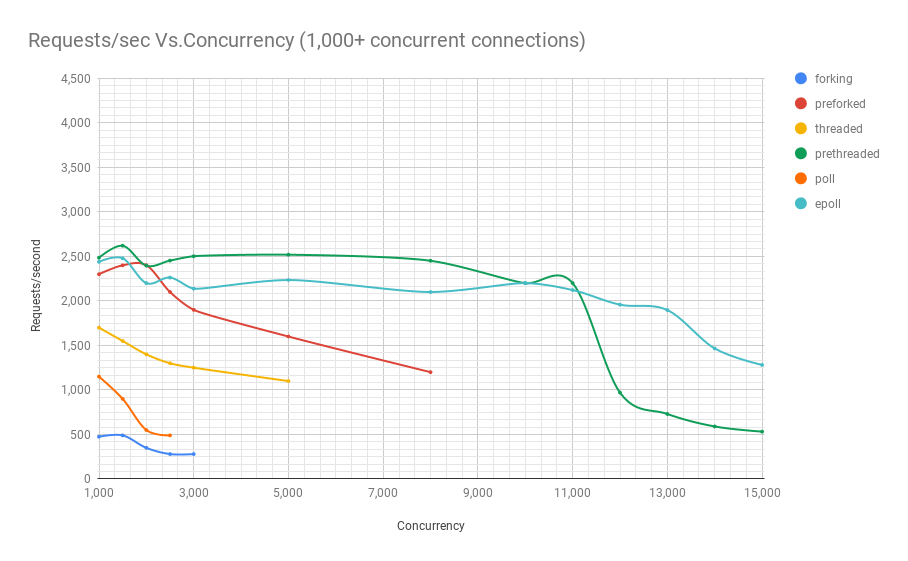

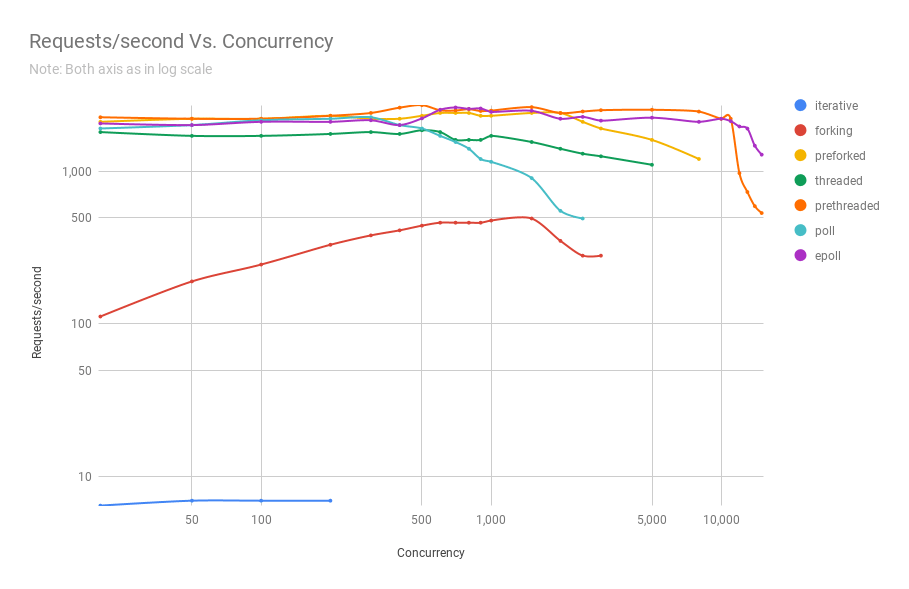

The following diagram shows the performance of servers on different architectures with different levels of parallelism. On the y axis - the number of requests per second, on the x axis - parallel connections.

Below is a table with the results.

From the graph and the table it is clear that above 8000 simultaneous requests, we have only two players left: pre-fork and epoll. As the load grows, a server based on poll works worse than a streaming one. The pre-threading architecture makes epoll worthy of competition: this is evidence of how well the Linux kernel plans a large number of threads.

ZeroHTTPd source code here . For each architecture, a separate directory.

In addition to the seven directories for all architectures, there are two more in the top-level directory: public and templates. The first one contains the index.html file and the image from the first screenshot. You can put other files and folders there, and ZeroHTTPd should easily issue these static files. If the path in the browser corresponds to the path in the public folder, then ZeroHTTPd searches the index.html file in this directory. Content for the guest book is generated dynamically. It has only the main page, and its content is based on the 'templates / guestbook / index.html' file. Dynamic pages for extensions are easily added to ZeroHTTPd. The idea is that users can add templates to this directory and extend ZeroHTTPd as needed.

To build all seven servers, run

To understand the information in this series of articles, it is not necessary to understand the Linux API well. However, I recommend reading more on this topic, there are many reference resources on the Web. Although we will cover several categories of the Linux API, our focus will be mainly on processes, threads, events, and the network stack. In addition to books and articles about the Linux API, I also recommend reading mana for system calls and library functions used.

One note about performance and scalability. Theoretically, there is no connection between them. You may have a web service that works very well, with a response time of a few milliseconds, but it does not scale at all. Similarly, there may be a poorly functioning web application that takes a few seconds to respond, but it scales to tens to handle tens of thousands of simultaneous users. However, the combination of high performance and scalability is a very powerful combination. High-performance applications generally save resources and, therefore, effectively serve more concurrent users on the server, reducing costs.

Finally, in calculations there are always two possible types of tasks: for I / O and CPU. Getting requests via the Internet (network I / O), file serving (network and disk I / O), communication with the database (network and disk I / O) are all I / O actions. Some queries to the database may slightly load the CPU (sorting, calculating the average value of a million results, etc.). Most web applications are limited to the maximum possible I / O, and the processor is rarely used at full capacity. When you see that a lot of CPUs are used in some I / O task, this is most likely a sign of a poor application architecture. This may mean that CPU resources are spent on managing processes and context switching - and this is not entirely useful. If you do something like image processing, audio file conversion or machine learning, then the application requires powerful CPU resources. But for most applications this is not the case.

The limitations in web services are very similar to those in other applications. Whether they are load balancers or database servers, all of these applications have similar problems in a high-performance environment. Understanding these fundamental limitations and ways to overcome them in general will allow you to evaluate the performance and scalability of your web applications.

I am writing this series of articles in response to questions from young developers who want to become well-informed system architects. It is impossible to clearly understand the methods of optimizing Linux applications, not immersed in the basics, how they work at the operating system level. Although there are many types of applications, in this cycle I want to explore network applications, rather than desktop ones, such as a browser or text editor. This material is intended for developers and architects who want to understand how Linux or Unix programs work and how to structure them for high performance.

Linux is a server operating system, and most often your applications run on this OS. Although I say “Linux”, most of the time you can safely assume that all Unix-like operating systems are meant as a whole. However, I have not tested the accompanying code on other systems. So, if you are interested in FreeBSD or OpenBSD, the result may differ. When I try something Linux-specific, I point it out.

')

Although you can use this knowledge to create an application from scratch, and it will be perfectly optimized, but it is better not to do so. If you write a new C or C ++ web server for your organization’s business application, it may be your last day at work. However, knowledge of the structure of these applications will help in the selection of existing programs. You will be able to compare systems based on processes with systems based on threads as well as based on events. You will understand and appreciate why Nginx works better than Apache httpd, why a Tornado-based Python application can serve more users than a Django-based Python application.

ZeroHTTPd: a learning tool

ZeroHTTPd is a web server that I wrote from scratch in C as an educational tool. He has no external dependencies, including access to Redis. We run our own Redis routines. See below for details.

Although we could discuss the theory for a long time, there is nothing better than writing code, running it and comparing all the server architectures with each other. This is the most visual method. Therefore, we will write a simple ZeroHTTPd web server, applying each model: based on processes, threads and events. Let's check each of these servers and see how they work compared to each other. ZeroHTTPd is implemented in a single C file. The event-based server includes uthash , an excellent implementation of a hash table that is supplied in a single header file. In other cases, there are no dependencies, so as not to complicate the project.

The code has a lot of comments to help you figure it out. Being a simple web server in a few lines of code, ZeroHTTPd is also a minimal framework for web development. It has limited functionality, but it is capable of generating static files and very simple “dynamic” pages. I must say that ZeroHTTPd is well suited for learning how to create high-performance Linux applications. By and large, most web services are waiting for requests, check them and process them. This is exactly what ZeroHTTPd will do. This is a tool for learning, not for production. He is not good at handling errors and hardly boasts the best security practices (oh yeah, I used

strcpy ) or abstruse stunts of the C language. But I hope he will cope well with his task.

The main page ZeroHTTPd. It can produce different types of files, including images.

Guestbook application

Modern web applications are usually not limited to static files. They have complex interactions with various databases, caches, etc. Therefore, we will create a simple web application called Guestbook, where visitors leave entries under their own names. In the guest book saved entries left earlier. There is also a visitor counter at the bottom of the page.

Guest book web application ZeroHTTPd

Visitor counters and guestbook entries are stored in Redis. Own procedures are implemented for communications with Redis, they do not depend on an external library. I'm not a big fan of rolling out homebrew code when there are generally available and well-tested solutions. But the goal of ZeroHTTPd is to study Linux performance and access to external services, while serving HTTP requests has a serious impact on performance. We must fully control the communication with Redis in each of our server architectures. In one architecture, we use blocking calls, in others, event-based procedures. Using the Redis external client library will not give such control. In addition, our little Redis client performs only a few functions (getting, setting and increasing the key; getting and adding to the array). In addition, the Redis protocol is extremely elegant and simple. He doesn’t even need to teach him. The fact that the protocol does all the work in about a hundred lines of code indicates how well-thought it is.

The following figure shows the actions of the application when the client (browser) requests

/guestbookURL .

The mechanism of the guestbook application

When you need to issue a guestbook page, there is one call to the file system to read the template into memory and three network calls to Redis. The template file contains most of the HTML content for the page in the screenshot above. There are also special placeholders for the dynamic part of the content: records and visitor counters. We get them from Redis, paste them into the page and give the client the fully formed content. The third Redis call can be avoided because Redis returns the new key value when incremented. However, for our server with an asynchronous, event-based architecture, numerous network calls are a good test for training purposes. Thus, we discard the return value of Redis about the number of visitors and request it with a separate call.

ZeroHTTPd Server Architectures

We build seven versions of ZeroHTTPd with the same functionality, but different architectures:

- Iterative

- Fork server (one child process per request)

- Pre-fork server (pre-forking processes)

- Server with threads of execution (one thread per request)

- Server with pre-threading

poll()based architecture- Epoll-based architecture

We measure the performance of each architecture by loading the server with HTTP requests. But when comparing architectures with a high degree of parallelism, the number of queries increases. We test three times and consider the average.

Testing Methodology

Installation for load testing ZeroHTTPd

It is important that when performing tests all components do not work on the same machine. In this case, the OS incurs additional planning overhead, as the components compete for the CPU. Measuring the operating system overhead for each of the selected server architectures is one of the most important goals of this exercise. Adding more variables will be detrimental to the process. Therefore, the setting in the figure above works best.

What each of these servers does

- load.unixism.net: here we run

ab, the Apache Benchmark utility. It generates the workload required to test our server architectures. - nginx.unixism.net: sometimes we want to run more than one instance of the server program. To do this, the Nginx server with the appropriate settings works as a load balancer from ab to our server processes.

- zerohttpd.unixism.net: here we run our server programs on seven different architectures, one at a time.

- redis.unixism.net: the Redis daemon is running on this server, where guestbook entries and the visitors counter are stored.

All servers run on the same processor core. The idea is to evaluate the maximum performance of each of the architectures. Since all server programs are tested on the same hardware, this is the basic level for comparing them. My test setup consists of virtual servers rented from Digital Ocean.

What do we measure?

You can measure different indicators. We estimate the performance of each architecture in this configuration by loading servers with requests at different levels of parallelism: the load grows from 20 to 15,000 simultaneous users.

Test results

The following diagram shows the performance of servers on different architectures with different levels of parallelism. On the y axis - the number of requests per second, on the x axis - parallel connections.

Below is a table with the results.

| requests per second | |||||||

| parallelism | iterative | fork | pre-fork | streaming | pre-streaming | poll | epoll |

| 20 | 7 | 112 | 2100 | 1800 | 2250 | 1900 | 2050 |

| 50 | 7 | 190 | 2200 | 1700 | 2200 | 2000 | 2000 |

| 100 | 7 | 245 | 2200 | 1700 | 2200 | 2150 | 2100 |

| 200 | 7 | 330 | 2300 | 1750 | 2300 | 2200 | 2100 |

| 300 | - | 380 | 2200 | 1800 | 2400 | 2250 | 2150 |

| 400 | - | 410 | 2200 | 1750 | 2600 | 2000 | 2000 |

| 500 | - | 440 | 2300 | 1850 | 2700 | 1900 | 2212 |

| 600 | - | 460 | 2400 | 1800 | 2500 | 1700 | 2519 |

| 700 | - | 460 | 2400 | 1600 | 2490 | 1550 | 2607 |

| 800 | - | 460 | 2400 | 1600 | 2540 | 1400 | 2553 |

| 900 | - | 460 | 2300 | 1600 | 2472 | 1200 | 2567 |

| 1000 | - | 475 | 2300 | 1700 | 2485 | 1150 | 2439 |

| 1500 | - | 490 | 2400 | 1550 | 2620 | 900 | 2479 |

| 2000 | - | 350 | 2400 | 1400 | 2396 | 550 | 2200 |

| 2500 | - | 280 | 2100 | 1300 | 2453 | 490 | 2262 |

| 3000 | - | 280 | 1900 | 1250 | 2502 | large scatter | 2138 |

| 5000 | - | large scatter | 1600 | 1100 | 2519 | - | 2235 |

| 8,000 | - | - | 1200 | large scatter | 2451 | - | 2100 |

| 10,000 | - | - | large scatter | - | 2200 | - | 2200 |

| 11,000 | - | - | - | - | 2200 | - | 2122 |

| 12,000 | - | - | - | - | 970 | - | 1958 |

| 13,000 | - | - | - | - | 730 | - | 1897 |

| 14,000 | - | - | - | - | 590 | - | 1466 |

| 15,000 | - | - | - | - | 532 | - | 1281 |

From the graph and the table it is clear that above 8000 simultaneous requests, we have only two players left: pre-fork and epoll. As the load grows, a server based on poll works worse than a streaming one. The pre-threading architecture makes epoll worthy of competition: this is evidence of how well the Linux kernel plans a large number of threads.

ZeroHTTPd source code

ZeroHTTPd source code here . For each architecture, a separate directory.

ZeroHTTPd

│

01── 01_iterative

│ ├── main.c

02── 02_forking

│ ├── main.c

03── 03_preforking

│ ├── main.c

04── 04_threading

│ ├── main.c

05── 05_prethreading

│ ├── main.c

06── 06_poll

│ ├── main.c

07── 07_epoll

│ └── main.c

Make── Makefile

Public──public

│ ├── index.html

│ └── tux.png

Templates── templates

Guest── guestbook

Index── index.html In addition to the seven directories for all architectures, there are two more in the top-level directory: public and templates. The first one contains the index.html file and the image from the first screenshot. You can put other files and folders there, and ZeroHTTPd should easily issue these static files. If the path in the browser corresponds to the path in the public folder, then ZeroHTTPd searches the index.html file in this directory. Content for the guest book is generated dynamically. It has only the main page, and its content is based on the 'templates / guestbook / index.html' file. Dynamic pages for extensions are easily added to ZeroHTTPd. The idea is that users can add templates to this directory and extend ZeroHTTPd as needed.

To build all seven servers, run

make all from the top-level directory - and all builds will appear in this directory. Executable files look for public and templates directories in the directory from which they are launched.Linux API

To understand the information in this series of articles, it is not necessary to understand the Linux API well. However, I recommend reading more on this topic, there are many reference resources on the Web. Although we will cover several categories of the Linux API, our focus will be mainly on processes, threads, events, and the network stack. In addition to books and articles about the Linux API, I also recommend reading mana for system calls and library functions used.

Performance and Scalability

One note about performance and scalability. Theoretically, there is no connection between them. You may have a web service that works very well, with a response time of a few milliseconds, but it does not scale at all. Similarly, there may be a poorly functioning web application that takes a few seconds to respond, but it scales to tens to handle tens of thousands of simultaneous users. However, the combination of high performance and scalability is a very powerful combination. High-performance applications generally save resources and, therefore, effectively serve more concurrent users on the server, reducing costs.

CPU and I / O tasks

Finally, in calculations there are always two possible types of tasks: for I / O and CPU. Getting requests via the Internet (network I / O), file serving (network and disk I / O), communication with the database (network and disk I / O) are all I / O actions. Some queries to the database may slightly load the CPU (sorting, calculating the average value of a million results, etc.). Most web applications are limited to the maximum possible I / O, and the processor is rarely used at full capacity. When you see that a lot of CPUs are used in some I / O task, this is most likely a sign of a poor application architecture. This may mean that CPU resources are spent on managing processes and context switching - and this is not entirely useful. If you do something like image processing, audio file conversion or machine learning, then the application requires powerful CPU resources. But for most applications this is not the case.

Learn more about server architectures.

Source: https://habr.com/ru/post/455212/

All Articles