Compiling C to WebAssembly without Emscripten

A compiler is just one part of Emscripten. What if we stripped away all the bells and whistles and kept only the compiler?

Emscripten is a tool for compiling C/C++ to WebAssembly, but it is much more than just a compiler. Emscripten's goal is to be a drop-in replacement for your C/C++ compiler and to make code that was never written for the web run on the web. To achieve this, Emscripten emulates an entire POSIX operating system. If the program uses fopen(), Emscripten brings along code that emulates a file system. If it uses OpenGL, Emscripten provides a C-compatible GL context backed by WebGL. That is a lot of work, and a lot of code that ends up in the final bundle. But can we just... remove it?

The actual compiler in Emscripten's toolchain is LLVM. It is LLVM that translates C code into WebAssembly bytecode. LLVM is a modern, modular framework for analyzing, transforming and optimizing programs. It is modular in the sense that it never compiles straight to machine code. Instead, the built-in frontend compiler generates an intermediate representation (IR). This IR is what gave the project its name: LLVM originally stood for Low Level Virtual Machine.

A backend compiler then translates the IR into the host's machine code. The advantage of such a strict separation is that a new architecture can be supported by the "simple" addition of a new backend. In that sense, WebAssembly is just one of the many compilation targets LLVM supports. For a while it was only available behind a flag, but since LLVM 8 the WebAssembly target is available by default.


On macOS, you can install LLVM using Homebrew:

```console
$ brew install llvm
$ brew link --force llvm
```

Check for WebAssembly support:

```console
$ llc --version
LLVM (http://llvm.org/):
  LLVM version 8.0.0
  Optimized build.
  Default target: x86_64-apple-darwin18.5.0
  Host CPU: skylake

  Registered Targets:
    # … (other targets omitted)
    systemz    - SystemZ
    thumb      - Thumb
    thumbeb    - Thumb (big endian)
    wasm32     - WebAssembly 32-bit # !!!
    wasm64     - WebAssembly 64-bit
    x86        - 32-bit X86: Pentium-Pro and above
    x86-64     - 64-bit X86: EM64T and AMD64
    xcore      - XCore
```

It seems we are ready!

Compiling C the Hard Way

Note: here we will look at some low-level, raw WebAssembly formats. If you find them hard to understand, that is fine. Using WebAssembly well does not require understanding everything in this article. If you are looking for code to copy and paste, see the compiler invocation in the "Optimization" section. But if you are interested, keep reading! I previously wrote an introduction to raw WebAssembly and WAT, which covers the basics you need to understand this post.

Warning: I will bend the standard a little here, and at every step I will try to use human-readable formats as far as possible. Our program will be very simple, to avoid edge cases and distractions:

```c
// Filename: add.c
int add(int a, int b) {
  return a*a + b;
}
```

What a magnificent feat of engineering! Especially since the program is called add, but it does not actually add anything. More importantly: the program does not use the standard library, and the only type it uses is 'int'.

Turning C into LLVM IR

The first step is to turn our C program into LLVM IR. This is the job of the clang frontend compiler, which is installed with LLVM:

```sh
# --target=wasm32   Target WebAssembly
# -emit-llvm        Emit LLVM IR (instead of host machine code)
# -c                Only compile, no linking just yet
# -S                Emit human-readable assembly rather than binary
clang --target=wasm32 -emit-llvm -c -S add.c
```

As a result, we get add.ll, which contains the LLVM IR. I show it only for the sake of completeness. When working with WebAssembly, or even with clang, you as a C developer never come into contact with LLVM IR.

```llvm
; ModuleID = 'add.c'
source_filename = "add.c"
target datalayout = "e-m:e-p:32:32-i64:64-n32:64-S128"
target triple = "wasm32"

; Function Attrs: norecurse nounwind readnone
define hidden i32 @add(i32, i32) local_unnamed_addr #0 {
  %3 = mul nsw i32 %0, %0
  %4 = add nsw i32 %3, %1
  ret i32 %4
}

attributes #0 = { norecurse nounwind readnone "correctly-rounded-divide-sqrt-fp-math"="false" "disable-tail-calls"="false" "less-precise-fpmad"="false" "min-legal-vector-width"="0" "no-frame-pointer-elim"="false" "no-infs-fp-math"="false" "no-jump-tables"="false" "no-nans-fp-math"="false" "no-signed-zeros-fp-math"="false" "no-trapping-math"="false" "stack-protector-buffer-size"="8" "target-cpu"="generic" "unsafe-fp-math"="false" "use-soft-float"="false" }

!llvm.module.flags = !{!0}
!llvm.ident = !{!1}

!0 = !{i32 1, !"wchar_size", i32 4}
!1 = !{!"clang version 8.0.0 (tags/RELEASE_800/final)"}
```

LLVM IR is full of additional metadata and annotations, which allow the compiler to make more informed decisions when generating machine code.

Turn LLVM IR into Object Files

The next step is to call the LLVM backend compiler llc, which turns the IR into an object file (this is what its -filetype=obj flag requests). The output file add.o is already a valid WebAssembly module and contains all the compiled code of our C file. Usually, however, you cannot run object files, because they still lack essential parts.

If we omitted -filetype=obj, we would get LLVM's assembly for WebAssembly instead. This is a human-readable format that is somewhat similar to WAT. However, llvm-mc, the tool that consumes such files, does not fully support this format yet and often fails to process it. So instead, we disassemble the object files after the fact. Object files are target-specific, so inspecting them requires a target-specific tool. For WebAssembly, that tool is wasm-objdump, part of the WebAssembly Binary Toolkit, or wabt for short.

```console
$ brew install wabt # in case you haven't
$ wasm-objdump -x add.o

add.o:  file format wasm 0x1

Section Details:

Type[1]:
 - type[0] (i32, i32) -> i32
Import[3]:
 - memory[0] pages: initial=0 <- env.__linear_memory
 - table[0] elem_type=funcref init=0 max=0 <- env.__indirect_function_table
 - global[0] i32 mutable=1 <- env.__stack_pointer
Function[1]:
 - func[0] sig=0 <add>
Code[1]:
 - func[0] size=75 <add>
Custom:
 - name: "linking"
  - symbol table [count=2]
   - 0: F <add> func=0 binding=global vis=hidden
   - 1: G <env.__stack_pointer> global=0 undefined binding=global vis=default
Custom:
 - name: "reloc.CODE"
  - relocations for section: 3 (Code) [1]
   - R_WASM_GLOBAL_INDEX_LEB offset=0x000006(file=0x000080) symbol=1 <env.__stack_pointer>
```

The output shows that our add() function is in this module. However, the module also contains custom sections with metadata and, surprisingly, several imports. In the next stage, linking, the linker will analyze and remove the custom sections and resolve the imports.

Linking

Traditionally, the linker's job is to assemble several object files into an executable. The LLVM linker is called lld, but it has to be invoked through one of its target-specific symbolic links. For WebAssembly, that link is wasm-ld.

```sh
# --no-entry     We don't have an entry function
# --export-all   Export everything (for now)
wasm-ld \
  --no-entry \
  --export-all \
  -o add.wasm \
  add.o
```

The result is a 262-byte WebAssembly module.

Running It

Of course, the most important thing is to see that everything actually works. As in the previous article, a couple of lines of inline JavaScript are enough to load and run this WebAssembly module:

```html
<!DOCTYPE html>
<script type="module">
  async function init() {
    const { instance } = await WebAssembly.instantiateStreaming(
      fetch("./add.wasm")
    );
    console.log(instance.exports.add(4, 1));
  }
  init();
</script>
```

If everything is fine, you will see the number 17 in the DevTools console. We have just compiled C into WebAssembly without touching Emscripten. It is also worth noting that no glue code is needed to set up and load the WebAssembly module. Keep in mind that WebAssembly.instantiateStreaming() only accepts responses served with the application/wasm MIME type. For that reason, load the page through a local web server rather than opening it from disk.

Compiling C the Slightly Less Hard Way

We have taken quite a few steps to compile C into WebAssembly. As I said, I bent the standard for educational purposes and went through every step in detail. Let's now skip the human-readable intermediate formats and use the C compiler as the Swiss army knife it was designed to be:

```sh
# -nostdlib       Don't try to link against a standard library
# -Wl,<flag>      Pass <flag> on to the linker (wasm-ld)
clang \
  --target=wasm32 \
  -nostdlib \
  -Wl,--no-entry \
  -Wl,--export-all \
  -o add.wasm \
  add.c
```

Here we get the same .wasm file, but with a single command.

Optimization

Let's look at the WAT of our WebAssembly module by running wasm2wat:

```wat
(module
  (type (;0;) (func))
  (type (;1;) (func (param i32 i32) (result i32)))
  (func $__wasm_call_ctors (type 0))
  (func $add (type 1) (param i32 i32) (result i32)
    (local i32 i32 i32 i32 i32 i32 i32 i32)
    global.get 0
    local.set 2
    i32.const 16
    local.set 3
    local.get 2
    local.get 3
    i32.sub
    local.set 4
    local.get 4
    local.get 0
    i32.store offset=12
    local.get 4
    local.get 1
    i32.store offset=8
    local.get 4
    i32.load offset=12
    local.set 5
    local.get 4
    i32.load offset=12
    local.set 6
    local.get 5
    local.get 6
    i32.mul
    local.set 7
    local.get 4
    i32.load offset=8
    local.set 8
    local.get 7
    local.get 8
    i32.add
    local.set 9
    local.get 9
    return)
  (table (;0;) 1 1 anyfunc)
  (memory (;0;) 2)
  (global (;0;) (mut i32) (i32.const 66560))
  (global (;1;) i32 (i32.const 66560))
  (global (;2;) i32 (i32.const 1024))
  (global (;3;) i32 (i32.const 1024))
  (export "memory" (memory 0))
  (export "__wasm_call_ctors" (func $__wasm_call_ctors))
  (export "__heap_base" (global 1))
  (export "__data_end" (global 2))
  (export "__dso_handle" (global 3))
  (export "add" (func $add)))
```

Wow, that is a lot of code. To my surprise, the module uses memory (as seen in i32.load and i32.store), eight locals and several globals. You could probably write a more concise version by hand. The program is this big because we have not enabled any optimizations. Let's change that:

```sh
# New flags compared to the previous command:
# -O3            Aggressive optimizations
# -flto          Add metadata for link-time optimizations
# -Wl,--lto-O3   Aggressive link-time optimizations
clang \
  --target=wasm32 \
  -O3 \
  -flto \
  -nostdlib \
  -Wl,--no-entry \
  -Wl,--export-all \
  -Wl,--lto-O3 \
  -o add.wasm \
  add.c
```

Note: technically, link-time optimization (LTO) brings no benefit here, since we link only a single file. In larger projects, LTO can significantly reduce the file size.

After running this command, the .wasm file shrank from 262 to 197 bytes, and the WAT became much simpler:

```wat
(module
  (type (;0;) (func))
  (type (;1;) (func (param i32 i32) (result i32)))
  (func $__wasm_call_ctors (type 0))
  (func $add (type 1) (param i32 i32) (result i32)
    local.get 0
    local.get 0
    i32.mul
    local.get 1
    i32.add)
  (table (;0;) 1 1 anyfunc)
  (memory (;0;) 2)
  (global (;0;) (mut i32) (i32.const 66560))
  (global (;1;) i32 (i32.const 66560))
  (global (;2;) i32 (i32.const 1024))
  (global (;3;) i32 (i32.const 1024))
  (export "memory" (memory 0))
  (export "__wasm_call_ctors" (func $__wasm_call_ctors))
  (export "__heap_base" (global 1))
  (export "__data_end" (global 2))
  (export "__dso_handle" (global 3))
  (export "add" (func $add)))
```

Calling the standard library

C without its standard library (libc) is pretty rough. Adding one seems like the logical next step, but I will be honest: it will not be easy. In fact, I do not link against any libc in this article. There are several suitable implementations, most notably glibc, musl and dietlibc. However, most of them expect to run on a POSIX operating system, which implements a specific set of system calls (calls into the kernel). We do not have a kernel interface in JavaScript, so we would have to implement these POSIX system calls ourselves, probably by calling out to JavaScript. That is a big task, and I am not going to do it here. The good news is that this is exactly what Emscripten does for you.

Of course, not all libc functions rely on system calls. Functions like strlen(), sin() or even memset() are implemented in plain C. This means you can use these functions, or simply copy and paste their implementations from one of the libraries mentioned above.

Dynamic memory

Without libc, fundamental C interfaces such as malloc() and free() are not available to us. In the unoptimized WAT, we saw that the compiler uses memory when it needs to. This means we cannot simply use the memory however we like without risking corruption. We need to understand how it is used.

LLVM's memory model

The way wasm-ld segments WebAssembly memory may surprise experienced C programmers. First, address 0 is technically valid in WebAssembly, but a lot of C code still treats it as an error (a null pointer). Second, the stack comes first and grows downwards (towards lower addresses), while the heap comes after it and grows upwards. The reason is that WebAssembly memory can grow at runtime, so there is no fixed end at which the stack or the heap could be placed.
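To make the layout concrete, here is a small sketch that does the bookkeeping in plain C. It uses the numbers wasm-ld chose for our module, all visible in the WAT above: 1024 (exported as __data_end), 66560 (exported as __heap_base) and an initial memory of 2 pages. The sketch assumes the standard WebAssembly page size of 64 KiB. The macro and function names are made up for illustration and are not part of any toolchain API.

```c
#include <assert.h>
#include <stdint.h>

/* WebAssembly linear memory is sized and grown in pages of 64 KiB. */
#define WASM_PAGE_SIZE 65536u

/* Values from the WAT of our module (illustrative macro names). */
#define DATA_END 1024u    /* global 2, exported as __data_end  */
#define HEAP_BASE 66560u  /* global 1, exported as __heap_base */
#define INITIAL_PAGES 2u  /* (memory (;0;) 2)                  */

/* The stack lives between the end of the static data and the heap. */
uint32_t stack_capacity(uint32_t data_end, uint32_t heap_base) {
  return heap_base - data_end;
}

/* The heap runs from __heap_base up to the current end of linear memory. */
uint32_t heap_capacity(uint32_t heap_base, uint32_t pages) {
  return pages * WASM_PAGE_SIZE - heap_base;
}

/* 66560 - 1024 = 65536 bytes: the stack is capped at 64 KiB. */
static_assert(HEAP_BASE - DATA_END == 64u * 1024u, "stack is 64 KiB");
```

With these values, the stack gets 65536 bytes and the heap starts with 2 * 65536 - 66560 = 64512 bytes. Growing the memory by one page (for example with memory.grow) only enlarges the region after __heap_base. That is why the heap sits at the end, while the stack, placed before it, has a size that is fixed at link time.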

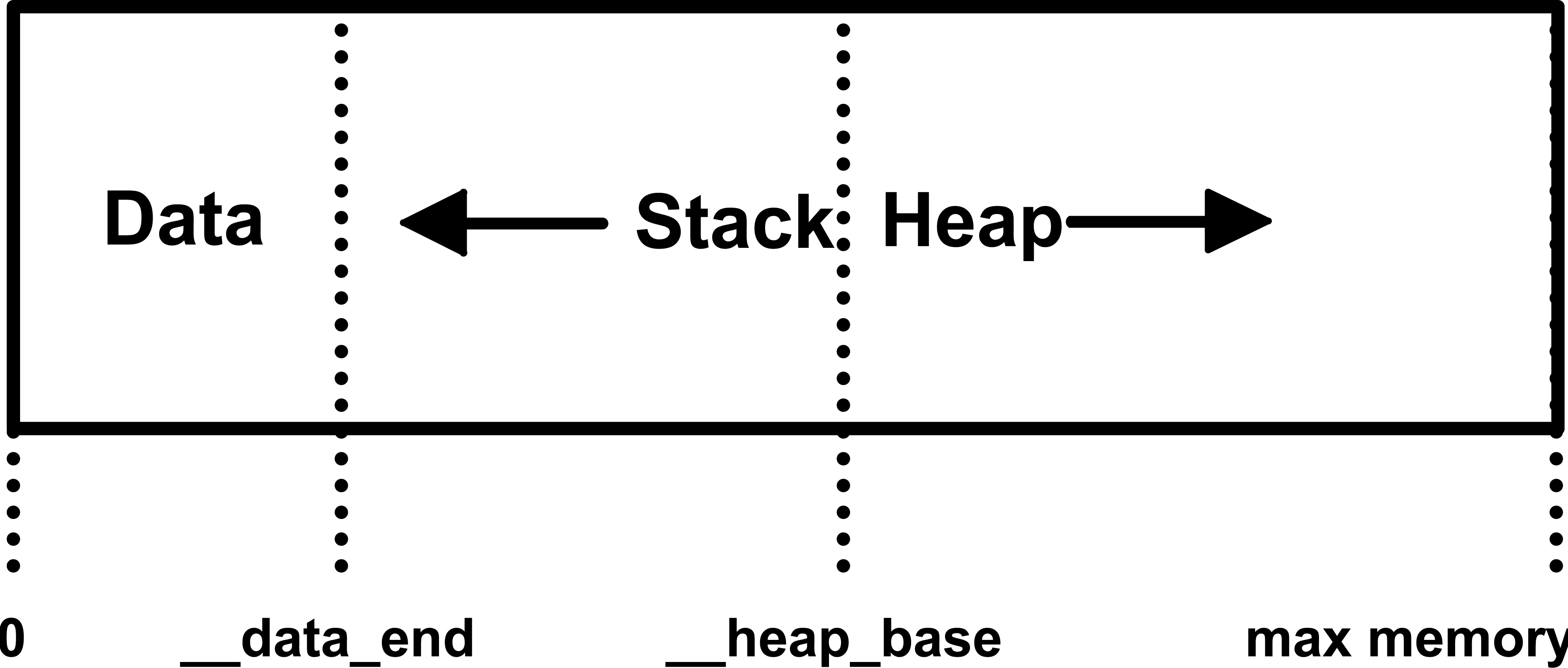

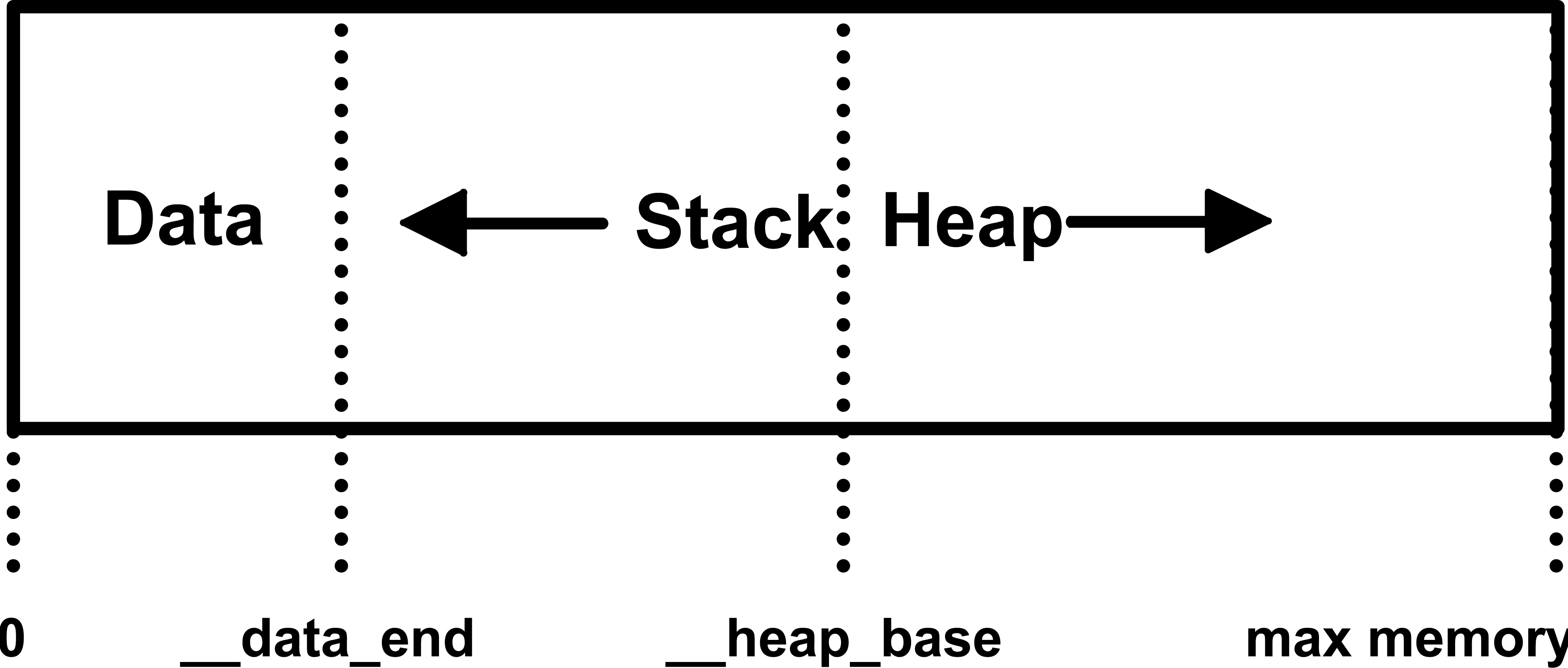

Here is the layout of the

wasm-ld :

The stack grows downwards, and the heap grows upwards. The stack occupies the region between __data_end and __heap_base: the stack pointer starts at the top, at __heap_base, and moves down towards __data_end. The heap starts at __heap_base. Because the stack is placed first, its maximum size is fixed at compile time: __heap_base minus __data_end.

If we go back and look at the globals section in our WAT, we find these values: __heap_base is set to 66560, and __data_end to 1024. The mutable stack pointer global also starts at 66560. This means that the stack can grow to at most 64 KiB, which is not a lot. Fortunately, wasm-ld allows you to change this value:

```sh
# New flag compared to the previous command:
# -Wl,-z,stack-size=...   Set the maximum stack size to 8 MiB
clang \
  --target=wasm32 \
  -O3 \
  -flto \
  -nostdlib \
  -Wl,--no-entry \
  -Wl,--export-all \
  -Wl,--lto-O3 \
  -Wl,-z,stack-size=$((8 * 1024 * 1024)) \
  -o add.wasm \
  add.c
```

Building an allocator

We know that the heap region begins at __heap_base. Since there is no malloc() function, we also know that the memory from there upwards is ours to manage. We can place data there however we like without fear of memory corruption, because the stack grows in the opposite direction. However, a heap that is a free-for-all can quickly get messy, so some kind of dynamic memory management is usually needed. One option is to pull in a full malloc() implementation, such as Doug Lea's malloc, which Emscripten uses. There are also a few smaller implementations with different trade-offs.

But why not write our own malloc()? We are in this deep, so we might as well. One of the simplest options is a bump allocator: it is super fast, extremely small and easy to implement. The downside is that you cannot free memory. At first glance, such an allocator seems incredibly useless. Yet while working on Squoosh, I ran into use cases where it would have been an excellent choice. The idea of a bump allocator is that we store the start address of unused memory in a global. If the program requests n bytes of memory, we advance that marker by n and return its previous value:

```c
#include <stddef.h> // size_t: a freestanding header that ships with clang itself

// Defined by wasm-ld: the address where the heap begins.
extern unsigned char __heap_base;

// Start of the not-yet-used part of the heap.
unsigned char *bump_pointer = &__heap_base;

void *malloc(size_t n) {
  unsigned char *r = bump_pointer;
  bump_pointer += n; // Move the marker past the new block...
  return r;          // ...and hand out its previous position.
}

void free(void *p) {
  // lol
}
```

The global variables we saw in the WAT are defined by wasm-ld. This means we can access them from our C code as ordinary variables, as long as we declare them extern. So we just wrote our own malloc()... in five lines of C.

Note: our bump allocator is not fully compatible with C's malloc(). For example, we do not give any alignment guarantees. But it works well enough, so...

Using dynamic memory

To test it, let's write a C function that takes an array of numbers of arbitrary size and calculates their sum. Not very exciting, but it forces us to use dynamic memory, since we do not know the size of the array at build time:

```c
int sum(int a[], int len) {
  int sum = 0;
  for (int i = 0; i < len; i++) {
    sum += a[i];
  }
  return sum;
}
```

The sum() function is hopefully pretty clear. The more interesting question is how to pass an array from JavaScript to WebAssembly, since WebAssembly only understands numbers. The general idea is to call malloc() from JavaScript to allocate a chunk of memory, copy the values into it, and pass the address (a number!) where the array is located:

```html
<!DOCTYPE html>
<script type="module">
  async function init() {
    const { instance } = await WebAssembly.instantiateStreaming(
      fetch("./add.wasm")
    );

    const jsArray = [1, 2, 3, 4, 5];
    // Allocate memory for 5 32-bit integers
    // and get the starting address.
    const cArrayPointer = instance.exports.malloc(jsArray.length * 4);
    // Turn that sequence of 32-bit integers
    // into a Uint32Array, starting at that address.
    const cArray = new Uint32Array(
      instance.exports.memory.buffer,
      cArrayPointer,
      jsArray.length
    );
    // Copy the values from JS to C.
    cArray.set(jsArray);
    // Run the function, passing the starting address and length.
    console.log(instance.exports.sum(cArrayPointer, cArray.length));
  }
  init();
</script>
```

After running it, you should see 15 in the DevTools console, which is indeed the sum of all numbers from 1 to 5.
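The same handshake can be simulated on a regular host machine. Doing so shows that the "pointer" JavaScript passes in is just a byte offset into linear memory. This is a minimal sketch, not the article's code, and it makes the following assumptions:

- Linear memory is modeled as a plain byte array.
- The hypothetical wasm_malloc() is a bump allocator that hands out offsets. Unlike the allocator above, it rounds them up to a requested alignment, because new Uint32Array(buffer, byteOffset, length) throws a RangeError when byteOffset is not a multiple of 4.
- The hypothetical copy_from_js() plays the role of cArray.set().
- Values are stored little-endian, as in WebAssembly memory.
- The starting offset of 1024 is arbitrary; our real module's heap starts at 66560.

```c
#include <assert.h>
#include <stdint.h>

/* Hypothetical stand-in for WebAssembly linear memory (one 64 KiB page). */
static uint8_t linear_memory[65536];

/* Hypothetical heap start for this sketch (our module's __heap_base is 66560). */
static uint32_t bump_offset = 1024;

/* Bump allocator returning offsets ("pointers") rounded up to `align`. */
uint32_t wasm_malloc(uint32_t n, uint32_t align) {
  assert(align != 0 && (align & (align - 1)) == 0); /* power of two */
  uint32_t r = (bump_offset + align - 1) & ~(align - 1);
  assert(r + n <= sizeof linear_memory); /* this sketch never grows memory */
  bump_offset = r + n;
  return r;
}

/* What cArray.set(jsArray) does: store each value as a little-endian i32. */
void copy_from_js(uint32_t ptr, const int32_t *values, uint32_t len) {
  for (uint32_t i = 0; i < len; i++) {
    uint32_t v = (uint32_t)values[i];
    uint8_t *p = &linear_memory[ptr + 4 * i];
    p[0] = (uint8_t)v;
    p[1] = (uint8_t)(v >> 8);
    p[2] = (uint8_t)(v >> 16);
    p[3] = (uint8_t)(v >> 24);
  }
}

/* The module's sum(), reading the array through an offset into memory. */
int32_t sum_at(uint32_t ptr, uint32_t len) {
  int32_t total = 0;
  for (uint32_t i = 0; i < len; i++) {
    const uint8_t *p = &linear_memory[ptr + 4 * i];
    uint32_t v = (uint32_t)p[0] | (uint32_t)p[1] << 8 |
                 (uint32_t)p[2] << 16 | (uint32_t)p[3] << 24;
    total += (int32_t)v;
  }
  return total;
}
```

For example, after an odd-sized allocation of 3 bytes at offset 1024, wasm_malloc(5 * 4, 4) returns 1028 rather than 1027. Copying {1, 2, 3, 4, 5} there and calling sum_at(1028, 5) yields 15, mirroring the JavaScript above. The only thing that ever crosses the boundary is a number.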

Conclusion

So, you made it to the end. Congratulations! Again, if you feel a bit overwhelmed, that is fine: this is not required reading. You do not need to understand all of this to be a good web developer, or even to make good use of WebAssembly. But I wanted to share it, because it lets you really appreciate all the work that a project like Emscripten does for you. At the same time, it shows how small purely computational WebAssembly modules can be. The Wasm module for summing the array came to just 230 bytes, including the dynamic memory allocator. Compiling the same code with Emscripten would produce 100 bytes of WebAssembly plus 11K of JavaScript glue code. It takes some effort to get such a result, but there are situations where it is worth it.

Source: https://habr.com/ru/post/454868/
