Backup, part 4: zbackup, restic, borgbackup review and testing

This article looks at backup tools that split the data stream into separate components (chunks) and build a repository from them.

The chunks in the repository can additionally be compressed and encrypted and, most importantly, reused by subsequent backups.

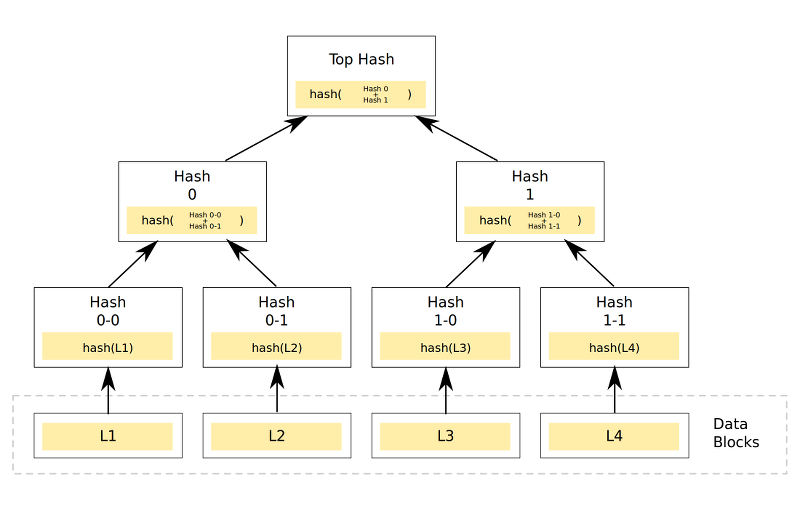

A backup in such a repository is a named chain of linked chunks, where the links are built, for example, on various hash functions.
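The idea can be illustrated with a minimal sketch. It is not how any of the three tools is implemented: the `ChunkRepository` class, the fixed 4-byte chunk size and the use of SHA-256 are all assumptions chosen to keep the example short.

```python
import hashlib


class ChunkRepository:
    """Toy content-addressed store: each unique chunk is saved once."""

    def __init__(self, chunk_size=4):
        self.chunk_size = chunk_size  # fixed-size chunks, for simplicity only
        self.chunks = {}              # chunk hash -> chunk bytes
        self.backups = {}             # backup name -> ordered list of chunk hashes

    def backup(self, name, data):
        """Split data into chunks, store the new ones, record the chain."""
        chain = []
        for i in range(0, len(data), self.chunk_size):
            chunk = data[i:i + self.chunk_size]
            digest = hashlib.sha256(chunk).hexdigest()
            self.chunks.setdefault(digest, chunk)  # reuse if already present
            chain.append(digest)
        self.backups[name] = chain

    def restore(self, name):
        """Reassemble a backup by following its chain of chunk hashes."""
        return b"".join(self.chunks[d] for d in self.backups[name])


repo = ChunkRepository()
repo.backup("monday", b"AAAABBBBCCCC")
repo.backup("tuesday", b"AAAABBBBDDDD")  # only the last chunk is new
print(len(repo.chunks))                   # number of unique chunks stored
```

The second backup adds a single new chunk, which is why the repository grows by roughly the size of the changes rather than by the size of the full copy.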

There are several similar solutions; I will focus on 3 of them: zbackup, borgbackup and restic.

Expected results

Since all candidates require creating a repository in one way or another, one of the most important factors is the estimated repository size. Ideally, it should be no more than 13 GB under the adopted test methodology, or even less given good optimization.

It is also highly desirable to be able to back up files directly, without an archiver such as tar, and to work over ssh/sftp without additional tools such as rsync and sshfs.

Behavior when creating backups:

- The size of the repository will be equal to the size of the changes, or less.

- A high CPU load is expected when compression and/or encryption is used, and a fairly high load on the network and disk subsystem is likely if the backup and/or encryption process runs on the backup storage server.

- If the repository is damaged, delayed errors are likely both when creating new backups and when attempting a restore. Plan additional measures to ensure repository integrity, or use the built-in integrity-checking tools.
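To show what an integrity check of a chunk repository amounts to, here is a small sketch. It assumes a store keyed by the SHA-256 of each chunk; the function name `find_corrupt_chunks` and the storage layout are hypothetical and do not describe the on-disk format of any tool reviewed here.

```python
import hashlib


def find_corrupt_chunks(chunks):
    """Return the keys whose stored bytes no longer match their SHA-256 key."""
    return [key for key, data in chunks.items()
            if hashlib.sha256(data).hexdigest() != key]


good = b"chunk payload"
store = {hashlib.sha256(good).hexdigest(): good}
clean_result = find_corrupt_chunks(store)

# Simulate silent corruption on disk: the key stays, the bytes change.
key = next(iter(store))
store[key] = b"chunk pay1oad"
damaged_result = find_corrupt_chunks(store)
print(clean_result, damaged_result == [key])
```

Without such a check, the damaged chunk would go unnoticed until a restore needed it, which is the delayed error described above.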

Plain tar is taken as the baseline, as shown in one of the previous articles.

Zbackup testing

The general mechanism of zbackup is as follows: the program finds regions of identical data in the input stream, optionally compresses and encrypts them, and stores each region only once.

For deduplication, a 64-bit rolling hash with a sliding window is used to check, byte by byte, for matches with existing data blocks (similar to how rsync does it).
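The sliding-window search can be sketched as follows. This is a generic polynomial rolling hash truncated to 64 bits, not the hash function zbackup actually uses; `BASE`, `direct_hash` and `find_known_windows` are names and values assumed for the illustration.

```python
MASK = (1 << 64) - 1   # keep the hash within 64 bits
BASE = 257             # arbitrary multiplier, an assumption of this sketch


def direct_hash(window):
    """Polynomial hash of a whole window, computed from scratch."""
    h = 0
    for byte in window:
        h = (h * BASE + byte) & MASK
    return h


def find_known_windows(data, window_size, known_hashes):
    """Slide a window over data byte by byte; yield offsets whose hash is known."""
    if len(data) < window_size:
        return
    top = pow(BASE, window_size - 1, 1 << 64)  # weight of the outgoing byte
    h = direct_hash(data[:window_size])
    for start in range(len(data) - window_size + 1):
        if h in known_hashes:
            yield start
        if start + window_size < len(data):
            outgoing = data[start]
            incoming = data[start + window_size]
            # Roll: drop the outgoing byte, shift, add the incoming byte.
            h = ((h - outgoing * top) * BASE + incoming) & MASK


known = {direct_hash(b"BBBB")}
offsets = list(find_known_windows(b"xxBBBByBBBB", 4, known))
print(offsets)
```

Each step costs a constant amount of work regardless of the window size, which is what makes a byte-by-byte scan affordable. A real tool would confirm every candidate match with a stronger hash, since a rolling hash alone can collide.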

For compression, multi-threaded lzma and lzo are used, and for encryption, aes. The latest versions promise the future ability to delete old data from the repository.

The program is written in C++ with minimal dependencies. The author was apparently inspired by the unix way, so the program reads data from stdin when creating backups and writes a similar data stream to stdout when restoring. This makes zbackup a very good building block for your own backup solutions. For example, for the author of this article, it has been the main backup tool for home machines since about 2014.

A plain tar archive will be used as the data stream unless stated otherwise.

The tool was tested in 2 variants:

- The repository is created and zbackup is run on the server with the source data; the repository contents are then transferred to the backup storage server.

- The repository is created on the backup storage server; zbackup is started there via ssh, and the data is fed to it through a pipe.
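The two variants can be sketched as pipelines. The exact commands used in the tests are not given in the article, so everything below is an assumption: the helper names, the paths, the `tar -cf -` invocation and the choice of an unencrypted repository (`--non-encrypted`). The sketch only builds the commands; `run_pipeline` would have to be called explicitly on a machine with tar, ssh and zbackup installed.

```python
import shlex
import subprocess


def local_backup_cmds(src_dir, repo, name):
    """Variant 1: tar and zbackup both run on the source server."""
    tar = ["tar", "-cf", "-", src_dir]
    zbackup = ["zbackup", "--non-encrypted", "backup", f"{repo}/backups/{name}"]
    return tar, zbackup


def remote_backup_cmds(src_dir, host, repo, name):
    """Variant 2: tar runs locally, zbackup runs on the storage server via ssh."""
    tar = ["tar", "-cf", "-", src_dir]
    target = shlex.quote(f"{repo}/backups/{name}")
    return tar, ["ssh", host, f"zbackup --non-encrypted backup {target}"]


def run_pipeline(producer, consumer):
    """Connect producer stdout to consumer stdin, like `producer | consumer`."""
    p1 = subprocess.Popen(producer, stdout=subprocess.PIPE)
    p2 = subprocess.Popen(consumer, stdin=p1.stdout)
    p1.stdout.close()  # let the producer see SIGPIPE if the consumer exits
    p2.wait()
    p1.wait()
    return p1.returncode, p2.returncode


# Hypothetical host and paths, for illustration only.
tar_cmd, ssh_cmd = remote_backup_cmds("/srv/data", "backup_server", "/repo", "daily")
print(tar_cmd, ssh_cmd)
```

In the first variant the finished repository still has to be copied to the storage server afterwards (the article used rsync for that), while in the second the tar stream itself crosses the network.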

The results of the first variant were as follows: 43m11s with an unencrypted repository and the lzma compressor, and 19m13s with lzo as the compressor.

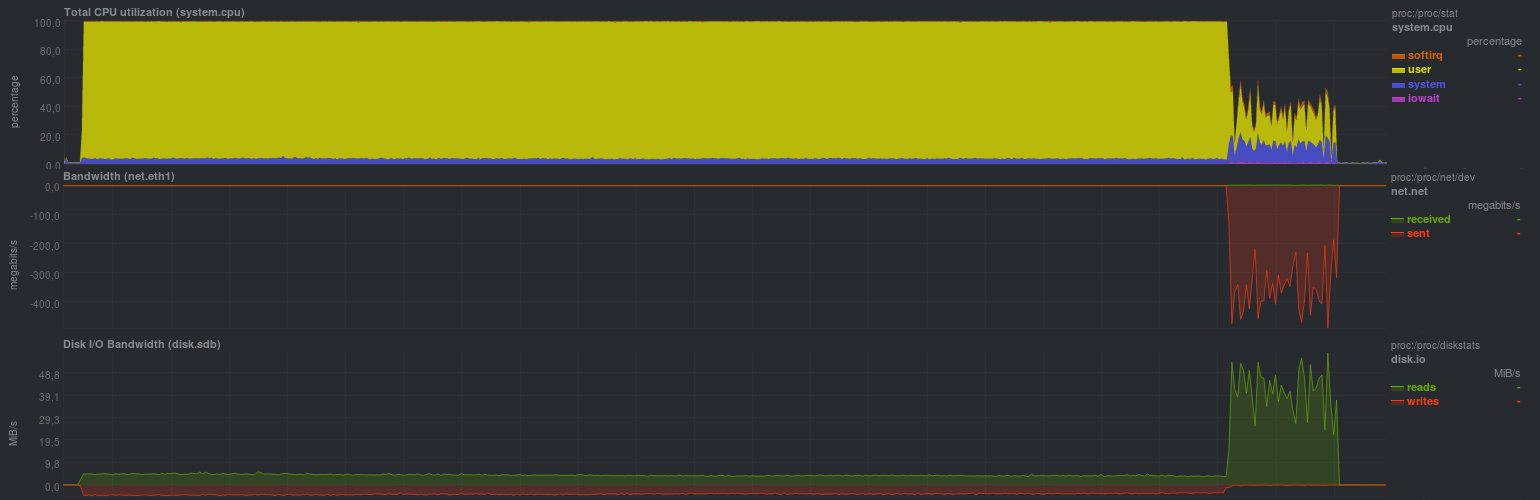

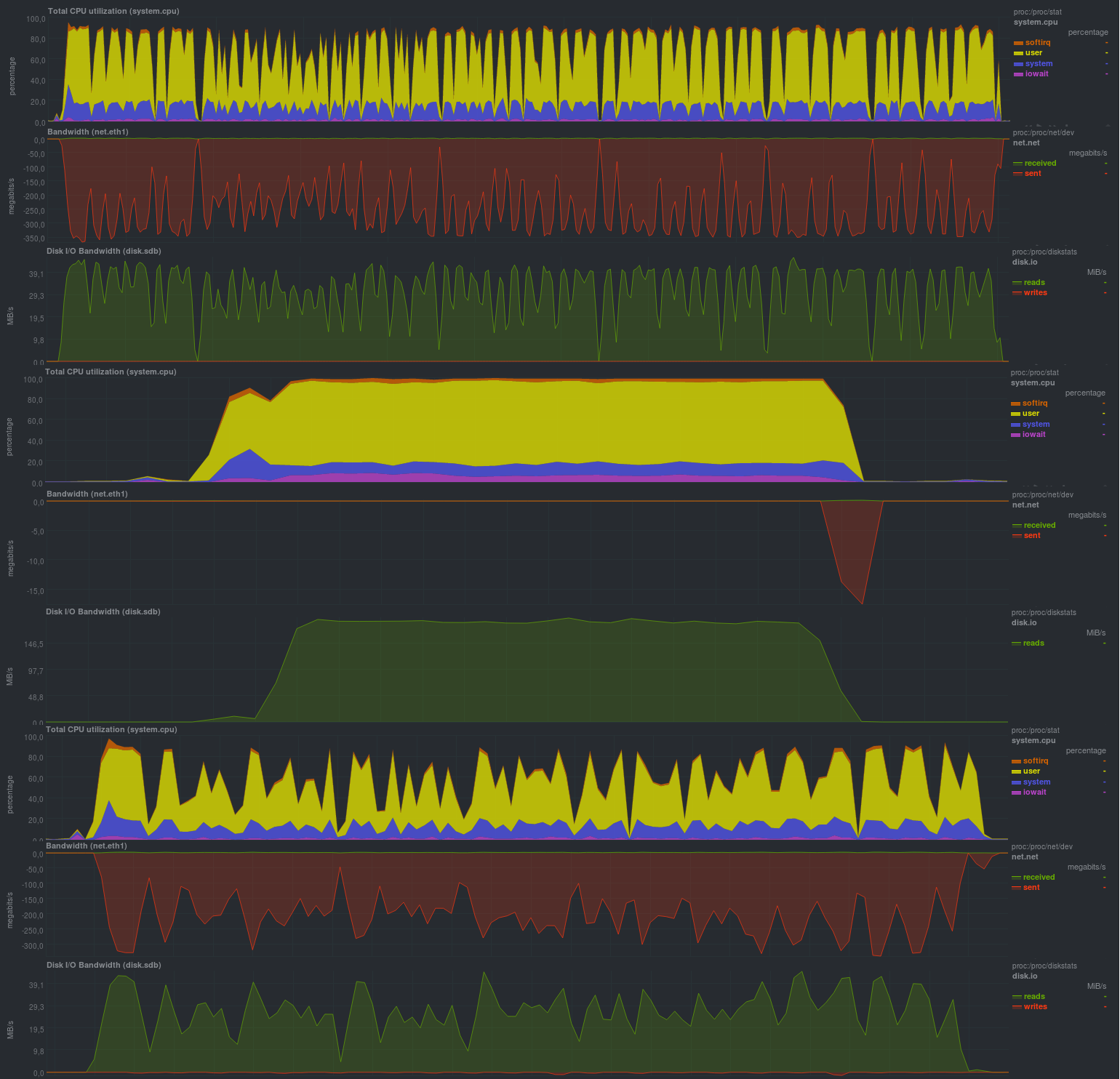

The load on the server with the source data was as follows (the lzma run is shown; the lzo run looked about the same, but rsync accounted for roughly a quarter of the time):

It is clear that this backup process suits only relatively rare and small changes. It is also highly desirable to limit zbackup to 1 thread; otherwise the CPU load will be very high, because the program makes very good use of multiple threads. The disk load was small and, with a modern SSD-based disk subsystem, will generally be unnoticeable. The start of synchronizing the repository to the remote server is also clearly visible; its speed is comparable to plain rsync and depends on the performance of the disk subsystem of the backup storage server. The downside of this approach is that a local repository must be kept, which duplicates the data.

The second variant, where zbackup runs directly on the backup storage server, is more interesting and more applicable in practice.

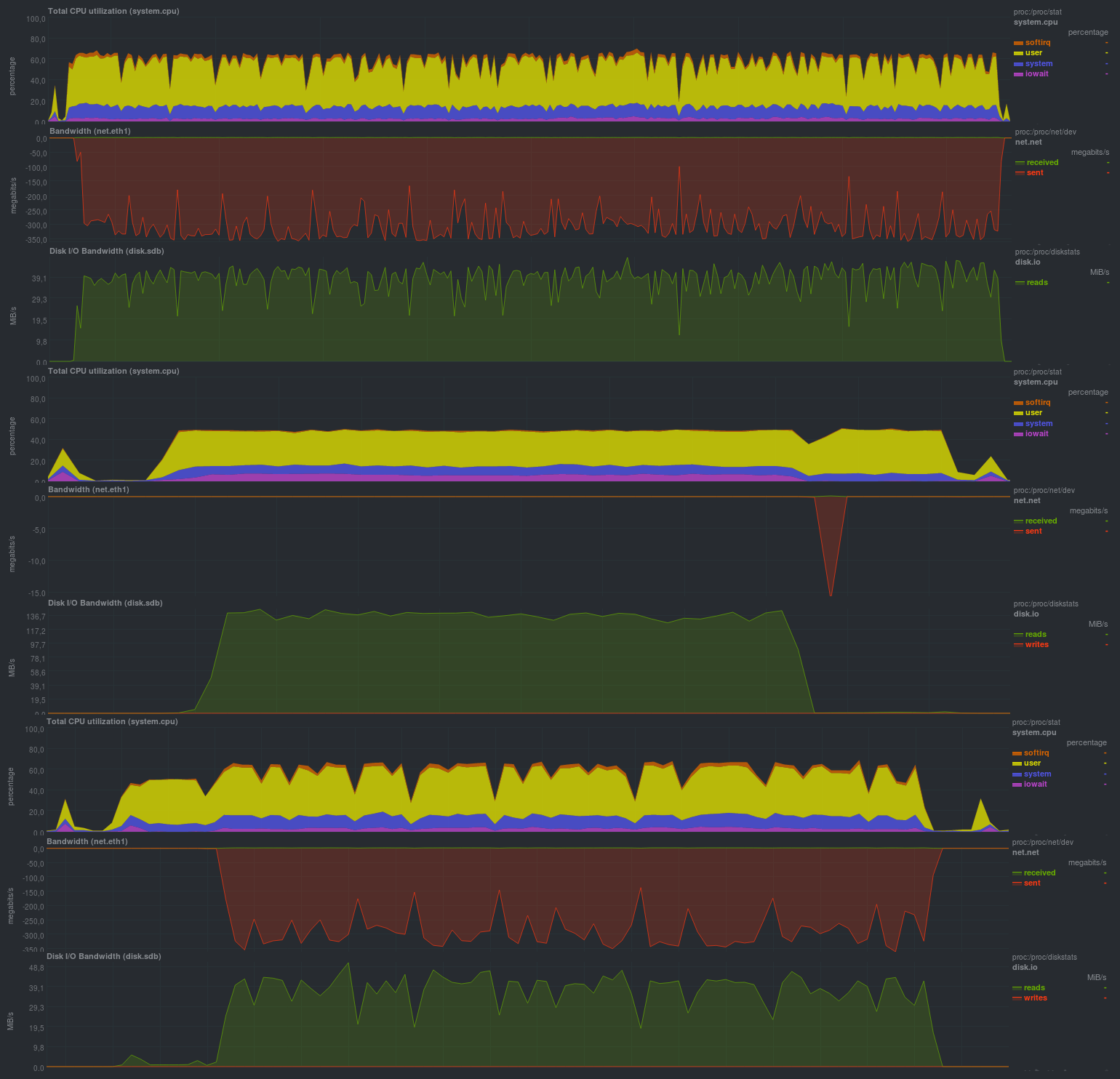

First, operation without encryption and with the lzma compressor was tested.

Run time of each test:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 39m45s | 40m20s | 40m3s |
| 7m36s | 8m3s | 7m48s |
| 15m35s | 15m48s | 15m38s |

With aes encryption enabled, the results are fairly close.

Run times on the same data, with encryption:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 43m40s | 44m12s | 44m3s |
| 8m3s | 8m15s | 8m12s |
| 15m0s | 15m40s | 15m25s |

Combining encryption with lzo compression gives the following.

Run times:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 18m2s | 18m15s | 18m12s |
| 5m13s | 5m24s | 5m20s |
| 8m48s | 9m3s | 8m51s |

The resulting repository was about the same size in every case, 13 GB, which means deduplication works correctly. Even on already compressed data, lzo gives a tangible speed-up; in total running time zbackup comes close to duplicity/duplicati but is 2-5 times slower than the librsync-based tools.
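To make the comparison concrete, the first row of each zbackup table above can be averaged. The helper `to_seconds` and the configuration labels are introduced here only for illustration; the durations are copied from the tables.

```python
import re
from statistics import mean


def to_seconds(text):
    """Convert a duration such as '39m45s' or '56s' to seconds."""
    match = re.fullmatch(r"(?:(\d+)m)?(\d+)s", text)
    if match is None:
        raise ValueError(f"unrecognized duration: {text!r}")
    minutes, seconds = match.groups()
    return int(minutes or 0) * 60 + int(seconds)


# First row of each zbackup table above (zbackup running on the storage server).
runs = {
    "lzma, no encryption": ["39m45s", "40m20s", "40m3s"],
    "lzma + aes": ["43m40s", "44m12s", "44m3s"],
    "lzo + aes": ["18m2s", "18m15s", "18m12s"],
}

averages = {name: mean(to_seconds(t) for t in times)
            for name, times in runs.items()}
for name, avg in averages.items():
    print(f"{name}: {avg / 60:.1f} min")
```

Averaged this way, switching from lzma to lzo cuts the first-row time by more than half, while enabling aes adds only a few minutes.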

The advantage is obvious: disk space is saved on the backup storage server. zbackup provides no tools for verifying the repository, so a fault-tolerant disk array or a cloud provider is recommended.

Overall, the impression is very good, even though the project has been stalled for about 3 years (the last feature request was filed about a year ago and remains unanswered).

Borgbackup testing

Borgbackup is a fork of attic, another system similar to zbackup. It is written in Python and has a feature list similar to zbackup's, but it can additionally:

- Mount backups through fuse

- Check repository contents

- Work in client-server mode

- Use different compressors for data, with heuristic detection of the file type during compression

- Use 2 encryption options, aes and blake

- Run a built-in benchmarking tool, which was invoked as follows:

borgbackup benchmark crud ssh://backup_server/repo/path local_dir

The results were as follows:

CZ-BIG 96.51 MB/s (10 * 100.00 MB all-zero files: 10.36s)

RZ-BIG 57.22 MB/s (10 * 100.00 MB all-zero files: 17.48s)

UZ-BIG 253.63 MB/s (10 * 100.00 MB all-zero files: 3.94s)

DZ-BIG 351.06 MB/s (10 * 100.00 MB all-zero files: 2.85s)

CR-BIG 34.30 MB/s (10 * 100.00 MB random files: 29.15s)

RR-BIG 60.69 MB/s (10 * 100.00 MB random files: 16.48s)

UR-BIG 311.06 MB/s (10 * 100.00 MB random files: 3.21s)

DR-BIG 72.63 MB/s (10 * 100.00 MB random files: 13.77s)

CZ-MEDIUM 108.59 MB/s (1000 * 1.00 MB all-zero files: 9.21s)

RZ-MEDIUM 76.16 MB/s (1000 * 1.00 MB all-zero files: 13.13s)

UZ-MEDIUM 331.27 MB/s (1000 * 1.00 MB all-zero files: 3.02s)

DZ-MEDIUM 387.36 MB/s (1000 * 1.00 MB all-zero files: 2.58s)

CR-MEDIUM 37.80 MB/s (1000 * 1.00 MB random files: 26.45s)

RR-MEDIUM 68.90 MB/s (1000 * 1.00 MB random files: 14.51s)

UR-MEDIUM 347.24 MB/s (1000 * 1.00 MB random files: 2.88s)

DR-MEDIUM 48.80 MB/s (1000 * 1.00 MB random files: 20.49s)

CZ-SMALL 11.72 MB/s (10000 * 10.00 kB all-zero files: 8.53s)

RZ-SMALL 32.57 MB/s (10000 * 10.00 kB all-zero files: 3.07s)

UZ-SMALL 19.37 MB/s (10000 * 10.00 kB all-zero files: 5.16s)

DZ-SMALL 33.71 MB/s (10000 * 10.00 kB all-zero files: 2.97s)

CR-SMALL 6.85 MB/s (10000 * 10.00 kB random files: 14.60s)

RR-SMALL 31.27 MB/s (10000 * 10.00 kB random files: 3.20s)

UR-SMALL 12.28 MB/s (10000 * 10.00 kB random files: 8.14s)

DR-SMALL 18.78 MB/s (10000 * 10.00 kB random files: 5.32s)

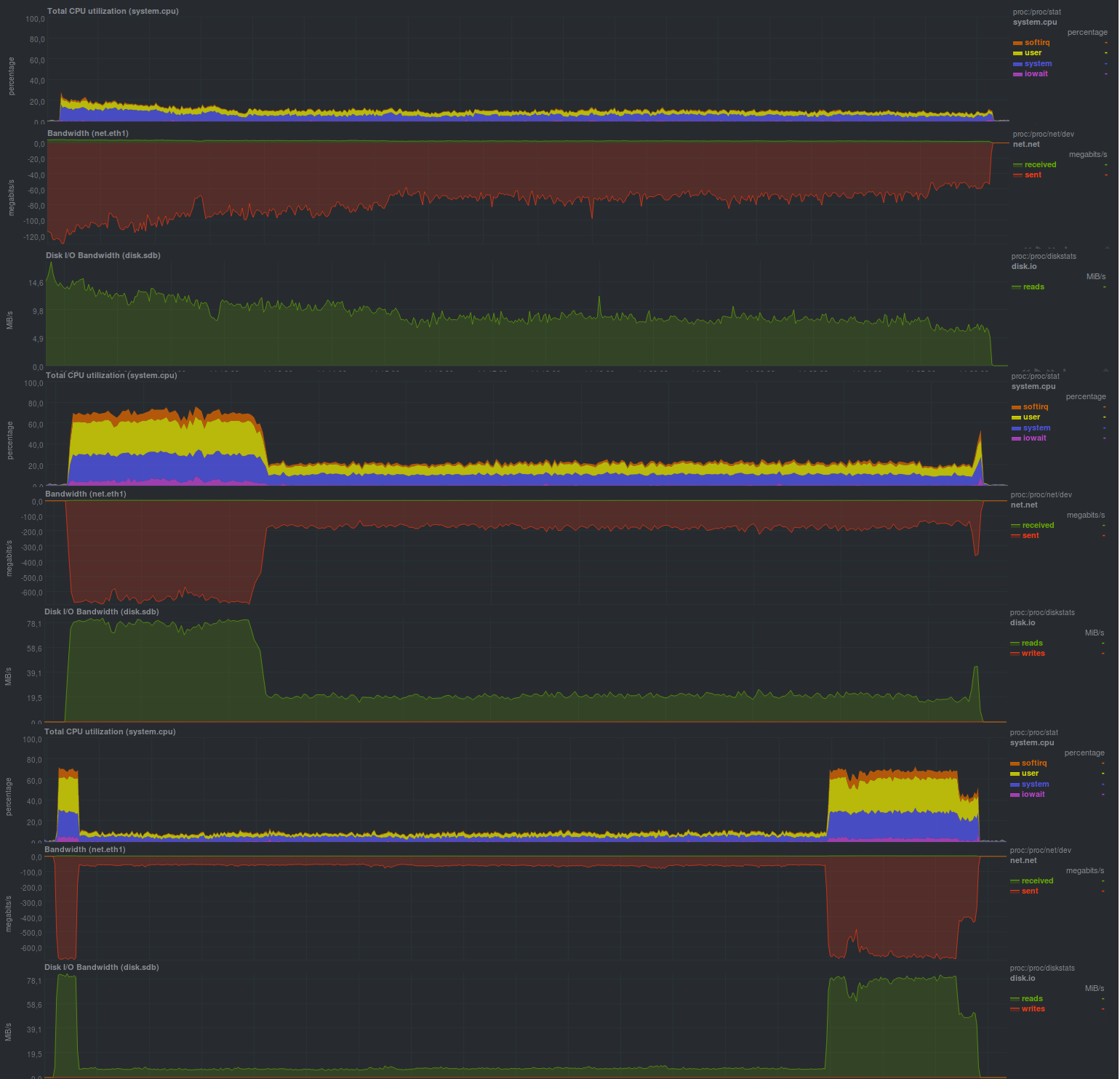

The tests used compression with heuristic file-type detection (compression auto); the results were as follows.

Run times:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 4m6s | 4m10s | 4m5s |
| 56s | 58s | 54s |
| 1m26s | 1m34s | 1m30s |

With repository authentication enabled (authenticated mode), the results are close.

Run times:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 4m11s | 4m20s | 4m12s |
| 1m0s | 1m3s | 1m2s |
| 1m30s | 1m34s | 1m31s |

With aes encryption enabled, the results did not deteriorate much.

Run times:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 4m55s | 5m2s | 4m58s |
| 1m0s | 1m2s | 1m0s |
| 1m49s | 1m50s | 1m50s |

Switching from aes to blake improves the situation.

Run times:

| Run 1 | Run 2 | Run 3 |
|---|---|---|
| 4m33s | 4m43s | 4m40s |
| 59s | 1m0s | 1m0s |
| 1m38s | 1m43s | 1m40s |

As with zbackup, the repository came to 13 GB or even slightly less, which is as expected. The run time is very pleasing: it is comparable to librsync-based solutions while offering far more features. Another plus is the ability to set various parameters through environment variables, a serious advantage when running borgbackup unattended. The load during backup is also pleasing: judging by CPU usage, borgbackup works in 1 thread.

No particular disadvantages were found in use.
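As a rough sketch of an unattended run, the repository location and passphrase can be passed through the `BORG_REPO` and `BORG_PASSPHRASE` environment variables. The helper names, the passphrase, the archive name and the choice of lzma behind `auto` are assumptions made for the example, and the binary is called `borg` here, as in the upstream project, whereas the article invokes it as `borgbackup`.

```python
import os
import subprocess


def borg_env(repo, passphrase):
    """Copy the current environment and add borg's settings to it."""
    env = dict(os.environ)
    env["BORG_REPO"] = repo              # default repository for `::archive`
    env["BORG_PASSPHRASE"] = passphrase  # avoids an interactive prompt
    return env


def borg_create_cmd(archive, path):
    """Build a `borg create` call that relies on BORG_REPO for the repository."""
    return ["borg", "create", "--compression", "auto,lzma", f"::{archive}", path]


def run_backup(archive, path, env):
    """Run the backup; call this only on a machine where borg is installed."""
    return subprocess.run(borg_create_cmd(archive, path), env=env, check=True)


# Hypothetical values, for illustration only.
env = borg_env("ssh://backup_server/repo/path", "example-passphrase")
cmd = borg_create_cmd("nightly", "local_dir")
print(cmd)
```

In a real job the passphrase would come from a protected file or a secrets store rather than from the script itself.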

Restic testing

Although restic is a fairly new solution (the first 2 candidates have been known since 2013 or earlier), it has quite good characteristics. It is written in Go.

When compared with zbackup, it additionally gives:

- Checking the integrity of the repository (including checking it in parts).

- A huge list of supported protocols and backup storage providers, as well as support for rclone, an rsync counterpart for cloud storage.

- Comparing 2 backups with each other.

- Mounting the repository via fuse.
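The features listed above map onto restic subcommands roughly as in the sketch below. The repository address, password, snapshot IDs, mount point and the `1/5` subset are placeholders chosen for the example; the article does not state which invocations were used in the tests.

```python
import os


def restic_env(repository, password):
    """Environment for non-interactive restic runs."""
    env = dict(os.environ)
    env["RESTIC_REPOSITORY"] = repository
    env["RESTIC_PASSWORD"] = password
    return env


def restic_commands(path, snapshot_a, snapshot_b, mountpoint, subset="1/5"):
    """Build argument lists for the operations mentioned in the list above."""
    return {
        "backup": ["restic", "backup", path],
        # Partial check: read and verify only one subset of the pack files.
        "check_part": ["restic", "check", f"--read-data-subset={subset}"],
        "diff": ["restic", "diff", snapshot_a, snapshot_b],
        "mount": ["restic", "mount", mountpoint],
    }


# Placeholder values, for illustration only.
env = restic_env("sftp:backup_server:/repo/path", "example-password")
commands = restic_commands("local_dir", "SNAPSHOT_A", "SNAPSHOT_B", "/mnt/restic")
for name, argv in commands.items():
    print(name, " ".join(argv))
```

Each list can be passed to `subprocess.run(argv, env=env, check=True)` on a machine where restic is installed.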

In general, the feature list is quite close to borgbackup's, richer in some places and poorer in others. One peculiarity: encryption cannot be disabled, so backups are always encrypted. Let's see in practice what this software can deliver:

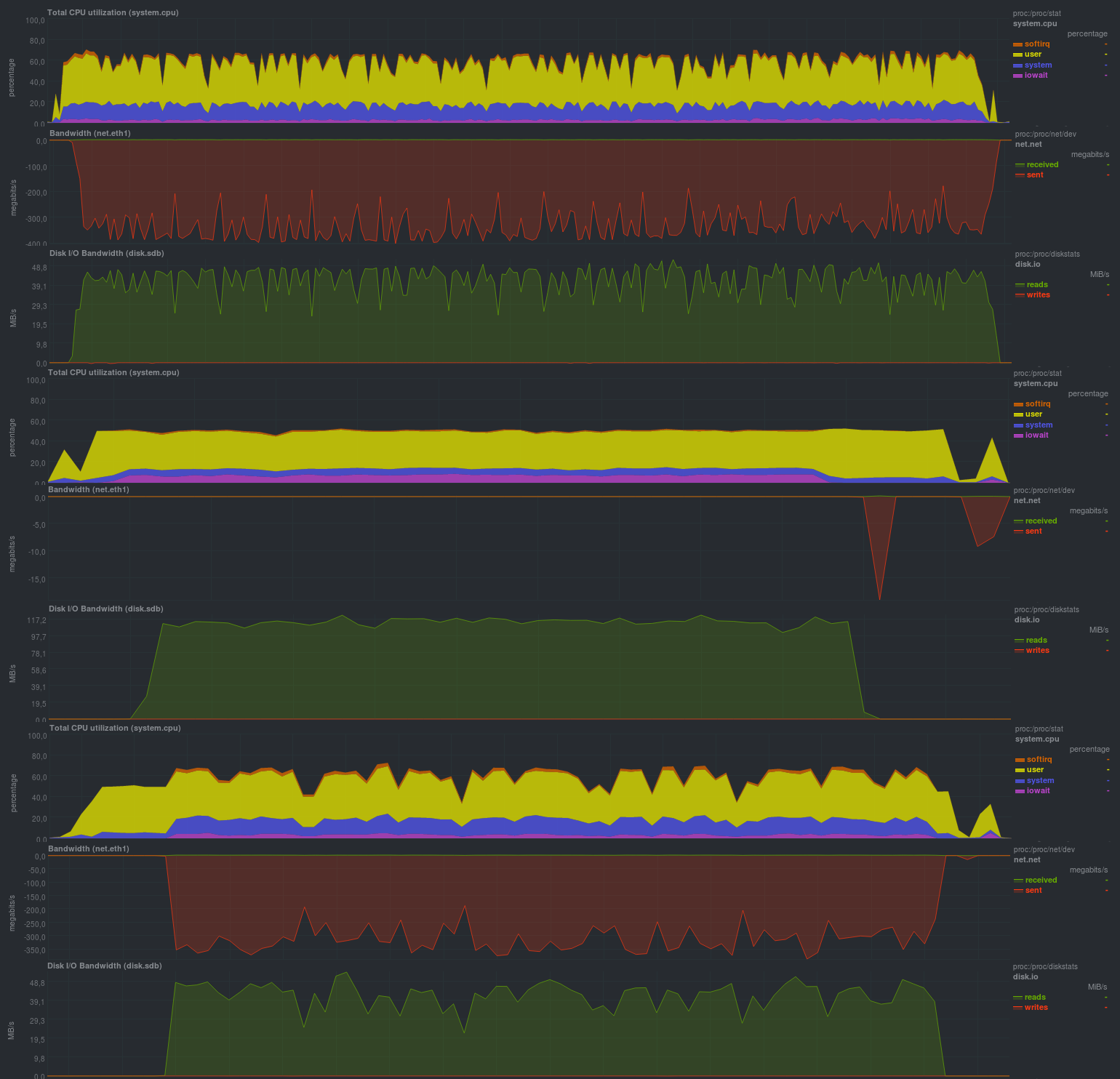

The results are also comparable with rsync-based solutions and, overall, very close to borgbackup, but the CPU load is higher (several threads are running) and has a sawtooth shape.

Most likely, the program is limited by the performance of the disk subsystem on the storage server, as was the case with rsync. The repository size was 13 GB, as with zbackup and borgbackup, and this solution showed no obvious drawbacks.

Results

In fact, all candidates showed similar results, but at different cost. Borgbackup proved the best of all, restic was a bit slower, and zbackup is probably not worth adopting; if it is already in use, try switching to borgbackup or restic.

Findings

The most promising solution is restic, because it has the best ratio of capabilities to speed, but let's not rush to general conclusions yet.

Borgbackup is in principle no worse, but zbackup is probably best replaced. That said, zbackup can still be used to satisfy the 3-2-1 rule, for example alongside (lib)rsync-based backup tools.

Announcement

Backup, part 1: Why do I need backup, review of methods, technologies

Backup, part 2: Review and testing of rsync-based backup tools

Backup, part 3: Review and testing of duplicity, duplicati

Backup, part 4: zbackup, restic, borgbackup review and testing

Backup, part 5: Bacula and veeam backup for linux testing

Backup, part 6: Comparing backup tools

Backup, part 7: Conclusions

Publisher: Pavel Demkovich


Source: https://habr.com/ru/post/454734/
