Building an automatic message moderation system

Automatic moderation systems are built into web services and applications that need to process a large number of user messages. Such systems can reduce the cost of manual moderation, speed it up, and process all user messages in real time. This article describes how we built an automatic moderation system for English-language messages using machine learning algorithms. We will go through the whole workflow, from research tasks and the choice of ML algorithms to rollout in production. We will also look at where to find ready-made datasets and how to collect data for a task on your own.

Prepared in collaboration with Ira Stepanyuk, Data Scientist at Poteha Labs

Task Description

We work with active multiplayer chat rooms, where dozens of users can post short messages every minute in a single chat. The task is to detect toxic messages and messages containing any obscene statements in the dialogues from such chats. From a machine learning point of view, this is a binary classification problem: each message must be assigned to one of two classes, toxic or normal.


To solve this problem, we first had to understand what toxic messages are and what exactly makes them toxic. To do this, we looked at a large number of typical user posts on the Internet. Here are some examples, already divided into toxic and normal messages.

| Toxic | Normal |
|---|---|
| Your are a damn fag * ot | this book is so dummy |
| ur child is so ugly (1) | Winners losers make excuses |
| White people are owners of black (2) | black like my soul (2) |

Toxic messages often contain obscene words, but this is not a requirement. A message may contain no inappropriate words and still be offensive to someone (example (1)). In addition, toxic and normal messages sometimes contain the same words used in different contexts, offensive or not (example (2)). The system must be able to distinguish such messages as well.

After examining various messages, we defined toxic messages for our moderation system as those containing obscene or offensive expressions, or hatred toward someone.

Data

Open data

One of the best-known moderation datasets is the one from the Kaggle Toxic Comment Classification Challenge. Part of its labeling is incorrect: for example, messages with obscene words can be labeled as normal. Because of this, one cannot simply take a kernel from the competition and get a well-performing classifier. The data needs more work: one has to find which kinds of examples are underrepresented and add extra data containing such examples.

Besides competitions, there are several scientific publications with links to suitable datasets (example), but not all of them can be used in commercial projects. Most of these datasets consist of messages collected from Twitter, where toxic tweets are common. Twitter is also a convenient source because certain hashtags can be used to search for and label toxic messages.
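The Kaggle challenge data is multi-label: as far as we know, its `train.csv` has a `comment_text` column and six 0/1 label columns. Since our task is binary, one simple option, assumed here rather than taken from our pipeline, is to call a message toxic if any of the six labels is set. A minimal pandas sketch:

```python
import pandas as pd

# Label columns of the Kaggle Toxic Comment Classification Challenge train.csv
LABEL_COLUMNS = ["toxic", "severe_toxic", "obscene", "threat", "insult", "identity_hate"]


def to_binary(df: pd.DataFrame) -> pd.DataFrame:
    """Collapse the six labels into one binary target: 1 if any label is set."""
    out = df[["comment_text"]].copy()
    out["label"] = (df[LABEL_COLUMNS].sum(axis=1) > 0).astype(int)
    return out
```

Usage would be `to_binary(pd.read_csv("train.csv"))`. Given the labeling errors mentioned above, the resulting targets still need review.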

Manually labeled data

After we collected datasets from open sources and trained a baseline model on them, it became clear that open data was not enough: the model's quality was unsatisfactory. Besides the open data, we had access to an unlabeled sample of messages from a gaming messenger that contained many toxic messages.

To use this data for our task, we had to label it somehow. At that point we already had a baseline classifier, so we decided to use it for semi-automatic labeling. After running all messages through the model, we obtained the predicted probability of toxicity for each message and sorted the messages in descending order of that probability. The top of the list collected messages with obscene and offensive words; the bottom, on the contrary, held normal user messages. As a result, most of the data (with very high or very low probabilities) did not need to be labeled by hand and could be assigned to a class immediately. Only the messages in the middle of the list remained, and those were labeled manually.
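The semi-automatic labeling step can be sketched as follows. The cut-off values `high` and `low` are hypothetical; the article does not say where the list was split, and in practice they would be chosen by inspecting the sorted list.

```python
from typing import List, Tuple


def split_for_labeling(
    messages: List[str],
    probs: List[float],
    high: float = 0.9,  # hypothetical: at or above this, auto-label as toxic
    low: float = 0.1,   # hypothetical: at or below this, auto-label as normal
) -> Tuple[List[Tuple[str, int]], List[str]]:
    """Sort messages by predicted toxicity and split them into auto-labeled and manual parts."""
    ranked = sorted(zip(messages, probs), key=lambda pair: pair[1], reverse=True)
    auto_labeled: List[Tuple[str, int]] = []
    needs_review: List[str] = []
    for text, p in ranked:
        if p >= high:
            auto_labeled.append((text, 1))  # top of the list: toxic
        elif p <= low:
            auto_labeled.append((text, 0))  # bottom of the list: normal
        else:
            needs_review.append(text)       # middle of the list: label by hand
    return auto_labeled, needs_review
```

The wider the band between `low` and `high`, the more manual work remains, but the fewer baseline errors leak into the new training data.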

Data augmentation

Datasets often contain altered messages that the classifier gets wrong, while a human still understands their meaning correctly.

This happens because users adapt and learn to deceive moderation systems: the algorithms make mistakes on such toxic messages, while the meaning remains clear to a person. Here is what users currently do:

- introduce typos: you are stupid asswhole, fack you;

- replace letters with similar-looking digits: n1gga, b0ll0cks;

- insert extra spaces: i d i o t;

- remove spaces between words: dieyoustupid.

To train a classifier that is robust to such substitutions, you need to do what users do: generate the same kinds of changes in messages and add them to the main training data.

In general, this arms race is inevitable: users will always look for vulnerabilities and workarounds, and moderation developers will respond with new algorithms.
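A minimal sketch of such augmentation, one function per trick from the list above. The look-alike table `LEET`, the adjacent-character swap used to simulate typos, and the 50% chance of spreading a word are illustrative assumptions, not the exact transformations we used.

```python
import random
from typing import List, Tuple

# Hypothetical look-alike substitutions; a real table would be larger.
LEET = {"a": "4", "e": "3", "i": "1", "o": "0", "s": "5"}


def to_leet(text: str) -> str:
    """Replace letters with similar-looking digits (b0ll0cks-style)."""
    return "".join(LEET.get(ch, ch) for ch in text)


def spread_letters(word: str) -> str:
    """Insert a space between every character: 'idiot' -> 'i d i o t'."""
    return " ".join(word)


def glue_words(text: str) -> str:
    """Remove spaces between words: 'die you stupid' -> 'dieyoustupid'."""
    return text.replace(" ", "")


def add_typo(word: str, rng: random.Random) -> str:
    """Swap two adjacent characters at a random position to simulate a typo."""
    if len(word) < 2:
        return word
    i = rng.randrange(len(word) - 1)
    chars = list(word)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def augment(text: str, label: int, rng: random.Random) -> List[Tuple[str, int]]:
    """Return perturbed copies of a message that keep the original label."""
    words = text.split()
    return [
        (to_leet(text), label),
        (glue_words(text), label),
        (" ".join(spread_letters(w) if rng.random() < 0.5 else w for w in words), label),
        (" ".join(add_typo(w, rng) for w in words), label),
    ]
```

Passing a seeded `random.Random` keeps the augmented dataset reproducible between training runs.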

Subtask description

We had to analyze messages in two different modes:

- online mode: real-time analysis of messages with the fastest possible response;

- offline mode: analysis of message logs to find toxic dialogues.

In online mode, we run every user message through the model. If a message is toxic, we hide it in the chat interface; if it is normal, we display it. In this mode, every message must be processed very quickly: the model has to respond fast enough not to disrupt the flow of the conversation between users.

There is no time limit in offline mode, so there we wanted to implement the highest-quality model possible.

Online mode. Search for words in the dictionary

Regardless of which model is chosen later, we must find and filter messages with obscene words. The easiest way to solve this subtask is to compile a dictionary of unacceptable words and expressions that must never get through, and to search for them in each message. The search has to be fast, so a naive substring search is too slow here. A suitable algorithm for searching for a set of words in a string is the Aho-Corasick algorithm. This approach makes it possible to quickly identify some toxic examples and block them before they reach the main algorithm. The ML algorithm, in turn, makes it possible to "understand the meaning" of messages and improve classification quality.
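A minimal pure-Python sketch of the Aho-Corasick automaton: a trie of all dictionary words plus failure links, so the message is scanned once regardless of how many words the dictionary holds. This is an illustration of the algorithm, not the implementation we deployed; in production an existing library would likely be preferable.

```python
from collections import deque
from typing import Deque, Dict, List, Set, Tuple


class AhoCorasick:
    """Minimal Aho-Corasick automaton for matching many words in one pass."""

    def __init__(self, patterns: List[str]) -> None:
        self.goto: List[Dict[str, int]] = [{}]  # trie transitions per state
        self.fail: List[int] = [0]              # failure link per state
        self.out: List[Set[str]] = [set()]      # patterns recognized at each state
        for p in patterns:
            self._add(p)
        self._build_failure_links()

    def _add(self, pattern: str) -> None:
        state = 0
        for ch in pattern:
            if ch not in self.goto[state]:
                self.goto.append({})
                self.fail.append(0)
                self.out.append(set())
                self.goto[state][ch] = len(self.goto) - 1
            state = self.goto[state][ch]
        self.out[state].add(pattern)

    def _build_failure_links(self) -> None:
        # Breadth-first: a state's failure target is always shallower, so it is ready first.
        queue: Deque[int] = deque(self.goto[0].values())  # depth-1 states fail to the root
        while queue:
            state = queue.popleft()
            for ch, nxt in self.goto[state].items():
                queue.append(nxt)
                f = self.fail[state]
                while f and ch not in self.goto[f]:
                    f = self.fail[f]
                self.fail[nxt] = self.goto[f].get(ch, 0)
                # Inherit matches of the longest proper suffix (e.g. "she" also yields "he").
                self.out[nxt] |= self.out[self.fail[nxt]]

    def find(self, text: str) -> List[Tuple[int, str]]:
        """Return (start_index, pattern) for every occurrence in text."""
        matches: List[Tuple[int, str]] = []
        state = 0
        for i, ch in enumerate(text):
            while state and ch not in self.goto[state]:
                state = self.fail[state]
            state = self.goto[state].get(ch, 0)
            for p in self.out[state]:
                matches.append((i - len(p) + 1, p))
        return matches
```

For moderation, one would build the automaton once from the lowercased dictionary and call `find(message.lower())`. Plain substring matching also fires inside innocent words that happen to contain a banned word, so a real filter would probably also check word boundaries around each match.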

Online mode. Basic machine learning model

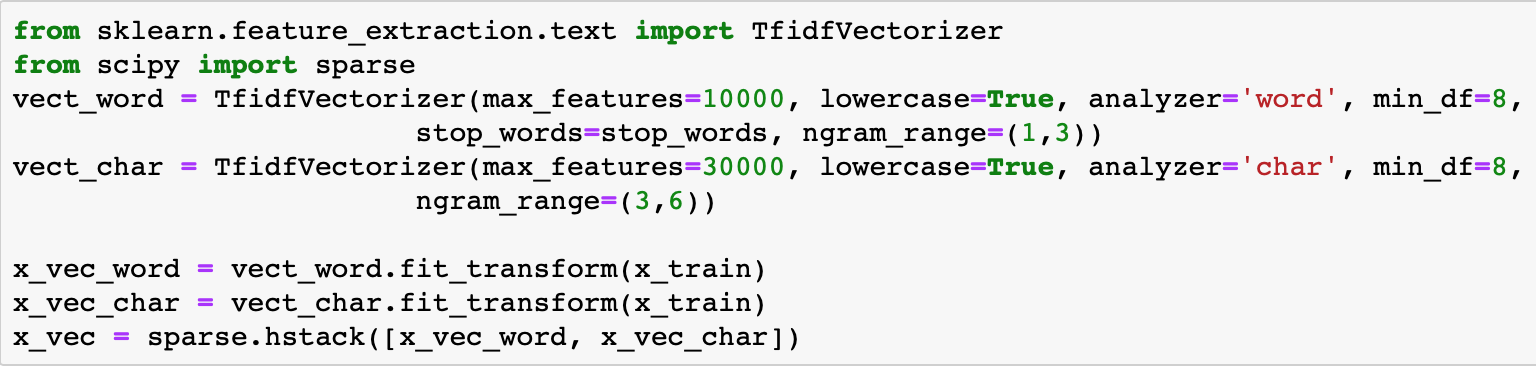

For the base model, we decided to use the standard approach to text classification, TF-IDF plus a classical classification algorithm, again for reasons of speed and performance.

TF-IDF is a statistical measure that determines the most important words of a text within a corpus using two quantities: the frequency of a word in each document and the number of documents that contain that word (more details here). By computing TF-IDF for each word in a message, we obtain a vector representation of the message.

TF-IDF can be computed not only for individual words but also for word n-grams and character n-grams. This extension works better, because it can handle frequent phrases as well as words that did not appear in the training vocabulary (out-of-vocabulary words).
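In one common formulation, with $\mathrm{tf}(t, d)$ the count of term $t$ in message $d$ divided by the number of terms in $d$, $N$ the number of messages in the corpus, and $\mathrm{df}(t)$ the number of messages containing $t$, the weight is computed as below. Library implementations such as scikit-learn's `TfidfVectorizer` use a smoothed variant by default, so exact values differ; the worked numbers are a toy example.

```latex
\mathrm{tfidf}(t, d) = \mathrm{tf}(t, d) \cdot \ln \frac{N}{\mathrm{df}(t)}

% A word that occurs once in a 3-word message and in 1 of N = 4 messages:
N = 4,\quad \mathrm{df}(t) = 1,\quad \mathrm{tf}(t, d) = \tfrac{1}{3}
\;\Rightarrow\; \mathrm{tfidf}(t, d) = \tfrac{1}{3} \ln 4 \approx 0.462

% A word present in all 4 messages gets \ln(4/4) = 0 and carries no weight.
```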

An example of using TF-IDF on word and character n-grams
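A sketch of such features with scikit-learn, concatenating word n-grams and character n-grams with `FeatureUnion`. The n-gram ranges and the toy messages are illustrative assumptions, not our tuned settings.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.pipeline import FeatureUnion

# Word uni- and bigrams capture frequent phrases; character n-grams taken inside
# word boundaries ("char_wb") still produce features for misspelled or unseen words.
features = FeatureUnion([
    ("words", TfidfVectorizer(analyzer="word", ngram_range=(1, 2))),
    ("chars", TfidfVectorizer(analyzer="char_wb", ngram_range=(2, 4))),
])

train_texts = ["you are so stupid", "have a nice game", "stupid noob", "nice shot"]
X = features.fit_transform(train_texts)  # sparse matrix, one row per message
```

A misspelling such as "stupidd" is absent from the word vocabulary, yet most of its character n-grams were seen during training, so it still gets a non-empty vector.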

After converting messages into vectors, you can use any classical classification method: logistic regression, SVM, random forest, or boosting.

In our problem, we chose logistic regression: it is faster than other classical ML classifiers, and it predicts class probabilities, which allows the classification threshold to be chosen flexibly in production.

The algorithm based on TF-IDF and logistic regression is fast and identifies messages with obscene words and expressions well, but it does not always understand their meaning. For example, messages with the words 'black' and 'feminism' often end up in the toxic class. We wanted to fix this problem and better capture the meaning of messages in the next version of the classifier.
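A minimal sketch of the TF-IDF plus logistic regression model with an adjustable threshold. The six training messages are toy data, and `THRESHOLD` and `is_toxic` are hypothetical names; a real threshold would be tuned on validation data for the desired balance of precision and recall.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import make_pipeline

# Toy data for illustration only; 1 = toxic, 0 = normal.
texts = ["you are stupid", "stupid idiot", "shut up idiot",
         "nice game", "good luck all", "well played"]
labels = [1, 1, 1, 0, 0, 0]

model = make_pipeline(
    TfidfVectorizer(analyzer="char_wb", ngram_range=(2, 4)),
    LogisticRegression(),
)
model.fit(texts, labels)

THRESHOLD = 0.5  # hypothetical; raise it to hide fewer messages, lower it to hide more


def is_toxic(message: str) -> bool:
    """Hide the message if the predicted probability of the toxic class reaches the threshold."""
    p_toxic = model.predict_proba([message])[0, 1]  # column 1 corresponds to class 1 (toxic)
    return bool(p_toxic >= THRESHOLD)
```

Because the model outputs a probability rather than a hard label, the threshold can be changed in production without retraining.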

Offline mode

In order to better understand the meaning of messages, you can use neural network algorithms:

- Embeddings (Word2Vec, FastText)

- Neural Networks (CNN, RNN, LSTM)

- New pre-trained models (ELMo, ULMFiT, BERT)

Let's discuss some of these algorithms and how they can be used in more detail.

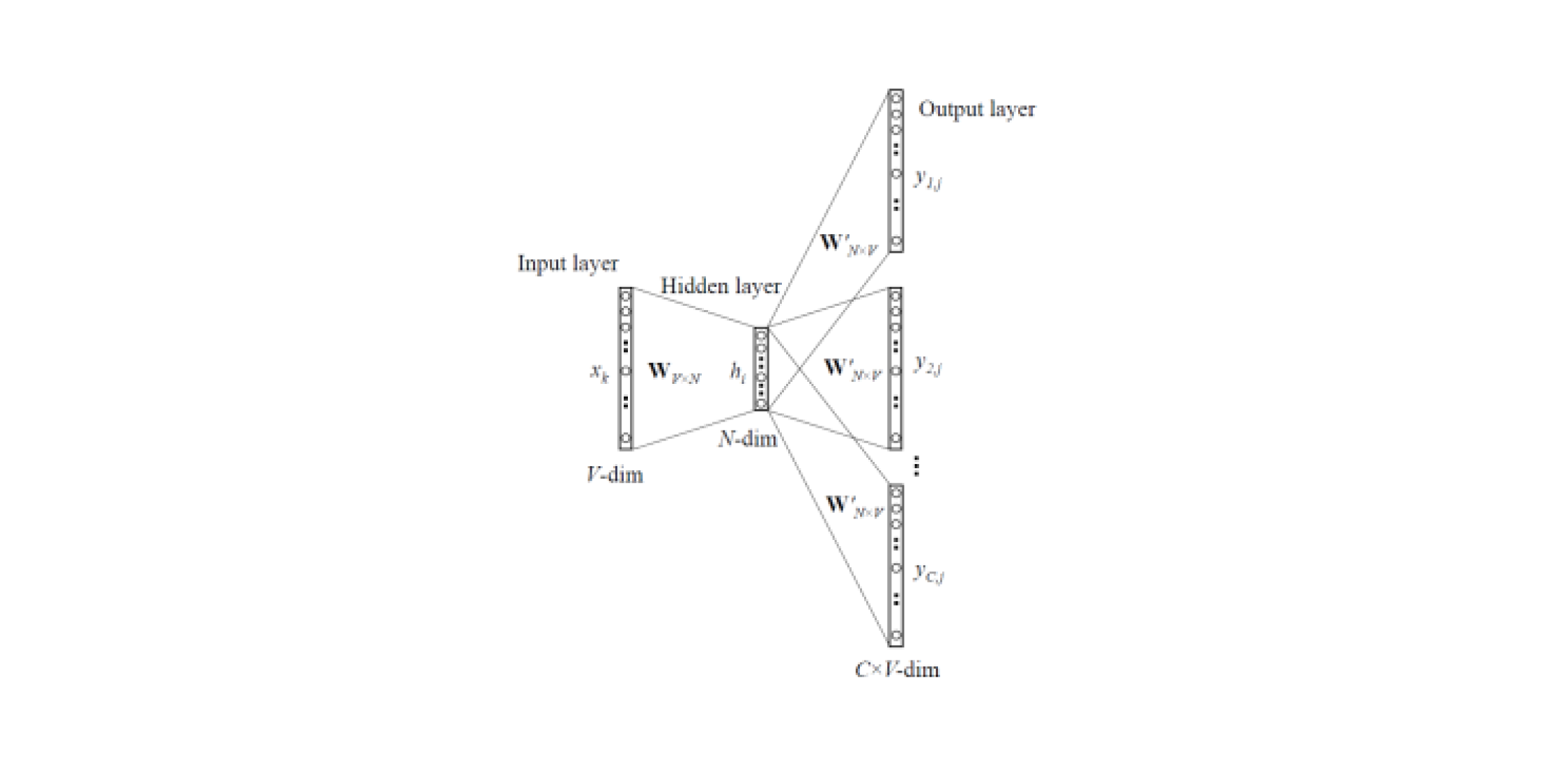

Word2Vec and FastText

Embedding models produce vector representations of words from texts. There are two variants of Word2Vec: Skip-gram and CBOW (Continuous Bag of Words). In Skip-gram, the model predicts the context from a word; in CBOW, it is the other way around: the word is predicted from its context.

Such models are trained on large text corpora, and the word vectors are taken from the hidden layer of the trained neural network. The drawback of this architecture is that the model learns only the limited set of words contained in the corpus. For any word that was not in the training corpus, there will be no embedding. This often happens when pre-trained models are applied to new tasks: some words have no embeddings, and a large amount of useful information is lost.
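The difference between the two variants is easiest to see in how training examples are formed from a sentence. The sketch below only generates examples with a fixed window; it does not train anything, and real Word2Vec adds details such as sampled window sizes and negative sampling.

```python
from typing import List, Tuple


def skipgram_pairs(tokens: List[str], window: int = 2) -> List[Tuple[str, str]]:
    """Skip-gram: (center word, context word) pairs; the model predicts context from the center."""
    pairs = []
    for i, center in enumerate(tokens):
        for j in range(max(0, i - window), min(len(tokens), i + window + 1)):
            if j != i:
                pairs.append((center, tokens[j]))
    return pairs


def cbow_examples(tokens: List[str], window: int = 2) -> List[Tuple[List[str], str]]:
    """CBOW: (context words, center word); the model predicts the center from its context."""
    examples = []
    for i, center in enumerate(tokens):
        context = [tokens[j]
                   for j in range(max(0, i - window), min(len(tokens), i + window + 1))
                   if j != i]
        examples.append((context, center))
    return examples
```

In gensim's `Word2Vec`, the same choice is made with the `sg` parameter (`sg=1` for Skip-gram, `sg=0` for CBOW).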

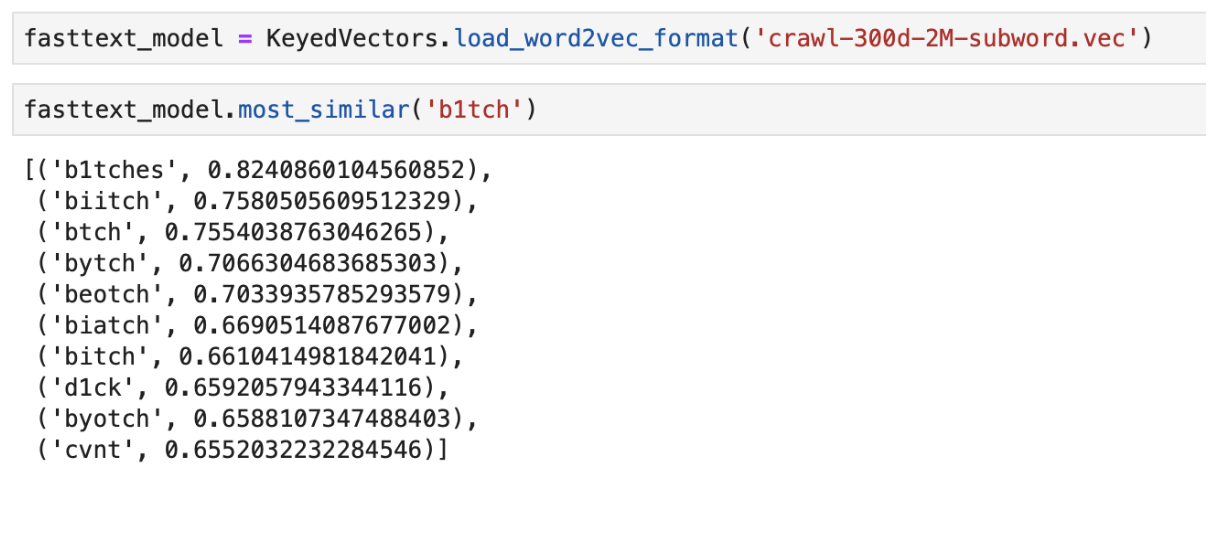

To handle words that are not in the dictionary (OOV, out-of-vocabulary words), there is an improved embedding model, FastText. Instead of training on whole words, FastText breaks words into character n-grams (subwords) and learns vectors for them. To get the vector of a word, you take the vectors of its n-grams and sum them.

Thus, to obtain feature vectors for messages, you can use pre-trained Word2Vec and FastText models. These features can then be classified with classical ML classifiers or a fully connected neural network.

An example of the words “closest” in meaning, as found by a pre-trained FastText model
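A sketch of FastText-style subwords: the word is wrapped in `<` and `>`, all character n-grams of length 3 to 6 are taken, and the whole wrapped word is kept as an extra unit. The word vector is the sum of the vectors of its known n-grams. The `ngram_vectors` dictionary is a toy stand-in; the real library hashes n-grams into buckets of learned vectors.

```python
from typing import Dict, List

import numpy as np


def char_ngrams(word: str, n_min: int = 3, n_max: int = 6) -> List[str]:
    """FastText-style subwords of '<word>', plus the whole wrapped word."""
    wrapped = f"<{word}>"
    grams = [wrapped[i:i + n]
             for n in range(n_min, n_max + 1)
             for i in range(len(wrapped) - n + 1)]
    return grams + [wrapped]


def word_vector(word: str, ngram_vectors: Dict[str, np.ndarray], dim: int) -> np.ndarray:
    """Sum the vectors of the word's known n-grams; works for unseen words too."""
    vec = np.zeros(dim)
    for g in char_ngrams(word):
        if g in ngram_vectors:
            vec += ngram_vectors[g]
    return vec
```

For example, an unseen word like "whenever" still receives a vector through the subwords "<wh" and "whe" that it shares with words seen during training.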
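One simple way, assumed here rather than taken from our setup, to turn word vectors into a single message vector for a classical classifier is to average the vectors of the known tokens. The `embeddings` dictionary stands in for a pre-trained Word2Vec or FastText lookup.

```python
from typing import Dict, List

import numpy as np


def message_vector(tokens: List[str], embeddings: Dict[str, np.ndarray], dim: int) -> np.ndarray:
    """Average the vectors of tokens that have embeddings; zeros if none do."""
    known = [embeddings[t] for t in tokens if t in embeddings]
    if not known:
        return np.zeros(dim)
    return np.mean(known, axis=0)
```

The resulting fixed-size vectors can be stacked into a matrix and passed to, for example, logistic regression. Averaging loses word order, which is one motivation for the convolutional and recurrent models described next.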

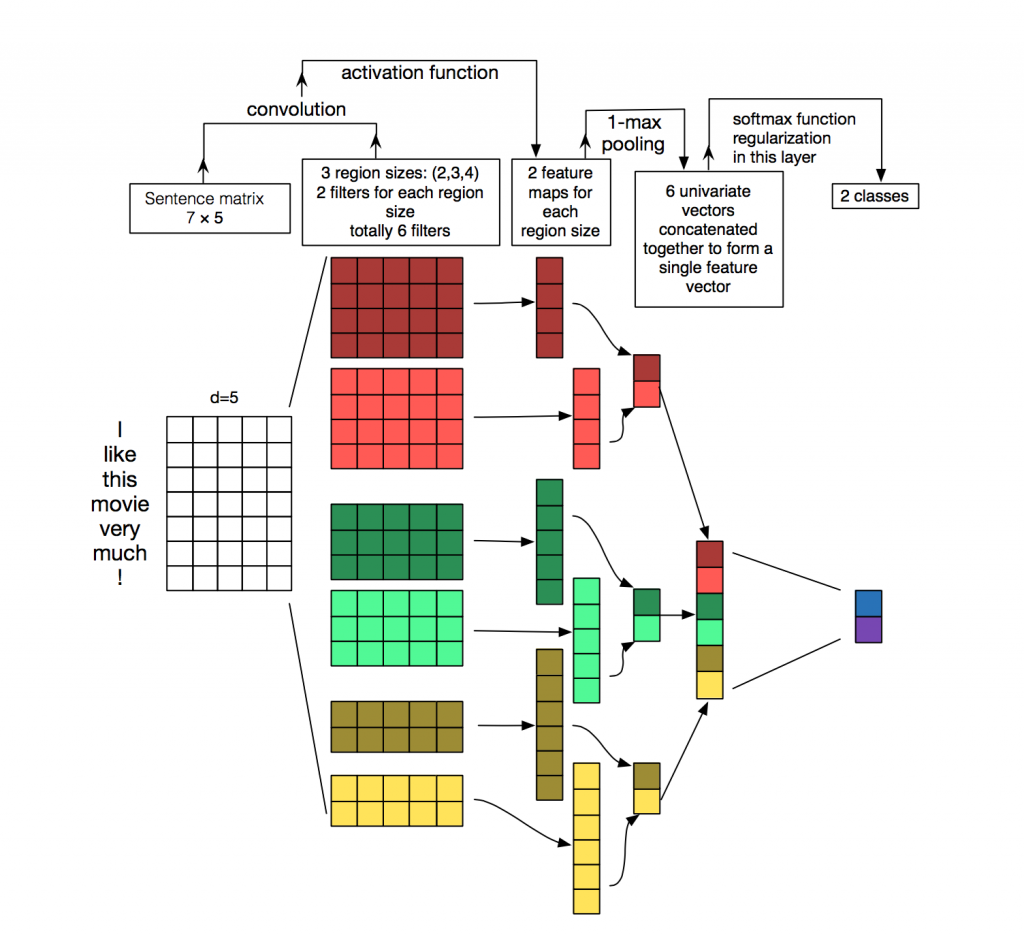

CNN classifier

Among neural network approaches to text processing and classification, recurrent networks (LSTM, GRU) are used most often, since they work well with sequences. Convolutional networks (CNN) are mostly used for image processing, but they can also be applied to text classification. Let's see how this can be done.

Each message is represented as a matrix in which each row is the vector representation of one token (word). Convolution is applied to this matrix in a specific way: the filter always spans whole rows of the matrix (word vectors) and slides along the sequence, covering several words at a time (usually 2 to 5), so each word is processed in the context of its neighbors. Details on how this works can be seen in the picture.

Why use convolutional networks for text when you can use recurrent ones? Convolutions are much faster. Using them for message classification can save a lot of training time.
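The forward pass of one such convolutional layer can be sketched in NumPy. Each filter covers `k` whole word vectors, the ReLU and max-over-time pooling are common choices for text CNNs rather than details given in this article, and the weights here would normally be learned.

```python
import numpy as np


def text_cnn_features(message_matrix: np.ndarray, filters: np.ndarray, bias: np.ndarray) -> np.ndarray:
    """
    message_matrix: (seq_len, emb_dim), one row per token.
    filters: (num_filters, k, emb_dim); each filter spans k whole word vectors.
    bias: (num_filters,).
    Returns a (num_filters,) vector: ReLU activations max-pooled over positions.
    """
    seq_len, emb_dim = message_matrix.shape
    num_filters, k, f_dim = filters.shape
    if f_dim != emb_dim:
        raise ValueError("filters must span the full embedding width")
    if seq_len < k:
        raise ValueError("message is shorter than the filter width")
    n_windows = seq_len - k + 1
    feature_maps = np.empty((num_filters, n_windows))
    for i in range(n_windows):
        window = message_matrix[i:i + k]  # k consecutive word vectors
        # Multiply each filter element-wise with the window and sum everything.
        feature_maps[:, i] = np.tensordot(filters, window, axes=([1, 2], [0, 1])) + bias
    feature_maps = np.maximum(feature_maps, 0.0)  # ReLU
    return feature_maps.max(axis=1)               # max-over-time pooling
```

Running filters of several widths (say 2 through 5) and concatenating the pooled outputs gives a fixed-size vector for a fully connected classification layer, whatever the message length. Deep learning frameworks implement the same operation as a 1D convolution over the embedding channels.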

ELMo

ELMo (Embeddings from Language Models) is an embedding model based on a language model that was introduced recently. It differs from the Word2Vec and FastText models, and its word vectors have certain advantages:

- The representation of each word depends on the whole context in which it is used.

- The representation is character-based, which makes it possible to build reliable representations for out-of-vocabulary words.

ELMo can be used for various NLP tasks. For our task, for example, message vectors obtained with ELMo can be fed to a classical ML classifier or to a convolutional or fully connected network.

Pre-trained ELMo embeddings are quite easy to use for your own task; an example of usage can be found here.

Implementation details

API on Flask

The API prototype was written in Flask, as it is easy to use.
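A minimal sketch of what such a Flask prototype could look like. The `/moderate` route, the JSON fields, and the keyword-based `toxicity_score` are hypothetical placeholders; a real service would call the trained model instead.

```python
from flask import Flask, jsonify, request

app = Flask(__name__)

BANNED = {"idiot", "stupid"}  # hypothetical placeholder dictionary


def toxicity_score(text: str) -> float:
    """Hypothetical stand-in for the real model, e.g. model.predict_proba([text])[0, 1]."""
    return 1.0 if any(w in BANNED for w in text.lower().split()) else 0.0


@app.route("/moderate", methods=["POST"])
def moderate():
    payload = request.get_json(silent=True) or {}
    text = payload.get("text")
    if not isinstance(text, str):
        return jsonify({"error": "expected a JSON body with a 'text' string"}), 400
    score = toxicity_score(text)
    return jsonify({"text": text, "toxic": score >= 0.5, "score": score})
```

Flask's built-in test client makes such an endpoint easy to check without starting a server; in production, the `app` object would typically be served by a WSGI server such as gunicorn.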

Two Docker Images

For deployment, we used two Docker images: a base image with all the dependencies installed, and a main image for running the application. This greatly reduces build time: the base image is rarely rebuilt, which speeds up deployment. Building and downloading machine learning libraries takes quite a lot of time, and it does not need to happen on every commit.

Testing

A characteristic of many machine learning algorithms is that even with high metric values on a validation dataset, their actual quality in production can be low. Therefore, the whole team tested the algorithm through a Slack bot. This is very convenient, because any team member can check what answer the algorithms give for a specific message. This way of testing immediately shows how the algorithms behave on live data.

A good alternative is to run the solutions on crowdsourcing platforms such as Yandex.Toloka and Amazon Mechanical Turk.

Conclusion

We considered several approaches to solving the problem of automatic message moderation and described the features of our implementation.

The main observations obtained during the work:

- Dictionary search and a machine learning algorithm based on TF-IDF and logistic regression made it possible to classify messages quickly, but not always correctly.

- Neural network algorithms and pre-trained embedding models do a better job and can detect toxicity based on the meaning of a message.

We have also published an open demo of Poteha Toxic Comment Detection as a Facebook bot. Help us make the bot better!

I will be glad to answer questions in the comments.

Source: https://habr.com/ru/post/454628/
