The story of building a “village supercomputer” from parts bought on eBay, AliExpress and at a computer store. Part 2

Good day, dear Habr readers!

Link to the first part of the story for those who missed it

I want to continue my story about building the “village supercomputer”. First, why the name: the reason is simple. I live in a village myself. The name is a bit of light trolling aimed at those who shout on the Internet “There is no life beyond the Moscow Ring Road!” and “The Russian village has drunk itself to ruin and is dying out!” Somewhere that may be true, and I will be the exception to the rule. I do not drink, I do not smoke, and I do things that not every “city slicker (c)” has the brains or the budget for. But back to the matter at hand, namely the server, which by the end of the first part of the article was already "showing signs of life."

The board was lying on the table. I went through the BIOS and tuned it to my liking, installed Ubuntu 16.04 Desktop for simplicity, and decided to connect a video card to the “super machine”. The only card at hand was a GTS 250 with a hefty non-original fan attached, which I installed in the PCI-E 16x slot near the power button.

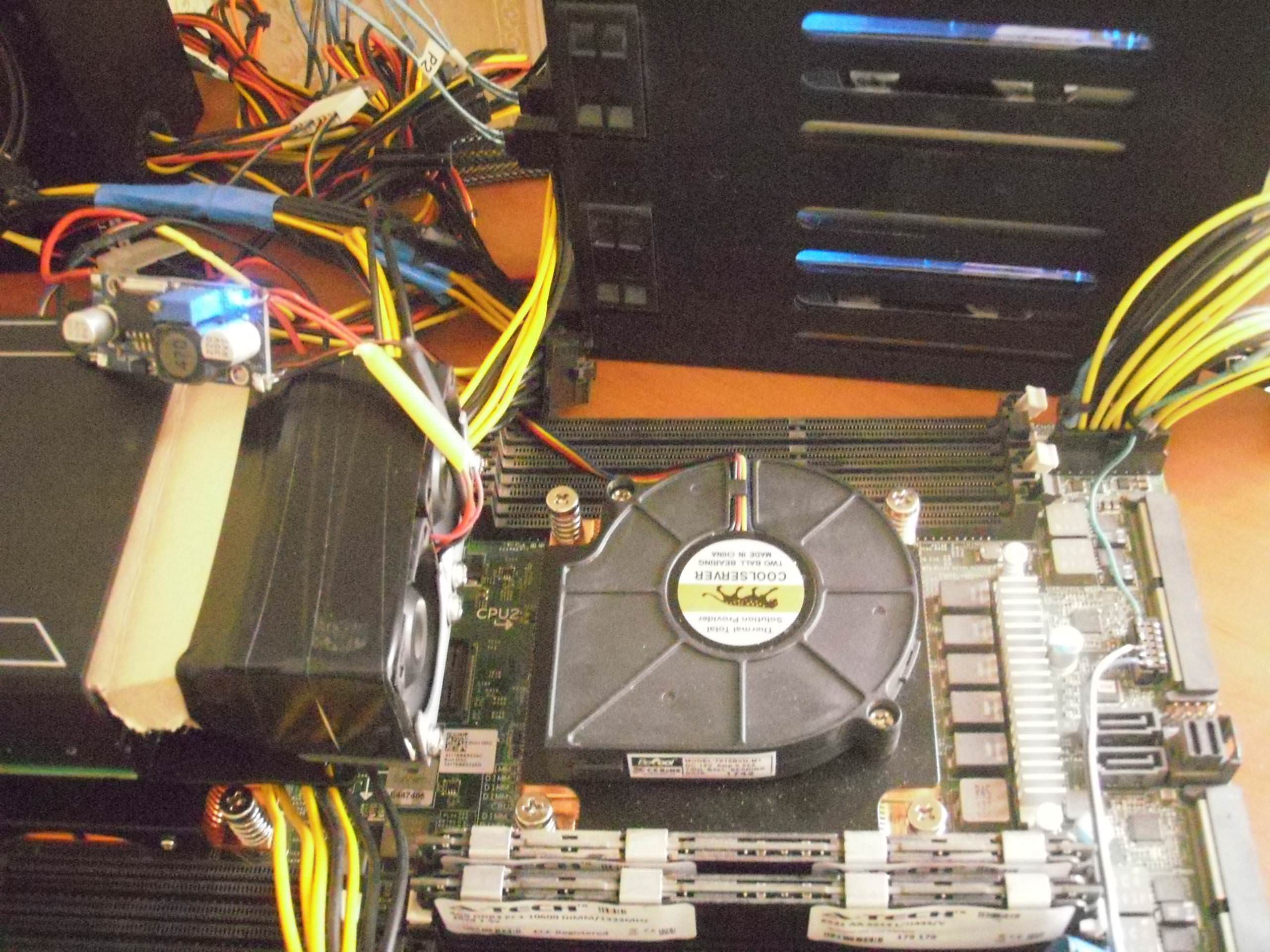

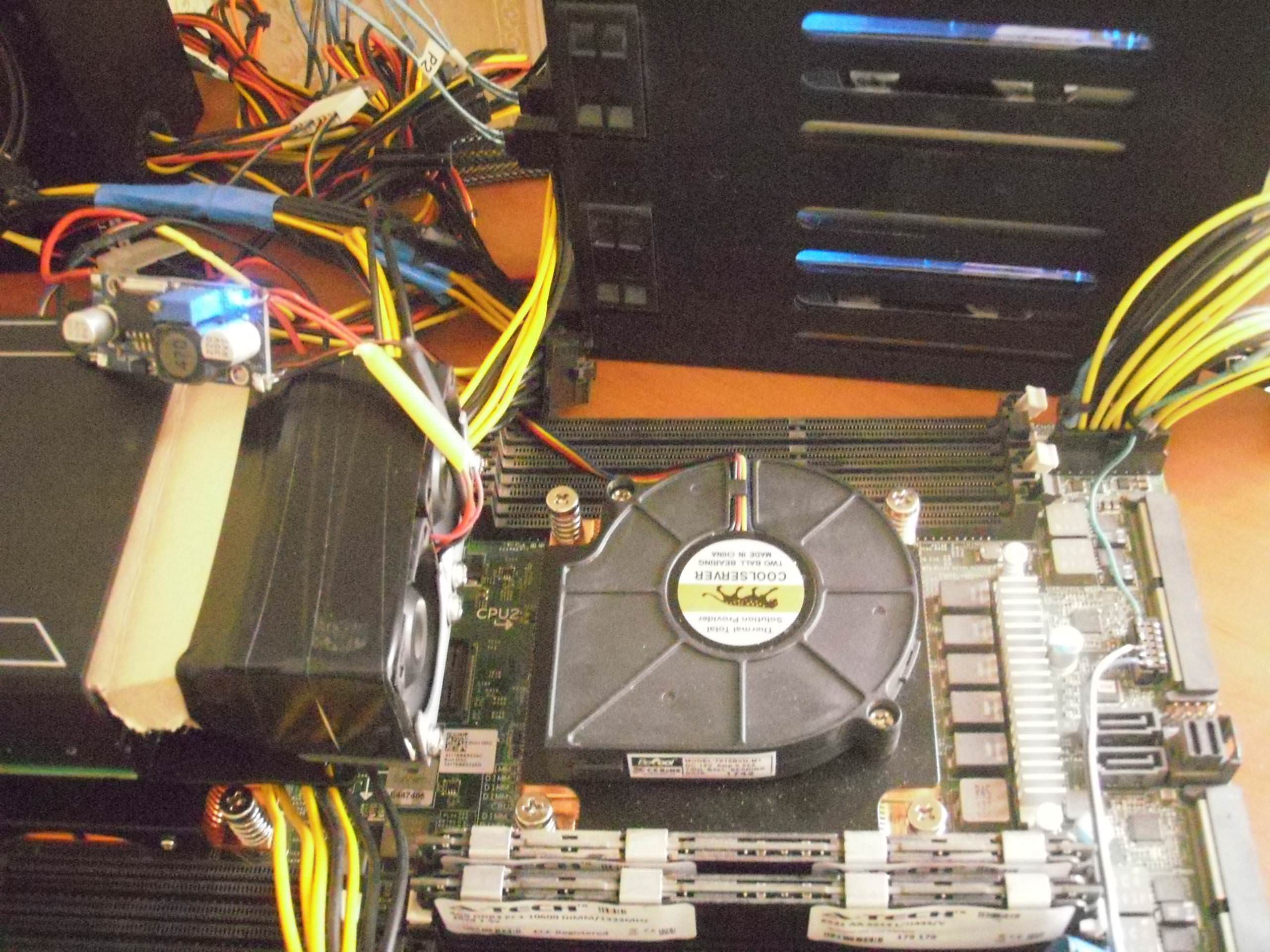

"I took a pack of Belomor (c)" so please do not kick for the quality of the photo. I would rather comment on what is imprinted on them.

Firstly, it turned out that when installed in the slot, even a short video card rests its board against the memory slots, so memory cannot be installed in them in this case, and even the latches have to be lowered. Secondly, the metal mounting bracket of the video card covers the power button, so the bracket had to be removed. By the way, the power button is lit by a two-color LED that glows green when everything is in order and blinks orange when there is a problem: a short circuit that tripped the PSU protection, or a +12VSB supply that is too high or too low.

In fact, this motherboard is not designed for video cards to be plugged “directly” into its PCI-E 16x slots; they are all meant to be connected through risers. For expansion cards in the slots near the power button there are angled risers: a low one for short cards that reach no further than the first processor heatsink, and a tall one with an additional +12V power connector for mounting a video card “above” a standard low 1U cooler. The tall riser can take large graphics cards like the GTX 780, GTX 980 and GTX 1080, specialized Nvidia Tesla K10, K20 and K40 GPGPU cards, Intel Xeon Phi 5110p "compute cards" and the like.

With the GPGPU riser that plugs into the EdgeSlot, however, a card can be connected directly, as long as additional power is supplied through the same kind of connector as on the tall angled riser. For those interested: on eBay this flexible riser is listed as “Dell PowerEdge C8220X PCI-E GPGPU DJC89” and costs about 2.5-3 thousand rubles. Angled risers with extra power are much rarer, and I had to arrange to buy them from a specialized server parts store through Shopota. They came to 7 thousand apiece.

I’ll say right away that “risky guys (tm)” can even connect a pair of GTX 980s to the motherboard with Chinese flexible 16x risers, as one person did on “That Very Forum”. The Chinese, by the way, make quite decent working PCI-E 16x risers modeled on Thermaltake's flexible ones. But if you one day burn out the power circuits on the server board that way, you will have only yourself to blame. I did not risk expensive equipment and used the original risers with additional power plus one Chinese flexible riser, reckoning that connecting a single card "directly" would not burn the board.

Then the long-awaited connectors for additional power arrived, and I made a pigtail for my EdgeSlot riser. The same connector, but with a different pinout, is used to supply additional power to the motherboard. That connector sits right next to the EdgeSlot connector and has an interesting pinout: where the riser has 2 +12V wires and 2 ground wires, the board has 3 +12V wires and 1 ground wire.

And here is that very GTS 250 plugged into the GPGPU riser. By the way, I take the additional power for both the risers and the motherboard from the second +12V CPU power connector of my PSU. I decided that this would be the more correct approach.

A tale is quick to tell, but parcels from China and other parts of the globe are slow to reach Russia. So there were long gaps in the assembly of the "supercomputer". But finally a server Nvidia Tesla K20M with a passive heatsink arrived. And a completely unused one at that: from storage, sealed in its original box and original packaging, with the warranty papers. Then the agonizing began: how to cool it?

First, I bought a custom cooler with two small “turbines” from England; here it is in the photo, with a homemade cardboard duct.

They turned out to be complete crap. They made a lot of noise, the mount did not fit at all, the airflow was weak, and they vibrated so much that I was afraid components would fall off the Tesla board! So they went into the trash almost immediately.

By the way, in the photo you can see, under the Tesla, the LGA 2011 1U server copper heatsinks with blower fans from Coolerserver, bought on AliExpress and mounted on the processors. Very decent coolers, though noisy. They fit perfectly.

While I was waiting for a new cooler for the Tesla, this time a large BFB1012 N blower ordered from Australia with a 3D-printed mount, I got around to the server’s storage system. The server board has a mini-SAS connector that carries 4 SATA ports, plus 2 more ordinary SATA connectors. All of them are SATA 2.0, but that suits me.

The RAID integrated into the Intel C602 chipset is not bad and, most importantly, it passes the TRIM command through to SSDs, which many inexpensive external RAID controllers cannot do.

On eBay I bought a one-meter mini-SAS to 4 SATA cable, and on Avito a hot-swap cage that fits a 5.25" bay and holds 4 x 2.5" SAS/SATA drives. When the cable and the cage arrived, I installed four 1 TB Seagates in the cage, assembled a RAID5 across the 4 drives in the BIOS, started installing Ubuntu Server... and ran into the fact that the disk partitioning program would not let me create a swap partition on the RAID.

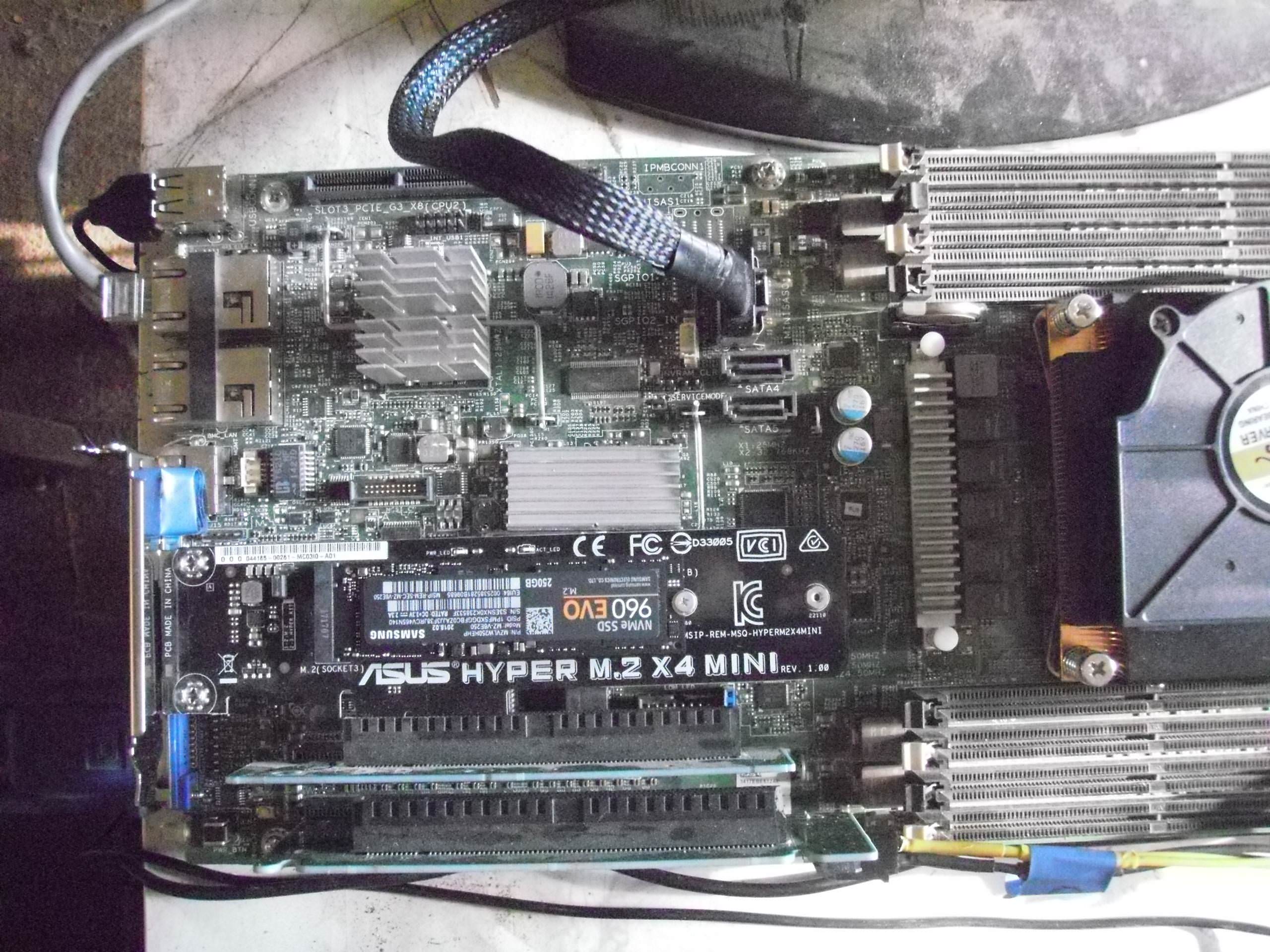

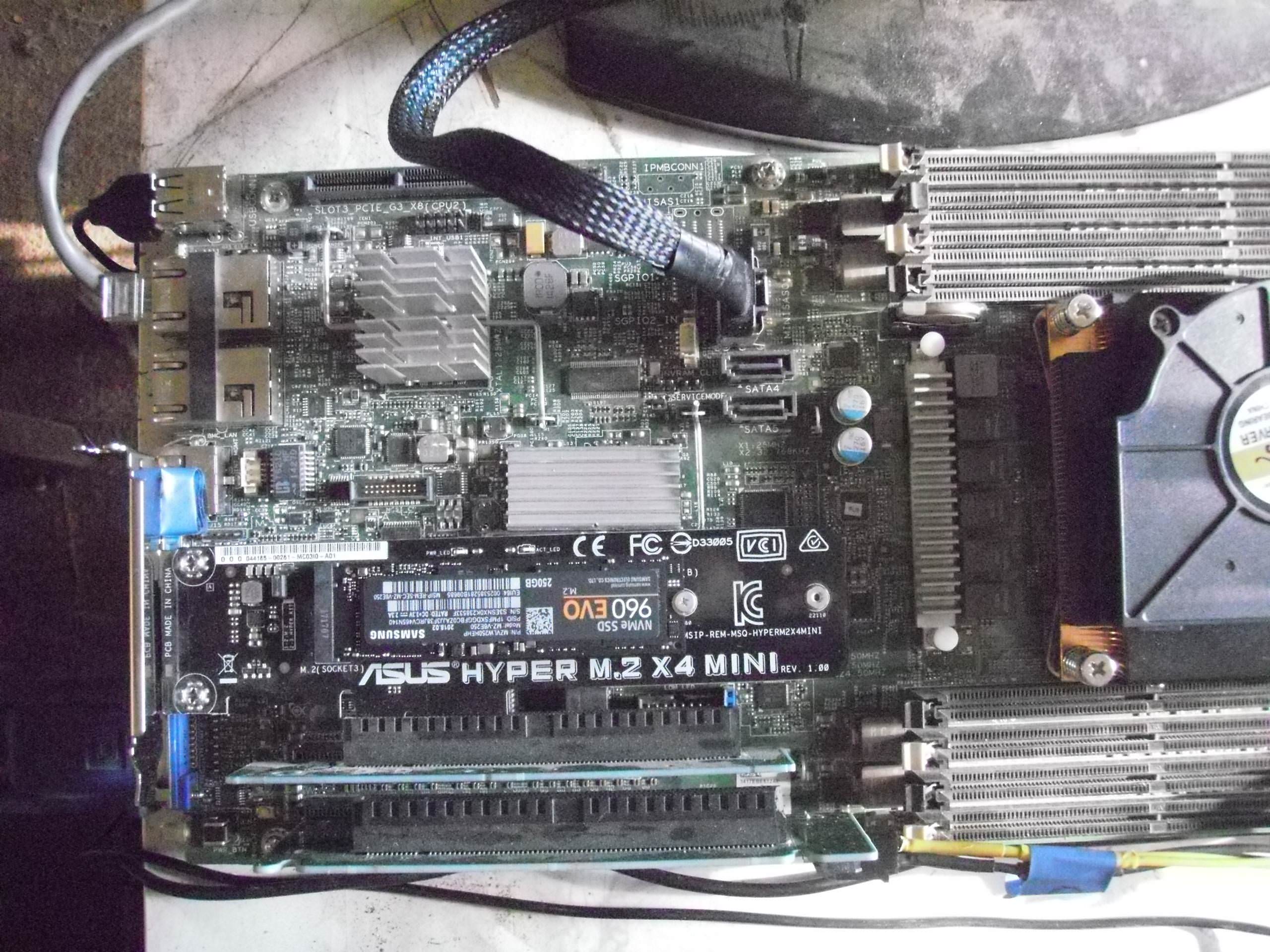

I solved the problem head-on: at DNS I bought an ASUS HYPER M.2 X4 MINI PCI-E to M.2 adapter and a Samsung 960 EVO 250 GB M.2 SSD. I decided that swap should live on the fastest device available, since the system will run under heavy computational load and the memory is still obviously smaller than the data. Besides, 256 GB of memory would have cost more than this SSD.

This is that adapter, with the SSD installed, in the low angled riser.

Anticipating the question “Why not put the whole system on M.2 and get a maximum access speed higher than that of a SATA RAID?”, here is my answer. Firstly, M.2 SSDs of 1 TB or more are too expensive for me. Secondly, even after updating the BIOS to the latest version, 2.8.1, the server still does not support booting from NVMe devices in M.2. I ran an experiment with /boot on a 64 GB USB flash drive and everything else on the M.2 SSD, but I did not like it, although in principle such a setup is quite workable. If large-capacity M.2 NVMe drives get cheaper, I may return to this option, but for now SATA RAID suits me perfectly as a storage system.
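To put rough numbers on the speed question above, here is a minimal sketch that compares theoretical link bandwidth only. It assumes the M.2 adapter runs as a PCIe 3.0 x4 link (the lane count is my assumption, not something measured on this server), and real drives deliver less than the link maximum.

```python
# Theoretical link bandwidth, not measured drive throughput.

def sata_mb_per_s(line_rate_gbit: float) -> float:
    """Usable MB/s of a SATA link: 8b/10b encoding, so 10 line bits per data byte."""
    return line_rate_gbit * 1e9 / 10 / 1e6


def pcie3_mb_per_s(lanes: int) -> float:
    """Usable MB/s of a PCIe 3.0 link: 8 GT/s per lane with 128b/130b encoding."""
    per_lane = 8e9 * (128 / 130) / 8 / 1e6
    return per_lane * lanes


sata2_port = sata_mb_per_s(3.0)   # one SATA 2.0 port on the board
m2_link = pcie3_mb_per_s(4)       # assumed x4 link for the M.2 adapter

print(f"SATA 2.0, one port: {sata2_port:.0f} MB/s")
print(f"PCIe 3.0 x4 link:   {m2_link:.0f} MB/s")
```

Even four SATA 2.0 ports added together stay well below a single PCIe 3.0 x4 link, which is why the fast device was given to swap.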

When I had settled on the disk subsystem, arriving at the combination of 2 x Kingston 240 GB SSD in RAID1 for "/" + 4 x Seagate 1 TB HDD in RAID5 for "/home" + a Samsung 960 EVO 250 GB M.2 SSD for swap, it was time to continue my experiments with GPUs. I already had the Tesla, the Australian cooler with its “evil” blower drawing a full 2.94 A at 12 V had just arrived, the second slot was taken by the M.2 adapter, and for the third I borrowed a GT 610 for the experiments.
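The usable space of this layout follows from the standard RAID rules (a mirror keeps the capacity of one disk, RAID5 gives up one disk's worth to parity). A small sketch with nominal drive sizes; the function name is just for illustration:

```python
def raid_usable_gb(level: int, disks: int, disk_gb: int) -> int:
    """Nominal usable capacity of a RAID1 or RAID5 array of equal disks."""
    if level == 1:
        if disks < 2:
            raise ValueError("RAID1 needs at least 2 disks")
        return disk_gb                  # mirror: capacity of a single disk
    if level == 5:
        if disks < 3:
            raise ValueError("RAID5 needs at least 3 disks")
        return (disks - 1) * disk_gb    # one disk's worth goes to parity
    raise ValueError("only RAID1 and RAID5 are handled in this sketch")


layout = {
    "/": raid_usable_gb(1, 2, 240),         # 2 x 240 GB SSD, RAID1
    "/home": raid_usable_gb(5, 4, 1000),    # 4 x 1 TB HDD, RAID5
    "swap": 250,                            # single M.2 SSD, no RAID
}

for mount, size_gb in layout.items():
    print(f"{mount:6s} {size_gb} GB")
```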

Here, in the photo, all 3 devices are connected, with the M.2 SSD attached through a flexible Thermaltake riser for video cards, which works on the 3.0 bus without errors. It is the kind made of many separate "ribbons", similar to those SATA cables are made of. PCI-E 16x risers made of a single flat ribbon cable, like the old IDE and SCSI cables, belong in the furnace: they are plagued by errors caused by crosstalk. And as I said, the Chinese now also make risers like Thermaltake's, only shorter.

With the Tesla K20 + GT 610 combination I tried a lot of things, finding out along the way that when an external video card is connected and the BIOS is switched to output through it, vKVM does not work. That did not really upset me. I did not plan to use external video on this system anyway, the Teslas have no video outputs, and remote administration over SSH without X works fine once you recall a little what a command line without a GUI is. But IPMI + vKVM greatly simplifies management, reinstallation and other tasks on a remote server.
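For working over SSH without a GUI, one way to keep an eye on the cards is to poll the NVIDIA driver's `nvidia-smi` tool and parse its CSV output. This is only a sketch under assumptions: it assumes the NVIDIA driver with `nvidia-smi` is installed on the server, and the helper names are hypothetical. Some cards do not report every field, so unreadable values are returned as `None`.

```python
import subprocess
from typing import List, NamedTuple, Optional

# Query name, core temperature and power draw as bare CSV values.
QUERY = [
    "nvidia-smi",
    "--query-gpu=name,temperature.gpu,power.draw",
    "--format=csv,noheader,nounits",
]


class GpuReading(NamedTuple):
    name: str
    temp_c: Optional[float]
    power_w: Optional[float]


def _to_float(field: str) -> Optional[float]:
    """Convert a CSV field to float; non-numeric fields become None."""
    try:
        return float(field)
    except ValueError:
        return None


def parse_line(line: str) -> GpuReading:
    """Parse one 'name, temperature, power' line of nvidia-smi CSV output."""
    name, temp, power = (f.strip() for f in line.rsplit(",", 2))
    return GpuReading(name, _to_float(temp), _to_float(power))


def read_gpus() -> List[GpuReading]:
    """Run nvidia-smi once and return one reading per detected GPU."""
    out = subprocess.run(QUERY, capture_output=True, text=True, check=True).stdout
    return [parse_line(line) for line in out.splitlines() if line.strip()]
```

Calling `read_gpus()` in a loop from an SSH session is enough to watch how a passively cooled card behaves under a new blower.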

In general, the IPMI on this board is gorgeous. It has a separate 100 Mbit port, the ability to redirect its traffic onto one of the 10 Gbps ports, and a built-in web server for power management and server control, from which you can download the vKVM Java client and a client for remotely mounting disks or images for reinstallation... The only catch is that the clients need an old Oracle Java that is no longer supported on Linux, so for remote administration I had to set up a laptop with Win XP SP3 and that ancient Java. The client is also slow: it is enough for administration, but you cannot play games remotely, the FPS is too low. And the ASPEED video integrated with the IPMI is weak, VGA only.

While working on the server I learned a great deal about professional server hardware from Dell. I do not regret it at all, nor the time and money spent. The continuation of this educational story, about actually assembling the frame with all the server components, will come later.

Link to part 3: habr.com/en/post/454480

Source: https://habr.com/ru/post/454448/
