From daily crashes to stability: Informatica 10 through the eyes of an administrator

The ETL component of a data warehouse is often overshadowed by the storage itself and gets less attention than the main database or the BI front end used for reporting. Yet from the point of view of how the warehouse is actually filled, ETL plays a key role and demands no less attention from administrators than the other components. My name is Alexander; I currently administer ETL at Rostelecom, and in this article I will try to share some of what the administrator of a well-known ETL system in Rostelecom's large data warehouse has to deal with.

If you are already familiar with our data warehouse project as a whole and with Informatica PowerCenter, you can skip to the next section.

A few years ago, the idea of a single corporate data warehouse matured at Rostelecom and began to be implemented. A number of warehouses solving individual tasks had already been built, but the number of use cases kept growing, support costs grew with them, and it became clear that the future was centralized. Architecturally, it is a multi-layer warehouse implemented on Hadoop and GreenPlum, with auxiliary databases, ETL mechanisms, and BI.


At the same time, because of the large number of geographically distributed, heterogeneous data sources, a dedicated data upload mechanism controlled by Informatica was built. Data packets land in the Hadoop interface area, after which loading into the storage layers in Hadoop and GreenPlum begins; this loading is controlled by the so-called ETL control mechanism implemented in Informatica. Informatica is therefore one of the key elements keeping the warehouse running.

Our warehouse will be described in more detail in one of the following posts.

Informatica PowerCenter / Big Data Management is currently considered the leading software among data integration tools. It is a product of the American company Informatica, one of the strongest players in ETL (Extract, Transform, Load), data quality management, MDM (Master Data Management), ILM (Information Lifecycle Management), and more.

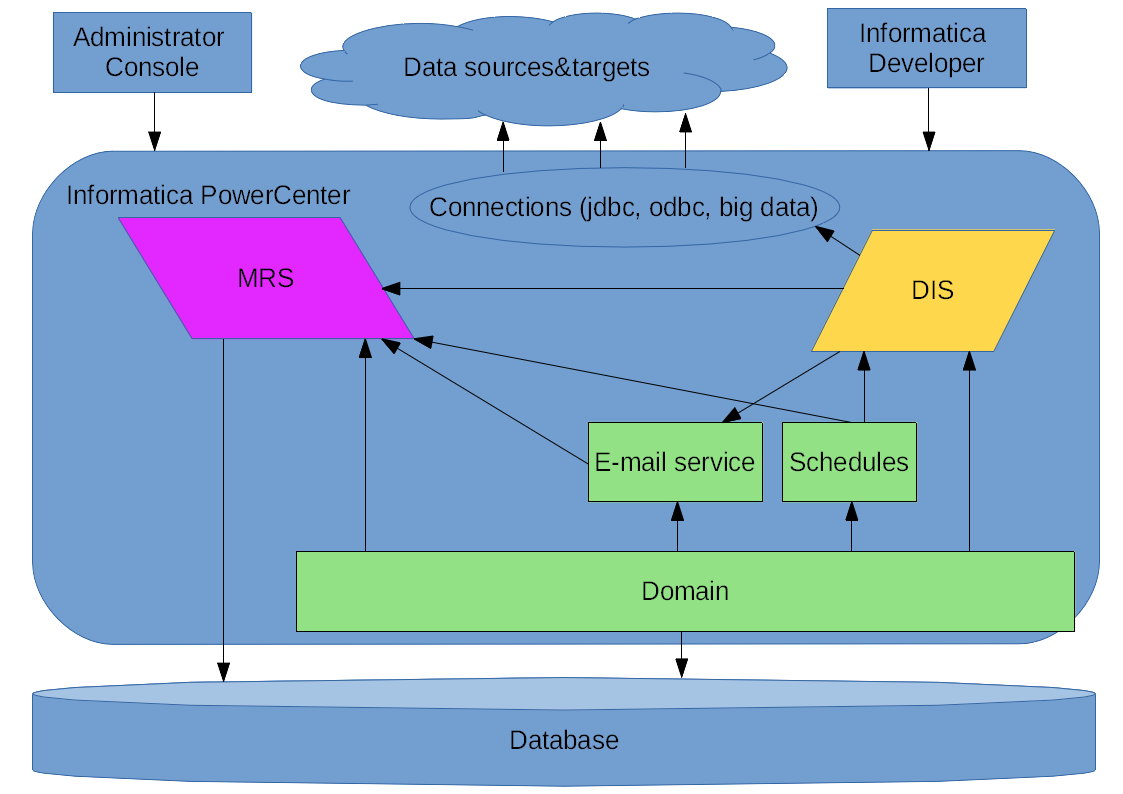

The PowerCenter we use runs on an embedded Tomcat application server, inside which the Informatica applications run their services:

Domain: essentially the foundation for everything else; services, users, and GRID components all live within the domain.

Administrator Console: a web-based management and monitoring tool and, besides the Informatica Developer client, the main tool for interacting with the product.

MRS, Model Repository Service: a metadata repository, the layer between the database where metadata is physically stored and the Informatica Developer client where development takes place. The repository stores data descriptions and other information, including data for several other Informatica services, for example task launch schedules (Schedules) and monitoring data, as well as application parameter sets, which in particular allow the same application to be used with different data sources and targets.

DIS, Data Integration Service: the service where the main functional processing happens. Applications run in it, together with the Workflows themselves (descriptions of the sequence of mappings and their interactions) and the Mappings (the blocks in which the transformations, that is, the actual data processing, take place).
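To make the idea of parameter sets more concrete, here is a minimal sketch of one load definition rendered with two different sets of parameters. It is only an analogy under stated assumptions: the template, the parameter names, and the table names are hypothetical, and this is not Informatica's parameter set format or API.

```python
from string import Template

# Hypothetical load definition with placeholders (NOT an Informatica format).
LOAD_TEMPLATE = Template("INSERT INTO $target_table SELECT * FROM $source_table")

# Hypothetical parameter sets: same logic, different sources and targets.
PARAMETER_SETS = {
    "billing_region_1": {"source_table": "src1.billing", "target_table": "stg.billing_r1"},
    "billing_region_2": {"source_table": "src2.billing", "target_table": "stg.billing_r2"},
}


def resolve(parameter_set_name: str) -> str:
    """Render the single load definition with one named parameter set."""
    params = PARAMETER_SETS[parameter_set_name]
    # substitute() raises KeyError if a placeholder has no value,
    # so a missing parameter fails loudly instead of running half-configured.
    return LOAD_TEMPLATE.substitute(params)


for name in PARAMETER_SETS:
    print(name, "->", resolve(name))
```

The point of the design is that the logic is written once and only the parameter set changes per source system.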

The GRID configuration is essentially a way of building the system from several servers, where the load launched by DIS is distributed among the nodes (that is, the servers that make up the domain). Besides distributing the DIS load through an additional GRID abstraction layer, which combines several nodes running DIS instead of a single specific node, this option also allows backup MRS instances to be created. You can even achieve high availability, where external calls are served by backup nodes if the primary fails. So far we have decided against this setup.
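As a toy illustration of what "distributing the load among nodes" means, the sketch below assigns each job to the node with the least accumulated load. This is an assumption for illustration only: the article does not describe how Informatica's GRID actually dispatches work, and the job costs and node names are hypothetical.

```python
import heapq


def dispatch(jobs, nodes):
    """Assign each (job_name, cost) to the node with the least accumulated load.

    Toy least-loaded dispatcher; not Informatica's GRID algorithm.
    """
    # Heap of (current_load, node_name); ties are broken by node name.
    heap = [(0, node) for node in nodes]
    heapq.heapify(heap)
    assignment = {}
    for job, cost in jobs:
        load, node = heapq.heappop(heap)  # least-loaded node right now
        assignment[job] = node
        heapq.heappush(heap, (load + cost, node))
    return assignment


# Hypothetical mappings with rough relative costs, spread over three nodes.
print(dispatch([("m1", 5), ("m2", 3), ("m3", 2), ("m4", 1)], ["node1", "node2", "node3"]))
```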

Informatica PowerCenter, schematically

In the early stages, the data supply chain regularly ran into problems, some of them caused by how unstable Informatica was at the time. I am going to share some memorable moments of this saga: mastering Informatica 10.

Former Informatica logo

Our areas of responsibility also include other Informatica environments, which have their own specifics due to a different workload, but for now I will recall how Informatica evolved specifically as the ETL component of the data warehouse.

How it all happened

In 2016, when we took over responsibility for Informatica, it had already reached version 10.0, and for the optimistic colleagues who decided to use a product with a .0 minor version in a serious solution, everything seemed obvious: use the new version! Hardware resources were fine at the time.

From spring 2016, a contractor had been responsible for running Informatica, and according to the few users of the system, “it worked a couple of times a week”. It should be noted that the warehouse was de facto at the PoC stage, the team had no administrators, and the system kept crashing for various reasons, after which the contractor's engineer brought it back up.

In the fall, three administrators joined the team; they divided the areas of responsibility among themselves and began to establish normal operation of the project's systems, including Informatica. It must be said separately that this product is not widespread and has no large community where you can find the answer to any question and solve any problem. Therefore, it was very important to have full-fledged technical support from Informatica's Russian partner, with whose help all of our mistakes and the bugs of the then-young Informatica 10 were fixed.

The first thing we had to do for the developers on our team and on the contractor's side was to stabilize Informatica itself and get the web administration console (Informatica Administrator) working.

This is how Informatica often greeted developers

Leaving aside the investigation itself, the main cause of the crashes was the interaction between the Informatica software and the repository database, which sat on a server relatively remote in terms of the network landscape. This led to delays and disrupted the mechanisms that track the state of the Informatica domain. After some database tuning, changes to Informatica parameters that made it more tolerant of database delays, an upgrade to Informatica 10.1, and moving the database from the old server to one closer to Informatica, the problem went away; we no longer observe it.
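The article does not say how the network distance to the repository database was measured. One simple way to quantify it, sketched here under assumptions, is to time the TCP handshake to the database listener from the Informatica host. The host name in the usage note is hypothetical; 1521 is the default Oracle listener port, which may differ in your setup. This measures connection setup only, not query latency.

```python
import socket
import time


def tcp_connect_latency_ms(host: str, port: int, attempts: int = 5, timeout: float = 2.0) -> list:
    """Time TCP connects to host:port and return the samples in milliseconds."""
    samples = []
    for _ in range(attempts):
        start = time.perf_counter()
        # create_connection does name resolution and the TCP handshake only;
        # it does not log in to the database.
        with socket.create_connection((host, port), timeout=timeout):
            pass
        samples.append((time.perf_counter() - start) * 1000.0)
    return samples
```

For example, `tcp_connect_latency_ms("repo-db.example.local", 1521)` run before and after moving the database would show whether the new placement actually shortened the network path.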

One of our attempts to get Informatica Monitor working

The situation with the administration console was also critical. Since active development was happening right on a quasi-production environment, colleagues constantly needed to analyze how mappings and workflows were running “on the fly”. In the new Informatica, the Data Integration Service has no separate tool for such monitoring, but a monitoring section appeared in the web administration console (Informatica Administrator Monitor), where you can track applications, workflows and mappings, runs, and logs. Periodically, the console became completely unavailable, or information about current processes in DIS stopped updating, or errors occurred when loading pages.

Tuning Java parameters to stabilize operation

We attacked the problem from many sides: experimented with parameters, collected logs, sent jstack dumps to support, googled actively at the same time, and simply kept monitoring.

First of all, a separate MRS was created for monitoring: as it turned out, monitoring is one of the main resource consumers in our environments, since mappings are launched very intensively. The Java heap parameters and a number of others were changed.

As a result, by the next Informatica update, 10.1.1, the console and monitor had stabilized, the developers began to work more efficiently, and routine processes ran more and more regularly.

The experience of interaction between development and administration may be of interest. A shared understanding of how everything works, what can be done and what cannot, is always important when using complex systems. Therefore, we can safely recommend first teaching the administrators how to manage the software and the developers how to write code and design processes in the system, and only then putting both to work on the result. This really matters when time is not an unlimited resource. Many problems can be solved even by trial and error, but some require prior knowledge, and our case confirms this axiom.

For example, after we turned on versioning in MRS (as it turned out, a different version of SVN was needed), we were alarmed to find some time later that system restart time had grown to several tens of minutes. Once we traced the cause of the slow start and turned versioning off, everything was fine again.

Among the notable obstacles with Informatica, we can recall the epic battle with the growing number of Java threads. At some point it was time to replicate, that is, to extend the established processes to a large number of source systems. It turned out that not all processes in 10.1.1 worked well, and after a while DIS became inoperable. Tens of thousands of threads were found, and their number grew especially noticeably during application deployment. Sometimes we had to restart several times a day to restore functionality.
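When thread counts explode like this, a quick way to see which thread family is growing is to summarize a thread dump taken with the JDK's `jstack <pid>` command. The sketch below is a hypothetical helper, not an Informatica tool. It relies on the usual jstack layout, where each thread entry starts with the quoted thread name and Java threads have a `java.lang.Thread.State:` line. Numbers in thread names are collapsed so that, for example, `pool-3-thread-17` and `pool-3-thread-18` count as one family.

```python
import re
from collections import Counter

# A thread header in jstack output starts with the quoted thread name, e.g.
# "pool-3-thread-17" #40 prio=5 os_prio=0 tid=0x... nid=0x... waiting on condition
HEADER = re.compile(r'^"(?P<name>[^"]*)"')
# Java threads are followed by a line such as "   java.lang.Thread.State: WAITING (parking)"
STATE = re.compile(r"^\s+java\.lang\.Thread\.State: (?P<state>[A-Z_]+)")


def summarize_jstack(dump: str):
    """Return (total threads, Counter by name family, Counter by thread state)."""
    families, states = Counter(), Counter()
    total = 0
    for line in dump.splitlines():
        header = HEADER.match(line)
        if header:
            total += 1
            # Collapse digits so numbered threads group into one family.
            families[re.sub(r"\d+", "#", header.group("name"))] += 1
            continue
        state = STATE.match(line)
        if state:
            states[state.group("state")] += 1
    return total, families, states
```

Comparing `families.most_common(5)` across dumps taken a few minutes apart (for instance, before and after an application deployment) points at the pool that keeps growing.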

Here we must thank support: the problems were localized relatively quickly and fixed with EBFs (Emergency Bug Fixes), after which everyone felt that the tool really works.

It still works!

By the time we moved into target mode, Informatica looked like this: Informatica 10.1.1HF1 (HF1 is HotFix1, a vendor build bundling a set of EBFs) with additional EBFs that fix our scaling problems and some others, running on one of the three GRID servers: 20 x86_64 cores and storage on a large, slow array of local disks, that is, the server configuration used for the Hadoop cluster. Another server of the same kind hosts the Oracle DBMS used by the Informatica domain and the ETL control mechanism. All of this is monitored with the team's standard tools (Zabbix + Grafana) from two sides: Informatica itself with its services, and the loading processes running in it. Performance and stability, external factors aside, now depend on the settings that limit the load.

GRID deserves a separate word. The environment was built on three nodes, with the possibility of load balancing. However, testing showed that because of problems in the interaction between running instances of our applications, this configuration did not work as expected, so we temporarily abandoned this scheme and removed two of the three nodes from the domain. The scheme itself stayed the same, though: it is still a GRID service, just degenerated to a single node.

One difficulty remains: performance drops during the regular cleanup of the monitoring schema. When cleanup runs while processes are active in DIS, the ETL control mechanism can fail. For now this is solved with a “crutch”: manual cleanup of the monitoring schema, losing all of its previous data. This is not too critical for the product in normal day-to-day operation, but we are still looking for a proper solution.
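The article leaves the proper solution open. One generic technique for making a large purge less disruptive is to delete old rows in small, separately committed batches, so that locks are held briefly and concurrent work can proceed between batches. The sketch below is only that generic idea: SQLite stands in for the Oracle repository, the `monitor_runs` table and `finished_at` column are hypothetical, and whether this would help with Informatica's own monitoring schema is an untested assumption.

```python
import sqlite3


def purge_in_batches(conn: sqlite3.Connection, cutoff: str, batch_size: int = 1000) -> int:
    """Delete rows older than cutoff in small committed batches; return rows deleted.

    Table and column names (monitor_runs, finished_at) are hypothetical.
    """
    total = 0
    while True:
        cur = conn.execute(
            "DELETE FROM monitor_runs WHERE rowid IN ("
            " SELECT rowid FROM monitor_runs WHERE finished_at < ? LIMIT ?)",
            (cutoff, batch_size),
        )
        conn.commit()  # end the transaction so locks are released between batches
        total += cur.rowcount
        if cur.rowcount < batch_size:  # last, partial (or empty) batch
            return total
```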

Another problem follows from the same situation: sometimes our control mechanism is launched several times at once.

Multiple application launches that break the mechanism

When it is launched on schedule at times of high system load, such situations sometimes break the mechanism. So far the problem is fixed manually; we are looking for a permanent solution.
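The article does not say how duplicate launches could be prevented. A common generic technique is a single-instance guard: each launch first tries to create a lock file atomically and exits if it already exists. The sketch below assumes launches happen on one host through a wrapper you control; the class name and lock path are hypothetical. A crashed run leaves a stale lock that must be cleaned up, and if launches can come from several hosts, a shared lock (for example, a row lock in the repository database) would be needed instead.

```python
import os


class SingleInstanceLock:
    """Refuse to start a second copy of a job while its lock file exists.

    os.O_CREAT | os.O_EXCL makes creation fail atomically if the file exists.
    """

    def __init__(self, path: str):
        self.path = path  # hypothetical lock file location
        self.fd = None

    def acquire(self) -> bool:
        try:
            self.fd = os.open(self.path, os.O_CREAT | os.O_EXCL | os.O_WRONLY)
        except FileExistsError:
            return False  # another launch holds (or left behind) the lock
        os.write(self.fd, str(os.getpid()).encode())  # record owner for diagnostics
        return True

    def release(self) -> None:
        if self.fd is not None:
            os.close(self.fd)
            os.remove(self.path)
            self.fd = None
```

A scheduled wrapper would call `acquire()` before starting the control mechanism, skip the run if it returns `False`, and call `release()` in a `finally` block.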

In general, under a heavy load it is very important to provide adequate resources, both the hardware for Informatica itself and for its repository database, and to ensure optimal settings for both. It also remains an open question which database placement is better: on a separate host or on the same host as the Informatica software. On the one hand, a single server is cheaper and almost eliminates possible network interaction problems; on the other hand, the database load on the host adds to the load from Informatica.

As with any serious product, Informatica has its funny moments.

Once, while sorting out an incident, I noticed that event times in the MRS logs looked strange.

Time dualism in MRS logs, “by design”

It turned out that timestamps are written in 12-hour format without AM/PM, that is, without indicating whether they are before or after noon. We even opened a ticket about it and received an official response: this is intended, MRS logs are written in this format. So there is sometimes a bit of intrigue about when exactly an ERROR occurred...
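If you need absolute times from such logs, AM/PM can often be recovered from context. The sketch below works under explicit assumptions: log lines are in chronological order, consecutive lines are less than 12 hours apart, and you know the midnight or noon at which the first line's half-day begins. Only the `hh:mm:ss` part is modeled; the rest of the MRS log line format is not. Whenever the 12-hour clock value goes backwards, a new half-day has started.

```python
from datetime import datetime, timedelta


def restore_24h(times_12h, start: datetime):
    """Turn ordered 'hh:mm:ss' 12-hour stamps (no AM/PM) into full datetimes.

    Assumes chronological order, gaps under 12 hours, and that `start` is the
    midnight or noon beginning the half-day of the first stamp.
    """
    half_day = 12 * 3600
    result = []
    half_index = 0
    prev = None
    for stamp in times_12h:
        hh, mm, ss = (int(p) for p in stamp.split(":"))
        # "12" (or "00") is the first hour of a half-day, so map it to 0.
        sec_in_half = (hh % 12) * 3600 + mm * 60 + ss
        if prev is not None and sec_in_half < prev:
            half_index += 1  # the clock wrapped: AM -> PM, or PM -> next day's AM
        prev = sec_in_half
        result.append(start + timedelta(seconds=half_index * half_day + sec_in_half))
    return result
```

For example, starting from midnight, the stamps `11:58:00`, `12:01:30`, `01:15:00` are restored as 11:58, 12:01:30, and 13:15 on the same day.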

Striving for the best

Today Informatica is a fairly stable tool, convenient for administrators and users, and extremely powerful in both current capabilities and potential. Functionally it exceeds our needs many times over, and in our project it is de facto used in a rather atypical way. The difficulties are partly related to how our mechanisms work: within a short period, a large number of threads are started that intensively update parameter sets and work with the repository database, while the server's hardware resources, CPU above all, are utilized almost completely.

We are now close to moving to Informatica 10.2.1 or 10.2.2, in which some internal mechanisms have been reworked, and support promises that a number of our current performance and functional problems will be gone. On the hardware side, too, we expect servers in the configuration that is optimal for us, with headroom for the near future given the growth and development of the warehouse.

Of course, there will be testing, including compatibility testing, and possibly architectural changes in the HA/GRID part. Development on Informatica will continue, since we cannot replace the system in the short term.

And those who remain responsible for this system will be able to bring it up to the reliability and performance targets set by the customers.

The article was prepared by the data management team of Rostelecom.

Current Informatica logo

Source: https://habr.com/ru/post/454126/
