Smart parser of the number written in words

Prologue

Good afternoon, dear readers. In this article, I will talk about how to parse a number written in Russian.

Smart this parser makes the ability to extract numbers from text with errors made as a result of incorrect input or as a result of optical text recognition from the image (OCR).

For the lazy:

Link to github project: link .

From algorithm to result

In this section, the algorithms used will be described. Careful, many letters!

Formulation of the problem

At work, I need to recognize the text from a printed document, photographed by the camera of a smartphone / tablet. Because of the non-disclosure agreement, I cannot give an example of a photograph, but the point is that there is a table in the document in which certain indicators are written in numbers and words, and these data must be read. Text parsing in words is required as an additional validation tool to ensure that the number is recognized correctly. But, as you know, OCR does not guarantee accurate text recognition. For example, the number twenty, written in words, can be recognized as “twenty” or even as “two-fold”. It is necessary to take this into account and extract the maximum amount of information, estimating the magnitude of the possible error.

Note. For text recognition, I use tesseract 4. For .NET there is no ready-made NuGet package of the fourth version, so I created one from a branch of the main project, who could be useful: Genesis.Tesseract4 .

Basic number parsing algorithm

Let's start with a simple one, namely with the text recognition algorithm written in words, so far without errors. If you are interested in smart parsing, you can skip this section.

I am not particularly strong in Google, so I did not immediately find a ready-made algorithm for solving this problem. However, this is even better, because the algorithm, invented by himself, gives more room for coding. And the task itself turned out to be interesting.

So, take a small number, for example, "one hundred twenty three." It consists of three words ( tokens ), each of which corresponds to a number, all these numbers are summed up:

" " = + + = 100 + 20 + 3 = 123So far, everything is simple, but let's dig deeper, for example, consider the number "two hundred and twelve thousand one hundred and five."

" " = ( + ) × + ( + ) = 212 * 1.000 + 105 = 212.105.As you can see, when there are thousands in the number (as well as millions and other degrees of thousands), the number is divided into parts consisting of a local small number, in the example above - 212, and a multiplier (1000). There may be several such fragments, but they all go in descending order of a multiplier, for example, a thousand cannot be followed by a million or another thousand. This is also true for parts of a small number, so hundreds cannot follow hundreds, and tens after tens, therefore the one hundred five hundred record is incorrect. The characteristic relating two tokens to one type is called a level , for example, one hundred and three hundred tokens have one level, and it is more than the fifty level token.

From these arguments, the idea of the algorithm is born. Let's write out all the possible tokens ( samples ), each of which will be assigned a number, as well as two parameters - the level and the sign of the multiplier.

| Token | Number | Level | Factor? |

|---|---|---|---|

| zero | 0 | one | not |

| one / one | one | one | not |

| two / two | 2 | one | not |

| ... | ... | one | not |

| nineteen | nineteen | one | not |

| twenty | 20 | 2 | not |

| ... | ... | 2 | not |

| ninety | 90 | 2 | not |

| hundred | 100 | 3 | not |

| ... | ... | 3 | not |

| nine hundreds | 900 | 3 | not |

| thousand / thousand / thousand | 1,000 | four | Yes |

| million / million / million | 1,000,000 | five | Yes |

| ... | ... | ... | Yes |

| quadrillion / quadrillion / quadrillion | 1,000,000,000,000,000 | eight | Yes |

In fact, you can add any other tokens to this table, including for foreign languages, just do not forget that in some countries a long rather than a short system for naming numbers is used .

Now let's move on to parsing. Let's get four values:

- Global level (globalLevel). Indicates which level was at the last multiplier. Initially not defined and necessary for control. If we encounter a token-factor that has a level greater than or equal to the global one, then this is a mistake.

- Global value (globalValue). Total adder, where the result of multiplying the local number and the multiplier is added.

- Local level (localLevel). Indicates what level the last token was. Initially undefined, works similarly to the global level, but is reset after detecting a multiplier.

- Local value (localValue). Non-multiplier tokens, i.e. numbers up to 999.

The algorithm is as follows:

- We divide the string into tokens using the regular "\ s +".

- We take another token, we get information about it from the sample.

- If this is a multiplier:

- If the global level is set, then we make sure that it is greater than or equal to the token level. If not - this is an error, the number is incorrect.

- Set the global level to the current token level.

- Multiply the value of the token by the local value and add the result to the global value.

- Clear local value and level.

- If it is not a multiplier:

- If the local level is set, then we make sure that it is greater than or equal to the token level. If not - this is an error, the number is incorrect.

- Set the local level to the current token level.

- We add to the local value the value of the token.

- We return the result as the sum of global and local values.

Example of work for the number “two million two hundred twelve thousand one hundred eighty five”.

| Token | globalLevel | globalValue | localLevel | localValue |

|---|---|---|---|---|

| - | - | - | - | |

| two | - | - | one | 2 |

| million | five | 2,000,000 | - | - |

| two hundred | five | 2,000,000 | 3 | 200 |

| twelve | five | 2,000,000 | one | 212 |

| thousand | four | 2.212.000 | - | - |

| hundred | four | 2.212.000 | 3 | 100 |

| eighty | four | 2.212.000 | 2 | 180 |

| five | four | 2.212.000 | one | 185 |

The result will be 2.212.185.

Smart parsing

This algorithm can be used to implement other comparisons, and not just for parsing numbers, for this reason I will try to describe it in as much detail as possible.

With parsing correctly recorded numbers sorted out. Now let's think about what errors can occur if the number of the resulting OCR is incorrect. I do not consider other options, but you can modify the algorithm for a specific task.

I identified three types of errors encountered in the process:

- Replacing characters with others with similar outlines. For example, the letter "u" for some reason is replaced by "p", and "n" by "i" and vice versa. When using the third version of tesseract, it is possible to replace the letter “o” with a zero. These errors, offhand, are the most common, and require tuning for a specific recognition library. So, the principles of operation of tesseract versions 3 and 4 have cardinal differences, therefore the errors will be different there.

- Merge tokens. Words can merge together (the reverse has not yet met). In combination with the first error, it spawns demonic phrases like “dvupatipodin.” Let's try to irritate such monsters.

- Noise - left characters and phrases in the text. Unfortunately, there is little that can be done at the moment, but the prospect is when collecting enough weighty statistics.

At the same time, the parsing algorithm itself described above almost does not change, the main difference is in splitting a string into tokens.

But let's start with collecting small statistics on the use of letters in tokens. Of the 33 letters of the Russian language, when writing non-negative integers, only 20 are used, let's call them good letters :

The remaining 13, respectively, will be called bad letters . The maximum size of the token is 12 characters (13 when counting up to quadrillion). Substrings longer than this value must be split.

To match strings and tokens, I decided to use the Wagner-Fisher algorithm , although I called it the name of Levenshteyn in code. I do not need the pre-prescription, so I implemented the economical version of the algorithm. I must admit that the implementation of this algorithm turned out to be more difficult than the parser itself.

A small educational program: Levenshtein distance is a special case of the Wagner-Fisher algorithm, when the cost of inserting, deleting and replacing characters is static. In our problem it is not. Obviously, if in the substring we meet a bad letter, then it must be replaced with a good one, but it is extremely undesirable to replace the good with the bad. Generally speaking, it is impossible, but the situation depends on the specific task.

To describe the cost of inserting, deleting and replacing characters, I created the following table: a link to a table with weights . While it is filled with the method of three P (floor, finger, ceiling), but if you fill it with data based on OCR statistics, you can significantly improve the quality of number recognition. The library code contains the resource file NumeralLevenshteinData.txt, into which you can insert data from a similar table using Ctrl + A, Ctrl + C and Ctrl + V.

If the text contains a non-tabular symbol, for example, zero, then the cost of inserting it equals the maximum value from the table, and the cost of deletion and replacement - to the minimum, so the algorithm is more likely to replace zero with the letter “o”, and if you use the third version of tesseract it makes sense to add a zero to the table and set the minimum price for replacing it with the letter “o”.

So, we have prepared the data for the Wagner-Fisher algorithm; let's make changes to the algorithm for splitting a string into tokens. To do this, we will subject each token to additional analysis, but before that we expand the information on the token with the following characteristics:

- Error level A real number from 0 (no error) to 1 (the token is incorrect), meaning how well the token was matched to the sample.

- Sign of using a token . When parsing a line with interspersing garbage, some tokens will be discarded, for them this feature will not be displayed. In this case, the total error value will be calculated as the arithmetic average of the errors of the used tokens.

Algorithm for analyzing tokens:

- We are trying to find the token in the table as it is. If we find everything is fine, we return it.

- If not, we make a list of possible options:

We are trying to match the token with the sample using the Wagner-Fisher algorithm. This variant consists of a single token (associated sample) and its error is equal to the best distance divided by the sample length.

Example: the “zero” token is matched with the “zero” sample, while the distance is equal to 0.5, since the cost of replacing a bad letter “y” with a good “o” is 0.5. The total error for this token will be 0.5 / 4 = 0.125.If the substring is large enough (I have 6 characters), try to divide it into two parts with at least 3 characters each. For a string of 6 characters will be the only option of division: 3 + 3 characters. For a string of 7 characters, there are already two options, 3 + 4 and 4 + 3, and so on. For each of the options, we recursively call the same function of the analysis of tokens, put the obtained variants into the list.

In order not to die in recursion, we determine the maximum level of falling through. In addition, the options obtained as a result of the division artificially worsen by a certain amount (optional, default 0.1), so that the direct comparison option is more valuable. This operation had to be added, because substrings of type “two-fold” were successfully divided into tokens “two” and “five”, and not resulted in “twenty”. Alas, these are the features of the Russian language.

Example: the “two-way” token has a direct comparison with the sample “twenty”, an error is 0.25. In addition, the best division is “two” + “five” costing 0.25 (replacing “a” with “I”), artificially deteriorated to 0.35, with the result that the token “twenty” is preferred.

- After compiling all the options, choose the best by the minimum amount of errors of the tokens participating in it. The result is returned.

In addition, a check of tokens is entered into the main number generation algorithm so that their error does not exceed a certain value (optional, default 0.67). With this, we screen out potential garbage, although not very successfully.

Algorithm in a nutshell for those who were too lazy to read the text above

We divide the incoming string representing the number in words into substrings using the regular \ s +, then we try to match each of the substrings with sample tokens or break them into smaller substrings, choosing the best results. As a result, we obtain a set of tokens by which we generate a number, and the error value is taken as the arithmetic average of the errors among the tokens used during the generation.

Sharpening the algorithm for a specific task

In my task, the numbers are non-negative and relatively small, so I will exclude unnecessary tokens from the "million" and above. For the test, dear readers, I, on the contrary, added additional tokens-slang, which allowed parsing lines like “five pieces”, “mower two hundred” and even “three stolnik and two gold pieces”. It's funny, but it didn't even require changes in the algorithm.

Further improvement

The existing algorithm has some flaws:

- Control of cases The lines “two thousand” and “two thousand” will be recognized as 2000 with zero error. In my task, case control is not required, it is even harmful, but if you need such a function, this is solved by introducing an additional flag to the token responsible for the case of the next token .

- Negative numbers. Introduced an additional token "minus" with special processing. Nothing complicated, but do not forget that the letter “y” is bad and does not occur in numerals, you will need to change its weight characteristics or hope that it will not change in the OCR process.

- Fractional numbers. It is solved by replacing the long type with double and introducing “tenths,” “hundredths”, etc. tokens ... Don't forget to revise the weights of letters.

- Recognition of numbers entered by users. Because When typing text manually, we most often make mistakes related to perevodovka ciBolov, this operation should be added to the Wagner-Fisher algorithm.

- Support for other languages. We introduce new tokens, expand the weights table.

- Handling trash. In some documents there is a seal on the data, the quality of the image can be bad, the cell can be tritely empty. In this case, the line gets garbage that you need to somehow clean. The best I can offer at the moment is to pre-process the document before OCR. I was greatly helped by removing the lines of the table and filling them with a color close to the color of the free space of the cell. This did not solve all the problems, but improved the quality of text recognition from documents where the table had curvatures due to the bruise of the document or the crooked photographer. Ideally, you should turn the cell itself and recognize it separately, if you, of course, have a table at all.

So what is the result?

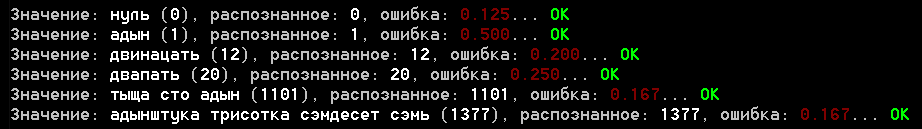

The project has an example of a console application running through the samples.txt file with examples for the parser. Here is a screenshot of the results:

')

I charge you with evaluating the result, but as for me, it is not bad. The magnitude of the error for real recognition examples does not exceed 0.25, although I have not yet chased away the entire set of available documents, probably not everything will be so smooth there.

As for the last section, I was always wondering how much this is “dofiga”. Also, the program gave itself quite an adequate answer, how many should I take for the road (I do not use, but still) and even accurately determined the meaning of the old Russian word “darkness”. And yes, one more measure was not included in the conclusion, which education did not allow to add, but the program considers that it is equal to a thousand =)

A few words about the library

Initially, my plans did not include the creation of a library, I decided to issue it exclusively for Habr. I tried to put the code in order, but if you use it, fork or copy, because most likely you will not need jargon and other tokens included in the examples.

The library itself is written under .NET Standart 2.0 and C # 7.x, and the algorithms are easily translated into other languages.

In case of a possible library extension, I will add the composition of the important components of the number parser in words (namespace Genesis.CV.NumberUtils):

- RussianNumber.cs - directly parser

- RussianNumber.Data.cs - file with description of tokens

- RussianNumber.ToString.cs - convert text to text in words

- RussianNumberParserOptions.cs - parser options

- NumeralLevenshtein.cs - implementation of the Wagner-Fisher algorithm

- NumeralLevenshteinData.txt - resource, data of weights of letters

Using:

- RussianNumber.ToString (value) - convert numbers to text

- RussianNumber.Parse (value, [options]) - convert text to number

Conclusion

I really hope that the article did not seem boring to you, despite the abundance of text. Recently, I have a lot of topics related to computer vision, which have something to say, so I would like to know the opinion about this format of articles. What should I add or, on the contrary, delete? What is more interesting for you, the readers, the algorithms themselves or code fragments?

Like this article? See others:

Source: https://habr.com/ru/post/453642/

All Articles