Introduction to machine learning

The full course in Russian can be found at this link.

The original English course is available here.

New lectures are scheduled for release every 2-3 days.

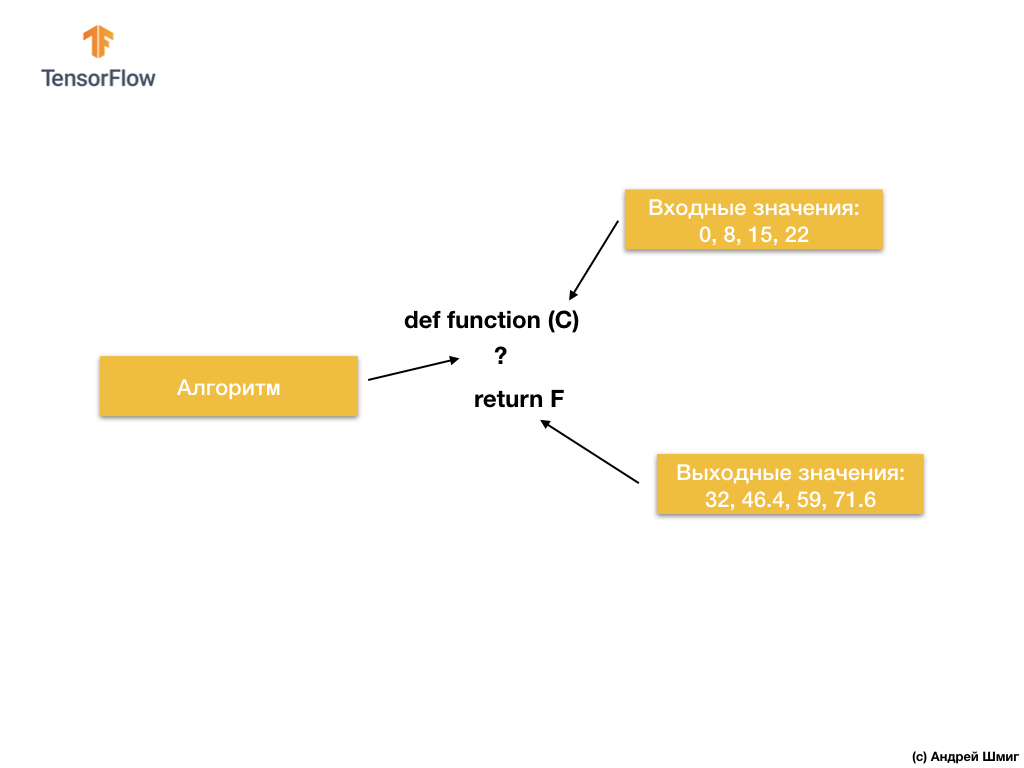

So let's start with the following task - input and output values are given.

When you have a value of 0 as an input value, then you have 32 as an output value. When you have 8 as an input value, 46.4 as an output value. When you have 15 as an input value, then 59 as an output value, and so on.

Take a closer look at these values and let me ask you a question. Can you determine what the output value will be if we get 38 at the input?

If you answered 100.4, then you were right!

So how could we solve this problem? If you take a closer look at the values, you can see that they are related by the expression:

Where C - degrees Celsius (input values), F - Fahrenheit (output values).

What your brain has now done — juxtaposed input values and output values and found a common model (link, dependency) between them — this is exactly what machine learning does.

For input and output values, machine learning algorithms will find a suitable algorithm for converting input values into output values. This can be represented as follows:

Let's take an example. Imagine that we want to develop a program that will convert degrees Celsius to degrees Fahrenheit using the formula

The solution, when approaching from the point of view of traditional software development, can be implemented in any programming language using the function:

So what do we have? The function takes the input value C, then calculates the output value F using an explicitly specified algorithm, and then returns the calculated value.

On the other hand, in the machine learning approach, we only have input and output values, but not the algorithm itself:

The machine learning approach is based on using neural networks to find the relationship between input and output values.

You can think of neural networks as a stack of layers, each of which consists of previously known mathematics (formulas) and internal variables. The input value enters the neural network and passes through a stack of neuron layers. During passage through the layers, the input value is converted according to the mathematics (given formulas) and the values of the internal variable layers, producing the output value.

In order for the neural network to be able to learn and determine the correct relationship between the input and output values, we need to train it — train it.

We train the neural network through repeated attempts to match the input values of the output.

In the process of training, there is a “fit” (selection) of the values of internal variables in the layers of the neural network until the network learns to generate the corresponding output values to the corresponding input values.

As we will see later, in order to train a neural network and allow it to choose the most appropriate values of internal variables, thousands or tens of thousands of iterations (trainings) are performed.

As a simplified version of understanding machine learning, you can imagine machine learning algorithms as functions that match the values of internal variables so that the correct input values correspond to the input values.

There are many types of neural network architectures. However, regardless of which architecture you choose, the mathematics inside (which calculations are performed and in which order) will remain unchanged during the workout. Instead of changing mathematics, internal variables (weights and displacements) change during training.

For example, in the task of converting from degrees Celsius to Fahrenheit, the model starts by multiplying the input value by a certain number (weight) and adding another value (offset). The training of the model consists in finding the appropriate values for these variables, without changing the performed multiplication and addition operations.

But one cool thing that is worth thinking about! If you have solved the task of converting degrees Celsius to Fahrenheit, which is indicated in the video and in the text below, you probably decided it because you had some previous experience or knowledge of how to perform this kind of conversion from degrees Celsius to Fahrenheit. For example, you might just know that 0 degrees Celsius corresponds to 32 degrees Fahrenheit. On the other hand, systems based on machine learning do not have previous auxiliary knowledge to solve the problem. They learn to solve problems of this kind not based on previous knowledge and in their absence.

Enough talk - go to the practical part of the lecture!

The loss function (mean squared error) and the optimizer (Adam) used in this example are standard for simple models like this one, but many others are available. At this stage, we do not need to know how these functions work internally.

What you should pay attention to is the optimizer and its parameter, the learning rate (0.1 in this example).

The model is trained by calling the fit method.

During training, the model takes the input values in degrees Celsius, transforms them using its internal variables (called "weights"), and returns values that are meant to correspond to degrees Fahrenheit. Since the initial weights are arbitrary, the first results will be far from the correct values. The difference between the desired result and the actual one is calculated by the loss function, and the optimizer determines how the weights should be adjusted.

This cycle of calculating, comparing and adjusting is controlled by the fit method.

In the following videos, we will dive into the details of how all this works and how exactly fully connected (Dense) layers work under the hood.

For visualization we will use

We now have a model that was trained on the input values

For example, what is 100.0 degrees Celsius in Fahrenheit? Try to guess before running the code below.

Conclusion:

The correct answer is 100 × 1.8 + 32 = 212, so our model did quite well!

Review

Our model adjusted the values of internal variables (weights) in the

Let's display the values of the internal variables of the

Conclusion:

The first variable is close to 1.8 and the second to 32. These values (1.8 and 32) are exactly the constants in the formula for converting degrees Celsius to Fahrenheit.

This is really very close to the actual values in the formula! We will look at this point in more detail in the following videos, where we will show how the

Since the representations are the same, the values of the internal variables of the model should have converged to those presented in the actual formula, which happened as a result.
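One way to see why the learned values land near 1.8 and 32 rather than exactly on them is to fit a straight line to the training pairs directly. The sketch below uses the ordinary least-squares formulas in plain Python instead of a neural network. The training values are the ones from the CoLab (note that 46, 72 and 100 are rounded), and the variable names are illustrative.

```python
celsius = [-40.0, -10.0, 0.0, 8.0, 15.0, 22.0, 38.0]
fahrenheit = [-40.0, 14.0, 32.0, 46.0, 59.0, 72.0, 100.0]

n = len(celsius)
mean_c = sum(celsius) / n
mean_f = sum(fahrenheit) / n

# Ordinary least squares for a line f = weight * c + bias.
covariance = sum((c - mean_c) * (f - mean_f) for c, f in zip(celsius, fahrenheit))
variance = sum((c - mean_c) ** 2 for c in celsius)
weight = covariance / variance
bias = mean_f - weight * mean_c

print(weight, bias)           # roughly 1.798 and 31.95
print(weight * 100.0 + bias)  # roughly 211.7
```

Because some of the training labels are rounded, even the best straight line through this data is only approximately the conversion formula.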

With additional neurons, additional input values and output values, the formula becomes a bit more complicated, but the essence remains the same.

For fun! What happens if we create more

Conclusion:

As you may have noticed, this model is also able to predict the corresponding Fahrenheit values quite well. However, if you look at the internal variables (weights) of the neurons layer by layer, you will not see any values resembling 1.8 and 32. The added complexity of the model hides the “simple” form of the Celsius to Fahrenheit conversion.
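A tiny plain-Python sketch of why this happens, under the simplifying assumption that each layer has a single neuron and no activation function: two stacked layers still compute a straight line, but 1.8 and 32 only appear once the layers are combined. The particular weights below are invented for the illustration.

```python
def layer(x, weight, bias):
    """One single-neuron layer: multiply, then add."""
    return x * weight + bias


# Hypothetical values a two-layer model might settle on.
w1, b1 = 0.5, 4.0
w2, b2 = 3.6, 17.6


def two_layer_model(c):
    """Feed the output of the first layer into the second one."""
    return layer(layer(c, w1, b1), w2, b2)


# Combining the layers algebraically recovers the familiar constants.
effective_weight = w2 * w1
effective_bias = w2 * b1 + b2

print(effective_weight, effective_bias)  # close to 1.8 and 32
print(two_layer_model(100.0))            # close to 212
```

None of the four stored numbers equals 1.8 or 32, yet the model as a whole behaves like the conversion formula.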

Stay tuned: in the next part we will look at how Dense layers work “under the hood”.

Congratulations! You just trained your first model. In practice, we saw how the model learned from the input and output values to multiply the input value by 1.8 and add 32 to it to get the correct result.

This is really impressive considering how few lines of code we needed to write:

The above example is a general template for all machine learning programs. You will use similar constructs to create and train neural networks and to solve the problems that follow.

The training process (occurring in the method

In principle, you do not need to understand these details to do machine learning. But for those who are interested in learning more: gradient descent iteratively changes the parameter values a little at a time, “nudging” them in the right direction until the best results are obtained. Here, the “best results” (best values) mean that any further change to a parameter would only make the model's result worse. The function that measures how good or bad the model is at each iteration is called the “loss function”, and the goal of each “nudge” (adjustment of the internal values) is to reduce the value of the loss function.
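For the curious, the idea can be sketched in a few lines of plain Python. This is ordinary gradient descent on the mean squared error, not the Adam optimizer used in the CoLab, and the learning rate, step count and variable names are assumptions chosen for this illustration.

```python
celsius = [-40.0, -10.0, 0.0, 8.0, 15.0, 22.0, 38.0]
fahrenheit = [c * 1.8 + 32 for c in celsius]  # exact targets for the sketch

weight, bias = 0.0, 0.0   # arbitrary starting values
learning_rate = 0.001     # size of each small correction
n = len(celsius)

for step in range(20000):
    # Forward pass: prediction errors with the current internal variables.
    errors = [(weight * c + bias) - f for c, f in zip(celsius, fahrenheit)]
    # Gradients of the mean squared error with respect to weight and bias.
    grad_weight = 2 * sum(e * c for e, c in zip(errors, celsius)) / n
    grad_bias = 2 * sum(errors) / n
    # Nudge each variable against its gradient to reduce the loss.
    weight -= learning_rate * grad_weight
    bias -= learning_rate * grad_bias

print(round(weight, 3), round(bias, 3))  # close to 1.8 and 32
```

The learning rate is kept small on purpose: with these unscaled inputs a much larger step would overshoot and the loss would grow instead of shrinking.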

The training process begins with the forward pass (forward propagation), in which the input values are fed into the neural network, flow through the hidden neurons, and then reach the output neurons. The model applies its internal math to the input values and internal variables to predict an answer.

In our example, the input value is the temperature in degrees Celsius and the model predicted the corresponding value in degrees Fahrenheit.

As soon as the value is predicted, the difference between the predicted value and the correct value is calculated. This difference is called the “loss” and is a measure of how well the model performed. The loss value is calculated by the loss function, which we specified as one of the arguments when calling the compile method.

After the loss value is calculated, the internal variables (weights and biases) of all layers of the neural network are adjusted to minimize the loss, bringing the output value closer to the correct reference value.

This optimization process is called gradient descent . A specific optimization algorithm is used to calculate the new value for each internal variable when the method is called

For this course it is not necessary to understand the inner workings of the training process. However, if you are curious, you can find more information in the Google Crash Course (the author plans to publish a translation and the practical part of the whole course).

By this point you should already be familiar with the following terms:

In the previous section, we created a model that converts Celsius degrees to Fahrenheit degrees, using a simple neural network to find the relationship between Celsius degrees and Fahrenheit degrees.

Our network consists of a single fully connected layer. But what is a fully connected layer? To understand this, let's create a more complex neural network with 3 input parameters, one hidden layer with two neurons, and one output layer with a single neuron.

Recall that a neural network can be thought of as a set of layers, each consisting of nodes called neurons. The neurons of each layer can be connected to the neurons of the next layer. A layer in which every neuron of one layer is connected to every neuron of the next layer is called a fully connected, or dense, layer.

Thus, when we use fully connected layers in

To create the above neural network, the following expressions are enough for us:

So, we have figured out what neurons are and how they are interconnected. But how do fully connected layers actually work?

To understand what is actually happening there and what they are doing, we need to look under the hood and analyze the internal mathematics of neurons.

Imagine that our model takes as its input three parameters -

What you should definitely keep in mind is that the internal math of a neuron remains unchanged. In other words, only the weights and biases change during training.
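The fixed math of a fully connected layer can be sketched in plain Python: every neuron computes a weighted sum of all its inputs and adds its bias. The network shape follows the example above (3 inputs, 2 hidden neurons, 1 output neuron). The function name dense and all numeric values are invented for illustration, and no activation function is applied.

```python
def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per neuron."""
    return [
        sum(x * w for x, w in zip(inputs, neuron_weights)) + b
        for neuron_weights, b in zip(weights, biases)
    ]


x = [1.0, 2.0, 3.0]                  # three input values

hidden_weights = [[0.5, -1.0, 2.0],  # weights of hidden neuron 1
                  [1.0, 0.0, -0.5]]  # weights of hidden neuron 2
hidden_biases = [0.5, 1.0]

output_weights = [[2.0, -1.0]]       # weights of the single output neuron
output_biases = [0.25]

hidden = dense(x, hidden_weights, hidden_biases)
output = dense(hidden, output_weights, output_biases)

print(hidden)  # [5.0, 0.5]
print(output)  # [9.75]
```

Training would change only the numbers in the weight and bias lists; the dense function itself stays exactly as written.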

When you first start learning machine learning, it may seem strange that this really works, but that is exactly how machine learning works!

Let us now return to our example of converting degrees Celsius to degrees Fahrenheit.

With a single neuron, we have only one weight and one bias. And you know what? This is exactly what the formula for converting degrees Celsius to degrees Fahrenheit looks like. If we substitute a

If we return to the results of our model from the practical part, we see that the weight and the bias were “calibrated” so that they approximately match the values from the formula.

We deliberately built this practical example to show clearly how the weights and biases line up with the formula. When applying machine learning in practice, we can never compare the values of the variables with the target algorithm the way we did in the example above. Why not? Because we do not even know the target algorithm!

When solving machine learning problems, we test various neural network architectures with different numbers of neurons. By trial and error we find the most accurate architectures and models, and we hope that training will lead them to solve the task at hand. In the next practical part we will study concrete examples of this approach.

Stay tuned, because now the fun begins!

In this lesson, we learned basic approaches to machine learning and learned how fully connected layers (-

... and standard call-to-action - subscribe, put a plus and share :)

YouTube: https://youtube.com/channel/ashmig

Telegram: https://t.me/ashmig

VK: https://vk.com/ashmig

Interview with Sebastian Thrun, CEO of Udacity

- Hello again, this is Paige, and my guest today is Sebastian.

- Hi, I'm Sebastian!

- ... a man with an incredible career who has done a lot of amazing things! You are a co-founder of Udacity, you founded Google X, and you are a professor at Stanford. You have been doing incredible research in deep learning throughout your career. What has brought you the most satisfaction, and in which area did you find the work most rewarding?

- To be honest, I really love being in Silicon Valley! I like being close to people who are significantly smarter than me, and I have always viewed technology as a tool that changes the rules of the game in many areas, from education to logistics, healthcare and so on. All of this is changing so quickly, and there is an incredible desire to take part in these changes and to watch them happen. You look around and realize that most of what you see does not work as well as it should, so you can always invent something new!

- Well, that is a very optimistic view of technology! What was the biggest “Eureka” moment of your career?

- Oh, there were so many! I remember the day Larry Page called me and proposed building self-driving cars that could drive on every street in California. At that time I was considered an expert in the field, and I was the very person who said “no, this cannot be done”. Then Larry convinced me that, in principle, it could be done; one just has to start and try. And we did it! That was the moment I realized that even experts get it wrong, and that by saying “no” we are being 100% pessimistic. I think we should be more open to new things.

- Or, for example, Larry Page calls you and says, “Hey, do something cool like Google X”, and something pretty cool comes out of it!

- Yes, that's for sure, I can't complain! I mean, all of this is a process that goes through a lot of discussion on the way to implementation. I was really lucky to work at Google X and on other projects, and I am proud of it.

- Amazing! So, this course is all about working with TensorFlow. Do you have experience with TensorFlow, or have you at least heard of it?

- Yes! I absolutely love TensorFlow, of course! In my own lab we use it a lot; one of our most significant works based on TensorFlow was released about two years ago. We found that an iPhone or an Android phone can be more effective at detecting skin cancer than the best dermatologists in the world. We published our research in Nature, and it caused quite a stir in medicine.

- Sounds amazing! So you know and love TensorFlow, which is great in itself! Have you already worked with TensorFlow 2.0?

- No, unfortunately I haven't had the time yet.

- It will be just amazing! All students of this course will work with this version.

- I envy them! I will be sure to try it!

- Great! Many students in our course have never done any machine learning at all. For them the field may be completely new, and for some even programming itself may be new. What advice do you have for them?

- I would like them to stay open to new ideas, methods, solutions and positions. Machine learning is actually simpler than programming. When programming, you need to take every case in the source data into account and adapt the program's logic and rules to it. With TensorFlow and machine learning, in contrast, you essentially train the computer on examples and let the computer find the rules itself.

- This is incredibly interesting! I can't wait to tell the students of this course a bit more about machine learning! Sebastian, thank you for taking the time to join us today!

- Thank you! Stay in touch!

What is machine learning?

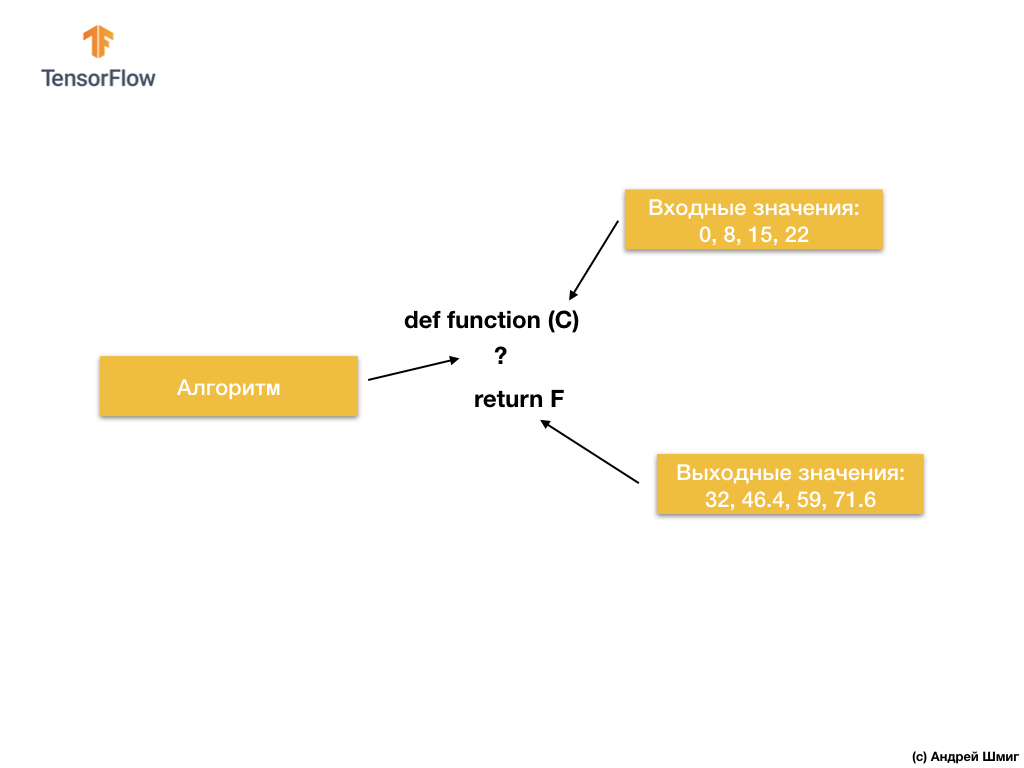

So let's start with the following task - input and output values are given.

When the input value is 0, the output value is 32. When the input is 8, the output is 46.4. When the input is 15, the output is 59, and so on.

Take a closer look at these values and let me ask you a question. Can you determine what the output value will be if we get 38 at the input?

If you answered 100.4, then you were right!

So how could we solve this problem? If you take a closer look at the values, you can see that they are related by the expression:

F = C * 1.8 + 32

where C is degrees Celsius (input values) and F is degrees Fahrenheit (output values).

What your brain just did, comparing the input and output values and finding a common pattern (a relationship, a dependency) between them, is exactly what machine learning does.

Given input and output values, machine learning algorithms find a suitable algorithm for converting the inputs into the outputs. This can be represented as follows:

Let's take an example. Imagine that we want to develop a program that converts degrees Celsius to degrees Fahrenheit using the formula

F = C * 1.8 + 32.

The solution, when approaching from the point of view of traditional software development, can be implemented in any programming language using the function:

So what do we have? The function takes the input value C, then calculates the output value F using an explicitly specified algorithm, and then returns the calculated value.
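As a minimal sketch of the traditional approach, the function described above could look like this in Python. The name celsius_to_fahrenheit is an illustrative choice, not something taken from the course materials.

```python
def celsius_to_fahrenheit(c):
    """Convert degrees Celsius to degrees Fahrenheit with an explicit rule."""
    f = c * 1.8 + 32  # the algorithm is written out by the programmer
    return f


print(celsius_to_fahrenheit(0))   # 32.0
print(celsius_to_fahrenheit(38))  # about 100.4
```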

On the other hand, in the machine learning approach, we only have input and output values, but not the algorithm itself:

The machine learning approach is based on using neural networks to find the relationship between input and output values.

You can think of a neural network as a stack of layers, each consisting of predefined math (formulas) and internal variables. The input value enters the neural network and passes through the stack of layers. As it passes through, the input value is transformed according to the math (the given formulas) and the values of each layer's internal variables, producing the output value.

For the neural network to learn the correct relationship between the input and output values, we need to train it.

We train the neural network through repeated attempts to map the input values to the output values.

During training, the values of the internal variables in the network's layers are “fitted” (tuned) until the network learns to produce the correct output value for each input value.

As we will see later, training a neural network so that it settles on the most appropriate values of its internal variables takes thousands or tens of thousands of training iterations.

As a simplified way of understanding machine learning, you can think of machine learning algorithms as functions that tune their internal variables so that the input values are mapped to the correct output values.

There are many types of neural network architectures. However, regardless of which architecture you choose, the math inside (which calculations are performed and in what order) remains unchanged during training. Instead of the math, it is the internal variables (weights and biases) that change during training.

For example, in the task of converting degrees Celsius to Fahrenheit, the model starts by multiplying the input value by some number (the weight) and adding another value (the bias). Training the model consists of finding appropriate values for these variables, without changing the multiplication and addition operations themselves.
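To make this concrete, here is a small sketch in plain Python (not TensorFlow) of a model whose math is fixed as multiplication followed by addition. The names predict, weight and bias (the offset) are illustrative assumptions; only the two numbers change, while the operation stays the same.

```python
def predict(c, weight, bias):
    """Fixed math of the model: multiply by a weight, then add a bias."""
    return c * weight + bias


# Before training, the internal variables hold arbitrary values.
untrained = predict(100.0, weight=0.5, bias=0.0)

# Training would move them toward the values from the conversion formula.
trained = predict(100.0, weight=1.8, bias=32.0)

print(untrained)  # 50.0
print(trained)    # 212.0
```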

But here is one cool thing worth thinking about! If you solved the Celsius to Fahrenheit conversion task shown in the video and in the text, you probably managed it because you had some previous experience or knowledge of how this kind of conversion is done. For example, you might simply know that 0 degrees Celsius corresponds to 32 degrees Fahrenheit. Systems based on machine learning, on the other hand, have no such prior knowledge to help them solve the problem. They learn to solve problems of this kind without it.

Enough talk, let's move on to the practical part of the lecture!

CoLab: convert degrees Celsius to degrees Fahrenheit

The Russian version of the CoLab source code and the English version of the CoLab source code .

Basics: training the first model

Welcome to CoLab, where we will train our first machine learning model!

We will try to keep the material simple and introduce only the basic concepts needed for the work. Subsequent CoLabs will contain more advanced techniques.

The task we will be solving is converting degrees Celsius to degrees Fahrenheit. The conversion formula is as follows:

F = C * 1.8 + 32

Of course, it would be easier to just write a conversion function in Python or any other programming language that performs the calculation directly, but then it would not be machine learning :)

Instead, we will feed TensorFlow the input values in degrees Celsius (0, 8, 15, 22, 38) and the corresponding values in degrees Fahrenheit (32, 46, 59, 72, 100). Then we will train the model so that it approximates the formula above.

Import Dependencies

First, we import TensorFlow. Here and below, we abbreviate it as tf. We also set the logging level so that only errors are shown.

Next, we import NumPy as np. NumPy helps us represent our data as high-performance arrays.

```python
from __future__ import absolute_import, division, print_function, unicode_literals

import logging

import tensorflow as tf
# Show only errors. tf.get_logger() is used here because tf.logging is not available in TensorFlow 2.x.
tf.get_logger().setLevel(logging.ERROR)

import numpy as np
```

Preparation of data for training

As we saw earlier, supervised machine learning is about finding an algorithm that transforms input data into output data. Since the task of this CoLab is to create a model that converts degrees Celsius to degrees Fahrenheit, we will create two lists, celsius_q and fahrenheit_a, which we use to train our model.

```python
celsius_q = np.array([-40, -10, 0, 8, 15, 22, 38], dtype=float)
fahrenheit_a = np.array([-40, 14, 32, 46, 59, 72, 100], dtype=float)

# Print each Celsius value next to its Fahrenheit counterpart
for i, c in enumerate(celsius_q):
    print("{} = {} ".format(c, fahrenheit_a[i]))
```

```
-40.0 = -40.0
-10.0 = 14.0
0.0 = 32.0
8.0 = 46.0
15.0 = 59.0
22.0 = 72.0
38.0 = 100.0
```

Some machine learning terminology:

- Feature: the input value(s) of our model. In this case, a single value, degrees Celsius.

- Labels: the output values that our model predicts. In this case, a single value, degrees Fahrenheit.

- Example: a pair of input/output values used for training. In this case, a pair of values from celsius_q and fahrenheit_a at the same index, for example (22, 72).
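The three terms can be illustrated with a short sketch. It uses plain Python lists instead of NumPy arrays to stay dependency-free, and the name examples is an illustrative choice rather than something defined in the CoLab.

```python
celsius_q = [-40.0, -10.0, 0.0, 8.0, 15.0, 22.0, 38.0]       # features
fahrenheit_a = [-40.0, 14.0, 32.0, 46.0, 59.0, 72.0, 100.0]  # labels

# Each example pairs one feature with the label at the same index.
examples = list(zip(celsius_q, fahrenheit_a))

print(len(examples))  # 7
print(examples[5])    # (22.0, 72.0)
```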

Create a model

Next, we create a model. We will use the simplest possible model, a fully connected (Dense) network. Since the task is rather trivial, the network will consist of a single layer with a single neuron.

We build a network

We will name the layer l0 (layer and zero) and create it by instantiating tf.keras.layers.Dense with the following parameters:

- input_shape=[1]: this parameter defines the shape of the input, a single value, that is, a one-dimensional array with one element. Since this is the first (and only) layer, the shape of its input is the shape of the input to the entire model. The single value is a floating-point number representing degrees Celsius.

- units=1: this parameter defines the number of neurons in the layer. The number of neurons determines how many internal variables the layer has to work with while learning to solve the problem. Since this is the last layer, its size is also the size of the model's output: a single floating-point number representing degrees Fahrenheit. (In a multilayer network, the size and shape of a layer's output must match the input_shape of the next layer.)
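The link between units, the input size and the number of internal variables can be sketched in plain Python. The helper dense_parameter_count is hypothetical and only mirrors the usual bookkeeping for a fully connected layer: one weight per input per neuron, plus one bias per neuron.

```python
def dense_parameter_count(inputs, units):
    """Number of trainable values in a fully connected layer."""
    weights = inputs * units  # every input is connected to every neuron
    biases = units            # each neuron has its own bias
    return weights + biases


print(dense_parameter_count(inputs=1, units=1))  # 2: one weight and one bias
```

For the single-neuron layer used here, that leaves exactly two values for training to find.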

l0 = tf.keras.layers.Dense(units=1, input_shape=[1])

Transform layers into a model

Once the layers are defined, they need to be assembled into a model. The Sequential model takes as its argument a list of layers in the order in which they should be applied, from the input value to the output value.

Our model has only one layer, l0.

model = tf.keras.Sequential([l0])

Note

Quite often you will see the layers defined directly inside the model definition, rather than declared beforehand and passed in afterwards:

model = tf.keras.Sequential([
    tf.keras.layers.Dense(units=1, input_shape=[1])
])

Compile model with loss and optimization function

Before training, the model must be compiled. Compiling for training requires:

- a loss function: a way of measuring how far the predicted value is from the desired output value (the measured difference is called the “loss”).

- an optimization function: a way of adjusting the internal variables to reduce the loss.

model.compile(loss='mean_squared_error', optimizer=tf.keras.optimizers.Adam(0.1))

The loss function and the optimization function are used during training (model.fit(...), mentioned below) to first calculate the loss at each point and then improve it. Calculating the current loss of the model and then improving it is exactly what training is (one iteration).

During training, the optimization function calculates the corrections to the internal variables. The goal is to adjust the internal variables of the model (which is, in fact, a mathematical function) so that the model approximates the actual formula for converting degrees Celsius to degrees Fahrenheit.

TensorFlow uses numerical analysis to perform this kind of optimization, and all of that complexity is hidden from us, so we will not go into the details in this course.

What is useful to know about these parameters:

The loss function (mean squared error) and the optimization function (Adam) used in this example are standard for simple models like this one, but many others are available. At this stage, we do not need to understand how these functions work internally.
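For readers who want to see what the mean squared error measures, here is a minimal sketch in plain Python. It is not how Keras computes the loss internally; the function name and the two candidate (w, b) pairs are our own choices for illustration.

```python
celsius_q = [-40, -10, 0, 8, 15, 22, 38]
fahrenheit_a = [-40, 14, 32, 46, 59, 72, 100]


def mean_squared_error(w, b, inputs, targets):
    """Average of the squared differences between predictions w*x + b and targets."""
    squared_errors = [(w * x + b - y) ** 2 for x, y in zip(inputs, targets)]
    return sum(squared_errors) / len(squared_errors)


# A poor guess (output equals input) versus the true conversion formula.
loss_guess = mean_squared_error(1.0, 0.0, celsius_q, fahrenheit_a)
loss_formula = mean_squared_error(1.8, 32.0, celsius_q, fahrenheit_a)

print("loss for w=1.0, b=0.0 :", loss_guess)
print("loss for w=1.8, b=32.0:", loss_formula)
```

The poor guess gives a loss in the thousands, while the true formula gives a loss close to zero (not exactly zero, because some Fahrenheit values in the training data are rounded). Squaring the differences is what makes a few large errors count for more than many small ones.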

What you should pay attention to is the optimization function and its parameter, the learning rate, which in our example is 0.1. This is the step size used when adjusting the values of the internal variables. If the value is too small, training will take too many iterations. If it is too large, accuracy drops. Finding a good learning rate requires some trial and error; it is usually in the range from 0.001 (the default) to 0.1.

We train model

The model is trained by calling the fit method.

During training, the model receives the Celsius input values, transforms them using the values of its internal variables (called "weights") and returns values that are meant to correspond to degrees Fahrenheit. Since the initial values of the weights are arbitrary, the first results will be far from the correct values. The difference between the required result and the actual one is calculated using the loss function, and the optimization function determines how the weights should be corrected.

This cycle of calculating, comparing and adjusting is controlled inside the fit method. The first argument is the input values, the second argument is the desired output values. The epochs argument determines how many times this training cycle should be executed. The verbose argument controls the level of logging.

history = model.fit(celsius_q, fahrenheit_a, epochs=500, verbose=False)
print("Finished training the model")

In the following videos, we will dive into the details of how all this works and how exactly fully connected (Dense) layers work “under the hood”.

Display training statistics

The fit method returns an object that contains information about how the loss changes with each epoch. We can use this object to plot the loss. A high loss means that the Fahrenheit values predicted by the model are far from the true values in the fahrenheit_a array.

For visualization we will use Matplotlib. As you can see, our model improves very quickly at the very beginning, and then improves slowly and steadily until the results become nearly perfect at the very end of training.

import matplotlib.pyplot as plt
plt.xlabel('Epoch')
plt.ylabel('Loss')
plt.plot(history.history['loss'])

Use the model for predictions.

We now have a model that was trained on the input values celsius_q and output values fahrenheit_a to determine the relationship between them. We can use the predict method to calculate the degrees Fahrenheit for Celsius values the model has not seen before.

For example, how much is 100.0 degrees Celsius in Fahrenheit? Try to guess before running the code below.

print(model.predict([100.0]))

Output:

[[211.29639]]

The correct answer is 100 × 1.8 + 32 = 212, so our model did quite well!
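To put "quite well" into numbers, the following sketch compares the printed prediction with the exact answer. The value 211.29639 is copied from the output above; a different training run will give a slightly different number.

```python
predicted = 211.29639          # value printed by model.predict([100.0]) above
exact = 100 * 1.8 + 32         # exact conversion of 100 degrees Celsius

absolute_error = abs(exact - predicted)
relative_error = absolute_error / exact

print("absolute error: {:.5f} degrees Fahrenheit".format(absolute_error))
print("relative error: {:.2%}".format(relative_error))
```

The model is off by about 0.7 degrees Fahrenheit, roughly 0.3% of the correct value, even though 100 degrees Celsius lies well outside the range of the training data (-40 to 38).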

Review

- We created a model using a Dense layer

- We trained it on 3500 examples (7 pairs of values, 500 epochs)

Our model adjusted the values of the internal variables (weights) in the Dense layer so that it returns the correct Fahrenheit value for an arbitrary Celsius input.

We look at the weights

Let's display the values of the internal variables of the Dense layer.

print("Layer variables: {}".format(l0.get_weights()))

Output:

Layer variables: [array([[1.8261501]], dtype=float32), array([28.681389], dtype=float32)]

The value of the first variable is close to ~1.8, and the second to ~32. These values (1.8 and 32) are the actual constants in the formula for converting degrees Celsius to Fahrenheit.

This is really very close to the actual values in the formula! We will look at this in more detail in the following videos, where we will show how the Dense layer works. For now it is enough to know that a single neuron with a single input and a single output computes simple math, y = mx + b (the equation of a straight line), which has the same form as our formula for converting degrees Celsius to Fahrenheit, f = 1.8c + 32.

Since the forms are the same, the internal variables of the model should converge to the values in the actual formula, which is what happened.

With additional neurons, additional input values and output values, the formula becomes a bit more complicated, but the essence remains the same.
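The claim that one neuron is just y = mx + b can be checked by hand. The sketch below plugs the weight and bias printed by l0.get_weights() into that equation, without TensorFlow. The function name neuron is ours, and the numbers are copied from the output above.

```python
# Values printed by l0.get_weights() above.
weight = 1.8261501
bias = 28.681389


def neuron(x, w, b):
    """A single neuron with one input and no activation: y = w * x + b."""
    return w * x + b


print(neuron(100.0, weight, bias))   # close to the model's prediction of 211.29639
print(neuron(100.0, 1.8, 32.0))      # the exact formula gives 212
```

The first line reproduces the model's prediction to within rounding. The learned slope is slightly too high and the learned bias slightly too low, and over the training range these two deviations largely cancel out.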

Some experiments

For fun! What happens if we create more Dense layers with more neurons, which, in turn, contain more internal variables?

l0 = tf.keras.layers.Dense(units=4, input_shape=[1])
l1 = tf.keras.layers.Dense(units=4)
l2 = tf.keras.layers.Dense(units=1)
model = tf.keras.Sequential([l0, l1, l2])
model.compile(loss='mean_squared_error', optimizer=tf.keras.optimizers.Adam(0.1))
model.fit(celsius_q, fahrenheit_a, epochs=500, verbose=False)
print("Finished training the model")
print(model.predict([100.0]))
print("Model predicts that 100 degrees Celsius is: {} degrees Fahrenheit".format(model.predict([100.0])))
print("l0 variables: {}".format(l0.get_weights()))
print("l1 variables: {}".format(l1.get_weights()))
print("l2 variables: {}".format(l2.get_weights()))

Output:

Finished training the model
[[211.74748]]
Model predicts that 100 degrees Celsius is: [[211.74748]] degrees Fahrenheit
l0 variables: [array([[-0.5972079 , -0.05531882, -0.00833384, -0.10636603]], dtype=float32), array([-3.0981746, -1.8776944, 2.4708805, -2.9092448], dtype=float32)]
l1 variables: [array([[ 0.09127654, 1.1659832 , -0.61909443, 0.3422218 ], [-0.7377194 , 0.20082018, -0.47870865, 0.30302727], [-0.1370897 , -0.0667181 , -0.39285263, -1.1399261 ], [-0.1576551 , 1.1161333 , -0.15552482, 0.39256814]], dtype=float32), array([-0.94946504, -2.9903848 , 2.9848468 , -2.9061244 ], dtype=float32)]
l2 variables: [array([[-0.13567649], [-1.4634581 ], [ 0.68370366], [-1.2069695 ]], dtype=float32), array([2.9170544], dtype=float32)]

As you may have noticed, this model also predicts the corresponding Fahrenheit values quite well. However, if you look at the values of the internal variables (weights) of the neurons layer by layer, you will not see any values similar to 1.8 and 32. The added complexity of the model hides the “simple” form of the conversion from degrees Celsius to degrees Fahrenheit.
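The simple form is hidden but not gone. These Dense layers are created without an activation function (the Keras default), so each layer is a linear map and the whole stack is still a straight line. The sketch below, in plain Python with the weights copied from the output above, pushes a value through the three layers by hand and recovers the effective slope and intercept. The helper names dense and predict are ours.

```python
# Weights and biases printed above. Each kernel has one row per input
# and one column per neuron; each bias has one entry per neuron.
W0 = [[-0.5972079, -0.05531882, -0.00833384, -0.10636603]]
b0 = [-3.0981746, -1.8776944, 2.4708805, -2.9092448]
W1 = [[0.09127654, 1.1659832, -0.61909443, 0.3422218],
      [-0.7377194, 0.20082018, -0.47870865, 0.30302727],
      [-0.1370897, -0.0667181, -0.39285263, -1.1399261],
      [-0.1576551, 1.1161333, -0.15552482, 0.39256814]]
b1 = [-0.94946504, -2.9903848, 2.9848468, -2.9061244]
W2 = [[-0.13567649], [-1.4634581], [0.68370366], [-1.2069695]]
b2 = [2.9170544]


def dense(inputs, kernel, bias):
    """One Dense layer without activation:
    outputs[j] = sum_i inputs[i] * kernel[i][j] + bias[j]."""
    return [
        sum(inputs[i] * kernel[i][j] for i in range(len(inputs))) + bias[j]
        for j in range(len(bias))
    ]


def predict(celsius):
    """Pass one value through the three layers in order."""
    return dense(dense(dense([celsius], W0, b0), W1, b1), W2, b2)[0]


# A straight line is fully described by its intercept and slope.
intercept = predict(0.0)
slope = predict(1.0) - intercept

print("prediction for 100:", predict(100.0))
print("effective slope:", slope)
print("effective intercept:", intercept)
```

The hand-computed prediction for 100 agrees with the model's 211.74748, and the effective slope and intercept come out at roughly 1.8 and 32. The 33 individual variables look nothing like the formula, but their combination does.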

Stay tuned: in the next part we will look at how Dense layers work “under the hood”.

Brief summary

Congratulations! You just trained your first model. In practice, we saw how the model learned from the input and output values to multiply the input value by 1.8 and add 32 to it to get the correct result.

It was really impressive considering how few lines of code we needed to write:

l0 = tf.keras.layers.Dense(units=1, input_shape=[1])
model = tf.keras.Sequential([l0])
model.compile(loss='mean_squared_error', optimizer=tf.keras.optimizers.Adam(0.1))
history = model.fit(celsius_q, fahrenheit_a, epochs=500, verbose=False)
model.predict([100.0])

The above example is a general template for all machine learning programs. You will use similar constructions to create and train neural networks and to solve the problems that follow.

Training process

The training process (which happens in the model.fit(...) method) consists of a very simple sequence of actions whose result is a set of values for the internal variables that produce outputs as close as possible to the expected ones. The optimization process by which this is achieved, called gradient descent, uses numerical analysis to find the most appropriate values for the internal variables of the model.

To do machine learning you do not, in principle, need to understand these details. But for those who are interested in learning more: gradient descent iteratively changes the parameter values a little at a time, “pulling” them in the right direction until the best results are obtained. Here the “best results” (best values) mean that any further change to a parameter would only worsen the model's result. The function that measures how good or bad the model is at each iteration is called the “loss function”, and the goal of each “pull” (correction of the internal values) is to reduce the value of the loss function.

The training process begins with the forward propagation step, in which the input values are fed into the neural network, pass through the hidden neurons and then reach the output layer. The model applies its internal transformations to the input values, using its internal variables, to predict the answer.

In our example, the input value is the temperature in degrees Celsius and the model predicted the corresponding value in degrees Fahrenheit.

As soon as the value is predicted, the difference between the predicted value and the correct value is calculated. This difference is called the “loss” and is a measure of how well the model performed. The loss value is calculated by the loss function, which we specified as one of the arguments when calling model.compile(...).

After the loss is calculated, the internal variables (weights and biases) of all layers of the neural network are adjusted to minimize the loss, bringing the output value closer to the correct reference value.

This optimization process is called gradient descent. A specific optimization algorithm, specified by the optimizer argument when calling model.compile(...), is used to calculate the new value of each internal variable. In the example above, we used the Adam optimization algorithm.

For this course it is not necessary to understand the details of the training process. However, if you are curious, you can find more information in Google's Machine Learning Crash Course (the author plans to publish a translation and the practical part of the whole course).
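The loop described above (predict, measure the loss, adjust the variables) can be sketched in a few lines of plain Python. This is ordinary full-batch gradient descent, not the Adam algorithm used in the course example, and it is not what Keras runs internally. The function names, the starting values and the learning rates are our own assumptions, chosen so that the sketch converges on this unscaled data.

```python
celsius_q = [-40, -10, 0, 8, 15, 22, 38]
fahrenheit_a = [-40, 14, 32, 46, 59, 72, 100]


def mse(w, b):
    """Loss function: mean squared error of the predictions w*x + b."""
    return sum((w * x + b - y) ** 2
               for x, y in zip(celsius_q, fahrenheit_a)) / len(celsius_q)


def train(learning_rate, steps):
    """Plain full-batch gradient descent on one weight and one bias."""
    w, b = 0.0, 0.0          # arbitrary starting values
    n = len(celsius_q)
    for _ in range(steps):
        # Forward pass: predict, then measure the error on every example.
        errors = [w * x + b - y for x, y in zip(celsius_q, fahrenheit_a)]
        # Gradients of the mean squared error with respect to w and b.
        grad_w = 2 * sum(e * x for e, x in zip(errors, celsius_q)) / n
        grad_b = 2 * sum(errors) / n
        # Update: move each variable a small step against its gradient.
        w -= learning_rate * grad_w
        b -= learning_rate * grad_b
    return w, b


w, b = train(learning_rate=0.001, steps=20000)
print("w = {:.3f}, b = {:.3f}, loss = {:.4f}".format(w, b, mse(w, b)))

# A step size that is too large for this data makes the loss grow instead.
w_bad, b_bad = train(learning_rate=0.002, steps=50)
print("loss with learning_rate=0.002 after 50 steps:", mse(w_bad, b_bad))
```

With the smaller step the variables settle near 1.8 and 32; with the larger step every update overshoots and the loss explodes. Adam scales its steps differently, so the learning rates here are not directly comparable with the 0.1 used in the course example.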

By this point you should already be familiar with the following terms:

- Feature: the input value of our model;

- Examples: pairs of input + output values;

- Labels: the output values of the model;

- Layers: a collection of nodes connected together within a neural network;

- Model: the representation of your neural network;

- Dense and fully connected: each node in one layer is connected to each node in the previous layer;

- Weights and biases: the internal variables of the model;

- Loss: the difference between the desired output value and the actual output value of the model;

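- MSE: mean squared error, a type of loss function that treats a small number of large errors as worse than a large number of small ones;

- Gradient descent: an algorithm that changes the internal variables a little at a time to gradually reduce the loss function;

- Optimizer: a specific implementation of the gradient descent algorithm;

- Learning rate: the “step size” for reducing the loss during gradient descent;

- Batch: the set of examples used during training of the neural network;

- Epoch: a full pass over the entire training dataset;

- Forward pass: the calculation of output values from input values;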

- Back propagation : calculating the values of internal variables according to an optimization algorithm, starting from the output layer and towards the input layer through all intermediate layers.

Dense layers

In the previous section, we created a model that converts Celsius degrees to Fahrenheit degrees, using a simple neural network to find the relationship between Celsius degrees and Fahrenheit degrees.

Our network consists of a single fully connected layer. But what is a fully connected layer? To understand this, let's create a more complex neural network with 3 inputs, one hidden layer with two neurons and one output layer with a single neuron.

Recall that a neural network can be thought of as a set of layers, each of which consists of nodes called neurons. The neurons in each layer can be connected to the neurons of the next layer. A layer in which every neuron is connected to every neuron of the previous layer is called a fully connected or dense layer (a Dense layer).

Thus, when we use fully connected layers in keras, we are saying that the neurons of this layer must be connected to all the neurons of the previous layer.

To create the neural network described above, the following statements are enough:

hidden = tf.keras.layers.Dense(units=2, input_shape=[3])
output = tf.keras.layers.Dense(units=1)
model = tf.keras.Sequential([hidden, output])

So, we have figured out what neurons are and how they are connected. But how do fully connected layers actually work?

To understand what is actually happening there and what they are doing, we need to look under the hood and analyze the internal mathematics of neurons.

Imagine that our model takes three input values, x1, x2 and x3, and that a1, a2 and a3 are the neurons of our network. Remember we said that a neuron has internal variables? The w* and b* values are those internal variables of the neuron, also known as weights and biases. It is the values of these variables that are adjusted during training to obtain the most accurate mapping of the input values to the output.

What you should definitely keep in mind: the internal math of the neuron remains unchanged. In other words, during training only the weights and biases change.
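As a sketch of that internal math, the following plain Python code runs one forward pass through the network described above (3 inputs, a hidden layer with 2 neurons, 1 output neuron). It assumes that each neuron computes a weighted sum of its inputs plus a bias, with no activation function. All weight and bias values are hypothetical and were picked only so the arithmetic is easy to follow.

```python
def neuron(inputs, weights, bias):
    """Weighted sum of the inputs plus a bias; this math never changes during training."""
    return sum(x * w for x, w in zip(inputs, weights)) + bias


# Three input values (x1, x2, x3).
x = [1.0, 2.0, 3.0]

# Hypothetical weights and biases, chosen only for illustration.
hidden_weights = [[0.5, 0.25, -1.0],    # weights of hidden neuron a1
                  [1.0, -0.5, 0.5]]     # weights of hidden neuron a2
hidden_biases = [0.5, -1.0]
output_weights = [2.0, 4.0]             # weights of output neuron a3
output_bias = 0.25

# Hidden layer: every neuron sees every input (that is what "dense" means).
a1, a2 = [neuron(x, w, b) for w, b in zip(hidden_weights, hidden_biases)]
# Output layer: its single neuron sees every hidden neuron.
a3 = neuron([a1, a2], output_weights, output_bias)

# Trainable variables: one weight per connection plus one bias per neuron.
parameter_count = (3 * 2 + 2) + (2 * 1 + 1)

print(a1, a2, a3)        # -1.5 0.5 -0.75
print(parameter_count)   # 11
```

Training would change only the numbers in hidden_weights, hidden_biases, output_weights and output_bias; the neuron function itself stays exactly as written.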

When you start learning machine learning, it may seem strange that this really works, but that is how machine learning works!

Let us now return to our example of converting degrees Celsius to degrees Fahrenheit.

With a single neuron, we have only one weight and one bias. And you know what? This is exactly what the formula for converting degrees Celsius to degrees Fahrenheit looks like. If we substitute 1.8 for w11 and 32 for b1, we get the final conversion model!

If we return to the results of our model from the practical part, we see that the weight and the bias were “calibrated” so that they approximately match the values from the formula.

We deliberately built the practical example this way to show the exact correspondence between the weights and biases and the formula. When applying machine learning in practice, we can never compare the values of the variables with the target algorithm as we did in the example above. Why not? Because we do not even know the target algorithm!

When solving machine learning problems, we test various neural network architectures with different numbers of neurons. By trial and error we find the most accurate architectures and models, and hope that they will solve the given task as a result of training. In the following practical part we will study concrete examples of this approach.

Stay tuned, because now the fun begins!

Results

In this lesson, we learned the basic approaches to machine learning and how fully connected (Dense) layers work. You trained your first model to convert degrees Celsius to degrees Fahrenheit. You also learned the basic terms used in machine learning, such as features, examples and labels. You also wrote the main lines of Python code that form the backbone of any machine learning algorithm. You saw that in a few lines of code you can create, train and request a prediction from a neural network using TensorFlow and Keras.

... and the standard call to action: subscribe, upvote and share :)

Video version of the article

The video comes out the day after publication.

YouTube: https://youtube.com/channel/ashmig

Telegram: https://t.me/ashmig

VK: https://vk.com/ashmig

Source: https://habr.com/ru/post/453558/
