Style transfer

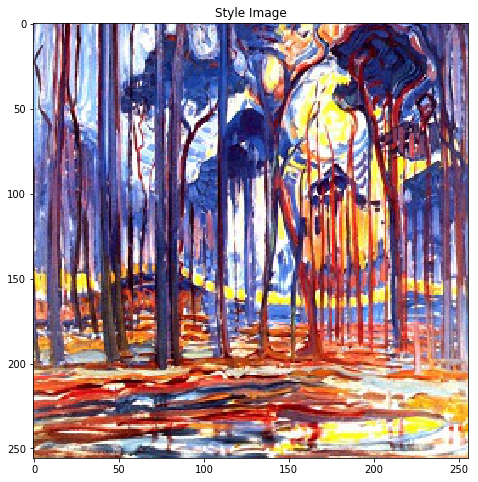

Style transfer is the process of transforming the style of the source to the style of the selected image and relies on the convolutional network type (CNN), which has been previously trained, so much will depend on the choice of this trained network. The benefit of such networks is and there are plenty to choose from, but VGG-16 will be used here.

First you need to connect the necessary libraries

Then you must declare the class of the pre-trained network VGG-16

')

Next, you need to download and upload the VGG-16 weights, first transferring it to the video card, if there is such an opportunity.

Where vgg_conv.pth is the name of the file with network weights.

At the same time, it is necessary to disable the training of parameters from the network; otherwise, it is possible to spoil the loaded weights that have been trained for several days.

After that, the functions of transforming input images are announced to bring them to the form of images on which they studied the VGG-16 network.

Next, the Gram matrix classes and loss functions for the Gram matrix are declared.

The Gram matrix is used to eliminate the spatial reference of the details of the style.

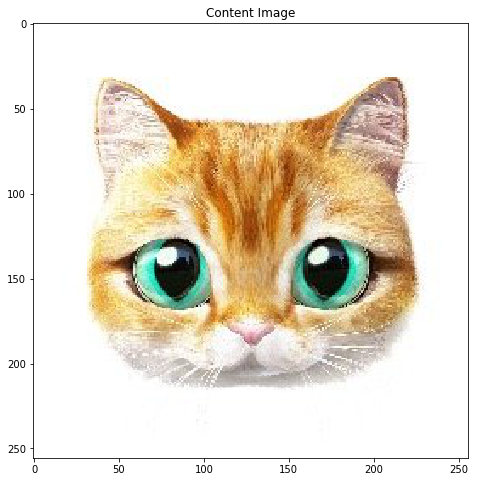

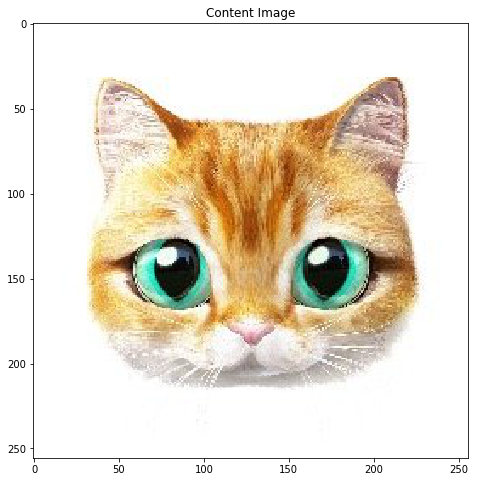

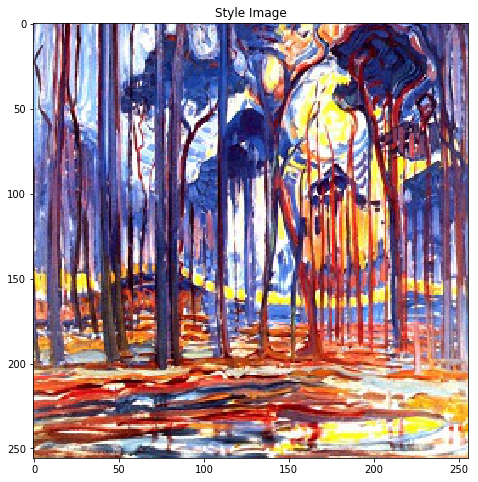

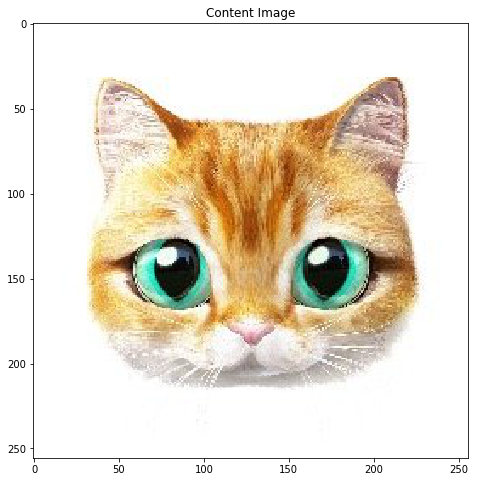

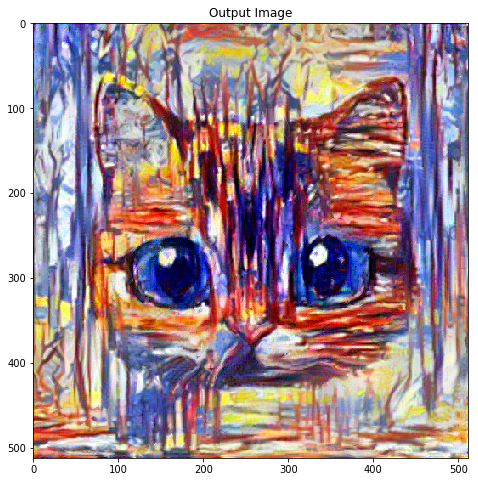

Then comes the process of loading and converting source and style images.

Where style_img and content_img are input images that are converted to tensors and transferred to the video card, if possible, and the result of style transfer will be contained in opt_img, while the initial image is taken as the initial image.

Next comes the process of selecting layers, setting weights and initializing loss functions.

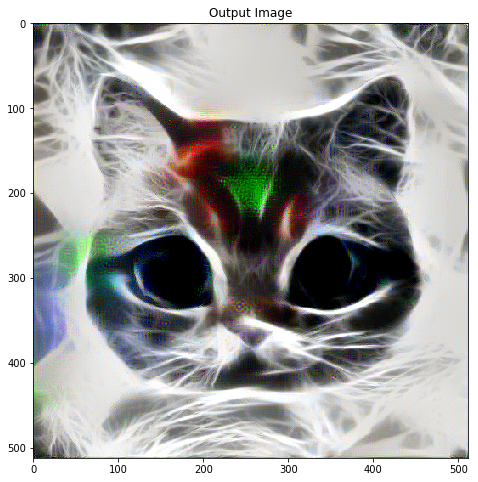

And the last stage is the process of transferring the style.

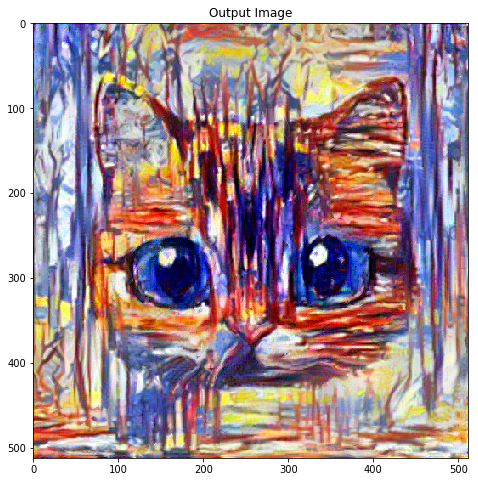

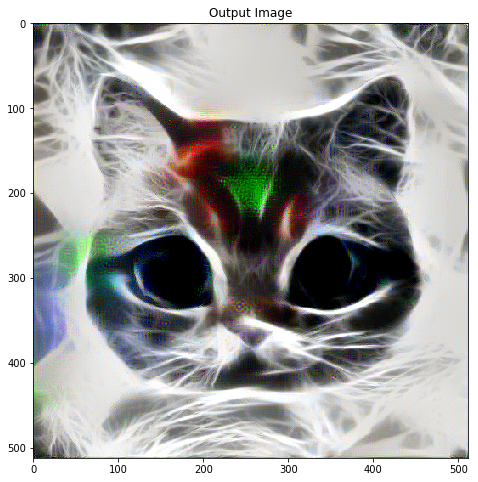

In conclusion, you can add a few examples:

First you need to connect the necessary libraries

Library declaration code

import time import torch from torch.autograd import Variable import torch.nn as nn import torch.nn.functional as F from torch import optim import torchvision from torchvision import transforms from io import BytesIO from PIL import Image from collections import OrderedDict from google.colab import files Then you must declare the class of the pre-trained network VGG-16

')

Class Code VGG-16

class VGG16(nn.Module): def __init__(self, pool='max'): super(VGG, self).__init__() self.conv1_1 = nn.Conv2d(3, 64, kernel_size=3, padding=1) self.conv1_2 = nn.Conv2d(64, 64, kernel_size=3, padding=1) self.conv2_1 = nn.Conv2d(64, 128, kernel_size=3, padding=1) self.conv2_2 = nn.Conv2d(128, 128, kernel_size=3, padding=1) self.conv3_1 = nn.Conv2d(128, 256, kernel_size=3, padding=1) self.conv3_2 = nn.Conv2d(256, 256, kernel_size=3, padding=1) self.conv3_3 = nn.Conv2d(256, 256, kernel_size=3, padding=1) self.conv3_4 = nn.Conv2d(256, 256, kernel_size=3, padding=1) self.conv4_1 = nn.Conv2d(256, 512, kernel_size=3, padding=1) self.conv4_2 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv4_3 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv4_4 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv5_1 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv5_2 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv5_3 = nn.Conv2d(512, 512, kernel_size=3, padding=1) self.conv5_4 = nn.Conv2d(512, 512, kernel_size=3, padding=1) if pool == 'max': self.pool1 = nn.MaxPool2d(kernel_size=2, stride=2) self.pool2 = nn.MaxPool2d(kernel_size=2, stride=2) self.pool3 = nn.MaxPool2d(kernel_size=2, stride=2) self.pool4 = nn.MaxPool2d(kernel_size=2, stride=2) self.pool5 = nn.MaxPool2d(kernel_size=2, stride=2) elif pool == 'avg': self.pool1 = nn.AvgPool2d(kernel_size=2, stride=2) self.pool2 = nn.AvgPool2d(kernel_size=2, stride=2) self.pool3 = nn.AvgPool2d(kernel_size=2, stride=2) self.pool4 = nn.AvgPool2d(kernel_size=2, stride=2) self.pool5 = nn.AvgPool2d(kernel_size=2, stride=2) def forward(self, x, layers): out = {} out['relu1_1'] = F.relu(self.conv1_1(x)) out['relu1_2'] = F.relu(self.conv1_2(out['relu1_1'])) out['pool1'] = self.pool1(out['relu1_2']) out['relu2_1'] = F.relu(self.conv2_1(out['pool1'])) out['relu2_2'] = F.relu(self.conv2_2(out['relu2_1'])) out['pool2'] = self.pool2(out['relu2_2']) out['relu3_1'] = F.relu(self.conv3_1(out['pool2'])) out['relu3_2'] = F.relu(self.conv3_2(out['relu3_1'])) out['relu3_3'] = F.relu(self.conv3_3(out['relu3_2'])) out['relu3_4'] = F.relu(self.conv3_4(out['relu3_3'])) out['pool3'] = self.pool3(out['relu3_4']) out['relu4_1'] = F.relu(self.conv4_1(out['pool3'])) out['relu4_2'] = F.relu(self.conv4_2(out['relu4_1'])) out['relu4_3'] = F.relu(self.conv4_3(out['relu4_2'])) out['relu4_4'] = F.relu(self.conv4_4(out['relu4_3'])) out['pool4'] = self.pool4(out['relu4_4']) out['relu5_1'] = F.relu(self.conv5_1(out['pool4'])) out['relu5_2'] = F.relu(self.conv5_2(out['relu5_1'])) out['relu5_3'] = F.relu(self.conv5_3(out['relu5_2'])) out['relu5_4'] = F.relu(self.conv5_4(out['relu5_3'])) out['pool5'] = self.pool5(out['relu5_4']) return [out[key] for key in layers] Next, you need to download and upload the VGG-16 weights, first transferring it to the video card, if there is such an opportunity.

vgg = VGG16() vgg.load_state_dict(torch.load('vgg_conv.pth')) for param in vgg.parameters(): param.requires_grad = False if torch.cuda.is_available(): vgg.cuda() Where vgg_conv.pth is the name of the file with network weights.

At the same time, it is necessary to disable the training of parameters from the network; otherwise, it is possible to spoil the loaded weights that have been trained for several days.

After that, the functions of transforming input images are announced to bring them to the form of images on which they studied the VGG-16 network.

Input image conversion function code

to_mean_tensor - direct conversion

normalize_image - inverse transform

SIZE_IMAGE = 512 to_mean_tensor = transforms.Compose([transforms.Resize(SIZE_IMAGE), transforms.ToTensor(), transforms.Lambda(lambda x: x[torch.LongTensor([2,1,0])]), transforms.Normalize(mean=[0.40760392, 0.45795686, 0.48501961], std=[1,1,1]), transforms.Lambda(lambda x: x.mul_(255)), ]) to_unmean_tensor = transforms.Compose([transforms.Lambda(lambda x: x.div_(255)), transforms.Normalize(mean=[-0.40760392, -0.45795686, -0.48501961], std=[1,1,1]), transforms.Lambda(lambda x: x[torch.LongTensor([2,1,0])]), ]) to_image = transforms.Compose([transforms.ToPILImage()]) normalize_image = lambda t: to_image(torch.clamp(to_unmean_tensor(t), min=0, max=1)) to_mean_tensor - direct conversion

normalize_image - inverse transform

Next, the Gram matrix classes and loss functions for the Gram matrix are declared.

class GramMatrix(nn.Module): def forward(self, input): b,c,h,w = input.size() F = input.view(b, c, h*w) G = torch.bmm(F, F.transpose(1,2)) G.div_(h*w) return G class GramMSELoss(nn.Module): def forward(self, input, target): out = nn.MSELoss()(GramMatrix()(input), target) return out The Gram matrix is used to eliminate the spatial reference of the details of the style.

Then comes the process of loading and converting source and style images.

imgs = [style_img, content_img] imgs_torch = [to_mean_tensor(img) for img in imgs] if torch.cuda.is_available(): imgs_torch = [Variable(img.unsqueeze(0).cuda()) for img in imgs_torch] else: imgs_torch = [Variable(img.unsqueeze(0)) for img in imgs_torch] style_image, content_image = imgs_torch opt_img = Variable(content_image.data.clone(), requires_grad=True) Where style_img and content_img are input images that are converted to tensors and transferred to the video card, if possible, and the result of style transfer will be contained in opt_img, while the initial image is taken as the initial image.

Next comes the process of selecting layers, setting weights and initializing loss functions.

Code of weights and losses

style_layers = ['relu1_1','relu2_1','relu3_1','relu4_1', 'relu5_1'] content_layers = ['relu4_2'] loss_layers = style_layers + content_layers losses = [GramMSELoss()] * len(style_layers) + [nn.MSELoss()] * len(content_layers) if torch.cuda.is_available(): losses = [loss.cuda() for loss in losses] style_weights = [1e3/n**2 for n in [64,128,256,512,512]] content_weights = [1e0] weights = style_weights + content_weights style_targets = [GramMatrix()(A).detach() for A in vgg(style_image, style_layers)] content_targets = [A.detach() for A in vgg(content_image, content_layers)] targets = style_targets + content_targets And the last stage is the process of transferring the style.

epochs = 300 opt = optim.LBFGS([opt_img]) def step_opt(): opt.zero_grad() out_layers = vgg(opt_img, loss_layers) layer_losses = [] for j, out in enumerate(out_layers): layer_losses.append(weights[j] * losses[j](out, targets[j])) loss = sum(layer_losses) loss.backward() return loss for i in range(0, epochs+1): loss = opt.step(step_opt) In conclusion, you can add a few examples:

Source: https://habr.com/ru/post/453512/

All Articles