Configuring a Nomad Cluster with Consul and GitLab Integration

Introduction

Kubernetes has been growing rapidly in popularity, and more and more projects are adopting it. I wanted to look at a different orchestrator, Nomad: it is a good fit for projects that already use other HashiCorp tools, such as Vault and Consul, and whose infrastructure is not complex. This article gives instructions for installing Nomad, joining two nodes into a cluster, and integrating Nomad with GitLab.


Test bench

A little about the test bench: it consists of three virtual servers, each with 2 CPUs, 4 GB of RAM, and a 50 GB SSD, connected to a common local network. Their names and IP addresses:

- nomad-livelinux-01 : 172.30.0.5

- nomad-livelinux-02 : 172.30.0.10

- consul-livelinux-01 : 172.30.0.15

Installing Nomad, Consul. Creating a Nomad Cluster

Let's proceed to the basic installation. Although the installation is simple, I will describe it for completeness: the article actually grew out of drafts and notes kept for quick reference.

Before the practical part, we will go over the theory, because at this stage it is important to understand the future structure.

We have two Nomad nodes that we want to join into a cluster, and later we will want the cluster to scale automatically; for this we need Consul. With it, clustering and adding new nodes becomes very simple: a newly created Nomad node connects to its local Consul agent and then joins the existing Nomad cluster. So we will first install the Consul server and set up basic HTTP authentication for its web panel (by default it has no authentication and is reachable on the external address), then install the Consul agents on the Nomad servers, and only then move on to Nomad.

Installing HashiCorp tools is very simple: we just move the binary file to the bin directory, write the tool's configuration file, and create its service file.

Download the Consul binary and unpack it in the user's home directory:

    root@consul-livelinux-01:~# wget https://releases.hashicorp.com/consul/1.5.0/consul_1.5.0_linux_amd64.zip
    root@consul-livelinux-01:~# unzip consul_1.5.0_linux_amd64.zip
    root@consul-livelinux-01:~# mv consul /usr/local/bin/

Now we have a ready-made consul binary for further configuration.

To work with Consul, we need to generate a unique encryption key using the keygen command:

    root@consul-livelinux-01:~# consul keygen

Let's move on to the Consul configuration. Create a directory /etc/consul.d/ with the following structure:

    /etc/consul.d/
    ├── bootstrap
    │   └── config.json

The configuration file config.json is located in the bootstrap directory; it holds the Consul settings. Its contents are:

    {
        "bootstrap": true,
        "server": true,
        "datacenter": "dc1",
        "data_dir": "/var/consul",
        "encrypt": "your-key",
        "log_level": "INFO",
        "enable_syslog": true,
        "start_join": ["172.30.0.15"]
    }

Let us look at the main directives and their meanings:

- bootstrap : true. Puts the server into bootstrap mode, in which it can elect itself leader without waiting for other servers, so new nodes can join it as soon as they connect. Note that we do not specify the exact number of expected nodes here.

- server : true. Turns on server mode. Consul on this virtual machine will be the only server and leader for now; the Nomad VMs will be clients.

- datacenter : dc1. The name of the data center in which the cluster is created. It must be identical on clients and servers.

- encrypt : your-key. The encryption key, which must be unique and must match on all clients and servers. It is generated with the consul keygen command.

- start_join . The list of IP addresses to connect to at startup. For now it contains only our own address.
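The directives above can be collected into a small helper that prints the same config.json with the key and address filled in. This is only a sketch: render_consul_server_config is a hypothetical function name, not a Consul command, and the values it hard-codes are the ones from this article.

```shell
#!/usr/bin/env bash
# Sketch: print the bootstrap config.json from the article with the
# encryption key and the server address filled in.
# render_consul_server_config is a hypothetical helper, not part of Consul.
render_consul_server_config() {
  local key="$1" addr="$2"
  cat <<EOF
{
  "bootstrap": true,
  "server": true,
  "datacenter": "dc1",
  "data_dir": "/var/consul",
  "encrypt": "${key}",
  "log_level": "INFO",
  "enable_syslog": true,
  "start_join": ["${addr}"]
}
EOF
}
```

On the server it could be called as render_consul_server_config "$(consul keygen)" 172.30.0.15 > /etc/consul.d/bootstrap/config.json, which avoids pasting the key by hand.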

At this stage, we can start Consul from the command line:

    root@consul-livelinux-01:~# /usr/local/bin/consul agent -config-dir /etc/consul.d/bootstrap -ui

This is a good way to debug, but for obvious reasons it is not suitable for permanent use. Create a service file to manage Consul via systemd:

    root@consul-livelinux-01:~# nano /etc/systemd/system/consul.service

Contents of the consul.service file:

    [Unit]
    Description=Consul Startup process
    After=network.target

    [Service]
    Type=simple
    ExecStart=/bin/bash -c '/usr/local/bin/consul agent -config-dir /etc/consul.d/bootstrap -ui'
    TimeoutStartSec=0

    [Install]
    WantedBy=default.target

Start Consul via systemctl:

    root@consul-livelinux-01:~# systemctl start consul

Let's check: the service should be running, and the consul members command should show our server:

    root@consul-livelinux:/etc/consul.d# consul members
    consul-livelinux 172.30.0.15:8301 alive server 1.5.0 2 dc1 <all>

The next step is installing Nginx and setting up proxying and HTTP authentication. Install nginx through the package manager and create the configuration file consul.conf in the /etc/nginx/sites-enabled directory with the following contents:

    upstream consul-auth {
        server localhost:8500;
    }

    server {
        server_name consul.domain.name;

        location / {
            proxy_pass http://consul-auth;
            proxy_set_header Host $host;
            auth_basic_user_file /etc/nginx/.htpasswd;
            auth_basic "Password-protected Area";
        }
    }

Do not forget to create the .htpasswd file and generate a username and password for it. This step is needed so that the web panel is not open to anyone who knows our domain. However, when setting up GitLab we will have to give this up; otherwise we will not be able to deploy our application to Nomad. In my project, both GitLab and Nomad sit in a private network only, so there is no such problem.
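For the .htpasswd file mentioned above, one possible way to produce an entry without installing extra packages is openssl passwd with the apr1 scheme, which nginx accepts for basic authentication. The sketch below assumes openssl is installed; htpasswd_line is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: build one "user:hash" line for /etc/nginx/.htpasswd.
# htpasswd_line is a hypothetical helper; it relies on `openssl passwd -apr1`,
# which produces an Apache MD5 (apr1) hash.
htpasswd_line() {
  local user="$1" password="$2" hash
  # -stdin keeps the password out of the process list.
  hash="$(printf '%s\n' "$password" | openssl passwd -apr1 -stdin)"
  printf '%s:%s\n' "$user" "$hash"
}
```

A call such as htpasswd_line admin 'some-password' >> /etc/nginx/.htpasswd would append the entry; the user name and password here are placeholders.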

On the other two servers, install Consul agents using the following instructions. Repeat the steps with the binary file:

    root@nomad-livelinux-01:~# wget https://releases.hashicorp.com/consul/1.5.0/consul_1.5.0_linux_amd64.zip
    root@nomad-livelinux-01:~# unzip consul_1.5.0_linux_amd64.zip
    root@nomad-livelinux-01:~# mv consul /usr/local/bin/

By analogy with the previous server, create the /etc/consul.d directory for the configuration files with the following structure:

    /etc/consul.d/
    ├── client
    │   └── config.json

Contents of the config.json file:

    {
        "datacenter": "dc1",
        "data_dir": "/opt/consul",
        "log_level": "DEBUG",
        "node_name": "nomad-livelinux-01",
        "server": false,
        "encrypt": "your-private-key",
        "domain": "livelinux",
        "addresses": {
            "dns": "127.0.0.1",
            "https": "0.0.0.0",
            "grpc": "127.0.0.1",
            "http": "127.0.0.1"
        },
        "bind_addr": "172.30.0.5",
        "start_join": ["172.30.0.15"],
        "ports": {
            "dns": 53
        }
    }

Here bind_addr is the local address of the virtual machine, and start_join holds the address of the Consul server. Save the changes and proceed to the service file:

/etc/systemd/system/consul.service:

    [Unit]
    Description="HashiCorp Consul - A service mesh solution"
    Documentation=https://www.consul.io/
    Requires=network-online.target
    After=network-online.target

    [Service]
    User=root
    Group=root
    ExecStart=/usr/local/bin/consul agent -config-dir=/etc/consul.d/client
    ExecReload=/usr/local/bin/consul reload
    KillMode=process
    Restart=on-failure

    [Install]
    WantedBy=multi-user.target

Start Consul on the server. After the launch, the configured node should appear in the output of consul members. This means that it has successfully connected to the cluster as a client. Repeat the same on the second server, and after that we can proceed with the installation and configuration of Nomad.

The installation of Nomad is described in more detail in its official documentation. There are two traditional installation methods: downloading a binary file and compiling from source. I will use the first.

Note: the project is developing very quickly and new releases come out often. A new version may well have been released by the time you read this article, so I recommend checking the current version of Nomad and downloading that one.
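The check described above can be scripted. The sketch below reads the output of consul members on standard input and succeeds only if the named node is listed as alive; it assumes the column order shown earlier in this article (node name first, status third). member_is_alive is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: succeed if the given node appears as "alive" in `consul members`
# output read from stdin. Assumes the node name is column 1 and the status
# is column 3, as in the sample output earlier in the article.
member_is_alive() {
  local name="$1"
  awk -v n="$name" '
    $1 == n && $3 == "alive" { found = 1 }
    END { exit (found ? 0 : 1) }
  '
}
```

For example: consul members | member_is_alive nomad-livelinux-01 && echo "joined".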

    root@nomad-livelinux-01:~# wget https://releases.hashicorp.com/nomad/0.9.1/nomad_0.9.1_linux_amd64.zip
    root@nomad-livelinux-01:~# unzip nomad_0.9.1_linux_amd64.zip
    root@nomad-livelinux-01:~# mv nomad /usr/local/bin/
    root@nomad-livelinux-01:~# nomad -autocomplete-install
    root@nomad-livelinux-01:~# complete -C /usr/local/bin/nomad nomad
    root@nomad-livelinux-01:~# mkdir /etc/nomad.d

After unpacking, we get a Nomad binary of about 65 MB, which is moved to /usr/local/bin.
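Since the version number changes often, it can help to build the download URL from a variable instead of editing it by hand. The sketch below only reproduces the URL pattern used by the wget commands in this article; hashicorp_zip_url is a hypothetical helper name, and whether a given version exists on the release server is not checked.

```shell
#!/usr/bin/env bash
# Sketch: build a releases.hashicorp.com download URL for linux_amd64,
# following the pattern of the wget commands in the article.
# hashicorp_zip_url is a hypothetical helper name.
hashicorp_zip_url() {
  local product="$1" version="$2"
  printf 'https://releases.hashicorp.com/%s/%s/%s_%s_linux_amd64.zip\n' \
    "$product" "$version" "$product" "$version"
}
```

With it, the download step becomes wget "$(hashicorp_zip_url nomad 0.9.1)", and only the version argument needs to change for a newer release.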

Create a data directory for Nomad and edit its service file (most likely it will not exist yet):

    root@nomad-livelinux-01:~# mkdir --parents /opt/nomad
    root@nomad-livelinux-01:~# nano /etc/systemd/system/nomad.service

Insert the following lines there:

    [Unit]
    Description=Nomad
    Documentation=https://nomadproject.io/docs/
    Wants=network-online.target
    After=network-online.target

    [Service]
    ExecReload=/bin/kill -HUP $MAINPID
    ExecStart=/usr/local/bin/nomad agent -config /etc/nomad.d
    KillMode=process
    KillSignal=SIGINT
    LimitNOFILE=infinity
    LimitNPROC=infinity
    Restart=on-failure
    RestartSec=2
    StartLimitBurst=3
    StartLimitIntervalSec=10
    TasksMax=infinity

    [Install]
    WantedBy=multi-user.target

However, we are in no hurry to start Nomad: we have not yet created its configuration files:

    root@nomad-livelinux-01:~# mkdir --parents /etc/nomad.d
    root@nomad-livelinux-01:~# chmod 700 /etc/nomad.d
    root@nomad-livelinux-01:~# nano /etc/nomad.d/nomad.hcl
    root@nomad-livelinux-01:~# nano /etc/nomad.d/server.hcl

The final directory structure will be as follows:

    /etc/nomad.d/
    ├── nomad.hcl
    └── server.hcl

The nomad.hcl file should contain the following configuration:

    datacenter = "dc1"
    data_dir   = "/opt/nomad"

Contents of the server.hcl file:

    server {
      enabled          = true
      bootstrap_expect = 1
    }

    consul {
      address             = "127.0.0.1:8500"
      server_service_name = "nomad"
      client_service_name = "nomad-client"
      auto_advertise      = true
      server_auto_join    = true
      client_auto_join    = true
    }

    bind_addr = "127.0.0.1"

    advertise {
      http = "172.30.0.5"
    }

    client {
      enabled = true
    }

Do not forget to change the configuration file on the second server: there you need to change the value of the http directive in the advertise block to that server's address.

The last step at this stage is configuring Nginx for proxying and HTTP authentication. Contents of the nomad.conf file:

    upstream nomad-auth {
        server 172.30.0.5:4646;
    }

    server {
        server_name nomad.domain.name;

        location / {
            proxy_pass http://nomad-auth;
            proxy_set_header Host $host;
            auth_basic_user_file /etc/nginx/.htpasswd;
            auth_basic "Password-protected Area";
        }
    }

Now we can access the web panel from the external network. Connect and go to the servers page:
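One way to avoid editing the copy on the second server by hand is to rewrite the http value with sed. This is a sketch under the assumption that the file contains a single quoted http = "..." line, as server.hcl above does; set_advertise_http is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Sketch: read an HCL file on stdin and replace the quoted value of the
# `http = "..."` line with the given address. Assumes that line occurs
# only once (in the advertise block), as in the article's server.hcl.
# set_advertise_http is a hypothetical helper name.
set_advertise_http() {
  local ip="$1"
  sed -E "s/^([[:space:]]*http[[:space:]]*=[[:space:]]*)\"[^\"]*\"/\1\"${ip}\"/"
}
```

For the second node from the test bench this could look like set_advertise_http 172.30.0.10 < server.hcl > server-02.hcl, where server-02.hcl is just an illustrative output name.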

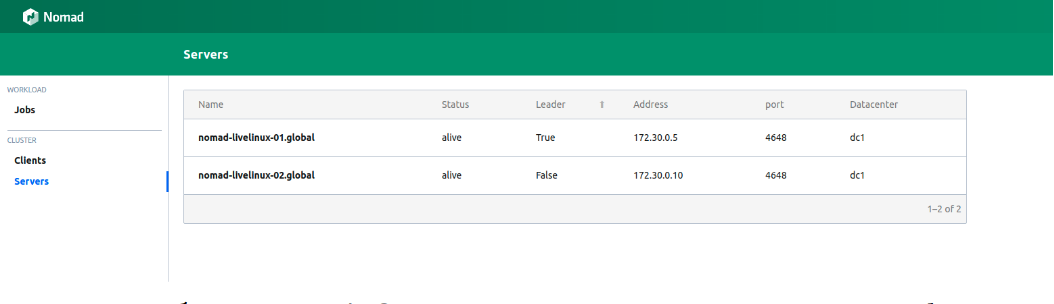

Figure 1. List of servers in a Nomad cluster

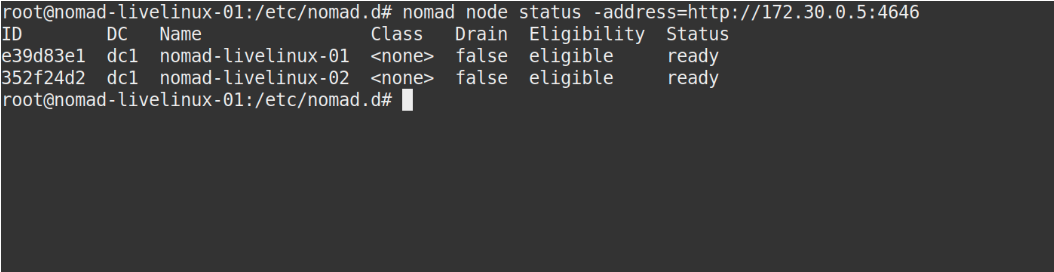

Both servers are successfully displayed in the panel, the same thing we will see in the output of the nomad node status command:

Figure 2. Output of the nomad node status command

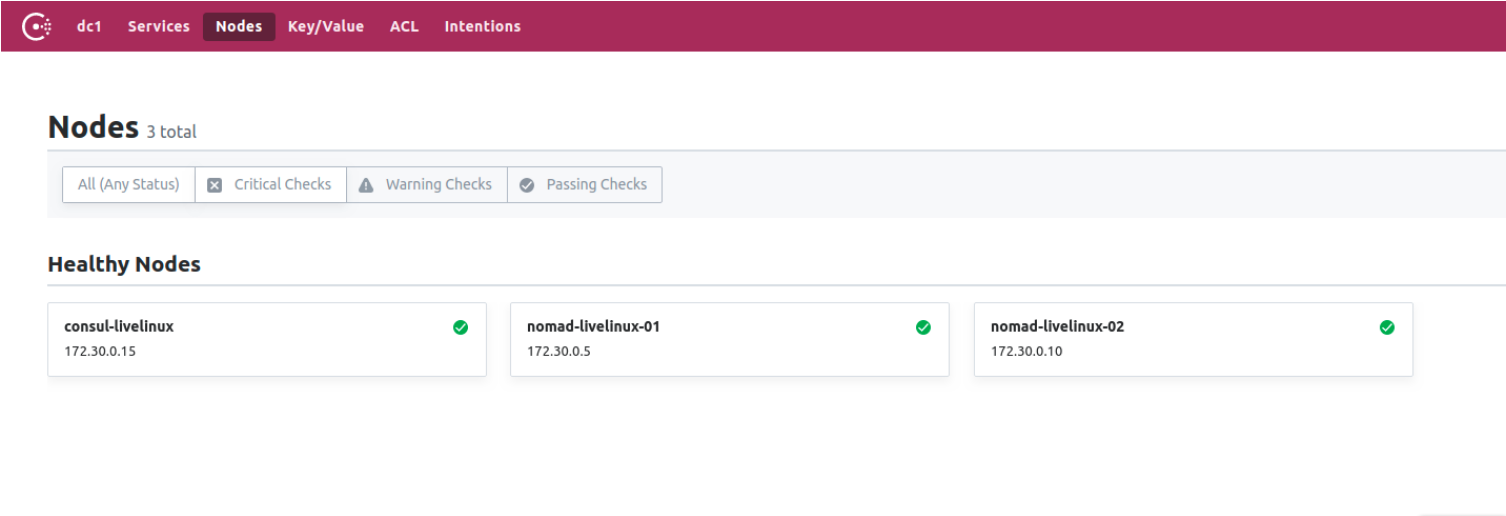

What about Consul? Let's get a look. Go to the Consul control panel, on the nodes page:

Figure 3. List of nodes in the Consul cluster

Now we have Nomad set up and working together with Consul. In the final stage we move on to the most interesting part: we will configure the delivery of Docker containers from GitLab to Nomad and also talk about some of its other distinctive features.

Creating a GitLab Runner

To deploy Docker images to Nomad, we will use a separate runner with the Nomad binary inside (here, by the way, we can note another feature of HashiCorp applications: each of them is a single binary file). Download the binary to the runner directory and create the simplest Dockerfile for it with the following contents:

    FROM alpine:3.9
    RUN apk add --update --no-cache libc6-compat gettext
    COPY nomad /usr/local/bin/nomad

In the same project, create .gitlab-ci.yml:

    variables:
      DOCKER_IMAGE: nomad/nomad-deploy
      DOCKER_REGISTRY: registry.domain.name

    stages:
      - build

    build:
      stage: build
      image: ${DOCKER_REGISTRY}/nomad/alpine:3
      script:
        - tag=${DOCKER_REGISTRY}/${DOCKER_IMAGE}:latest
        - docker build --pull -t ${tag} -f Dockerfile .
        - docker push ${tag}

As a result, an image of the Nomad runner will be available in the GitLab Registry. Now we can go to the project repository itself, create a pipeline, and configure the Nomad job.

Project Setup

Let's start with the job file for Nomad. My project in this article is quite primitive: it consists of a single task. The contents of .gitlab-ci.yml are as follows:

    variables:
      NOMAD_ADDR: http://nomad.address.service:4646
      DOCKER_REGISTRY: registry.domain.name
      DOCKER_IMAGE: example/project

    stages:
      - build
      - deploy

    build:
      stage: build
      image: ${DOCKER_REGISTRY}/nomad-runner/alpine:3
      script:
        - tag=${DOCKER_REGISTRY}/${DOCKER_IMAGE}:${CI_COMMIT_SHORT_SHA}
        - docker build --pull -t ${tag} -f Dockerfile .
        - docker push ${tag}

    deploy:
      stage: deploy
      image: registry.example.com/nomad/nomad-runner:latest
      script:
        - envsubst '${CI_COMMIT_SHORT_SHA}' < project.nomad > job.nomad
        - cat job.nomad
        - nomad validate job.nomad
        - nomad plan job.nomad || if [ $? -eq 255 ]; then exit 255; else echo "success"; fi
        - nomad run job.nomad
      environment:
        name: production
      allow_failure: false
      when: manual

Here the deployment is started manually, but you can configure it to run when the contents of the project directory change. The pipeline consists of two stages: building the image and deploying it to Nomad. In the first stage we build the Docker image and push it to our registry; in the second we run our job in Nomad.
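The nomad plan line in the deploy stage deserves a short explanation. As the Nomad documentation describes it, nomad plan exits with 0 when no allocations would change, 1 when allocations would be created or destroyed, and 255 when the plan could not be determined, so a non-zero status is not necessarily a failure. The same logic can be sketched as a function; check_plan is a hypothetical name, and the messages are illustrative.

```shell
#!/usr/bin/env bash
# Sketch: run a command (normally `nomad plan job.nomad`) and treat only
# exit status 255 as a failure; 0 and 1 both mean the plan was computed.
# check_plan is a hypothetical helper name.
check_plan() {
  local status=0
  "$@" || status=$?
  if [ "$status" -eq 255 ]; then
    echo "plan failed with an error" >&2
    return 255
  fi
  echo "plan accepted (exit status ${status})"
}
```

In the pipeline it would be called as check_plan nomad plan job.nomad, which behaves like the one-line conditional used in .gitlab-ci.yml.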

The job file project.nomad, which the deploy stage turns into job.nomad, looks like this:

    job "monitoring-status" {
      datacenters = ["dc1"]

      migrate {
        max_parallel     = 3
        health_check     = "checks"
        min_healthy_time = "15s"
        healthy_deadline = "5m"
      }

      group "zhadan.ltd" {
        count = 1

        update {
          max_parallel      = 1
          min_healthy_time  = "30s"
          healthy_deadline  = "5m"
          progress_deadline = "10m"
          auto_revert       = true
        }

        task "service-monitoring" {
          driver = "docker"

          config {
            image      = "registry.domain.name/example/project:${CI_COMMIT_SHORT_SHA}"
            force_pull = true

            auth {
              username = "gitlab_user"
              password = "gitlab_password"
            }

            port_map {
              http = 8000
            }
          }

          resources {
            network {
              port "http" {}
            }
          }
        }
      }
    }

Note that my registry is private, so a successful pull of the Docker image requires logging in to it. The best solution in this case is to store the login and password in Vault and integrate it with Nomad. Nomad supports Vault natively. But first we install the necessary policies for Nomad in Vault itself; you can download them:

    # Download the policy and token role
    $ curl https://nomadproject.io/data/vault/nomad-server-policy.hcl -O -s -L
    $ curl https://nomadproject.io/data/vault/nomad-cluster-role.json -O -s -L

    # Write the policy to Vault
    $ vault policy write nomad-server nomad-server-policy.hcl

    # Create the token role with Vault
    $ vault write /auth/token/roles/nomad-cluster @nomad-cluster-role.json

Now that the necessary policies are created, we add the Vault integration. Note that a vault block with the enabled, address, and token parameters belongs in the Nomad agent configuration rather than in the task block of job.nomad:

    vault {
      enabled = true
      address = "https://vault.domain.name:8200"
      token   = "token"
    }

I use token authentication and write the token directly here; there is also the option of passing the token as an environment variable when starting the nomad agent:

    $ VAULT_TOKEN=<token> nomad agent -config /path/to/config

Now we can use secrets from Vault. The principle is simple: in the Nomad job we create a file that stores the values of variables, for example:

    template {
      data = <<EOH
    {{with secret "secrets/pipeline-keys"}}
    REGISTRY_LOGIN="{{ .Data.REGISTRY_LOGIN }}"
    REGISTRY_PASSWORD="{{ .Data.REGISTRY_PASSWORD }}"
    {{ end }}
    EOH

      destination = "secrets/service-name.env"
      env         = true
    }

With this simple approach, you can set up the delivery of containers to a Nomad cluster and keep working with it. I will say that I have a certain sympathy for Nomad: it is better suited to small projects, where Kubernetes may cause additional difficulties and will not realize its full potential. In addition, Nomad is great for beginners: it is easy to install and configure. However, when testing it on some projects I ran into the problems of its early versions: many basic features are simply missing or work incorrectly. Nevertheless, I believe that Nomad will continue to develop and will eventually acquire all the necessary features.

Author: Ilya Andreev, edited by Alexey Zhadan and the Live Linux team

Source: https://habr.com/ru/post/453322/
