Black Mirror with your own hands - we train the bot on the basis of its chat history

In the "Black Mirror" was a series (S2E1), in which they created robots that looked like dead people, using the history of correspondence in social networks for learning. I want to tell you how I tried to do something like that and what came of it. There will be no theory, only practice.

The idea was simple - to take the story of your chat from Telegram and, on their basis, train the seq2seq network, which is able to predict its completion at the beginning of the dialogue. Such a network can operate in three modes:

- Predict the completion of a user's phrase based on the conversation history

- Work in chat bot mode

- Synthesize entire conversations

That's what happened with me

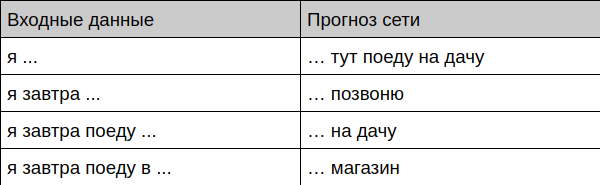

Bot offers the completion of the phrase

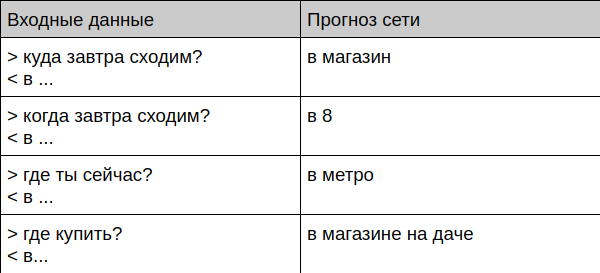

Bot offers the completion of the dialogue

Bot communicates with a living person

User: Bot: User: ? Bot: User: ? Bot: User: ? Bot: User: ? Bot: User: ? Bot: Then I will tell you how to prepare the data and train such a bot yourself.

How to train yourself

Data preparation

First of all, you need to get a lot of chats somewhere. I took all my correspondence in Telegram, since the client for the desktop allows you to download the full archive in JSON format. Then I threw away all the messages that contain quotes, links, and files, and translated the remaining texts in the lower case and threw out all the rare characters, leaving only a simple set of letters, numbers and punctuation marks - it is easier for the network to learn.

Then I brought chats to this type:

=== > < > < ! === > ? < Here, messages that begin with the symbol ">" are a question for me, the symbol "<" accordingly marks my answer, and the line "===" serves to separate the dialogues among themselves. I determined the time that one dialogue ended and another one started (if more than 15 minutes passed between the messages, then we believe that this is a new conversation. The script for converting history can be viewed on github .

Since I have been actively using telegrams for a long time, there have been a lot of messages in the end - the resulting file has 443 thousand lines.

Model selection

I promised that there would be no theory today, so I will try to explain as briefly as possible and on my fingers.

I chose the classic seq2seq GRU model. Such a model receives the text in letters by input and also gives out one letter to the output. The learning process is based on the fact that we teach the network to predict the last letter of the text, for example, we give a “lead” to the input and wait for the output to be issued “Rivet” .

To generate long texts, a simple trick is used - the result of the previous prediction is sent back to the network and so on until a text of the required length is generated.

GRU modules can be very, very simplistic, imagined as a “cunning perceptron with memory and attention,” you can read more about them, for example, here .

The model was based on a well-known example of the problem of generating Shakespeare texts.

Training

Anyone who has ever encountered neural networks probably knows that it is very boring to learn them on the CPU. Fortunately, google comes to the rescue with their Colab service - you can run your code in jupyter notebook for free using the CPU, GPU and even TPU . In my case, training on a video card is within 30 minutes, although the sane results are available after 10 hours. The main thing is not to forget to switch the hardware type (in the menu Runtime -> Change runtime type).

Testing

After training, you can proceed to model verification - I wrote several examples that allow you to access the model in different modes - from text generation to live chat. All of them are on github .

In the method of generating text there is a temperature parameter - the higher it is, the more diverse the text (and meaningless) the bot will issue. This option makes sense to customize hands for a specific task.

Further use

What can such a network be used for? The most obvious is to develop a bot (or smart keyboard) that can offer the user ready-made answers even before he writes them. Such a function has long existed in Gmail and most keyboards, but it does not take into account the context of the conversation and the manner of a particular user to correspond. For example, the G-Keyboard keyboard stably offers me completely meaningless options, for example, "I'm traveling with ... respect" in the place where I would like to get the option "I'm coming from the country," which I definitely used many times.

Is there a future for chat bot? In its pure form, it is not exactly there, it has too much personal data, no one knows at what point it will give the interlocutor the number of your credit card that you once threw to a friend. Moreover, such a bot is not completely tuned, it is very difficult to get it to perform some specific tasks or correctly answer a specific question. Rather, such a chat bot could work in conjunction with other types of bots, providing a more connected dialogue "about anything" - with this he copes well. (And nevertheless, the external expert in the wife’s face said that the bot’s manner of communication is very similar to me. And the topics he’s concerned about are clearly the same - bugs, fixes, commits and other joys and sadness of the developer constantly pop up in the texts).

What else do I advise you to try if you are interested in this topic?

- Transfer learning (to teach foreign dialogues on a large body, and then to complete the education on its own)

- Change model - increase, change type (for example, to LSTM).

- Try working with TPU. In its pure form, this model will not work, but you can adapt it. Theoretical acceleration of learning should be tenfold.

- Port to a mobile platform, for example using Tensorflow mobile.

')

Source: https://habr.com/ru/post/453314/

All Articles