I didn't know how processors work, so I wrote a software simulator

A few months ago it suddenly struck me that I had no idea how computer hardware works. I still don't know how modern computers work.

I read the book “But How Do It Know?” by J. Clark Scott, which describes a simple 8-bit computer in detail: starting with logic gates, RAM, and processor transistors, and ending with arithmetic logic and input/output operations. And I wanted to implement all of it in code.

I'm not particularly interested in the physics of microcircuits, but the book glides over those waters and explains beautifully how the electrical circuits work and how bits move around the system; the reader needs no knowledge of electrical engineering. But a text description isn't enough for me. I have to see things in action and learn from my inevitable mistakes. So I started implementing the circuits in code. The path turned out to be thorny, but instructive.

The result of my work is in the simple-computer repository: a simple calculator. It's simple, and it calculates.


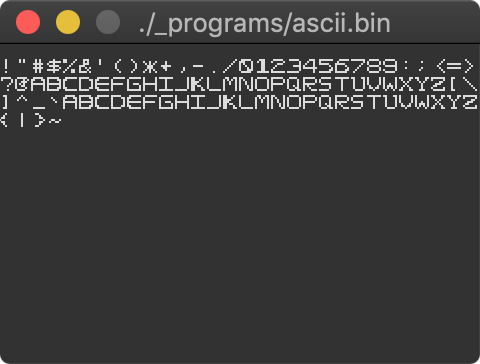

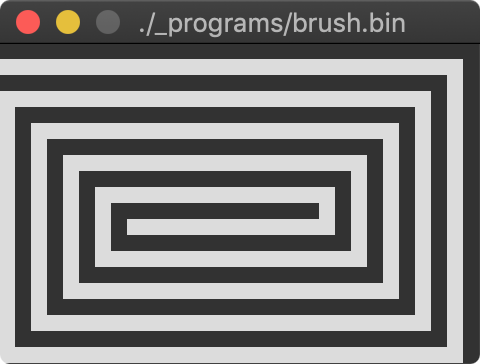

Sample programs

The processor is implemented as a horrible tangle of logic gates switching on and off, but it works. I ran the unit tests, and we all know that unit tests are irrefutable proof that a program works.
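Scott's book builds its other gates out of NAND. Here is a minimal sketch of that idea in Go, with a truth-table check standing in for the unit tests. The function names are my own for illustration, not the ones used in the simple-computer repository.

```go
package main

import "fmt"

// Nand is the only primitive gate; every other gate below is composed from it.
func Nand(a, b bool) bool { return !(a && b) }

// Not feeds the same signal into both NAND inputs.
func Not(a bool) bool { return Nand(a, a) }

// And inverts the output of a NAND.
func And(a, b bool) bool { return Not(Nand(a, b)) }

// Or applies De Morgan's law: a OR b == NOT(NOT a AND NOT b).
func Or(a, b bool) bool { return Nand(Not(a), Not(b)) }

// Xor uses the classic four-NAND construction.
func Xor(a, b bool) bool {
	n := Nand(a, b)
	return Nand(Nand(a, n), Nand(b, n))
}

func main() {
	inputs := []bool{false, true}
	// Compare every gate with Go's built-in Boolean operators,
	// which is essentially what a truth-table unit test does.
	for _, a := range inputs {
		for _, b := range inputs {
			ok := And(a, b) == (a && b) &&
				Or(a, b) == (a || b) &&
				Xor(a, b) == (a != b)
			fmt.Printf("a=%-5v b=%-5v ok=%v\n", a, b, ok)
		}
	}
	fmt.Println(Not(true)) // false
}
```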

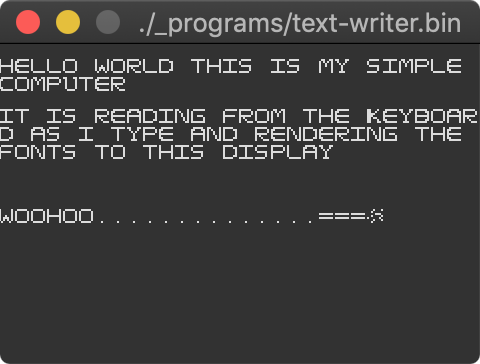

The code handles keyboard input and displays text on the screen using a painstakingly crafted set of glyphs for a professional font, which I named Daniel Code Pro. The only cheat: to take keyboard input and display output, I had to wire up channels through GLFW, but otherwise it is entirely a software simulation of the electrical circuit.
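The post doesn't describe how Daniel Code Pro is stored, so the following is a sketch under an assumption: a hypothetical 8×8 monochrome glyph in which each byte is one pixel row and the most significant bit is the leftmost pixel. With that layout, drawing a character comes down to testing bits.

```go
package main

import (
	"fmt"
	"strings"
)

// glyphA is a hypothetical 8x8 bitmap for the letter 'A' (not the real
// Daniel Code Pro data). Each byte is one row; bit 7 is the leftmost pixel.
var glyphA = [8]byte{
	0b00011000,
	0b00100100,
	0b01000010,
	0b01111110,
	0b01000010,
	0b01000010,
	0b01000010,
	0b00000000,
}

// renderGlyph turns a bitmap into text rows, using '#' for a lit pixel.
func renderGlyph(g [8]byte) []string {
	rows := make([]string, 0, len(g))
	for _, row := range g {
		var sb strings.Builder
		for bit := 7; bit >= 0; bit-- {
			if row&(1<<uint(bit)) != 0 {
				sb.WriteByte('#')
			} else {
				sb.WriteByte('.')
			}
		}
		rows = append(rows, sb.String())
	}
	return rows
}

func main() {
	for _, line := range renderGlyph(glyphA) {
		fmt.Println(line)
	}
}
```

A real display adapter would set pixels instead of building strings, but the bit test per pixel is the same.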

I even wrote a rough assembler, which opened my eyes to many things, to say the least. It isn't perfect. In fact, it's a bit crappy, but it showed me problems that other people solved many, many years ago.
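One classic problem of this kind is the forward reference: a jump can name a label that is only defined further down. A common solution is a two-pass assembler. The first pass records label addresses, and the second emits code. The minimal Go sketch below uses an invented three-instruction syntax (NOP, DATA, JMP) with made-up opcodes. It does not reproduce my assembler's instruction set or encoding.

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// Hypothetical opcodes, chosen only for this example.
var opcodes = map[string]uint16{"NOP": 0x00, "DATA": 0x20, "JMP": 0x40}

// operandCount says how many operand words follow each opcode.
var operandCount = map[string]int{"NOP": 0, "DATA": 1, "JMP": 1}

// assemble translates source lines into machine words in two passes.
func assemble(src []string) ([]uint16, error) {
	labels := map[string]uint16{}
	// Pass 1: record the address of every label ("name:").
	var addr uint16
	for _, line := range src {
		f := strings.Fields(line)
		if len(f) == 0 {
			continue
		}
		if strings.HasSuffix(f[0], ":") {
			labels[strings.TrimSuffix(f[0], ":")] = addr
			continue
		}
		n, ok := operandCount[f[0]]
		if !ok {
			return nil, fmt.Errorf("unknown instruction %q", f[0])
		}
		addr += uint16(1 + n)
	}
	// Pass 2: emit opcodes and resolve operands now that all labels are known.
	var out []uint16
	for _, line := range src {
		f := strings.Fields(line)
		if len(f) == 0 || strings.HasSuffix(f[0], ":") {
			continue
		}
		out = append(out, opcodes[f[0]])
		if operandCount[f[0]] == 1 {
			if len(f) < 2 {
				return nil, fmt.Errorf("%s needs an operand", f[0])
			}
			if target, ok := labels[f[1]]; ok {
				out = append(out, target)
			} else if v, err := strconv.ParseUint(f[1], 0, 16); err == nil {
				out = append(out, uint16(v))
			} else {
				return nil, fmt.Errorf("bad operand %q", f[1])
			}
		}
	}
	return out, nil
}

func main() {
	prog := []string{
		"start:",
		"JMP end", // forward reference: "end" is not defined yet
		"DATA 0x2A",
		"end:",
		"JMP start",
	}
	words, err := assemble(prog)
	if err != nil {
		panic(err)
	}
	fmt.Println(words) // [64 4 32 42 64 0]
}
```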

But why are you doing this?

“Thirteen-year-olds build processors in Minecraft. Call me when you can make a real CPU out of telegraph relays.”

My mental model of how a CPU works is stuck at the level of introductory computer science tutorials. The processor in the Gameboy emulator I wrote in 2013 doesn't really resemble a modern CPU. Even though the emulator is just a state machine, it doesn't describe states at the level of logic gates. Almost everything can be implemented with nothing more than a switch statement and the saved state of the registers.

I want to understand better how everything works, because I don't know, for example, what L1/L2 caches and pipelining are, and I'm not sure I understand the articles about the Meltdown and Spectre vulnerabilities. People say they optimize code to make good use of the processor cache, but I don't know how to verify that other than taking their word for it. I'm not sure what all the x86 instructions mean. I don't understand how people offload work to a GPU or TPU. And what is a TPU anyway? I don't know how to use SIMD instructions.
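For contrast, here is roughly what the emulator style described above looks like, built from a switch statement plus register state. This is a minimal sketch with an invented instruction set. The opcodes are hypothetical and belong to neither the Gameboy nor Scott's machine. Nothing in it models gates, which is exactly the limitation in question.

```go
package main

import "fmt"

// Hypothetical opcodes for this sketch only.
const (
	opHalt  = 0x00
	opLoadA = 0x01 // next byte is loaded into register A
	opLoadB = 0x02 // next byte is loaded into register B
	opAdd   = 0x03 // A = A + B, wrapping at 8 bits
)

// CPU holds nothing but register state; the "hardware" is the switch below.
type CPU struct {
	A, B byte
	PC   int
	Mem  []byte
}

// Step fetches one opcode, decodes it with a switch, and executes it.
// It returns false when the program halts.
func (c *CPU) Step() bool {
	op := c.Mem[c.PC]
	c.PC++
	switch op {
	case opLoadA:
		c.A = c.Mem[c.PC]
		c.PC++
	case opLoadB:
		c.B = c.Mem[c.PC]
		c.PC++
	case opAdd:
		c.A += c.B
	case opHalt:
		return false
	default:
		panic(fmt.Sprintf("unknown opcode %#x", op))
	}
	return true
}

func main() {
	cpu := &CPU{Mem: []byte{opLoadA, 200, opLoadB, 100, opAdd, opHalt}}
	for cpu.Step() {
	}
	fmt.Println(cpu.A) // 44, because 300 wraps around modulo 256
}
```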

All of this rests on a foundation that has to be learned first. That means going back to basics and building something simple. The aforementioned book by Clark Scott describes the simplest possible computer, which is why I started with it.

Glory to Scott! It works!

Scott's computer is an 8-bit processor connected to 256 bytes of RAM through an 8-bit system bus. It has 4 general-purpose registers and 17 machine instructions. Someone made a visual simulator for the web, and it is really great. I shudder to think how long it took to trace all the states of the circuit!
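In the book's design, each register sits on the shared bus and has two control wires. "Set" stores whatever is currently on the bus, and "enable" puts the register's contents onto it. Below is a minimal Go sketch of that idea, with a byte standing in for eight wires. The type and method names are hypothetical.

```go
package main

import "fmt"

// Bus stands in for the 8 shared wires; a byte holds their on/off states.
type Bus struct{ Value byte }

// Register is a hypothetical model of a register attached to the bus.
type Register struct {
	value byte
	bus   *Bus
}

// Set latches the current bus value into the register ("set" wire on).
func (r *Register) Set() { r.value = r.bus.Value }

// Enable drives the register's value onto the bus ("enable" wire on).
// Only one register should be enabled at a time, or their outputs collide.
func (r *Register) Enable() { r.bus.Value = r.value }

func main() {
	bus := &Bus{}
	r0 := &Register{bus: bus}
	r1 := &Register{bus: bus}

	bus.Value = 42
	r0.Set()      // R0 = 42
	bus.Value = 0 // clear the bus so the copy below really comes from R0

	// Copy R0 into R1 in one bus cycle: enable R0, then set R1.
	r0.Enable()
	r1.Set()
	fmt.Println(r1.value) // 42
}
```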

Diagram of all the components of Scott's CPU. Copyright 2009-2016 Siegbert Philbinger and John Clark Scott

The book takes you from humble logic gates to bits in memory and registers, and then keeps layering components until you end up with something like the diagram above. I highly recommend reading the book, even if you are already familiar with the concepts. Just not the Kindle version, because the diagrams are sometimes hard to zoom in on and make out on an e-reader screen. In my opinion, this has been a Kindle problem for years.
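The first of those layers is a memory bit: four NAND gates with a feedback loop, forming a gated latch with inputs i (data) and s (set) and output o. This is a minimal sketch of such a latch, not code from the repository. Go has no wires, so the sketch re-evaluates the gates until their outputs stop changing.

```go
package main

import "fmt"

func nand(a, b bool) bool { return !(a && b) }

// Bit is a gated latch made of four NAND gates whose outputs are a, b, c, o.
// While s is on, the output o follows input i; while s is off, o holds.
type Bit struct{ a, b, c, o bool }

// Update applies inputs i and s and re-evaluates the gates until the
// feedback loop between o and c settles.
func (m *Bit) Update(i, s bool) bool {
	for iter := 0; iter < 10; iter++ {
		prev := *m
		m.a = nand(i, s)
		m.b = nand(m.a, s)
		m.o = nand(m.a, m.c)
		m.c = nand(m.o, m.b)
		if *m == prev {
			break
		}
	}
	return m.o
}

func main() {
	var bit Bit
	fmt.Println(bit.Update(true, true))   // store 1: true
	fmt.Println(bit.Update(false, false)) // s off, input ignored: true
	fmt.Println(bit.Update(false, true))  // store 0: false
	fmt.Println(bit.Update(true, false))  // s off, still false
}
```

A byte is then just eight of these latches sharing one s wire.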

My computer is no different from Scott's version, except that I widened it to 16 bits to increase the amount of available memory. The glyphs for the ASCII table alone take up most of Scott's 8-bit machine, leaving very little room for useful code.
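The gain is easy to quantify, assuming the address width grows together with the word width: an n-bit address can select 2^n distinct memory locations.

```latex
\text{locations} = 2^{n}, \qquad 2^{8} = 256, \qquad 2^{16} = 65\,536 = 256 \cdot 2^{8}
```

So the 16-bit version has 256 times as many addressable locations, and each one holds a 16-bit word instead of a byte.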

My development process

In general, development followed this routine: read the text, study the diagrams, and then try to implement them in a general-purpose programming language, definitely without any specialized tools for designing integrated circuits. I wrote the simulator in Go, simply because I knew the language a little. Skeptics may say: “You blockhead! Couldn't you have learned VHDL or Verilog, or Logisim, or something?” But by then I had already written my bits, bytes, and logic gates and was in too deep. Maybe next time I'll learn those tools and realize how much time I wasted, but that's my problem.

In the grand scheme of things, a computer just passes around a bunch of Boolean values, so any language that handles Boolean algebra well will do.

Imposing a structure on these Boolean values is what lets us programmers derive meaning from them. Most importantly, it lets us decide which byte order the system will use and make sure that all components put data on the bus in the correct order.
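Here is why that decision matters. If the bus is modeled as a slice of bools, every component must agree on which index holds the least significant bit, because the same wires read in the opposite order give a different number. This is a minimal sketch, and the helper name is my own.

```go
package main

import "fmt"

// toWord interprets up to 16 bus wires as a number. With lsbFirst, bus[0]
// is the least significant bit; otherwise bus[0] is the most significant bit.
func toWord(bus []bool, lsbFirst bool) uint16 {
	var w uint16
	n := len(bus)
	for i, on := range bus {
		if !on {
			continue
		}
		pos := i // bit position in the resulting number
		if !lsbFirst {
			pos = n - 1 - i
		}
		w |= 1 << uint(pos)
	}
	return w
}

func main() {
	// Eight wires carrying the pattern written as 0000_0101 in MSB-first order.
	bus := []bool{false, false, false, false, false, true, false, true}
	fmt.Println(toWord(bus, false)) // 5: bus[0] read as the most significant bit
	fmt.Println(toWord(bus, true))  // 160: the same wires read LSB-first
}
```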

This was very hard to get right. Out of sheer bias, I chose a little-endian (reversed byte order) representation, but when testing the ALU I couldn't understand why the wrong numbers were coming out. My cat heard many, many unprintable words.
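The ALU's adder is a good place to catch such ordering bugs, because the carry has to ripple from the least significant bit upward. Below is a minimal sketch of a ripple-carry adder built from gate functions. It assumes, as a choice made for this example only, that index 0 of each slice is the least significant bit.

```go
package main

import "fmt"

func and(a, b bool) bool { return a && b }
func or(a, b bool) bool  { return a || b }
func xor(a, b bool) bool { return a != b }

// fullAdd adds two bits plus a carry-in, returning the sum bit and carry-out.
func fullAdd(a, b, cin bool) (sum, cout bool) {
	ab := xor(a, b)
	return xor(ab, cin), or(and(a, b), and(ab, cin))
}

// add chains full adders over equal-length inputs. Index 0 must be the least
// significant bit; otherwise the carry travels in the wrong direction.
func add(a, b []bool) (sum []bool, carry bool) {
	sum = make([]bool, len(a))
	for i := range a {
		sum[i], carry = fullAdd(a[i], b[i], carry)
	}
	return sum, carry
}

// bits converts a number to an LSB-first slice of the given width.
func bits(v uint, width int) []bool {
	out := make([]bool, width)
	for i := range out {
		out[i] = v&(1<<uint(i)) != 0
	}
	return out
}

// value converts an LSB-first slice back to a number.
func value(b []bool) uint {
	var v uint
	for i, on := range b {
		if on {
			v |= 1 << uint(i)
		}
	}
	return v
}

func main() {
	sum, carry := add(bits(100, 8), bits(27, 8))
	fmt.Println(value(sum), carry) // 127 false
	sum, carry = add(bits(200, 8), bits(100, 8))
	fmt.Println(value(sum), carry) // 44 true
}
```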

Development was slow: it took maybe a month or two of my free time. But when the processor first successfully executed an instruction, I was in seventh heaven.

Everything went smoothly until I got to I/O. The book proposed a system design with a simple keyboard and display interface for getting data into the machine and results out of it. Well, having come this far, there was no point in stopping halfway. I set myself the goal of typing on the keyboard and showing the letters on the display.

Peripherals

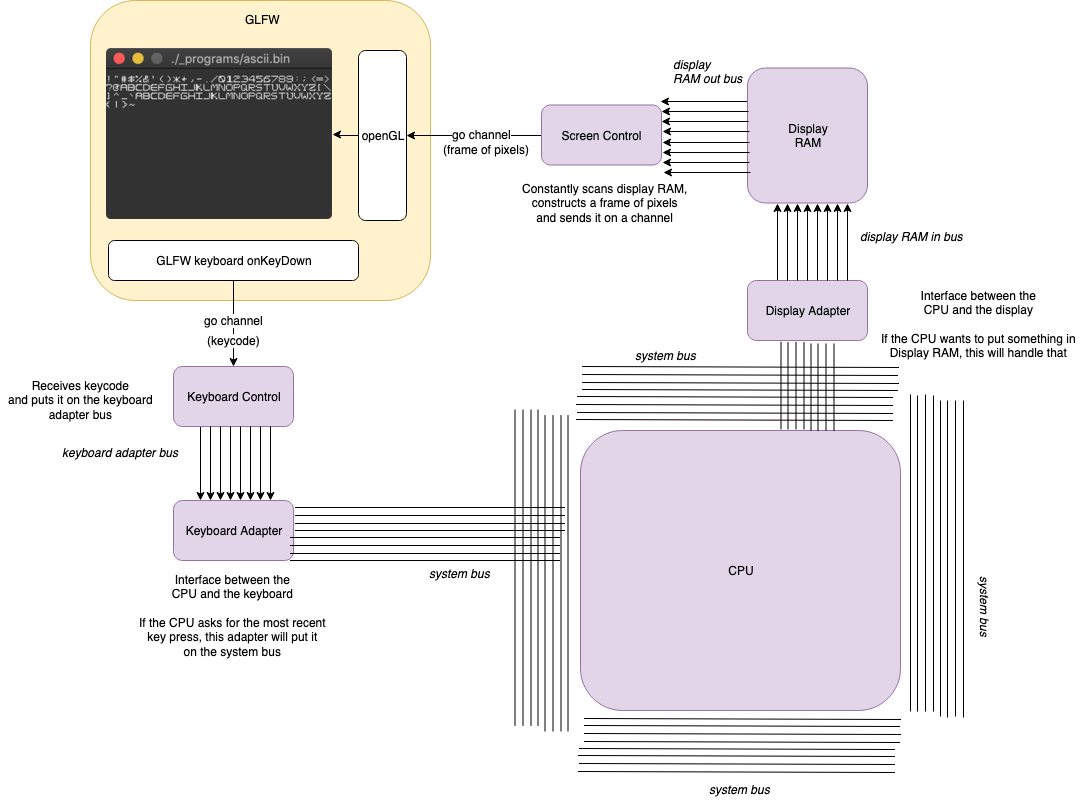

Peripheral devices use the adapter pattern as the hardware interface between the CPU and the outside world. As you can probably guess, this pattern is borrowed from software design.
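In software terms, the idea looks roughly like this. The CPU side knows only a small interface, and each adapter translates it for one particular device. This is a minimal sketch, and the interface and type names are hypothetical, not taken from the repository.

```go
package main

import "fmt"

// IOAdapter is what the CPU side sees: it can read a word from, or write a
// word to, a device without knowing what the device actually is.
type IOAdapter interface {
	Read() uint16
	Write(w uint16)
}

// KeyboardAdapter hands over the last key code and then clears it.
type KeyboardAdapter struct{ pending uint16 }

func (k *KeyboardAdapter) Read() uint16 {
	w := k.pending
	k.pending = 0
	return w
}

func (k *KeyboardAdapter) Write(uint16) {} // the keyboard ignores output

// DisplayAdapter collects written words as characters.
type DisplayAdapter struct{ text []rune }

func (d *DisplayAdapter) Read() uint16   { return 0 }
func (d *DisplayAdapter) Write(w uint16) { d.text = append(d.text, rune(w)) }

func main() {
	kb := &KeyboardAdapter{pending: 'H'}
	disp := &DisplayAdapter{}
	devices := []IOAdapter{kb, disp} // device 0: keyboard, device 1: display

	// Something like "IN from device 0" followed by "OUT to device 1".
	devices[1].Write(devices[0].Read())
	fmt.Println(string(disp.text)) // H
}
```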

How the I/O adapters connect to the GLFW window

With this separation, connecting the keyboard and display to a window running GLFW turned out to be quite easy. In fact, I just pulled most of the code out of my emulator and changed it a bit so that Go channels act as I/O signals.
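Below is a minimal sketch of wiring I/O through channels. In the real program the window side would sit on GLFW, but here plain goroutines stand in for the key callback and the renderer, so no GLFW API is used or implied. All function names are hypothetical.

```go
package main

import (
	"fmt"
	"strings"
)

// startFakeKeyboard stands in for the window side: it sends one key code per
// character on a channel, the way a key callback might, then closes it.
func startFakeKeyboard(keys string) <-chan byte {
	ch := make(chan byte)
	go func() {
		defer close(ch)
		for i := 0; i < len(keys); i++ {
			ch <- keys[i]
		}
	}()
	return ch
}

// startFakeDisplay stands in for the renderer: it consumes output bytes and
// delivers the finished text once the output channel is closed.
func startFakeDisplay() (chan<- byte, <-chan string) {
	out := make(chan byte)
	done := make(chan string, 1)
	go func() {
		var sb strings.Builder
		for c := range out {
			sb.WriteByte(c)
		}
		done <- sb.String()
	}()
	return out, done
}

// echo plays the role of the simulated machine: every key becomes output.
func echo(keys <-chan byte, out chan<- byte) {
	for k := range keys {
		out <- k
	}
	close(out)
}

func main() {
	out, done := startFakeDisplay()
	echo(startFakeKeyboard("hello"), out)
	fmt.Println(<-done) // hello
}
```

Because the channels are unbuffered, each key is handed over only when the machine side is ready for it, which loosely mirrors a signal being read off a wire.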

Running the computer

This was probably the hardest part, or at least the most cumbersome. Writing assembly with such a limited instruction set is difficult, and with my rough assembler it's even worse, because you have no one to blame but yourself.

The biggest problem was juggling four registers: keeping track of them, pulling data out of them, and temporarily storing that data in memory. Along the way, I remembered that the Gameboy processor has a stack pointer register that makes saving and restoring registers easy. Unfortunately, this computer has no such luxury, so I constantly had to move data to memory and back by hand.
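To make the difference concrete: without a stack pointer, each routine needs its own hand-picked scratch addresses for saving registers. With one, saving is a push and restoring is a pop. This is a minimal sketch with a hypothetical memory layout. The memory size, the addresses, and the downward-growing stack are all assumptions made for the example.

```go
package main

import "fmt"

// Machine models four registers and a small memory. SP is the stack pointer
// that Scott's machine lacks; here the stack grows downward from the top.
type Machine struct {
	R   [4]uint16
	Mem [256]uint16
	SP  int
}

// Spill and Unspill are the manual approach: copy a register to a fixed
// address chosen by hand, which every routine must keep distinct.
func (m *Machine) Spill(reg, addr int)   { m.Mem[addr] = m.R[reg] }
func (m *Machine) Unspill(reg, addr int) { m.R[reg] = m.Mem[addr] }

// Push and Pop need no hand-picked addresses: SP tracks the top of the stack.
func (m *Machine) Push(reg int) {
	m.SP--
	m.Mem[m.SP] = m.R[reg]
}

func (m *Machine) Pop(reg int) {
	m.R[reg] = m.Mem[m.SP]
	m.SP++
}

func main() {
	m := &Machine{SP: 256}
	m.R[0], m.R[1] = 7, 9

	// Save both registers, clobber them, then restore in reverse order.
	m.Push(0)
	m.Push(1)
	m.R[0], m.R[1] = 0, 0
	m.Pop(1)
	m.Pop(0)
	fmt.Println(m.R[0], m.R[1], m.SP) // 7 9 256

	// The manual version works too, but address 0x80 is now "taken".
	m.Spill(0, 0x80)
	m.R[0] = 0
	m.Unspill(0, 0x80)
	fmt.Println(m.R[0]) // 7
}
```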

I did decide to spend time on one thing: a CALL pseudo-instruction that calls a function and then returns to where it was called from. Without it, calls can only go one level deep. In addition, since the machine doesn't support interrupts, I had to write awful code that polls the keyboard state. The book discusses the steps needed to implement interrupts, but they seriously complicate the circuit.
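One common way calls get stuck at a single level on a stackless machine is that the return address lives in one fixed memory cell, so a nested call overwrites it. The sketch below shows that failure mode next to a stack of return addresses. It is a hypothetical model and does not describe how the CALL pseudo-instruction in my assembler actually works.

```go
package main

import "fmt"

// fixedSlotCPU stores the return address in one hard-wired memory cell,
// a hypothetical scheme for a machine with no stack.
type fixedSlotCPU struct{ retSlot int }

func (c *fixedSlotCPU) call(callSite int) { c.retSlot = callSite + 1 }
func (c *fixedSlotCPU) ret() int          { return c.retSlot }

// stackCPU keeps a stack of return addresses, so nested calls unwind correctly.
type stackCPU struct{ stack []int }

func (c *stackCPU) call(callSite int) { c.stack = append(c.stack, callSite+1) }

func (c *stackCPU) ret() int {
	top := c.stack[len(c.stack)-1]
	c.stack = c.stack[:len(c.stack)-1]
	return top
}

func main() {
	// main calls f from address 10; f calls g from address 20; then both return.
	f := &fixedSlotCPU{}
	f.call(10)
	f.call(20)
	fmt.Println(f.ret(), f.ret()) // 21 21: main's return address 11 was lost

	s := &stackCPU{}
	s.call(10)
	s.call(20)
	fmt.Println(s.ret(), s.ret()) // 21 11
}
```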

But enough whining: I still wrote four programs, and most of them share common code for font rendering, keyboard input, and so on. It's not exactly an operating system, but it does give a sense of what a simple OS does.

It wasn't easy. The hardest part of the text-writer program was correctly calculating when to wrap to a new line and what happens when you press the Enter key.
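Written down, the core of that calculation is small. This is a minimal sketch that assumes a hypothetical 40-column screen and ignores scrolling. The column count and the Enter key code are my assumptions, not values from the program.

```go
package main

import "fmt"

const (
	columns  = 40   // hypothetical screen width in characters
	keyEnter = '\n' // hypothetical key code for the Enter key
)

// Cursor tracks where the next glyph will be drawn.
type Cursor struct{ Row, Col int }

// Advance updates the cursor after a key press: Enter starts a new line,
// and any other character moves one column right, wrapping at the edge.
func (c *Cursor) Advance(key rune) {
	if key == keyEnter {
		c.Row++
		c.Col = 0
		return
	}
	c.Col++
	if c.Col == columns {
		c.Row++
		c.Col = 0
	}
}

func main() {
	var c Cursor
	for i := 0; i < 41; i++ { // one full line plus one character
		c.Advance('x')
	}
	fmt.Println(c.Row, c.Col) // 1 1
	c.Advance(keyEnter)
	fmt.Println(c.Row, c.Col) // 2 0
}
```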

```
main-getInput:
    CALL ROUTINE-io-pollKeyboard
    CALL ROUTINE-io-drawFontCharacter
    JMP main-getInput
```

The main loop of the text-writer program

I didn't bother implementing the backspace key or modifier keys. But I realized how much work goes into text editors and how tedious it is.

Conclusions

It was a fun and very useful project for me. In the thick of writing assembly, I almost forgot about the logic gates running underneath. I had climbed to the higher levels of abstraction.

Although this processor is very simple and a far cry from the CPU in my laptop, I think the project taught me a lot, in particular:

- How bits move along the bus between all components.

- How a simple ALU works.

- What a simple Fetch-Decode-Execute loop looks like.

- That a machine without a stack pointer register and the concept of a stack sucks.

- That a machine without interrupts sucks too.

- What an assembler is and what it does.

- How peripherals interact with a simple processor.

- How simple fonts work and how to draw them on the screen.

- What a simple operating system might look like.

So what next? The book says nobody has built computers like this since 1952. That means I have 67 years of material to catch up on, which will take me a while. I see that the x86 manual is 4,800 pages: plenty of pleasant, light bedtime reading.

Maybe I'll dabble a little in operating systems and C, kill an evening with a PiDP-11 kit and a soldering iron, and then give the whole thing up. I don't know, we'll see.

Seriously, I'm thinking of exploring RISC architectures, perhaps RISC-V. It's probably best to start with the early RISC processors to understand where they came from. Modern processors have many more features, such as caches, and I want to understand them. There is a lot to learn.

Will this knowledge be useful in my day job? Possibly, though unlikely. Either way, I enjoy it, so it doesn't matter. Thanks for reading!

Source: https://habr.com/ru/post/453158/
