Making friends of CI, unit tests, and the database

This article is about testing database interaction in CI. I have seen several solutions built on Docker and Testcontainers, but I have my own approach and want to share it.

My last Java project was closely tied to the database: long-running processing with retries, multithreading, and selects with locking. The task required fixing a couple of tricky SQL queries. I was already in the habit of covering my code with tests, but until then all the SQL had boiled down to primitive queries that could be run against an in-memory H2 database. This time it was hardcore Oracle.

I could have tested the simple SQL by hand and skipped the automated tests, making excuses to myself: “I’m not some kind of bungler who makes mistakes in simple code.” In reality, errors appear less often precisely because the tests exist. I could also have shifted the responsibility onto the testers: if I made a mistake somewhere, they would find it.

According to unit-testing ideology, tests exist only to check individual modules, and anything a module uses from the outside should be replaced with a stub. In practice, when the stub becomes too hard to implement, the module is simply left untested. Speed and modularity matter, but it matters more not to leave code unverified, even if that does not show up in the coverage metrics. So people start treating as a unit not a single class but a connected group of classes together with their configuration. The main thing is not to stretch the concept of a unit all the way to a whole cluster.


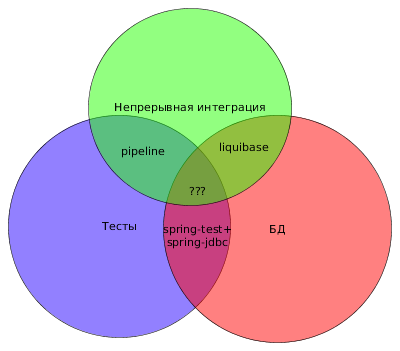

For local testing each developer has their own schema, but the tests run on Jenkins, which has no database connection yet. CI obviously needs a separate schema. Running the tests against an empty schema is not quite right, though: creating the database structure in every test costs time and invites divergence between the structure used in tests and the one in production. Running against a previously prepared database would give me a heap of problems with branches. Instead, you can prepare the database before running all the tests using Liquibase: first wipe everything down to zero, then update to the latest version.

People often forget to finish the rollback scripts, and then the databases on the test environments have to be cleaned by hand. Let's test rollback too! The algorithm is as follows:

- drop everything down to zero (for the purity of the experiment)

- update to the latest version

- roll back 1-2 versions

- update to the latest version again (the tests must run against the new database structure, and this also checks that the rollback did not forget to delete anything that would prevent the update from being re-applied)
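The sequence above can be sketched in plain Java as an ordered list of Liquibase commands. This is only an illustration of the ordering: `MigrationPlan`, `steps`, and the `rollbackTestEnabled` flag are hypothetical names, and `version_1` is the tag value taken from the sample settings at the end of the article.

```java
import java.util.ArrayList;
import java.util.List;

class MigrationPlan {
    /** Returns the Liquibase commands to run, in order, before the tests start. */
    static List<String> steps(boolean rollbackTestEnabled, String rollbackTag) {
        List<String> steps = new ArrayList<>();
        steps.add("dropAll");                      // start from an empty schema
        steps.add("update");                       // apply every changeset
        if (rollbackTestEnabled) {
            steps.add("rollback " + rollbackTag);  // undo the newest changesets
            steps.add("update");                   // re-apply them; breaks if rollback left debris
        }
        return steps;
    }

    public static void main(String[] args) {
        System.out.println(steps(true, "version_1"));   // [dropAll, update, rollback version_1, update]
        System.out.println(steps(false, "version_1"));  // [dropAll, update]
    }
}
```

The second `update` is the actual check: if a rollback script forgot to drop an object, re-applying the changeset that creates it will fail.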

My fellow developers do not want rollback testing to run at the start of every test run, so I add a switch:

    project.ext.doRollbackTest = {
        Boolean.parseBoolean(localConfig['test.rollback.enabled'] as String)
    }

While we are practicing on toy examples, everything is fine. But a fast-moving project makes its own adjustments: two pull requests, simultaneous builds. One instance is testing something while the second knocks the database out from under it. This is solved by simply prohibiting parallel builds.

And then another failure: I decided to run the tests under the Jenkins account, because under my personal account everything passed while the pull request failed for unclear reasons. Cue the fervent speeches about DevOps being a culture and the use of a technical account for personal purposes being unacceptable.

Ten pull requests have accumulated. All of them are built, reviewed, and ready to merge. The first one goes in, the main branch changes, and the rest join the queue to be rebuilt. You could merge them one at a time as they finish, but there are priorities: some pull requests are more urgent, some less, and some hang because of errors in the code. In short, the builds have to be made parallel again.

The build should be simple, consist of simple steps, and be clear even to yesterday's student. In 99% of cases it is no problem that builds for pull requests and releases run sequentially rather than in parallel. If no more than 1-2 PRs pile up in review, a ban on simultaneous builds is enough.

For parallel runs, however, we need databases or schemas to which each running test build has exclusive access.

Option one: allocate dynamically. Creating a schema in a database is fast. If you have a cloud with an API, you can allocate a whole database there.

If you do not work out how old databases get removed, you will quickly run out of disk space when tests crash and “forget” to free their resources. That is “when”, not “if”.
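One way to picture the cleanup is a registry that remembers when each schema was created and periodically sweeps the ones that have outlived any plausible build. The sketch below is plain Java with no database access; `SchemaRegistry`, its methods, and the 30-minute limit are assumptions made for illustration, and a real sweep would issue a `DROP` for each name it returns.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.Iterator;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

class SchemaRegistry {
    private final Map<String, Instant> createdAt = new LinkedHashMap<>();

    /** Called right after a schema is created for a build. */
    void register(String schema, Instant now) {
        createdAt.put(schema, now);
    }

    /** Called by a build that finishes normally and drops its own schema. */
    void release(String schema) {
        createdAt.remove(schema);
    }

    /** Forgets and returns every schema older than maxAge; the caller would drop them. */
    List<String> sweep(Instant now, Duration maxAge) {
        List<String> stale = new ArrayList<>();
        Iterator<Map.Entry<String, Instant>> it = createdAt.entrySet().iterator();
        while (it.hasNext()) {
            Map.Entry<String, Instant> entry = it.next();
            if (Duration.between(entry.getValue(), now).compareTo(maxAge) > 0) {
                stale.add(entry.getKey());
                it.remove();
            }
        }
        return stale;
    }

    public static void main(String[] args) {
        SchemaRegistry registry = new SchemaRegistry();
        Instant start = Instant.parse("2019-05-01T10:00:00Z");
        registry.register("CI_1", start);                                // its build crashed
        registry.register("CI_2", start.plus(Duration.ofMinutes(50)));   // still running
        // An hour after start, only CI_1 has exceeded the 30-minute limit.
        System.out.println(registry.sweep(start.plus(Duration.ofMinutes(60)), Duration.ofMinutes(30)));
    }
}
```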

Option two: a pool of databases or schemas with a separate management service. It exposes an API along the lines of “give me a database for a period of time” and “take the database back before the time is up”. Whether it hands out a dedicated server with a database or just a schema does not matter. The main thing is that the resource cannot be lost for good.

Option three: a self-regulating pool of databases or schemas. It needs a shared resource for exchanging information about locks and for storing the pool itself.

I settled on the last option, since it is the easiest for me to bolt on and needs hardly any maintenance. The logic is as follows: several schemas (10, for example) are created, and all the information needed to connect to them is placed on the shared resource. Each test build sets a lock mark before it starts and removes it when it finishes. If the build crashes before removing the mark, the schema is considered free again once a timeout expires.
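The locking logic just described can be modelled in memory to make the timeout rule concrete. This is a hedged sketch, not the build code: `SchemaPool`, `Slot`, `lock`, and `unlock` are hypothetical names, the shared resource is replaced by a list, and the sketch simply takes the first eligible slot.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

class SchemaPool {
    /** One entry of the shared lock table. */
    private static final class Slot {
        final int id;
        String guid;        // null while the schema is free
        Instant processed;  // time of the last lock, null if never locked
        Slot(int id) { this.id = id; }
    }

    private final List<Slot> slots = new ArrayList<>();
    private final Duration timeout;

    SchemaPool(int size, Duration timeout) {
        for (int i = 0; i < size; i++) {
            slots.add(new Slot(i));
        }
        this.timeout = timeout;
    }

    /** Marks the first free or timed-out schema as taken; empty if none is available. */
    synchronized Optional<Integer> lock(String guid, Instant now) {
        for (Slot slot : slots) {
            boolean free = slot.guid == null;
            boolean timedOut = slot.processed != null
                    && slot.processed.isBefore(now.minus(timeout));
            if (free || timedOut) {
                slot.guid = guid;
                slot.processed = now;
                return Optional.of(slot.id);
            }
        }
        return Optional.empty();
    }

    /** Removes the mark so the schema can be handed out again. */
    synchronized void unlock(String guid) {
        for (Slot slot : slots) {
            if (guid.equals(slot.guid)) {
                slot.guid = null;
            }
        }
    }

    public static void main(String[] args) {
        SchemaPool pool = new SchemaPool(2, Duration.ofMinutes(15));
        Instant start = Instant.parse("2019-05-01T10:00:00Z");
        System.out.println(pool.lock("build-a", start));  // Optional[0]
        System.out.println(pool.lock("build-b", start));  // Optional[1]
        System.out.println(pool.lock("build-c", start));  // Optional.empty
        // build-a crashed without unlocking; 16 minutes later its schema is reusable.
        System.out.println(pool.lock("build-c", start.plus(Duration.ofMinutes(16))));  // Optional[0]
    }
}
```

The timeout has to be longer than the slowest healthy build; otherwise a schema can be handed to a second build while the first is still using it.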

Reading settings:

    project.ext.localConfig = new Properties()
    localConfig.load(file("${rootDir}/local.properties").newReader())

Working with SQL from Gradle scripts requires loading the driver:

    configurations {
        driver
    }

    dependencies {
        driver group: "oracle", name: "ojdbc6", version: "11.+"
    }

    task initDriver {
        doLast {
            // Add the JDBC driver jars to the class loader that groovy.sql.Sql uses
            ClassLoader loader = GroovyObject.class.classLoader
            configurations.driver.each { File file ->
                loader.addURL(file.toURI().toURL())
            }
        }
    }

Connection:

    import groovy.sql.Sql

    project.ext.createSqlInstance = {
        return Sql.newInstance(
            url: localConfig["pool.db.url"],
            user: localConfig["pool.db.username"],
            password: localConfig["pool.db.password"],
            driver: localConfig["pool.db.driverClass"])
    }

Information can be exchanged through a database table. Initialization of the reference table (it should run once; after that the table lives until the end of time):

    task initDbPool {
        dependsOn initDriver
        doLast {
            Integer poolSize = 10
            Sql sql = createSqlInstance() as Sql
            String tableName = localConfig["pool.db.referenceTable"]
            String baseName = localConfig["pool.db.baseName"]
            String basePass = localConfig["pool.db.basePass"]
            String token = "{id}"

            // Refuse to run if the reference table already exists
            List tableExists = sql.rows("select table_name from all_tables where table_name=?", [tableName])
            assert tableExists.isEmpty()

            sql.execute("""
                CREATE TABLE ${tableName} (
                    ID NUMBER(2) NOT NULL PRIMARY KEY,
                    METADATA VARCHAR2(200) NOT NULL,
                    PROCESSED TIMESTAMP NULL,
                    GUID VARCHAR2(36) NULL)
            """, [])

            // Create one user (schema) per pool slot and record how to connect to it
            for (Integer i = 0 ; i < poolSize ; i++) {
                String username = baseName.replace(token, i.toString())
                String password = basePass.replace(token, i.toString())
                sql.execute("""
                    CREATE USER ${username}
                    IDENTIFIED BY "${password}"
                    DEFAULT TABLESPACE USERS
                    TEMPORARY TABLESPACE TEMP
                    PROFILE DEFAULT
                    QUOTA UNLIMITED ON USERS
                """, [])
                sql.execute("grant connect to ${username}", [])
                sql.execute("grant create sequence to ${username}", [])
                sql.execute("grant create session to ${username}", [])
                sql.execute("grant create table to ${username}", [])

                String metadata = JsonOutput.toJson([
                    "app.db.driverClass": localConfig["pool.db.driverClass"],
                    "app.db.url": localConfig["pool.db.url"],
                    "app.db.username": username,
                    "app.db.password": password
                ])
                sql.execute("""
                    INSERT INTO ${tableName} (id, metadata) values (?, ?)
                """, [i, metadata])
            }
        }
    }

Developers have their own schemas for debugging and building, so it must be possible to turn the pool off:

    project.ext.isCiBuild = {
        Boolean.parseBoolean(localConfig['pool.db.enabled'] as String)
    }

Acquiring and releasing a database:

    task lockDb {
        dependsOn initDriver
        onlyIf isCiBuild
        doLast {
            project.ext.lockUid = UUID.randomUUID().toString()
            String tableName = localConfig["pool.db.referenceTable"]
            Sql sql = createSqlInstance() as Sql

            // Mark one schema that is either free or whose lock is older than 15 minutes
            sql.executeUpdate("""UPDATE ${tableName} SET GUID = ?, PROCESSED = SYSDATE
                WHERE ID IN (
                    SELECT ID FROM (
                        SELECT ID, ROW_NUMBER() OVER (ORDER BY PROCESSED) AS RN
                        FROM ${tableName}
                        WHERE GUID IS NULL OR PROCESSED < (SYSDATE - NUMTODSINTERVAL(?, 'MINUTE'))
                    ) WHERE RN = 1
                )
            """, [lockUid, 15])

            def meta = sql.firstRow("SELECT METADATA FROM ${tableName} WHERE GUID = ?", [lockUid])
            assert meta != null, "No free databases in pool"

            // Overwrite the app.db.* settings with those of the locked schema
            def slurper = new JsonSlurper()
            Map metadata = slurper.parseText(meta["METADATA"] as String) as Map
            localConfig.putAll(metadata)
            logger.info("Database locked, {}", metadata)
        }
    }

    task unlockDb {
        dependsOn lockDb // init lockUid
        onlyIf isCiBuild
        doLast {
            try {
                String tableName = localConfig["pool.db.referenceTable"]
                Sql sql = createSqlInstance() as Sql
                sql.executeUpdate("UPDATE ${tableName} SET GUID = NULL WHERE GUID = ?", [lockUid])
                logger.info("Database unlocked")
            } catch (e) {
                // A failed unlock must not fail the build; the timeout will free the schema
                logger.error("Failed to unlock database", e)
            }
        }
    }

If you build twice in a row, different schemas may be allocated, and the property files produced by the previous build would still contain the old substituted values. On CI the resource-processing tasks are therefore never treated as up to date. For local runs the settings are static.

    configure([processResources, processTestResources]) { Task t ->
        if (project.ext.isCiBuild()) {
            t.outputs.upToDateWhen { false }
        }
        t.filesMatching('**/*.properties') {
            filter(ReplaceTokens, tokens: localConfig, beginToken: '${', endToken: '}')
        }
    }

Rollback test tasks:

    task restoreAfterRollbackTest(type: LiquibaseTask) {
        command = 'update'
    }

    task rollbackTest(type: LiquibaseTask) {
        dependsOn lockDb
        command = 'rollback'
        requiresValue = true
        doFirst {
            project.ext.liquibaseCommandValue = localConfig['test.rollback.tag']
        }
        doLast {
            project.ext.liquibaseCommandValue = null
        }
    }

And configure the execution order:

    configure([project]) {
        tasks.withType(LiquibaseTask.class) { LiquibaseTask t ->
            logger.info("Liquibase task {} must run after {}", t.getName(), configLiquibase.getName())
            (t as Task).dependsOn configLiquibase
            if (isCiBuild()) {
                logger.info("Liquibase task {} must run after {}", t.getName(), lockDb.getName())
                (t as Task).dependsOn lockDb
                (t as Task).finalizedBy unlockDb
            }
        }

        // For CI we run:
        // 1. Clean the database down to zero (dropAll)
        // 2. Update to the version we will roll back from (update)
        // 3. Roll back to the tag (rollback tag)
        // 4. Update to the latest version again (update)
        // 5. Run the tests
        if (doRollbackTest()) {
            def setTaskOrdering = { List<Task> lst ->
                for (int i = 0; i < lst.size() - 1; i++) {
                    logger.info("Task {} must run after {}", lst[i + 1].getName(), lst[i].getName())
                    lst[i + 1].dependsOn lst[i]
                }
            }
            setTaskOrdering([
                lockDb,
                configLiquibase,
                dropAll,
                update,
                rollbackTest,
                restoreAfterRollbackTest,
                processTestResources,
                test,
            ])
            lockDb.finalizedBy unlockDb
            test.finalizedBy unlockDb
        }
    }

Schema selection and rollback testing can also be moved inside the tests themselves. There are ways to run code before and after all the tests: in JUnit 5 this is BeforeAllCallback, in TestNG it is BeforeSuite.
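The shape of such a before/after hook can be sketched in plain Java, kept free of any test framework so that it stays self-contained. `SuiteLease` and `runWithLease` are hypothetical names; in a real JUnit 5 extension the "lock" step would live in a `BeforeAllCallback` and the "unlock" step in the matching `AfterAllCallback`, which is an assumption about how one might wire it rather than something the build above does.

```java
import java.util.ArrayList;
import java.util.List;

class SuiteLease {
    /**
     * Runs the suite between a lock and an unlock step and returns what happened.
     * The unlock step runs even when the suite throws, like finalizedBy in Gradle.
     */
    static List<String> runWithLease(Runnable suite) {
        List<String> log = new ArrayList<>();
        log.add("lock");                 // acquire a schema before any test runs
        try {
            suite.run();
            log.add("tests passed");
        } catch (RuntimeException | AssertionError e) {
            log.add("tests failed");
        } finally {
            log.add("unlock");           // always give the schema back
        }
        return log;
    }

    public static void main(String[] args) {
        System.out.println(runWithLease(() -> { }));
        System.out.println(runWithLease(() -> { throw new AssertionError("broken query"); }));
    }
}
```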

The answer to the question “why should a Java programmer test SQL” is that any code should be tested. There are exceptions, and sometimes testing a particular piece of code is simply not rational at a given moment.

I would like to know how other programmers solve the problem of testing database interaction. Have containers come to every home, or has integration testing been handed over to the testers?

Full listing

    import groovy.json.JsonOutput
    import groovy.json.JsonSlurper
    import groovy.sql.Sql
    import org.apache.tools.ant.filters.ReplaceTokens
    import org.liquibase.gradle.LiquibaseTask

    plugins {
        id 'java'
        id 'org.liquibase.gradle' version '2.0.1'
    }

    configurations {
        driver
    }

    repositories {
        jcenter()
        mavenCentral()
        maven {
            url = "http://www.datanucleus.org/downloads/maven2/"
        }
    }

    dependencies {
        implementation 'com.google.guava:guava:27.0.1-jre'
        implementation 'org.springframework:spring-core:5.1.7.RELEASE'
        implementation 'org.springframework:spring-context:5.1.7.RELEASE'
        implementation 'org.springframework:spring-jdbc:5.1.7.RELEASE'

        testImplementation 'junit:junit:4.12'
        testImplementation 'org.springframework:spring-test:5.1.7.RELEASE'
        testRuntime 'oracle:ojdbc6:11.+'

        liquibaseRuntime 'org.liquibase:liquibase-core:3.6.1'
        liquibaseRuntime 'oracle:ojdbc6:11.+'
        liquibaseRuntime 'org.yaml:snakeyaml:1.24'

        driver group: "oracle", name: "ojdbc6", version: "11.+"
    }

    project.ext.localConfig = new Properties()
    localConfig.load(file("${rootDir}/local.properties").newReader())

    project.ext.isCiBuild = {
        Boolean.parseBoolean(localConfig['pool.db.enabled'] as String)
    }

    project.ext.doRollbackTest = {
        Boolean.parseBoolean(localConfig['test.rollback.enabled'] as String)
    }

    task configLiquibase {
        doLast {
            liquibase {
                activities {
                    testdb {
                        changeLogFile 'changelog.yaml'
                        url localConfig['app.db.url']
                        driver localConfig['app.db.driverClass']
                        username localConfig['app.db.username']
                        password localConfig['app.db.password']
                        logLevel 'debug'
                        classpath "${project.projectDir}/db"
                        contexts 'main'
                    }
                    runList = 'testdb'
                }
            }
        }
    }

    task initDriver {
        doLast {
            // Add the JDBC driver jars to the class loader that groovy.sql.Sql uses
            ClassLoader loader = GroovyObject.class.classLoader
            configurations.driver.each { File file ->
                loader.addURL(file.toURI().toURL())
            }
        }
    }

    project.ext.createSqlInstance = {
        return Sql.newInstance(
            url: localConfig["pool.db.url"],
            user: localConfig["pool.db.username"],
            password: localConfig["pool.db.password"],
            driver: localConfig["pool.db.driverClass"])
    }

    task initDbPool {
        dependsOn initDriver
        doLast {
            Integer poolSize = 10
            Sql sql = createSqlInstance() as Sql
            String tableName = localConfig["pool.db.referenceTable"]
            String baseName = localConfig["pool.db.baseName"]
            String basePass = localConfig["pool.db.basePass"]
            String token = "{id}"

            // Refuse to run if the reference table already exists
            List tableExists = sql.rows("select table_name from all_tables where table_name=?", [tableName])
            assert tableExists.isEmpty()

            sql.execute("""
                CREATE TABLE ${tableName} (
                    ID NUMBER(2) NOT NULL PRIMARY KEY,
                    METADATA VARCHAR2(200) NOT NULL,
                    PROCESSED TIMESTAMP NULL,
                    GUID VARCHAR2(36) NULL)
            """, [])

            // Create one user (schema) per pool slot and record how to connect to it
            for (Integer i = 0 ; i < poolSize ; i++) {
                String username = baseName.replace(token, i.toString())
                String password = basePass.replace(token, i.toString())
                sql.execute("""
                    CREATE USER ${username}
                    IDENTIFIED BY "${password}"
                    DEFAULT TABLESPACE USERS
                    TEMPORARY TABLESPACE TEMP
                    PROFILE DEFAULT
                    QUOTA UNLIMITED ON USERS
                """, [])
                sql.execute("grant connect to ${username}", [])
                sql.execute("grant create sequence to ${username}", [])
                sql.execute("grant create session to ${username}", [])
                sql.execute("grant create table to ${username}", [])

                String metadata = JsonOutput.toJson([
                    "app.db.driverClass": localConfig["pool.db.driverClass"],
                    "app.db.url": localConfig["pool.db.url"],
                    "app.db.username": username,
                    "app.db.password": password
                ])
                sql.execute("""
                    INSERT INTO ${tableName} (id, metadata) values (?, ?)
                """, [i, metadata])
            }
        }
    }

    task lockDb {
        dependsOn initDriver
        onlyIf isCiBuild
        doLast {
            project.ext.lockUid = UUID.randomUUID().toString()
            String tableName = localConfig["pool.db.referenceTable"]
            Sql sql = createSqlInstance() as Sql

            // Mark one schema that is either free or whose lock is older than 15 minutes
            sql.executeUpdate("""UPDATE ${tableName} SET GUID = ?, PROCESSED = SYSDATE
                WHERE ID IN (
                    SELECT ID FROM (
                        SELECT ID, ROW_NUMBER() OVER (ORDER BY PROCESSED) AS RN
                        FROM ${tableName}
                        WHERE GUID IS NULL OR PROCESSED < (SYSDATE - NUMTODSINTERVAL(?, 'MINUTE'))
                    ) WHERE RN = 1
                )
            """, [lockUid, 15])

            def meta = sql.firstRow("SELECT METADATA FROM ${tableName} WHERE GUID = ?", [lockUid])
            assert meta != null, "No free databases in pool"

            // Overwrite the app.db.* settings with those of the locked schema
            def slurper = new JsonSlurper()
            Map metadata = slurper.parseText(meta["METADATA"] as String) as Map
            localConfig.putAll(metadata)
            logger.info("Database locked, {}", metadata)
        }
    }

    task unlockDb {
        dependsOn lockDb // init lockUid
        onlyIf isCiBuild
        doLast {
            try {
                String tableName = localConfig["pool.db.referenceTable"]
                Sql sql = createSqlInstance() as Sql
                sql.executeUpdate("UPDATE ${tableName} SET GUID = NULL WHERE GUID = ?", [lockUid])
                logger.info("Database unlocked")
            } catch (e) {
                // A failed unlock must not fail the build; the timeout will free the schema
                logger.error("Failed to unlock database", e)
            }
        }
    }

    configure([processResources, processTestResources]) { Task t ->
        if (project.ext.isCiBuild()) {
            t.outputs.upToDateWhen { false }
        }
        t.filesMatching('**/*.properties') {
            filter(ReplaceTokens, tokens: localConfig, beginToken: '${', endToken: '}')
        }
    }

    task restoreAfterRollbackTest(type: LiquibaseTask) {
        command = 'update'
    }

    task rollbackTest(type: LiquibaseTask) {
        dependsOn lockDb
        command = 'rollback'
        requiresValue = true
        doFirst {
            project.ext.liquibaseCommandValue = localConfig['test.rollback.tag']
        }
        doLast {
            project.ext.liquibaseCommandValue = null
        }
    }

    configure([project]) {
        tasks.withType(LiquibaseTask.class) { LiquibaseTask t ->
            logger.info("Liquibase task {} must run after {}", t.getName(), configLiquibase.getName())
            (t as Task).dependsOn configLiquibase
            if (isCiBuild()) {
                logger.info("Liquibase task {} must run after {}", t.getName(), lockDb.getName())
                (t as Task).dependsOn lockDb
                (t as Task).finalizedBy unlockDb
            }
        }

        // For CI we run:
        // 1. Clean the database down to zero (dropAll)
        // 2. Update to the version we will roll back from (update)
        // 3. Roll back to the tag (rollback tag)
        // 4. Update to the latest version again (update)
        // 5. Run the tests
        if (doRollbackTest()) {
            def setTaskOrdering = { List<Task> lst ->
                for (int i = 0; i < lst.size() - 1; i++) {
                    logger.info("Task {} must run after {}", lst[i + 1].getName(), lst[i].getName())
                    lst[i + 1].dependsOn lst[i]
                }
            }
            setTaskOrdering([
                lockDb,
                configLiquibase,
                dropAll,
                update,
                rollbackTest,
                restoreAfterRollbackTest,
                processTestResources,
                test,
            ])
            lockDb.finalizedBy unlockDb
            test.finalizedBy unlockDb
        }
    }

local.properties:

    pool.db.enabled=false
    test.rollback.enabled=true
    pool.db.driverClass=oracle.jdbc.driver.OracleDriver
    pool.db.url=jdbc:oracle:thin:@localhost:1527:ORCLCDB
    pool.db.username=SYSTEM
    pool.db.password=Oradoc_db1
    pool.db.referenceTable=c##test_user1.REF_TABLE
    pool.db.baseName=C##CI_SCHEMA_{id}
    pool.db.basePass=CI_SCHEMA_{id}_PASS
    app.db.driverClass=
    app.db.url=
    app.db.username=
    app.db.password=
    test.rollback.tag=version_1

Source: https://habr.com/ru/post/452722/
