Popular misconceptions about the radiation resistance of microcircuits

Roughly every second thread on Habr about astronautics or electronics ends up touching on radiation resistance. News about the Russian space industry keeps returning to import substitution of radiation-hardened components, yet at the same time Elon Musk uses cheap ordinary chips and is proud of it. The Israelis used a radiation-hardened processor in Beresheet and are proud of that too. And the Russian microelectronics industry lives, for the most part, on state orders with the corresponding requirements. Watching the regular disputes about how satellites should be built shows that the participants are usually poorly prepared, and their arguments are weighed down by stereotypes, facts overheard by chance, and knowledge that is taken out of context or has been obsolete for years. I decided I no longer had the strength to keep reading this, so, dear armchair analysts, settle back on your couches, and I will begin a small (actually, big) story about the most popular misconceptions about the radiation resistance of integrated circuits.

Figure 1. The obligatory pretty picture of cosmic radiation and the fragile Earth.

The most popular claims about radiation resistance used in space-related disputes look like this:

As you can see, some of these theses directly contradict each other - which is regularly the subject of controversy or the reason for far-reaching wrong conclusions.


The conversation has to start with an important disclaimer: radiation resistance is not the center of the universe, nor the only quality a chip needs to be suitable for space or another hostile environment. It is only one requirement in a long list that includes reliability, an extended temperature range, resistance to electrostatic discharge, and vibration resistance - plus trustworthy confirmation of all of these parameters, that is, long and expensive certification. Anything that can stop the chip from working over its entire required service life matters, and most applications of radiation-hardened chips rule out repair or replacement. On the other hand, if one of the parameters falls short, the designer of the end product can often work around the limitation: put the chip most sensitive to dose behind a thick wall, monitor the supply current of a chip vulnerable to the thyristor effect (latch-up) and reset it when necessary, or thermostat a chip with a narrow temperature range. But sometimes no workaround exists, and the only way to solve the problem is to order a new radiation-hardened ASIC.

It is also useful to remember that developers of special-purpose systems are people just like any other developers. Many of them also like to write code full of kludges for yesterday's deadline and want more powerful hardware so that it actually runs; some would use an Arduino if it were properly certified. And, of course, the people who set tasks for developers of special-purpose systems and of the chips for them are rarely shy about their requirements, whether for reliability or for performance. So modern process nodes are very much in demand on satellites: people want large amounts of DRAM, multi-core processors, and the most modern FPGAs. As mentioned above, the consequences of poor radiation resistance and other potential problems can be at least partially worked around, so what holds developers back from using all this splendor is less the commercial status of the chips than the lack of data on what exactly needs to be worked around.

The concepts of "radiation resistance" and "radiation-resistant chip" are gross simplifications. In fact, there are many different sources of ionizing radiation, and they can affect electronic devices in different ways. Different applications therefore need resistance to different sets of factors and different exposure levels, so a “resistant” chip designed for low Earth orbit is under no obligation to work properly while clearing the rubble at Chernobyl.

Ionizing radiation is so called because the energy released in the bulk of a material as incoming particles slow down ionizes that material. Each material has its own energy required for ionization and the creation of an electron-hole pair: 3.6 eV for silicon, 17 eV for its oxide, and 4.8 eV for gallium arsenide. An incoming particle may also, instead of ionizing an atom, “move” it from its proper place in the crystal lattice (in silicon this requires transferring 21 eV to the atom). Electron-hole pairs created in a material can affect its electrical and physical properties, and the behavior of an electrical circuit, in different ways. Radiation effects can be divided into four large groups: total absorbed dose effects, dose rate effects, effects caused by single particles, and displacement effects. This division is somewhat arbitrary: for example, irradiation with a flux of heavy ions, which cause single-event effects, also adds to the total absorbed dose.
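
To make the pair-creation energies above more tangible, here is a minimal Python sketch that turns a deposited energy into a number of electron-hole pairs and the corresponding charge. The per-pair energies are the ones quoted in the text; the function names and the 1 MeV example are illustrative assumptions, and the model ignores recombination entirely.

```python
# Mean energy per electron-hole pair in eV, as quoted in the text.
PAIR_ENERGY_EV = {"Si": 3.6, "SiO2": 17.0, "GaAs": 4.8}
ELEMENTARY_CHARGE_C = 1.602176634e-19


def pairs_created(deposited_energy_ev, material="Si"):
    """Number of electron-hole pairs for a given deposited energy (no recombination)."""
    return deposited_energy_ev / PAIR_ENERGY_EV[material]


def charge_fc(deposited_energy_ev, material="Si"):
    """Charge of one carrier type, in femtocoulombs, for the same deposited energy."""
    return pairs_created(deposited_energy_ev, material) * ELEMENTARY_CHARGE_C * 1e15


if __name__ == "__main__":
    # Hypothetical example: 1 MeV deposited in each material.
    for material in PAIR_ENERGY_EV:
        print(material, round(pairs_created(1e6, material)), "pairs,",
              round(charge_fc(1e6, material), 1), "fC")
```

The same deposited energy produces almost five times fewer pairs in the oxide than in silicon, simply because each pair costs more energy there.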

Dose Effects

The total absorbed dose is measured in rads, with an indication of the absorbing material. 1 rad = 0.01 J/kg, that is, the amount of energy released per unit mass of the material. A less commonly used unit is the gray (Gy), equal to 100 rad (or 1 J/kg). It is important to understand that, for the same number of ionizing particles emitted by a source (that is, for the same exposure dose), the absorbed dose differs from material to material. In silicon chips, the material of interest is silicon oxide, because it is the effect on the oxide, not on the silicon, that mainly changes the electrical characteristics of the circuit: hole mobility in SiO2 at normal temperature is so low that holes accumulate in the oxide, creating a built-in positive charge. Typical dose tolerance levels of commercial chips are in the range of 5-100 krad (Si); the radiation resistance levels that buyers ask for start at 30 krad (Si) and end somewhere around 1 Grad (Si), depending on the purpose of the chip. The lethal dose for humans is about 6 Gy.
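
The unit definitions above reduce to simple arithmetic, sketched below in Python. The function names and the 1 mg mass are illustrative assumptions; the conversion factors are the ones given in the text (1 rad = 0.01 J/kg, 1 Gy = 100 rad).

```python
RAD_PER_GRAY = 100.0  # 1 Gy = 100 rad = 1 J/kg


def rad_to_gray(dose_rad):
    """Convert an absorbed dose from rad to gray."""
    return dose_rad / RAD_PER_GRAY


def gray_to_rad(dose_gy):
    """Convert an absorbed dose from gray to rad."""
    return dose_gy * RAD_PER_GRAY


def deposited_energy_joules(dose_rad, mass_kg):
    """Energy absorbed by a given mass of material at a given dose."""
    return rad_to_gray(dose_rad) * mass_kg


if __name__ == "__main__":
    # 100 krad, the upper end of the commercial range quoted in the text.
    print(rad_to_gray(100_000), "Gy")
    # The lethal human dose quoted in the text, expressed in rad.
    print(gray_to_rad(6), "rad")
    # Hypothetical 1 mg of material receiving 100 krad.
    print(deposited_energy_joules(100_000, 1e-6), "J")
```

In energy terms the doses are tiny; what matters is where the resulting charge ends up, not how much the chip heats up.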

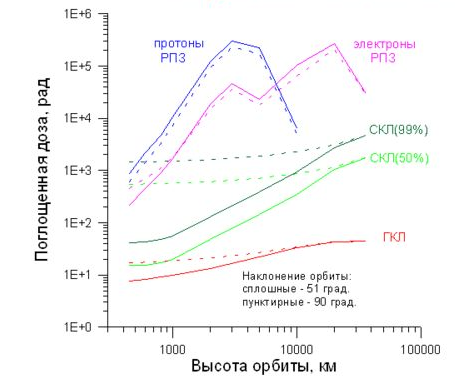

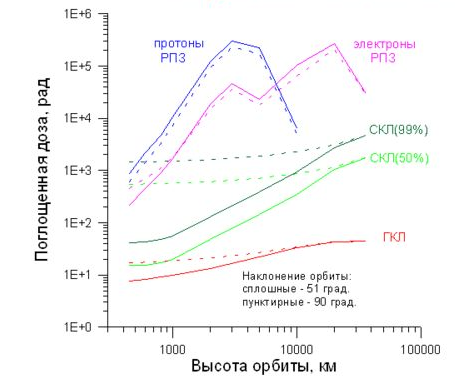

Figure 2. Examples of calculated total absorbed dose accumulated over 10 years in various circular orbits behind 1 g/cm^2 of shielding. Source: N.V. Kuznetsov, "Radiation hazard in near-earth orbits and interplanetary trajectories of spacecraft."

Total-dose effects are associated with the accumulation of this positive charge in dielectrics and show up in CMOS circuits in several main ways:

In bipolar circuits, the main dose effect is a drop in gain caused by an increase in base current due to leakage from the emitter to the base along the boundary between the silicon and the passivating oxide. Another dose effect specific to bipolar transistors is that they may (but do not have to) respond not only to the accumulated dose but also to the rate at which it accumulates: the slower the dose is accumulated, the worse the resistance. This effect is called ELDRS (Enhanced Low Dose Rate Sensitivity), and it makes testing much more complicated and expensive, often not only for bipolar but also for CMOS circuits, because those sometimes contain bipolar transistors too, and because it is easier to make everyone test in the same way than to work out where ELDRS may occur and where it may not.

Dose rate

The other group of dose-rate effects arises from ultrafast dose accumulation, when so many electron-hole pairs are generated in the chip that they do not have time to recombine, and a huge electric charge is injected into the chip. This charge drains away through the ground and power lines over a considerable time, during which the circuit does not work. This time is called the “loss of operability time” and is the main measure of how well a chip or device resists this kind of effect. In addition, the large injected charge seriously shifts the potentials of regions connected to ground and to the supply, which can trigger latch-up (the thyristor effect).

It was for resistance to high-dose-rate effects that the “silicon on sapphire” and “silicon on insulator” technologies were originally developed, because the only way to reduce the charge injected into the circuit is to separate the active region of the chip from the bulk of the substrate, so that charge generated in the substrate cannot take part in the process. Why do these effects matter? A high dose rate over a short time is a typical consequence of a nuclear explosion.

Single-event effects

Single-event effects are associated not with prolonged exposure to radiation but with the measurable effect of a single ionizing particle striking the chip. They can be divided into two large groups:

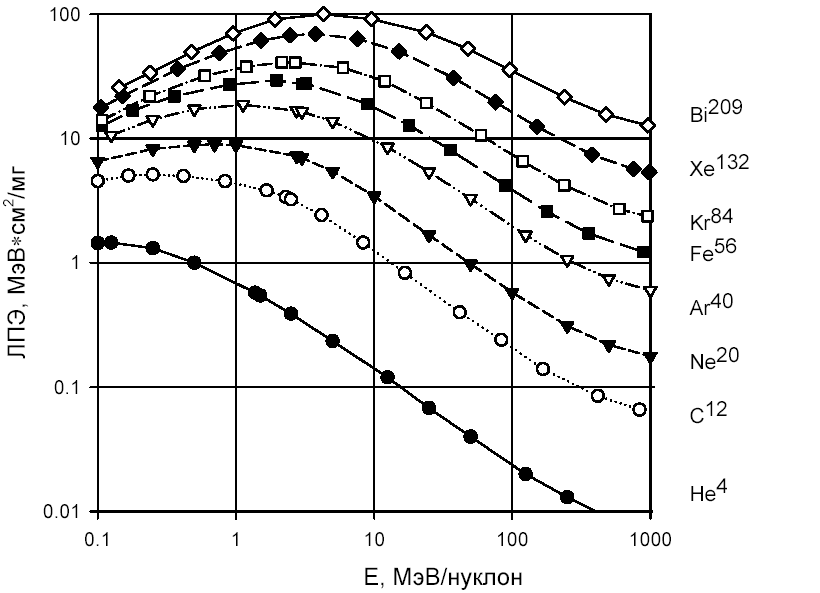

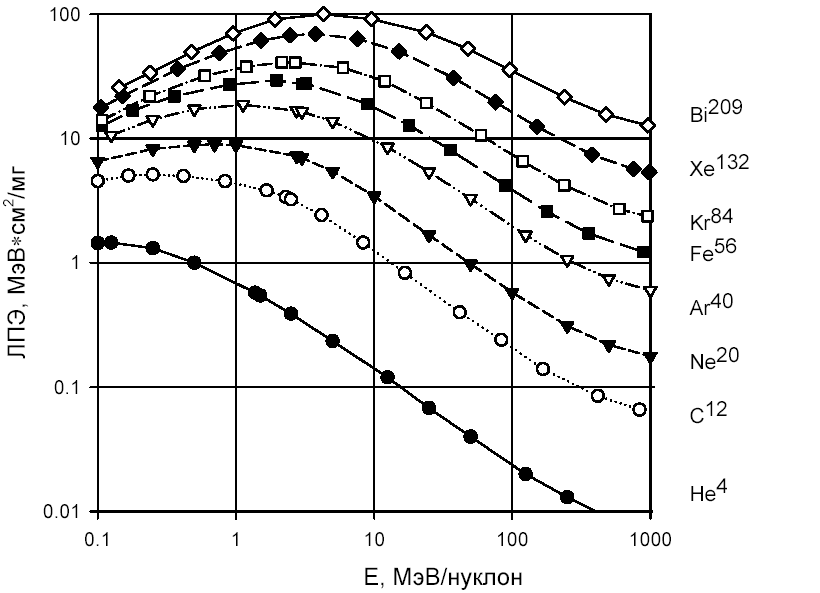

The specific energy deposition of an ionizing particle is called “linear energy transfer” (LET). It is measured as the energy in MeV transferred per unit length of the particle's track in the material, normalized to the material's density, that is, in (MeV·cm³)/(mg·cm), or MeV·cm²/mg. LET depends on the particle energy in a nonlinear and nonmonotonic way and is tied to the range, which for the particles and materials relevant to microelectronics can be anywhere from hundreds of nanometers to hundreds of millimeters.
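
The somewhat awkward LET unit becomes easier to read once converted into charge deposited per micron of track. The Python sketch below does this for silicon, using the 3.6 eV pair-creation energy from the text; the silicon density of 2.33 g/cm³ and the function name are assumptions added for the illustration.

```python
SI_DENSITY_MG_PER_CM3 = 2330.0   # assumed density of silicon, 2.33 g/cm^3
PAIR_ENERGY_SI_EV = 3.6          # energy per electron-hole pair in silicon (from the text)
ELEMENTARY_CHARGE_C = 1.602176634e-19
UM_PER_CM = 1e4


def let_to_fc_per_um(let_mev_cm2_per_mg):
    """Charge deposited per micron of track in silicon, in fC, for a given LET."""
    # LET times density gives energy loss per unit length (eV per cm, then per micron).
    energy_ev_per_um = let_mev_cm2_per_mg * 1e6 * SI_DENSITY_MG_PER_CM3 / UM_PER_CM
    pairs_per_um = energy_ev_per_um / PAIR_ENERGY_SI_EV
    return pairs_per_um * ELEMENTARY_CHARGE_C * 1e15


if __name__ == "__main__":
    for let in (1, 15, 30, 60):
        print(let, "MeV*cm^2/mg ->", round(let_to_fc_per_um(let), 1), "fC/um")
```

Under these assumptions 1 MeV·cm²/mg corresponds to roughly 10 fC of charge per micron of track in silicon, which is a convenient rule of thumb when comparing LET with the charge stored in a circuit node.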

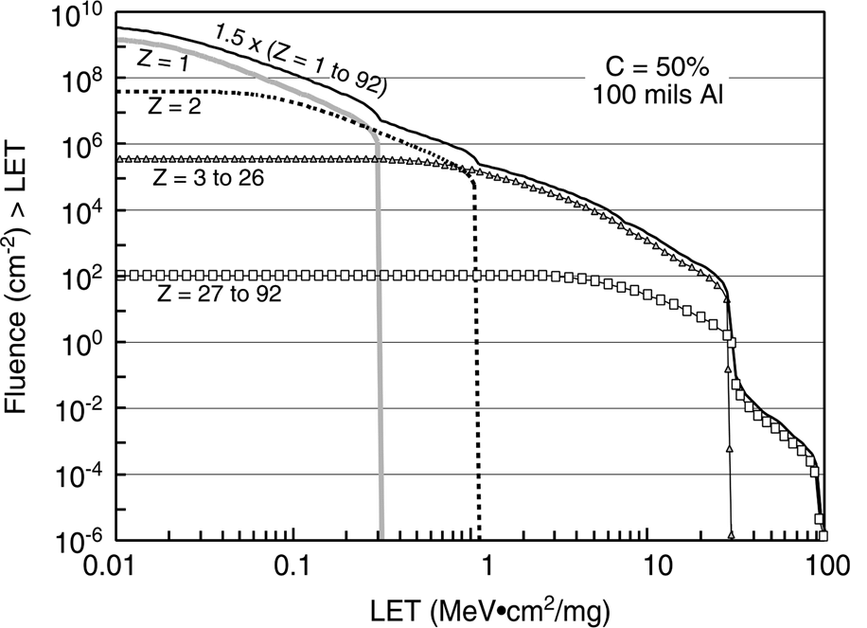

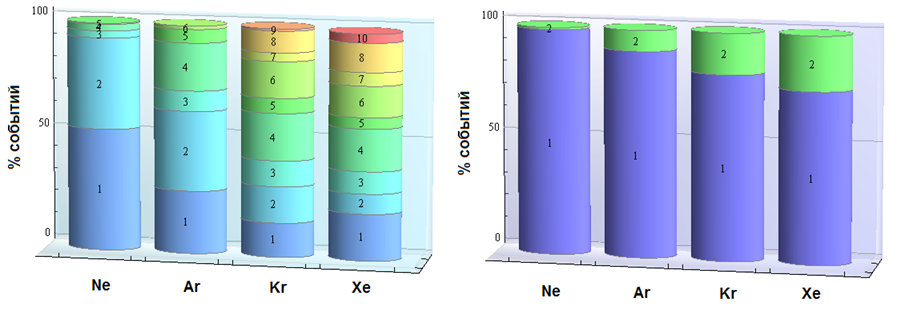

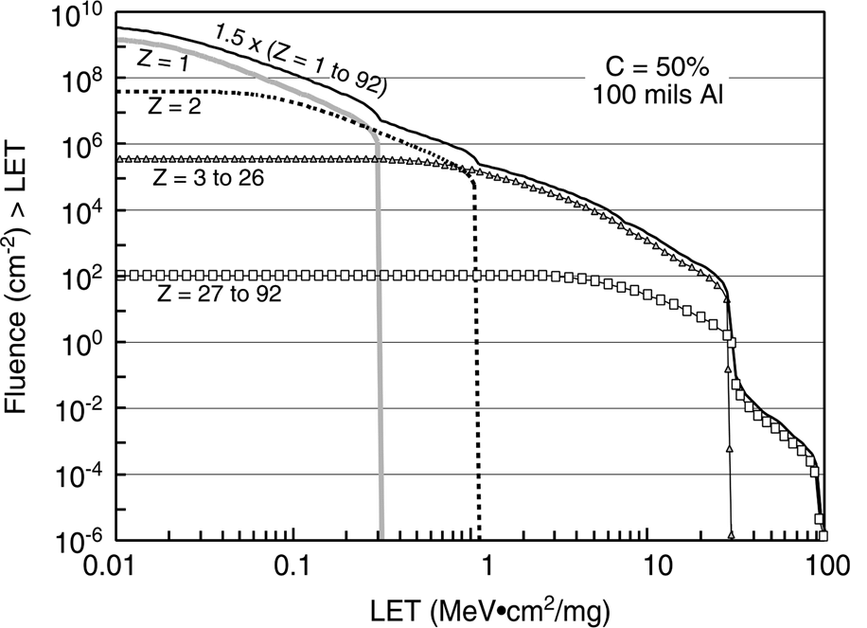

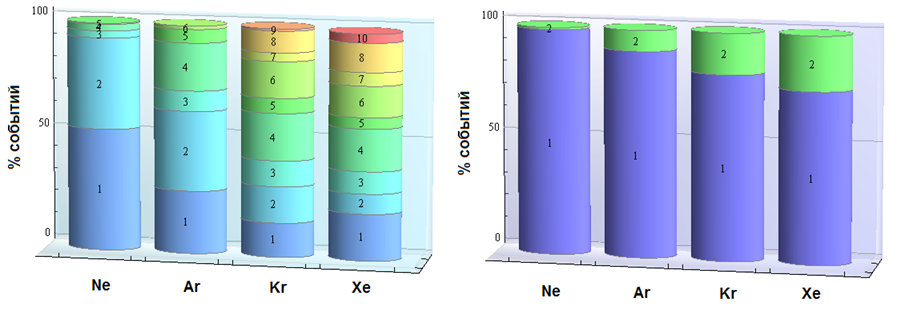

The number of particles found in space falls as LET increases (see Figure 4). The important values are 30 MeV·cm²/mg (corresponding to iron ions) and 60 or 80 (beyond which the probability of an event is considered negligible). Another important figure is 15 MeV·cm²/mg, the maximum LET that the products of a nuclear reaction can have when a proton or neutron hits silicon. Protons are one of the main components of solar radiation, and although their own LET is small (tenths of a unit), they have a significant effect through nuclear reactions and secondary ionization. Secondary ionization can occur directly in the active region, or it can result from a proton hitting an atom of a material with a high atomic number, such as tungsten or tantalum. Heavy elements are widely used in modern microelectronic processes, for example for the contacts between silicon and the first metal layer. Secondary ionization is also the reason why you should not pack chips into lead boxes to improve their radiation resistance.

Figure 3. The dependence of LET on energy for different types of particles.

Helium nuclei (alpha particles) deserve separate attention - not only because there are quite a lot of them in solar radiation, but also because plenty of alpha sources can be found in everyday life.

Figure 4. Comparison of the number of particles of different types for a two-year mission in orbit, from Xapsos et al., "Model for Cumulative Solar Heavy Ion Energy and Linear Energy Transfer Spectra", IEEE TNS, Vol. 54, No. 6, 2007.

1, 30, or 60 MeV·cm²/mg - is that a lot or a little? The upset threshold of a standard memory cell at 7 nm is well below one, and at 180 nm it ranges from one to ten. Special circuit techniques can raise the threshold to, say, a hundred, but it is usually wiser to aim for 15 or 30 and filter out the remaining rare events with error-correcting codes. 60 is the figure that usually appears in requirements for resistance to destructive effects.
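
As an illustration of how coding filters out rare residual upsets, here is a minimal Python sketch of a Hamming(7,4) code, which corrects any single flipped bit in a 7-bit word. It is a textbook example chosen for brevity, not the scheme of any particular chip; real memories typically use wider codes, and all names here are illustrative.

```python
def hamming74_encode(data):
    """Encode 4 data bits [d1, d2, d3, d4] into a 7-bit codeword."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers codeword positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers codeword positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers codeword positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct up to one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    syndrome = s1 + 2 * s2 + 4 * s4   # 1-based position of the flipped bit, 0 if none
    if syndrome:
        c[syndrome - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


if __name__ == "__main__":
    word = [1, 0, 1, 1]
    stored = hamming74_encode(word)
    stored[5] ^= 1                    # a single-event upset flips one stored bit
    print(hamming74_decode(stored) == word)
```

A code like this only helps while upsets within one word stay single, which is one reason multiple-bit upsets at small design rules are a separate concern.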

Displacement effects

Displacement effects are local damage to the crystal lattice, that is, the "knocking out" of an atom from its proper place. The energy needed to damage the lattice is usually quite high, so most incoming particles do not cause this effect. It can, however, be caused by a nuclear reaction following a hit by a proton or neutron, of which there are plenty in orbit. Such local lattice defects reduce carrier mobility, increase noise, and have some other effects. They affect ordinary CMOS chips less than the “normal” dose effects, but they dominate in solar cells, photodetectors, power transistors, and compound semiconductors that have no oxide, such as gallium arsenide and gallium nitride. This explains the high dose hardness of the latter: they simply lack the effects that cause rapid degradation of silicon chips, and what they do have appears later and is weaker. The amount of radiation causing displacement effects is measured in particles (usually protons or neutrons) per square centimeter of chip area.

So, we have sorted out the radiation factors; now let's see where and in what combinations they threaten a chip.

Figure 2 shows an example of calculating the total dose accumulated in different orbits. A lot of assumptions need to be spelled out here - solar activity, the shape, material and thickness of the shielding, and so on - but even though the figure is a typical spherical cow in a vacuum, the trend is clear: the rate of dose accumulation can differ by five orders of magnitude between orbits. In low orbits, below the first Van Allen belt, the dose accumulates so slowly that many ordinary commercial chips can withstand several years there. Never mind chips: even far more fragile humans fly there for years without serious consequences for their health. Meanwhile, low orbits account for practically all crewed spaceflight, Earth remote sensing, satellite communications, the satellite Internet promised any day now, and, last but not least, almost all CubeSats.

Commercial chips in low orbits

It is precisely the popularity and importance of low orbits that give rise to speculation that expensive radiation-hardened chips are unnecessary and ordinary ones will do just fine. But using commercial chips in space has its pitfalls, which show up even in low orbits.

First, the Van Allen belts protect the Earth and its near surroundings only from light particles, mainly solar electrons and protons. Heavier particles, although much rarer, calmly reach even our last shield, the atmosphere, and naturally cause single-event effects, including latch-up (the thyristor effect), which can irreversibly destroy a chip at any moment, and the whole spacecraft along with it. Commercial chips can therefore be used only if measures are taken to protect them from single-event effects.
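
One protective measure mentioned earlier in the article is to monitor the supply current of a vulnerable chip and reset it when the current jumps. The Python sketch below shows only the decision logic of such a supervisor; the class name, thresholds and the `power_cycle` callback are hypothetical, and in real hardware this job is typically done by a dedicated current-limiting circuit, since software polling may be too slow to save the chip.

```python
class LatchupSupervisor:
    """Power-cycle a chip when its supply current stays above a limit."""

    def __init__(self, limit_ma, trip_samples, power_cycle):
        self.limit_ma = limit_ma          # current above this is treated as suspicious
        self.trip_samples = trip_samples  # consecutive over-limit samples needed to trip
        self.power_cycle = power_cycle    # callback that removes and restores power
        self.trips = 0
        self._over = 0

    def feed(self, current_ma):
        """Process one current sample; return True if a power cycle was triggered."""
        if current_ma > self.limit_ma:
            self._over += 1
        else:
            self._over = 0                # a normal sample clears the counter
        if self._over >= self.trip_samples:
            self.power_cycle()
            self.trips += 1
            self._over = 0
            return True
        return False


if __name__ == "__main__":
    supervisor = LatchupSupervisor(100.0, 3, lambda: print("power cycle"))
    for sample in [50, 120, 60, 300, 310, 305, 55]:
        supervisor.feed(sample)
```

Requiring several consecutive over-limit samples is a design choice that avoids resetting the chip on a short legitimate current spike; the right limit and count depend entirely on the specific chip.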

The second problem is that the chips on a satellite are not only processors and memory but also many other types, including power and analog parts, whose radiation resistance is far more complicated and far less predictable. Besides, modern systems on a chip contain many non-digital blocks. For example, in most flash memory chips the high-voltage generator used for writing stops working; in analog CMOS circuits the reference voltage produced by a pair of bipolar transistors shifts, and even small leakage currents can seriously move the operating point of low-power analog stages. The resistance of power switches to destructive single-event effects can depend strongly on the applied voltage, and so on and so forth.

The third important problem with using commercial chips in space is that resistance to total dose and to latch-up is sensitive to changes in process parameters, even small ones, so a change at the fab can send your test results straight to the trash. And for commercial chips the manufacturer guarantees stable functional parameters, not a stable process. Moreover, different batches may contain dies from different fabs: for example, the Apple A9 processor of the iPhone 6s was made on both TSMC's 16 nm and Samsung's 14 nm process, and users were not told which version was in their phone. To deal with this problem, radiation-hardened chips around the world are developed using the Trusted Foundry institution or certified processes - in short, some form of guarantee from the fab that the process stays unchanged.

All in all, the correct answer to the question "can conventional commercial chips be used in space?" is "yes, but with care: not on every orbit and not without testing". A useful tip: if you do decide to use a commercial chip and invest in testing it, buy a ten-year stock right away. Incidentally, this is precisely the business model of the large and respected company 3D Plus: they test commercial chips one after another, find those that happen to have sufficient characteristics (in fact, by chance), buy large lots, and then repackage the chips in their own packages under their own brand.

Other orbits

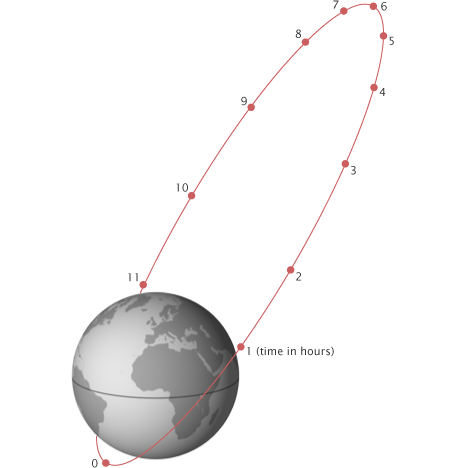

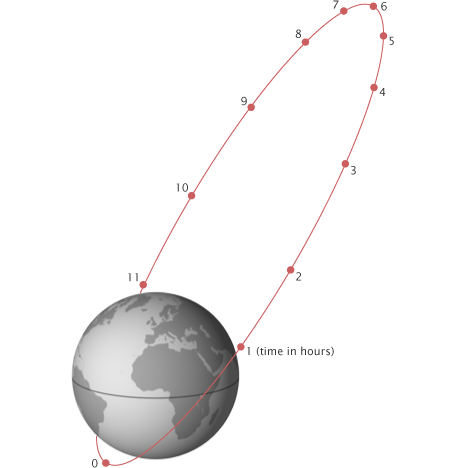

However, satellites fly not only in low Earth orbit. As an example of different requirements, consider the only way to provide stable communications around the North Pole, a strategically important region for Russia: the Molniya orbit, named after the first spacecraft launched into it.

Figure 5. The Molniya orbit.

The main feature of this orbit is its high eccentricity (the minimum altitude is about 500 km above the Earth's surface, the maximum up to 40,000 km, and the period is 12 hours), so the spacecraft crosses the radiation belts four times a day. The lifetime of the very first Molniya satellites was only about half a year, primarily because radiation degraded the output of the solar panels, which had to power a powerful radio transmitter (the orbit is high).
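
The quoted 12-hour period follows directly from the perigee and apogee altitudes via Kepler's third law, as the short Python sketch below shows. The mean Earth radius and gravitational parameter are standard reference values supplied as assumptions; the function name is illustrative.

```python
import math

EARTH_MU_KM3_S2 = 398600.4418   # Earth's gravitational parameter (assumed reference value)
EARTH_RADIUS_KM = 6371.0        # mean Earth radius (assumed reference value)


def orbital_period_hours(perigee_alt_km, apogee_alt_km):
    """Period of an elliptical orbit from its perigee and apogee altitudes."""
    semi_major_axis_km = EARTH_RADIUS_KM + (perigee_alt_km + apogee_alt_km) / 2.0
    period_s = 2.0 * math.pi * math.sqrt(semi_major_axis_km ** 3 / EARTH_MU_KM3_S2)
    return period_s / 3600.0


if __name__ == "__main__":
    # Altitudes quoted in the text for the Molniya orbit.
    print(round(orbital_period_hours(500, 40000), 2), "hours")
```

With two revolutions a day and two belt crossings per revolution (one on the way up, one on the way down), the spacecraft passes through the radiation belts four times a day.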

In geostationary orbit or in the orbits of navigation satellites, Figure 2 promises a dose of several hundred krad (Si), while the dose tolerance of commercial chips may well be only 5-10 krad (Si), so 10-15 years of active life in orbit for such chips is out of the question. More precisely, it is possible, but it would take much thicker shielding, or shielding made of something denser than aluminum. Here, however, we are diving into the wondrous world of spacecraft design, so let us just say that delivering every kilogram to orbit is expensive, and that hiding the most vulnerable electronics deeper inside the body, shielded by other components, is not a bad idea but will not solve the problem completely.
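
The mismatch between mission dose and chip tolerance can be put into numbers with a back-of-the-envelope estimate. The Python sketch below assumes a constant dose rate and takes 300 krad (Si) over 10 years as a stand-in for "several hundred krad"; both the figure and the function name are illustrative assumptions, not values read from Figure 2.

```python
def years_until_dose_limit(tolerance_krad, mission_dose_krad, mission_years=10.0):
    """Years until a chip reaches its dose tolerance, assuming a constant dose rate."""
    dose_rate_krad_per_year = mission_dose_krad / mission_years
    return tolerance_krad / dose_rate_krad_per_year


if __name__ == "__main__":
    # Hypothetical orbit: 300 krad (Si) accumulated over 10 years behind the same shielding.
    for tolerance in (5, 10, 100):
        years = years_until_dose_limit(tolerance, 300)
        print(tolerance, "krad chip:", round(years, 2), "years")
```

Under these assumptions a 5-10 krad part would reach its limit within a few months, which is why such orbits call for either hardened parts or much heavier shielding.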

Military ICs

When dispelling myths about radiation hardness, it has to be said that "radiation-hardened", "space" and "military" chips cannot be equated. Not all military chips are radiation-hardened, and not all radiation-hardened chips are military. If we turn to the US military standard MIL-STD-883 (the American one, because the radiation part of its Russian counterpart is classified), we find in it many different tests for environmental influences: thermal cycling, humidity, salt atmosphere, and so on.

The following methods relate to radiation:

1017.2 Neutron irradiation

1019.8 Ionizing radiation (total dose) test procedure

1020.1 Dose rate induced latchup test procedure

1021.3 Dose rate upset testing of digital microcircuits

1023.3 Dose rate response of linear microcircuits

Total dose and dose rate. Single-event effects? No, none. The specification for a chip may include requirements for resistance to single-event upsets and/or latch-up, but these requirements are not standardized and are defined anew each time, based on the needs of the particular customers of each chip. So “military” status is no guarantee that a chip can be launched into space? Exactly. An example is the fate of the ill-famed Phobos-Grunt, which was lost, according to the official version (very hard to prove, but very convenient), because a heavy charged particle hit an American “military”-grade memory chip that was not resistant to single-event upsets.

Peaceful atom and others

The importance of radiation resistance is not limited to space and military applications. The natural radiation background at sea level is many times lower than even what is found in low orbit, but the Earth's atmosphere not only serves as the last shield in the path of cosmic radiation, it also creates secondary particles when interacting with it. The secondary particles are mostly neutrons. Born in the upper atmosphere, they usually do not reach the surface, but at the cruising altitudes of civil airliners the radiation dose is already significant, and very impressive statistics on single-event upsets have been collected there. In medicine, X-rays have long been used, and radiotherapy is one of the most important ways of fighting malignant tumors; such equipment needs electronics too.

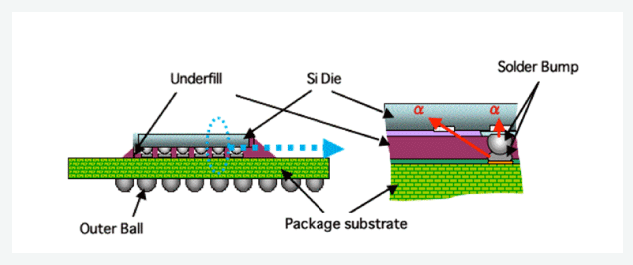

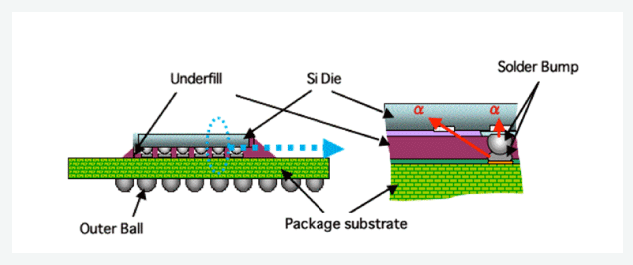

And, of course, we should not forget that the whole fuss with lead-free solder, so dearly "loved" by all electronics engineers, was started largely because lead and some other materials used in chip manufacturing contain impurities of heavier elements, uranium in particular, and using them produces a small but still well-measurable flux of alpha particles right next to the vulnerable silicon. In the case of BGA packages or 3D assemblies, over the entire surface of the vulnerable silicon.

Figure 6. Illustration of a solder ball as a source of alpha particles.

The good news is that alpha particles have a rather short range in silicon (from a few to a few tens of microns, depending on energy), and multi-layer metallization helps reduce their influence. The bad news is that at small design rules every alpha particle that does reach the silicon causes upsets, and not only single ones but also multiple ones (more on this below). For example, last year TSMC presented a paper at the 2018 IEEE International Reliability Physics Symposium on measuring the upset rate caused by alpha-particle contamination in 7 nm memory, so this problem still exists and still requires action in a world where everyone has switched to lead-free solder.

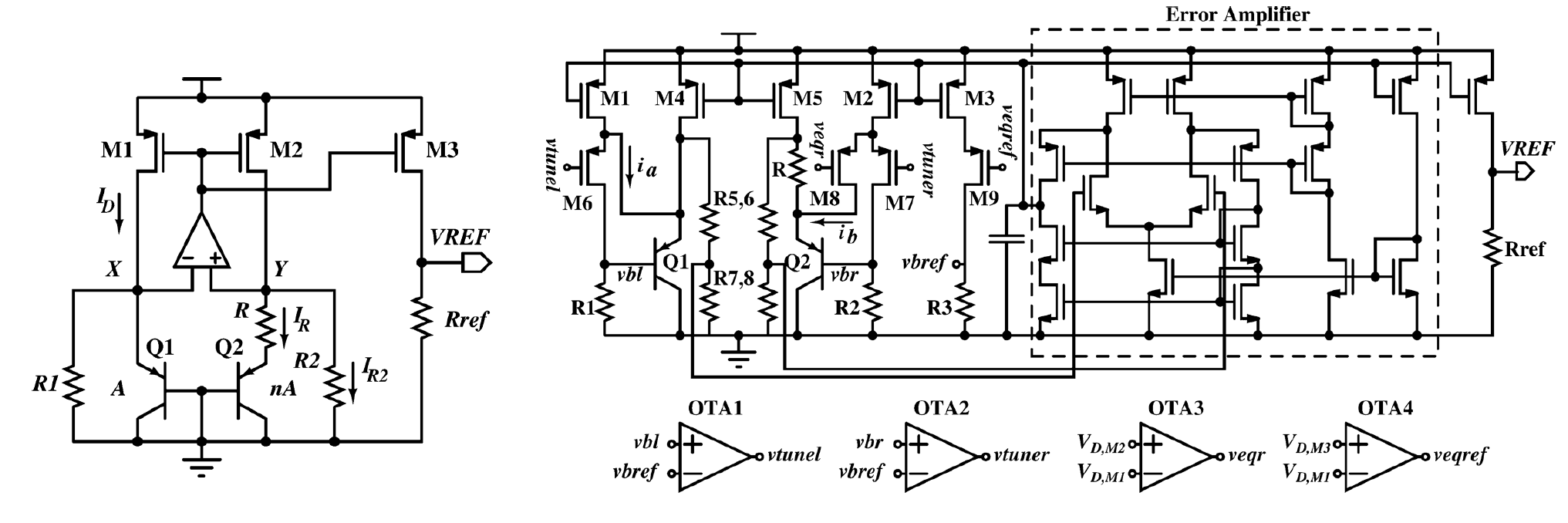

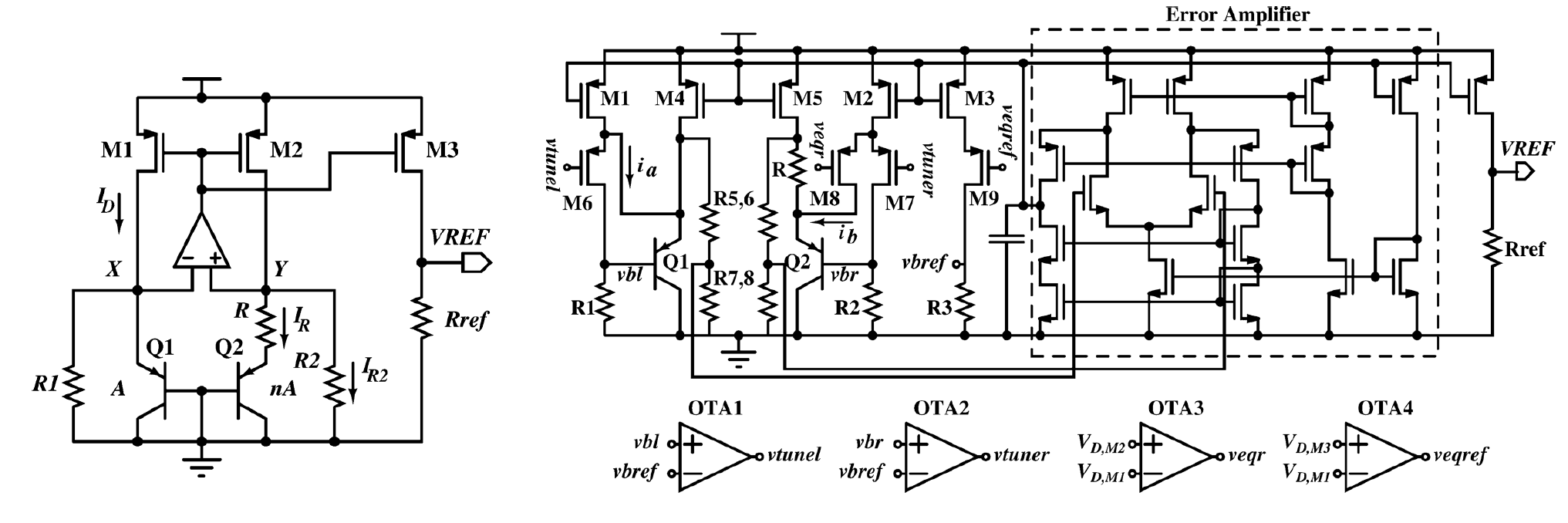

Another application of radiation-hardened chips that deserves a few words is high-energy physics and nuclear power. In the hearts of hadron colliders and nuclear reactors (as well as in equipment designed for cleaning up after radiation accidents) electronics is needed too, and it is highly desirable that it should not need repair or replacement for a considerable time. The total-dose requirements for such applications are tens or even hundreds of Mrad (Si), that is, three orders of magnitude more than in ordinary space applications. The situation is further complicated by the fact that this hardness is required not of digital circuits but of power and analog ones: motor drive control circuits and front-end processing of readings from multi-channel sensors. And while ensuring the dose hardness of digital circuits is more or less understood even at high doses, for analog circuits the design of the circuit itself is what matters most, and the resulting circuit is even more of a know-how than is usual in analog design.

Figure 7. Source: Y. Cao et al., "A 4.5 MGy TID-Tolerant CMOS Bandgap Reference Circuit Using a Dynamic Base Leakage Compensation Technique", IEEE TNS, Vol. 60, No. 4, 2013.

I will illustrate the scale of the problems facing chip designers for the nuclear industry with my favorite example. A bandgap voltage reference, whose output is equal to the bandgap voltage of silicon, is a relatively simple and well-known circuit. Under irradiation, the parameters of the bipolar transistors used as diodes change (the gain drops because of emitter-base leakage). As a result, the output of a conventional reference, which determines the accuracy of every measurement, can shift by, say, 15-20%, which corresponds to an effective ADC resolution of two to three bits. In the circuit on the right, the reference voltage stays within 1% (roughly 7 bits) at a total ionizing dose of 4.5 MGy. To achieve this impressive result, the circuit had to be seriously reworked, adding a whole handful of feedback loops that compensate for dose-induced leakage. The hardened version has about four times as many elements as the ordinary one and consumes twice the power. And the worst news is that for every new circuit, the strategy for ensuring radiation hardness and its implementation have to be developed from scratch. And there is still the problem of protecting analog circuits from single-event effects, whose solution is also rather poorly formalized.
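
The link between a reference-voltage error and "effective bits" can be checked with one line of arithmetic: a relative error e limits the useful resolution to about log2(1/e) bits. The Python sketch below applies this rule of thumb to the percentages quoted above; the function name is illustrative, and the rule ignores every other error source in a real ADC.

```python
import math


def effective_bits(relative_error):
    """Approximate resolution, in bits, that survives a given relative reference error."""
    return math.log2(1.0 / relative_error)


if __name__ == "__main__":
    for error in (0.20, 0.15, 0.01):
        print(f"{error:.0%} reference shift -> about {effective_bits(error):.1f} bits")
```

By this estimate a 15-20% shift leaves between two and three bits, while a 1% shift leaves between six and seven.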

For a long time, the website of one microelectronics fab carried the statement that radiation resistance cannot be achieved at design rules below 600 nm, because otherwise “charged particles punch right through the silicon”. By a surprising coincidence, the finest design rules available at that fab were exactly 600 nm. And a high-ranking employee of another fab said in an interview that it is technologically impossible to make chips for space at design rules below 90 nm. Once again, it just so happened that this particular fab is technologically unable to make anything below 90 nm. The marketing reasons behind such statements and the short-term benefit from them are quite understandable, but in the long run such phrases thrown at a wide audience seem to do more harm than good.

There is also the regularly heard reasoning, no longer tied to anyone's marketing, that chips made with design rules coarser than a certain limit are immune to destructive single-event failures (latch-up in particular), which supposedly means that the long-term use of outdated technologies is not only justified but necessary.

Or the opposite: claims that circuits with design rules below 250 nm are immune to latch-up, because their operating voltages are so low that the thyristor simply cannot turn on. Or there are opinions that the problem is not the design rules at all, but that CMOS technology is fundamentally vulnerable to radiation (as confirmed by tests the speaker ran back in the seventies), while the good old radiation-hardened chips were bipolar / SOS / GaAs. And since CMOS is fundamentally bad, yet all modern chips are made with it, modern chips are not radiation-resistant by definition, and the only right path for astronautics is to invest in finishing off the long-abandoned gallium arsenide (and beat Intel in the commercial market along the way) or to go back to time-tested discrete logic. Or, better still, to vacuum tubes.

Does radiation-hardened mean old and backward?

In fairness, at design rules of around a couple of microns and coarser there is indeed usually no latch-up and no single-event upsets, simply because switching elements built with such design rules takes a very large amount of energy. That applies to normal operation too, so I would like to invite those who propose to keep using old chips to try assembling an Intel Core-class processor out of 74-series logic, and to think about which rocket could lift the resulting monster off the ground.

On the other hand, microelectronics does not live by processors alone. There are a huge number of tasks for which very small design rules are unnecessary or not all that essential, and 500-90 nm is enough. The global commercial market for chips on 200 mm wafers (that is, design rules of 90 nm and coarser) has been growing for several years, to the point of a shortage of production equipment. Both old designs and completely new chips are manufactured on “outdated” processes, and many fabs are ready to guarantee a long future for their processes (though not necessarily their complete immutability). So the fact that a given fab lags behind a notional TSMC does not at all rule out commercial success, in either the civilian or the special-purpose market.

The high cost of developing, manufacturing and certifying radiation-hardened chips is an even bigger headache for manufacturers than in automotive or industrial electronics. Small production runs (often hundreds of units, if not fewer) make such developments hard to commercialize: if a million dollars (the cost of developing a relatively simple chip at 180 nm) has to be spread over a thousand chips, that is already a thousand dollars per chip, and certification is still needed, which can also easily run into several million (especially if CMOS circuits are tested for ELDRS). And what if a billion dollars has to be recouped over a small run? That is what development at 5-7 nm costs. The high cost of development and certification means that all over the world the development of a significant share of radiation-hardened chips is directly or indirectly subsidized by governments. This greatly reduces the number of new projects and pushes vendors to keep selling what they already have for longer and more inventively, and to reuse proven IP blocks as much as possible. As a result, popular radiation-hardened chips are produced and used for many years, creating the illusion that all radiation-hardened chips are old. In most cases customers are happy with this: where reliability takes priority over performance, reusing an already proven solution is often the best option available, and “flight heritage” is a huge competitive advantage. This also extends the active production life of successful designs, even when they are already obsolete and a replacement exists. Besides, even installing a pin-to-pin compatible equivalent in most cases requires, at a minimum, reissuing the design documentation and, at most, repeating expensive tests. And that is when no redesign is needed; what about the case when something really has to be redone to use a new chip? Naturally, in such a situation hardware developers try to reuse proven solutions wholesale.

It does not help public perception that the path of new developments into space is long and thorny, and their path into the news is even longer, yet it is from popular-science news that ordinary people usually learn about the achievements of the space industry. In 2015 there were several reports along the lines of "the New Horizons probe that reached Pluto runs on the same processor as the Sony PlayStation", a console that was already more than twenty years old when the news came out. An excellent and very competent presentation of the material, nothing to add. New Horizons was launched in 2006, and development of the project began in 2000, the year of the first flight of the Mongoose-V processor; that is, it was the newest processor available that already had orbital experience. Development of this processor was completed in 1998 and had begun in 1994, right alongside the release of the PlayStation. Here is another example: PowerPC 750 processors came out for civilian applications in 1997, and in 1998 the iMac debuted with one. In 2001 the development of its radiation-hardened counterpart, the RAD750, was completed. This processor first flew in space in 2005 and made the news only in 2012, after the Curiosity rover's soft landing on Mars. That, of course, did not go without tabloid headlines about a fifteen-year-old processor either, but development of the Curiosity project began in 2003, that is, even before the first flight of the RAD750.

Front line

Despite all of the above, the design rules at which space computing platforms are being developed right now are 65-45-28 nm. At 45 nm, the American RAD5500 chipset has already entered mass production; at 28 nm, the European DAHLIA processor is due next year; and at 65 nm, an ASIC development platform intended for long-term use is being actively developed at IMEC in Belgium. Russian developers are not lagging behind: the NIISI RAS roadmap lists a radiation-hardened 65 nm processor for next year, and publications on the topic talk about creating a development platform, so these design rules have a great future not only in Europe but also in Russia.

And the development of radiation-hardened electronics does not stop even at these design rules: the latest issues of IEEE Transactions on Nuclear Science contain plenty of research on transistors with 20-16-14 nm design rules, which prepares the ground for new generations of space microprocessors. Plenty of new and interesting things await developers at these design rules: first, ring transistors cannot be made; second, FinFETs have a completely different channel and isolation geometry; third, there are FDSOI technologies, which have plenty of peculiarities of their own.

Shrinking design rules does, of course, affect the radiation resistance of the chips made with them, but not necessarily for the worse. The general trend is that as design rules shrink, the influence of total dose decreases and that of single-event effects increases. The threshold voltage shift at design rules of 180 nm and below is measured in units or tens of millivolts even at large doses: the gate oxide is so thin that charge tunnels out of it into the channel instead of accumulating. The transition region in technologies with STI isolation is compact enough that in many cases leakage stays low at a total dose of several tens or even hundreds of krad (Si). And if we use ring transistors and guard rings in a deep submicron bulk technology, we get rid of all dose problems at once.

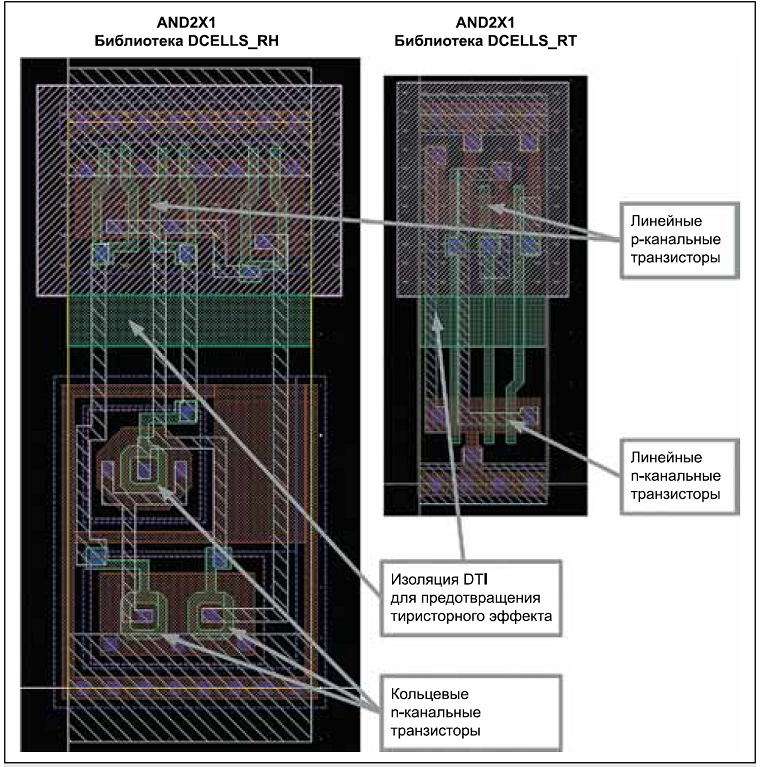

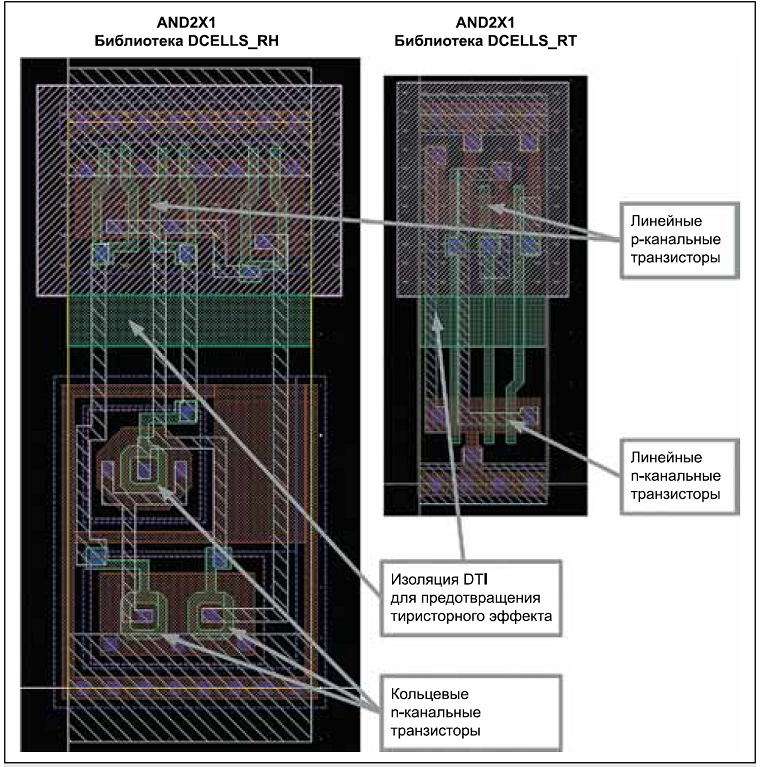

Figure 8. Examples of radiation-hardened AND gates developed by Milandr in SOI BCD technology.

Figure 8 shows two implementations of the same AND logic gate for different conditions. On the left is the full package: ring transistors in individual guard rings. On the right is a simpler version for a low total dose: the transistors are linear, and instead of guard rings there are only good contacts to ground. In both cases the n-channel transistors are dielectrically isolated from the p-channel ones to protect the chip from the thyristor effect (latchup). In bulk technology, guard rings perform this function. Note that for many space applications a total dose tolerance of 50-100 krad (Si) is quite sufficient, and linear transistors do the job well without substantially degrading the functional parameters of the circuit for the sake of hardness.

With single-event upsets the situation is as follows: the region from which charge is collected when a single particle hits is about one micron in diameter, which is larger than a memory cell made at deep submicron design rules. And indeed, so-called multiple upsets, in which one particle flips several bits at once, are observed experimentally. Moreover, as design rules shrink, the energy needed to flip a memory bit decreases, so a larger share of hits cause upsets than in chips made at coarser design rules. That includes hits by alpha particles from radioactive impurities in the chip's structural materials.
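Why multiple upsets are so unpleasant for error correction can be seen in a minimal sketch. It uses a Hamming(7,4) code, a textbook single-error-correcting code chosen here purely for illustration (real memories normally use wider codes); the function names `encode`, `decode` and `flip` are made up for this example.

```python
def encode(data):
    """Hamming(7,4): 4 data bits -> 7-bit codeword laid out as p1 p2 d1 p3 d2 d3 d4."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4
    p2 = d1 ^ d3 ^ d4
    p3 = d2 ^ d3 ^ d4
    return [p1, p2, d1, p3, d2, d3, d4]


def decode(codeword):
    """Correct at most one flipped bit and return the 4 data bits."""
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s3 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s3   # 1-based index of the suspect bit, 0 = no error
    if position:
        c[position - 1] ^= 1
    return [c[2], c[4], c[5], c[6]]


def flip(codeword, indices):
    """Return a copy of the codeword with the given 0-based bit indices inverted."""
    c = list(codeword)
    for i in indices:
        c[i] ^= 1
    return c


data = [1, 0, 1, 1]
word = encode(data)
print(decode(flip(word, [4])) == data)     # True: a single upset is corrected
print(decode(flip(word, [2, 4])) == data)  # False: a double upset is "corrected" into wrong data
```

With two flipped bits the decoder does not merely fail to help: it confidently flips a third bit and returns wrong data without any warning.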

Figure 9. Comparison of the number of upsets from a single particle hit for two different 6T SRAM variants in a technology with 65 nm design rules. Source: A. Balbekov et al., “Questions of the applicability of VLSI for 65 nm CMOS technology under the influence of space factors”.

Figure 9 shows experimental data on single-event upsets in a 65 nm bulk technology. On the left is an ordinary 6T SRAM. Ten upsets from a single hit! A Hamming code will not protect you from that. So, for commercial microcircuits, single-event upsets are somewhat less of a problem at coarse design rules than at fine ones. At the very least, the upsets stay single and really can be corrected with coding. But if a microcircuit is designed specifically for space applications, the developer has a huge arsenal of architectural, circuit-level and layout solutions that can deliver high hardness together with high performance. The right side of the figure is also a 6T SRAM, with exactly the same circuit but a different layout. The price of the improvements that eliminate multiple upsets and the thyristor effect and increase total dose tolerance is a fourfold increase in area. That does not sound very nice, but nobody said it would be easy. Nevertheless, Radiation Hardening by Design works and makes it possible to reach predetermined levels of resistance at small design rules on any bulk technology.

Why predetermined? Because reaching different levels of resistance requires different hardening techniques, and the necessary set of techniques is different for every technology and every specification. Then why not apply everything at once, to be on the safe side? Because radiation resistance always comes at the expense of functional parameters (power consumption, chip area, speed and so on), and those are the first priority. That is why clear technical requirements are needed, both for functionality and for hardness. True, microcircuits are rarely made for a single task, especially radiation-hardened ones, where the production run for each individual application may be a few dozen units. Still, a good understanding of the requirements makes it possible, for example, to avoid ring transistors, which greatly increase area and supply current, and to end up with a more competitive product.

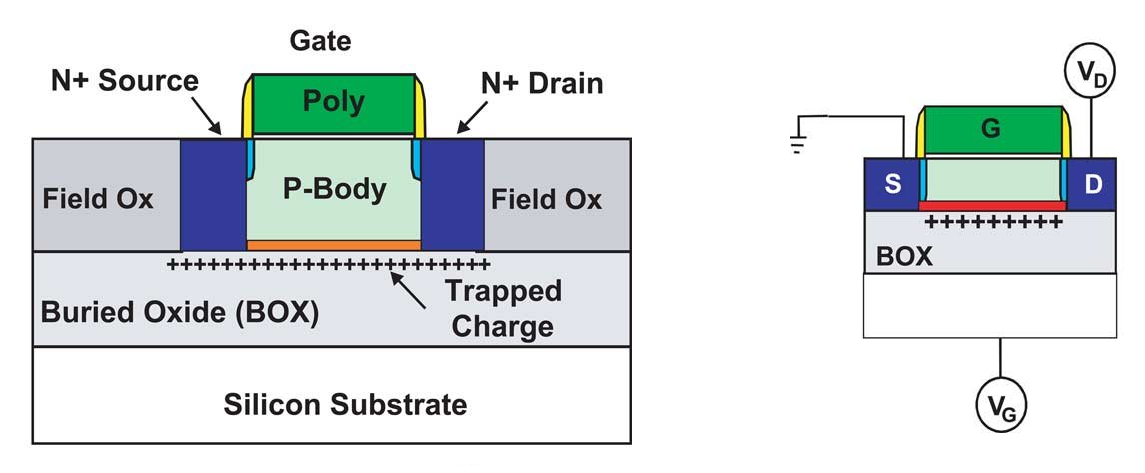

The attentive reader's eye must have caught on the word “bulk” in the phrase about reaching predetermined levels of resistance at small design rules “on any bulk technology”. Isn't it superfluous there? Everyone knows that radiation-hardened microcircuits should be made in “silicon on insulator” or “silicon on sapphire” technology.

The “silicon on insulator” (SOI) technology has long had a firm reputation for being radiation resistant. The roots of this popular misconception go back to ancient times, when the predecessor of SOI, silicon on sapphire (SOS), was actively used in military developments. Why? Transistors in this technology are electrically isolated from each other and, more importantly, from the substrate. This means that the region from which radiation-induced charge is collected during a brief exposure of the chip to a high dose rate is small. That, in turn, significantly shortens the loss-of-operability time, which is exactly what is needed to keep working in a nuclear war. And indeed, there is no way to shorten the loss-of-operability time other than full dielectric isolation.

The second important part of the myth “SOI = radiation resistance” is immunity to the thyristor effect, including under a high dose rate. The thyristor effect, or latchup, is just about the main headache for developers of microcircuits and equipment for space, and it is no surprise that a technology that eliminates it has earned a reputation for being radiation resistant. But in fact the situation is, once again, somewhat more complicated.

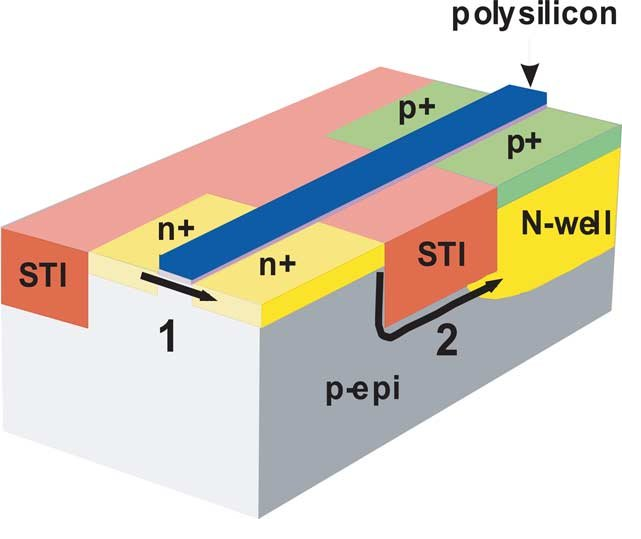

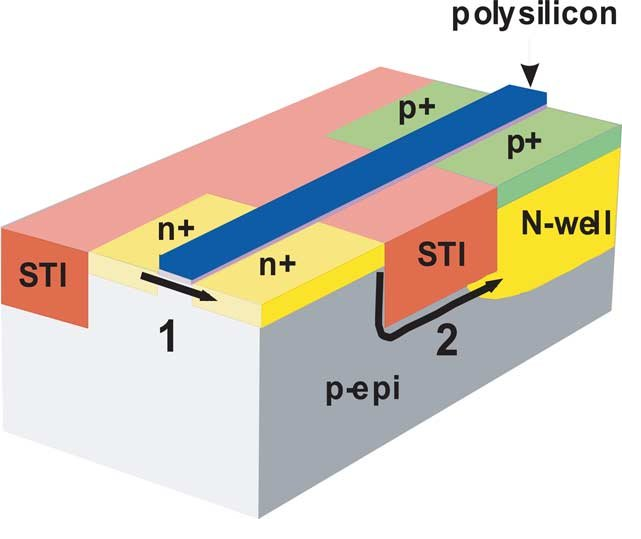

Figure 10. Cross-section of bulk CMOS technology with the parasitic thyristor.

The cause of the thyristor effect is a parasitic thyristor structure present inside the elements of bulk CMOS technology. If the resistances Rs and Rw are large enough, this thyristor structure can turn on when a charged particle hits and short the chip's power supply to ground, which, as you can imagine, is not good. How large are these resistances in real microcircuits? The answer is quite simple: every contact to the substrate or well costs extra area, so designers try to minimize their number. This, in turn, means that by default an “ordinary” chip is more likely than not to be susceptible to the thyristor effect. Admittedly, the thyristor effect can be triggered not only by radiation, but also, for example, by electrostatic discharge or even simply by elevated temperature and high current density in an unfortunate layout. In “ordinary” applications, it is manufacturers of power and automotive electronics who run into the thyristor effect.

In many space systems a reboot in unforeseen circumstances is quite acceptable, so one can try to use a latchup-prone chip by adding a circuit that monitors the supply current and cycles the power when a limit is exceeded. This is in fact done regularly when a high-performance commercial microcircuit is really needed, and chips that protect against the thyristor effect (Latchup Current Limiters) are quite a popular radiation-hardened product. But this solution has many limitations. Power cannot be cycled everywhere or at any time: a reboot in the middle of an important maneuver can end a long mission. The supply current of a modern microcircuit can vary many-fold depending on its operating mode, so the consumption in a “nothing is happening, but latchup has occurred” state may be lower than in normal operation in another mode. What level should the current limit be set to? That is also unclear. How quickly the power must be cut to prevent destruction of the chip depends on many factors, including the specific chip. In some cases there is no need to hurry and the power can be cycled as many times as necessary, while in others the chip burns out irreversibly after the first or second event.
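The threshold problem described above can be illustrated with a toy model. This is a sketch under stated assumptions, not a description of any real limiter chip: the class `LatchupLimiter`, the mode names and all current values are hypothetical, and real limiters act in analog hardware on far shorter timescales than software polling.

```python
from dataclasses import dataclass


@dataclass
class LatchupLimiter:
    """Toy model of a latchup current limiter; all numbers are hypothetical."""
    limits_ma: dict           # operating mode -> trip threshold, mA
    max_power_cycles: int     # how many power cycles the chip is assumed to survive
    power_cycles: int = 0

    def check(self, mode, current_ma):
        """Classify one supply-current sample as 'ok', 'power_cycle' or 'give_up'."""
        if current_ma <= self.limits_ma[mode]:
            return "ok"
        if self.power_cycles >= self.max_power_cycles:
            return "give_up"  # further cycling is assumed to be unsafe
        self.power_cycles += 1
        return "power_cycle"


# A latched chip in idle mode draws 180 mA: far above its normal idle current,
# but below what the same chip legitimately draws while computing.
single_limit = LatchupLimiter({"idle": 400.0, "compute": 400.0}, max_power_cycles=2)
per_mode = LatchupLimiter({"idle": 50.0, "compute": 400.0}, max_power_cycles=2)

print(single_limit.check("idle", 180.0))  # ok: the latchup goes unnoticed
print(per_mode.check("idle", 180.0))      # power_cycle
```

A single limit set above the peak legitimate current misses a latchup that happens in a low-power mode, while per-mode limits require the limiter to know what the chip is doing at every moment, which is exactly the difficulty the text points out.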

In a microcircuit made in SOI technology the thyristor effect is impossible in principle, because all the transistors are electrically isolated. Best of all, nothing at all has to be done to achieve immunity to the thyristor effect. That is, commercial circuits made in SOI technology are also completely immune to it, which greatly simplifies their use in space. For example, a commercial SOI processor will control the new American spacecraft Orion.

Figure 11. Radiation-induced leakage in bulk CMOS technology. Source: J. Schwank et al., “Radiation effects in MOS oxides”, IEEE TNS, Vol. 55, No. 4, 2008.

So what is the problem? Besides dose rate effects and latchup, there are also single-event upsets and total absorbed dose, and there things are far from rosy for SOI technology. Figure 11 shows two leakage paths in bulk CMOS technology. Both paths are easily closed by proper layout: one with ring n-channel transistors, the other with guard rings. These solutions have drawbacks for the functioning of the circuit (restrictions on the minimum size of a ring transistor, area lost to guard rings), but as far as radiation resistance is concerned they are very effective.

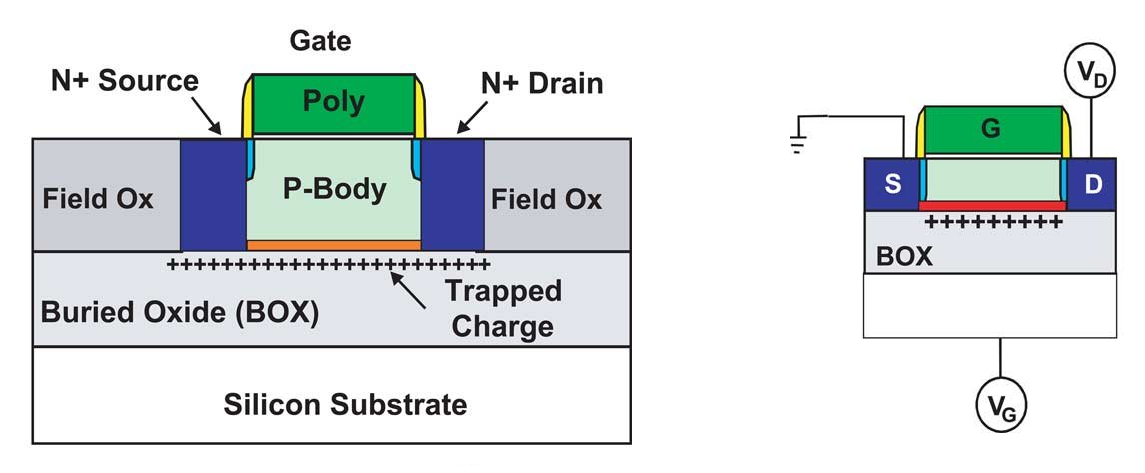

Figure 12. Additional leakage mechanism in SOI technology.

In SOI technology there is one more leakage path, from source to drain along the interface between the silicon and the buried oxide. The buried oxide is much thicker than the gate oxide, which means it can accumulate a lot of positive charge. If we consider the “bottom” transistor (the right part of Figure 12), for which the buried oxide serves as the gate dielectric, we see that under normal conditions its gate-source voltage is zero and its threshold voltage is several tens of volts, so no current flows through it. Under irradiation, positive charge accumulates in the buried oxide (the process is influenced by the geometry of the main transistor, in particular by the thickness of the silicon device layer), and the threshold voltage of the “bottom” n-channel transistor drops. As soon as it falls below zero, current starts to flow freely through the transistor along the uncontrolled bottom channel. Thus, as far as total absorbed dose is concerned, SOI technology is fundamentally and strictly worse than bulk technology. But maybe there is some way to remedy the situation?

Usually the chip substrate is grounded, but in SOI nothing prevents us from applying a negative voltage to it instead of ground and thereby turning the bottom transistor off. On the one hand, the idea is sound and is actively used. Moreover, in fully depleted SOI (FDSOI) at 45-28 nm design rules, control of the potential under the buried oxide is also used in normal operation. On the other hand, the electric field in the buried oxide not only turns the parasitic transistor off but also accelerates the accumulation of positive charge in the buried oxide. As a result, depending on the process parameters and the magnitude of the applied negative voltage, the dose tolerance of a chip with a negative substrate bias may not only fail to improve but may actually get worse! As usual, there are subtleties, but in principle the situation is that layout and circuit design techniques on bulk technology make it possible to reach almost any reasonable level of total dose tolerance, and by “reasonable” I mean here, say, 100 Mrad (Si). On SOI there are fundamental limitations, and the tolerance of a specific process can be quite low. These limitations cannot be bypassed without modifying the process itself (which is usually not an option for commercial reasons), and the dose tolerance parameters cannot be determined without expensive tests.

The situation with single-event upsets in SOI is no less interesting. On the one hand, the volume from which the charge released by an ionizing particle is collected is much smaller in SOI (although leading scientists have been arguing for many years about the exact shape of this volume). On the other hand, the body region of an SOI MOS transistor is so small that even a small charge entering it can raise its potential significantly. If the potential rises high enough to forward-bias the source-body pn junction, the parasitic source-body-drain bipolar transistor turns on, and the charge introduced by the ionizing particle is multiplied by the gain of this transistor. In practice this means that the threshold LET drops below 1 MeV * cm ^ 2 / mg, that is, almost anything will cause an upset in the chip. Of course, the parasitic bipolar effect in SOI can be dealt with by tying the body to the source of the transistor or to ground / power. But, first, nobody does this in commercial chips (because there is no need), and second, a body contact costs area, which hurts most for pass transistors, where the body contact has to be separate. Every cache memory cell has at least two pass transistors, which leads to a significant increase in the area of the cache and of the die as a whole. Meanwhile, in bulk technology one contact per 4-8 memory cells can be enough to suppress both the thyristor effect and the parasitic bipolar effect, and even guard rings can be made at a smaller area cost than body contacts in SOI. An important advantage of SOI in deep submicron technologies is that dielectric isolation prevents charge from spreading and causing the high-multiplicity multiple upsets that can appear in bulk technology. However, the size of an ionizing particle's track is comparable to the size of a memory cell, and double upsets are readily observed in SOI.

Overall, the situation with the radiation resistance of “silicon on insulator” technology is that it is not “radiation resistant by itself”, but has a number of advantages and a number of disadvantages relative to bulk technology. Some shortcomings of SOI can be worked around with special design techniques, but the same is true of bulk technology. Therefore, when choosing a commercial chip for use under radiation, you cannot rely on SOI as a panacea, and when choosing a technology for developing a specialized radiation-hardened VLSI, you should carefully weigh the pros and cons of SOI and bulk technology for the specific task and for the required levels of resistance to the various radiation effects.

Hundreds of thousands of specialists all over the world work on the radiation resistance of integrated circuits, and it is impossible to cover such an immense subject in one popular science article. It is not a topic for an article, nor even for a dissertation. At any rate, not for a master's thesis. Because of the format, fellow experts will find plenty of simplifications, inaccuracies and perhaps even factual errors here. I would prefer that there were none, but corrections are welcome by private message. Without claiming completeness or novelty, I hope I have managed to describe clearly the most popular questions and misconceptions about radiation resistance, and also to convey to the reader the idea that microelectronics in general, and space microelectronics in particular, is one of the most rapidly progressing areas of science and technology. Time-tested knowledge and stereotypes are therefore often obsolete, incomplete or incorrect, and nobody uses simple and obvious recipes, because in reality they do not exist.

Figure 1. An indispensable beautiful picture about cosmic radiation and fragile Earth.

The most popular theses on radiation resistance, used in near-cosmic disputes, look like this:

- Radiation-hardened chips are not needed. CubeSats fly perfectly well on ordinary ones, ordinary Lenovo laptops are used on the ISS, Dragon carries ordinary chips, and even NASA put ordinary chips in Orion!

- It is perfectly fine to put very old microcircuits on satellites, all the way down to discrete logic; no serious performance is needed there. But you cannot do without radiation resistance, which is why they fly on old junk.

- A thesis that complements the previous one: it is impossible in principle to make radiation-hardened microcircuits at small design rules, so the use of time-tested components is not just justified but necessary.

- For a microcircuit to be radiation resistant, it is necessary and sufficient to make it in “silicon on insulator” or “silicon on sapphire” technology.

- All “military” chips are radiation resistant, and all radiation-resistant chips are “military”.

As you can see, some of these theses directly contradict each other - which is regularly the subject of controversy or the reason for far-reaching wrong conclusions.

The conversation has to start with an important disclaimer: radiation resistance is not the center of the universe, nor the only quality a chip must have to be suitable for space or another hostile environment. Radiation resistance is only one requirement in a long list that includes reliability, an extended temperature range, resistance to electrostatic discharge and vibration resistance, plus reliable confirmation of all of these parameters, that is, long and expensive certification. Anything that can keep the chip from working for its entire required service life matters, and most applications of radiation-hardened chips assume that repair or replacement is impossible. On the other hand, if one of the parameters falls short, the designer of the end product can often find a way around the limitation: put the chip most sensitive to radiation dose behind a thick wall, monitor the supply current of a chip vulnerable to the thyristor effect and reset it when necessary, or thermostat a chip with a narrow temperature range. Or a workaround may not be found, and the only way to solve the task will be to order a new radiation-hardened ASIC.

It is also useful to remember that developers of special-purpose systems are people just like any other developers. Many of them also like to write code full of hacks for a deadline that was yesterday and to get more powerful hardware so that the code actually runs on it; some would use Arduino if it were properly certified. And, of course, the people who set requirements for developers of special-purpose systems and of the microcircuits for them rarely hold back, whether on reliability, on performance or on radiation resistance. So modern design rules are very much needed on satellites: people want large amounts of DRAM, multi-core processors and the most modern FPGAs. I have already mentioned above that the consequences of poor radiation resistance and other potential problems can be at least partly worked around, so what holds developers back from using all this splendor is more the lack of data on what exactly has to be worked around than the commercial status of the chips.

Radiation effects

The concepts of "radiation resistance" and "radiation-resistant chip" are huge simplifications. In reality there are many different sources of ionizing radiation, and they can affect the operation of electronic devices in different ways. Accordingly, different applications require resistance to different sets of factors and different exposure levels, so a “resistant” chip designed for low Earth orbit is under no obligation to work properly while clearing rubble at Chernobyl.

Ionizing radiation is called ionizing because the energy released in the bulk of a substance as incoming particles slow down ionizes that substance. Each material has its own energy required for ionization and the creation of an electron-hole pair. For silicon it is 3.6 eV, for silicon oxide 17 eV, for gallium arsenide 4.8 eV. An incoming particle may also, instead of ionizing an atom, “knock” it out of its proper place in the crystal lattice (in silicon this requires transferring 21 eV to the atom). Electron-hole pairs created in a substance can affect its electrical and physical properties and the behavior of an electrical circuit in different ways. Radiation effects can be divided into four large groups: total absorbed dose effects, dose rate effects, single-event effects caused by individual particles, and displacement effects. This division is somewhat arbitrary: for example, irradiation with a flux of heavy ions, which cause single-event effects, also accumulates total absorbed dose.

Dose Effects

The total absorbed radiation dose is measured in rads, with an indication of the substance absorbing the radiation. 1 rad = 0.01 J / kg, that is, the amount of energy released per unit mass of the substance. A less commonly used unit is the gray, equal to 100 rad (or 1 J / kg). It is important to understand that for the same number of ionizing particles emitted by a radiation source (this is the exposure dose), the absorbed dose will differ from one substance to another. For silicon chips the material of interest is silicon oxide, because it is the effect on the oxide, not on the silicon, that mainly determines the electrical characteristics of the circuit: the mobility of holes in SiO2 at normal temperature is so low that they accumulate in the oxide, creating a built-in positive charge. Typical dose tolerance levels of commercial microcircuits are in the range of 5-100 krad (Si); the radiation resistance levels demanded by customers start at 30 krad (Si) and end somewhere around 1 Grad (Si), depending on the purpose of the microcircuit. The lethal dose for humans is about 6 gray.
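To get a feel for these units, here is a small conversion sketch. The helper names `gray_to_rad` and `rad_to_gray` are made up for this example, and the comparison deliberately ignores the difference between absorbing materials (tissue versus silicon), so it only shows the order of magnitude.

```python
RAD_PER_GRAY = 100.0  # 1 Gy = 1 J/kg = 100 rad, as stated in the text


def gray_to_rad(gray):
    return gray * RAD_PER_GRAY


def rad_to_gray(rad):
    return rad / RAD_PER_GRAY


print(gray_to_rad(6))       # 600.0 rad: the lethal dose for a human
print(rad_to_gray(30e3))    # 300.0 Gy: the entry level of 30 krad (Si)
print(rad_to_gray(100e3))   # 1000.0 Gy: the upper end for commercial chips
```

Even the lowest hardness level customers ask for, 30 krad (Si), is about fifty times the dose that is lethal for a person.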

Figure 2. Examples of calculated total absorbed dose accumulated over 10 years in various circular orbits behind 1 g / cm ^ 2 of shielding. Source: N.V. Kuznetsov, "Radiation hazard in near-earth orbits and interplanetary trajectories of spacecraft."

Total dose effects are associated with the accumulation of this positive charge in dielectrics and show up in CMOS circuits in several main ways:

- Threshold voltage shift of transistors, caused by the accumulation of positive charge in the gate dielectric and the resulting change of the electric field in the transistor channel. For n-channel transistors the threshold usually decreases (though the dependence can be non-monotonic), while for p-channel transistors it increases, and the magnitude of the shift correlates with gate oxide thickness, that is, with design rules. In circuits with coarse design rules the thresholds can shift enough to cause functional failure (n-channel transistors stop turning off, p-channel transistors stop turning on); in submicron technologies this effect matters less, but in analog circuits it can cause plenty of headaches.

- Uncontrolled leakage current. It can flow from the source of a transistor to its own drain or to a neighboring transistor. The leakage is caused by the accumulation of positive charge, not in the gate dielectric but in the thick isolation dielectric. In effect, a parasitic transistor forms in parallel with the main one, and its gate voltage is controlled by the radiation dose. How strongly this effect shows up is determined by the geometry of the transition from the gate dielectric to the isolation dielectric, so it depends much more on the specific technology than on design rules.

- Reduced charge carrier mobility due to the accumulation of defects on which carriers scatter. The influence of this factor on submicron digital silicon circuits is small, but it matters more for power transistors, including those on compound semiconductors (gallium nitride and silicon carbide).

- Increased 1 / f noise caused by parasitic edge transistors. It matters for analog and radio frequency circuits. The importance of this effect grows as design rules shrink and the influence of other dose effects decreases.

In bipolar circuits the main dose effect is a drop in gain caused by an increase in base current due to leakage from emitter to base along the interface between the silicon and the passivating oxide. Another dose effect specific to bipolar transistors is that they may (though not necessarily) respond not only to the accumulated dose but also to the rate at which it accumulates: the more slowly the dose is accumulated, the worse the tolerance. This effect is called ELDRS (Enhanced Low Dose Rate Sensitivity), and it greatly complicates testing and makes it more expensive, often not only for bipolar but also for CMOS circuits, because those sometimes contain bipolar transistors too, and because it is easier to make everyone test uniformly than to work out where ELDRS can occur and where it cannot.

Dose rate

The other class of dose rate effects involves ultrafast dose accumulation, in which so many electron-hole pairs are generated in the chip that they do not have time to recombine, and a huge electric charge is introduced into the chip. This charge drains away through the ground and power lines over a considerable time, during which the circuit stops working. This time is called the “loss-of-operability time” and is the main measure of how resistant a chip or device is to this kind of effect. In addition, the large amount of charge introduced into the chip seriously shifts the potentials of regions connected to ground and to the power supply, which can lead to the thyristor effect.

It was for resistance to high dose rate effects that the “silicon on sapphire” and “silicon on insulator” technologies were originally developed, because the only way to reduce the charge introduced into the circuit is to separate the active region of the chip from the bulk of the substrate, keeping the charge generated in the substrate out of the process. Why do these effects matter? A high dose rate lasting a short time is a typical consequence of a nuclear explosion.

Single-event effects

Single-event effects are associated not with prolonged exposure to radiation, but with the measurable effect of a single ionizing particle hit. They can be divided into two large groups:

- Non-destructive. These include upsets in various storage elements (cache memory, register files, FPGA configuration memory and so on) and transients in combinational logic and analog circuits. The main feature of these effects is that they do not physically damage the chip and can be corrected in software or hardware. Moreover, transients go away by themselves after some time (the question is how long). Upsets in memory arrays are of the greatest practical interest, simply because they make up the lion's share of all upsets given the large amount of memory in modern microelectronic systems.

- Destructive. These include the thyristor effect and a variety of other, fortunately rare, effects such as gate rupture or avalanche burnout of a transistor. Their distinctive feature is that they irreversibly destroy an element of the chip. With the thyristor effect, the chip can usually (but not always!) be saved if power is cut quickly. Destructive effects are a serious danger for some types of flash memory and for devices with high voltages and current densities, the most important of which are power switches.

The specific energy loss of an ionizing particle is called “linear energy transfer” (LET) and is measured in MeV transferred per unit length of the particle's path in the material, per unit density of the material, that is, in (MeV * cm ^ 3) / (mg * cm) or (MeV * cm ^ 2) / mg. LET depends non-linearly and non-monotonically on particle energy and is related to the particle's range, which for the particles and materials relevant to microelectronics can be anywhere from hundreds of nanometers to hundreds of millimeters.
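To connect LET with something a circuit designer can feel, the sketch below converts LET into the charge a particle deposits per micron of track in silicon. It uses the 3.6 eV per electron-hole pair quoted earlier; the silicon density of about 2.33 g / cm ^ 3 is an added assumption, and the function name `charge_per_micron_fc` is made up for this example.

```python
SI_DENSITY_MG_PER_CM3 = 2330.0     # silicon, about 2.33 g/cm^3 (assumed constant)
EV_PER_PAIR_SI = 3.6               # energy per electron-hole pair in silicon, from the text
ELEMENTARY_CHARGE_C = 1.602e-19    # charge of one electron, coulombs


def charge_per_micron_fc(let_mev_cm2_per_mg):
    """Charge (in femtocoulombs) generated per micron of track in silicon."""
    mev_per_cm = let_mev_cm2_per_mg * SI_DENSITY_MG_PER_CM3
    ev_per_micron = mev_per_cm * 1e6 / 1e4          # MeV -> eV, cm -> micron
    pairs_per_micron = ev_per_micron / EV_PER_PAIR_SI
    return pairs_per_micron * ELEMENTARY_CHARGE_C * 1e15


print(round(charge_per_micron_fc(1), 1))    # about 10.4 fC per micron
print(round(charge_per_micron_fc(30), 1))   # about 311 fC per micron
```

So a particle with a LET of 1 MeV * cm ^ 2 / mg leaves roughly 10 fC of charge along every micron of its track, and the deposited charge scales linearly with LET.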

The number of particles encountered in space decreases as LET increases (see Figure 4). Important values are 30 (corresponding to iron ions) and 60 or 80 (beyond which the probability of an event is considered negligible). Another important figure is 15 MeV * cm ^ 2 / mg, the maximum LET that the products of a nuclear reaction can have when a proton or neutron hits silicon. Protons are one of the main components of solar radiation, and although their own LET is small (tenths of a unit), they have a significant effect through nuclear reactions and secondary ionization. Secondary ionization can occur directly in the active region, or it can result from a proton hitting an atom of some material with a large atomic number, for example tungsten or tantalum. Heavy elements are widely used in modern microelectronic technology, for example in the contacts between silicon and the first metallization layer. Secondary ionization is also the reason why chips should not be packed into lead boxes to increase radiation resistance.

Figure 3. The dependence of LET on energy for different types of particles.

Helium nuclei (alpha particles) deserve separate attention, not only because there are quite a lot of them in solar radiation, but also because plenty of alpha sources can be found in everyday life.

Figure 4. Comparison of the number of particles of different types for a two-year mission in orbit, from Xapsos et al., "Model for Cumulative Solar Heavy Ion Energy and Linear Energy Transfer Spectra", IEEE TNS, Vol. 54, No. 6, 2007

1, 30 or 60 MeV * cm ^ 2 / mg: is that a lot or a little? The upset threshold of a standard memory cell in a 7 nm technology is well below one, and at 180 nm it ranges from one to ten. Special circuit design can raise the threshold to, say, a hundred, but it is usually wiser to aim for 15 or 30 units and filter out the remaining rare events with error-correcting codes. 60 units is the figure that usually appears in requirements for resistance to destructive effects.
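Error-correcting codes come in many forms. As one illustration (not necessarily what any particular radiation-hardened memory uses), here is a minimal Hamming(7,4) sketch that corrects any single flipped bit in a 7-bit codeword. The function names are illustrative.

```python
def hamming74_encode(data):
    """Encode 4 data bits as a 7-bit codeword [p1, p2, d1, p4, d2, d3, d4]."""
    d1, d2, d3, d4 = data
    p1 = d1 ^ d2 ^ d4   # covers positions 1, 3, 5, 7
    p2 = d1 ^ d3 ^ d4   # covers positions 2, 3, 6, 7
    p4 = d2 ^ d3 ^ d4   # covers positions 4, 5, 6, 7
    return [p1, p2, d1, p4, d2, d3, d4]


def hamming74_decode(codeword):
    """Correct at most one flipped bit.

    Returns (data_bits, error_position), where error_position is the
    1-based position of the corrected bit, or 0 if no error was seen.
    """
    c = list(codeword)
    s1 = c[0] ^ c[2] ^ c[4] ^ c[6]
    s2 = c[1] ^ c[2] ^ c[5] ^ c[6]
    s4 = c[3] ^ c[4] ^ c[5] ^ c[6]
    position = s1 + 2 * s2 + 4 * s4   # the syndrome is the error position
    if position:
        c[position - 1] ^= 1          # flip the faulty bit back
    return [c[2], c[4], c[5], c[6]], position


if __name__ == "__main__":
    word = hamming74_encode([1, 0, 1, 1])
    word[4] ^= 1                      # simulate a single upset
    print(hamming74_decode(word))
```

A code like this handles one upset per word; two flips in the same word are beyond what it can correct, which is one reason multiple-bit upsets matter.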

Displacement effects

Displacement effects are local damage to the crystal lattice, that is, the "knocking out" of an atom from its proper place. The energy required to damage the lattice is usually quite high, so most incoming particles do not cause this effect. But it can be caused by a nuclear reaction following a proton or neutron hit, and there are plenty of those in orbit. Such local lattice defects lead to a decrease in charge carrier mobility, an increase in noise, and some other effects. They affect conventional CMOS chips less than “normal” dose effects, but they dominate in solar cells, photodetectors, power transistors, and in compound semiconductors that have no oxide, such as gallium arsenide and gallium nitride. This explains the high dose resistance of the latter: they simply lack the effects that cause the rapid degradation of silicon chips, and what they do have appears weaker and later. The amount of radiation causing displacement effects is measured in particles (usually protons or neutrons) per square centimeter of chip area.

So, now that we have sorted out the ways radiation affects a chip, let's see where and in what combinations they threaten it.

What? Where? When?

Figure 2 shows an example calculation of total dose accumulation in different orbits. A lot of assumptions have to be spelled out here (solar activity, the shape, material and thickness of the shielding, and so on), but although the figure is a typical spherical cow in a vacuum, the trend is clear: the rate of total dose accumulation can differ by five orders of magnitude between orbits. At the same time, in low orbits, below the first Van Allen belt, the dose accumulates so slowly that many ordinary commercial chips can withstand several years in such conditions. Never mind microcircuits: even the much more fragile humans fly there for years without serious consequences for their health. Meanwhile, low orbits account for practically all of manned spaceflight, Earth remote sensing, satellite communications, the satellite Internet promised very soon and, last but not least, as the Americans say, almost all CubeSats.

Commercial chips in low orbits

Actually, it is the popularity and importance of low orbits that gives rise to the speculation that expensive radiation-hardened chips are not needed and that ordinary ones will do just fine. But the use of commercial microcircuits in space has pitfalls of its own, which show up even in low orbits.

First, the Van Allen belts protect the Earth and its immediate surroundings only from light particles, mainly solar electrons and protons. Heavier particles, although much rarer, easily reach even our last shield, the atmosphere, and naturally cause single effects, including the thyristor effect, which can irreversibly destroy a microcircuit at any moment and with it the entire spacecraft. Therefore, commercial chips can be used only if measures are taken to protect them from single effects.
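One common protective measure against the thyristor effect is to watch the supply current and cut power when it jumps. Below is a minimal sketch that works on a recorded trace of current samples. The threshold, the debounce count and the function name are hypothetical, and in a real design this logic would typically live in a hardware current limiter, since software may react too slowly.

```python
from typing import Optional, Sequence


def find_latchup(samples_ma: Sequence[float],
                 threshold_ma: float,
                 consecutive: int = 3) -> Optional[int]:
    """Return the index of the sample at which power should be cut,
    or None if the current never stays above the threshold.

    A trip requires `consecutive` samples in a row above `threshold_ma`,
    so that a single noisy reading does not reset the chip.
    """
    run = 0
    for i, current in enumerate(samples_ma):
        run = run + 1 if current > threshold_ma else 0
        if run >= consecutive:
            return i
    return None


if __name__ == "__main__":
    # Hypothetical trace: one noise spike, then a sustained current jump.
    trace = [42, 41, 43, 120, 44, 42, 310, 320, 315, 318]
    print(find_latchup(trace, threshold_ma=100))
```

The debounce trades reaction time for immunity to noise; how many samples a chip can survive in the latched state is something only testing of that chip can tell.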

The second problem is that a satellite carries not only processors and memory but also many other types of chips, including power and analog ones, and their radiation resistance is much more complicated and much less predictable. Besides, modern systems on a chip contain a large number of non-digital blocks. For example, in most flash memory chips the high-voltage generator used for writing stops working; in analog CMOS circuits the reference voltage generated by a pair of bipolar transistors shifts, and even small leakage currents can seriously change the operating point of low-power analog stages. The resistance of power switches to destructive single effects can depend strongly on the voltage applied to them, and so on and so forth.

The third important problem with using commercial microcircuits in space is that resistance to total dose and to the thyristor effect is sensitive to changes in process parameters, even small ones, so if something changes at the factory, you can throw your test results in the trash. And for commercial microcircuits the manufacturer guarantees the stability of functional parameters, not of the process. Moreover, different batches may contain dies from different factories; for example, the Apple A9 processor of the iPhone 6s was made both on TSMC's 16 nm process and on Samsung's 14 nm process, and users were not told which version was in their phone. To deal with this problem, radiation-hardened microcircuits all over the world are developed using Trusted Foundries or certified processes, in a word, some form of guarantee from the factory that the process remains unchanged.

In sum, the correct answer to the question "can conventional commercial chips be used in space?" is "yes, but with caveats": only where the dose allows it, with protection against single effects, and with tests of the very batch you are going to fly. A piece of useful advice: if you do decide to use a commercial chip and invest in testing it, buy a ten-year stock right away. Incidentally, this is essentially the business model of the large and respected company 3D Plus: they test commercial chips one after another, find those that happen (in fact, by chance) to have sufficient hardness, buy large lots, and then repackage the chips in their own packages under their own brand.

Other orbits

However, satellites do not fly only in low Earth orbit. As an example of different requirements, consider the only way to provide stable communications in the vicinity of the North Pole, a strategically important region for Russia: the Molniya orbit, named after the first spacecraft launched into it.

Figure 5. The Molniya orbit.

The main feature of this orbit is that, because of its high eccentricity (the minimum altitude is about 500 km above the Earth's surface, the maximum is up to 40,000 km, the period is 12 hours), the spacecraft crosses the radiation belts four times a day. The lifetime of the very first Molniya satellites was only about half a year, primarily because radiation reduced the output of the solar panels, which had to feed a powerful (the orbit is high) radio transmitter.
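The 12-hour period follows directly from the quoted altitudes via Kepler's third law. A quick check, assuming a spherical Earth with a mean radius of 6371 km and the standard gravitational parameter (both are assumptions of this sketch, not figures from the article):

```python
import math

MU_EARTH_KM3_S2 = 398600.4418   # standard gravitational parameter of Earth
R_EARTH_KM = 6371.0             # mean Earth radius (spherical-Earth assumption)


def orbital_period_hours(perigee_alt_km: float, apogee_alt_km: float) -> float:
    """Period of an elliptical Earth orbit given perigee and apogee altitudes."""
    semi_major_axis_km = R_EARTH_KM + (perigee_alt_km + apogee_alt_km) / 2.0
    period_s = 2.0 * math.pi * math.sqrt(semi_major_axis_km ** 3 / MU_EARTH_KM3_S2)
    return period_s / 3600.0


if __name__ == "__main__":
    print(f"{orbital_period_hours(500, 40000):.2f} h")
```

Two revolutions a day, each passing through the belts once on the way up and once on the way down, give the four crossings per day.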

In geostationary orbit or in the orbits of navigation satellites, Figure 2 promises us a dose of several hundred krad (Si), while the dose resistance of commercial microcircuits may well be only 5-10 krad (Si), so 10-15 years of active life for such chips in orbit is out of the question. More precisely, it is possible, but it would require much thicker shielding, or shielding made of something denser than aluminum. However, here we are plunging into the wondrous world of spacecraft design, so let's just say that delivering every kilogram to orbit is expensive, and that hiding the most vulnerable electronics deeper inside the body, shielded by other components, is not a bad idea but will not solve the problem completely.
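The arithmetic behind "out of the question" is simple enough to sketch. All numbers below are illustrative assumptions (a 300 krad mission dose spread evenly over 15 years, a 10 krad part), not values read from Figure 2, and a constant dose rate is itself a simplification.

```python
def years_until_dose_limit(tolerance_krad: float,
                           mission_dose_krad: float,
                           mission_years: float) -> float:
    """Years until a part reaches its total dose limit,
    assuming the mission dose accumulates at a constant rate."""
    if mission_dose_krad <= 0 or mission_years <= 0:
        raise ValueError("mission dose and duration must be positive")
    dose_rate_krad_per_year = mission_dose_krad / mission_years
    return tolerance_krad / dose_rate_krad_per_year


if __name__ == "__main__":
    # Hypothetical inputs: 10 krad part, 300 krad over a 15-year mission.
    print(years_until_dose_limit(10, 300, 15))
```

With these inputs the part runs out of dose margin in about half a year, which is why either the shielding or the chip has to change.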

Military ICs

When dispelling myths about radiation hardness, it is essential to say that you cannot put an equals sign between "radiation-hardened", "space" and "military" microcircuits. Not all military chips are radiation-hardened, and not all radiation-hardened chips are military. If we turn to the US military standard MIL-STD-883 (the American one, because the radiation part of its Russian counterpart is classified), we will find in it a lot of different environmental tests: thermal cycling, humidity, salt atmosphere, and so on.

The following items relate to radiation:

1017.2 Neutron irradiation

1019.8 Ionizing radiation (total dose) test procedure

1020.1 Dose rate induced latchup test procedure

1021.3 Dose rate upset testing of digital microcircuits

1023.3 Dose rate response of linear microcircuits

Total dose and dose rate. Single effects? No, none. The requirements specification for a microcircuit may include requirements for resistance to single failures and/or the thyristor effect, but these requirements are not standardized and are defined anew each time, based on the needs of the specific customers of each chip. So "military" status is no guarantee that a chip can be launched into space? Exactly. An example is the fate of the notorious Phobos-Grunt, which was lost, according to the official version (very hard to prove, but very convenient), because a heavy charged particle hit an American “military” class memory chip that was not resistant to single failures.

Peaceful atom and others

The importance of radiation resistance is not limited to space and military applications. The natural radiation background at sea level is many times lower than even in low orbit, but the Earth's atmosphere not only serves as the final shield in the path of cosmic radiation, it also creates secondary particles when interacting with it. The secondary particles are mostly neutrons. Born in the upper atmosphere, they usually do not reach the surface, but at airliner cruising altitudes the radiation dose is significant, and very impressive statistics on single failures have been collected. In medicine, X-rays have long been used, and radiotherapy is one of the most important ways to fight malignant tumors, and such machines need electronics too.

And, of course, we should not forget that all the fuss with lead-free solder, so dearly "loved" by all electronics engineers, was started in large part because lead and some other materials used in chip production contain impurities of heavier elements, in particular uranium, and their use produces a small but still well-measurable flux of alpha particles right next to vulnerable silicon. In the case of BGA packages or 3D assemblies, over the entire surface of the vulnerable silicon.

Figure 6. Illustration of a solder ball as a source of alpha particles.

The good news is that alpha particles have a rather short range in silicon (from a few microns to tens of microns, depending on energy), and multi-layer metallization helps reduce their influence. The bad news is that at small design rules every alpha particle that does reach silicon causes upsets, and not only single but also multiple ones (more on this below). For example, last year TSMC published a paper at the 2018 IEEE International Reliability Physics Symposium on measuring the failure rate caused by alpha-particle contamination in 7 nm memory, so the problem still exists and still requires action in a world where everything has switched to lead-free solder.

Another application of radiation-resistant chips that deserves a few words is high energy physics and nuclear power. In the hearts of hadron colliders and nuclear reactors (as well as in equipment designed for cleaning up after radiation accidents), electronics are also needed, and it is highly desirable that they not need replacement or repair for a considerable time. The total absorbed dose requirements for such applications are tens and even hundreds of Mrad (Si), that is, three orders of magnitude more than in conventional space applications. The situation is further complicated by the fact that such hardness is required not of digital circuits but of power and analog circuits: control circuits for electric drives and the primary processing of readings from multi-channel sensors. And while ensuring the dose hardness of digital circuits is more or less understood even at high doses, in analog design the development of the circuit itself is of fundamental importance, and the resulting circuit is even more of a know-how than is usual in analog design.

Figure 7. Y. Cao et al., «A 4.5 MGy TID-Tolerant CMOS Bandgap Reference Circuit Using a Dynamic Base Leakage Compensation Technique», IEEE TNS, Vol. 60, No. 4, 2013

I will illustrate the scale of the tasks facing the developers of chips for the nuclear industry with my favorite example. A reference voltage source equal to the silicon bandgap voltage (a bandgap reference) is a relatively simple and well-known circuit. Under irradiation, the parameters of the bipolar transistors used as diodes change (the gain drops because of emitter-base leakage). As a result, the reference voltage of a conventional bandgap circuit, which determines the accuracy of all measurements, can shift by, say, 15–20%, which corresponds to an effective ADC resolution of two to three bits. In the circuit on the right, the reference voltage varies within 1% (roughly 7 bits) at a total ionizing dose of 4.5 MGy. To achieve this impressive result, the circuit had to be seriously reworked, with a whole handful of feedback loops added to compensate for dose-induced leakage. The hardened version has about four times as many elements as the ordinary one, and its power consumption is twice as high. And the worst news is that for each new circuit the strategy for ensuring radiation resistance and its implementation have to be developed separately. And there is still the problem of protecting analog circuits from single effects, the solution of which is also rather poorly formalized.
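The link between reference drift and ADC resolution can be estimated with a rule of thumb, assumed here rather than taken from the cited paper: a relative error of the reference leaves about log2(1 / error) trustworthy bits. The function name is illustrative.

```python
import math


def effective_bits(relative_error: float) -> float:
    """Rough number of trustworthy ADC bits for a given relative
    error of the reference voltage (rule-of-thumb estimate)."""
    if not 0.0 < relative_error < 1.0:
        raise ValueError("relative_error must be between 0 and 1")
    return math.log2(1.0 / relative_error)


if __name__ == "__main__":
    for error in (0.20, 0.15, 0.01):
        print(f"{error:.0%} drift -> {effective_bits(error):.1f} bits")
```

By this estimate a 15-20% shift leaves between two and three bits, and 1% leaves about 6.6 bits; the exact figure depends on how the error budget is defined.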

Radiation resistance and design rules