Redundancy in Kubernetes: it exists

My name is Sergey, I am from ITSumma, and I want to tell you how we approach redundancy in Kubernetes. Lately I have been doing a lot of consulting on implementing various DevOps solutions for different teams, and in particular I work closely on projects that use K8s. At the Uptime Day 4 conference, which was devoted to redundancy in complex architectures, I gave a talk on redundancy for the "kube", and here is a loose retelling of it. A warning in advance: this is not a step-by-step guide, but rather a summary of my thinking on the topic.

In principle, monitoring and redundancy are the two main tools for increasing the fault tolerance of any project. But in Kubernetes everything balances itself, you will say, everything scales by itself, and if something goes down, it comes back up by itself... Indeed, when I first looked into the topic superficially and asked how K8s approaches redundancy, the Internet answered me: "What for?" Many people think that Kubernetes is a magical thing that eliminates all infrastructure problems and ensures the project never goes down. But... the world is not what it seems.

How did we approach redundancy before? We had identical hosting sites, either virtual machines or bare-metal servers, to which we applied three basic practices:

- synchronization of code and static files

- configuration synchronization

- database replication

And voilà: at any moment we can switch to the backup site, everyone is happy, and we pack up and go home.

And what are we offered for improving the availability of our application in Kubernetes? The first thing the unofficial documentation says is to add a lot of machines and run several masters: their number must satisfy the conditions for reaching quorum within the cluster, and each master should run etcd, the API server, the controller manager and the scheduler... And then, supposedly, everything is fine: when several worker nodes or masters fail, the cluster rebalances and the application keeps working. Again it looks like magic! But often the cluster sits inside a single data center, and that raises certain questions. What if an excavator came and dug up the cable, lightning struck, or there was a biblical flood? That's it, our cluster is gone. How do we approach redundancy with this side of the problem in mind?
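To make the quorum condition concrete, here is a minimal Python sketch of the usual majority arithmetic for etcd members. The function names are mine and do not come from any Kubernetes tooling; the sketch only shows why an even number of masters buys nothing over the odd number just below it.

```python
def quorum(members: int) -> int:
    """Smallest majority among `members` voting etcd instances."""
    if members < 1:
        raise ValueError("a cluster needs at least one member")
    return members // 2 + 1


def tolerated_failures(members: int) -> int:
    """How many members may fail while a majority is still alive."""
    return members - quorum(members)


if __name__ == "__main__":
    for n in range(1, 8):
        print(f"{n} masters: quorum {quorum(n)}, "
              f"survives {tolerated_failures(n)} failure(s)")
```

Three masters survive one failure, four still survive only one, and five survive two. None of this arithmetic helps when the whole data center is gone.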

First of all, you should have another hot standby cluster, that is, a cluster you can switch to at any moment. From the point of view of Kubernetes, the infrastructure must be completely identical. That is, if there are any non-standard plugins for working with the file system or custom ingress solutions, they must be completely identical on your two (or three, or ten, depending on how much money and admin capacity you have) clusters. You need to clearly define two sets of applications (Deployments, StatefulSets, DaemonSets, CronJobs and so on): those that can run on the standby all the time, and those that are better not started until the actual switchover.

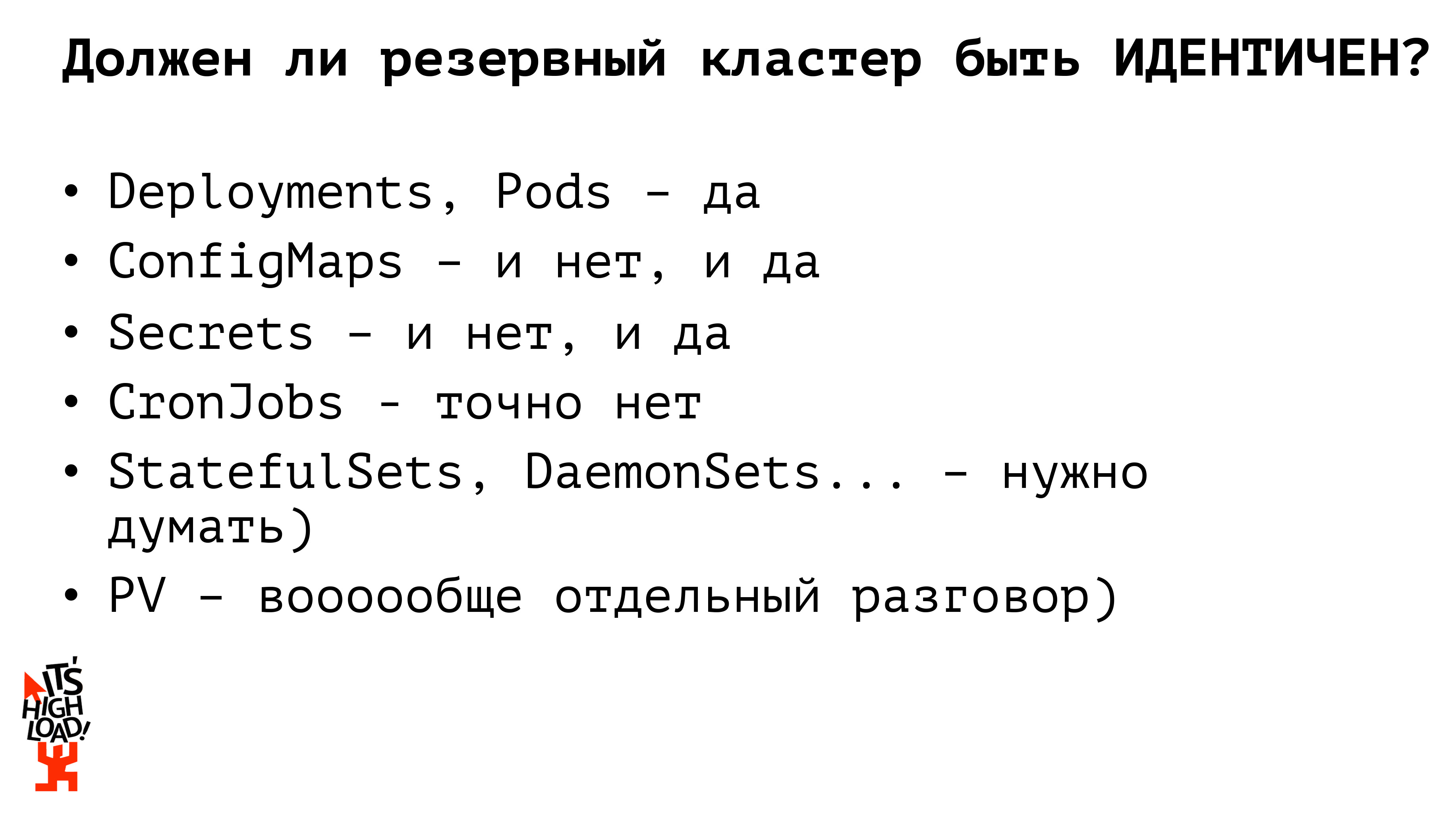

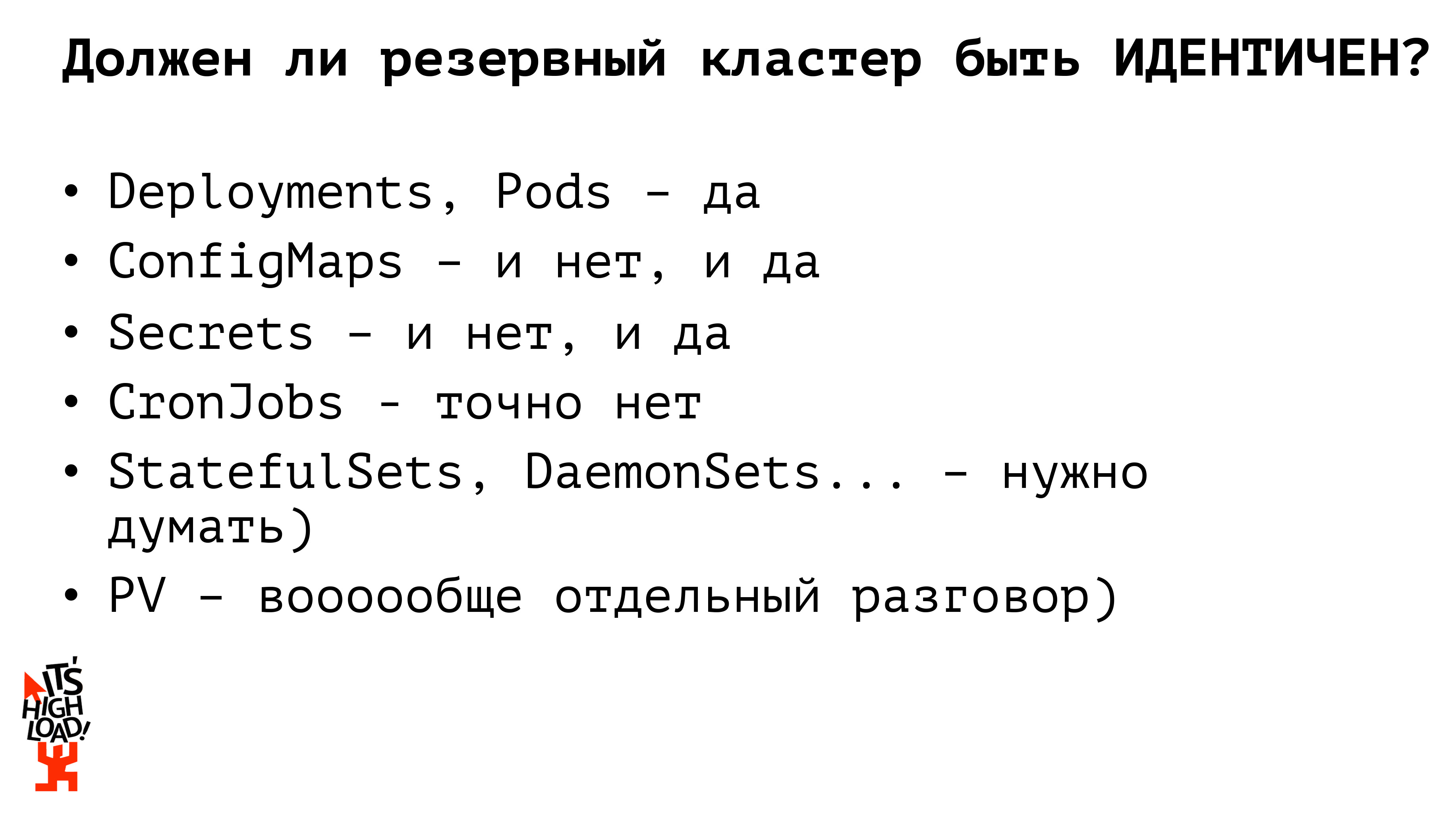

So, should our standby cluster be completely identical to our production cluster? No. Earlier, when working with monolithic projects on bare-metal infrastructure, we kept almost completely identical environments, but with Kubernetes, I believe, that should not be the case. Let's look at why.

Let's start with the core Kubernetes entities, Deployments: they should be identical. Applications must be running that can take over traffic at any moment and let our project keep living. As for configuration files, we need to look at whether they should be identical or not. That is, if we, being sensible people, don't use any prohibited substances and don't keep the database in K8s, then the ConfigMaps should hold the access settings for the production database (its backup process is set up separately). Accordingly, to give access to the standby database instance, we need a separate configuration file (ConfigMap). We handle Secrets in exactly the same way: database passwords, API keys; at any given moment either the production Secret or the standby one is in use. So we already have two Kubernetes entities whose standby versions should not be identical to the production ones.

The next entity worth dwelling on is the CronJob. The CronJobs on the standby must in no case be identical to the set of CronJobs on the production cluster! If we bring up the standby cluster in full, with all CronJobs enabled, then, for example, people will receive two emails from you at once instead of one. Or some data synchronization with external sources will run twice, and then we start to hurt, cry, scream and swear.
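A rough Python sketch of this idea: the same base manifests for both clusters, but a different ConfigMap per cluster and CronJobs suspended on the standby. All names, hosts and the image are hypothetical. `spec.suspend` is the standard CronJob field, and suspending is only one way to keep standby CronJobs quiet; simply not deploying them is another.

```python
import copy
import json

# Hypothetical CronJob shared by both clusters.
BASE_CRONJOB = {
    "apiVersion": "batch/v1",
    "kind": "CronJob",
    "metadata": {"name": "send-newsletter"},
    "spec": {
        "schedule": "0 9 * * *",
        "jobTemplate": {"spec": {"template": {"spec": {
            "restartPolicy": "OnFailure",
            "containers": [{
                "name": "mailer",
                "image": "registry.example.com/mailer:1.0",
            }],
        }}}},
    },
}

# Hypothetical database hosts: each cluster gets its own access settings.
DB_HOSTS = {
    "prod": "db-primary.example.com",
    "standby": "db-reserve.example.com",
}


def render(cluster: str) -> list:
    """Return the manifests for one cluster ("prod" or "standby")."""
    configmap = {
        "apiVersion": "v1",
        "kind": "ConfigMap",
        "metadata": {"name": "app-config"},
        "data": {"DB_HOST": DB_HOSTS[cluster]},
    }
    cronjob = copy.deepcopy(BASE_CRONJOB)
    # Only the production cluster is allowed to fire scheduled jobs.
    cronjob["spec"]["suspend"] = cluster != "prod"
    return [configmap, cronjob]


if __name__ == "__main__":
    for manifest in render("standby"):
        print(json.dumps(manifest, indent=2))
```

At switchover time, the standby CronJobs would be unsuspended and the production ones suspended, so that each scheduled job still runs exactly once.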

But how does the Internet suggest we organize a standby cluster? The second most popular answer after "What for?" is to use Kubernetes Federation.

What is it? It is, let's say, a large meta-cluster. If we picture the Kubernetes architecture, with a master and several nodes, then from the federation's point of view we also have a master and several nodes, only each node is a whole cluster. That is, we work with the same entities and the same primitives as with a single Kubernetes cluster, only we are juggling whole clusters instead of physical machines. Within the federation, federated resources are fully synchronized from the parent to the children. For example, if we launch a Deployment through the federation, it will be rolled out to each of our child clusters. If we take a ConfigMap or a Secret and push it into the federation, it will spread to all child clusters; at the same time, the federation lets us customize resources on the children. That is, we push some ConfigMap through the federation and then, if we need to tweak something on specific clusters, we edit it on the individual cluster, and that change will not be synchronized anywhere.

Kubernetes Federation has not been around for very long, and it does not support the full set of resources that K8s itself provides: when one of the first versions of its documentation was published, it mentioned support only for ConfigMaps, Deployments, ReplicaSets and Ingress. Secrets were not supported, and neither was working with volumes. A very limited set. Especially if we like to have fun and, say, bring our own resources into Kubernetes via custom resource definitions: we cannot push those into the federation. So it is, as it were... a solution that looks very much like the real thing, but it makes us periodically shoot ourselves in the foot. On the other hand, the federation lets us manage our ReplicaSets flexibly. For example, if we want to run 10 replicas of our application, by default the federation will split that number proportionally across the clusters. And all of this is configurable! That is, you can specify that 6 replicas of the application should run on the production cluster and only 4 on the standby, to save resources or just for your own amusement. Which is also quite convenient. But with the federation we have to adopt some new solutions, finish building things on the fly, force ourselves to think a bit harder...
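The 6-and-4 split is simple weighted arithmetic. Here is a small Python sketch of it. This is not the federation's actual scheduling code, and `split_replicas` is a name I made up; it only illustrates dividing a replica count across clusters by weight, with leftovers going to the largest remainders.

```python
def split_replicas(total: int, weights: dict) -> dict:
    """Split `total` replicas across clusters in proportion to `weights`."""
    weight_sum = sum(weights.values())
    if total < 0 or weight_sum <= 0:
        raise ValueError("need a non-negative total and positive weights")
    # Whole replicas each cluster is entitled to (integer math only).
    result = {name: total * w // weight_sum for name, w in weights.items()}
    leftover = total - sum(result.values())
    # Hand out what is left to the clusters with the largest remainders.
    by_remainder = sorted(
        weights,
        key=lambda name: total * weights[name] % weight_sum,
        reverse=True,
    )
    for name in by_remainder[:leftover]:
        result[name] += 1
    return result


if __name__ == "__main__":
    print(split_replicas(10, {"prod": 1, "standby": 1}))  # equal weights
    print(split_replicas(10, {"prod": 6, "standby": 4}))  # custom weights
```

With equal weights each of the two clusters gets 5 replicas; with weights 6 and 4 the production cluster gets 6 and the standby gets 4, matching the example above.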

Can we approach Kubernetes redundancy in some simpler way? What tools do we have at all?

First, we always have some CI/CD system, that is, we don't log in to servers and type create/apply by hand. The system generates the YAML files for our containers.

Second, there are several clusters, and we have one or several (if we are smart) registries, which we have also made redundant. And there is the wonderful kubectl utility, which can work with several clusters at once.

So, in my opinion, the simplest and most correct way to build a standby cluster is a primitive parallel deployment. There is some pipeline in the CI/CD system: first we build our containers, test them, and roll the applications out through kubectl to several independent clusters. We can run the rollouts to several clusters simultaneously. Configuration delivery is also solved at this stage. You can define in advance a set of configurations for the production cluster and a set for the standby cluster and, at the CI/CD level, roll the prod environment out to the prod cluster and the standby environment to the standby cluster. Unlike with the federation, there is no need to go to each child cluster after defining a federated resource and override something. We did it in advance. Well done us.
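The deploy step of such a pipeline can be sketched in a few lines of Python. The context names and manifest directories below are hypothetical; `--context` and `apply -f` are ordinary kubectl options. The sketch only builds the command lines, and a real CI job would run them (for example with `subprocess.run`) and check the exit codes.

```python
import shlex

# Hypothetical mapping: kubeconfig context -> directory with its manifests.
CLUSTERS = {
    "prod": "manifests/prod",
    "standby": "manifests/standby",
}


def deploy_commands(clusters: dict) -> list:
    """Build one `kubectl apply` invocation per independent cluster."""
    return [
        ["kubectl", "--context", context, "apply", "-f", directory]
        for context, directory in clusters.items()
    ]


if __name__ == "__main__":
    for command in deploy_commands(CLUSTERS):
        # Print instead of executing, so the sketch is safe to run anywhere.
        print(shlex.join(command))
```

Because each cluster has its own manifest directory, the prod and standby configurations are decided before the rollout, and nothing has to be overridden on the clusters afterwards.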

But... there is... I had written "the root of all evil," but there are actually two of them. The first is the file system. There is some PV, or we use external storage. If we store files inside the cluster, we have to fall back on the old practices left over from the bare-metal days: for example, synchronizing with lsync. Or any other crutch you prefer. Push the files out to all the other machines and carry on.

The second, and in fact even more important, stumbling block is the database. If we are sensible people and don't keep the database in Kubernetes, then data redundancy follows the same old scheme: master-slave replication, then a switchover, the replica catches up, and we live happily. But if we do keep our database inside the cluster, there are, in principle, many ready-made solutions for setting up the same master-slave replication and many solutions for running a database inside Kubernetes.

A billion talks have already been given about database redundancy, a billion articles have been written, and nothing new is really needed here. In general, follow your dream, live as you wish, invent complicated crutches for yourself if you like, but be sure to think through how you will make all of it redundant.

And now about how, in principle, the switch to the backup site will happen in case of a fire. First of all, we deploy stateless applications in parallel. They do not affect the business logic of our applications or our project, so we can keep two sets of applications running at all times, ready to start accepting traffic. During the switch to the backup site, it is very important to check whether the configuration needs to be overridden. For example, we have a prod Kubernetes cluster, a standby Kubernetes cluster, an external master database and a reserve master database. We have four ways in which these components can interact in production. We can switch the database, in which case we need to point traffic at the new database from within the production cluster; or the cluster can fail, and we move to the standby but keep working with the production database; and the third option is when both have gone down, and we switch both the applications and the database, overriding our configuration so that the new applications work with the new database.

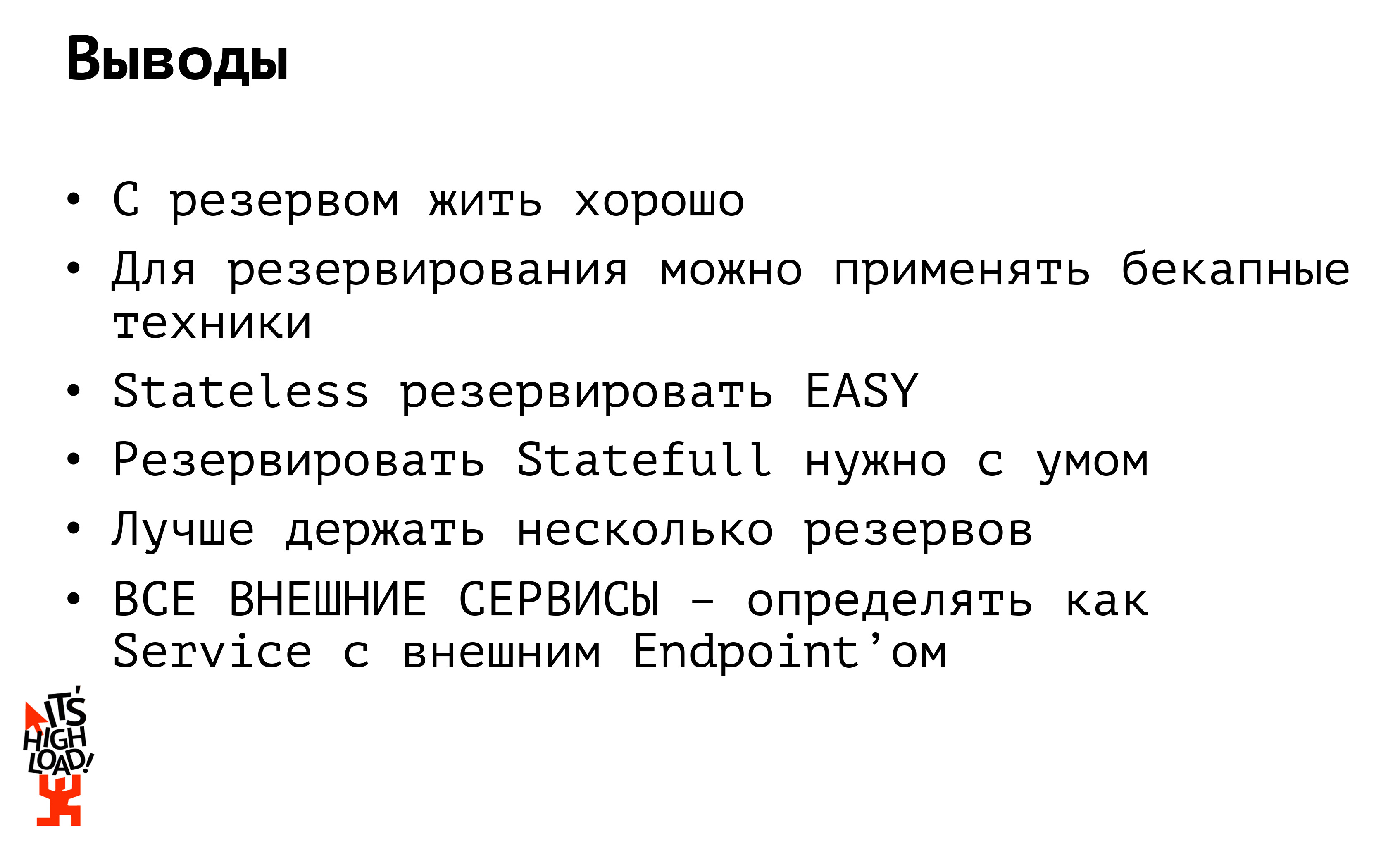

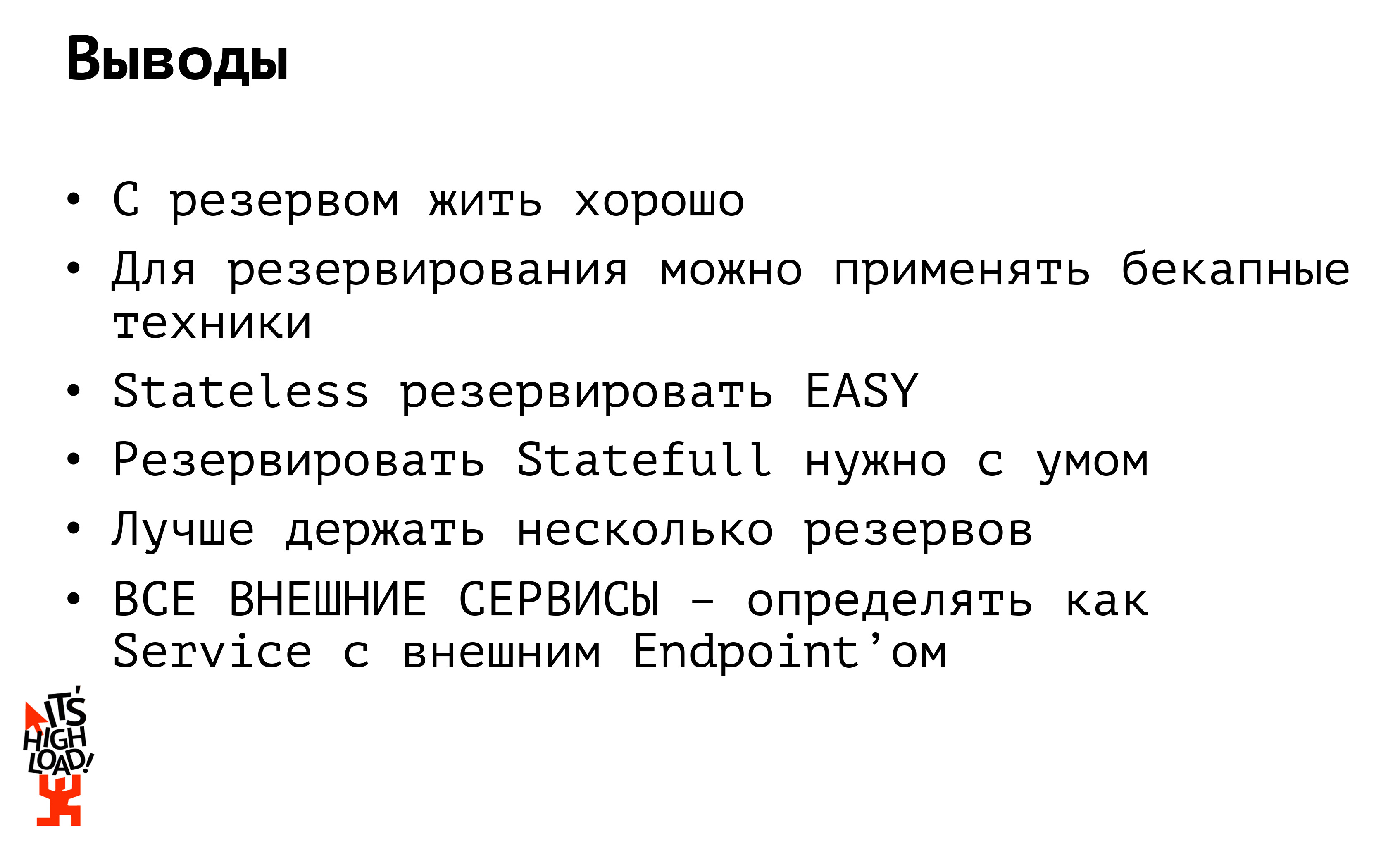
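The combinations of serving cluster and active database can be enumerated mechanically. The Python sketch below does that, with step descriptions worded by me as an illustration; treating the fourth combination as normal operation is my reading, since the text spells out only three.

```python
from itertools import product

CLUSTERS = ("prod", "standby")
DATABASES = ("primary", "reserve")


def switch_steps(cluster: str, database: str) -> list:
    """List what has to happen for `cluster` to serve using `database`."""
    steps = []
    if cluster == "standby":
        steps.append("send user traffic to the standby cluster")
    if database == "reserve":
        steps.append("switch to the reserve database")
    # In every case the serving apps must point at the active database.
    steps.append(f"check that apps on {cluster} use the {database} database")
    return steps


def all_scenarios() -> dict:
    """Every (cluster, database) pair mapped to its steps."""
    return {
        (cluster, database): switch_steps(cluster, database)
        for cluster, database in product(CLUSTERS, DATABASES)
    }


if __name__ == "__main__":
    for (cluster, database), steps in all_scenarios().items():
        print(f"{cluster} cluster + {database} database:")
        for step in steps:
            print(f"  - {step}")
```

Writing the matrix down in advance makes it obvious which configuration sets have to exist before the fire, not during it.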

So, what conclusions can be drawn from all this?

The first conclusion: life with a standby is good. But expensive. And ideally you live with more than one standby. Ideally you need several. First, the standby should at the very least be in a different DC, and second, preferably with a different hosting provider. It has happened often, including in my own practice (unfortunately, I cannot name the projects): there is a fire in the data center, and I say, "Switching to the standby!" And the backup servers were in the same rack...

Or imagine that Amazon gets blocked in Russia (and this has happened). And that's it: what good is a standby that sits somewhere else in Amazon? It is unavailable too. So I repeat: keep the standby at least in another DC, and preferably with another hosting provider.

The second conclusion: if your application in Kubernetes talks to external sources (a database or some external API), be sure to define each of them as a Service with an external Endpoint, so that at the moment of switching you don't have to reconfigure 15 applications that all hit the same database. Define the database as a separate Service and access it as if it were inside your cluster: if the database goes down, you change the IP in one place and carry on happily.
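One common way to do this is a Service without a selector plus an Endpoints object of the same name that holds the external IP. The Python sketch below builds both manifests as dictionaries; the name `main-db`, the port and the 192.0.2.x addresses are placeholders I picked for illustration.

```python
import json


def external_service(name: str, ip: str, port: int) -> list:
    """Service + Endpoints pair pointing at an address outside the cluster."""
    service = {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        # No selector: Kubernetes will not fill in the endpoints by itself.
        "spec": {"ports": [{"port": port, "targetPort": port}]},
    }
    endpoints = {
        "apiVersion": "v1",
        "kind": "Endpoints",
        # Must carry the same name as the Service to be associated with it.
        "metadata": {"name": name},
        "subsets": [{
            "addresses": [{"ip": ip}],
            "ports": [{"port": port}],
        }],
    }
    return [service, endpoints]


if __name__ == "__main__":
    # Applications always connect to "main-db"; only the IP below changes.
    for manifest in external_service("main-db", "192.0.2.10", 5432):
        print(json.dumps(manifest, indent=2))
```

On switchover only the Endpoints object is regenerated with the reserve database's address; the Service, and every application that connects to `main-db`, stays untouched.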

And finally: I love the "kube", and I love experimenting with it. I also like to share the results of those experiments and my personal experience in general. That is why I recorded a series of webinars about K8s; head over to our YouTube channel for details.

Source: https://habr.com/ru/post/452078/
