Mathematicians have discovered the perfect way to multiply numbers.

By breaking large numbers into small numbers, the researchers exceeded the fundamental mathematical speed limit.

Four thousand years ago, the inhabitants of Babylonia invented multiplication. And in March of this year, mathematics improved it.

On March 18, 2019, two researchers described the fastest known method of multiplying two very large numbers. The work marks the culmination of a long-standing search for the most efficient procedure for performing one of the basic operations of mathematics.

“Everyone thinks that the multiplication method they learned in school is the best, but in fact active research is going on in this area,” says Joris van der Hoeven , a mathematician from the French National Center for Scientific Research, one of the co-authors.

The complexity of the set of computational problems, from counting new digits of the number π to detecting large prime numbers, is reduced to the speed of multiplication. Van der Hoeven describes their result as the appointment of a kind of mathematical constraint on the speed of solving many other problems.

')

“In physics, there are important constants such as the speed of light that allow you to describe all sorts of phenomena,” said van der Hoeven. “If you want to know how quickly computers can solve certain mathematical problems, then multiplication of integers occurs in the form of some basic building block, in relation to which such speed can be expressed.”

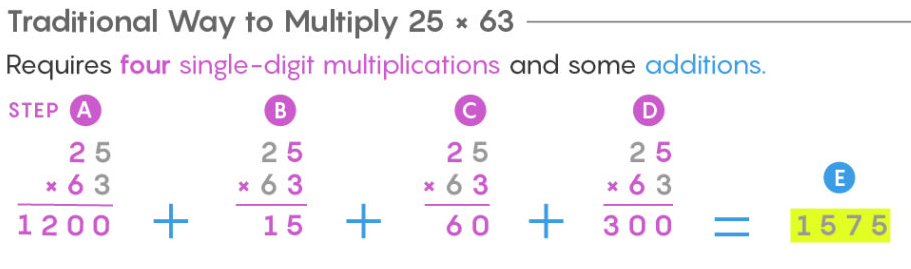

Almost everyone learns to multiply numbers the same way. Write the numbers in the column, multiply the top number for each digit of the bottom (taking into account the digits) and add the result. When you multiply two two-digit numbers, you have to make four smaller multiplications to get a final result.

The school transfer method requires n 2 steps to be performed, where n is the number of digits in each of the multiplied numbers. Calculations with three-digit numbers require nine multiplications, and with three-digit multiplications - 10,000.

The transfer method normally works with numbers consisting of several digits, however, it begins to slip when multiplying numbers consisting of millions or billions of digits (which is what computers do when counting π accurately or when searching for large prime numbers worldwide ). To multiply two numbers with a billion digits, you will need to produce a billion squared, or 10 18 multiplications, - this will take about 30 years for a modern computer.

For several millennia, it was believed that it is impossible to multiply numbers faster. Then in 1960, 23-year-old Soviet and Russian mathematician Anatoly Alekseevich Karatsuba attended a seminar led by Andrei Nikolaevich Kolmogorov , a Soviet mathematician, one of the greatest mathematicians of the 20th century. Kolmogorov stated that there is no generalized multiplication method that requires less than n 2 operations. Karatsuba decided that there was such a way - and after a week of searching he found it.

Anatoly Alekseevich Karatsuba

Karatsuba 's multiplication consists in splitting the digits of a number and re-combining them in a new way, which allows, instead of a large number of multiplications, to conduct a smaller number of additions and subtractions. The method saves time, because the addition takes only 2n steps instead of n 2 .

The traditional method of multiplying 25x63 requires four multiplications by a single number and several additions.

The multiplication of Karatsuba 25x63 requires three multiplications by a single number and several additions and subtractions.

a) break the numbers

b) multiply tens

c) multiply units

d) add numbers

e) multiply these sums

f) count e - b - c

g) collect the total amount of b, c and f

With an increase in the number of characters in numbers, the Karatsuba method can be used recursively.

The traditional multiplication method 2531x1467 requires 16 multiplications by a single digit.

The multiplication of Karatsuba 25311467 requires 9 multiplications.

“Addition to school takes place a year earlier, because it is much simpler, it runs in linear time, with the speed of reading numbers from left to right,” said Martin Führer , a mathematician at Pennsylvania State University, who created the fastest multiplication algorithm at that time in 2007.

When dealing with large numbers, the multiplication of Karatsuba can be repeated recursively, breaking the initial numbers into almost as many parts as there are signs in them. And with each partition, you change the multiplication, which requires many steps, to addition and subtraction, which require far fewer steps.

“Multiple multiplications can be turned into additions, given that computers will cope with this more quickly,” said David Harvey , a mathematician at the University of New South Wales and co-author of the new work.

The Karatsuba method made it possible to multiply numbers using only n 1.58 multiplications by a single digit. Then in 1971, Arnold Schönhage and Volker Strassen published a method for multiplying large numbers in n × log n × log (log n) small multiplications. To multiply two numbers of a billion characters each Karatsuba method will require 165 trillion steps.

Joris van der Hoeven, mathematician from the French National Center for Scientific Research

The Schönhage-Strassen method is used by computers to multiply large numbers, and has led to two other important consequences. First, he introduced the use of a signal processing technique called the fast Fourier transform . Since then, this technique has been the basis of all fast multiplication algorithms.

Secondly, in the same paper, Schönhage and Strassen suggested the possibility of the existence of an even faster algorithm — a method that requires only n × log n multiplications per character — and that such an algorithm will be the fastest possible. This assumption was based on the feeling that in such a fundamental operation as multiplication, the restriction of operations should be written in a somewhat more elegant way than n × log n × log (log n).

“The majority, in general, agreed that multiplication is such an important basic operation that from a purely aesthetic point of view, it requires a beautiful restriction on complexity,” said the Fuhrer. “From experience, we know that the math of basic things always turns out to be elegant in the end.”

The unsharp constraint of Schönhage and Strassen, n × log n × log (log n), lasted 36 years. In 2007, the Fuhrer broke this record, and everything started to turn. Over the past decade, mathematicians have been finding faster and faster multiplication algorithms, each of which gradually crawled to the n × log n mark, not quite reaching it. Then in March of this year, Harvey and van der Hoeven reached it.

Their method is the improvement of the great work done before them. He breaks the numbers into signs, uses an improved version of the fast Fourier transform, and uses other breakthroughs made in the last 40 years. “We use the fast Fourier transform much more crudely, use it several times, not just one, and replace even more multiplications by addition and subtraction,” said van der Hoeven.

The algorithm of Harvey and van der Hoeven proves that multiplication can be carried out in n × log n steps. However, it does not prove the absence of a faster method. It will be much more difficult to establish that their approach is as fast as possible. At the end of February, a team of computer scientists from Aarhus University published a paper claiming that if one of the unproved theorems turns out to be true, then this method really will be the soonest method of multiplication.

And although in theory this new algorithm is very important, in practice it will change little, since it only slightly wins from the algorithms already used. “All we can hope for is a three-fold acceleration,” said van der Hoeven. “Nothing beyond.”

In addition, the circuits of computer equipment have changed. Twenty years ago, computers performed addition much faster than multiplication. The gap in the speeds of multiplication and addition has seriously decreased since then, as a result of which on some chips the multiplication may even overtake addition. Using certain types of equipment, "you can speed up addition, causing the computer to multiply numbers, and this is some kind of madness," said Harvey.

Equipment changes over time, but the best algorithms of its class are eternal. Regardless of how computers look in the future, the algorithm of Harvey and van der Hoeven will still be the most efficient way to multiply numbers.

Source: https://habr.com/ru/post/451860/

All Articles