Another monitoring system

Aggregating the bandwidth of 16 modems across 4 cellular operators. Outgoing speed in a single stream: 933.45 Mbit/s

Introduction

Hello! This article is about how we wrote a new monitoring system for ourselves. It differs from existing ones in that it can acquire metrics synchronously at high frequency while consuming very few resources. The polling period can be as short as 0.1 milliseconds, with the metrics synchronized to within 10 nanoseconds. All the binaries together occupy 6 megabytes.

About the project

We have a rather specific product: a complete solution for aggregating bandwidth and adding fault tolerance to data transmission channels. Given several channels, say Operator1 (40 Mbps) + Operator2 (30 Mbps) + something else (5 Mbps), the result is one stable, fast channel with a speed of approximately (40 + 30 + 5) × 0.92 = 75 × 0.92 = 69 Mbps.
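The arithmetic above can be written down as a small Go sketch. The function name `estimateAggregateMbps` and the constant `aggregationEfficiency` are hypothetical names introduced for illustration; the 0.92 factor is simply the one from the example and is treated as a fixed constant here, which the real system does not necessarily do.

```go
package main

import "fmt"

// aggregationEfficiency is the 0.92 factor from the example above.
// Assumption: it is treated as a constant purely for illustration.
const aggregationEfficiency = 0.92

// estimateAggregateMbps returns the approximate speed of the combined
// channel: the sum of the individual channel speeds scaled by the
// efficiency factor.
func estimateAggregateMbps(channelsMbps ...float64) float64 {
	var sum float64
	for _, c := range channelsMbps {
		sum += c
	}
	return sum * aggregationEfficiency
}

func main() {
	// Operator1 (40 Mbps) + Operator2 (30 Mbps) + something else (5 Mbps).
	fmt.Printf("%.0f Mbps\n", estimateAggregateMbps(40, 30, 5)) // prints "69 Mbps"
}
```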

Such solutions are needed wherever the capacity of any single channel is insufficient: for example, transport, video surveillance and real-time video streaming, live radio broadcasting, and any out-of-town sites where the only telecom operators present are the Big Four and the speed of a single modem or channel is not enough.

For each of these areas we produce a separate line of devices, but their software is almost identical, and a high-quality monitoring system is one of its main modules; without a correct implementation of it, the product would be impossible.

Over several years we have managed to build a multi-level, fast, cross-platform and lightweight monitoring system, which we would like to share with the respected community.

Formulation of the problem

The monitoring system has to obtain metrics of two fundamentally different classes: real-time metrics and all the others. Our requirements for it were as follows:

- High-frequency synchronous acquisition of real-time metrics and their transfer to the communication control system without delay.

High frequency and synchronization of different metrics are not just important but vital for analyzing the entropy of data transmission channels. If the average delay in one data channel is 30 milliseconds, then a synchronization error of just one millisecond between the other metrics degrades the speed of the resulting channel by about 5%. If we are off by 1 millisecond on 4 channels, the speed degradation can easily reach 30%. In addition, the entropy in the channels changes very quickly, so if we measure it less often than once every 0.5 milliseconds, we get a high speed degradation on fast, low-delay channels.

Of course, such accuracy is not needed for all metrics or in all conditions. When the delay in the channel is 500 milliseconds (and we do work with such channels), an error of 1 millisecond is barely noticeable. For life support system metrics, a polling and synchronization period of 2 seconds is enough. However, the monitoring system itself must be able to work with ultra-high polling frequencies and ultra-precise metric synchronization.

- Minimum resource consumption and a single stack.

The end device can be a powerful on-board system that analyzes the situation on the road or performs biometric registration of people, or a small single-board computer that a special forces soldier wears under a bulletproof vest to transmit video in real time under poor communication conditions. Despite this variety of architectures and computing power, we want to have the same software stack everywhere.

- Umbrella architecture.

Metrics must be collected and aggregated on the end device, stored locally, and visualized both in real time and retrospectively. When there is a connection, the data is transferred to a central monitoring system. When there is no connection, the sending queue should accumulate without consuming RAM.

- API for integration into the customer's monitoring system.

Nobody needs many monitoring systems: the customer should be able to collect data from any devices and networks in a single monitoring system.
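The requirement that the sending queue accumulate without consuming RAM can be illustrated with a minimal Go sketch of a disk-backed spool. This is not the implementation described in the article (which, as explained later, relies on Tarantool queues); the `Spool` type, its methods and the record format are hypothetical. The sketch assumes one record per line, offers at-least-once delivery, and does not handle durability details such as fsync.

```go
package main

import (
	"bufio"
	"fmt"
	"os"
	"path/filepath"
)

// Spool is a hypothetical disk-backed sending queue: records wait in a
// file, not in RAM, until a connection is available.
type Spool struct {
	path string
}

// Enqueue appends one record (a single line) to the spool file.
func (s *Spool) Enqueue(record string) error {
	f, err := os.OpenFile(s.path, os.O_APPEND|os.O_CREATE|os.O_WRONLY, 0o600)
	if err != nil {
		return err
	}
	defer f.Close()
	_, err = fmt.Fprintln(f, record)
	return err
}

// Flush streams queued records to send one line at a time and removes the
// spool file only if every record was sent. On a send error the file is
// kept, so records may be sent again later (at-least-once delivery).
func (s *Spool) Flush(send func(record string) error) (int, error) {
	f, err := os.Open(s.path)
	if os.IsNotExist(err) {
		return 0, nil // nothing queued
	}
	if err != nil {
		return 0, err
	}
	sent := 0
	sc := bufio.NewScanner(f) // default limit: lines up to 64 KiB
	for sc.Scan() {
		if err := send(sc.Text()); err != nil {
			f.Close()
			return sent, err
		}
		sent++
	}
	scanErr := sc.Err()
	f.Close() // close before removing, for portability
	if scanErr != nil {
		return sent, scanErr
	}
	return sent, os.Remove(s.path)
}

func main() {
	dir, err := os.MkdirTemp("", "spool")
	if err != nil {
		panic(err)
	}
	defer os.RemoveAll(dir)
	s := &Spool{path: filepath.Join(dir, "metrics.queue")}

	// No connection: records pile up on disk.
	for _, r := range []string{"cpu_temp 61.5", "cpu_temp 62.0"} {
		if err := s.Enqueue(r); err != nil {
			panic(err)
		}
	}
	// Connection restored: drain the queue through a stand-in sender.
	n, err := s.Flush(func(r string) error {
		fmt.Println("sent:", r)
		return nil
	})
	fmt.Println("records sent:", n, "error:", err)
}
```

Because `Flush` reads the file line by line, memory use stays roughly constant no matter how long the device was offline.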

What we got

So as not to overload an already impressive longread, I will not give examples and measurements for all monitoring systems; that would fill another article. Let me just say that we could not find a monitoring system that can take two metrics simultaneously with an error of less than 1 millisecond and that works equally efficiently on an ARM device with 64 MB of RAM and on an x86_64 machine with 32 GB of RAM. So we decided to write our own, which can do all of this. Here is what we got:

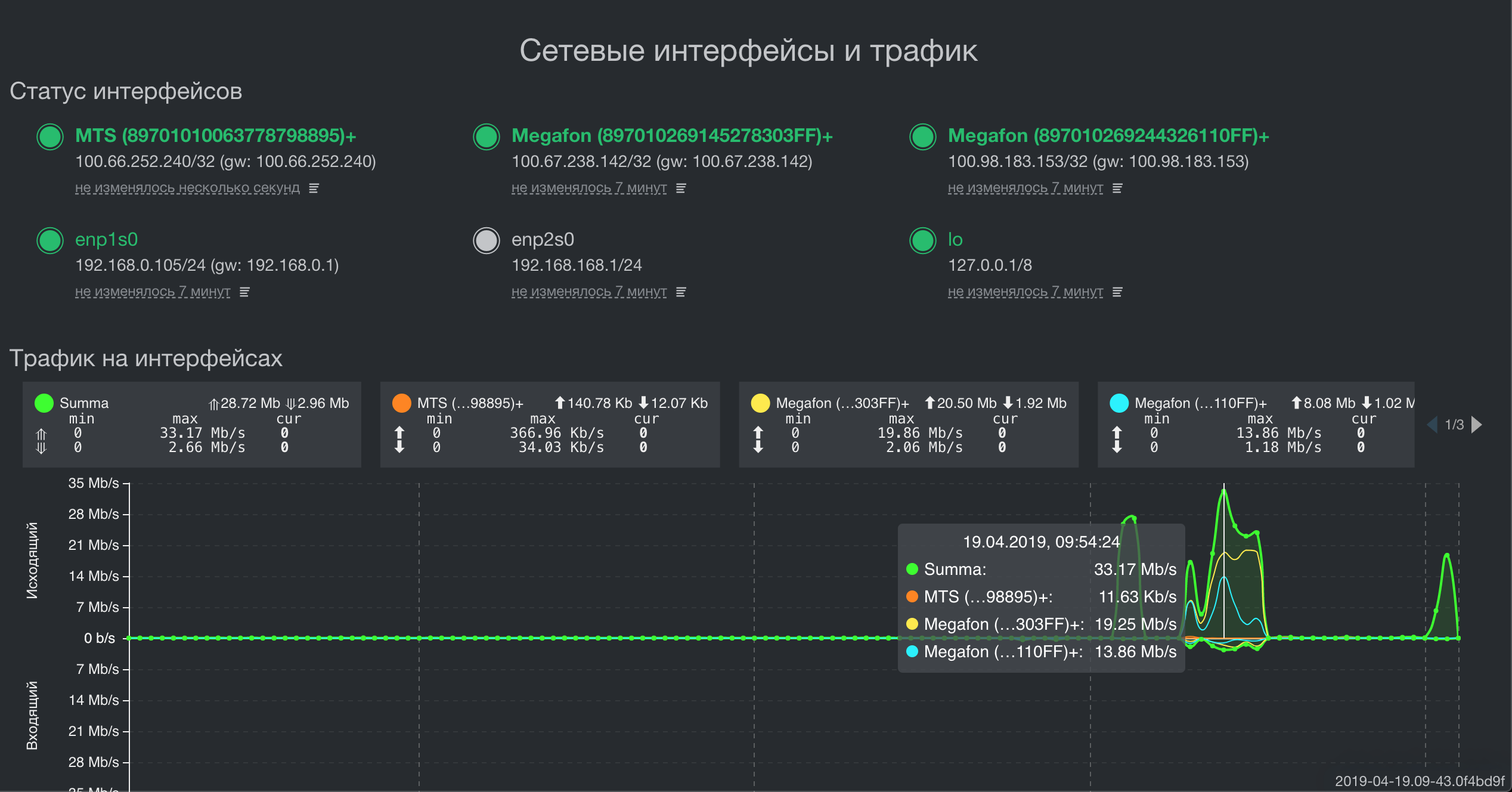

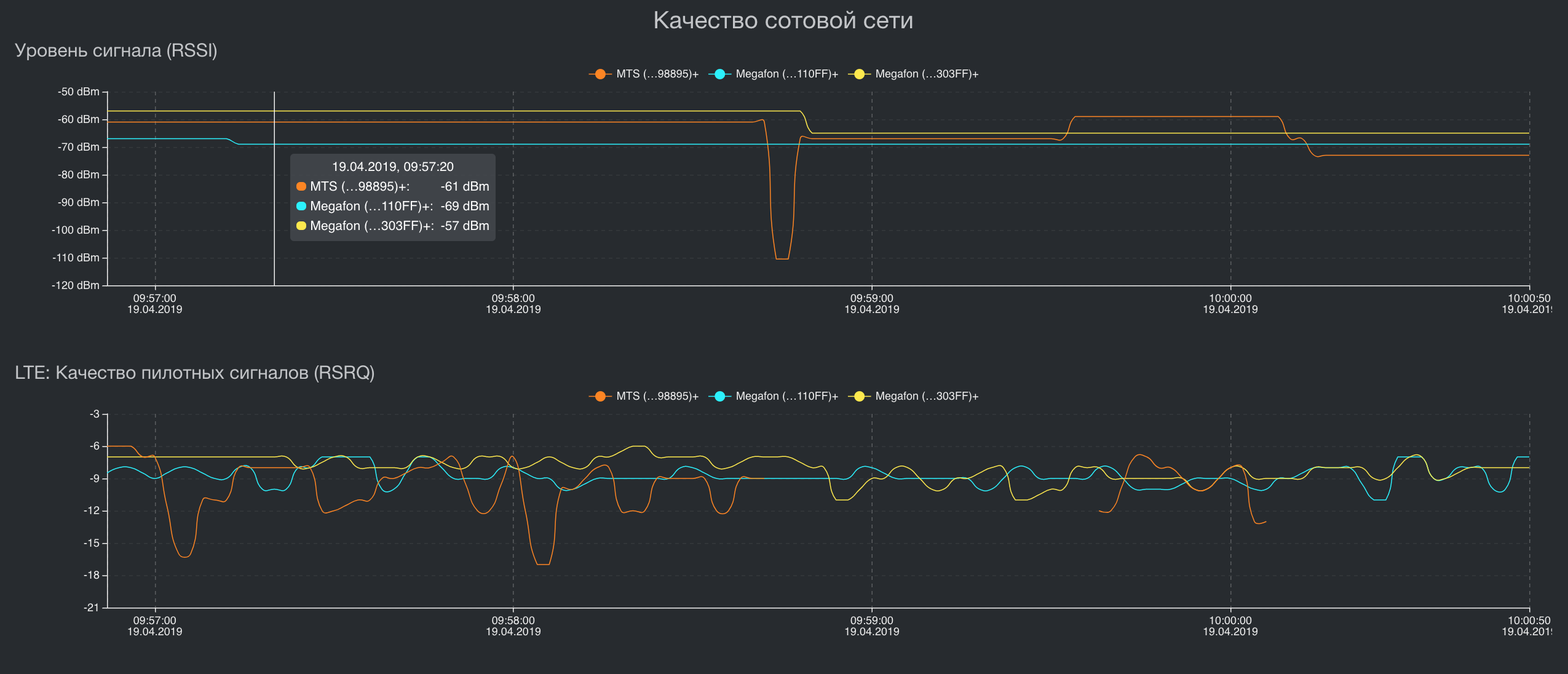

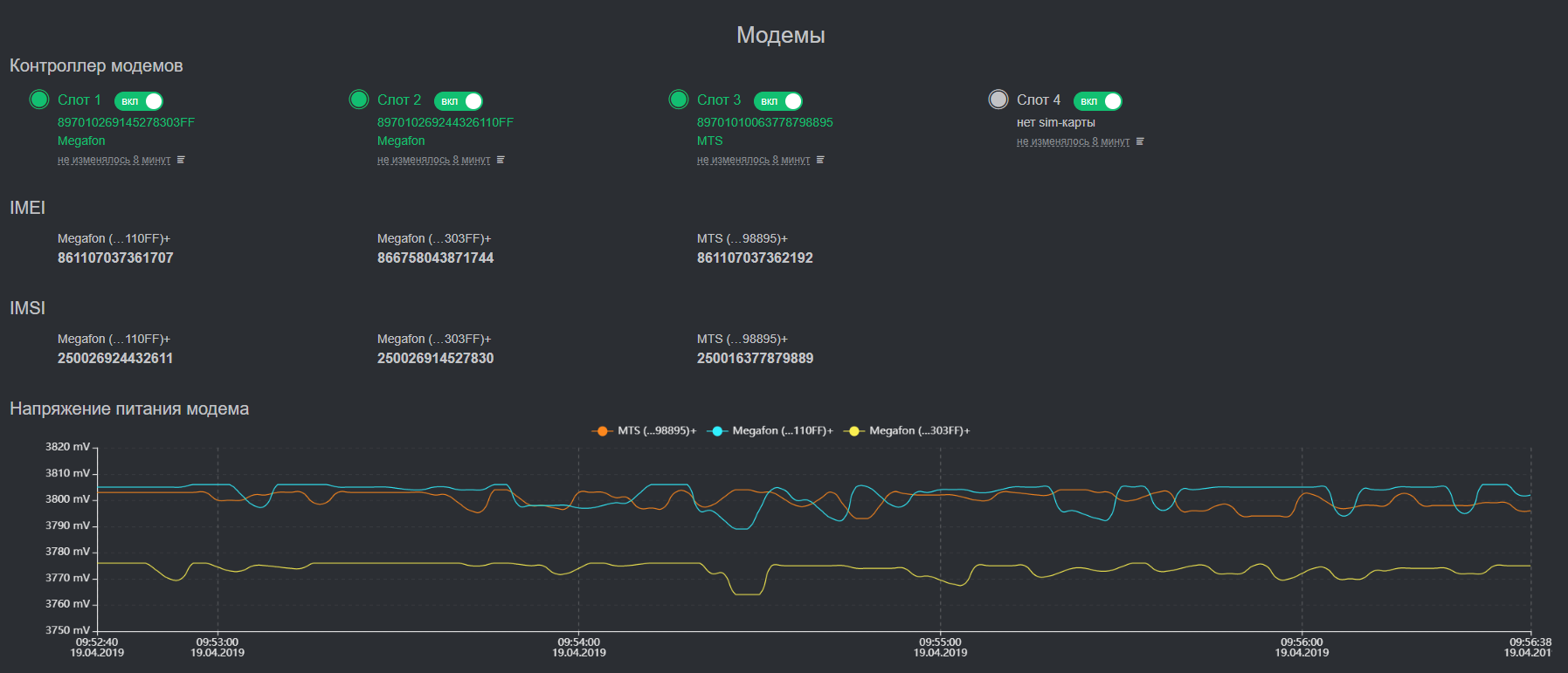

Bandwidth aggregation across three channels for different network topologies

Visualization of some key metrics

Architecture

Our main programming language, both on the device and in the data center, is Golang. It has greatly simplified our life with its concurrency model and the ability to build each service as a single statically linked executable. As a result, we save significantly on resources, on the methods and traffic needed to deploy services to end devices, and on development and debugging time.

The system is implemented according to the classical modular principle and contains several subsystems:

- Registration of metrics.

Each metric is served by its own thread, and the threads are synchronized through channels. We managed to achieve a synchronization accuracy of up to 10 nanoseconds.

- Metrics storage.

We chose between writing our own time series storage and using something already available. The database is needed for retrospective data that will later be visualized. It does not contain channel delays sampled every 0.5 milliseconds or error indications from the transport network, but it does contain the speed on each interface every 500 milliseconds. In addition to the strict requirements for cross-platform support and low resource consumption, it is extremely important for us to be able to process the data in the same place where it is stored. This saves enormous computing resources.

We have been using the Tarantool DBMS in this project since 2016 and so far see no replacement for it on the horizon. It is flexible, has optimal resource consumption, and comes with more than adequate technical support. Tarantool also has a GIS module. It is, of course, not as powerful as PostGIS, but it is enough for our task of storing some location-related metrics (relevant for transport).

- Visualization of metrics.

This part is relatively simple. We take data from the storage and show it either in real time or retrospectively.

- Data synchronization with the central monitoring system.

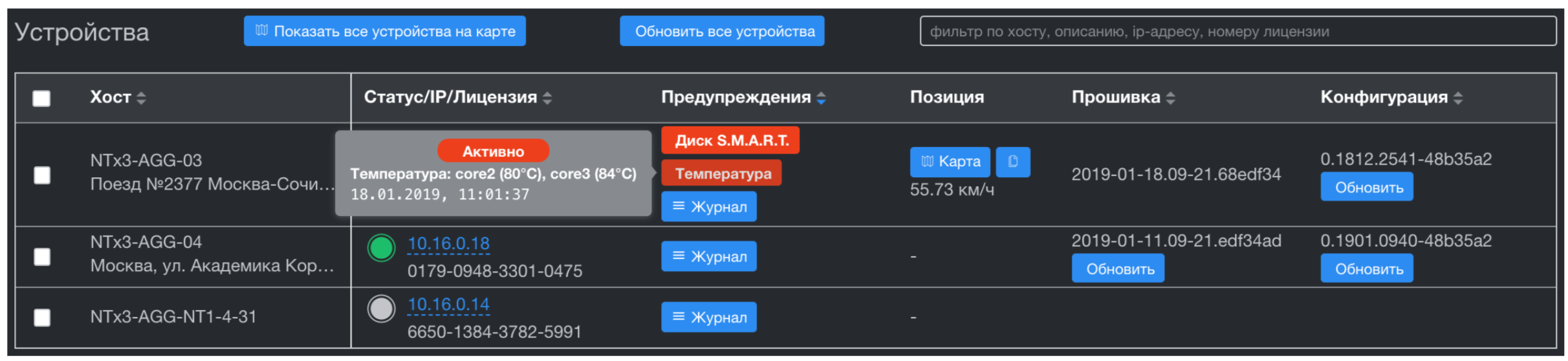

The central monitoring system receives data from all devices, stores it for a given retention period, and sends it to the customer's monitoring system through the API. Unlike classical monitoring systems, in which the "head" goes around and collects the data, we use the reverse scheme: the devices send the data themselves when there is a connection. This is a very important point, because it lets us receive data from a device for the periods when it was unreachable, and it does not load channels and resources while the device is unreachable.

As the central monitoring system we use an Influx monitoring server. Unlike its analogs, it can import retrospective data (that is, data with a timestamp different from the time the metric was received). The collected metrics are visualized by a modified Grafana. This standard stack was also chosen because it has ready-made integration APIs for virtually any customer monitoring system.

- Data synchronization with the central device management system.

The device management system implements Zero Touch Provisioning (firmware upgrades, configuration, and so on) and, unlike the monitoring system, receives only problem reports from the devices. These are triggers from the on-board hardware watchdog services and all the life support system metrics: CPU and SSD temperature, CPU load, free space, and SMART disk health. Its storage subsystem is also built on Tarantool. This gives us considerable speed when aggregating time series across thousands of devices, and it also completely solves the problem of synchronizing data with those devices: Tarantool has an excellent queue system with guaranteed delivery. We got this important feature out of the box, which is great!
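The metric registration subsystem from the list above (one thread per metric, synchronized through channels) can be sketched in Go roughly as follows. This is a minimal illustration, assuming the threads are goroutines and the channels are Go channels; the names `Sample`, `collector` and `startCollectors` are hypothetical. The sketch only shows how samples from one polling round can share a single tick timestamp. It does not demonstrate, and makes no claim about, the 10-nanosecond accuracy mentioned in the article.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// Sample is one reading of one metric, stamped with the tick that
// triggered it.
type Sample struct {
	Metric string
	Tick   time.Time
	Value  float64
}

// collector is a hypothetical metric source: it reads a value on demand.
type collector struct {
	name string
	read func() float64
}

// startCollectors launches one goroutine per metric. Each goroutine waits
// on its own trigger channel, reads its metric, and reports a Sample
// tagged with the shared tick time. trigger starts one polling round;
// stop shuts the goroutines down and waits for them to exit.
func startCollectors(cs []collector, out chan<- Sample) (trigger func(time.Time), stop func()) {
	triggers := make([]chan time.Time, len(cs))
	var wg sync.WaitGroup
	for i, c := range cs {
		triggers[i] = make(chan time.Time)
		wg.Add(1)
		go func(c collector, in <-chan time.Time) {
			defer wg.Done()
			for tick := range in {
				out <- Sample{Metric: c.name, Tick: tick, Value: c.read()}
			}
		}(c, triggers[i])
	}
	trigger = func(t time.Time) {
		for _, ch := range triggers {
			ch <- t
		}
	}
	stop = func() {
		for _, ch := range triggers {
			close(ch)
		}
		wg.Wait()
	}
	return trigger, stop
}

func main() {
	// Stand-in metric sources returning fixed values.
	cs := []collector{
		{"latency_ms", func() float64 { return 30 }},
		{"loss_pct", func() float64 { return 0.1 }},
	}
	out := make(chan Sample, len(cs))
	trigger, stop := startCollectors(cs, out)
	defer stop()

	ticker := time.NewTicker(500 * time.Microsecond)
	defer ticker.Stop()
	for round := 0; round < 3; round++ {
		trigger(<-ticker.C)
		for range cs {
			s := <-out
			fmt.Printf("%s %s=%v\n", s.Tick.Format("15:04:05.000000"), s.Metric, s.Value)
		}
	}
}
```

The caller must read one sample per collector after each `trigger` call, as `main` does, before starting the next round.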

Network management system
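The point made earlier about importing retrospective data can be illustrated with InfluxDB line protocol, where each line may carry its own timestamp. The sketch below is only an illustration: the `Point` type, the `device` tag and the metric names are hypothetical, and names are assumed to contain no spaces, commas or equals signs, so no escaping is performed.

```go
package main

import (
	"fmt"
	"strconv"
	"time"
)

// Point is a hypothetical queued measurement. Taken is the moment it was
// measured on the device, which may be long before it is delivered.
type Point struct {
	Measurement string
	Device      string
	Field       string
	Value       float64
	Taken       time.Time
}

// lineProtocol renders p as one line of InfluxDB line protocol:
//
//	measurement,tag=value field=value timestamp
//
// with the timestamp in nanoseconds since the Unix epoch.
// Assumption: names need no escaping.
func lineProtocol(p Point) string {
	value := strconv.FormatFloat(p.Value, 'f', -1, 64)
	return fmt.Sprintf("%s,device=%s %s=%s %d",
		p.Measurement, p.Device, p.Field, value, p.Taken.UnixNano())
}

func main() {
	p := Point{
		Measurement: "iface_speed",
		Device:      "dev42",
		Field:       "mbps",
		Value:       12.5,
		Taken:       time.Unix(1557000000, 0), // measured while offline
	}
	fmt.Println(lineProtocol(p))
}
```

Because the timestamp travels with the point, a device that was offline can later send everything it queued and the series is still stored at the time of measurement rather than the time of delivery.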

What's next

So far our weakest link is the central monitoring system. It is 99.9% built on the standard stack and has several drawbacks:

- InfluxDB loses data on power loss. As a rule, the customer promptly collects everything that comes from the devices, and the database itself holds no data older than 5 minutes, but in the future this may become a pain.

- Grafana has a number of problems with data aggregation and with keeping the display in sync. The most common one is when the database holds a time series with a 2-second interval starting, say, at 00:00:00, and Grafana starts showing the aggregated data from +1 second. As a result, the user sees a dancing graph.

- An excessive amount of code for API integration with third-party monitoring systems. It can be made much more compact and, of course, rewritten in Go :)
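The aggregation offset problem from the list above can be illustrated with a small Go sketch of how window alignment changes which points are grouped together. This is not Grafana's code and the article does not describe its internal mechanism. The function `bucketStart` is hypothetical, timestamps are plain seconds, and the 4-second window width is an assumption chosen so that each window spans more than one stored point.

```go
package main

import "fmt"

// bucketStart returns the start (in seconds) of the aggregation window
// that contains ts, for windows of the given width whose boundaries are
// shifted by offset seconds from multiples of width.
func bucketStart(ts, width, offset int64) int64 {
	m := (ts - offset) % width
	if m < 0 {
		m += width // Go's % keeps the sign of the dividend
	}
	return ts - m
}

func main() {
	// Points stored every 2 seconds starting at 00:00:00 (second 0 here).
	points := []int64{0, 2, 4, 6}
	for _, offset := range []int64{0, 1} {
		fmt.Printf("offset +%ds:", offset)
		for _, ts := range points {
			fmt.Printf(" %d->%d", ts, bucketStart(ts, 4, offset))
		}
		fmt.Println()
	}
}
```

With no offset, points 0 and 2 share a window and so do 4 and 6. With a +1 second offset, point 0 is alone and points 2 and 4 are grouped instead, so the aggregated values differ for the same stored data.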

I assume you all know perfectly well what Grafana looks like and are aware of its problems without my help, so I will not overload the post with pictures.

Conclusion

I deliberately did not go into technical details and described only the basic design of this system. First, a full technical description would require another article. Second, not everyone would be interested. Write in the comments which technical details you would like to know about.

If anyone has questions beyond the scope of this article, you can write to me at a.rodin@qedr.com


Source: https://habr.com/ru/post/451778/
