A brief review of the article "DeViSE: A Deep Visual-Semantic Embedding Model"

Introduction

Modern visual recognition systems can only classify images into a relatively small number of classes, and they treat those classes as semantically unrelated. Bringing in textual information, even text that has nothing to do with images, enriches the model and partly solves the following problems:

- when the recognition model makes a mistake, the wrong answer is often semantically far from the correct class;

- there is no way to predict an object belonging to a new class that was not represented in the training dataset.

The proposed approach maps images into a rich semantic space in which the labels of similar classes lie closer to each other than the labels of dissimilar classes. As a result, the model makes fewer predictions that are semantically far from the true class. Moreover, because it takes both visual and semantic proximity into account, the model can correctly classify images belonging to a class that was not represented in the training dataset.

Algorithm. Architecture

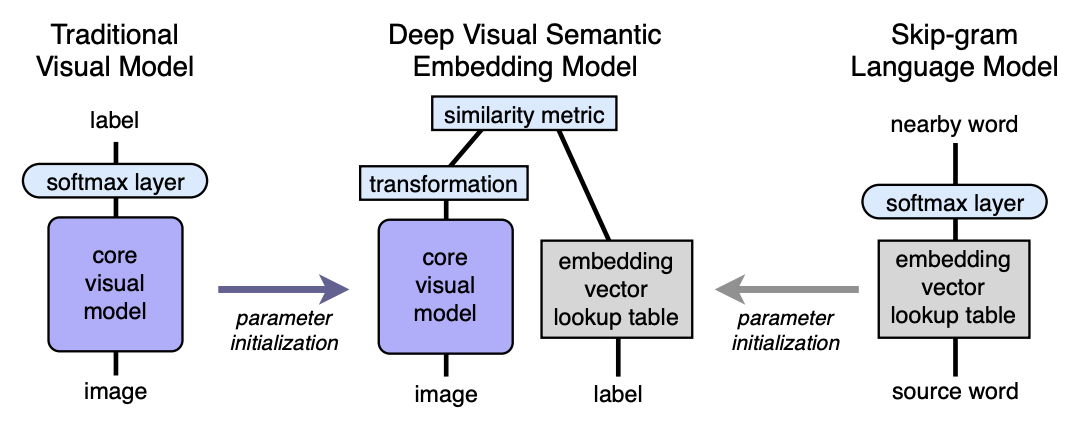

- Pre-train a language model that produces good, semantically meaningful embeddings. Let n be the dimension of the embedding space; below, n is taken to be 500 or 1000.

- Pre-train a visual model that classifies objects well into 1000 classes.

- Remove the final softmax layer from the pre-trained visual model and add a fully connected layer that maps the 4096-dimensional features to n units. For each image, train the resulting model to predict the embedding of that image's label.

In terms of mappings: let LM be the language model; VM the visual model with the softmax removed and the fully connected layer added; I an image; L the label of that image; and LM(L) the embedding of that label in the semantic space. Then in the third step we train VM so that:

VM(I) ≈ LM(L)
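A minimal sketch of the third step, under simplifying assumptions: the hypothetical `ProjectionHead` stands in for the added fully connected layer, random numbers stand in for the features of the truncated visual network, and the sizes are shrunk from 4096 and n for readability. Pure Python replaces a deep-learning framework and no training loop is shown; training would push the similarity between VM(I) and LM(L) toward 1.

```python
import math
import random

def l2_normalize(vec):
    """Scale a vector to unit length; DeViSE compares normalized vectors."""
    norm = math.sqrt(sum(x * x for x in vec))
    return [x / norm for x in vec] if norm > 0 else list(vec)

def similarity(a, b):
    """Dot product; for unit vectors this equals cosine similarity."""
    return sum(x * y for x, y in zip(a, b))

class ProjectionHead:
    """Hypothetical stand-in for the added fully connected layer:
    maps d_in visual features (4096 in the article) to n dimensions."""

    def __init__(self, d_in, n, seed=0):
        rng = random.Random(seed)
        scale = 1.0 / math.sqrt(d_in)
        self.weights = [[rng.uniform(-scale, scale) for _ in range(d_in)]
                        for _ in range(n)]
        self.bias = [0.0] * n

    def __call__(self, features):
        # Linear projection followed by normalization: this output plays VM(I).
        out = [sum(w * x for w, x in zip(row, features)) + b
               for row, b in zip(self.weights, self.bias)]
        return l2_normalize(out)

# Toy sizes (16 -> 8) instead of 4096 -> 500/1000.
rng = random.Random(1)
features = [rng.gauss(0.0, 1.0) for _ in range(16)]  # stand-in visual features
label_embedding = l2_normalize([rng.gauss(0.0, 1.0) for _ in range(8)])  # LM(L)
head = ProjectionHead(d_in=16, n=8)
print(similarity(head(features), label_embedding))  # training pushes this toward 1
```

Normalizing the output makes the dot product a cosine similarity, so only the direction of VM(I) matters when it is compared with label embeddings.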

Architecture

Language model

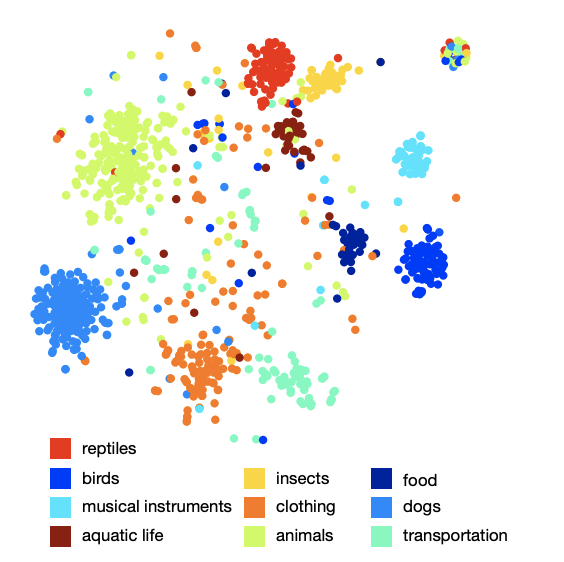

The language model was a skip-gram model trained on a corpus of 5.4 billion words taken from wikipedia.org. It used a hierarchical softmax layer to predict adjacent terms, a context window of 20 words, and a single pass through the corpus. Experiments showed that an embedding size of 500 to 1000 works best.
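A skip-gram model learns embeddings by predicting the words around each word. The sketch below shows only how (center, context) training pairs are extracted from text; the hierarchical softmax that scores these pairs is not shown. It assumes `window` counts words on each side of the center word, and it uses a fixed window, whereas the word2vec implementation also shrinks the window at random for each center word.

```python
def skipgram_pairs(tokens, window):
    """Generate (center, context) pairs for skip-gram training.

    window: maximum distance between the center word and a context word
    on either side (an assumption about how the window is counted).
    """
    pairs = []
    for i, center in enumerate(tokens):
        lo = max(0, i - window)
        hi = min(len(tokens), i + window + 1)
        for j in range(lo, hi):
            if j != i:  # a word is not its own context
                pairs.append((center, tokens[j]))
    return pairs

tokens = "the tiger shark is a large shark".split()
print(skipgram_pairs(tokens, 1)[:4])
# [('the', 'tiger'), ('tiger', 'the'), ('tiger', 'shark'), ('shark', 'tiger')]
```

Words that keep appearing in similar contexts (such as different shark species) end up with nearby embeddings, which is the property DeViSE relies on.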

The visualization of where the classes lie in this space shows that the model has learned a rich, high-quality semantic structure. For example, for one species of shark, its 9 nearest neighbors in the resulting semantic space are the 9 other shark species.

Visual model

The architecture that won the ILSVRC 2012 competition was used as the visual model. Its softmax layer was removed and a fully connected layer was added to produce embeddings of the desired size.

Loss function

The choice of loss function turned out to be important. A combination of cosine similarity and hinge rank loss was used. The loss rewarded a large scalar product between the output vector of the visual network and the embedding of the correct label, and penalized a large scalar product between the output of the visual network and the embeddings of randomly chosen other labels. The number of random false labels was not fixed: false labels were sampled until one was found whose scalar product with the visual output exceeded the scalar product with the correct label minus a fixed margin (the constant 0.1). All vectors were normalized beforehand.
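Written out, each false label j contributes max(0, margin - LM(L)·VM(I) + LM(j)·VM(I)) to the loss. A minimal sketch in pure Python, assuming label embeddings are stored in a dict and that the sum is truncated at the first false label that violates the margin; the function and argument names are hypothetical, and a real implementation would compute gradients with a deep-learning framework.

```python
import random

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def hinge_rank_loss(v, label_embs, true_label, margin=0.1, rng=None):
    """Sampled hinge rank loss for a single image.

    v: unit-norm output of the visual network, VM(I)
    label_embs: dict mapping label -> unit-norm embedding LM(label)
    true_label: the image's correct label L
    """
    rng = rng or random.Random()
    true_score = dot(label_embs[true_label], v)
    false_labels = [label for label in label_embs if label != true_label]
    rng.shuffle(false_labels)  # visit false labels in random order
    loss = 0.0
    for label in false_labels:
        term = max(0.0, margin - true_score + dot(label_embs[label], v))
        loss += term
        if term > 0.0:
            break  # first margin-violating false label: stop sampling
    return loss

embs = {"cat": [1.0, 0.0], "dog": [0.0, 1.0], "tiger": [0.6, 0.8]}
print(hinge_rank_loss([1.0, 0.0], embs, "cat"))  # 0.0: "cat" beats every false label by more than the margin
```

Since non-violating terms are exactly zero, the truncated sum equals the first violating term (or 0 if no false label violates the margin), which keeps the cost per image low when the model is already ranking well.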

Training process

At first, only the new fully connected layer was trained while the weights of the rest of the network stayed frozen; SGD was used at this stage. Then the entire visual network was unfrozen and trained with the Adagrad optimizer, so that gradients were scaled appropriately at different layers during backpropagation.
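The reason for switching to Adagrad can be seen from its update rule: each parameter's step is divided by the square root of the sum of its past squared gradients. A minimal sketch on plain Python lists (not a framework optimizer; names and learning rate are illustrative) comparing one SGD step with one Adagrad step for two parameters whose gradients differ by six orders of magnitude, as can happen between layers.

```python
import math

def sgd_step(params, grads, lr=0.1):
    """Plain SGD: every parameter moves by lr times its raw gradient."""
    return [p - lr * g for p, g in zip(params, grads)]

class Adagrad:
    """Per-parameter step = lr * g / sqrt(sum of past g**2)."""

    def __init__(self, size, lr=0.1, eps=1e-8):
        self.lr = lr
        self.eps = eps
        self.accum = [0.0] * size  # running sum of squared gradients

    def step(self, params, grads):
        updated = []
        for i, (p, g) in enumerate(zip(params, grads)):
            self.accum[i] += g * g
            updated.append(p - self.lr * g / (math.sqrt(self.accum[i]) + self.eps))
        return updated

# Phase 1 (frozen backbone): apply sgd_step to the new layer's parameters only.
# Phase 2 (unfrozen): apply Adagrad.step to all parameters.
grads = [1000.0, 0.001]
print(sgd_step([0.0, 0.0], grads))              # steps differ by a factor of about 10**6
print(Adagrad(size=2).step([0.0, 0.0], grads))  # both steps are about -0.1
```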

Prediction

At prediction time, the visual network maps the image to a vector in the semantic space. We then find the nearest neighbors of that vector, i.e. candidate labels, and map them back to ImageNet synsets for scoring. This last mapping is not trivial, because an ImageNet label is a set of synonyms rather than a single word. Readers interested in the details should consult the original article (Appendix 2).
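A minimal sketch of the prediction step under a deliberately simple assumption: each synset is ranked by its closest synonym, which is not the exact procedure from Appendix 2 of the paper. The dicts `label_embs` and `label_to_synset`, and the synset ids, are hypothetical toy data.

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def predict_synsets(image_vec, label_embs, label_to_synset, k):
    """Return the k best synsets for an image embedding VM(I).

    label_embs: dict mapping label -> embedding in the semantic space
    label_to_synset: dict mapping label -> synset id (synonyms share one id)
    """
    # Nearest neighbors of VM(I) among all label embeddings.
    ranked = sorted(label_embs,
                    key=lambda label: cosine(image_vec, label_embs[label]),
                    reverse=True)
    synsets = []
    for label in ranked:
        synset = label_to_synset[label]
        if synset not in synsets:  # later synonyms of a chosen synset are skipped
            synsets.append(synset)
        if len(synsets) == k:
            break
    return synsets

embs = {"car": [1.0, 0.0], "automobile": [0.9, 0.1],
        "truck": [0.7, 0.7], "cat": [0.0, 1.0]}
synset_of = {"car": "syn_car", "automobile": "syn_car",
             "truck": "syn_truck", "cat": "syn_cat"}
print(predict_synsets([1.0, 0.0], embs, synset_of, k=2))  # ['syn_car', 'syn_truck']
```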

Results

DeViSE was compared with two models:

- Softmax baseline: a state-of-the-art vision model (at the time of publication).

- Random embedding model: a version of DeViSE in which the label embeddings are not learned by the language model but initialized randomly.

Quality was assessed with the “flat” hit@k metric and the hierarchical precision@k metric. Flat hit@k is the percentage of test images for which the correct label is among the top k predictions. Hierarchical precision@k measures how semantically reasonable the predictions are and is based on the ImageNet label hierarchy: for each true label and a fixed k, a set of semantically correct labels (the ground truth list) is built, and the score of a prediction (the k nearest neighbors) is the fraction of predicted labels that appear in the ground truth list.
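Both metrics can be sketched in a few lines. This assumes each image's ranked predictions are already available and, for hierarchical precision@k, that the ground truth lists have already been built from the ImageNet hierarchy (their construction is not shown). The labels below are toy data.

```python
def flat_hit_at_k(predictions, true_labels, k):
    """Fraction of images whose true label is among their top-k predictions."""
    hits = sum(1 for preds, t in zip(predictions, true_labels) if t in preds[:k])
    return hits / len(true_labels)

def hierarchical_precision_at_k(predictions, ground_truth_lists, k):
    """Mean over images of the share of top-k predictions found in that
    image's ground truth list of semantically correct labels."""
    scores = []
    for preds, correct in zip(predictions, ground_truth_lists):
        top = preds[:k]
        scores.append(sum(1 for p in top if p in correct) / k)
    return sum(scores) / len(scores)

preds = [["cat", "dog", "fox"], ["car", "bus", "van"]]
truth = ["dog", "truck"]
gt_lists = [{"cat", "dog", "lion"}, {"bus", "truck"}]
print(flat_hit_at_k(preds, truth, 2))                   # 0.5
print(hierarchical_precision_at_k(preds, gt_lists, 2))  # 0.75
```

The second image shows the difference: flat hit@2 gives it no credit, while hierarchical precision@2 rewards "bus" as a semantically close answer.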

The authors expected the softmax model to perform best on the flat metric, because it minimizes cross-entropy loss, which is very well suited to flat hit@k. They were surprised at how close DeViSE comes to the softmax model: it reaches parity at large k and even outperforms it at k = 20.

On the hierarchical metric, DeViSE shows its full strength, outperforming the softmax baseline by 3% at k = 5 and by 7% at k = 20.

Zero-shot learning

A particular advantage of DeViSE is its ability to make reasonable predictions for images whose labels the network never saw during training. For example, suppose the network saw images labeled tiger shark, bull shark, and blue shark during training but never encountered the label shark. Since the language model has a representation of shark in the semantic space, and it lies close to the embeddings of the various shark species, the model is likely to produce a reasonable prediction. This is what is meant by the model's ability to generalize.
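A minimal sketch of zero-shot prediction, assuming the candidate set is restricted to labels that never appeared in training and that the image has already been mapped to VM(I). The 3-dimensional embeddings are made-up toy values: because "shark" lies close to the shark species seen in training, an image mapped near those species is labeled "shark".

```python
import math

def cosine(a, b):
    """Cosine similarity between two vectors."""
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(x * x for x in b))
    return sum(x * y for x, y in zip(a, b)) / (na * nb)

def zero_shot_predict(image_vec, label_embs, training_labels, k):
    """Rank only labels never seen in training by similarity to VM(I)."""
    candidates = [label for label in label_embs if label not in training_labels]
    candidates.sort(key=lambda label: cosine(image_vec, label_embs[label]),
                    reverse=True)
    return candidates[:k]

# Toy embeddings, made up for illustration.
label_embs = {
    "tiger shark": [0.90, 0.10, 0.00],
    "bull shark": [0.85, 0.15, 0.00],
    "blue shark": [0.88, 0.12, 0.05],
    "shark": [0.90, 0.10, 0.05],
    "guitar": [0.00, 0.00, 1.00],
    "piano": [0.00, 0.20, 0.90],
}
seen = {"tiger shark", "bull shark", "blue shark"}
photo = [0.87, 0.13, 0.02]  # hypothetical VM(I) for a photo of a shark
print(zero_shot_predict(photo, label_embs, seen, k=1))  # ['shark']
```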

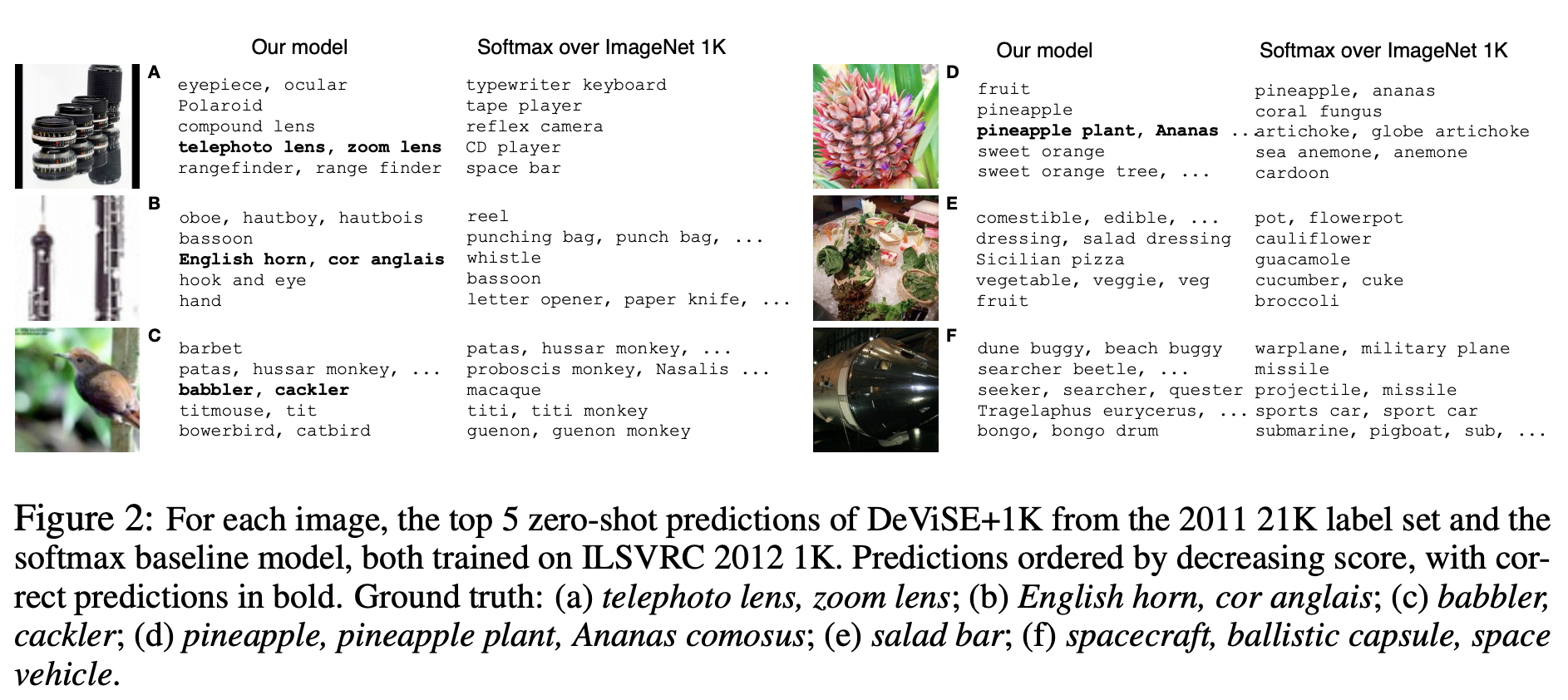

Here are some examples of zero-shot predictions:

Note that even when DeViSE is wrong, its guesses are closer to the correct answer than the wrong guesses of the softmax model.

In summary, the presented model loses only slightly to the softmax baseline on flat metrics but wins significantly on hierarchical precision@k. It is also able to generalize, producing reasonable predictions for images whose labels the network has never seen (zero-shot learning).

The approach is easy to implement, since it builds on two pre-trained models: a language model and a visual model.


Source: https://habr.com/ru/post/451738/
