Fundamentals of modern artificial intelligence: how does it work, and will it destroy our society this year?

Today's AI is technically “weak”, but it is complex and can significantly affect society.

You do not need to be Keir Dullea to know how frightening artificial intelligence can become [American actor who played astronaut Dave Bowman in the 1968 film 2001: A Space Odyssey / approx. transl.]

AI, or artificial intelligence, is now one of the hottest fields around. “Unsolvable” problems are being solved, billions of dollars are being invested, and Microsoft even hired Common to tell us, with poetic calm, what a wonderful thing AI is. Really.

As with any new technology, it can be hard to see through all the hype. I have spent years doing research on drones (UAVs) and AI, and even I find it difficult to keep up. In recent years I have spent a lot of time looking for answers to even simple questions like:

- What do people mean when they say "AI"?

- What is the difference between AI, machine learning and deep learning?

- What is so great about deep learning?

- Which previously hard problems are now easy to solve, and what is still difficult?

I suspect I am not the only one asking these questions. So if you are wondering, at the simplest level, what all the excitement about AI is about, it is time to look behind the scenes. If you are an AI expert who reads the proceedings of the Conference on Neural Information Processing Systems (NIPS) for fun, this article will contain nothing new for you, but we look forward to your clarifications and corrections in the comments.


What is AI?

There is an old joke in computer science: what is the difference between AI and automation? Automation is what we can do with computers, and AI is what we wish we could do. As soon as we figure out how to do something, it moves out of AI and into automation.

The joke still holds today, because AI is not clearly defined. “Artificial intelligence” is simply not a technical term. If you look it up on Wikipedia, it says that AI is “intelligence demonstrated by machines, in contrast to the natural intelligence displayed by humans and other animals.” It is hard to be vaguer than that.

Broadly, there are two kinds of AI: strong and weak. Strong AI is what most people imagine when they hear “AI”: some kind of god-like, all-knowing intelligence like Skynet or HAL 9000, capable of reasoning like a human while surpassing human abilities.

Weak AIs are highly specialized algorithms designed to answer specific, useful questions in narrowly defined domains. A very good chess program, for example, falls into this category. So does software that prices insurance very precisely. Within their domain, such AIs achieve impressive results, but overall they are very limited.

Hollywood blockbusters aside, we have not even come close to strong AI. All AI today is weak, and most researchers in the field agree that the techniques we have invented for building excellent weak AI will probably not bring us any closer to strong AI.

So today, “AI” is more of a marketing term than a technical one. Companies advertise their “AI” rather than their “automation” because they want to evoke Hollywood AI in the public mind. That is not entirely bad, though. Read charitably, companies simply mean that although we are still very far from strong AI, today's weak AI is much more capable than it was a few years ago.

And marketing aside, that is true. In certain areas the capabilities of machines have grown dramatically, mainly thanks to two more buzzwords: machine learning and deep learning.

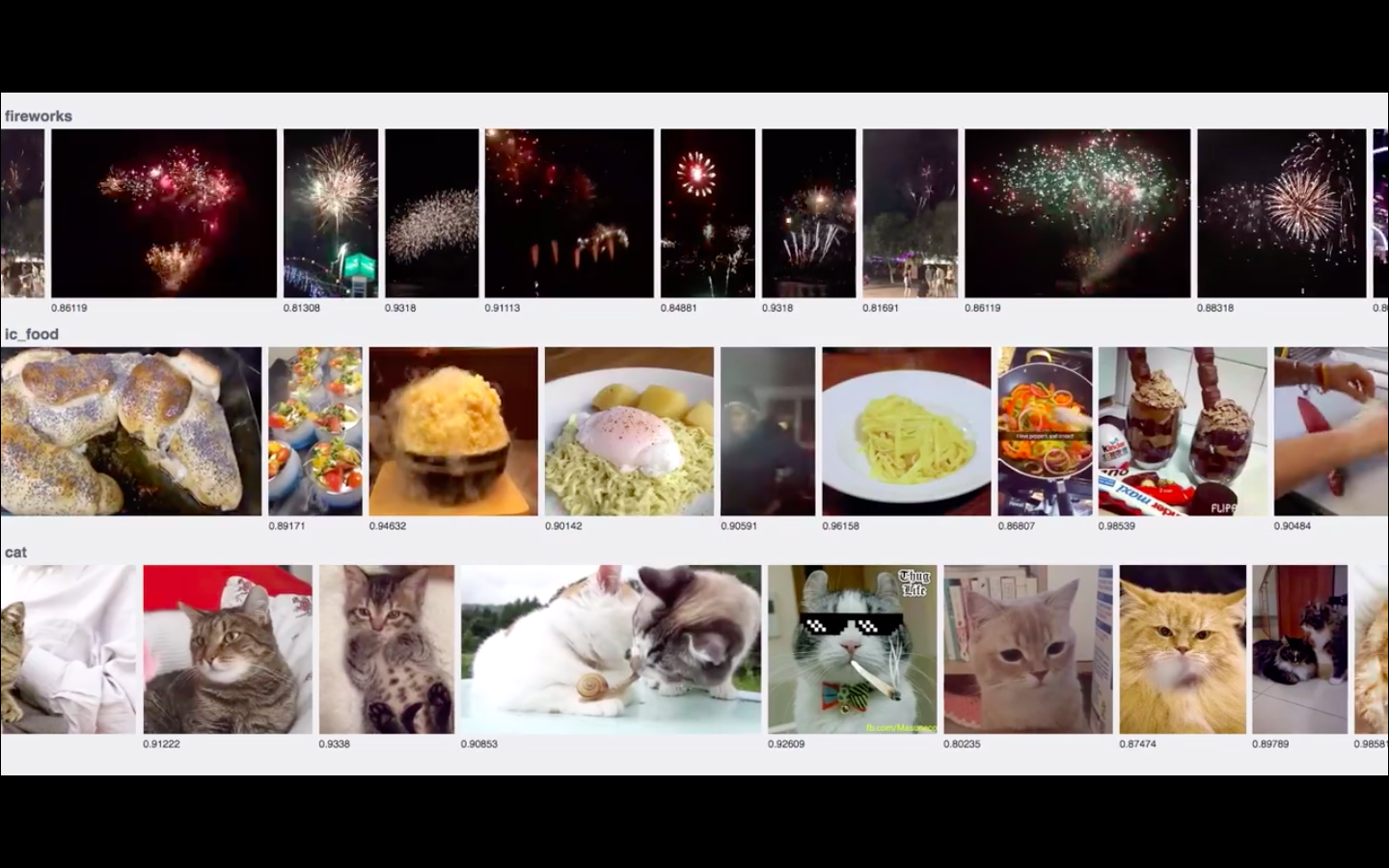

A still from a short video by Facebook engineers showing AI recognizing cats in real time (a task also known as the holy grail of the Internet)

Machine learning

Machine learning (ML) is a particular way of creating machine intelligence. Suppose you want to launch a rocket and predict where it will go. This is not that hard: gravity is well understood, so you can write down the equations and calculate the trajectory from a few variables, such as the rocket's speed and starting position.
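For contrast with the learning approach described next, here is a minimal sketch of this rule-based approach in Python. It assumes things the text does not spell out: flat ground, no air resistance and constant gravity, so the textbook range formula applies. The function name `landing_distance` is purely illustrative.

```python
import math

G = 9.81  # gravitational acceleration near Earth's surface, m/s^2


def landing_distance(speed: float, angle_deg: float, g: float = G) -> float:
    """Horizontal distance travelled by a projectile launched from ground level.

    Hand-written physics rule, no learning involved. Assumes flat ground,
    no air resistance and constant gravity: R = v^2 * sin(2 * theta) / g.
    """
    theta = math.radians(angle_deg)
    return speed ** 2 * math.sin(2 * theta) / g


if __name__ == "__main__":
    print(f"{landing_distance(100.0, 45.0):.1f} m")
```

Every rule here was written by a person who already understood the physics; nothing is learned from data.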

However, this approach becomes unwieldy in areas whose rules are less well known or less clear-cut. Suppose you want a computer to tell you whether there are cats in a set of images. How would you write down rules describing what a cat looks like from every possible angle, for every possible combination of whiskers and ears?

Today the ML approach is well known: instead of trying to write down all the rules, you build a system that derives its own set of internal rules after studying a huge number of examples. Instead of describing cats, you simply show your AI lots of photos of cats and let it work out for itself what is a cat and what is not.

And today this approach is ideal. A data-driven, self-learning system can be improved simply by adding more data. And if there is one thing our species does very well, it is generating, storing and managing data. Want your AI to recognize cats better? The Internet is generating millions of examples this very minute.

The ever-growing flood of data is one reason for the recent explosive growth of ML algorithms. The other reasons have to do with how that data is used.

Besides the data itself, ML has to answer two related questions:

- How do I remember what I have learned? How do I store and represent on a computer the relationships and rules I derived from the data?

- How do I learn? How do I change the stored representation as new examples arrive, so that it improves?

In other words, what exactly is being trained on the basis of all this data?

In ML, the stored computational representation of what has been learned is called a model. The type of model matters a great deal: it determines how your AI learns, what data it can learn from, and what questions you can ask it.

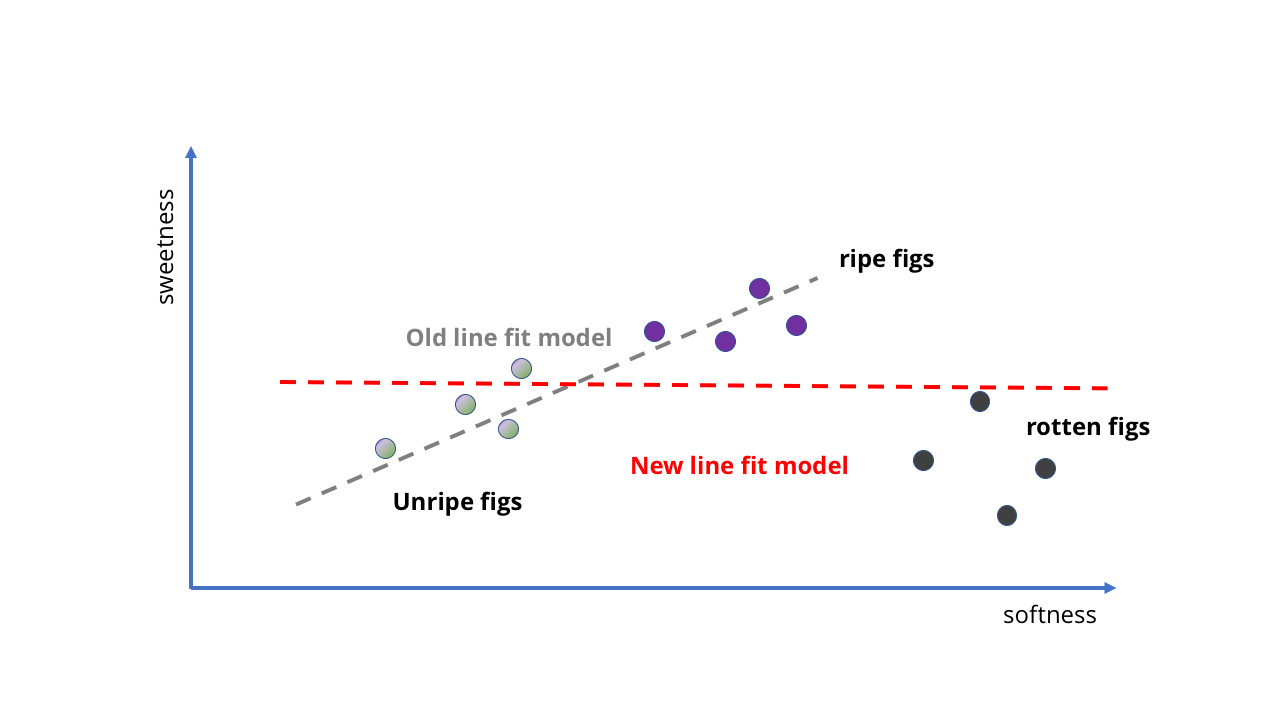

Let's look at a very simple example. Suppose we are buying figs at the grocery store and want to build an ML-based AI that tells us whether a fig is ripe. This should be easy, because with figs, the softer they are, the sweeter they are.

We can take several ripe and unripe figs, measure how sweet they are, plot them on a chart and fit a straight line to the points. This line will be our model.
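A minimal Python sketch of this step follows. The softness and sweetness numbers are invented for illustration, not real fig data. It fits the line with ordinary least squares, the standard one-step formula for a straight-line fit; `fit_line` and `predict_sweetness` are hypothetical helper names.

```python
from typing import List, Tuple


def fit_line(xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Ordinary least-squares fit of y = slope * x + intercept, in one step."""
    n = len(xs)
    if n < 2:
        raise ValueError("need at least two points")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("x values must not all be equal")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Invented measurements: softness (0 = hard, 10 = very soft) vs. sweetness (0-10).
softness = [1.0, 2.0, 4.0, 5.0, 7.0, 8.0]
sweetness = [1.5, 2.4, 4.1, 5.2, 6.8, 8.1]

slope, intercept = fit_line(softness, sweetness)


def predict_sweetness(s: float) -> float:
    """Our whole 'model': two numbers learned from the data."""
    return slope * s + intercept


print(f"sweetness ~ {slope:.2f} * softness + {intercept:.2f}")
```

The fitted slope comes out positive: the “softer means sweeter” rule, recovered from data rather than written by hand.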

A rudimentary AI in the form of “the softer, the sweeter”

With the addition of new data, the task is complicated

Look at that! The line implicitly captures the idea that “the softer, the sweeter”, and we did not have to write anything down. Our rudimentary AI knows nothing about sugar content or how fruit ripens, but it can predict a fig's sweetness by squeezing it.

How do we train the model to make it better? We can collect more samples and fit a new line to get more accurate predictions (as in the second picture above). But problems quickly become apparent. So far we have trained our fig AI on high-quality fruit from the store; what if we take data from an orchard? Suddenly we have not only ripe fruit but rotten fruit too. It is very soft, but definitely not fit to eat.

What should we do? Well, since this is an ML model, we can simply feed it more data, right?

As the first picture below shows, this gives completely meaningless results. A straight line simply cannot describe what happens when the fruit becomes overripe. Our model no longer fits the structure of the data.

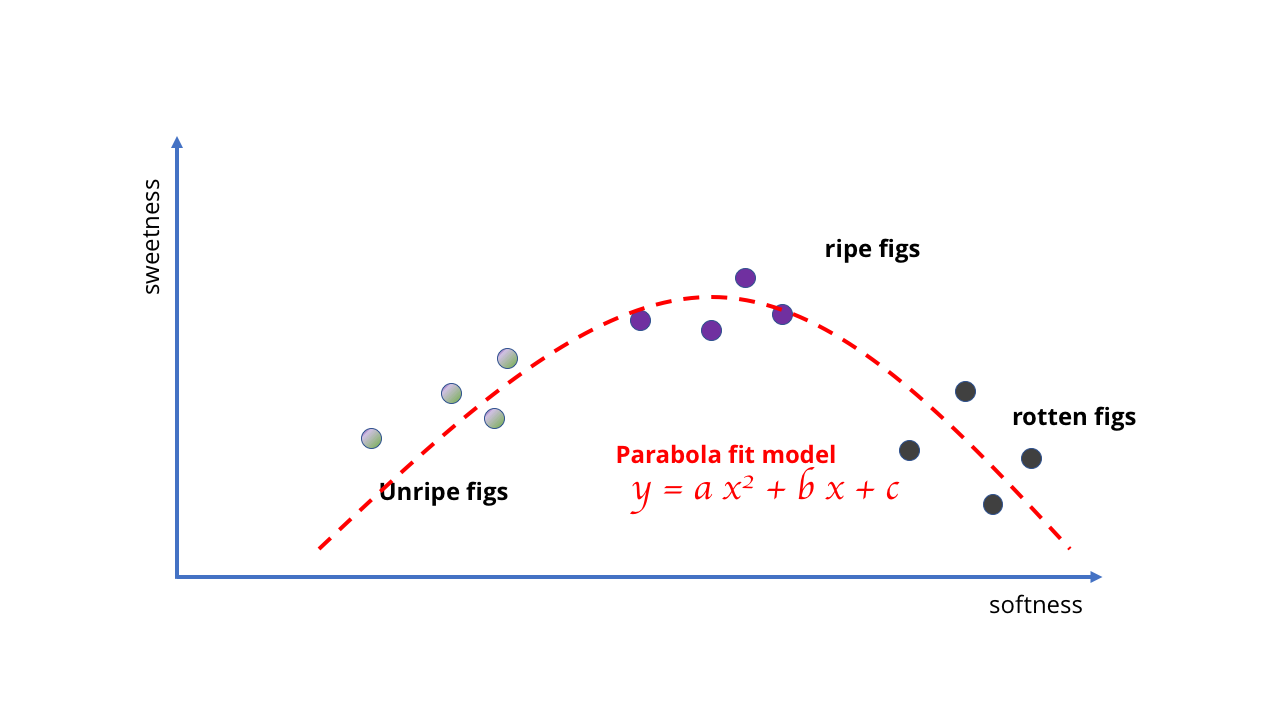

Instead, we have to change the model and use a better, more complex one: perhaps a parabola or something similar. This makes training harder, because fitting a curve requires more complex math than fitting a straight line.
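As a hedged sketch of why the math gets harder, here is a least-squares parabola fit in plain Python. Instead of the straight line's two closed-form numbers, we now solve a small 3x3 linear system (the “normal equations”) by Gaussian elimination. The data are invented to mimic figs that get sweeter as they soften and then rot; `fit_parabola` is a hypothetical name.

```python
from typing import List, Tuple


def fit_parabola(xs: List[float], ys: List[float]) -> Tuple[float, float, float]:
    """Least-squares fit of y = a + b*x + c*x^2 via the 3x3 normal equations."""
    # Power sums: S[k] = sum of x^k, T[k] = sum of y * x^k
    S = [sum(x ** k for x in xs) for k in range(5)]
    T = [sum(y * x ** k for x, y in zip(xs, ys)) for k in range(3)]
    # Augmented matrix [A | t] with A[i][j] = S[i + j]
    m = [[float(S[i + j]) for j in range(3)] + [float(T[i])] for i in range(3)]
    # Forward elimination with partial pivoting
    for col in range(3):
        pivot = max(range(col, 3), key=lambda r: abs(m[r][col]))
        if abs(m[pivot][col]) < 1e-12:
            raise ValueError("need at least three distinct x values")
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, 3):
            factor = m[r][col] / m[col][col]
            for c in range(col, 4):
                m[r][c] -= factor * m[col][c]
    # Back substitution
    coef = [0.0, 0.0, 0.0]
    for i in range(2, -1, -1):
        rest = sum(m[i][j] * coef[j] for j in range(i + 1, 3))
        coef[i] = (m[i][3] - rest) / m[i][i]
    return coef[0], coef[1], coef[2]


# Invented orchard data: sweetness rises with softness, then drops as figs rot.
softness = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
sweetness = [1.0, 2.6, 4.0, 5.1, 6.0, 6.4, 6.2, 5.3, 3.9, 2.1]

a, b, c = fit_parabola(softness, sweetness)
print(f"sweetness ~ {a:.2f} + {b:.2f} * s + {c:.3f} * s^2")  # c < 0: curve bends down
```

A negative `c` is what lets the model say that very soft (rotten) fruit is not sweet, something no straight line can express.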

OK, maybe using a straight line for a complex AI was not such a great idea.

The math gets harder

The example is rather silly, but it shows that the choice of model determines what can be learned. With figs, the data is simple, so the models can be simple too. But to learn something more complex, you need more complex models. Just as no amount of data will make a linear model capture the behavior of rotten fruit, no simple curve fitted to a pile of pictures will give you a computer vision algorithm.

So the challenge in ML is to design and choose the right models for the task at hand. We need a model complex enough to describe really complex relationships and structures, yet simple enough that we can actually work with it and train it. Although the Internet, smartphones and so on have produced incredible mountains of data to learn from, we still need the right models to make use of it.

This is where deep learning comes into play.

Deep learning

Deep learning is machine learning using a certain type of model: deep neural networks.

Neural networks are a type of ML model that computes and predicts using a structure loosely resembling the neurons in the brain. Their neurons are organized in layers: each layer performs a set of simple calculations and passes its output to the next.

This layered structure allows for more complex calculations. A simple network with just a few layers of neurons is enough to reproduce the straight line or parabola we used above. Deep neural networks are networks with many layers, dozens or even hundreds, hence the name. With that many layers you can build incredibly powerful models.
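To make “layers of simple calculations” concrete, here is a minimal forward pass for a fully connected network in plain Python. The weights are hand-picked rather than trained, an assumption made purely for illustration: because relu(x) - relu(-x) equals x, this two-layer network reproduces the straight line y = 2x + 1 exactly, echoing the claim that a shallow network can represent the fig line. Names like `forward` and `line_net` are hypothetical.

```python
from typing import List, Sequence, Tuple

# One layer = (weights[output][input], biases[output])
Layer = Tuple[List[List[float]], List[float]]


def relu(v: float) -> float:
    """A common neuron nonlinearity: pass positives through, clip negatives to 0."""
    return max(0.0, v)


def forward(layers: Sequence[Layer], inputs: Sequence[float]) -> List[float]:
    """Pass inputs through each layer in turn; hidden layers apply ReLU."""
    activations = list(inputs)
    for index, (weights, biases) in enumerate(layers):
        # Each neuron: weighted sum of the previous layer's outputs plus a bias
        outputs = [
            sum(w * a for w, a in zip(row, activations)) + b
            for row, b in zip(weights, biases)
        ]
        is_last = index == len(layers) - 1
        activations = outputs if is_last else [relu(o) for o in outputs]
    return activations


# Hand-picked (not trained) weights that reproduce y = 2x + 1.
line_net: List[Layer] = [
    ([[1.0], [-1.0]], [0.0, 0.0]),  # hidden layer: relu(x), relu(-x)
    ([[2.0, -2.0]], [1.0]),         # output: 2*relu(x) - 2*relu(-x) + 1
]

print(forward(line_net, [3.0]))
```

A deep network is the same loop with many more layers and neurons, and with weights found by training instead of by hand.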

This is one of the main reasons for the huge recent popularity of deep neural networks. They can learn all sorts of complex things without a human researcher having to define the rules, which has let us build algorithms that solve many problems computers could not tackle before.

But another factor has also contributed to the success of neural networks: training.

The “memory” of a model is a set of numeric parameters that determine how it answers the questions put to it. Training a model means adjusting these parameters so that it gives the best possible answers.

In our fig model, we were looking for the equation of a straight line. This is a simple regression problem, and there are formulas that give the answer in one step.
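For reference, the one-step least-squares answer for a straight line $y = w x + b$ fitted to $n$ points $(x_i, y_i)$ (here softness and sweetness) is the standard textbook formula below, where $\bar{x}$ and $\bar{y}$ are the averages of the $x_i$ and $y_i$. The symbols $w$ and $b$ are our own notation, not the article's.

```latex
\hat{w} = \frac{\sum_{i=1}^{n} (x_i - \bar{x})(y_i - \bar{y})}{\sum_{i=1}^{n} (x_i - \bar{x})^2},
\qquad
\hat{b} = \bar{y} - \hat{w}\,\bar{x}
```

No iteration is needed: two sums over the data and the fit is done, which is exactly what stops being possible for large neural networks.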

Simple neural network and deep neural network

With more complex models, things are not so simple. A straight line or a parabola can be described by a handful of numbers, but a deep neural network can have millions of parameters, and the dataset used to train it can contain millions of examples. There is no one-step analytical solution.

Fortunately, there is one weird trick: you can start with a bad neural network and improve it through gradual adjustments.

Training an ML model this way is like testing a student. Each time, we get a score by comparing the model's answers with the “correct” answers in the training data. Then we make improvements and test again.

How do we know which parameters to adjust, and by how much? Neural networks have a neat property: for many kinds of training, we can compute not only the test score but also how much that score would change in response to a change in each parameter. Mathematically speaking, the score is a cost function, and for most such functions we can easily compute the gradient with respect to the parameters.

Now we know exactly which way to nudge the parameters to improve the score, and we can adjust the network in successive steps in ever better “directions” until we reach a point where nothing more can be improved. This is often called hill climbing, because it really is like walking up a hill: if you keep moving upward, you eventually reach a peak (though possibly only a local one).
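A minimal sketch of this idea, with assumptions of our own: the model is a straight line, and the “score” is the negative mean squared error, so climbing the score hill is the same as stepping downhill on the error. That is ordinary gradient descent. The learning rate and step count are arbitrary choices for this toy data, not recommended values.

```python
from typing import List, Tuple


def mse(w: float, b: float, xs: List[float], ys: List[float]) -> float:
    """Cost: mean squared error of the line y = w*x + b (lower is better)."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gradients(w: float, b: float, xs: List[float], ys: List[float]) -> Tuple[float, float]:
    """Partial derivatives of the MSE with respect to w and b."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return dw, db


def train(xs, ys, w=0.0, b=0.0, learning_rate=0.01, steps=5000):
    """Gradient descent: take many small steps against the gradient of the cost.

    Descending the cost is the same as climbing the 'score' -cost.
    """
    for _ in range(steps):
        dw, db = gradients(w, b, xs, ys)
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b


xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # toy data lying exactly on y = 2x + 1
w, b = train(xs, ys)
print(f"w = {w:.4f}, b = {b:.4f}")
```

The same loop works whether the model has two parameters or millions; only the gradient computation gets more elaborate.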

See? The summit!

Thanks to this, neural networks are easy to improve. If your network has a good structure, you do not have to start from scratch when new data arrives. You can start from the existing parameters and continue training on the new data, and the network will gradually improve. The most prominent AIs of today, from cat recognition on Facebook to the technology Amazon (probably) uses in its cashierless stores, are built on this simple fact.

This is also the key to another reason why deep learning has spread so quickly and so widely: hill climbing lets you take a neural network trained for one task and retrain it for another, similar one. If you have trained an AI to recognize cats well, you can use that network as the starting point for an AI that recognizes dogs or giraffes, without starting from scratch. Start with the cat AI, score it on how well it recognizes dogs, and then climb the hill, improving the network!
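Here is a toy sketch of that reuse, under heavy simplifying assumptions: the “network” is just the two-parameter line model, “task A” is the line y = 2x + 1 with its trained parameters simply written down, and “task B” is the similar line y = 2.1x + 0.9. Starting gradient descent from task A's parameters reaches the target error in fewer steps than starting from zero. Real fine-tuning of a vision network is far more involved; only the principle carries over.

```python
from typing import List, Tuple


def mse(w: float, b: float, xs: List[float], ys: List[float]) -> float:
    """Mean squared error of the line y = w*x + b."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


def gd_step(w: float, b: float, xs: List[float], ys: List[float], lr: float) -> Tuple[float, float]:
    """One gradient-descent step on the MSE."""
    n = len(xs)
    dw = sum(2 * (w * x + b - y) * x for x, y in zip(xs, ys)) / n
    db = sum(2 * (w * x + b - y) for x, y in zip(xs, ys)) / n
    return w - lr * dw, b - lr * db


def steps_to_reach(xs, ys, w, b, lr=0.05, tol=1e-8, max_steps=100_000) -> int:
    """Count gradient-descent steps until the MSE drops below tol."""
    for step in range(max_steps):
        if mse(w, b, xs, ys) < tol:
            return step
        w, b = gd_step(w, b, xs, ys, lr)
    raise RuntimeError("did not converge")


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
ys_b = [2.1 * x + 0.9 for x in xs]  # hypothetical "similar" task B

cold = steps_to_reach(xs, ys_b, w=0.0, b=0.0)  # train from scratch
warm = steps_to_reach(xs, ys_b, w=2.0, b=1.0)  # start from task-A parameters
print(f"from scratch: {cold} steps, from task A: {warm} steps")
```

The saving here is modest because the toy problem is tiny; the point is only that a good starting point leaves less hill to climb.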

That is why the last 5-6 years have seen a dramatic improvement in what AI can do. Several pieces of the puzzle came together: the Internet produced a huge amount of data to learn from; computing power, especially parallel computing on GPUs, made it possible to process these huge datasets; and deep neural networks made it possible to exploit them and build incredibly powerful ML models.

And all this means that some things that were previously extremely complex are now very easy to do.

And what can we do now? Pattern recognition

Deep learning has probably had its deepest (pardon the pun) and quickest impact on computer vision, in particular on recognizing objects in photographs. Just a few years ago, this xkcd comic perfectly captured the state of the art in computer science:

Today, recognizing birds, and even particular species of birds, is a trivial task that a sufficiently motivated high school student can solve. What changed?

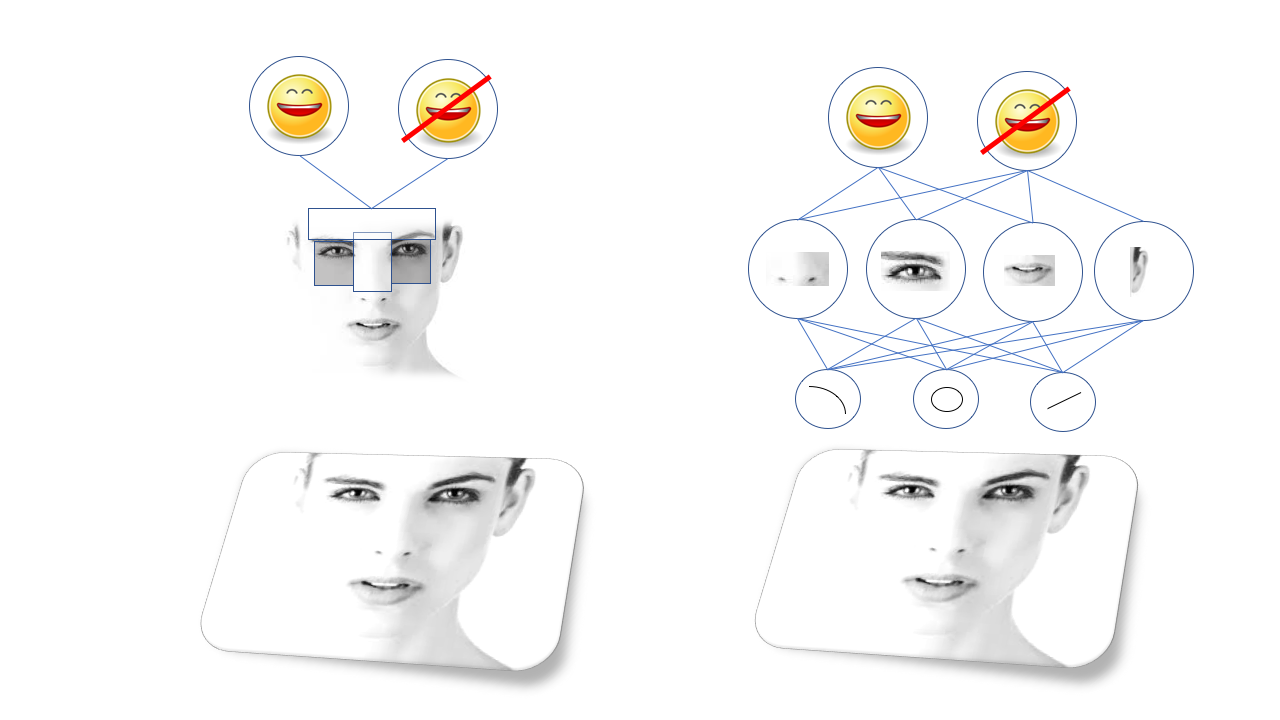

The idea behind visual object recognition is easy to describe but hard to implement: complex objects are made up of simpler ones, which in turn are made up of simpler shapes and lines. Faces consist of eyes, noses and mouths, and those consist of circles and lines, and so on.

So recognizing a face becomes a matter of recognizing the pattern in which eyes and mouths are arranged, which in turn may require recognizing the shapes of eyes and mouths from lines and circles.

These patterns are called features, and before deep learning came along, recognition required describing all the features by hand and programming the computer to search for them. A famous example is the Viola-Jones face detection algorithm, based on the observation that the brow and the bridge of the nose are usually lighter than the eye sockets, so together they form a bright T-shape with two dark spots. In essence, the algorithm looks for T-shaped patterns like this.
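Below is a hedged sketch of the kind of hand-designed feature Viola-Jones relies on: rectangle (“Haar-like”) features computed with an integral image, which lets the sum of any rectangle be read off in four lookups. The simplified “bright band over dark band” feature and all function names are our own illustration; the real detector combines thousands of such features with boosting (AdaBoost) and a cascade of classifiers, none of which is shown.

```python
from typing import List

Image = List[List[float]]


def integral_image(img: Image) -> Image:
    """ii[y][x] = sum of img[r][c] for r < y and c < x (extra zero row/column)."""
    h, w = len(img), len(img[0])
    ii = [[0.0] * (w + 1) for _ in range(h + 1)]
    for y in range(h):
        row_sum = 0.0
        for x in range(w):
            row_sum += img[y][x]
            ii[y + 1][x + 1] = ii[y][x + 1] + row_sum
    return ii


def rect_sum(ii: Image, top: int, left: int, height: int, width: int) -> float:
    """Sum of any rectangle of pixels with just four table lookups."""
    bottom, right = top + height, left + width
    return ii[bottom][right] - ii[top][right] - ii[bottom][left] + ii[top][left]


def bright_over_dark(ii: Image, top: int, left: int, height: int, width: int) -> float:
    """Two-rectangle Haar-like feature: upper half minus lower half.

    A large positive value means the upper band is brighter than the lower
    one, e.g. a lit brow above shadowed eye sockets.
    """
    half = height // 2
    upper = rect_sum(ii, top, left, half, width)
    lower = rect_sum(ii, top + half, left, half, width)
    return upper - lower


# Toy 4x4 "image": bright upper half, dark lower half.
patch = [[1.0] * 4, [1.0] * 4, [0.0] * 4, [0.0] * 4]
print(bright_over_dark(integral_image(patch), 0, 0, 4, 4))
```

Note that a human decided which rectangles to compare; that hand-design step is exactly what deep learning removes.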

The Viola-Jones method works well and surprisingly fast, and it underlies face detection in cheap cameras and the like. But obviously not every object you might want to recognize lends itself to such simplification, and people came up with ever more complex, low-level features. Getting these algorithms to work took teams of PhDs, and the results were fragile and prone to failure.

The big breakthrough came with deep learning, and in particular with a type of neural network called the convolutional neural network (CNN). CNNs are deep networks with a particular structure inspired by the visual cortex of the mammalian brain. This structure lets a CNN learn on its own a hierarchy of lines and patterns for recognizing objects, instead of waiting for PhDs to spend years working out which features suit the task best. For example, a CNN trained on faces will learn its own internal representation of lines and circles, which combine into eyes, ears, noses and so on.
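The core operation of a CNN layer can be sketched in a few lines of plain Python. This is a minimal, assumption-laden illustration: one single-channel “valid” convolution (strictly, cross-correlation, which is what CNN libraries compute under the name convolution) followed by ReLU. The vertical-edge kernel is written by hand here; in a real CNN the kernel values are learned, and many such layers are stacked to build the hierarchy described above.

```python
from typing import List

Grid = List[List[float]]


def conv2d(image: Grid, kernel: Grid) -> Grid:
    """'Valid' 2D cross-correlation: slide the kernel over the image, no padding."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(kernel[i][j] * image[y + i][x + j] for i in range(kh) for j in range(kw))
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


def relu_map(fmap: Grid) -> Grid:
    """Keep positive responses only, as a CNN layer typically does."""
    return [[max(0.0, v) for v in row] for row in fmap]


# Hand-made vertical-edge detector (a trained CNN would learn its own kernels).
VERTICAL_EDGE = [
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
    [-1.0, 0.0, 1.0],
]

# 4x5 image: dark on the left, bright on the right.
image = [[0.0, 0.0, 1.0, 1.0, 1.0] for _ in range(4)]
edges = relu_map(conv2d(image, VERTICAL_EDGE))
print(edges)
```

The output is large only where the window straddles the dark-to-bright boundary, which is how a single layer turns pixels into a simple “line here” feature.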

Older vision algorithms (the Viola-Jones method, left) rely on hand-picked features, while deep neural networks (right) rely on their own hierarchy of increasingly complex features built from simpler ones

CNNs turned out to be amazingly good at computer vision, and researchers were soon training them for all sorts of visual recognition tasks, from finding cats in photos to spotting pedestrians in view of a car's camera.

This is all great, but there is another reason for the rapid and widespread adoption of CNNs: how easily they adapt. Remember hill climbing? If our high school student wants to recognize a particular bird, they can take any of the many open-source vision networks and retrain it on their own dataset, without even understanding the underlying math.

Naturally, this idea can be taken further.

Who's there? (face recognition)

Suppose you want to train a network that recognizes not just faces but a particular person. You could train a network to recognize one person, then another, and so on. But training takes time, and this would mean retraining the network for every new person. No thanks.

Instead, we can start with a network trained to recognize faces in general. Its neurons are already tuned to recognize all the structures of a face: eyes, ears, mouths and so on. Then you simply change the output: instead of having it recognize specific faces, you have it output a description of the face as a few hundred numbers describing, say, the curvature of the nose or the shape of the eyes. The network can do this because it already “knows” what a face is made of.

Of course, you do not define all of this directly. Instead, you train the network by showing it a set of faces and comparing its outputs. You train it so that descriptions of the same person are similar to each other, and descriptions of different people are very different. Mathematically speaking, you train the network to map each face to a point in a feature space in which the Euclidean distance between points measures how similar the faces are.
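One widely used way to express “same person close, different people far” as a training score is a triplet loss. The sketch below is an illustration under our own assumptions, not necessarily what any particular system uses: it takes plain Euclidean distance (some formulations use squared distance), a made-up margin of 0.2, and tiny 2D toy descriptions instead of hundreds of numbers. Training would adjust the network to push this loss toward zero over many triplets.

```python
import math
from typing import Sequence


def euclidean(a: Sequence[float], b: Sequence[float]) -> float:
    """Straight-line distance between two face descriptions."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def triplet_loss(anchor: Sequence[float], positive: Sequence[float],
                 negative: Sequence[float], margin: float = 0.2) -> float:
    """Zero once the same-person pair is closer than the other pair by `margin`.

    anchor, positive: descriptions of the same person
    negative: description of a different person
    """
    return max(0.0, euclidean(anchor, positive) - euclidean(anchor, negative) + margin)


# Toy descriptions: the different person is far away -> no penalty.
print(triplet_loss([0.0, 0.0], [0.1, 0.0], [1.0, 0.0]))
# The different person is almost as close as the same person -> penalty.
print(triplet_loss([0.0, 0.0], [0.1, 0.0], [0.15, 0.0]))
```

Because the loss only looks at distances, the network is free to invent whatever description coordinates make those distances come out right.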

Turning a face recognition network (left) into a face description network (right) only requires changing the output, not the underlying network

Now you can recognize faces by comparing the descriptions of each person created by the neural network.

Once the network is trained, recognizing faces is easy. Take the original face and get its description. Then take a new face and compare its description with the original one. If they are close enough, you conclude that they belong to the same person. And just like that, you have gone from a network that recognizes one face to one that can recognize any face!
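The comparison step can be sketched as a nearest-neighbor lookup with a distance threshold. Everything concrete here is hypothetical: the three-number descriptions (real ones are far longer), the enrolled names and the 0.6 threshold, which in practice would be tuned on validation data. `math.dist` computes Euclidean distance (Python 3.8+).

```python
import math
from typing import Dict, Optional, Sequence


def identify(query: Sequence[float],
             gallery: Dict[str, Sequence[float]],
             threshold: float = 0.6) -> Optional[str]:
    """Return the enrolled name whose description is nearest to `query`,
    or None if even the nearest one is farther than `threshold`."""
    best_name, best_dist = None, math.inf
    for name, reference in gallery.items():
        d = math.dist(query, reference)  # Euclidean distance
        if d < best_dist:
            best_name, best_dist = name, d
    return best_name if best_dist < threshold else None


# Hypothetical descriptions produced by the network for two enrolled people.
gallery = {
    "alice": [0.10, 0.80, 0.30],
    "bob": [0.90, 0.20, 0.40],
}

print(identify([0.12, 0.79, 0.31], gallery))  # close to alice's description
print(identify([0.50, 0.50, 0.90], gallery))  # nobody is close enough
```

Adding a new person means storing one more description in `gallery`; the network itself is not retrained.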

This structural flexibility is another reason deep neural networks are so useful. A huge variety of ML models have been developed for computer vision, and although they have branched out in very different directions, many of them build on early CNNs such as AlexNet and ResNet.

I have even heard of people using vision networks to work with time series or sensor data. Instead of building a special network to analyze the data stream, they retrained an open-source computer vision network to literally look at the shapes of the lines on plots.

Such flexibility is great, but it has limits. Some other problems call for other types of networks.

And even getting this far took virtual assistants a very long time.

What did you say? (Speech recognition)

Image cataloging and computer vision are not the only areas where AI has seen a revival. Another area where computers have come a long way is speech recognition, in particular transcribing speech into text.

The basic idea of speech recognition is quite similar to that of computer vision: recognize complex things as combinations of simpler ones. In speech, recognizing sentences and phrases builds on recognizing words, which builds on recognizing syllables or, more precisely, phonemes. So when someone says “Bond, James Bond,” we actually hear BON + DUH + JAY + MMS + BON + DUH.

In vision, features are arranged in space, and CNNs handle this structure. In hearing, features are arranged in time. People may speak quickly or slowly, with no clear start or end to their speech. We need a model that, like a person, takes in sounds as they arrive, instead of waiting for complete sentences before looking for structure in them. Unlike in physics, here we cannot just say that space and time are the same thing.

Recognizing individual syllables is fairly easy, but telling where one ends and the next begins is hard. For example, “hello there” can sound like “hell no they're”... So any given sequence of sounds can usually correspond to several different combinations of syllables that might actually have been spoken.

To make sense of all this, we need to be able to examine the sequence in context. If I hear a given sound, which is more likely: that the person said “hello there dear” or “hell no they're deer”? Here again, machine learning comes to the rescue. With a large enough set of samples of spoken words, you can learn which phrases are most likely. And the more examples you have, the better this works.
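Here is a toy sketch of “which phrase is more likely?” using a bigram model, one classic way to score word sequences (the article does not say which method real systems use). The probabilities are invented for illustration, and the flat floor for unseen word pairs is a crude stand-in for proper smoothing. In a real recognizer this language score would be combined with an acoustic score for how well each candidate matches the sound; only the language side is shown.

```python
import math
from typing import List

# Invented probabilities P(next word | previous word); "<s>" marks the start.
BIGRAM_P = {
    ("<s>", "hello"): 0.05,
    ("hello", "there"): 0.30,
    ("there", "dear"): 0.02,
    ("<s>", "hell"): 0.002,
    ("hell", "no"): 0.20,
    ("no", "they're"): 0.01,
    ("they're", "deer"): 0.0005,
}
UNSEEN_P = 1e-6  # crude floor for word pairs never seen in training


def log_prob(words: List[str]) -> float:
    """Sum of log bigram probabilities (logs avoid multiplying tiny numbers)."""
    tokens = ["<s>"] + words
    return sum(math.log(BIGRAM_P.get(pair, UNSEEN_P)) for pair in zip(tokens, tokens[1:]))


candidates = [
    "hello there dear".split(),
    "hell no they're deer".split(),
]
best = max(candidates, key=log_prob)
print(" ".join(best))
```

With real data, these probabilities would be estimated by counting word pairs in a large collection of text or transcripts.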

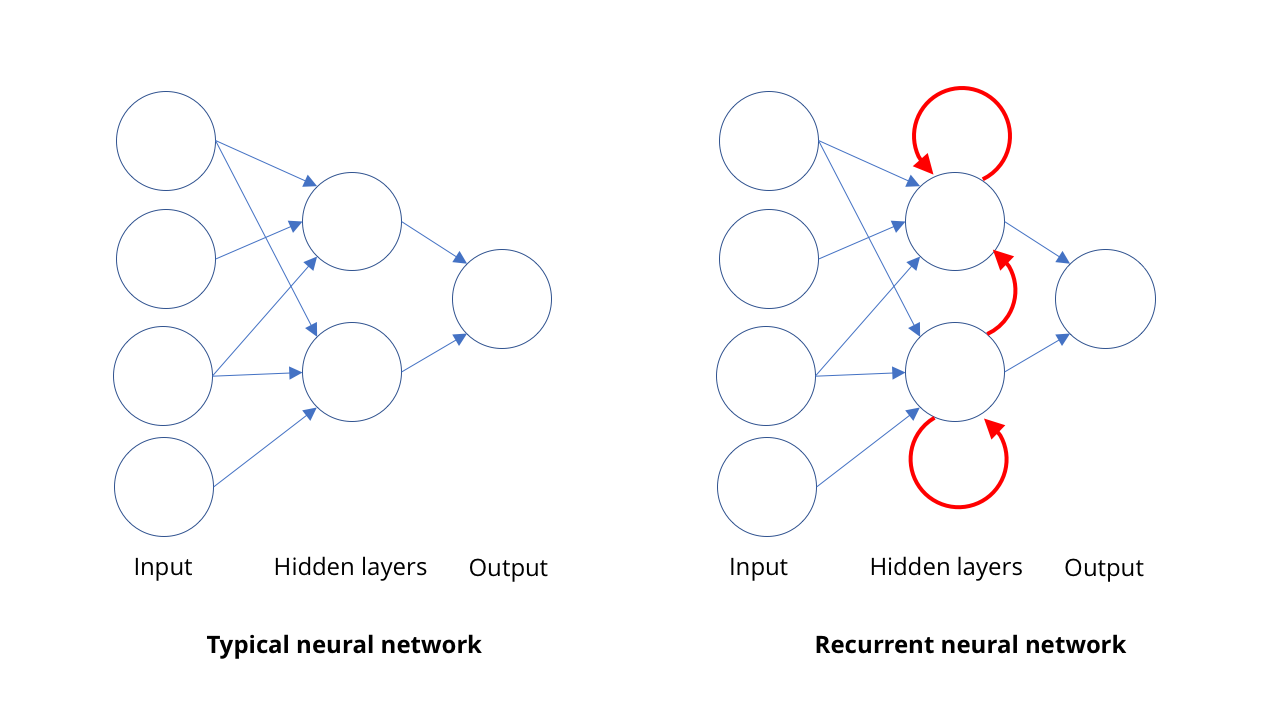

For this, people use recurrent neural networks (RNNs). In most types of neural networks, such as the CNNs used for computer vision, the connections between neurons run in one direction, from input to output (mathematically, they form directed acyclic graphs). In an RNN, the outputs of neurons can be fed back into neurons of the same layer, into themselves, or even further back. This gives the RNN a memory of its own (if you are familiar with digital logic, this is similar to how flip-flops work).

A CNN works in a single pass: we feed it an image and it produces a description. An RNN maintains an internal memory of what it has been fed before, and its answers are based on what it has already seen plus what it sees now.

This memory lets an RNN do more than just “listen” to syllables arriving one by one. It lets the network learn which syllables go together to form a word, and how likely particular sequences are.
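A minimal sketch of the recurrent idea, assuming the simplest (Elman-style) RNN cell: the hidden state `h` is the memory, and each new input is combined with it through `tanh`. The weights are small made-up numbers, not trained ones, and the two-element one-hot inputs stand in for syllables. The demo feeds two sequences that end with the same input and shows the final states differ, because the network remembers what came earlier.

```python
import math
from typing import List, Sequence


def rnn_step(h: List[float], x: List[float], W_h, W_x, b) -> List[float]:
    """One step of a simple RNN: h_new = tanh(W_h @ h + W_x @ x + b)."""
    return [
        math.tanh(
            sum(wh * hj for wh, hj in zip(W_h[i], h))    # feedback from memory
            + sum(wx * xj for wx, xj in zip(W_x[i], x))  # current input
            + b[i]
        )
        for i in range(len(b))
    ]


def run_rnn(inputs: Sequence[List[float]], W_h, W_x, b) -> List[float]:
    """Feed inputs one at a time, like syllables arriving, and return the final memory."""
    h = [0.0] * len(b)  # empty memory before the first input
    for x in inputs:
        h = rnn_step(h, x, W_h, W_x, b)
    return h


# Hypothetical, untrained weights: 2 hidden units, 2-dimensional one-hot inputs.
W_h = [[0.5, -0.3], [0.2, 0.4]]
W_x = [[1.0, 0.0], [0.0, 1.0]]
b = [0.0, 0.0]

A, B = [1.0, 0.0], [0.0, 1.0]
h1 = run_rnn([A, B], W_h, W_x, b)  # "A then B"
h2 = run_rnn([B, B], W_h, W_x, b)  # "B then B": same last input, different history
print(h1, h2)
```

Training would adjust `W_h`, `W_x` and `b` by the same gradient-based hill climbing described earlier, so that the memory ends up encoding useful context.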

Using RNNs, it is possible to get very good transcriptions of human speech, to the point that by some measures computers can now transcribe more accurately than people.

Of course, sound is not the only place where sequences appear. Today, RNNs are also used to recognize sequences of movements, for example to identify actions in video.

Show me your moves (deepfakes and generative networks)

So far we have been talking about ML models designed for recognition: tell me what is in the picture, tell me what the person said. But these models can do more: today's deep learning models can also be used to create content.

This is what people mean when they talk about deepfakes: incredibly realistic fake videos and images created with deep learning. Some time ago, someone working in German television sparked a heated political debate by creating a fake video in which Greece's finance minister showed Germany the middle finger. Making that video took a team of TV editors, but today anyone with access to a mid-range gaming PC can do it in a few minutes.

All this is rather grim, but the field is not all dark. Here is my favorite video showcasing this technology.

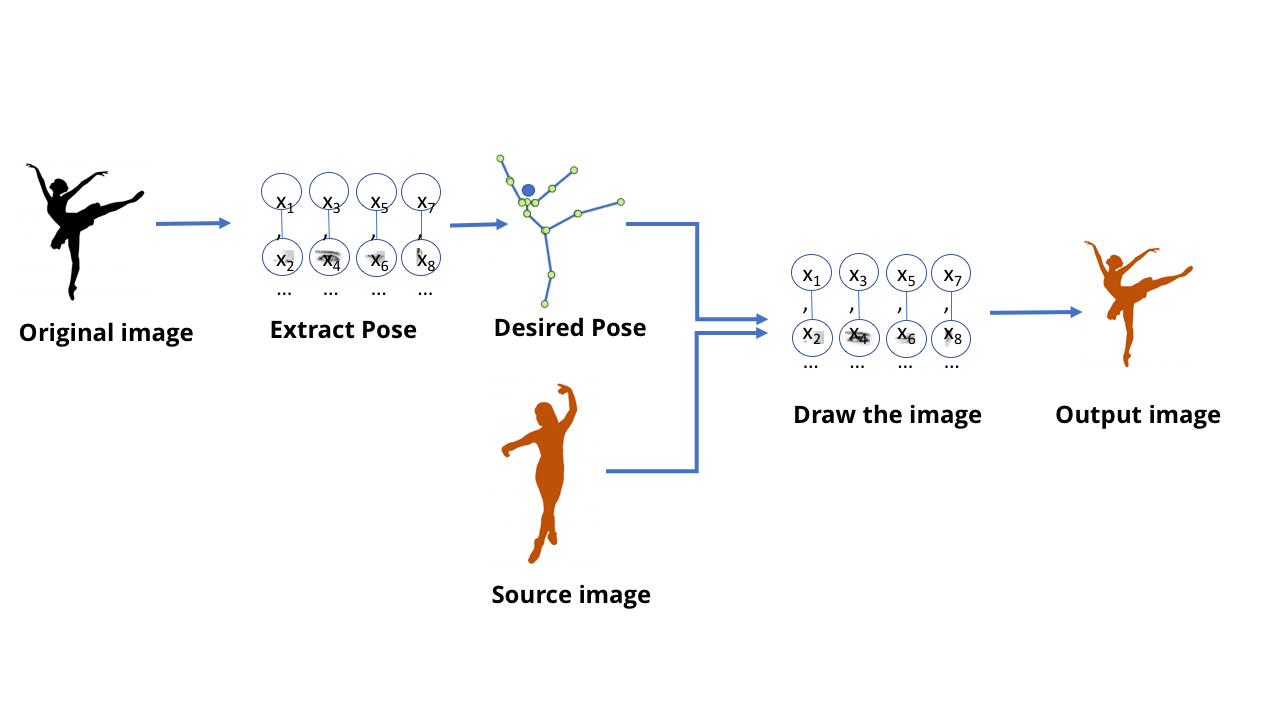

This team created a model that takes a video of one person dancing and produces a video of another person repeating those moves, magically performing them like an expert. The accompanying paper is also an interesting read.

With all the techniques we have covered, you can imagine training a network that takes an image of a dancer and tells you where their arms and legs are. Such a network has clearly learned, at some level, how to relate the pixels in an image to the positions of human limbs. Since a neural network is just data stored on a computer rather than a biological brain, it should be possible to take that data and go in the opposite direction: from limb positions to the corresponding pixels.

Start with a network that extracts poses from images of people.

ML models that can do this are called generative. All the models we have looked at so far are called discriminative. One way to picture the difference: a discriminative cat model looks at photos and separates those that contain cats from those that do not, while a generative model creates images of cats based on, say, a description of what a cat should look like.

Generative models that “draw” images of objects are built from the same CNN structures as the models used to recognize those objects, and they can be trained in basically the same way as other ML models.

The catch is coming up with a “score” to train them against. When training a discriminative model, there is an easy way to judge whether an answer is right or wrong, for example whether the network correctly told a dog from a cat. But how do you score the quality or accuracy of a generated picture of a cat?

And this is where things get a bit scary for anyone who loves conspiracy theories and believes we are all doomed. You see, the best way we have found to train generative networks is not to do the scoring ourselves. Instead, we simply use another neural network.

This technique is called a generative adversarial network, or GAN. You make two neural networks compete with each other. One network tries to create fakes, for example by drawing a new dancer based on the old poses. The other network is trained to tell real examples from fake ones, using heaps of examples of real dancers.

The two networks play an adversarial game, hence the word “adversarial” in the name. The generative network tries to produce convincing fakes, and the discriminative network tries to work out which examples are fake and which are real.
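For readers who want the math, the original GAN formulation (Goodfellow et al., 2014) writes this game as a minimax problem. The discriminator $D$ outputs the probability that its input is real; the generator $G$ turns random noise $z$ into a fake. $D$ tries to make the value $V$ large (call real things real and fakes fake), while $G$ tries to make it small (get its fakes called real). In conditional setups like the dancer example, both networks would also receive the pose description as an extra input, which is omitted here.

```latex
\min_{G} \max_{D} \; V(D, G) =
\mathbb{E}_{x \sim p_{\text{data}}}\big[\log D(x)\big]
+ \mathbb{E}_{z \sim p_{z}}\big[\log\big(1 - D(G(z))\big)\big]
```

In practice the two networks take turns: a few gradient steps improving $D$, then a few improving $G$, repeated over many rounds.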

For the dancer video, a separate discriminative network was built for training, which gave simple yes/no answers. It looked at an image of a person together with a description of their limb positions and decided whether the image was a real photograph or a picture drawn by the generative model.

A GAN makes two networks compete: one produces “fakes” and the other tries to tell the fakes from the originals.

In the final workflow, only the generative model, which produces the desired images, is used.

Over repeated rounds of training, both models got better and better. It is like a contest between a jewelry forger and an appraiser: facing a strong opponent, each becomes stronger and smarter. Finally, once the models are good enough, you can take the generative model and use it on its own.

Once trained, generative models can be very useful for creating content. For example, they can generate images of faces (which can be used to train face recognition software) or backgrounds for video games.

Getting all this to work properly takes a lot of tuning and tweaking, but essentially the human here acts as a referee. It is the AIs, working against each other, that drive the major improvements.

So, should we expect Skynet and HAL 9000 in the near future?

Every nature documentary has an episode at the end where the filmmakers talk about how all this beauty will soon vanish because of how terrible humans are. In the same spirit, I think every responsible discussion of AI should include a section on its limitations and social consequences.

First, let us stress the current limitations of AI once more. The main idea I hope you take away from this article is that the success of ML, or AI, depends heavily on the models we choose. If people design a network poorly or train it on unsuitable data, the resulting biases can be very obvious to everyone.

Deep neural networks are incredibly flexible and powerful, but they are not magic. Although RNNs and CNNs are both deep neural networks, their structures are very different, and people still have to design those structures. So even if you can take a CNN trained on cars and retrain it to recognize birds, you cannot take that model and retrain it for speech recognition.

In human terms, it is as if we understood how the visual cortex and the auditory cortex work, but had no idea how the cortex as a whole works, or even where to begin.

This means we are unlikely to see a god-like Hollywood AI any time soon. But that does not mean AI in its current form cannot have a serious impact on society.

We often imagine AI “replacing” us, with robots literally taking over our jobs, but in reality it will not happen quite like that. Take radiology: looking at the success of computer vision, people sometimes say that AI will replace radiologists. We may never reach a point where there is not a single human radiologist left. But it is quite possible that, for every hundred radiologists today, AI will let five to ten of them do the work of all the rest. If that happens, where will the remaining 90 or so doctors go?

Even if the current generation of AI does not live up to the hopes of its most optimistic supporters, it will still have far-reaching consequences. We will have to deal with them, and mastering the basics of the field is probably a good place to start.

Source: https://habr.com/ru/post/451214/
