Looking for a free parking space with Python

I live in a great city. But, as in many others, finding a parking space always turns into an ordeal. Empty spaces fill up quickly, and even if you have a space of your own, friends who come to visit will struggle to find somewhere to park.

So I decided to point a camera out of my window and use deep learning so that my computer would notify me when a space becomes available.


It may sound hard, but writing a working prototype with deep learning is actually quick and easy. All the necessary components already exist; you just need to know where to find them and how to put them together.

So let's have a little fun and build an accurate free-parking notification system using Python and deep learning.

Decomposing the task

When we have a difficult task that we want to solve with the help of machine learning, the first step is to break it down into a sequence of simple tasks. Then we can use different tools to solve each of them. Combining a few simple solutions together, we get a system that is capable of something complex.

Here is how I broke my task down:

The input to the pipeline is the video stream from a webcam pointed out of the window:

We will pass each video frame through the pipeline, one at a time.

The first step is to detect all possible parking spaces in the frame. Obviously, before we can look for unoccupied spaces, we need to know which parts of the image are parking spaces.

The second step is to find all the cars in each frame. This lets us track the movement of each car from frame to frame.

The third step is to determine which spaces are occupied by cars and which are not. To do this, we combine the results of the first two steps.

Finally, the program should send an alert when a parking space becomes available. This is determined by changes in the positions of the cars between video frames.

Each of these stages can be implemented in different ways using different technologies. There is no single right or wrong way to build this pipeline; different approaches have their own advantages and disadvantages. Let's look at each step in more detail.
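To make the decomposition concrete, here is a minimal sketch of the pipeline as a loop over frames. The function `run_pipeline` and its four stage arguments are hypothetical placeholders invented for this illustration; each stage can be swapped for whatever technique you end up choosing.

```python
def run_pipeline(frames, find_spaces, find_cars, find_free, notify):
    """Pass frames through the four stages, one frame at a time.

    All four stage callables are hypothetical placeholders:
    - find_spaces(frame) returns the parking space boxes
    - find_cars(frame) returns the car boxes
    - find_free(spaces, cars) returns the unoccupied spaces
    - notify(free) sends the alert
    """
    for frame in frames:
        spaces = find_spaces(frame)      # step 1: where can cars park?
        cars = find_cars(frame)          # step 2: where are the cars?
        free = find_free(spaces, cars)   # step 3: combine the two results
        if free:
            notify(free)                 # step 4: alert on a free space
```

Keeping the stages as separate callables means each one can be tested and replaced on its own, which is the whole point of splitting the task up.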

Detecting parking spaces

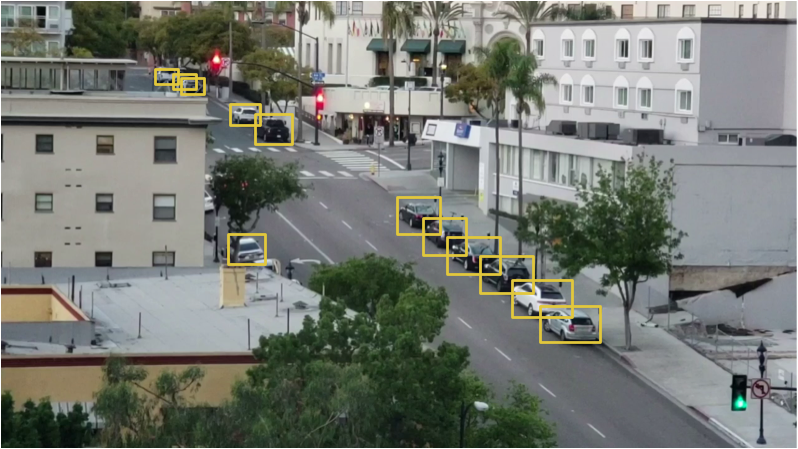

This is what our camera sees:

We need to somehow scan this image and get a list of places where a car can park:

The brute-force solution would be to hard-code the locations of all the parking spaces by hand instead of detecting them automatically. But then, if we move the camera or want to look for parking spaces on another street, we would have to repeat the whole procedure. That is not great, so let's look for an automatic way to detect parking spaces.

Alternatively, you can look for parking meters in the image and assume that there is a parking space next to each of them:

However, this approach has its problems. First, not every parking space has a parking meter, and in any case we are more interested in spaces that are free of charge. Second, the location of a parking meter does not tell us exactly where the parking space is; it only lets us guess.

Another idea is to build an object detection model that looks for the parking space markings painted on the road:

But this approach is not great either. First, in my city these markings are very small and hard to see from a distance, so they will be difficult to detect with a computer. Second, the street is full of all sorts of other lines and markings, and it will be hard to tell parking markings apart from lane dividers and pedestrian crossings.

When you run into a problem that seems difficult at first glance, take a few minutes to look for a different approach that sidesteps some of the technical difficulties. What is a parking space, anyway? It is just a place where a car stays parked for a long time. Perhaps we do not need to detect parking spaces at all. Why don't we simply detect cars that have not moved for a long time and assume that they are standing in parking spaces?

In other words, parking spaces are wherever cars stay put for a long time:

So if we can detect the cars and work out which of them do not move between frames, we can infer where the parking spaces are. Simple enough, so let's move on to detecting cars!
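As a rough illustration of the "cars that do not move" idea, the sketch below compares the boxes from two frames and keeps the ones that reappear almost unchanged. The function name `stationary_boxes` and the 5-pixel `tolerance` are assumptions made for this example, not part of any library.

```python
def stationary_boxes(earlier, later, tolerance=5):
    """Return the boxes from `earlier` that reappear nearly unchanged in `later`.

    Boxes are (y1, x1, y2, x2) tuples in pixels. A car counts as stationary
    when every coordinate of its box moved by at most `tolerance` pixels.
    """
    result = []
    for old in earlier:
        for new in later:
            if all(abs(o - n) <= tolerance for o, n in zip(old, new)):
                result.append(old)
                break  # one match is enough for this box
    return result


frame_a = [(492, 871, 551, 961), (450, 819, 509, 913)]
frame_b = [(493, 870, 551, 962), (300, 100, 360, 200)]
parked = stationary_boxes(frame_a, frame_b)  # only the first car stayed put
```

In practice the two frames should be far enough apart in time that a car waiting at a traffic light is not mistaken for a parked one.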

Detecting cars

Detecting cars in a video frame is a classic object detection task. There are many machine learning approaches we could use. Here are some of them, in order from “old school” to “new school”:

- You can train a detector based on HOG (Histogram of Oriented Gradients) and slide it across the whole image to find all the cars. This older approach, which does not use deep learning, is relatively fast but does not cope well with cars in different orientations.

- You can train a detector based on a CNN (Convolutional Neural Network) and slide it across the entire image until all the cars are found. This approach is accurate but not as efficient, since we need to scan the image several times with the CNN to find all the cars. And although it can find cars in different orientations, it needs much more training data than a HOG detector.

- You can use a newer deep learning approach such as Mask R-CNN, Faster R-CNN or YOLO, which combines the accuracy of a CNN with a set of technical tricks that greatly increase detection speed. Such models run relatively fast (on a GPU), provided we have a lot of data to train them.
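To see why sliding a detector across the image is expensive, here is a small sketch that only enumerates the window positions such a detector would have to classify. The function `sliding_windows` and the window and stride sizes are illustrative assumptions; a real HOG or CNN detector would run its classifier on every one of these crops.

```python
def sliding_windows(image_h, image_w, win_h, win_w, stride):
    """Yield (y1, x1, y2, x2) for every window position inside the image."""
    for y in range(0, image_h - win_h + 1, stride):
        for x in range(0, image_w - win_w + 1, stride):
            yield (y, x, y + win_h, x + win_w)


# A 1080x1920 frame scanned with a 128x128 window and a 16-pixel stride.
hd_window_count = sum(1 for _ in sliding_windows(1080, 1920, 128, 128, 16))
```

With these assumed sizes there are 6,780 windows at a single scale, and the scan has to be repeated at several scales to catch both near and distant cars. This is the cost that the newer architectures in the last bullet avoid.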

In general, we want the simplest solution that works as intended and requires the least training data. It does not have to be the newest, fastest algorithm. In our particular case, however, Mask R-CNN is a reasonable choice, even though it is quite new and fast.

The Mask R-CNN architecture is designed to detect objects across the entire image in a computationally efficient way, without using the sliding window approach. In other words, it runs pretty fast. With a modern GPU, we should be able to detect objects in high-definition video at several frames per second. For our project that should be enough.

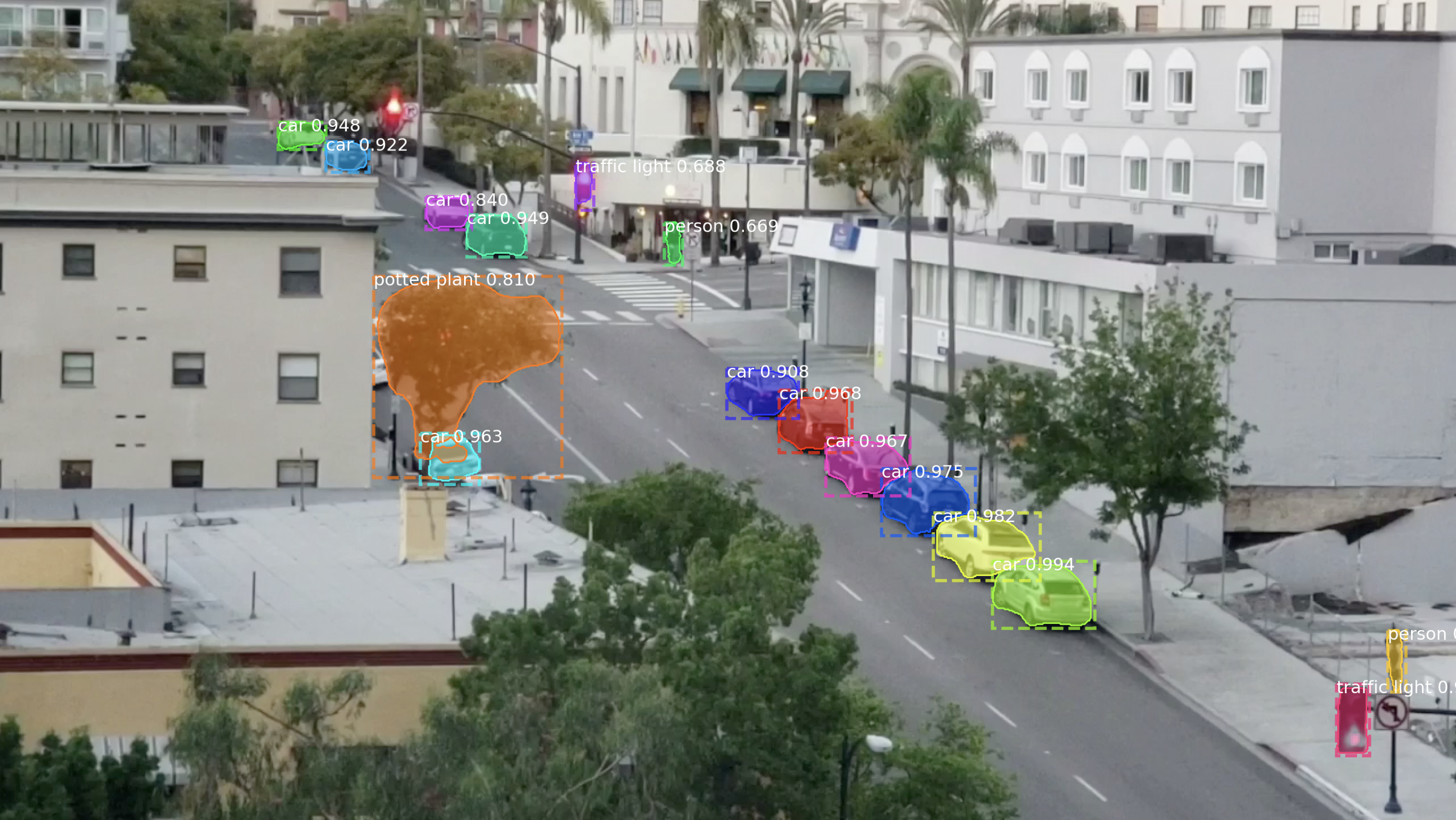

In addition, Mask R-CNN provides a lot of information about each detected object. Most object detection algorithms return only the bounding box of each object. Mask R-CNN, however, gives us not only the location of each object but also its outline (mask):

To train Mask R-CNN, we need a lot of images of the objects we want to detect. We could go outside, photograph cars and label them in the photos, which would take several days of work. Fortunately, cars are among the objects people most often want to detect, so several public datasets with images of cars already exist.

One of them is the popular COCO dataset (short for Common Objects in Context), whose images are annotated with object masks. It contains more than 12,000 images with cars already outlined. Here is an example image from the dataset:

Such data is great for training models based on Mask R-CNN.

But hold your horses, there is even better news! We are not the first to want to train a model on the COCO dataset; many people have already done it and shared their results. So instead of training our own model, we can take a pre-trained one that can already detect cars. For this project we will use the open-source model from Matterport.

If we give an image from the camera to the input of this model, here’s what we’ll get out of the box:

The model detected not only cars but also objects such as traffic lights and people. Amusingly, it identified a tree as a potted plant.

For each detected object, the Mask R-CNN model returns four things:

- The type of object detected (integer). The pre-trained COCO model is able to recognize 80 different common objects such as cars and trucks. A full list of them can be found here.

- The degree of confidence in the recognition results. The higher the number, the more confident the model is that the object is recognized correctly.

- The bounding box for an object in the form of XY-coordinates of pixels in the image.

- A “mask” that shows which pixels within the bounding box are part of an object. Using the mask data you can find the outline of the object.
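The sketch below shows one way to consume the first three of these outputs together. It uses a hand-made `mock_result` dictionary with invented boxes and scores in place of a real detection, and plain lists as stand-ins for the NumPy arrays the model returns (they iterate the same way). The helper name `confident_vehicle_boxes` is hypothetical; the class IDs 3, 6 and 8 are the ones the article's script filters on.

```python
# Class IDs kept by the article's script (assumed here to be car, bus, truck).
VEHICLE_CLASS_IDS = {3, 6, 8}


def confident_vehicle_boxes(result, min_score=0.6):
    """Keep the boxes of vehicle detections whose confidence is high enough."""
    kept = []
    for box, class_id, score in zip(
        result["rois"], result["class_ids"], result["scores"]
    ):
        if class_id in VEHICLE_CLASS_IDS and score >= min_score:
            kept.append(tuple(box))
    return kept


# Invented example data, shaped like one entry of the model's output.
mock_result = {
    "rois": [[492, 871, 551, 961], [450, 819, 509, 913], [100, 40, 300, 120]],
    "class_ids": [3, 8, 1],
    "scores": [0.98, 0.55, 0.99],
}
vehicles = confident_vehicle_boxes(mock_result)
```

Here the second detection is dropped for low confidence and the third because its class ID is not a vehicle, so only the first box survives.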

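Below is the Python code that finds the bounding boxes of cars using the pre-trained Mask R-CNN model and OpenCV:

    import numpy as np
    import cv2
    import mrcnn.config
    import mrcnn.utils
    from mrcnn.model import MaskRCNN
    from pathlib import Path


    # Configuration used by the Mask R-CNN library.
    class MaskRCNNConfig(mrcnn.config.Config):
        NAME = "coco_pretrained_model_config"
        IMAGES_PER_GPU = 1
        GPU_COUNT = 1
        NUM_CLASSES = 1 + 80  # COCO has 80 classes + 1 background class.
        DETECTION_MIN_CONFIDENCE = 0.6


    # Filter the detection results, keeping only the boxes of vehicles.
    def get_car_boxes(boxes, class_ids):
        car_boxes = []

        for i, box in enumerate(boxes):
            # Keep the box only if the object is a car (3), truck (8) or bus (6).
            if class_ids[i] in [3, 8, 6]:
                car_boxes.append(box)

        # reshape keeps a (0, 4) array when no vehicles were found.
        return np.array(car_boxes).reshape(-1, 4)


    # Root directory of the project.
    ROOT_DIR = Path(".")

    # Directory for logs and trained models.
    MODEL_DIR = ROOT_DIR / "logs"

    # Local path to the file with the trained weights.
    COCO_MODEL_PATH = ROOT_DIR / "mask_rcnn_coco.h5"

    # Download the COCO weights if needed.
    if not COCO_MODEL_PATH.exists():
        mrcnn.utils.download_trained_weights(str(COCO_MODEL_PATH))

    # Directory of images to run detection on.
    IMAGE_DIR = ROOT_DIR / "images"

    # Video file or camera to process; set this to 0 to use a webcam instead of a file.
    VIDEO_SOURCE = "test_images/parking.mp4"

    # Create a Mask R-CNN model in inference mode.
    model = MaskRCNN(mode="inference", model_dir=str(MODEL_DIR), config=MaskRCNNConfig())

    # Load the pre-trained weights.
    model.load_weights(str(COCO_MODEL_PATH), by_name=True)

    # Locations of the parking spaces.
    parked_car_boxes = None

    # Open the video we want to run detection on.
    video_capture = cv2.VideoCapture(VIDEO_SOURCE)

    # Loop over each frame of video.
    while video_capture.isOpened():
        success, frame = video_capture.read()
        if not success:
            break

        # Convert the image from BGR (used by OpenCV) to RGB.
        rgb_image = frame[:, :, ::-1]

        # Run the image through the Mask R-CNN model.
        results = model.detect([rgb_image], verbose=0)

        # Mask R-CNN assumes we are detecting objects in several images.
        # We passed in only one, so take the first result.
        r = results[0]

        # r now holds the detection results:
        # - r['rois'] is the bounding box of each detected object;
        # - r['class_ids'] is the class ID (type) of each object;
        # - r['scores'] is the confidence score of each detection;
        # - r['masks'] is the mask of each object (which gives its outline).

        # Keep only the vehicles.
        car_boxes = get_car_boxes(r['rois'], r['class_ids'])

        print("Cars found in frame of video:")

        # Draw each box on the frame.
        for box in car_boxes:
            print("Car:", box)

            # Plain ints, since some OpenCV versions may reject NumPy integers here.
            y1, x1, y2, x2 = (int(v) for v in box)

            # Draw the box.
            cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 1)

        # Show the frame on the screen.
        cv2.imshow('Video', frame)

        # Press 'q' to quit.
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Clean up when finished.
    video_capture.release()
    cv2.destroyAllWindows()

After running this script, an image with a box around each detected car will appear on the screen:

The coordinates of each car will also be printed to the console:

    Cars found in frame of video:
    Car: [492 871 551 961]
    Car: [450 819 509 913]
    Car: [411 774 470 856]

So now we know how to detect cars in an image.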

Detecting empty parking spaces

We know the pixel coordinates of each car. By looking at several consecutive frames, we can easily determine which cars did not move and assume that those locations are parking spaces. But how do we tell that a car has left its parking space?

The problem is that the bounding boxes of the cars partially overlap:

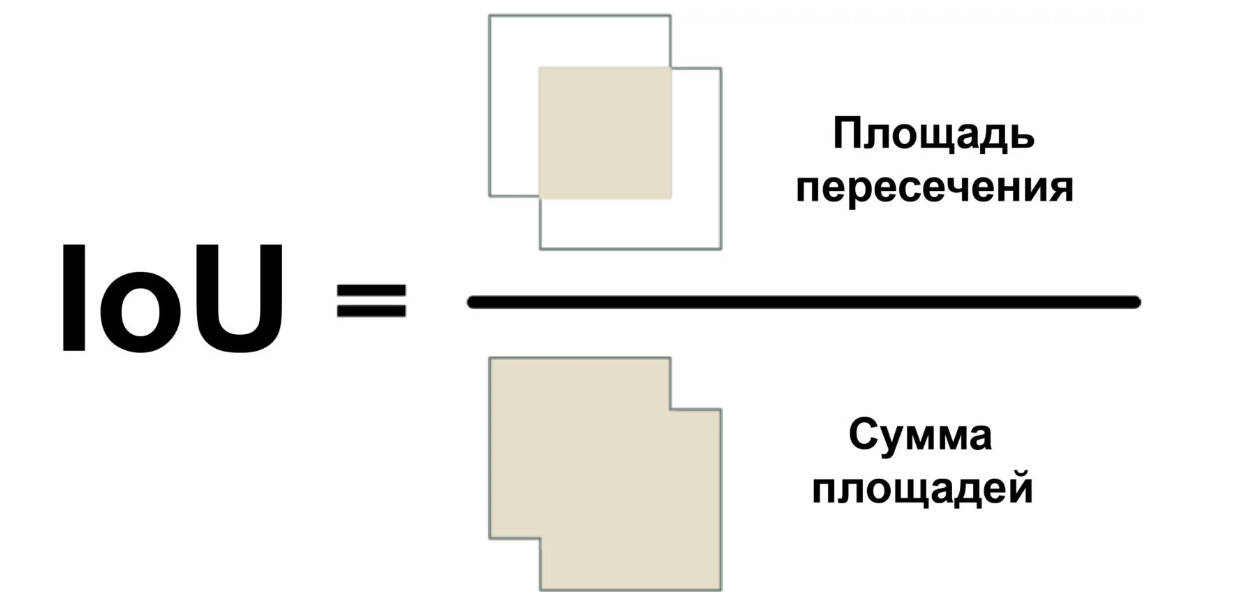

So if we treat each bounding box as a parking space, a space may appear to be partially occupied by a neighboring car when it is in fact empty. We need a way to measure how much two objects overlap, so that we can look for the spaces that are “mostly empty”.

We will use a measure called Intersection over Union, or IoU: the ratio of the area of the intersection to the area of the union. IoU is computed by counting the pixels where two objects overlap and dividing by the number of pixels covered by either object:

This tells us how much the bounding box of a car overlaps with the bounding box of a parking space, which makes it easy to determine whether a space is free. If the IoU is low, like 0.15, the car covers only a small part of the parking space. If it is high, like 0.6, the car takes up most of the space and you cannot park there.
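For two axis-aligned bounding boxes, IoU reduces to a few lines of arithmetic. The function `box_iou` below is an illustrative sketch written for this article, assuming boxes in the (y1, x1, y2, x2) order that the detection results use.

```python
def box_iou(a, b):
    """Intersection over Union of two boxes given as (y1, x1, y2, x2)."""
    # Height and width of the overlapping rectangle (zero if the boxes are disjoint).
    inter_h = max(0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_w = max(0, min(a[3], b[3]) - max(a[1], b[1]))
    intersection = inter_h * inter_w

    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    # Union = both areas minus the part that was counted twice.
    union = area_a + area_b - intersection

    return intersection / union if union > 0 else 0.0


same = box_iou((0, 0, 10, 10), (0, 0, 10, 10))        # identical boxes
shifted = box_iou((0, 0, 10, 10), (0, 5, 10, 15))     # half of each box overlaps
apart = box_iou((0, 0, 10, 10), (20, 20, 30, 30))     # no overlap at all
```

Identical boxes give 1.0, disjoint boxes give 0.0, and two equal boxes that overlap by half give 50 / 150, or about 0.33. Note that the union is not the plain sum of the two areas, because the intersection must be subtracted once.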

Because IoU is so common in computer vision, the library you are using most likely already implements it. In our Mask R-CNN library, it is available as the function mrcnn.utils.compute_overlaps().

If we have a list of bounding boxes for the parking spaces, checking whether cars are inside them takes just one or two extra lines of code:

    # Get the boxes of the cars currently in the frame.
    car_boxes = get_car_boxes(r['rois'], r['class_ids'])

    # Measure how much each parking space overlaps with each car.
    # Passing parking_areas first makes each row correspond to a parking space.
    overlaps = mrcnn.utils.compute_overlaps(parking_areas, car_boxes)

    print(overlaps)

The result should look something like this:

    [
     [1.         0.07040032 0.         0.        ]
     [0.07040032 1.         0.07673165 0.        ]
     [0.         0.         0.02332112 0.        ]
    ]

In this two-dimensional array, each row corresponds to one parking space bounding box, and each column shows how much that space overlaps with one of the detected cars. A value of 1.0 means the space is completely occupied by a car, while a low value like 0.02 means a car touches the space slightly but you can still park there.

To find the free spaces, you only need to check each row of this array. If all the numbers in a row are zero or close to zero, the space is most likely free!
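The row check can be sketched in a few lines. The example reuses the overlap values shown above, written as nested lists (a NumPy array can be iterated row by row in the same way). The helper name `free_space_indices` is hypothetical, and the 0.15 threshold is the one used in the complete script in this article.

```python
# Rows are parking spaces, columns are detected cars (values from the article).
overlaps = [
    [1.0, 0.07040032, 0.0, 0.0],
    [0.07040032, 1.0, 0.07673165, 0.0],
    [0.0, 0.0, 0.02332112, 0.0],
]


def free_space_indices(overlaps, max_iou=0.15):
    """Return the indices of rows whose largest overlap is below `max_iou`."""
    return [
        i
        for i, row in enumerate(overlaps)
        # default=0.0 covers the case where no cars were detected at all.
        if max(row, default=0.0) < max_iou
    ]


free = free_space_indices(overlaps)
```

Only the third space (index 2) qualifies: its largest overlap is about 0.02, while the first two spaces each contain a car with an overlap of 1.0.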

However, keep in mind that object detection does not always work perfectly on live video. Although the Mask R-CNN model is pretty accurate, from time to time it can miss a car or two in a single frame. So before declaring a space free, we should make sure it stays free for the next 5–10 frames of video. This prevents the system from mistakenly marking a space as empty because of a glitch in one frame. As soon as we have confirmed that the space stays free for several frames, we can send a message!
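One simple way to implement this check is a counter that resets whenever a frame shows no free space. The class `FreeSpaceDebouncer` below is a hypothetical helper sketched for illustration; the number of required frames is an adjustable assumption.

```python
class FreeSpaceDebouncer:
    """Report a free space only after it was seen in several frames in a row."""

    def __init__(self, required_frames=10):
        self.required_frames = required_frames
        self.streak = 0  # consecutive frames with a free space so far

    def update(self, free_space_seen):
        """Feed one frame's observation; return True once the streak is long enough."""
        self.streak = self.streak + 1 if free_space_seen else 0
        return self.streak >= self.required_frames


debouncer = FreeSpaceDebouncer(required_frames=3)
observations = [True, True, False, True, True, True, True]
decisions = [debouncer.update(seen) for seen in observations]
```

In this run the single `False` in the third frame resets the count, so the space is only confirmed from the sixth frame onward. A larger `required_frames` trades slower alerts for fewer false alarms.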

Sending an SMS

The last part of our pipeline is sending an SMS notification when a free parking space appears.

Sending a message from Python is very easy if you use Twilio. Twilio is a popular API that lets you send SMS messages from almost any programming language with just a few lines of code. Of course, if you prefer another service, you can use that instead. I have no affiliation with Twilio; it's just the first thing that comes to mind.

To use Twilio, sign up for a trial account, create a Twilio phone number and get your account credentials. Then install the client library:

    $ pip3 install twilio

After that, use the following code to send a message:

    from twilio.rest import Client

    # Twilio account details (placeholders: substitute your own values).
    twilio_account_sid = 'YOUR_TWILIO_ACCOUNT_SID'
    twilio_auth_token = 'YOUR_TWILIO_AUTH_TOKEN'
    twilio_source_phone_number = 'YOUR_TWILIO_PHONE_NUMBER'

    # Create a Twilio client object.
    client = Client(twilio_account_sid, twilio_auth_token)

    # Send an SMS.
    message = client.messages.create(
        body="YOUR_MESSAGE_TEXT",
        from_=twilio_source_phone_number,
        to="DESTINATION_PHONE_NUMBER"
    )

To add message sending to our script, just copy this code into it. However, we need to make sure that a message is not sent on every frame in which a free space is visible. So we will keep a flag that, once set, stops further messages for some time or until another space becomes free.
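The "for some time" variant of that flag could be sketched as a small throttle around whatever function actually sends the message. The class `AlertThrottle`, its `send` callable and the 300-second cooldown are all assumptions made for this example; in the real script, `send` would wrap the Twilio call.

```python
import time


class AlertThrottle:
    """Forward a message to `send` at most once per cooldown period."""

    def __init__(self, send, cooldown_seconds=300.0, clock=time.monotonic):
        self.send = send                      # hypothetical callable taking the text
        self.cooldown_seconds = cooldown_seconds
        self.clock = clock                    # injectable so it can be tested
        self.last_sent = None                 # time of the last message, if any

    def maybe_send(self, text):
        """Send `text` unless a message went out recently; return True if sent."""
        now = self.clock()
        if self.last_sent is not None and now - self.last_sent < self.cooldown_seconds:
            return False
        self.send(text)
        self.last_sent = now
        return True


# Usage with a stand-in sender that just collects the messages.
outbox = []
throttle = AlertThrottle(outbox.append, cooldown_seconds=300.0)
throttle.maybe_send("Parking space open")
throttle.maybe_send("Parking space open")  # suppressed: still inside the cooldown
```

Calling `maybe_send` on every frame is then safe: only the first call in each cooldown window reaches the SMS service.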

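Putting it all together

    import numpy as np
    import cv2
    import mrcnn.config
    import mrcnn.utils
    from mrcnn.model import MaskRCNN
    from pathlib import Path
    from twilio.rest import Client


    # Configuration used by the Mask R-CNN library.
    class MaskRCNNConfig(mrcnn.config.Config):
        NAME = "coco_pretrained_model_config"
        IMAGES_PER_GPU = 1
        GPU_COUNT = 1
        NUM_CLASSES = 1 + 80  # COCO has 80 classes + 1 background class.
        DETECTION_MIN_CONFIDENCE = 0.6


    # Filter the detection results, keeping only the boxes of vehicles.
    def get_car_boxes(boxes, class_ids):
        car_boxes = []

        for i, box in enumerate(boxes):
            # Keep the box only if the object is a car (3), truck (8) or bus (6).
            if class_ids[i] in [3, 8, 6]:
                car_boxes.append(box)

        # reshape keeps a (0, 4) array when no vehicles were found,
        # which compute_overlaps needs in order to index the columns.
        return np.array(car_boxes).reshape(-1, 4)


    # Twilio settings (placeholders: substitute your own values).
    twilio_account_sid = 'YOUR_TWILIO_ACCOUNT_SID'
    twilio_auth_token = 'YOUR_TWILIO_AUTH_TOKEN'
    twilio_phone_number = 'YOUR_TWILIO_PHONE_NUMBER'
    destination_phone_number = 'DESTINATION_PHONE_NUMBER'
    client = Client(twilio_account_sid, twilio_auth_token)

    # Root directory of the project.
    ROOT_DIR = Path(".")

    # Directory for logs and trained models.
    MODEL_DIR = ROOT_DIR / "logs"

    # Local path to the file with the trained weights.
    COCO_MODEL_PATH = ROOT_DIR / "mask_rcnn_coco.h5"

    # Download the COCO weights if needed.
    if not COCO_MODEL_PATH.exists():
        mrcnn.utils.download_trained_weights(str(COCO_MODEL_PATH))

    # Directory of images to run detection on.
    IMAGE_DIR = ROOT_DIR / "images"

    # Video file or camera to process; set this to 0 to use a webcam instead of a file.
    VIDEO_SOURCE = "test_images/parking.mp4"

    # Create a Mask R-CNN model in inference mode.
    model = MaskRCNN(mode="inference", model_dir=str(MODEL_DIR), config=MaskRCNNConfig())

    # Load the pre-trained weights.
    model.load_weights(str(COCO_MODEL_PATH), by_name=True)

    # Locations of the parking spaces.
    parked_car_boxes = None

    # Open the video we want to run detection on.
    video_capture = cv2.VideoCapture(VIDEO_SOURCE)

    # How many frames in a row we have seen a free parking space.
    free_space_frames = 0

    # Have we already sent an SMS?
    sms_sent = False

    # Loop over each frame of video.
    while video_capture.isOpened():
        success, frame = video_capture.read()
        if not success:
            break

        # Convert the image from BGR (used by OpenCV) to RGB.
        rgb_image = frame[:, :, ::-1]

        # Run the image through the Mask R-CNN model.
        results = model.detect([rgb_image], verbose=0)

        # Mask R-CNN assumes we are detecting objects in several images.
        # We passed in only one, so take the first result.
        r = results[0]

        # r now holds the detection results:
        # - r['rois'] is the bounding box of each detected object;
        # - r['class_ids'] is the class ID (type) of each object;
        # - r['scores'] is the confidence score of each detection;
        # - r['masks'] is the mask of each object (which gives its outline).

        if parked_car_boxes is None:
            # This is the first frame: assume every detected car is in a parking space.
            # Save the location of each car as a parking space and go to the next frame.
            parked_car_boxes = get_car_boxes(r['rois'], r['class_ids'])
        else:
            # We already know where the spaces are. Check whether any of them is free.

            # Find where the cars are in the current frame.
            car_boxes = get_car_boxes(r['rois'], r['class_ids'])

            # See how much those cars overlap with the known parking spaces.
            overlaps = mrcnn.utils.compute_overlaps(parked_car_boxes, car_boxes)

            # Assume there are no free spaces until we find one.
            free_space = False

            # Loop over each known parking space.
            for parking_area, overlap_areas in zip(parked_car_boxes, overlaps):
                # Find the largest overlap of this space with any detected car
                # (it does not matter which car). No cars at all means no overlap.
                max_IoU_overlap = np.max(overlap_areas) if overlap_areas.size else 0.0

                # Top-left and bottom-right coordinates of the parking space.
                # Plain ints, since some OpenCV versions may reject NumPy integers.
                y1, x1, y2, x2 = (int(v) for v in parking_area)

                # Check whether the space is free by comparing the IoU with a threshold.
                if max_IoU_overlap < 0.15:
                    # The space is free! Draw a green box around it.
                    cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 255, 0), 3)
                    # Note that we have found at least one free space.
                    free_space = True
                else:
                    # The space is still occupied: draw a red box.
                    cv2.rectangle(frame, (x1, y1), (x2, y2), (0, 0, 255), 1)

                # Write the IoU value inside the box.
                font = cv2.FONT_HERSHEY_DUPLEX
                cv2.putText(frame, f"{max_IoU_overlap:0.2f}", (x1 + 6, y2 - 6), font, 0.3, (255, 255, 255))

            # If at least one space was free, start counting frames.
            # This makes sure the space is really free
            # before we send a notification.
            if free_space:
                free_space_frames += 1
            else:
                # All spaces are occupied, so reset the counter.
                free_space_frames = 0

            # If a space has been free for several frames, we can say it is really free.
            if free_space_frames > 10:
                # Write SPACE AVAILABLE! at the top of the screen.
                font = cv2.FONT_HERSHEY_DUPLEX
                cv2.putText(frame, "SPACE AVAILABLE!", (10, 150), font, 3.0, (0, 255, 0), 2, cv2.LINE_AA)

                # Send the message if we have not done so already.
                if not sms_sent:
                    print("SENDING SMS!!!")
                    message = client.messages.create(
                        body="Parking space open - go go go!",
                        from_=twilio_phone_number,
                        to=destination_phone_number
                    )
                    sms_sent = True

        # Show the frame on the screen.
        cv2.imshow('Video', frame)

        # Press 'q' to quit.
        if cv2.waitKey(1) & 0xFF == ord('q'):
            break

    # Clean up when finished.
    video_capture.release()
    cv2.destroyAllWindows()

To run this code, you first need to install Python 3.6+, Matterport Mask R-CNN and OpenCV.

I deliberately kept the code as simple as possible. For example, it simply assumes that every car it sees in the first frame is parked. Try experimenting with it and see if you can improve its reliability.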

By simply changing the class IDs that the model looks for, you can turn this code into something completely different. For example, imagine that you work at a ski resort. With a couple of changes, you can turn this script into a system that automatically detects snowboarders jumping off a ramp and records videos of the great jumps. Or, if you work at a wildlife reserve, you can build a system that counts zebras. You are limited only by your imagination.
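As a sketch of how little needs to change, the filter below takes the wanted class IDs as a parameter instead of hard-coding them. The name list is the beginning of the COCO class list as I recall it from the Matterport demo notebook, so treat the exact indices as an assumption and check them against the library before relying on them. The helper names `class_ids_for` and `boxes_for_classes` are hypothetical.

```python
# First 25 COCO class names (index = class ID); assumed, verify before use.
COCO_CLASS_NAMES = [
    "BG", "person", "bicycle", "car", "motorcycle", "airplane", "bus",
    "train", "truck", "boat", "traffic light", "fire hydrant", "stop sign",
    "parking meter", "bench", "bird", "cat", "dog", "horse", "sheep", "cow",
    "elephant", "bear", "zebra", "giraffe",
]


def class_ids_for(names):
    """Translate class names into the integer IDs the model reports."""
    return {COCO_CLASS_NAMES.index(name) for name in names}


def boxes_for_classes(boxes, class_ids, wanted_ids):
    """Keep only the boxes whose class ID is in `wanted_ids`."""
    return [tuple(box) for box, cid in zip(boxes, class_ids) if cid in wanted_ids]


vehicle_ids = class_ids_for(["car", "bus", "truck"])
zebra_ids = class_ids_for(["zebra"])
zebras = boxes_for_classes([[0, 0, 5, 5], [1, 1, 2, 2]], [23, 3], zebra_ids)
```

With this list, the vehicle names map to the same three IDs that the parking script filters on, and switching to zebras is a one-line change.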


That's all. Go experiment!

Source: https://habr.com/ru/post/451164/
