Seamless migration of RabbitMQ to Kubernetes

RabbitMQ is a message broker written in Erlang that lets you build a failover cluster with full data replication across several nodes, where each node can serve read and write requests. We run many Kubernetes clusters in production and maintain a large number of RabbitMQ installations, so we have repeatedly faced the need to migrate data from one cluster to another without downtime.

We needed this operation in at least two cases:


- Transferring data from a RabbitMQ cluster that is not in Kubernetes to a new, already “kubernetized” cluster (i.e., one running in K8s pods).

- Migrating RabbitMQ within Kubernetes from one namespace to another (for example, if environments are separated by namespaces, moving the infrastructure from one environment to another).

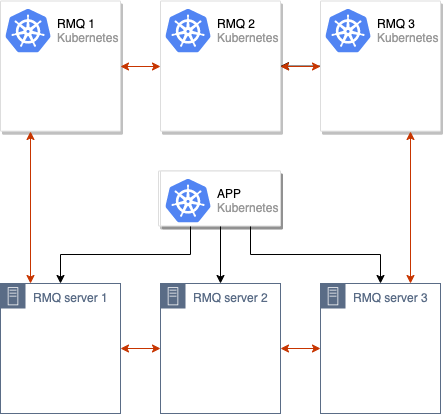

The recipe proposed in this article is aimed at (but by no means limited to) situations in which there is an old RabbitMQ cluster (for example, of 3 nodes) located either in K8s or on some old servers. An application hosted in Kubernetes (now or in the future) works with it, and we are faced with the task of migrating it to a new production installation in Kubernetes.

First we describe the general approach to the migration, and then the technical details of its implementation.

Migration Algorithm

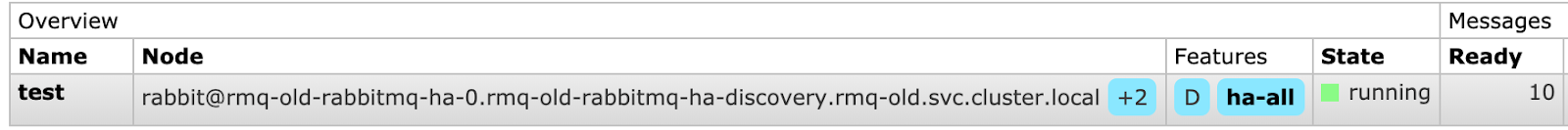

The first, preliminary step before doing anything else is to check that the old RabbitMQ installation has high availability (HA) mode enabled. The reason is obvious: we do not want to lose any data. To check, go to the RabbitMQ admin panel and, on the Admin → Policies tab, make sure that ha-mode: all is set.
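The same check can also be done from the command line. The following is a minimal sketch, not part of the original procedure: the helper name `has_ha_all` is made up for illustration, and it assumes that `rabbitmqctl list_policies` prints each policy definition as JSON (as RabbitMQ 3.x does).

```shell
# Reads `rabbitmqctl list_policies` output on stdin and succeeds if any
# policy definition sets "ha-mode" to "all".
# Assumption: the definition column is printed as JSON.
has_ha_all() {
  grep -Eq '"ha-mode"[[:space:]]*:[[:space:]]*"all"'
}

# Hypothetical usage on a node of the old cluster:
#   rabbitmqctl list_policies -p / | has_ha_all && echo "HA enabled"
```

Note that this only confirms that such a policy exists; whether its pattern actually matches your queues still has to be checked separately.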

The next step is to bring up a new RabbitMQ cluster in Kubernetes pods (in our case it consists of 3 nodes, but the number may be different).

After that, we merge the old and new RabbitMQ clusters, getting a single cluster (of 6 nodes):

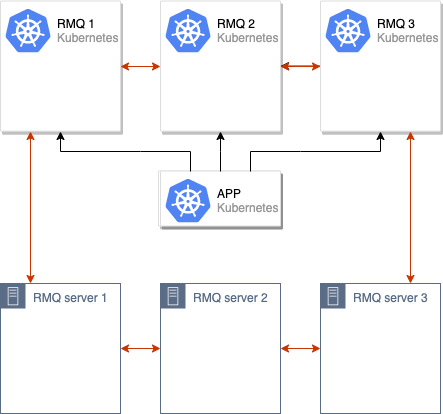

Data synchronization between the old and new RabbitMQ nodes then begins. Once all data is synchronized between all nodes in the cluster, we can switch the application to the new cluster.

After these operations, it is enough to remove the old nodes from the RabbitMQ cluster, and the move can be considered complete.

We have repeatedly used this scheme in production. However, for our own convenience, we implemented it as part of a specialized system that rolls out standard RMQ configurations across sets of Kubernetes clusters (for the curious: this is the addon-operator, which we wrote about recently). Below are standalone instructions that anyone can apply to their own installation to try the proposed solution in action.

Trying it in practice

Requirements

The requirements are simple:

- A Kubernetes cluster (minikube will do);

- A RabbitMQ cluster (it can be deployed on bare metal or as a regular cluster in Kubernetes from the official Helm chart).

For the example below, I deployed RMQ in Kubernetes and named it rmq-old.

Preparing the test bench

1. Download the Helm chart and edit it a bit:

    helm fetch --untar stable/rabbitmq-ha

For convenience, we set a password and an ErlangCookie, and create an ha-all policy so that by default queues are synchronized between all nodes of the RMQ cluster:

    rabbitmqPassword: guest
    rabbitmqErlangCookie: mae9joopaol7aiVu3eechei2waiGa2we
    definitions:
      policies: |-
        {
          "name": "ha-all",
          "pattern": ".*",
          "vhost": "/",
          "definition": {
            "ha-mode": "all",
            "ha-sync-mode": "automatic",
            "ha-sync-batch-size": 81920
          }
        }

2. Install the chart:

    helm install . --name rmq-old --namespace rmq-old

3. Go to the RabbitMQ admin panel, create a new queue, and add several messages. We will need them after the migration to make sure that all data was preserved and nothing was lost.
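If you would rather seed the test data from a shell than from the admin panel, something like the following could work. This is only a sketch under assumptions: it presumes the `rabbitmqadmin` tool from the management plugin is available and can reach the broker with its default credentials, and the queue name `test-queue` and the helper `print_seed_commands` are invented for illustration. The function only prints the commands so that they can be reviewed first.

```shell
# Prints (does not run) rabbitmqadmin commands that declare a durable queue
# and publish N numbered messages to it through the default exchange.
# Assumptions: queue name "test-queue" and 10 messages are illustrative defaults.
print_seed_commands() {
  queue="${1:-test-queue}"
  count="${2:-10}"
  printf 'rabbitmqadmin declare queue name=%s durable=true\n' "$queue"
  i=1
  while [ "$i" -le "$count" ]; do
    printf 'rabbitmqadmin publish exchange=amq.default routing_key=%s payload="message %s"\n' "$queue" "$i"
    i=$((i + 1))
  done
}

# Hypothetical usage: review the output, then pipe it to sh.
#   print_seed_commands test-queue 10 | sh
```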

The test bench is ready: we have an “old” RabbitMQ with data that needs to be transferred.

RabbitMQ Cluster Migration

1. First, let's deploy a new RabbitMQ in a different namespace with the same ErlangCookie and user password. To do this, we repeat the steps described above, changing the final RMQ installation command to the following:

    helm install . --name rmq-new --namespace rmq-new

2. Now we need to merge the new cluster with the old one. To do this, go into each pod of the new RabbitMQ and run:

    export OLD_RMQ=rabbit@rmq-old-rabbitmq-ha-0.rmq-old-rabbitmq-ha-discovery.rmq-old.svc.cluster.local && \
      rabbitmqctl stop_app && \
      rabbitmqctl join_cluster $OLD_RMQ && \
      rabbitmqctl start_app

The OLD_RMQ variable holds the address of one of the nodes of the old RMQ cluster. These commands stop the current node of the new RMQ cluster, join it to the old cluster, and start it again.
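Instead of entering each pod by hand, the join can be driven with `kubectl exec`. The sketch below only prints the commands, one per pod, so that they can be inspected before being run. It assumes that the new pods are named `rmq-new-rabbitmq-ha-<n>` (by analogy with the old node name used in this example) and that there are 3 replicas; the helper name `print_join_commands` is invented for illustration.

```shell
# Address of one node of the old cluster, as used in this example.
OLD_RMQ="rabbit@rmq-old-rabbitmq-ha-0.rmq-old-rabbitmq-ha-discovery.rmq-old.svc.cluster.local"

# Prints (does not run) one `kubectl exec` command per pod of the new cluster.
# Assumption: pods are named rmq-new-rabbitmq-ha-<n>, 3 replicas by default.
print_join_commands() {
  replicas="${1:-3}"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf 'kubectl -n rmq-new exec rmq-new-rabbitmq-ha-%s -- sh -c "rabbitmqctl stop_app && rabbitmqctl join_cluster %s && rabbitmqctl start_app"\n' "$i" "$OLD_RMQ"
    i=$((i + 1))
  done
}

# Hypothetical usage: review the output, then pipe it to sh.
#   print_join_commands 3 | sh
```

The commands are emitted in order, so piping them to `sh` joins the nodes one at a time rather than all at once.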

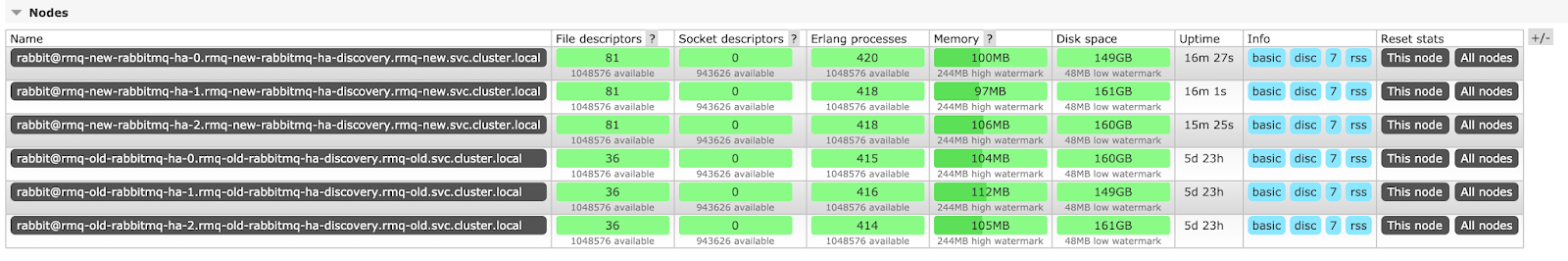

3. The 6-node RMQ cluster is ready:

You must wait until the messages are synchronized between all nodes. Unsurprisingly, the synchronization time depends on the capacity of the hardware the cluster runs on and on the number of messages. In the described scenario there are only 10 messages, so the data was synchronized instantly, but with a sufficiently large number of messages synchronization can take hours.
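Besides the admin panel, synchronization can be checked from the command line by comparing, for each queue, the list of mirrors with the list of synchronized mirrors. The helper below is a rough sketch rather than a robust parser: the name `unsynced_queues` is invented, and it assumes tab-separated `rabbitmqctl list_queues` output in which every pid in the two list columns contains exactly one `@` (from the node name).

```shell
# Reads tab-separated `name slave_pids synchronised_slave_pids` rows on stdin
# and prints the names of queues whose mirrors are not all synchronised.
# Assumption: each pid contains exactly one "@", so counting "@" counts mirrors.
unsynced_queues() {
  awk -F '\t' '{
    mirrors = gsub(/@/, "@", $2)   # number of mirrors of this queue
    synced  = gsub(/@/, "@", $3)   # number of mirrors that are in sync
    if (mirrors != synced) print $1
  }'
}

# Hypothetical usage on any cluster node:
#   rabbitmqctl -q list_queues name slave_pids synchronised_slave_pids | unsynced_queues
```

Empty output means that every queue reports as many synchronized mirrors as it has mirrors.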

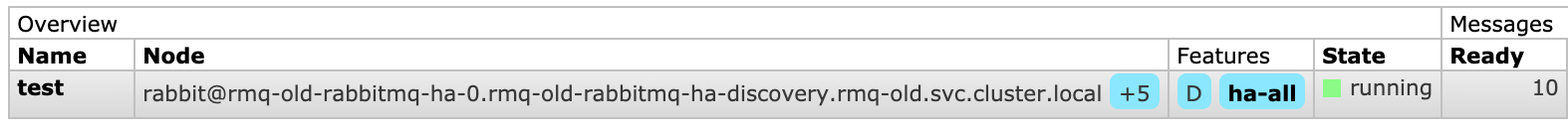

So, the synchronization status:

Here, +5 means that the messages are already on 5 other nodes (in addition to the one shown in the Node field). Thus, the synchronization was successful.

4. All that remains is to switch the RMQ address in the application to the new cluster (the specific steps depend on your technology stack and other specifics of the application), after which you can say goodbye to the old cluster.

For the last operation (i.e., after switching the application to the new cluster), go to each node of the old cluster and run:

    rabbitmqctl stop_app
    rabbitmqctl reset

The cluster has now “forgotten” about the old nodes: you can delete the old RMQ, and with that the move is complete.
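For an old cluster that itself runs in Kubernetes, as rmq-old does in this example, the reset can be scripted. The sketch below only prints the commands; it assumes that the old pods are named `rmq-old-rabbitmq-ha-<n>` and that there are 3 of them, and the helper name `print_reset_commands` is invented for illustration.

```shell
# Prints (does not run) the commands that detach each old node from the cluster.
# Assumptions: namespace rmq-old, pods rmq-old-rabbitmq-ha-<n>, 3 replicas by default.
print_reset_commands() {
  replicas="${1:-3}"
  i=0
  while [ "$i" -lt "$replicas" ]; do
    printf 'kubectl -n rmq-old exec rmq-old-rabbitmq-ha-%s -- sh -c "rabbitmqctl stop_app && rabbitmqctl reset"\n' "$i"
    i=$((i + 1))
  done
}

# Hypothetical usage: review the output, then pipe it to sh.
#   print_reset_commands 3 | sh
```

Afterwards, running `rabbitmqctl cluster_status` on one of the new nodes should list only the new nodes.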

Note: If you use RMQ with certificates, nothing changes fundamentally: the migration is carried out in the same way.

Conclusions

The described scheme is suitable for almost all cases when we need to migrate RabbitMQ or simply move to a new cluster.

In our case, difficulties arose only once, when RMQ was accessed from many places and we had no way to change the RMQ address to the new one everywhere. We then launched the new RMQ in the same namespace with the same labels, so that it would be picked up by the existing Services and Ingress, and manipulated the labels by hand when the pods started: we removed them at first so that no requests would reach the empty RMQ, and added them back once the messages had been synchronized.
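The label manipulation described above could look roughly like this. It is a sketch only: the label key and value (`app=rabbitmq`) and the pod name in the usage lines are hypothetical, since the text does not say which labels the Services select on, and the helper names are invented. The functions print the `kubectl label` commands rather than running them.

```shell
# Prints the command that removes a label from a pod, taking it out of any
# Service whose selector requires that label.
# Arguments: namespace, pod name, label key.
print_unlabel() {
  printf 'kubectl -n %s label pod %s %s-\n' "$1" "$2" "$3"
}

# Prints the command that puts the label back once messages are synchronized.
# Arguments: namespace, pod name, label key, label value.
print_relabel() {
  printf 'kubectl -n %s label pod %s %s=%s\n' "$1" "$2" "$3" "$4"
}

# Hypothetical usage (label and pod name are assumptions):
#   print_unlabel rmq-old rmq-new-rabbitmq-ha-0 app | sh
#   print_relabel rmq-old rmq-new-rabbitmq-ha-0 app rabbitmq | sh
```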

We used the same strategy when upgrading RabbitMQ to a new version with a modified configuration, and everything worked like clockwork.

P.S.

As a logical continuation of this material, we are preparing articles about MongoDB (migration from a bare-metal server to Kubernetes) and MySQL (one of the ways to run this DBMS inside Kubernetes). They will be published in the coming months.


Source: https://habr.com/ru/post/450662/
