Terraformer - Infrastructure To Code

I would like to tell you about a new CLI tool that I wrote to solve an old problem.

Problem

Terraform has long been a standard in the DevOps / Cloud / IT community. It is a convenient and useful way to manage infrastructure as code. Terraform has many charms, as well as plenty of pitfalls: forks, sharp knives, and rakes to step on.

With Terraform it is very convenient to create new things and then manage, change, or delete them. But what about those who have a huge infrastructure in the cloud that was not created through Terraform? Rewriting and re-creating the entire cloud is expensive and unsafe.

I ran into this problem at two jobs. The simplest example: you want everything described in Terraform files, but you have 250+ buckets, and writing all of that for Terraform by hand is a lot of work.

There has been an issue about this in the Terraform tracker since 2014. It was closed in 2016 in the hope that `terraform import` would cover it.

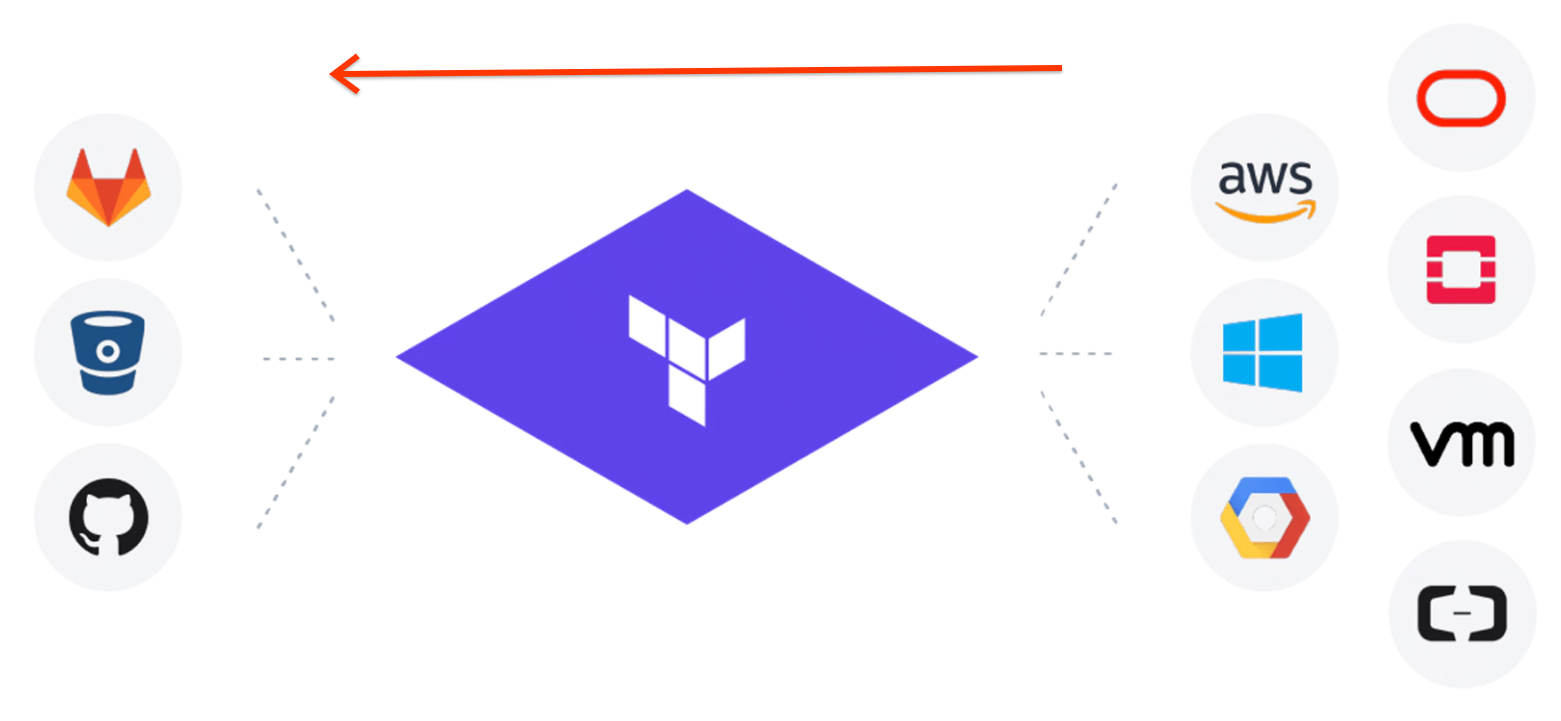

In general, everything is as in the picture, only from right to left.


Warning: the author has spent half their life outside Russia and writes little in Russian, so beware of spelling errors.

Solutions

1. There is an existing, older solution for AWS: terraforming. When I tried to run my 250+ buckets through it, I realized that things were bad there. AWS has added many new options that terraforming does not know about, and its Ruby templates look poor in general. After two evenings I sent a pull request to add more features, and realized that this approach does not work at all.

How terraforming works: it takes data from the AWS SDK and generates tf and tfstate through templates.

There are 3 problems here:

1. There will always be a lag in updates.

2. The tf files sometimes come out broken.

3. tfstate is generated separately from tf, and the two do not always converge.

In general, it is difficult to get a result in which `terraform plan` will say that there are no changes.

2. `terraform import`, a command built into Terraform. How does it work?

You write an empty tf file with the name and type of the resource, then run `terraform import` and pass it the resource ID. Terraform calls the provider, receives the data, and writes a tfstate file.

There are 3 problems here:

1. You get only the tfstate file. The tf file stays empty and must be written by hand or converted from tfstate.

2. It works with only one resource at a time and does not support all resources. So what do I do, again, with 250+ buckets?

3. You need to know the resource ID, which means wrapping the command in code that fetches the list of resources.

In general, the result is partial and does not scale well.
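To make the scaling problem concrete, here is a minimal Go sketch of the wrapper code that problem 3 calls for. It assumes the bucket names have already been fetched from the cloud API, and it produces the empty `aws_s3_bucket` stubs plus one `terraform import` command per bucket. The helpers `resourceName` and `importPlan` are hypothetical names made up for this illustration.

```go
package main

import (
	"fmt"
	"regexp"
	"strings"
)

// invalidChars matches every character this sketch does not allow
// in a generated resource name.
var invalidChars = regexp.MustCompile(`[^a-zA-Z0-9_]`)

// resourceName turns a cloud ID (for example a bucket name) into a name
// that is safe to use in a tf file. Hypothetical helper for illustration.
func resourceName(id string) string {
	name := invalidChars.ReplaceAllString(id, "_")
	if name == "" || (name[0] >= '0' && name[0] <= '9') {
		name = "_" + name // names should not start with a digit
	}
	return name
}

// importPlan returns the empty resource stubs for a tf file and the
// matching `terraform import` commands, one per bucket.
func importPlan(buckets []string) (stubs string, commands []string) {
	var b strings.Builder
	for _, id := range buckets {
		name := resourceName(id)
		fmt.Fprintf(&b, "resource \"aws_s3_bucket\" %q {}\n", name)
		commands = append(commands,
			fmt.Sprintf("terraform import aws_s3_bucket.%s %s", name, id))
	}
	return b.String(), commands
}

func main() {
	// In practice this list would come from the cloud API; it is hard-coded here.
	stubs, commands := importPlan([]string{"my-logs", "assets.example.com"})
	fmt.Print(stubs)
	for _, c := range commands {
		fmt.Println(c)
	}
}
```

Even with such a wrapper, each command imports a single resource, and the tf stubs remain empty afterwards. Two IDs that differ only in punctuation would also collide after sanitizing, which a real tool would have to handle.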

My solution

Requirements:

1. The ability to create tf and tfstate files for existing resources. For example, download all buckets / security groups / load balancers so that `terraform plan` reports no changes.

2. Support for two clouds: GCP and AWS.

3. A general solution that is easy to update and does not cost three days of work per resource.

4. Make it open source, because everyone has this problem.

The language is Go, because I love it, and because it has the library for creating HCL files that Terraform itself uses, plus a lot of code inside Terraform that can be reused.

The path

Attempt one

I started with the simple option: call the cloud through the SDK for the desired resource and convert the result into Terraform fields. The attempt died immediately on security groups, because I did not enjoy spending 1.5 days converting just the security group (and there are many resources). It takes a long time, and afterwards fields can still change or be added.

Attempt two

This one is based on the idea described here. Simply take tfstate and convert it to tf. All the data is there and the fields are the same. But how do you get a full tfstate for a lot of resources? This is where the `terraform refresh` command came to the rescue. Terraform takes all the resources in tfstate, pulls their data by ID, and writes everything back to tfstate. That is, you create an empty tfstate containing only names and IDs, run `terraform refresh`, and get a full tfstate. Hooray!
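As a rough illustration of the "empty tfstate with only names and IDs" step, the Go sketch below builds such a skeleton and prints it as JSON. The field layout is an assumption based on the version 3 state format used by Terraform releases of that period, reduced to a handful of fields. It is not taken from Terraformer, and a real Terraform version may require more fields. The function `skeletonState` and the type names are hypothetical.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// The types below mirror only the parts of the state file this sketch needs.
// The layout is an assumption; verify it against your Terraform version.
type instance struct {
	ID         string            `json:"id"`
	Attributes map[string]string `json:"attributes"`
}

type resource struct {
	Type     string   `json:"type"`
	Primary  instance `json:"primary"`
	Provider string   `json:"provider"`
}

type module struct {
	Path      []string            `json:"path"`
	Resources map[string]resource `json:"resources"`
}

type state struct {
	Version int      `json:"version"`
	Serial  int      `json:"serial"`
	Modules []module `json:"modules"`
}

// skeletonState builds a state that knows only the type, name and ID of each
// resource. ids maps a Terraform resource name to the cloud ID.
func skeletonState(resourceType, provider string, ids map[string]string) state {
	resources := make(map[string]resource, len(ids))
	for name, id := range ids {
		resources[resourceType+"."+name] = resource{
			Type:     resourceType,
			Primary:  instance{ID: id, Attributes: map[string]string{"id": id}},
			Provider: provider,
		}
	}
	return state{
		Version: 3,
		Serial:  1,
		Modules: []module{{Path: []string{"root"}, Resources: resources}},
	}
}

func main() {
	s := skeletonState("aws_s3_bucket", "provider.aws",
		map[string]string{"my_logs": "my-logs"})
	out, err := json.MarshalIndent(s, "", "  ")
	if err != nil {
		panic(err)
	}
	// The idea: save this as terraform.tfstate, then run `terraform refresh`.
	fmt.Println(string(out))
}
```

The point of the design is that the skeleton carries no cloud-specific knowledge beyond the resource type and ID. Everything else is filled in by the provider during the refresh.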

Now let's write the converter from tfstate to tf.

Here is the important part of a tfstate resource, its attributes:

    "attributes": {
        "id": "default/backend-logging-load-deployment",
        "metadata.#": "1",
        "metadata.0.annotations.%": "0",
        "metadata.0.generate_name": "",
        "metadata.0.generation": "24",
        "metadata.0.labels.%": "1",
        "metadata.0.labels.app": "backend-logging",
        "metadata.0.name": "backend-logging-load-deployment",
        "metadata.0.namespace": "default",
        "metadata.0.resource_version": "109317427",
        "metadata.0.self_link": "/apis/apps/v1/namespaces/default/deployments/backend-logging-load-deployment",
        "metadata.0.uid": "300ecda1-4138-11e9-9d5d-42010a8400b5",
        "spec.#": "1",
        "spec.0.min_ready_seconds": "0",
        "spec.0.paused": "false",
        "spec.0.progress_deadline_seconds": "600",
        "spec.0.replicas": "1",
        "spec.0.revision_history_limit": "10",
        "spec.0.selector.#": "1",

Here we have:

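1. id: a string.

2. metadata: an array of size 1 (the `.#` key holds the element count) containing an object with the fields described below.

3. spec: also a collection of size 1 holding key/value pairs. Real hashes are marked with `.%` instead of `.#`, as in `metadata.0.labels.%`.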

In short, it is a fun format, and everything can nest several levels deep:

    "spec.#": "1",
    "spec.0.min_ready_seconds": "0",
    "spec.0.paused": "false",
    "spec.0.progress_deadline_seconds": "600",
    "spec.0.replicas": "1",
    "spec.0.revision_history_limit": "10",
    "spec.0.selector.#": "1",
    "spec.0.selector.0.match_expressions.#": "0",
    "spec.0.selector.0.match_labels.%": "1",
    "spec.0.selector.0.match_labels.app": "backend-logging-load",
    "spec.0.strategy.#": "0",
    "spec.0.template.#": "1",
    "spec.0.template.0.metadata.#": "1",
    "spec.0.template.0.metadata.0.annotations.%": "0",
    "spec.0.template.0.metadata.0.generate_name": "",
    "spec.0.template.0.metadata.0.generation": "0",
    "spec.0.template.0.metadata.0.labels.%": "1",
    "spec.0.template.0.metadata.0.labels.app": "backend-logging-load",
    "spec.0.template.0.metadata.0.name": "",
    "spec.0.template.0.metadata.0.namespace": "",
    "spec.0.template.0.metadata.0.resource_version": "",
    "spec.0.template.0.metadata.0.self_link": "",
    "spec.0.template.0.metadata.0.uid": "",
    "spec.0.template.0.spec.#": "1",
    "spec.0.template.0.spec.0.active_deadline_seconds": "0",
    "spec.0.template.0.spec.0.container.#": "1",
    "spec.0.template.0.spec.0.container.0.args.#": "3",

In general, if you want a programming problem for an interview, just ask the candidate to write a parser for this thing :)

After long attempts to write a bug-free parser, I found the most important part of one in Terraform's own code. After that, everything seemed to work fine.
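For readers who want to see what such a parser involves, here is a minimal Go sketch that expands the flattened attributes back into nested lists, maps and objects. It is not the code from Terraform or Terraformer. The names `expand` and `expandObject` are made up for this illustration, and the sketch assumes list indices run from 0 to count-1, so sets whose elements are keyed by hashes are not handled.

```go
package main

import (
	"encoding/json"
	"fmt"
	"strconv"
	"strings"
)

// expand rebuilds the value stored under key in a flattened attribute map.
func expand(attrs map[string]string, key string) interface{} {
	// "key.#" holds the element count of a list.
	if n, ok := attrs[key+".#"]; ok {
		count, err := strconv.Atoi(n)
		if err != nil || count < 0 {
			count = 0 // a malformed count is treated as an empty list
		}
		list := make([]interface{}, count)
		for i := range list {
			list[i] = expand(attrs, key+"."+strconv.Itoa(i))
		}
		return list
	}
	// "key.%" marks a map of strings; the rest of each key is the map key.
	if _, ok := attrs[key+".%"]; ok {
		m := map[string]interface{}{}
		prefix := key + "."
		for k, v := range attrs {
			if strings.HasPrefix(k, prefix) && k != key+".%" {
				m[k[len(prefix):]] = v
			}
		}
		return m
	}
	// A plain key is a scalar, always stored as a string.
	if v, ok := attrs[key]; ok {
		return v
	}
	// Otherwise it is a nested object, such as a list element "metadata.0".
	return expandObject(attrs, key+".")
}

// expandObject groups all keys under prefix by their first path segment.
// Call it with an empty prefix to expand the whole attribute map.
func expandObject(attrs map[string]string, prefix string) map[string]interface{} {
	obj := map[string]interface{}{}
	for k := range attrs {
		if !strings.HasPrefix(k, prefix) {
			continue
		}
		name := strings.SplitN(k[len(prefix):], ".", 2)[0]
		if _, done := obj[name]; !done {
			obj[name] = expand(attrs, prefix+name)
		}
	}
	return obj
}

func main() {
	attrs := map[string]string{
		"id":                       "default/backend-logging-load-deployment",
		"metadata.#":               "1",
		"metadata.0.annotations.%": "0",
		"metadata.0.labels.%":      "1",
		"metadata.0.labels.app":    "backend-logging",
		"metadata.0.name":          "backend-logging-load-deployment",
	}
	out, err := json.MarshalIndent(expandObject(attrs, ""), "", "  ")
	if err != nil {
		panic(err)
	}
	fmt.Println(string(out))
}
```

The markers are checked before the plain key because a list or map never has a value stored under its own name. Everything stays a string here; turning "24" or "false" into typed values would need the resource schema.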

Attempt three

A Terraform provider is a binary that contains the code for all the resources and the logic for working with the cloud API. Each cloud has its own provider, and Terraform itself only calls them through its RPC protocol between two processes.

So I decided to talk to the Terraform providers directly through RPC calls. This turned out beautifully: it made it possible to switch to newer provider versions and get new features without changing the code. It also turned out that not all fields in tfstate should be in tf, but how do you find out which ones? Just ask the provider.
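The idea of asking the provider which fields belong in tf can be sketched as follows. This Go example does not make real RPC calls. It assumes the provider's answer has already been reduced to three flags per attribute, modeled by the hypothetical type `attrSchema`, and the flag values in `main` are made up for illustration. Attributes that are only computed are set by the provider itself, so the sketch leaves them out of the generated configuration.

```go
package main

import "fmt"

// attrSchema is a hypothetical, simplified view of what a provider reports
// about one attribute of a resource.
type attrSchema struct {
	Required bool
	Optional bool
	Computed bool
}

// configurable reports whether the attribute may be set in a tf file.
// Attributes that are only Computed are filled in by the provider.
func configurable(s attrSchema) bool {
	return s.Required || s.Optional
}

// filterForConfig keeps the tfstate attributes that belong in tf.
// Attributes missing from the schema are dropped as well.
func filterForConfig(state map[string]string, schema map[string]attrSchema) map[string]string {
	out := map[string]string{}
	for name, value := range state {
		if s, ok := schema[name]; ok && configurable(s) {
			out[name] = value
		}
	}
	return out
}

func main() {
	state := map[string]string{
		"name":             "backend-logging-load-deployment",
		"uid":              "300ecda1-4138-11e9-9d5d-42010a8400b5",
		"resource_version": "109317427",
	}
	// Illustrative flags only; a real tool would get these from the provider.
	schema := map[string]attrSchema{
		"name":             {Optional: true, Computed: true},
		"uid":              {Computed: true},
		"resource_version": {Computed: true},
	}
	fmt.Println(filterForConfig(state, schema))
}
```

Because the rule depends only on what the provider reports, upgrading the provider binary updates the filtering without any change to the tool's code.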

In the end, we got a useful CLI tool with a common infrastructure for all Terraform providers, and adding a new provider is easy. Adding resources also takes little code. On top of that there are all sorts of goodies, such as connections between resources. Of course, there were many other problems along the way that cannot all be described here.

I named the beast Terraformer.

Finale

Using Terraformer, we generated 500-700 thousand lines of tf + tfstate code from two clouds. We were able to take legacy things and start touching them only through Terraform, in the best tradition of infrastructure as code. It feels like magic when you take a huge cloud and, with a single command, get it back as working Terraform files. And then it is grep / replace / git and so on.

I cleaned the code up, put it in order, and received permission to publish. It was released on GitHub for everyone on Thursday (05/02/19): github.com/GoogleCloudPlatform/terraformer

It has already received 600 stars and 2 pull requests adding support for OpenStack and Kubernetes. The feedback has been good. In general, people find the project useful.

I recommend it to everyone who wants to start working with Terraform without rewriting everything for it.

I will be glad to receive pull requests, issues, and stars.

Demo

Update: minimal support for OpenStack has been added, and Kubernetes support is almost ready. Thanks to everyone for the PRs.

Source: https://habr.com/ru/post/450410/
