Internet history: core network

Other articles in the series:

- Relay history

- The history of electronic computers

- Transistor history

- Internet history

Introduction

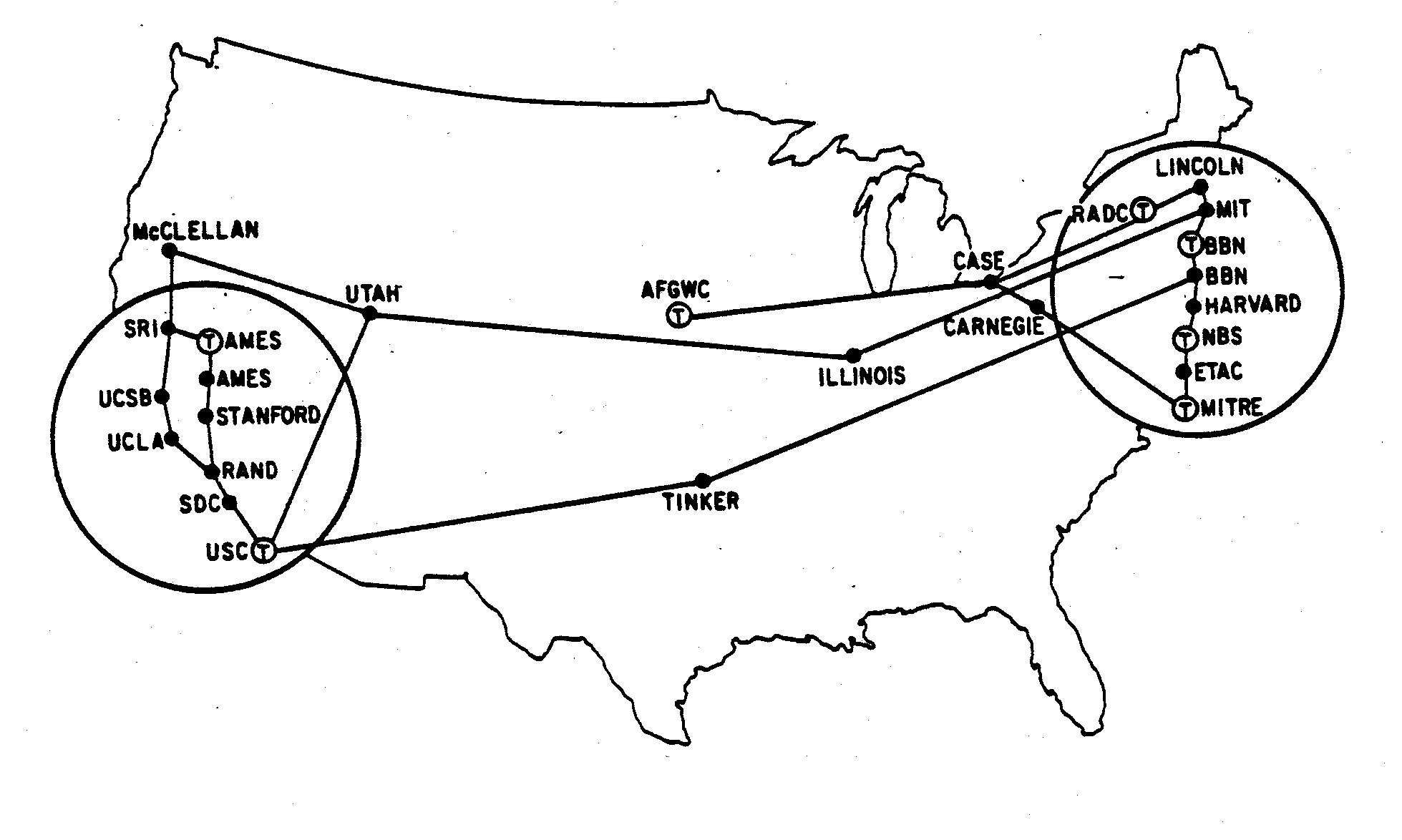

In the early 1970s, Larry Roberts came to AT&T, the huge US telecommunications monopoly of the day, with an intriguing proposal. At the time, he was director of the computing division of the Advanced Research Projects Agency (ARPA), a relatively young organization within the Department of Defense dedicated to long-term, blue-sky research. Over the previous five years, Roberts had overseen the creation of ARPANET, the first significant computer network, which now connected computers at 25 different sites across the country.

The network was a success, but its long-term operation, and all the bureaucracy that came with it, did not fall within ARPA's remit. Roberts was looking for a way to hand this task off to someone else, so he approached AT&T's executives and offered them the keys to the system. After carefully considering the proposal, AT&T eventually declined it. The company's senior engineers and managers believed that ARPANET's underlying technology was impractical and unstable, and that it had no place in a system designed to provide reliable, universal service.

ARPANET, of course, became the seed around which the Internet crystallized: the prototype of a vast information system spanning the whole world, whose kaleidoscopic capabilities defy calculation. How could AT&T, so stuck in the past, fail to see such potential? Bob Taylor, who hired Roberts in 1966 to oversee the ARPANET project, later put it bluntly: "Working with AT&T would be like working with Cro-Magnon man." However, before we blame such willful blindness on faceless corporate bureaucrats, let us take a step back. Our subject is the history of the Internet, so it would help first to get a more general idea of what we are talking about.


Of all the technological systems created in the latter half of the 20th century, the Internet probably has had the greatest significance for the society, culture, and economy of the modern world. Its closest rival in this respect may be jet travel. Through the Internet, people can instantly share photos, videos, and thoughts, welcome and unwelcome, with friends and relatives around the world. Young people living thousands of kilometers apart now routinely fall in love and even marry within the virtual world. An endless shopping mall is open at any hour of the day or night, right from millions of comfortable homes.

For the most part, all of this is familiar, and it is exactly what happens. But, as the author can personally attest, the Internet has also turned out to be perhaps the greatest distraction, time sink, and source of mental corrosion in human history, surpassing television in this respect, which was no easy feat. It has allowed all manner of cranks, fanatics, and conspiracy theorists to spread their nonsense across the globe at the speed of light; some of this material can be considered harmless, and some cannot. It has allowed many organizations, both public and private, to slowly amass, and in some cases quickly and shamefully lose, huge mountains of data. In short, it has become an amplifier of both human wisdom and human stupidity, and the amount of the latter is frightening.

But what is the thing we are discussing, its physical structure, all the machinery that made these social and cultural changes possible? What is the Internet? If we could somehow pour this substance into a glass vessel and let it settle, we would see it separate into three layers. At the bottom would settle the global communications network. This layer is about a hundred years older than the Internet; it originally consisted of copper or iron wires, but has since been supplemented by coaxial cables, microwave relays, optical fiber, and cellular radio.

The next layer consists of computers communicating with one another over this network using shared languages, or protocols. Among the most fundamental of these are the Internet Protocol (IP), the Transmission Control Protocol (TCP), and the Border Gateway Protocol (BGP). This is the core of the Internet proper, and it takes concrete form as a network of special computers, called routers, that are responsible for finding a path along which a message can travel from the source computer to the destination computer.

Finally, the top layer holds the various applications that people and machines use to work and play on the Internet, many of which speak their own specialized languages: web browsers, messaging apps, video games, trading applications, and so on. To use the Internet, an application only needs to wrap its message in a format that routers understand. The message might be a chess move, a tiny piece of a film, or a request to transfer money from one bank account to another; the routers do not care and treat it all the same.
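To make the idea of wrapping a message concrete, here is a minimal Python sketch. It assumes a drastically simplified packet with only a source address, a destination address, and an opaque payload (real IP headers carry many more fields); the forwarding table, router names, and addresses are all hypothetical. The router chooses the most specific route that matches the destination (longest-prefix match, the rule IP forwarding uses) and never looks inside the payload.

```python
import ipaddress
from dataclasses import dataclass


@dataclass(frozen=True)
class Packet:
    """Simplified packet: real IP headers have many more fields."""
    src: str        # source address (IPv4-style string)
    dst: str        # destination address (IPv4-style string)
    payload: bytes  # application data, opaque to routers


def encapsulate(src: str, dst: str, message: bytes) -> Packet:
    """Wrap an application message in a header that routers can read."""
    return Packet(src=src, dst=dst, payload=message)


# Hypothetical forwarding table: destination prefix -> next-hop name.
FORWARDING_TABLE = {
    ipaddress.ip_network("10.0.0.0/8"): "router-east",
    ipaddress.ip_network("10.1.0.0/16"): "router-boston",
    ipaddress.ip_network("0.0.0.0/0"): "default-gateway",
}


def next_hop(packet: Packet) -> str:
    """Pick the most specific matching prefix; the payload is never inspected."""
    dst = ipaddress.ip_address(packet.dst)
    matches = [net for net in FORWARDING_TABLE if dst in net]
    best = max(matches, key=lambda net: net.prefixlen)
    return FORWARDING_TABLE[best]


if __name__ == "__main__":
    chess_move = encapsulate("192.0.2.7", "10.1.2.3", b"e2e4")
    bank_order = encapsulate("192.0.2.7", "10.1.2.3", b"transfer 100 USD")
    film_chunk = encapsulate("192.0.2.7", "10.9.9.9", bytes(1024))
    for p in (chess_move, bank_order, film_chunk):
        print(next_hop(p))
```

Run as a script, it prints `router-boston` twice and then `router-east`: the chess move and the bank order travel the same way because only the destination address matters to the router.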

Our story will weave these three threads together to tell the history of the Internet. First comes the global communications network. At the end come all the splendid programs that let computer users have fun or do something useful over the network. Binding the two together are the technologies and protocols that allow different computers to communicate with one another. The creators of these technologies and protocols built on the achievements of the past (the network) and were guided by a hazy vision of the future they were groping toward (the programs to come).

Besides these creators, the state will be one of the constant actors in our story. This is especially true at the level of the telecommunications networks, which were either run by the government or subject to its strict oversight. Which brings us back to AT&T. However unpleasant it may have been for them to admit, the fate of Taylor, Roberts, and their ARPA colleagues was inextricably bound to the telecommunications carriers, the foundational layer of the future Internet. Their network depended entirely on the carriers' services. How, then, to explain their hostility, their conviction that ARPANET represented a new world inherently at odds with the backward-looking officials in charge of telecommunications?

In fact, the differences between these two groups were not generational but philosophical. AT&T's executives and engineers saw themselves as the custodians of a huge and complex machine that provided reliable, universal service for communication between one person and another. The Bell System was responsible for all of the equipment. The ARPANET architects saw the system as a conduit for arbitrary bits of data, and believed that its operators should not interfere with how that data was created and used at either end of the wire.

We must therefore begin with the story of how this intractable dispute over the nature of American telecommunications was resolved through the power of the US government.

One system, universal service?

The Internet was born in the particular environment of American telecommunications (in the US, telephone and telegraph providers were treated quite differently than in the rest of the world), and there is every reason to believe that this environment played a formative role in shaping the spirit of the future Internet. So let us look carefully at how it all came about. To do so, we will go back to the birth of the American telegraph.

American anomaly

In 1843, Samuel Morse and his allies persuaded Congress to spend $30,000 on building a telegraph line between Washington, D.C., and Baltimore. They believed it would be the first link in a government-funded network of telegraph lines that would spread across the continent. In a letter to the House of Representatives, Morse proposed that the government buy all the rights to his telegraph patents and then contract with private companies to build individual segments of the network, while keeping separate lines for official communications. In that case, Morse wrote, "it will not be long before the entire surface of this country is laced with these nerves, which, with the speed of thought, will spread knowledge of all that is happening throughout the land, turning the whole country into one great neighborhood."

It seemed to him that such a vital communication system naturally served the public interest and therefore fell within the government's purview. Providing communication among the states through the postal service was one of the few federal responsibilities explicitly named in the US Constitution. His motives, however, were not purely public-spirited. Government control offered Morse and his backers a way to cash out of their venture with a single but substantial payment from the public purse. In 1845, Cave Johnson, Postmaster General under James Polk, the 11th President of the United States, declared his support for the public telegraph system Morse had proposed: "The use of such a powerful instrument, for good or for evil, cannot with safety to the people be left in the hands of private individuals," he wrote. But that is where it ended. Other members of Polk's Democratic administration wanted nothing to do with a public telegraph, and neither did the Democratic Congress. Democrats disliked Whig schemes that had the government spending money on "internal improvements", which they considered an invitation to favoritism, venality, and corruption.

Given the government's unwillingness to act, one member of Morse's team, Amos Kendall, began developing a telegraph network with the backing of private investors. Morse's patent, however, was not enough to secure a monopoly on telegraphy. Over the next decade, dozens of competitors appeared, either licensing alternative telegraph technologies (chiefly Royal House's printing telegraph) or simply operating on shaky legal ground. Lawsuits were filed by the batch, paper fortunes rose and vanished, and failing companies collapsed or were sold to competitors after their stock prices had been artificially inflated. Out of all this turmoil, by the end of the 1860s, one major player emerged: Western Union.

Alarmed talk of "monopoly" began to spread. The telegraph had already become essential to several areas of American life: finance, the railroads, and the newspapers. No private organization had ever grown so large. The idea of government control of the telegraph gained new life. In the decade after the Civil War, the congressional postal committees drew up various plans to bring the telegraph into the orbit of the postal service. Three basic options emerged: 1) the postal service sponsors a competitor to Western Union, giving it special access to post offices and post roads in exchange for limits on its rates; 2) the postal service launches its own telegraph to compete with WU and other private operators; 3) the government nationalizes the entire telegraph and hands it over to the postal service.

Plans for a postal telegraph won several loyal supporters in Congress, including Alexander Ramsey, chairman of the Senate postal committee. However, much of the campaign's energy came from outside lobbyists, in particular Gardiner Hubbard, who had experience with public utilities as an organizer of the municipal water supply and gas lighting in Cambridge (he later became Alexander Graham Bell's most important early backer and a founder of the National Geographic Society). Hubbard and his supporters argued that a public system would spread information as usefully as the paper mail did, while keeping rates low. They said this approach would surely serve the public better than the WU system, which catered to the business elite. WU, of course, countered that the price of a telegram was determined by the cost of sending it, and that a public system that artificially lowered rates would run into trouble and benefit no one.

In any case, the postal telegraph never gained enough support to become a real battleground in Congress. All of the proposed bills quietly died. The monopoly had not yet grown large enough to outweigh fears of government abuse. The Democrats regained control of Congress in 1874, the reforming spirit of the period immediately after the Civil War faded, and the feeble attempts to create a postal telegraph petered out. The idea of placing the telegraph (and later the telephone) under government control resurfaced periodically in later years, but apart from a brief period of (nominal) government control of the telephone during wartime in 1918, nothing came of it.

The government's hands-off approach to the telegraph and telephone was a global anomaly. In France, the telegraph was nationalized before it was even electrified. In 1837, when a private company tried to set up an optical telegraph (using signal towers) alongside the existing government-run system, the French parliament passed a law banning any telegraph not authorized by the government. In Britain, the private telegraph was allowed to develop for several decades. However, public dissatisfaction with the resulting duopoly led the government to take control in 1868. Throughout Europe, governments placed telegraphy and telephony under the state postal service, just as Hubbard and his supporters had proposed. [In Russia, the state-owned Central Telegraph was founded on October 1, 1852. Translator's note.]

Outside Europe and North America, most of the world was ruled by colonial authorities and therefore had no say in the development and regulation of telegraphy. Where independent governments existed, they usually created state telegraph systems on the European model. These systems generally lacked the funds to expand as quickly as their counterparts in the United States and Europe. For example, by 1869 the Brazilian state telegraph company, which operated under the Ministry of Agriculture, Commerce and Labor, had only 2,100 km of telegraph lines, while the US, with a similar land area and four times the population, already had 130,000 km.

New deal

Why did the US take such a singular path? One could point to the spoils system, under which public offices were handed out to supporters of the party that won the election, a practice that persisted until the last years of the 19th century. The government bureaucracy, down to the local postmasters, consisted of political appointees who could be rewarded for their loyalty. Neither party wanted to create major new sources of patronage for its opponents, which is exactly what would have happened if the telegraph came under federal control. However, the simplest explanation is the traditional American distrust of a powerful central government, the same reason that American health care, education, and other public institutions differ so much from those in other countries.

Given the growing importance of telecommunications for public life and security, the United States could not stay entirely aloof from the development of communications. In the first decades of the 20th century, a hybrid system emerged in which private communications companies were held in check by two forces: on the one hand, a bureaucracy that continually monitored the carriers' rates to ensure they did not abuse their monopoly position or extract excessive profits; on the other, the threat of being broken up under antitrust law in the event of misconduct. As we shall see, these two forces could come into conflict. The theory of rate regulation held that monopoly was natural under certain circumstances and that duplicating services would be a needless waste of resources; regulators usually tried to minimize the downsides of monopoly by controlling prices. Antitrust law, by contrast, sought to nip monopoly in the bud by forcibly creating a competitive market.

The concept of rate regulation was born with the railroads and was implemented at the federal level through the Interstate Commerce Commission (ICC), established by Congress in 1887. Small businesses and independent farmers were the main driving force behind the law. They often had no choice but to rely on the railroads to get their products to market, and they complained that the railroad companies exploited this by squeezing every last cent out of them while granting lavish terms to large corporations. The five-member commission was empowered to oversee railroad services and rates and to curb abuse of monopoly status, in particular by forbidding railroads to offer special rates to favored companies (a precursor of what we now call "network neutrality"). The Mann-Elkins Act of 1910 extended the ICC's authority to the telegraph and telephone. However, the ICC, focused on transportation, never took much interest in these new areas of responsibility and largely ignored them.

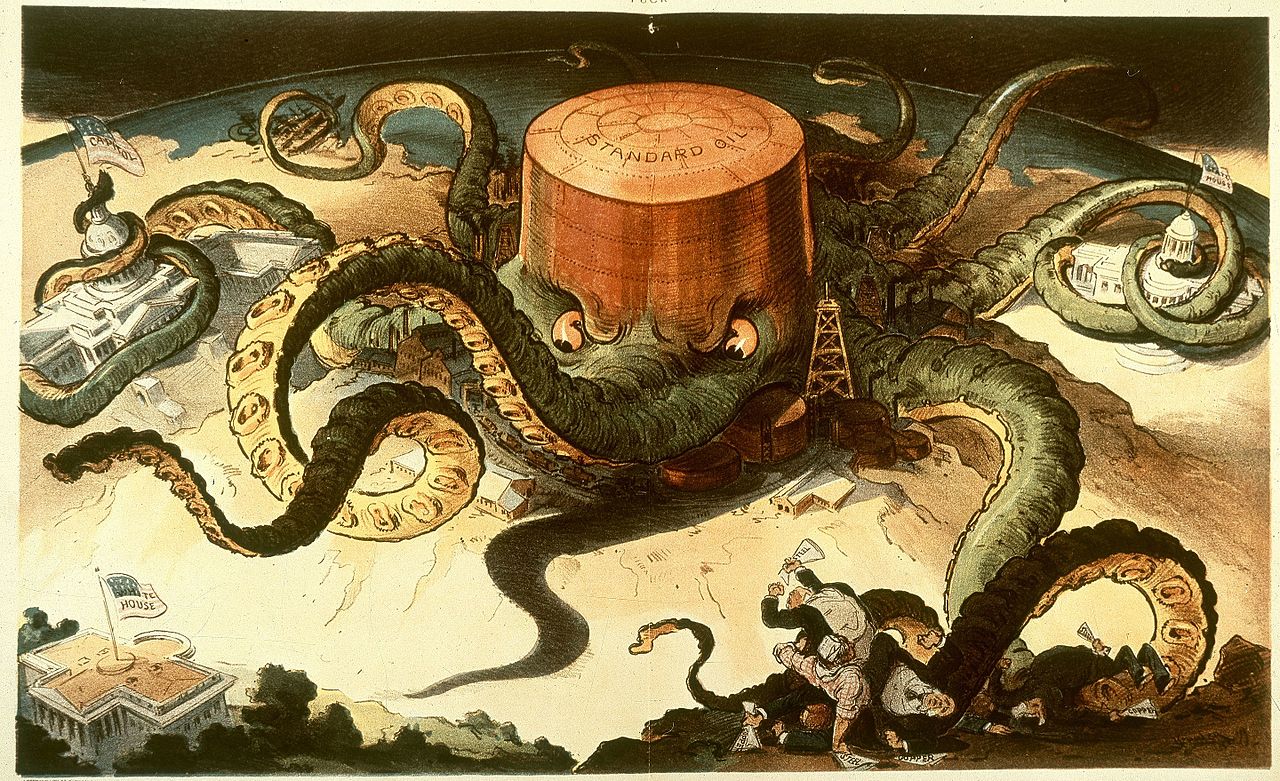

Meanwhile, the federal government had developed an entirely new tool against monopolies. The Sherman Act of 1890 empowered the Attorney General to challenge in court any commercial "combination" suspected of "restraint of trade", that is, suppressing competition through monopoly power. Over the next two decades, the law was used to break up several major corporations, culminating in the 1911 Supreme Court decision that split Standard Oil into 34 pieces.

The Standard Oil octopus in a 1904 cartoon, before the breakup

By that time, telephony and its main provider, AT&T, had so far eclipsed telegraphy and WU in importance and capability that in 1909 AT&T was able to buy a controlling stake in WU. Theodore Vail became president of the combined companies and began the process of stitching them together. Vail firmly believed that a benevolent telecommunications monopoly would best serve the public interest, and he promoted the company's new slogan: "One policy, one system, universal service". As a result, Vail became a ripe target for the trustbusters.

Theodore Vail, ca. 1918

The arrival of the Woodrow Wilson administration in 1913 gave its progressives a fine opportunity to wield the antitrust cudgel. Postmaster General Albert S. Burleson was inclined toward full postalization of the telephone on the European model, but this idea, as usual, found no support. Instead, Attorney General George Wickersham took the view that AT&T's continuing acquisition of independent telephone companies violated the Sherman Act. Rather than go to court, Vail and his deputy, Nathan Kingsbury, reached an agreement with the government that went down in history as the "Kingsbury Commitment", under which AT&T pledged to:

- Stop buying independent companies.

- Sell its stake in WU.

- Allow independent telephone companies to connect to its long-distance network.

But after this moment of danger, monopoly faced no comparable threat for decades. The quieter star of rate regulation, which assumed that natural monopolies existed in communications, was on the rise. By the early 1920s, exemptions had been granted, and AT&T resumed buying up small independent telephone companies. This approach was enshrined in the Communications Act of 1934, which created the US Federal Communications Commission (FCC) to replace the ICC as the regulator of wire communications rates. By then, the Bell System controlled at least 90% of America's telephone business by any measure: 135 of 140 million km of wire, 2.1 of 2.3 billion monthly calls, and $990 million of $1 billion in annual revenue. Yet the FCC's main purpose was not to restore competition but "to make available, so far as possible, to all the people of the United States a rapid, efficient, Nation-wide, and world-wide wire and radio communication service with adequate facilities at reasonable charges." If a single organization could provide such a service, so be it.

In the mid-20th century, local and state telecommunications regulators in the United States built up a layered system of cross-subsidies to speed the spread of universal service. Regulatory commissions set rates based on the perceived value of the network to each customer, not on the cost of serving that customer. Business users, who relied on the telephone to conduct business, therefore paid more than individuals (for whom the service was a social amenity). Customers in large urban markets, with easy access to many other subscribers, paid more than residents of small towns, despite the greater efficiency of large exchanges. Long-distance users paid too much, even though technology steadily drove down the cost of long-distance calls while the cost of local switching rose. This complex system of redistribution worked quite well as long as there was a single monolithic provider within which all of it could operate.

New technology

We are used to thinking of monopoly as a retarding force that breeds idleness and lethargy. We expect a monopoly to jealously guard its position and the status quo, not to serve as an engine of technological, economic, and cultural transformation. That view, however, is hard to apply to AT&T at its peak, because the company made innovation part of its business, anticipating and hastening the arrival of each new breakthrough in communications.

For example, in 1922 AT&T set up a commercial broadcast radio station on its building in Manhattan, just a year and a half after the first such major station, Westinghouse's KDKA, went on the air. The following year, it used its long-distance network to relay an address by President Warren Harding to many local radio stations across the country. A few years later, AT&T moved into the film industry after Bell Laboratories engineers developed a machine that combined motion pictures with recorded sound. The Warner Brothers studio used this "Vitaphone" to produce "Don Juan", the first Hollywood feature with a synchronized musical score, followed by "The Jazz Singer", the first feature-length film with synchronized dialogue.

Walter Gifford, who became president of AT&T in 1925, decided to divest the company of side businesses such as broadcasting and film, in part to avoid an antitrust investigation. Although the US Department of Justice was not threatening the company at the time, it was unwise to draw undue attention with moves that could be seen as an attempt to leverage the telephone monopoly for unfair advantage in other markets. So, instead of running its own broadcasting operation, AT&T became the main carrier of signals for the Radio Corporation of America (RCA) and other radio networks, relaying programs from their studios in New York and other major cities to affiliated stations across the country.

Meanwhile, in 1927, transatlantic radiotelephone service opened with a trivial question that Gifford put to his counterpart at the British Post Office: "How is the weather in London?" It was hardly "What hath God wrought!" [the first message Morse officially sent by telegraph; translator's note], but it still marked an important milestone: intercontinental conversation became possible decades before the first submarine telephone cable was laid, albeit at great cost and with poor quality.

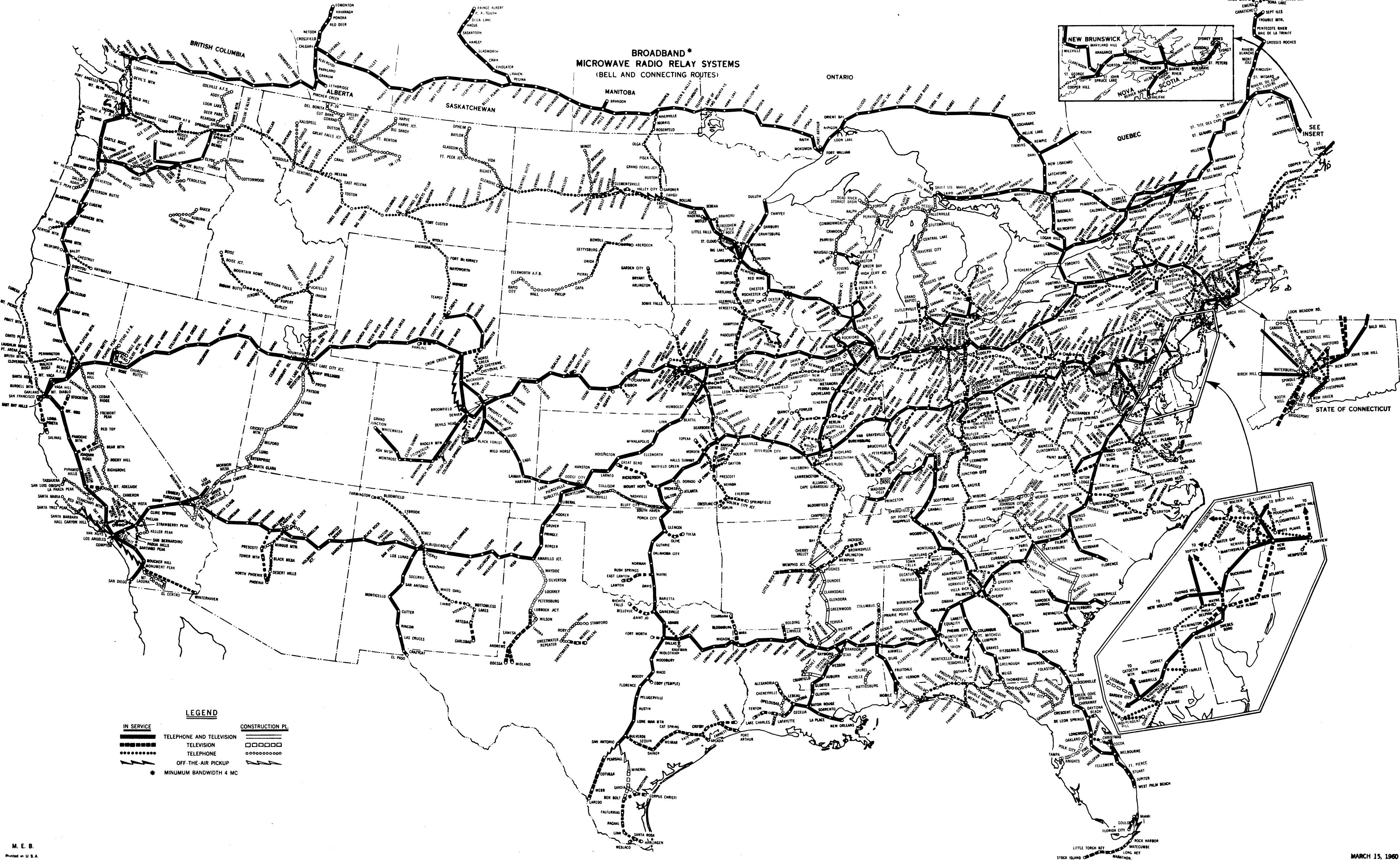

However, the developments that matter most for our story concerned moving large volumes of data over long distances. AT&T always wanted to increase traffic on its long-distance network, which was its main competitive advantage over the few surviving independent companies and also its most profitable business. The easiest way to attract customers was to develop new technology that lowered the cost of transmission, which usually meant cramming more conversations into the same wires or cables. But, as we have already seen, demand for long-distance communication went beyond traditional person-to-person telegraph and telephone messages. Radio networks needed their own channels, and television, with far greater bandwidth demands, was already looming on the horizon.

The most promising way to meet these new demands was to lay coaxial cable, made of concentric metal cylinders [coaxial: sharing a common axis; translator's note]. The properties of such a conductor had been studied in the 19th century by the giants of classical physics: Maxwell, Heaviside, Rayleigh, Kelvin, and Thomson. It had tremendous theoretical advantages as a transmission line: it could carry a broadband signal, and its structure fully shielded it from crosstalk and interference from outside signals. When television began to develop in the 1920s, no existing technology could provide the megahertz (or greater) bandwidth that high-quality broadcasts required. So Bell Laboratories engineers set out to turn the cable's theoretical advantages into a working long-distance, broadband transmission line, including all the auxiliary equipment needed to generate, amplify, receive, and otherwise process the signal. In 1936, with the FCC's permission, AT&T ran a field test of more than 160 km of cable strung from Manhattan to Philadelphia. After first testing the system with 27 voice circuits, the engineers had learned to transmit video by the end of 1937.

Around the same time, another approach to high-bandwidth long-distance communication began to emerge: radio relay. The radiotelephone service on the 1927 transatlantic link used a pair of broadcast radio signals to create a two-way voice channel on the shortwave band. For overland communication, tying up two radio transmitters and receivers, and an entire frequency band, for a single telephone conversation made no economic sense. If many conversations could be packed into one radio beam, however, it would be a different story. Although each individual radio station would be fairly expensive, a few hundred of them should be enough to carry signals across the entire United States.

Two frequency bands competed for use in such a system: ultra-high frequencies (UHF, decimeter waves) and microwaves (centimeter waves). The higher-frequency microwaves promised greater capacity but also posed greater technical challenges. In the 1930s, responsible opinion at AT&T leaned toward the safer UHF option.

However, microwave technology made a great leap forward during World War II thanks to its extensive use in radar. Bell Labs demonstrated the viability of microwave radio with the AN/TRC-6, a mobile system capable of carrying eight telephone circuits to another antenna within line of sight. It allowed military headquarters to restore voice communication quickly after relocating, without waiting for cable to be laid (and without the risk of being cut off when a cable was severed, whether by accident or by enemy action).

Deployed AN/TRC-6 microwave radio station

After the war, Harald T. Friis, a Danish-born engineer at Bell Labs, led the development of microwave radio relay. A 350 km trial line from New York to Boston opened at the end of 1945. The waves hopped between towers spaced about 50 km apart, on a principle essentially similar to the optical telegraph, or even a chain of signal fires. The route ran up the Hudson River to the Hudson Highlands, across the hills of Connecticut to Mount Asnebumskit in western Massachusetts, and then down to Boston Bay.

AT&T was not the only company with an interest in microwave communications and wartime experience in handling microwave signals. Philco, General Electric, Raytheon, and television broadcasters built or planned their own experimental systems in the postwar years. Philco beat AT&T to the punch, building a link between Washington and Philadelphia in the spring of 1945.

AT&T microwave radio relay station in Creston, Wyoming, part of the first transcontinental line, 1951.

For more than 30 years, AT&T had stayed out of trouble with antitrust enforcers and other government regulators. For the most part, it was protected by the idea of natural monopoly: that it would be terribly inefficient to build many competing, unconnected systems, each stringing its own wires across the country. Microwave was the first serious chink in this armor, because it allowed many companies to offer long-distance service without prohibitive costs.

Microwave transmission sharply lowered the barrier to entry for would-be competitors. Since the technology needed only a chain of stations about 50 km apart to form a useful system, there was no need to acquire rights-of-way across thousands of kilometers of land or to maintain thousands of kilometers of cable. Moreover, microwave capacity far exceeded that of traditional wire-pair cables, since each relay station could carry thousands of telephone conversations or several television programs. The competitive advantage of AT&T's existing wired long-distance system faded.

However, the FCC shielded AT&T from the effects of such competition for years through two decisions made in the 1940s and 1950s. First, the commission refused to grant anything but temporary, experimental licenses to new carriers that did not offer their services to the general public (those that, say, provided communications within a single enterprise). Entering this market therefore meant risking the loss of one's license. The commissioners feared a repeat of the problem that had plagued broadcasting twenty years earlier and had led to the creation of the FCC itself: a cacophony of interference from many different transmitters polluting a limited radio spectrum.

The second decision concerned interconnection. Recall that the Kingsbury Commitment required AT&T to let local telephone companies connect to its long-distance network. Did those requirements apply to microwave carriers? The FCC ruled that they applied only where the public communications network did not provide adequate coverage. As a result, any competitor that built a regional or local network risked being abruptly cut off from the rest of the country whenever AT&T decided to enter its area. The only way to guarantee connectivity was to build one's own national network, a daunting prospect under an experimental license.

By the end of the 1950s, there was only one major player in the long-distance telecommunications market: AT&T. Its microwave network carried more than 6,000 telephone circuits on each route and reached every state in the continental US.

AT&T's microwave radio network in the 1960s.

However, the first significant challenge to AT&T's full and comprehensive control over the telecommunications network came from an entirely different direction.


Source: https://habr.com/ru/post/450168/
