How technology manipulates your mind: the view of an illusionist and Google design ethics expert

Estimated reading time: 12 minutes.

“It is easier to deceive a person than to convince him that he has been deceived.”

Unknown author

I study how technology exploits our psychological vulnerabilities. That is why I have spent the last three years working as a design ethics expert at Google, learning how to build products in a way that protects a billion human minds from manipulation.

When we use technology, we tend to focus on the positive side of what it does for us. But I want to show you the other side.

How does technology exploit our minds' vulnerabilities?

I first began to think about this as a child, when I performed as an illusionist. Illusionists look for blind spots, vulnerabilities, and the limits of people's perception, so that they can get a person to do something without even being aware of it. Once you know which buttons of the human mind to press, you can play them like a piano.

Here I am performing a trick at my mom's birthday party.

And this is exactly what product designers do to your mind. In pursuit of your attention, they play your psychological vulnerabilities against you, consciously and unconsciously.

I want to show you how they do it.

Trick #1. When you choose from a set of options, you supposedly control your choice

Western culture is built around the ideals of individual choice and personal freedom. Many of us fiercely defend our right to make “free” choices, yet we do not notice how the options we choose from are manipulated.

This is exactly what illusionists do. They give people the illusion of choice while arranging the options so that they win no matter what the person picks. I cannot overstate how deep this insight is.

When people are given choices, they rarely ask:

- What options were not included in the list?

- Why am I choosing from these options and not others?

- Do I know the goals of the people who are giving me this choice?

- Do these options satisfy my true need, or only distract from it? (For example, an endless array of toothpastes in a supermarket.)

How much does this set of options help satisfy the need “I have run out of toothpaste”?

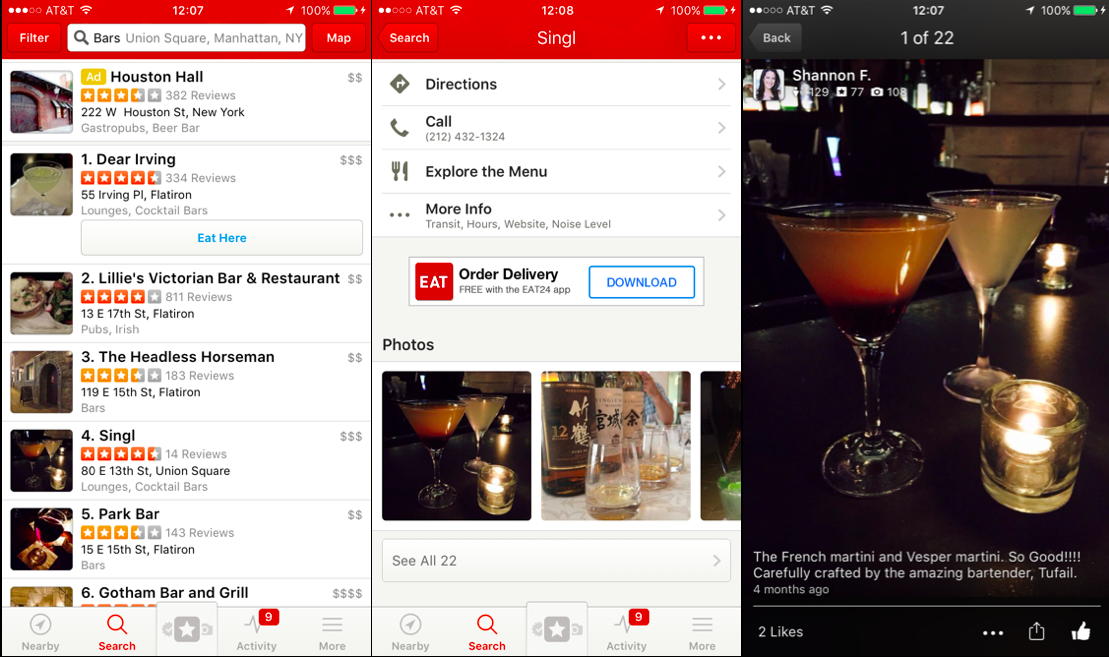

Imagine you are out with friends on a Thursday evening and want to keep the conversation going somewhere. You open Yelp (a review aggregator) and look for bars nearby. Everyone buries their face in a smartphone and starts comparing bars, scrutinizing the photos and cocktail menus. How well does this serve the group's original need, “to keep talking somewhere”?

It is not that bars are a bad choice. The point is that, through the way it presents options, Yelp replaced the original question, “where can we go to keep talking?”, with “which bar has the best photos and cocktails?”

Moreover, Yelp gave your group the illusion that its listings are an exhaustive set of options. While you were looking at your phones, you did not notice the park across the street with musicians playing, or the gallery on the other side of the street serving pancakes and coffee. Neither appears on Yelp.

Yelp quietly replaced the group's question, “where can we go to keep talking?”

The more choices technology gives us in every area of our lives (information, events, places to go, friends, dating, work), the more we assume that the choices on our phone are exhaustive. But are they?

The menu that brings us closest to our goals is not the same as the menu with the most options. If we blindly accept the menu we are given, though, it is easy to miss that difference:

- “Who is free tonight?” becomes a choice among the people who messaged us most recently (the ones we can ping).

- “What is happening in the world?” becomes whatever appears in our news feeds.

- “Who is available to date?” becomes a set of photos to swipe through on Tinder (instead of local events with friends or urban adventures).

- “I need to answer this email” becomes a menu of keys for typing a reply (instead of more engaging and deeper ways to communicate with the person).

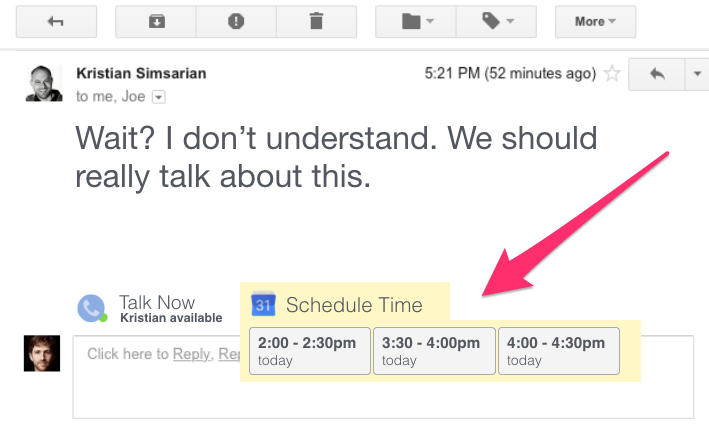

"Schedule time." All user interfaces are a choice among options. What if your email client gives you inspiring answers instead of “what message do you want to answer?”

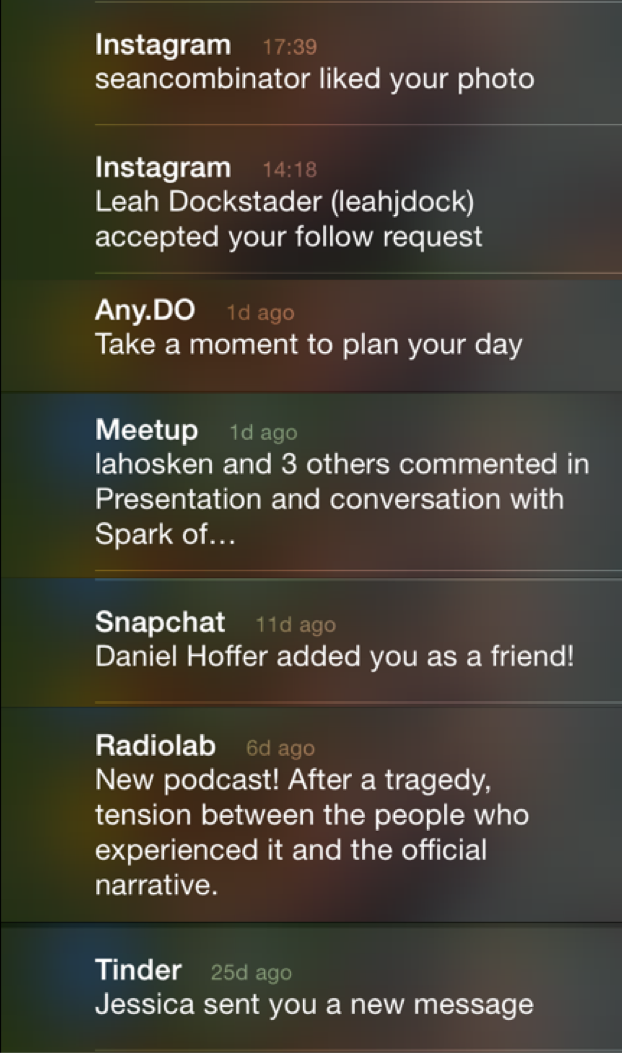

When we wake up in the morning and reach for a phone full of notifications, it frames “waking up in the morning” around “everything I have missed since yesterday evening” (for more examples, see Joe Edelman's Empowering Design talk).

How much does the list of notifications we see on waking help us achieve our goals? How well does it reflect what really matters to us?

By shaping the options we choose from, technology changes how we perceive our choices. But the more attention we pay to the options we are given, the more we notice when they do not match our true needs.

Trick #2. Put a slot machine in your pocket

If you are a mobile app, how do you keep people hooked? Answer: become a slot machine.

The average person checks their smartphone 150 times a day. Why do we do this? Are we making 150 conscious choices a day?

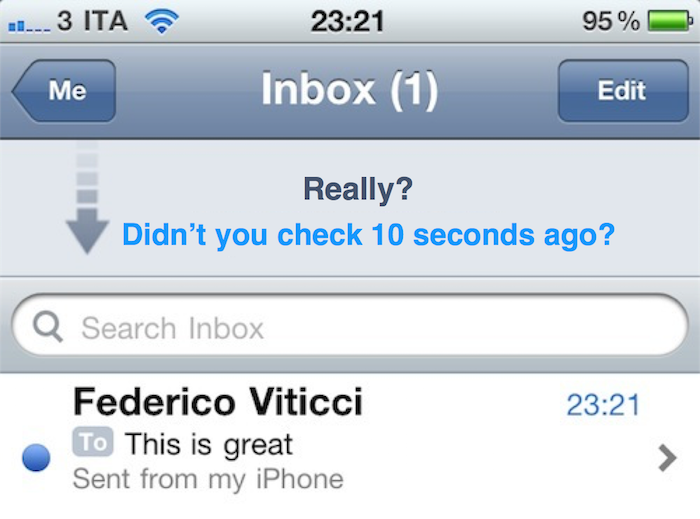

How often do you check your email during the day?

The main ingredient of a slot machine is the variable reward.

If you want to maximize how addictive your product is, link a user's action (pulling the lever) to a variable reward. You pull the lever and immediately receive either an enticing reward (a match on Tinder, say, or some other “prize”) or nothing at all. Addictiveness is highest when the rate of reward is most variable.

Does this effect really work on people? Yes. Slot machines in the United States make more money than baseball, movies, and amusement parks combined. Compared with other kinds of gambling, people become “problematically involved” with slot machines 3-4 times faster, according to Natasha Dow Schull, a professor at New York University and the author of Addiction by Design.

The unpleasant truth: several billion people keep a slot machine in their pocket.

- When we pull the phone out of our pocket, we are playing a slot machine to see which notifications we got.

- When we refresh our inbox, we are playing a slot machine to see what new email we received.

- When we scroll the Instagram feed, we are playing a slot machine to see which photo comes next.

- When we swipe faces on Tinder, we are playing a slot machine to see whether we got a match.

- When we tap the red dot on the Facebook bell, we are playing a slot machine to see what is underneath.

“Play the slot machines to find out how many likes you have collected”

Apps and websites sprinkle variable rewards all over their products because it is good for business.

In some cases, the slot machine emerges by accident. For example, no evil corporation set out to turn email into a slot machine. No one profits when millions of people check their email and find nothing there. Nor did the designers at Apple and Google set out to turn the smartphone into a slot machine. It happened by accident.

But now companies like Apple and Google have a responsibility to reduce the effect of variable rewards by moving toward less addictive, more predictable models through thoughtful design. For example, they could encourage people to set aside a period during the day or week when they want to use “slot machine” apps, and adjust notification delivery to match that period.
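As a rough illustration of that last idea, here is a minimal Python sketch of batching notifications into user-chosen delivery windows. The function names and the example windows are hypothetical, invented for this sketch; they are not any platform's real API.

```python
from datetime import datetime, time, timedelta


def next_delivery(arrival: datetime, windows: list[time]) -> datetime:
    """Return the first user-chosen delivery slot at or after `arrival`.

    `windows` is a hypothetical, non-empty list of times of day the user picked.
    """
    for slot in sorted(windows):
        candidate = datetime.combine(arrival.date(), slot)
        if candidate >= arrival:
            return candidate
    # No slot is left today, so hold the notification until the first slot tomorrow.
    return datetime.combine(arrival.date() + timedelta(days=1), min(windows))


def batch_notifications(arrivals, windows):
    """Group (arrival_time, message) pairs by the slot in which they get delivered."""
    batches = {}
    for arrival, message in arrivals:
        batches.setdefault(next_delivery(arrival, windows), []).append(message)
    return batches
```

With windows at 09:00 and 18:00, a message arriving at 10:30 would wait until 18:00 instead of interrupting immediately, which makes delivery predictable rather than variable.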

Trick #3. Fear of missing something important (FOMO, fear of missing out)

Another way to hijack our minds is to play up the “1% chance of missing something really important.”

If I can convince you that I am a channel for important information, messages, friendships, or potential sexual partners, it will be hard for you to turn me off, unsubscribe, or delete your account, because (aha, I win!) you might miss something important.

- That is why we stay subscribed to newsletters even when they bring us nothing useful (“what if I miss an important announcement in the future?”).

- That is why we stay “friends” with people we have not spoken to in ages (“what if I miss something important from them?”).

- That is why we keep swiping through photos in dating apps even when we have not met anyone in a long time (“what if I miss the one I would like?”).

- That is why we keep using social media (“what if I miss what my friends are talking about?”).

But if we look closely at this fear, we see that it has no limit. We will always miss something important whenever we stop using something.

- There are moments on Facebook that we will miss if we do not scroll through it for the sixth hour of the day (for example, an old friend visiting our city).

- There are moments on Tinder that we will miss if we do not swipe through 700 photos in a row (for example, the partner we dreamed of).

- There are emergency calls that we will miss if we are not connected around the clock.

But let's be honest: living moment to moment in fear of missing something important is not what we were made for.

It is amazing how quickly we wake up from this illusion once we let go of the fear. When we disconnect for more than a day, unsubscribe from notifications, or go on a digital detox for a week, we find that we had nothing to worry about after all.

What we do not pay attention to does not bother us.

The thought “what if I miss something important?” arises before we disconnect, unsubscribe, or turn something off, not after. Imagine if technology companies recognized this and helped us build our relationships with friends and products around “time well spent” rather than around what we might miss.

Trick #4. Social approval

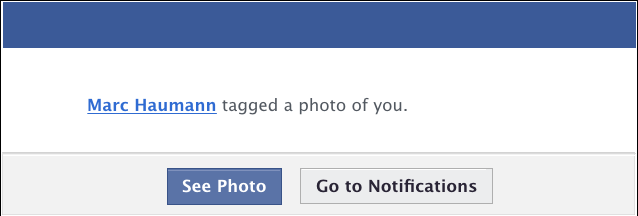

“Mark Howman has tagged you in a photo.” One of the most persuasive messages a person can receive.

We are all vulnerable to social approval. The need to belong, to be accepted by our peers, is one of the strongest human motivations. Today, social approval is in the hands of technology companies.

When my buddy Mark tags me in a photo, I assume he made a conscious choice to tag me. What I do not see is how a company like Facebook led him to do it.

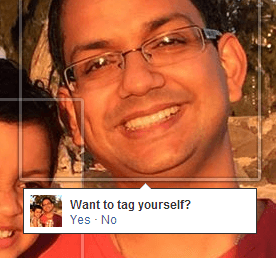

Facebook, Instagram, and Snapchat manipulate how often people tag others in photos by automatically suggesting every face they recognize. For example, they make the task trivial by showing a box that asks “Tag Tristan in this photo?”, so that all you have to do is click.

So when Mark tags me, he is actually responding to Facebook's suggestion rather than making a conscious choice. Through this design, Facebook controls how often millions of people receive social approval.

“Want to tag yourself?” Facebook uses photo tag suggestions to get people to tag others more often, thereby creating more social externalities.

The same thing happens when we change our profile picture. Facebook knows that at that moment we are most vulnerable to social approval: “what will my friends think of my new photo?” Facebook can rank the photo higher in news feeds so that it stays visible longer and more people like or comment on it. Every time someone likes or comments, we are pulled back to the social network.

Everyone responds to social approval by default, but some demographic groups (teenagers) react more strongly and are therefore more vulnerable. That is why it is so important to understand how much power product designers hold when they exploit such human vulnerabilities.

“How many likes did I get?”

Trick #5. Social reciprocity (“tit for tat”)

- You do me a favor, so I owe you one next time.

- You say “thank you,” so I say “you're welcome.”

- You send me an email, so it would be rude not to reply.

- You follow me, so it would be rude not to follow you back (especially for teenagers).

We are vulnerable to the need to reciprocate other people's gestures. And just as with social approval, technology companies manipulate how often we experience social reciprocity.

In some cases this happens by accident: email and instant messengers imply reciprocity by default. But in other cases, companies exploit this vulnerability on purpose.

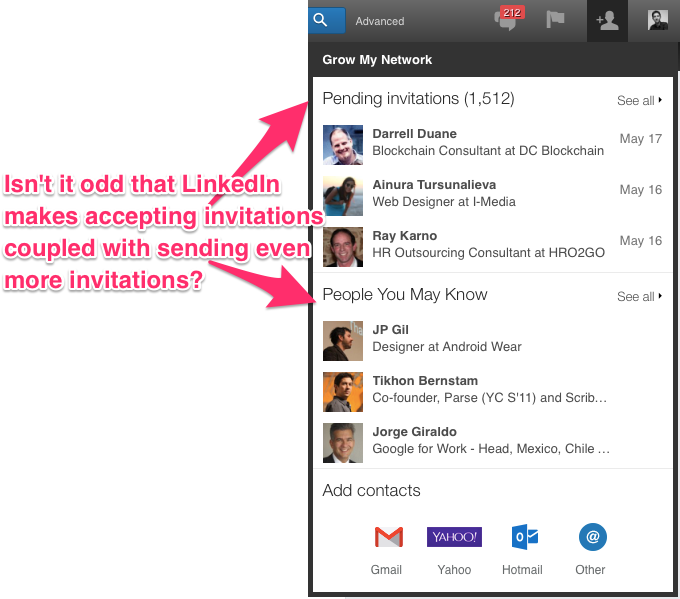

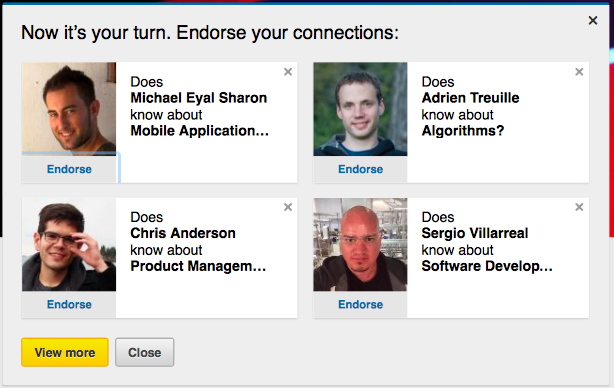

LinkedIn is the most obvious offender. LinkedIn wants to create as many mutual obligations between users as possible, because every time users reciprocate (by accepting a connection request, replying to a message, or endorsing a skill) they have to return to linkedin.com. That is how LinkedIn gets users to spend more time on its site or in its app.

Like Facebook, LinkedIn exploits an asymmetry in perception. When you receive an invitation to connect, you imagine that the person made a conscious choice to add you. In reality, they responded unconsciously to LinkedIn's list of suggested contacts. In other words, LinkedIn turns an unconscious impulse to add a person into a social obligation that millions of users feel compelled to repay, all for the benefit the network gets from the time people spend on it.

Isn't it strange that LinkedIn puts the confirmation of an accepted connection request in the same place as a batch of new invitations to connect?

Imagine millions of people interrupted in the middle of their day to reciprocate one another, all for the benefit of the companies that designed it this way.

Welcome to the world of social media.

“Now it's your turn. Endorse your connections' skills.” After you accept an endorsement, LinkedIn exploits your bias toward reciprocity and offers you four more contacts to endorse in return.

Imagine if technology companies took responsibility for minimizing mutual obligations. Or imagine an organization that represented the public interest, such as an industry consortium or an FDA (the US Food and Drug Administration) for technology, that could monitor whether technology companies abuse human vulnerabilities.

“LinkedIn is finally starting to pay off: it brought me two new followers on Twitter.”

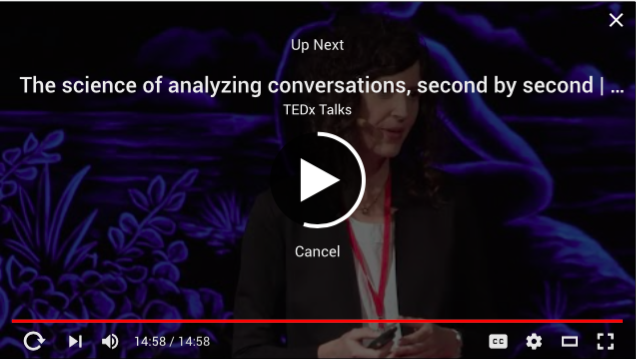

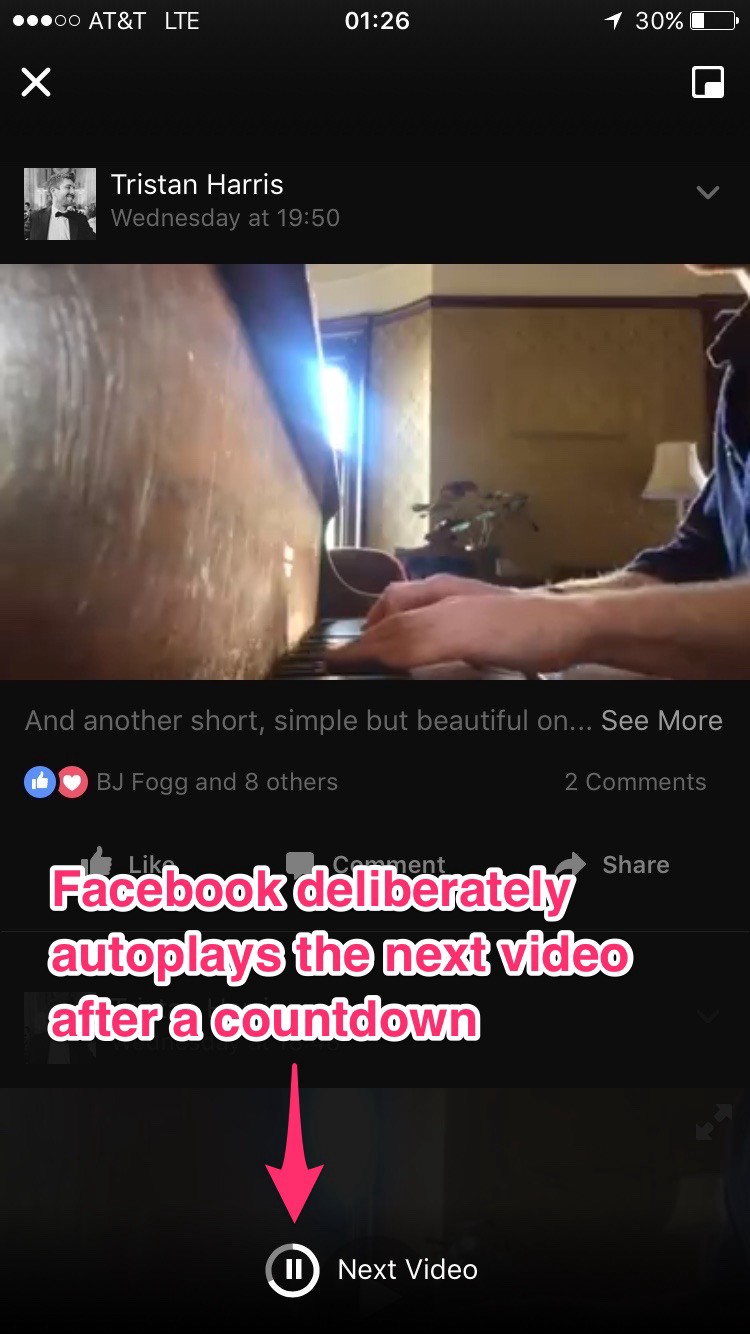

Trick #6. Bottomless bowls, endless feeds, and autoplay

YouTube automatically plays the next video after a countdown.

Another way to manipulate minds is to keep people consuming without stopping, even when they are already full.

How? Easy. Take an experience that is finite and bounded, and turn it into an endless, nonstop flow.

Brian Wansink, a professor at Cornell University, demonstrated this in a study showing that you can trick people into eating more soup by giving them a bottomless bowl that refills automatically as they eat. With a bottomless bowl, people consume 73% more calories than with a regular bowl, and they underestimate how much they ate by 140 calories.

Technology companies use the same principle. News feeds are built to load more content automatically, so that you keep scrolling and never have a reason to pause, reconsider, or leave.

For the same reason, video services such as Netflix, YouTube, and Facebook start the next video after a countdown instead of waiting for you to make a conscious choice. A huge share of the traffic on these sites comes from autoplaying the next video.

“Netflix automatically starts the next episode after a countdown.”

Facebook autoplays the next video after a countdown without being asked.

Technology companies often say, “we are just making it easier for users to watch the video they want to watch,” when they are really serving their own interests. And you cannot blame them, because “time spent” on the platform is the currency they compete for.

Instead, imagine if technology companies helped you consciously limit your viewing to improve the quality of your time.

Trick #7. Interruptions versus “respectful” notifications

Companies know that messages that interrupt a person immediately are more likely to get an instant response than messages delivered asynchronously, such as email.

Given free rein, Facebook Messenger (or WhatsApp, WeChat, or Snapchat) would design its messaging system to interrupt the recipient immediately (and show a chat window) instead of helping users respect each other's attention.

In other words, interruption is good for business.

It is also in companies' interest to heighten the sense of urgency and the need for social reciprocity. For example, Facebook automatically tells the sender when you have “seen” a message, instead of letting you avoid disclosing whether you read it (“now that you know I have seen the message, I feel even more obligated to reply”).

By contrast, Apple is more respectful toward its users and lets them turn off “read receipts.”

The problem is that maximizing interruptions in the name of business destroys millions of people's ability to focus and creates billions of needless interruptions every day. This is a huge problem that we need to solve through shared design standards (perhaps as part of the Time Well Spent project).

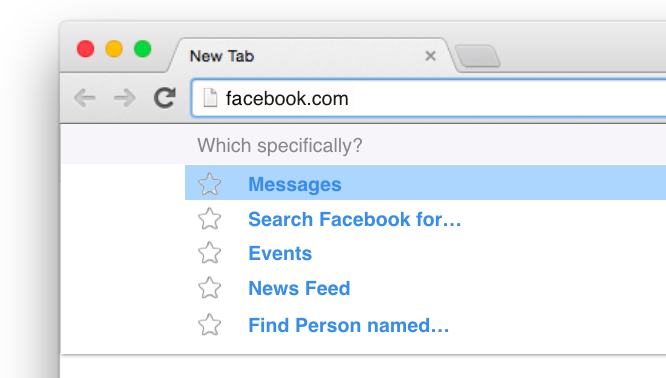

Trick #8. Bundle your needs with the user's needs

Another way to manipulate you is to take your reason for using the app (for example, to complete a task) and make it inseparable from the business's goal (maximizing how much you consume while you are there).

For example, the #1 and #2 reasons people go to the store around the corner are to buy medicine and milk. But grocery stores want to maximize how much people buy, so they put the milk and the medicine at the back of the store.

In other words, they make what customers want (milk and medicine) inseparable from what the business wants. If stores were really organized to serve people, they would put the most popular products at the front.

Technology companies design their websites the same way. For example, if you want to look up tonight's events on Facebook (your reason), the Facebook app will not let you go there directly. First you land on the news feed (the business's reason). This is deliberate. Facebook wants to convert every reason you have for using it into a reason to spend as much time as possible on Facebook consuming content.

Imagine if ...

- Twitter let you post a tweet without having to see your feed.

- Facebook let you look up today's events without having to see your feed.

- Facebook let you use Facebook Connect as a passport for creating accounts in third-party apps, without having to install the whole application with its news feed and notifications.

In a “time well spent” world, there is always a direct way to get what you want, regardless of what the business wants. Imagine a digital “Bill of Rights” that sets out design standards and requires products used by millions of people to let them get directly to what they want, without passing through deliberately placed distractions.

Imagine if browsers helped you get to what you need, especially on sites that deliberately steer you away from your goal for their own reasons.

Trick #9. Inconvenient choices

We are told that it is enough for businesses to “make choices available”:

- “If you do not like this product, you can always use another one.”

- “If you do not like it, you can always unsubscribe.”

- “If you are addicted to our app, you can always delete it from your phone.”

Businesses naturally want to make the choices they want you to make easy, and the choices they do not want you to make hard. Illusionists do the same: they make it easier for a spectator to pick what the illusionist wants and harder to pick what he does not want.

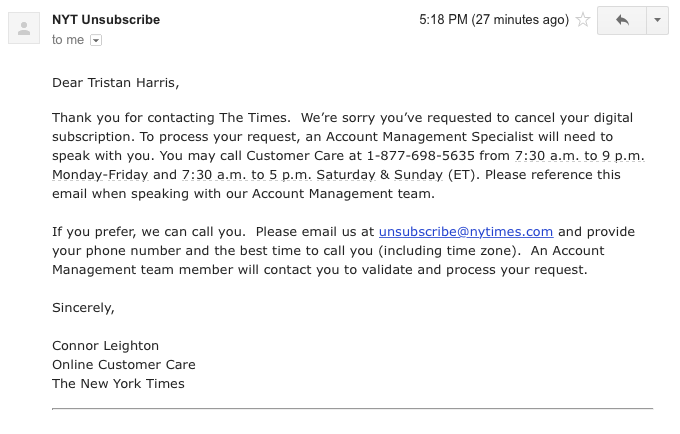

For example, NYTimes.com lets you “make a free choice” to cancel your subscription. But instead of simply cancelling when you press the button, it sends you an email telling you which number to call, and at what hours, to cancel.

NYTimes supposedly gives you the freedom to cancel your subscription.

Instead of viewing the world in terms of which choices are available, we should view it in terms of the effort required to make them. Imagine a world in which choices were labeled with how difficult they are to carry out (like a coefficient of friction), and in which an independent body, such as an industry consortium or a non-profit, assigned these difficulty labels and set standards for how easy navigation should be.

Trick #10. Forecasting errors and the foot-in-the-door strategy

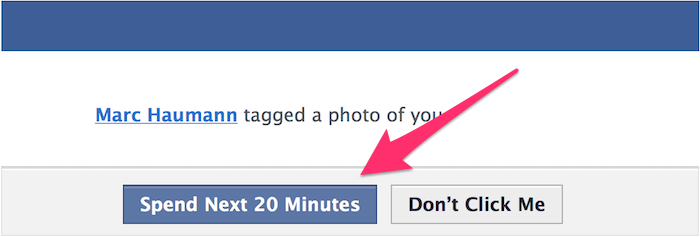

"Spend the next 20 minutes." Facebook offers a simple “view photo” choice. Would we click if we knew the real “price” of this choice?

The last trick is: applications exploit a person’s inability to predict the consequences of the first action.

People cannot intuitively predict the real cost of a click when they see an offer to click. Salespeople use “feet in the door” techniques, asking small, innocent questions to start a conversation (“just one click to see which tweet was retweeted”) and to continue (“why not stay for a while?”). All involving sites use this trick.

Imagine if web browsers and smartphones, the doors through which people make a choice, would truly look after people and help them predict the consequences of a click (based on real data about what real benefits and cost this click has ?)

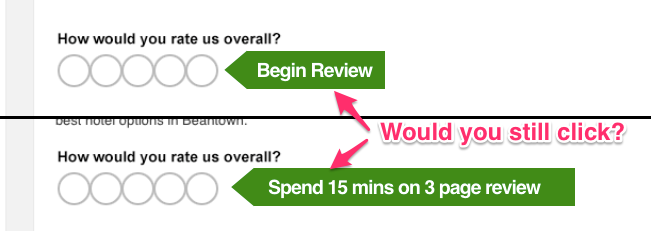

That is why I put an estimated reading time at the top of my posts. When you show users the “real cost” of a choice, you treat your audience with dignity and respect. In a “time well spent” world, choices are framed in terms of projected costs and benefits, so that people can make informed choices by default, without extra effort.
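An estimate like the one at the top of this post is easy to compute. Here is a minimal Python sketch; the reading speed of 200 words per minute and the function name are my own illustrative assumptions, not a standard.

```python
import math


def estimated_reading_minutes(text: str, words_per_minute: int = 200) -> int:
    """Estimate reading time in whole minutes, rounded up.

    The default of 200 words per minute is an assumed average reading speed.
    """
    word_count = len(text.split())
    # Round up, and never report less than one minute.
    return max(1, math.ceil(word_count / words_per_minute))
```

Under that assumption, a 2,400-word post would be labeled as a 12-minute read, which tells the reader the cost of the click before they commit to it.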


The author was a Product Philosopher at Google until 2016.

- Time Well Spent: http://timewellspent.io

- Acknowledgments: Joe Edelman, Aza Raskin, Raph D'Amico, Jonathan Harris, and Damon Horowitz.


Source: https://habr.com/ru/post/450068/
