Not just processing: How we made a distributed database from Kafka Streams, and what came of it

Hi, Habr!

A reminder: following our book about Kafka, we have published an equally interesting one about the Kafka Streams API library.

So far, the community is only beginning to explore the limits of this powerful tool. An article on the subject was published recently, and we would like to share its translation with you. Drawing on his own experience, the author explains how to turn Kafka Streams into a distributed data store. Enjoy reading!

The Apache Kafka Streams library is used in enterprises worldwide for distributed stream processing on top of Apache Kafka. One underrated aspect of this framework is that it lets you keep local state derived from stream processing.

In this article I will describe how our company put this capability to good use while developing a cloud application security product. Using Kafka Streams, we built shared-state microservices, each of which serves as a fault-tolerant, highly available source of reliable information about the state of objects in the system. For us this is a step forward in both reliability and ease of maintenance.

If you are looking for an alternative to relying on a single central database to maintain the formal state of your objects, read on.

Why we felt it was time to change our approach to shared state

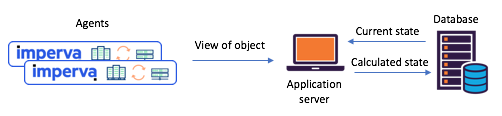

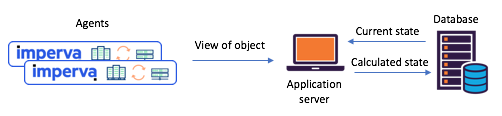

We needed to maintain the state of various objects based on agent reports (for example: was a site under attack?). Before moving to Kafka Streams, we often relied on a single central database (plus a service API) for state management. This approach has its drawbacks: in data-intensive situations, maintaining consistency and synchronization becomes a real challenge. The database can become a bottleneck, or run into race conditions and behave unpredictably.

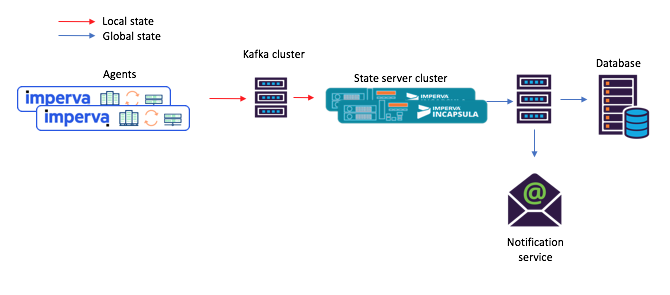

Figure 1: a typical shared-state scenario as we saw it before adopting Kafka and Kafka Streams: agents report their views through the API, and the updated state is calculated through the central database

Meet Kafka Streams - now it's easy to create shared state microservices

About a year ago we decided to thoroughly rework our shared-state scenarios to address these problems. Kafka Streams was our immediate first choice: it is known to be scalable, highly available, and fault-tolerant, with rich streaming functionality (transformations, including stateful ones). It was just what we needed, not to mention how mature and reliable Kafka's messaging system is.

Each stateful microservice we created was built on a Kafka Streams instance with a fairly simple topology. It consisted of 1) a source, 2) a processor with a persistent key-value store, and 3) a sink:

Figure 2: the default topology of our streaming instances for stateful microservices. Note that there is also a store that holds planning metadata.

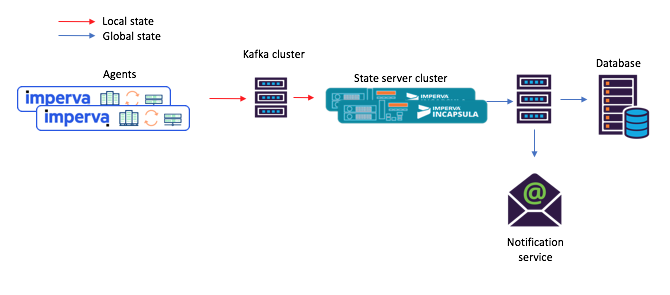

With this new approach, agents produce messages to the source topic, and consumers (say, a mail notification service) receive the calculated shared state through the sink (output topic).

Figure 3: an example task flow for a shared-state microservice scenario: 1) the agent produces a message to the source Kafka topic; 2) the shared-state microservice (using Kafka Streams) processes it and writes the calculated state to the sink Kafka topic; 3) consumers pick up the new state

Hey, this built-in key-value store is really useful!

As mentioned above, our shared-state topology contains a key-value store. We found several uses for it, two of which are described below.

Option #1: Use a key-value store for calculations

Our first key-value store held auxiliary data that we needed for calculations. For example, in some cases the shared state was determined by "majority vote." The store could hold the latest report from every agent on the status of an object. Then, on receiving a new report from some agent, we could save it, retrieve all the other agents' reports on the same object from the store, and repeat the calculation.
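The save-then-recalculate step described above can be sketched in plain Java. This is only an illustrative model, not the author's code: a `HashMap` stands in for the Kafka Streams key-value store, the class and method names are hypothetical, and the alphabetical tie-break is an arbitrary assumption made to keep the result deterministic.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of the "majority vote" calculation; no Kafka dependency. */
final class MajorityVoteSketch {
    // Hypothetical store layout: objectId -> (agentId -> latest reported status).
    private final Map<String, Map<String, String>> reportsByObject = new HashMap<>();

    /** Saves the agent's latest report, then recomputes the object's shared state. */
    String onReport(String objectId, String agentId, String status) {
        Map<String, String> reports =
                reportsByObject.computeIfAbsent(objectId, id -> new HashMap<>());
        reports.put(agentId, status); // a newer report replaces the agent's previous one

        // Count votes per status across the latest report of every agent.
        Map<String, Integer> votes = new HashMap<>();
        for (String reported : reports.values()) {
            votes.merge(reported, 1, Integer::sum);
        }

        // Pick the status with the most votes; ties go to the alphabetically
        // first status (an assumption of this sketch, not of the article).
        String winner = null;
        int best = 0;
        for (Map.Entry<String, Integer> entry : votes.entrySet()) {
            int count = entry.getValue();
            if (count > best || (count == best && entry.getKey().compareTo(winner) < 0)) {
                winner = entry.getKey();
                best = count;
            }
        }
        return winner;
    }

    public static void main(String[] args) {
        MajorityVoteSketch sketch = new MajorityVoteSketch();
        System.out.println(sketch.onReport("site-1", "agent-a", "ATTACKED"));
        System.out.println(sketch.onReport("site-1", "agent-b", "OK"));
        System.out.println(sketch.onReport("site-1", "agent-c", "OK"));
    }
}
```

Keeping only the latest report per agent means a repeated report from the same agent changes its vote instead of adding a second one.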

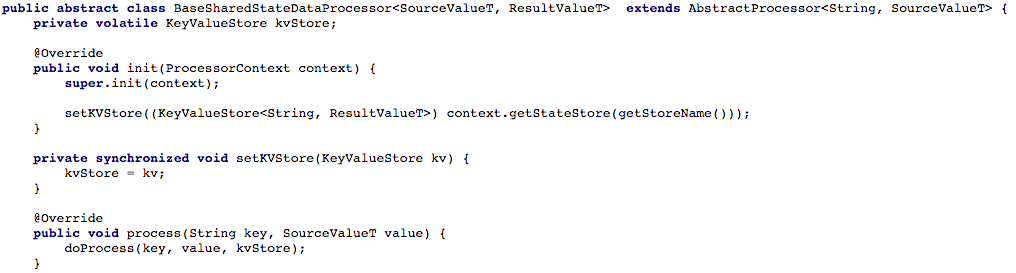

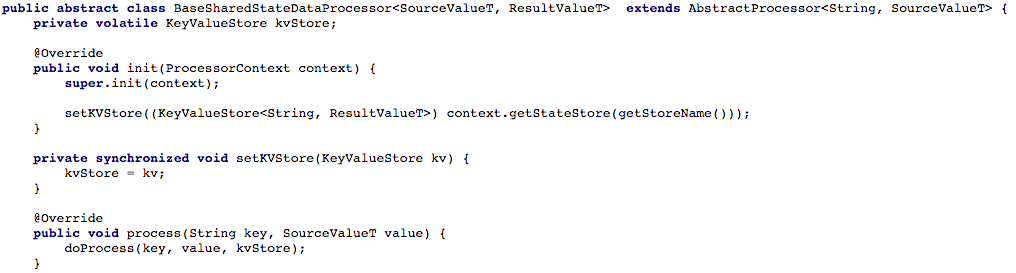

Figure 4 below shows how we exposed the key-value store to the processor's processing method, so that a new message could then be processed.

Figure 4: exposing the key-value store to the processor's processing method (after that, each shared-state scenario needs to implement the doProcess method)

Option #2: Create a CRUD API over Kafka Streams

With our basic task flow in place, we set out to write a RESTful CRUD API for our shared-state microservices. We wanted to be able to retrieve the state of some or all objects, as well as set or delete an object's state (useful for backend maintenance).

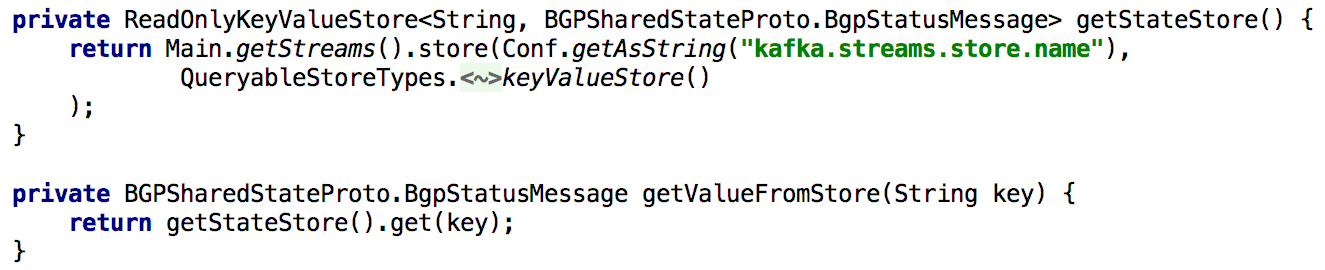

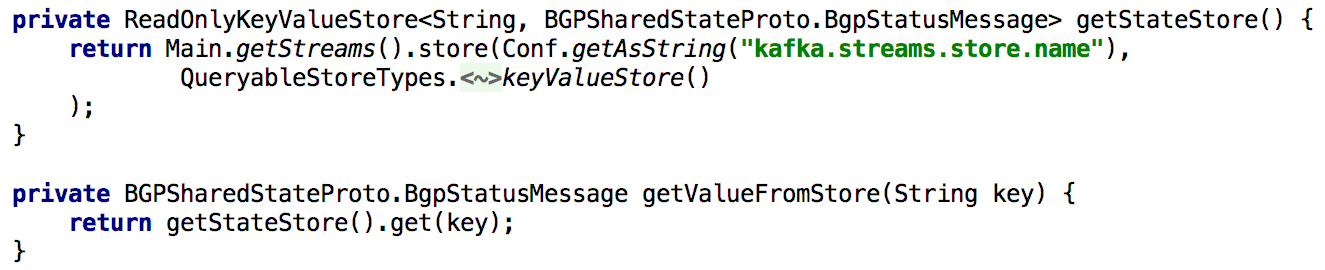

To support all the Get State APIs, whenever we recalculated a state during processing, we persisted it in the built-in key-value store. With that in place, implementing such an API on a single Kafka Streams instance becomes quite simple, as shown in Figure 5.

Figure 5: using the built-in key-value store to retrieve the precomputed state of an object

Updating the state of an object through the API is also easy to implement. In principle, all you need is to create a Kafka producer and use it to produce a record containing the new state. This guarantees that all messages generated through the API are processed in the same way as those received from other producers (e.g., agents).

Figure 6: You can set the state of an object using the Kafka producer.
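Separately from the author's listings, the read and write paths described above can be modeled in a few lines of plain Java. In this sketch, which makes no claim to match the original code, an in-memory queue stands in for the source Kafka topic and a `HashMap` for the key-value store; all names are hypothetical, and using a null state to request deletion is an assumption of the sketch.

```java
import java.util.ArrayDeque;
import java.util.HashMap;
import java.util.Map;
import java.util.Queue;

/** Plain-Java model: reads hit the store, writes go through the source topic. */
final class CrudOverTopicSketch {
    /** One record on the modeled source topic; a null state requests deletion. */
    static final class StateRecord {
        final String objectId;
        final String state;

        StateRecord(String objectId, String state) {
            this.objectId = objectId;
            this.state = state;
        }
    }

    private final Queue<StateRecord> sourceTopic = new ArrayDeque<>(); // stands in for the Kafka topic
    private final Map<String, String> stateStore = new HashMap<>();    // stands in for the key-value store

    // Writes never touch the store directly: they are appended to the source
    // topic, so they follow the same path as records produced by agents.
    void put(String objectId, String state) {
        sourceTopic.add(new StateRecord(objectId, state));
    }

    void delete(String objectId) {
        sourceTopic.add(new StateRecord(objectId, null));
    }

    /** Get State: returns the precomputed state, or null if none is stored. */
    String get(String objectId) {
        return stateStore.get(objectId);
    }

    /** Models the stream processor: drains pending records into the store. */
    void processPending() {
        StateRecord next;
        while ((next = sourceTopic.poll()) != null) {
            if (next.state == null) {
                stateStore.remove(next.objectId);
            } else {
                stateStore.put(next.objectId, next.state);
            }
        }
    }

    public static void main(String[] args) {
        CrudOverTopicSketch api = new CrudOverTopicSketch();
        api.put("site-1", "ATTACKED");
        System.out.println(api.get("site-1")); // the record has not been processed yet
        api.processPending();
        System.out.println(api.get("site-1"));
    }
}
```

One consequence of this design is visible in `main`: a write becomes readable only after the processor has consumed it, so reads that immediately follow a write may still see the previous state.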

Slight complication: Kafka has many partitions

Next, we wanted to distribute the processing load and improve availability by running a cluster of shared-state microservices for each scenario. Setup was easy: once we configured all instances to use the same application ID (and the same bootstrap servers), almost everything else happened automatically. We also specified that each source topic would consist of several partitions, so that each instance could be assigned a subset of them.

It is also worth mentioning that backing up the state store is routine here, so that, for example, during recovery after a failure the copy can be transferred to another instance. For each state store, Kafka Streams creates a replicated changelog topic (which tracks local updates). Kafka thus continuously backs up the state store. If a Kafka Streams instance fails, the state store can be quickly restored on another instance, to which the corresponding partitions are reassigned. Our tests showed that this takes seconds, even with millions of records in the store.
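The idea of rebuilding a store from its changelog can be illustrated with a small plain-Java model. This is a sketch under stated assumptions, not Kafka Streams code: a shared `List` stands in for the replicated changelog topic, the names are hypothetical, and a null value marks a deletion, similar to a Kafka tombstone record.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Plain-Java model of a state store backed by a changelog. */
final class ChangelogRestoreSketch {
    /** One changelog entry; a null value marks a deletion. */
    static final class Change {
        final String key;
        final String value;

        Change(String key, String value) {
            this.key = key;
            this.value = value;
        }
    }

    private final Map<String, String> store = new HashMap<>();
    private final List<Change> changelog; // outlives the instance, like the Kafka topic

    ChangelogRestoreSketch(List<Change> changelog) {
        this.changelog = changelog;
    }

    // Every local update is also appended to the changelog.
    void put(String key, String value) {
        store.put(key, value);
        changelog.add(new Change(key, value));
    }

    void delete(String key) {
        store.remove(key);
        changelog.add(new Change(key, null));
    }

    String get(String key) {
        return store.get(key);
    }

    /** Rebuilds a fresh instance's store by replaying the changelog in order. */
    static ChangelogRestoreSketch restore(List<Change> changelog) {
        ChangelogRestoreSketch fresh = new ChangelogRestoreSketch(changelog);
        for (Change change : changelog) {
            if (change.value == null) {
                fresh.store.remove(change.key);
            } else {
                fresh.store.put(change.key, change.value);
            }
        }
        return fresh;
    }

    public static void main(String[] args) {
        List<Change> topic = new ArrayList<>();
        ChangelogRestoreSketch failed = new ChangelogRestoreSketch(topic);
        failed.put("site-1", "OK");
        failed.put("site-1", "ATTACKED");
        // The first instance is lost here; only the changelog survives.
        ChangelogRestoreSketch replacement = ChangelogRestoreSketch.restore(topic);
        System.out.println(replacement.get("site-1"));
    }
}
```

Because entries are replayed in order, the restored store ends up with the last value written for each key, which is all a replacement instance needs in order to continue.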

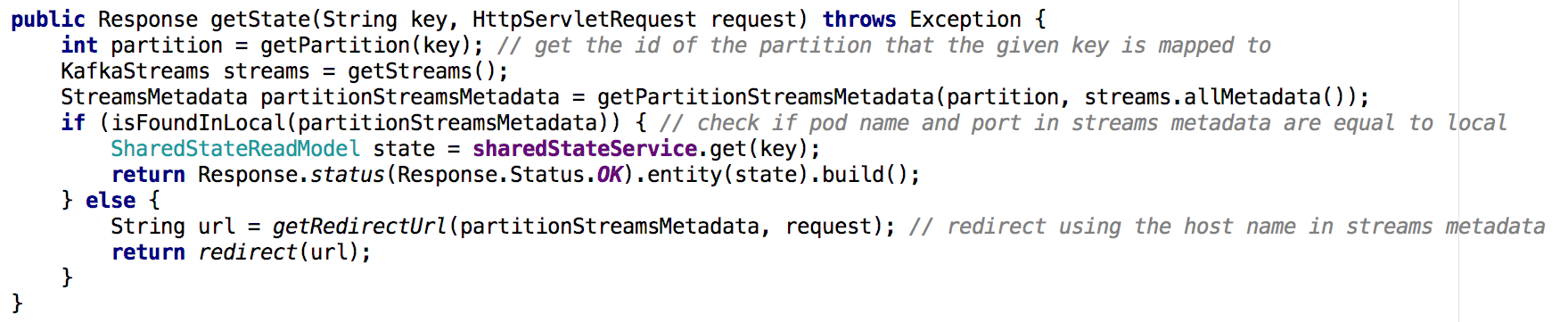

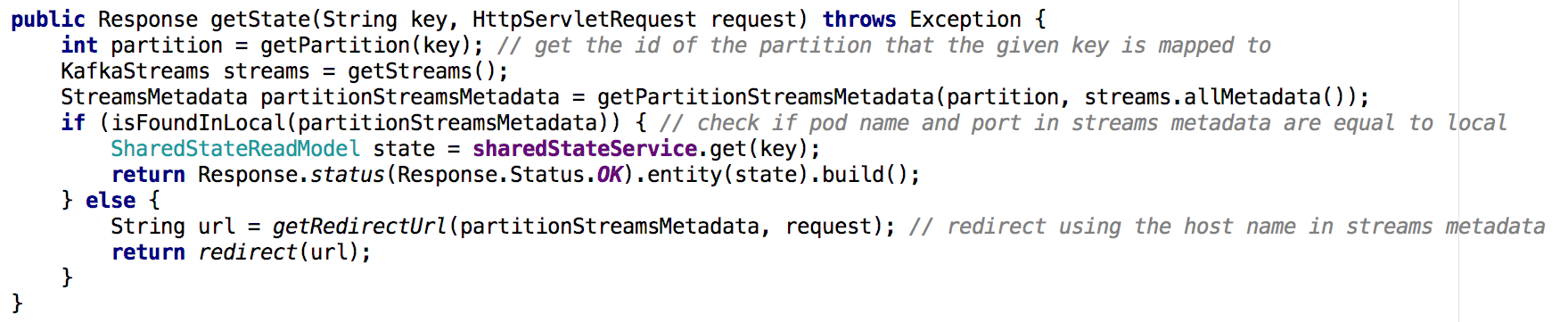

When moving from a single shared-state microservice to a cluster of microservices, implementing the Get State API is no longer trivial. Each microservice's state store now holds only part of the overall picture (the objects whose keys were mapped to a specific partition). We had to determine which instance held the state of the object we needed, and we did this based on the streams metadata, as shown in Figure 7.

Figure 7: using the streams metadata, we determine which instance to query for the state of the desired object; a similar approach was used for the GET ALL API
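To make the routing decision concrete, here is a plain-Java sketch of it; it is not the author's listing. The partition-to-instance map is hard-coded where a real service would obtain it from the streams metadata, all names and host addresses are hypothetical, and `String.hashCode()` is only a stand-in for Kafka's default partitioner, which hashes the serialized key with murmur2.

```java
import java.util.HashMap;
import java.util.Map;

/** Plain-Java model of routing a Get State request to the owning instance. */
final class StateRoutingSketch {
    private final String self;                           // this instance's host:port
    private final int numPartitions;
    private final Map<Integer, String> ownerByPartition; // assumed to come from streams metadata
    private final Map<String, String> localStore = new HashMap<>();

    StateRoutingSketch(String self, int numPartitions, Map<Integer, String> ownerByPartition) {
        this.self = self;
        this.numPartitions = numPartitions;
        this.ownerByPartition = ownerByPartition;
    }

    /** Stand-in partitioner; Kafka's default uses murmur2 on the key bytes. */
    int partitionFor(String key) {
        return Math.floorMod(key.hashCode(), numPartitions);
    }

    String ownerOf(String key) {
        return ownerByPartition.get(partitionFor(key));
    }

    void putLocal(String key, String state) {
        localStore.put(key, state);
    }

    /** Serves the key locally if this instance owns it, otherwise names the owner. */
    String getState(String key) {
        String owner = ownerOf(key);
        if (self.equals(owner)) {
            return "local:" + localStore.get(key);
        }
        return "forward-to:" + owner; // a real service would issue an HTTP call here
    }

    public static void main(String[] args) {
        Map<Integer, String> owners = new HashMap<>();
        owners.put(0, "host-a:8080");
        owners.put(1, "host-a:8080");
        owners.put(2, "host-b:8080");
        owners.put(3, "host-b:8080");

        StateRoutingSketch node = new StateRoutingSketch("host-a:8080", 4, owners);
        node.putLocal("a", "OK");
        System.out.println(node.getState("a"));
        System.out.println(node.getState("b"));
    }
}
```

A GET ALL request could follow the same pattern by asking every distinct owner in the map for its local entries and merging the results.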

Main conclusions


State stores in Kafka Streams can serve as a de facto distributed database:

- It is continuously replicated to Kafka.

- A CRUD API is easy to build on top of it.

- Handling multiple partitions is a bit more complicated.

It is also possible to add one or more state stores to the stream topology for storing auxiliary data. This option can be used for:

- Long-term storage of data needed for calculations during stream processing

- Long-term storage of data that may be useful during the next initialization of the stream instance

- And much more

Thanks to these and other strengths, Kafka Streams is well suited to maintaining global state in a distributed system like ours. It has proved very reliable in production (we have lost hardly any messages since deploying it), and we are sure its capabilities do not end there!

Source: https://habr.com/ru/post/449928/
