[Translation] Envoy threading model

Hi, Habr! I present to you the translation of the article "Envoy threading model" by Matt Klein.

I found this article quite interesting. Envoy is most often used as part of Istio or simply as a Kubernetes ingress controller, so most people do not interact with it as directly as they do with, for example, a typical Nginx or HAProxy setup. However, if something breaks, it helps to understand how it works internally. I tried to translate as much of the text as possible into Russian, including technical terms; for those who find that painful to read, I left the originals in brackets. Read on below the cut.

Low-level technical documentation on the Envoy codebase is currently rather sparse. To fix this, I plan to write a series of blog posts about various Envoy subsystems. Since this is the first post, please let me know what you think and what you would like to see covered in future posts.

One of the most common technical questions I get about Envoy is a request for a low-level description of its threading model. In this post, I describe how Envoy maps connections to threads, and the Thread Local Storage (TLS) system that it uses internally to make the code highly parallel and high-performance.


Threading overview

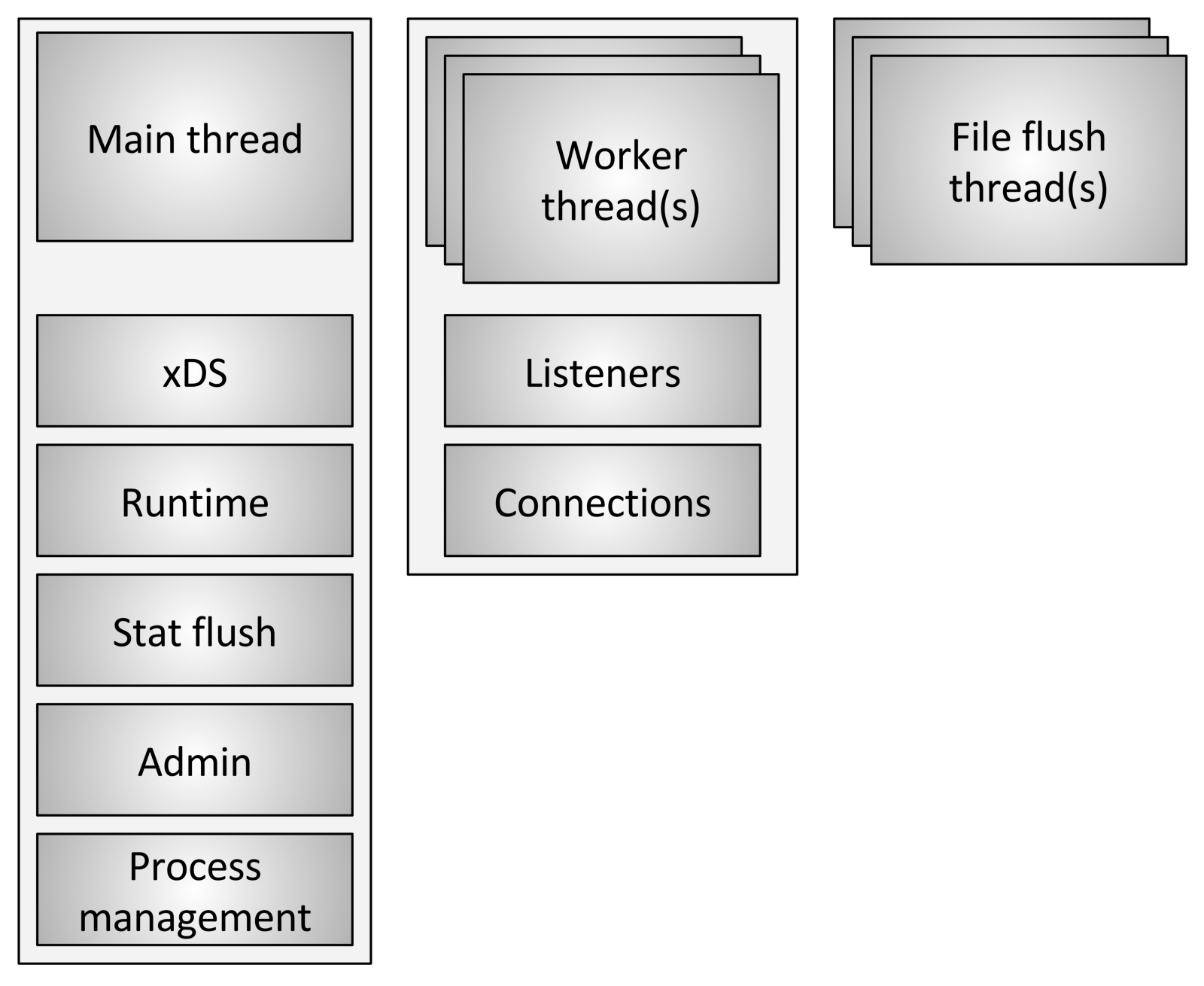

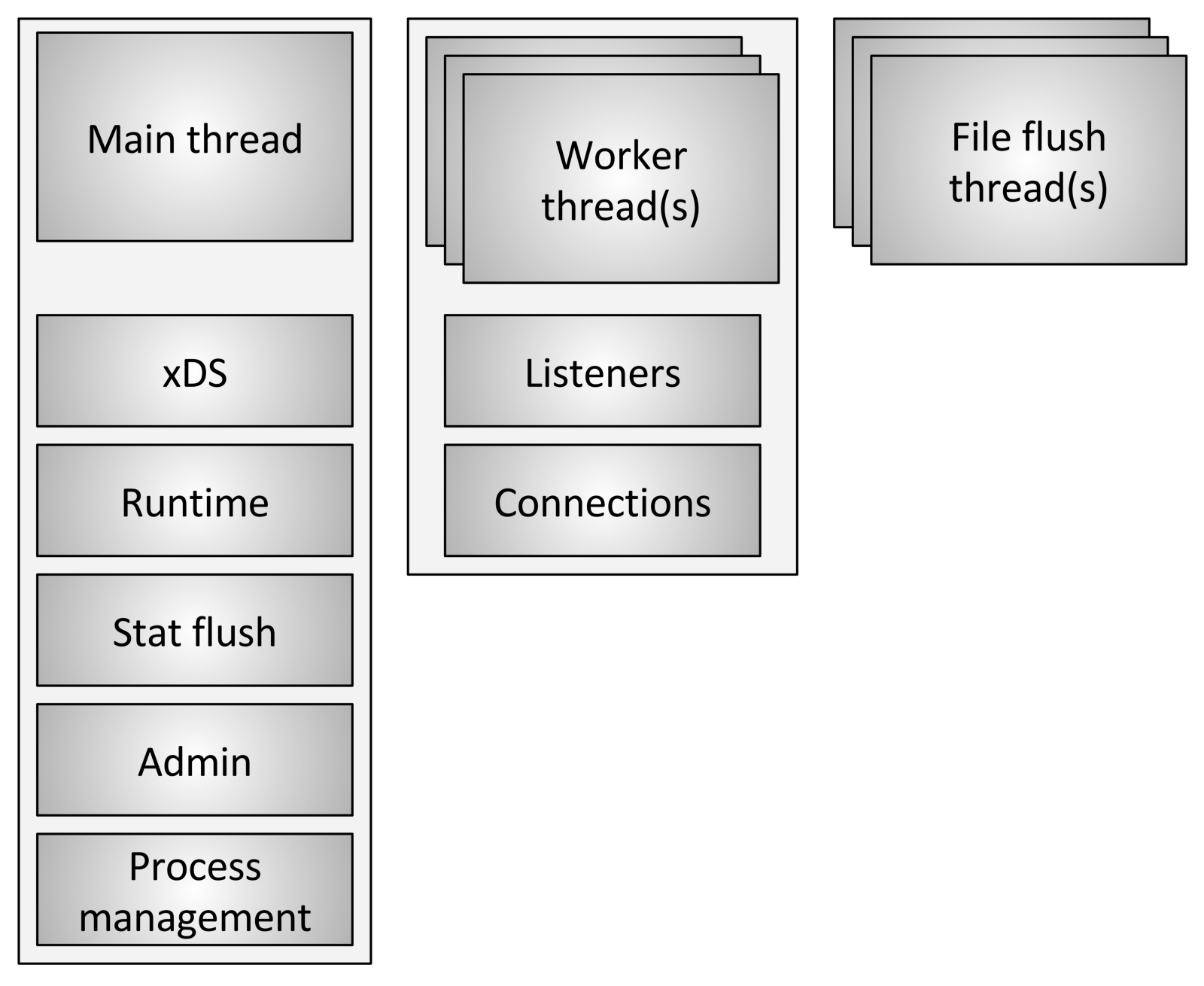

Envoy uses three different types of threads:

- Main: This thread owns server startup and shutdown, all xDS (discovery service) API handling, including DNS, health checking, and general cluster management, as well as runtime, stats flushing, admin, and general process management (Linux signals, hot restart, etc.). Everything that happens on this thread is asynchronous and non-blocking. In general, the main thread coordinates all critical process functionality that does not require a large amount of CPU. This allows most management code to be written as if it were single-threaded.

- Worker: By default, Envoy creates a worker thread for each hardware thread in the system; this can be controlled with the `--concurrency` option. Each worker thread runs a non-blocking event loop that is responsible for listening on every listener (at the time of writing, July 29, 2017, there is no listener sharding), accepting new connections, instantiating a filter stack for each connection, and processing all I/O for the lifetime of the connection. Again, this allows most connection-handling code to be written as if it were single-threaded.

- File flusher: Every file that Envoy writes, mostly access logs, currently has an independent blocking flush thread. This is because writing to files cached by the file system can sometimes block even when using `O_NONBLOCK` (sigh). When worker threads need to write to a file, the data is actually moved into an in-memory buffer, from which it is eventually flushed by the file flush thread. This is one area of the code in which technically all worker threads can block on the same lock while trying to fill the memory buffer.
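The file flusher arrangement can be sketched roughly as follows. This is a minimal illustration under stated assumptions, not Envoy's implementation: the class name `BufferedLog` and the helper `demoBufferedLog` are hypothetical, and a `std::ostream` stands in for the real file. Workers only append to an in-memory buffer under a short lock, while a dedicated thread performs the potentially blocking write.

```cpp
#include <cassert>
#include <condition_variable>
#include <mutex>
#include <ostream>
#include <sstream>
#include <string>
#include <thread>

// Hypothetical illustration of the flush-thread pattern; not Envoy's code.
class BufferedLog {
public:
  explicit BufferedLog(std::ostream& sink)
      : sink_(sink), flusher_([this] { flushLoop(); }) {}

  ~BufferedLog() {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      done_ = true;
    }
    wakeup_.notify_one();
    flusher_.join();  // the flusher drains whatever is still buffered
  }

  // Called by worker threads. Only an in-memory append happens under the lock.
  void write(const std::string& line) {
    {
      std::lock_guard<std::mutex> lock(mutex_);
      assert(!done_);  // writing after shutdown would be a bug
      buffer_ += line;
      buffer_ += '\n';
    }
    wakeup_.notify_one();
  }

private:
  void flushLoop() {
    std::unique_lock<std::mutex> lock(mutex_);
    while (true) {
      wakeup_.wait(lock, [this] { return done_ || !buffer_.empty(); });
      std::string pending;
      pending.swap(buffer_);
      const bool exiting = done_;
      lock.unlock();
      sink_ << pending;  // the potentially blocking write runs without the lock
      sink_.flush();
      lock.lock();
      if (exiting && buffer_.empty()) return;
    }
  }

  std::ostream& sink_;
  std::mutex mutex_;
  std::condition_variable wakeup_;
  std::string buffer_;
  bool done_ = false;
  std::thread flusher_;  // declared last so it starts after the other members exist
};

// Two "workers" log concurrently; returns everything the flusher wrote.
std::string demoBufferedLog() {
  std::ostringstream sink;
  {
    BufferedLog log(sink);
    std::thread a([&log] { for (int i = 0; i < 100; ++i) log.write("worker-a"); });
    std::thread b([&log] { for (int i = 0; i < 100; ++i) log.write("worker-b"); });
    a.join();
    b.join();
  }  // the destructor joins the flusher, so the sink is complete here
  return sink.str();
}
```

The only shared lock is the one guarding `buffer_`, which is exactly the lock that all workers can contend on in this design.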

Connection handling

As discussed briefly above, all worker threads listen on all listeners without any sharding. Thus, the kernel is relied on to dispatch accepted sockets to worker threads intelligently. Modern kernels are generally very good at this: they use features such as I/O priority boosting to try to fill one thread with work before using other threads that are listening on the same socket, and they avoid taking a single spinlock to process each accept.

Once a connection is accepted on a worker thread, it never leaves that thread. All further handling of the connection happens entirely within the worker thread, including any forwarding behavior.

This has several important consequences:

- All connection pools in Envoy are per worker thread. Thus, although an HTTP/2 connection pool makes only one connection to each upstream host at a time, with four worker threads there will be four HTTP/2 connections per upstream host at steady state.

- The reason Envoy works this way is that by keeping everything within a single worker thread, almost all of the code can be written without locks and as if it were single-threaded. This design makes most code easier to write and scales incredibly well to an almost unlimited number of worker threads.

- One major takeaway, however, is that from the standpoint of memory and connection pool efficiency, it is actually very important to tune the `--concurrency` option. Having more worker threads than necessary wastes memory, creates more idle connections, and lowers the connection pool hit rate. At Lyft, our sidecar Envoys run with very low concurrency so that their performance roughly matches the services they sit next to. We run only our edge Envoys at maximum concurrency.
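To make the connection arithmetic concrete, here is a small simulation. It is a sketch under simple assumptions: `WorkerPool` and `steadyStateConnections` are hypothetical names, every worker sends traffic to every upstream host, and a pool keeps at most one HTTP/2 connection per host.

```cpp
#include <cassert>
#include <cstddef>
#include <set>
#include <string>
#include <vector>

// Hypothetical per-worker HTTP/2 pool: at most one connection per upstream host.
struct WorkerPool {
  std::set<std::string> connected_hosts;
  void request(const std::string& host) { connected_hosts.insert(host); }
};

// Simulates every worker sending repeated requests to every host and returns
// the total number of upstream connections held across all workers.
std::size_t steadyStateConnections(std::size_t worker_count,
                                   const std::vector<std::string>& hosts) {
  std::vector<WorkerPool> workers(worker_count);
  for (auto& worker : workers) {
    for (int round = 0; round < 3; ++round) {  // later rounds reuse connections
      for (const auto& host : hosts) worker.request(host);
    }
    // A pool never holds more than one connection per host.
    assert(worker.connected_hosts.size() <= hosts.size());
  }
  std::size_t total = 0;
  for (const auto& worker : workers) total += worker.connected_hosts.size();
  return total;
}
```

With four workers and one upstream host this yields four connections, matching the example above; with three hosts it yields twelve, which is why an oversized `--concurrency` value multiplies idle connections.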

What non-blocking means

The term “non-blocking” has been used several times so far when discussing how the main and worker threads operate. All of the code is written on the assumption that nothing ever blocks. However, this is not entirely true (what is ever entirely true?).

Envoy does use a few process-wide locks:

- As already mentioned, when access logs are written, all worker threads acquire the same lock before filling the in-memory log buffer. The lock hold time should be very short, but the lock can become contended at high concurrency and high throughput.

- Envoy uses a very sophisticated system for handling thread-local statistics. This will be the topic of a separate post. However, I will briefly mention that handling thread-local statistics sometimes requires acquiring a lock on the central “stat store”. This lock should never be highly contended.

- The main thread periodically needs to coordinate with all worker threads. This is done by “posting” from the main thread to the worker threads, and sometimes from the worker threads back to the main thread. Posting requires taking a lock so that the posted message can be placed in a queue for later delivery. These locks should never be highly contended, but technically they can still block.

- When Envoy logs to standard error, it acquires a process-wide lock. In general, Envoy's local logging is assumed to be terrible for performance, so not much thought has gone into improving it.

- There are a few other miscellaneous locks, but none of them are on performance-critical paths, and they should never be contended.
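The posting mechanism from the list above might look roughly like this. It is a hedged sketch: the `Dispatcher` class and its `post` and `runPosted` methods are hypothetical names for illustration, not Envoy's actual dispatcher interface. The point is that the lock is held only long enough to enqueue or to swap out the queue, never while callbacks run.

```cpp
#include <cassert>
#include <cstddef>
#include <deque>
#include <functional>
#include <mutex>
#include <utility>

// Hypothetical dispatcher owned by one thread; other threads post work to it.
class Dispatcher {
public:
  // May be called from any thread. The lock is held only to enqueue.
  void post(std::function<void()> callback) {
    assert(callback != nullptr);
    std::lock_guard<std::mutex> lock(mutex_);
    queue_.push_back(std::move(callback));
  }

  // Called by the owning thread between I/O events.
  // Returns the number of callbacks that were run.
  std::size_t runPosted() {
    std::deque<std::function<void()>> ready;
    {
      std::lock_guard<std::mutex> lock(mutex_);
      ready.swap(queue_);
    }
    for (auto& callback : ready) callback();  // runs without holding the lock
    return ready.size();
  }

private:
  std::mutex mutex_;
  std::deque<std::function<void()>> queue_;
};
```

Because the owning thread swaps the whole queue out in one step, a poster can block only for the duration of a push or a swap, which is why such a lock is rarely contended in practice.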

Thread local storage

Because of the way Envoy separates the responsibilities of the main thread from those of the worker threads, complex processing must be possible on the main thread, with its results then made available to each worker thread in a highly concurrent way. This section describes Envoy's Thread Local Storage (TLS) system at a high level. In the next section, I will describe how it is used for cluster management.

As already described, the main thread handles essentially all management and control plane functionality in the Envoy process. (The term “control plane” is a bit overloaded here, but considered within the Envoy process itself and compared with the forwarding that the worker threads do, it seems appropriate.) A common pattern is for the main thread to do some work and then need to update each worker thread with the result of that work, without the worker thread having to acquire a lock on every access.

Envoy's TLS system works as follows:

- Code running on the main thread can allocate a process-wide TLS slot. Although abstracted, in practice this is an index into a vector, which provides O(1) access.

- The main thread can set arbitrary data into its slot. When it does, the data is posted to each worker thread as a normal event loop event.

- Worker threads can read from their TLS slot and retrieve whatever thread-local data is available there.

Although very simple, this is an incredibly powerful paradigm that closely resembles the RCU (Read-Copy-Update) locking concept. Essentially, worker threads never see any change to the data in their TLS slots while they are doing work. Changes happen only during the quiescent period between work events.

Envoy uses this in two different ways:

- By storing different data on each worker thread, which is accessed without any locking.

- By storing on each worker thread a shared pointer to read-only global data. Each worker thread thus holds a reference count on the data that cannot be decremented while work is being done. The old data is destroyed only when all worker threads have quiesced and loaded the new shared data. This is identical to RCU.
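The slot mechanism and its RCU-like lifetime can be sketched as follows. This is a simplified, single-threaded model so that the ordering is easy to follow: `Worker`, `SlotAllocator`, and `Data` are hypothetical names, the `posted` vector stands in for a worker's event loop queue, and a real implementation would run each worker on its own thread and guard posting with a lock.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <memory>
#include <string>
#include <vector>

// Read-only shared data; a string is used purely for illustration.
using Data = std::shared_ptr<const std::string>;

struct Worker {
  std::vector<Data> slots;                    // this worker's thread-local slots
  std::vector<std::function<void()>> posted;  // stand-in for the event loop queue

  // The quiescent period: runs between work events.
  void runPosted() {
    std::vector<std::function<void()>> ready;
    ready.swap(posted);
    for (auto& callback : ready) callback();
  }

  // Lock-free read: a slot is just an index into a vector.
  const Data& read(std::size_t slot) const {
    assert(slot < slots.size());
    return slots[slot];
  }
};

// Main-thread side. The worker list must not be resized while in use.
class SlotAllocator {
public:
  explicit SlotAllocator(std::vector<Worker>& workers) : workers_(workers) {}

  std::size_t allocateSlot() {
    for (auto& worker : workers_) worker.slots.emplace_back();
    return next_slot_++;
  }

  // Posts the new value to every worker; each applies it in its own loop.
  void set(std::size_t slot, Data data) {
    for (auto& worker : workers_) {
      Worker* target = &worker;
      worker.posted.push_back([target, slot, data] { target->slots[slot] = data; });
    }
  }

private:
  std::vector<Worker>& workers_;
  std::size_t next_slot_ = 0;
};
```

In this model the old value is destroyed only after the last worker has run its posted update, because each worker's slot holds its own `shared_ptr` reference until then.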

Cluster update threading

In this section, I will describe how TLS is used for cluster management. Cluster management includes xDS API and/or DNS handling, as well as health checking.

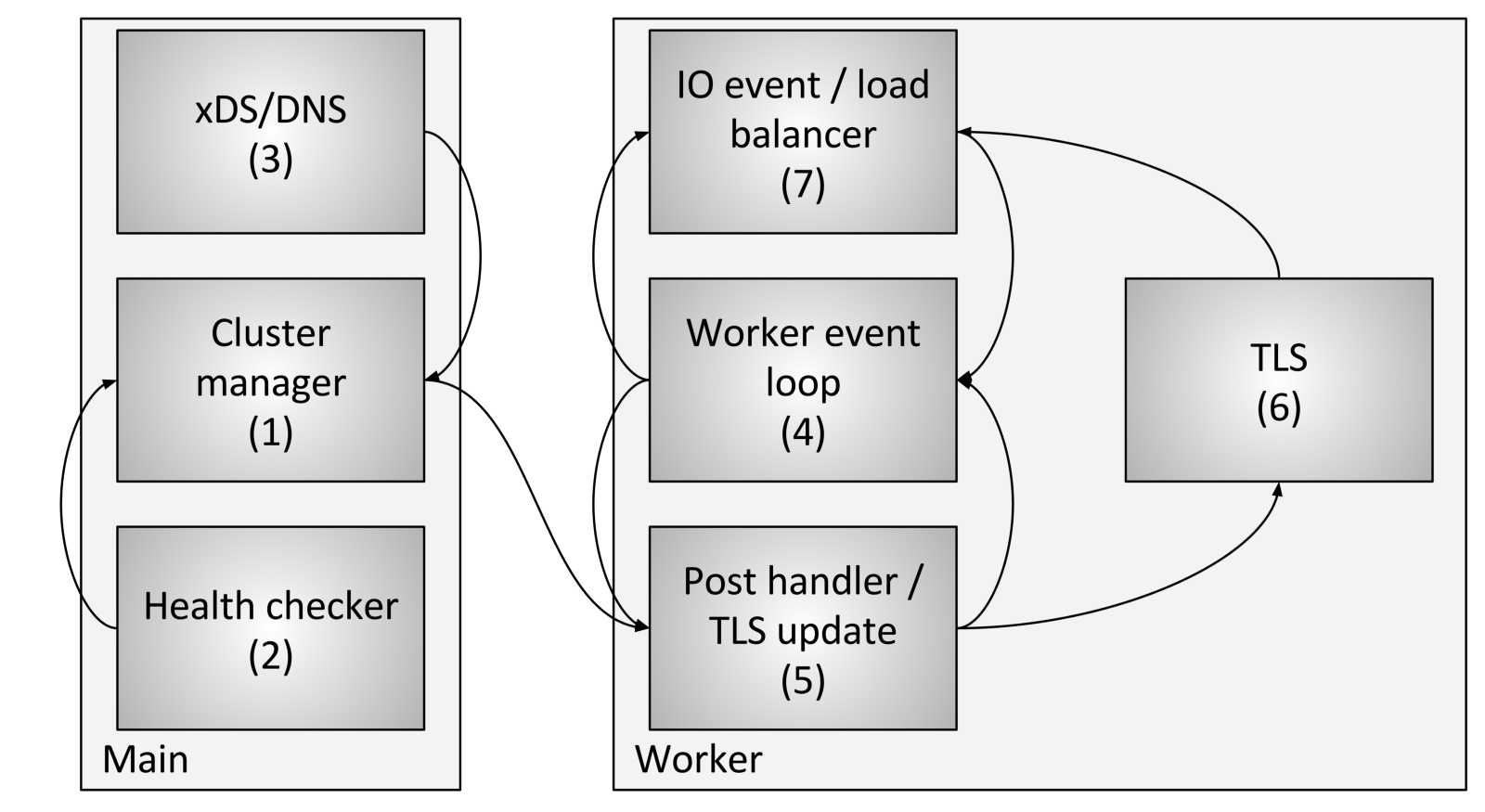

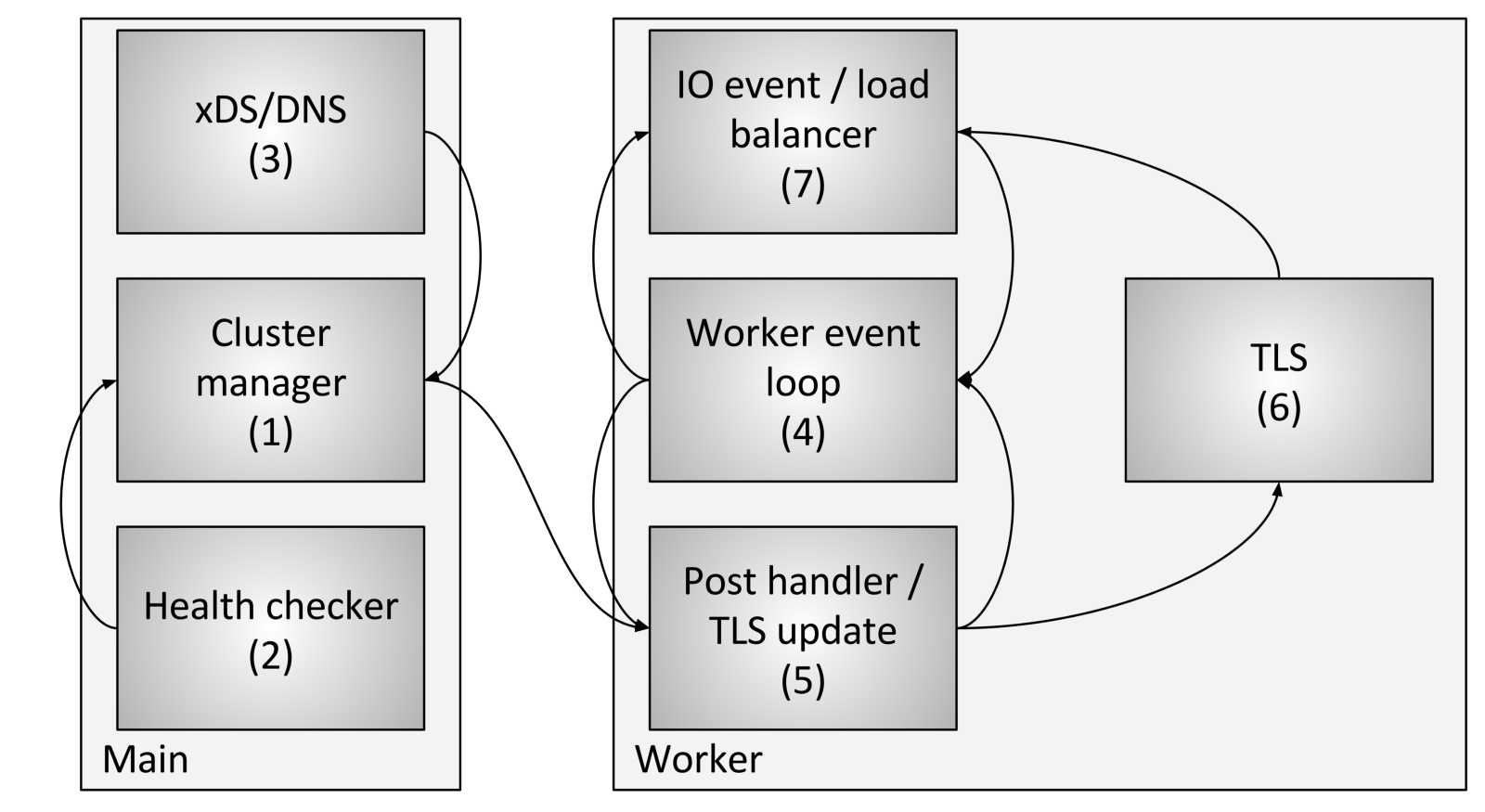

The overall cluster update flow involves the following components and steps:

- The cluster manager is the component within Envoy that manages all known upstream clusters, the CDS (Cluster Discovery Service) API, the SDS (Service Discovery Service) and EDS (Endpoint Discovery Service) APIs, and active (out-of-band) health checking. It is responsible for creating an eventually consistent view of each upstream cluster, which includes the discovered hosts as well as their health status.

- The health checker performs active health checking and reports health state changes back to the cluster manager.

- CDS, SDS, EDS, and DNS are used to determine cluster membership. State changes are reported back to the cluster manager.

- Each worker thread continuously runs an event loop.

- When the cluster manager determines that the state of a cluster has changed, it creates a new read-only snapshot of the cluster's state and posts it to each worker thread.

- During the next quiescent period, the worker thread updates the snapshot in its allotted TLS slot.

- During an I/O event that needs to determine a host to load balance to, the load balancer queries the TLS slot for host information. No locks are acquired to do this. Note also that TLS can fire events on update, so that load balancers and other components can recompute caches, data structures, etc. This is beyond the scope of this post, but it is used in various places in the code.
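The steps above can be sketched in a few lines. This is an illustration only: `ClusterSnapshot`, `ClusterManager`, and `WorkerLoadBalancer` here are hypothetical, much-simplified stand-ins rather than Envoy's classes, and round robin is chosen arbitrarily as the balancing policy.

```cpp
#include <cassert>
#include <cstddef>
#include <map>
#include <memory>
#include <string>
#include <utility>
#include <vector>

// Immutable view of a cluster as seen by the workers.
struct ClusterSnapshot {
  std::vector<std::string> healthy_hosts;
};
using SnapshotPtr = std::shared_ptr<const ClusterSnapshot>;

// Main-thread side: tracks membership and health, then builds snapshots.
class ClusterManager {
public:
  void setHost(const std::string& address, bool healthy) {
    hosts_[address] = healthy;
  }

  SnapshotPtr makeSnapshot() const {
    auto snapshot = std::make_shared<ClusterSnapshot>();
    for (const auto& entry : hosts_) {
      if (entry.second) snapshot->healthy_hosts.push_back(entry.first);
    }
    return snapshot;  // converts to a pointer-to-const: read-only from here on
  }

private:
  std::map<std::string, bool> hosts_;
};

// Worker-thread side: snapshot_ plays the role of the TLS slot contents.
class WorkerLoadBalancer {
public:
  // Runs in the quiescent period when a posted snapshot arrives.
  void onSnapshot(SnapshotPtr snapshot) {
    snapshot_ = std::move(snapshot);
    next_ = 0;
  }

  // Runs during request processing; takes no locks.
  const std::string& chooseHost() {
    assert(snapshot_ != nullptr && !snapshot_->healthy_hosts.empty());
    const auto& hosts = snapshot_->healthy_hosts;
    return hosts[next_++ % hosts.size()];
  }

private:
  SnapshotPtr snapshot_;
  std::size_t next_ = 0;
};
```

Note the eventually consistent behavior: after the manager marks a host unhealthy, a worker keeps balancing across its old snapshot until the new one is delivered to it.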

Using the procedure above, Envoy can process every request without taking any locks (other than those described earlier). Apart from the complexity of the TLS code itself, most code does not need to understand how threading works and can be written as if it were single-threaded. This makes most code easier to write, in addition to yielding excellent performance.

Other subsystems that make use of TLS

TLS and RCU are used extensively within Envoy.

Other examples include:

- Runtime feature flag override lookup: The current map of feature flag overrides is computed on the main thread. A read-only snapshot is then provided to each worker thread using RCU semantics.

- Route table swapping: For route tables provided by RDS (Route Discovery Service), the route tables are instantiated on the main thread. A read-only snapshot is then provided to each worker thread using RCU semantics. This makes route table swaps effectively atomic.

- HTTP date header caching: As it turns out, computing the HTTP date header on every request (at ~25K+ RPS per core) is quite expensive. Envoy centrally computes the date header about every half second and provides it to each worker thread via TLS and RCU.
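As an illustration of the header caching case, the sketch below takes the cached value to be the HTTP `Date` header and recomputes it at most once per half second. `formatHttpDate`, `DateHeaderCache`, and `kRefreshInterval` are hypothetical names; the clock values are passed in explicitly so that the behavior is deterministic, and delivery of the snapshot to the workers is left out.

```cpp
#include <cassert>
#include <chrono>
#include <cstddef>
#include <ctime>
#include <memory>
#include <string>

// Formats a timestamp in the fixed HTTP date format, always in GMT.
std::string formatHttpDate(std::time_t now) {
  const std::tm* utc = std::gmtime(&now);
  assert(utc != nullptr);
  char buffer[64];
  const std::size_t length =
      std::strftime(buffer, sizeof(buffer), "%a, %d %b %Y %H:%M:%S GMT", utc);
  return std::string(buffer, length);
}

constexpr std::chrono::milliseconds kRefreshInterval{500};

// Main-thread side: recomputes the header only when the interval has elapsed.
class DateHeaderCache {
public:
  using Snapshot = std::shared_ptr<const std::string>;

  // Returns the snapshot that workers should currently use.
  Snapshot refresh(std::chrono::milliseconds monotonic_now, std::time_t wall_now) {
    if (current_ == nullptr || monotonic_now - last_refresh_ >= kRefreshInterval) {
      current_ = std::make_shared<const std::string>(formatHttpDate(wall_now));
      last_refresh_ = monotonic_now;
    }
    return current_;
  }

private:
  Snapshot current_;
  std::chrono::milliseconds last_refresh_{0};
};
```

Each worker would then copy the shared string pointer into its response path, so the per-request cost is reading a pointer rather than formatting a date.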

There are other cases, but the examples above should give a good sense of the kinds of things TLS is used for.

Known performance pitfalls

Although Envoy performs quite well overall, there are a few known areas that will need attention when it is used at very high concurrency and throughput:

- As already described in this post, all worker threads currently acquire a lock when writing to an access log's in-memory buffer. At high concurrency and high throughput, access logs will need to be batched per worker thread, at the cost of out-of-order delivery when they are written to the final file. Alternatively, each worker thread could get its own access log.

- Although stats are very heavily optimized, at very high concurrency and throughput there will probably be atomic contention on individual stats. The solution is per-worker counters that are periodically flushed to the central counters. This will be discussed in a follow-up post.

- The existing architecture will not work well if Envoy is deployed in a scenario with very few connections that each require significant resources to handle, because there is no guarantee that connections will be spread evenly between worker threads. This can be solved by implementing worker connection balancing, in which a worker thread can forward a connection to another worker thread for handling.
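The per-worker counter idea from the second item can be sketched as below. This is a hypothetical illustration, not Envoy's stats code: `CentralCounter`, `WorkerCounter`, and `countRequests` are invented names, and for simplicity the flush is triggered every `flush_every` increments instead of by a timer.

```cpp
#include <atomic>
#include <cassert>
#include <cstdint>
#include <thread>
#include <vector>

// Shared counter: every add() is an atomic read-modify-write.
class CentralCounter {
public:
  void add(std::uint64_t delta) { value_.fetch_add(delta, std::memory_order_relaxed); }
  std::uint64_t value() const { return value_.load(std::memory_order_relaxed); }

private:
  std::atomic<std::uint64_t> value_{0};
};

// Owned by exactly one worker thread, so inc() needs no atomics at all.
class WorkerCounter {
public:
  explicit WorkerCounter(CentralCounter& central) : central_(central) {}
  void inc() { ++pending_; }
  void flush() {
    central_.add(pending_);
    pending_ = 0;
  }

private:
  CentralCounter& central_;
  std::uint64_t pending_ = 0;
};

// Runs worker_count threads that each count requests_per_worker events,
// touching the shared atomic only once per flush_every increments.
std::uint64_t countRequests(unsigned worker_count, std::uint64_t requests_per_worker,
                            std::uint64_t flush_every) {
  assert(flush_every > 0);
  CentralCounter central;
  std::vector<std::thread> workers;
  for (unsigned i = 0; i < worker_count; ++i) {
    workers.emplace_back([&central, requests_per_worker, flush_every] {
      WorkerCounter local(central);
      for (std::uint64_t n = 1; n <= requests_per_worker; ++n) {
        local.inc();
        if (n % flush_every == 0) local.flush();
      }
      local.flush();  // final flush so no increments are lost
    });
  }
  for (auto& worker : workers) worker.join();
  return central.value();
}
```

The trade-off is that the central value lags behind the true count between flushes, in exchange for far fewer contended atomic operations.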

Conclusion

Envoy's threading model is designed to favor simplicity of programming and massive parallelism at the expense of potentially wasteful memory and connection use if it is not tuned correctly. This model allows it to perform very well at very high worker counts and throughput.

As I briefly mentioned on Twitter, the design is also amenable to running on top of a full user-mode networking stack such as DPDK (Data Plane Development Kit), which may lead to commodity servers handling millions of requests per second while doing full L7 processing. It will be very interesting to see what gets built in the next few years.

One last quick comment: I have been asked many times why we chose C++ for Envoy. The reason remains that it is still the only widely deployed production-grade language in which it is possible to build the architecture described in this post. C++ is certainly not right for all or even many projects, but for certain use cases it is still the only tool that gets the job done.

Links to code

Links to some of the interface and implementation headers discussed in this post:

- github.com/lyft/envoy/blob/master/include/envoy/thread_local/thread_local.h

- github.com/lyft/envoy/blob/master/source/common/thread_local/thread_local_impl.h

- github.com/lyft/envoy/blob/master/include/envoy/upstream/cluster_manager.h

- github.com/lyft/envoy/blob/master/source/common/upstream/cluster_manager_impl.h

Source: https://habr.com/ru/post/449826/
