WebRTC and video surveillance: how we beat the delay in video from cameras

From our first days working on a cloud video surveillance system, we faced a problem that, left unsolved, would have meant giving up on Ivideon altogether. It was our Everest: the climb took a lot of effort, but we have finally planted an ice axe at the summit of this cross-platform puzzle.

An audio and video transmission system that works over the Internet should not depend on the hardware, the web clients, or the standards they support, and it must work correctly behind Network Address Translators and firewalls. A cloud video surveillance user wants access to the service even with analog cameras, and wants to watch live video on the most modern device.


Users care a great deal about watching video with minimal delay. Practically the only way to show low-latency video in a browser is WebRTC (Web Real-Time Communication). WebRTC is a set of technologies for peer-to-peer video and audio transmission in browsers, designed from the start for transmitting and playing back low-latency streams. Among other things, it uses UDP as its transport.

Before describing what the new engine gives users, let us recall why we supported HLS and why we decided to move on.

HLS engine: pros and cons


HLS (HTTP Live Streaming) was developed at Apple, so it is no surprise that support first appeared on that brand's devices. Today, almost all set-top boxes and many Android devices can also play HLS video.

The HLS engine uses the well-known H.264 video codec together with AAC or MP3 audio. All audio and video is packaged in an MPEG-TS transport container. For delivery over HTTP, the stream is split into segments, which are described in m3u8 playlists; only then are the segments, together with the playlists, served over HTTP. Splitting the stream into segments automatically means a delay measured in seconds. That is a property of the MPEG-TS container.
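To make the playlist idea concrete, here is a minimal sketch of how a live m3u8 media playlist could be generated. The function name, the segment file names, and the 2-second duration are our own illustrative assumptions, not the actual Ivideon code; the tags themselves are standard HLS playlist tags.

```python
import math


def build_media_playlist(segment_uris, segment_seconds, first_sequence=0):
    """Return the text of a live HLS media playlist for the given segments."""
    lines = [
        "#EXTM3U",
        "#EXT-X-VERSION:3",
        # Upper bound on segment duration, rounded up to whole seconds.
        f"#EXT-X-TARGETDURATION:{math.ceil(segment_seconds)}",
        # Sequence number of the first segment listed in this playlist.
        f"#EXT-X-MEDIA-SEQUENCE:{first_sequence}",
    ]
    for uri in segment_uris:
        lines.append(f"#EXTINF:{segment_seconds:.3f},")
        lines.append(uri)
    # A live playlist has no #EXT-X-ENDLIST tag: the player keeps re-fetching it.
    return "\n".join(lines) + "\n"


# Hypothetical segment names and duration, chosen only for the example.
playlist = build_media_playlist(
    ["seg100.ts", "seg101.ts", "seg102.ts"], 2.0, first_sequence=100
)
print(playlist)
```

The player can only request a segment once it is listed in the playlist, and a segment is only listed once it has been fully written. That is where the built-in delay comes from.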

The HLS engine also supports multi-bitrate streams and both Live and VOD modes.

The main advantages of HLS:

- native support in all major browsers;

- ease of implementation (when compared with WebRTC);

- it is very convenient and efficient for broadcasting to a large audience, because segments can be uploaded to a CDN once.

For all the engine's simplicity, not everything is as smooth as it seems. The main problem is that developers of third-party players have departed from Apple's recommendations, for example regarding supported formats. In particular, many of them added support for other popular stream types: MPEG-2 video, MPEG-2 audio, and so on. As a result, we had to generate different playlist formats for different players.

But one of the biggest problems with the HLS engine is the high latency in data transfer.

Where the lag comes from

The main reason for the high latency of HLS is that the engine was designed to deliver the highest possible picture quality. The frame interval and playback buffer size it uses are therefore simply not suited to live broadcasts. This results in a fairly high delay in video transmission, which can reach 5-7 seconds.
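The arithmetic behind a figure like this can be sketched roughly as follows. This is a simplified model, not a measurement: the function name, the 2-second segment duration, and the three buffered segments are assumptions chosen for illustration.

```python
def estimate_hls_latency(segment_seconds, buffered_segments, network_seconds=0.0):
    """Rough lower bound on the delay of segmented delivery, in seconds.

    A player that waits for `buffered_segments` whole segments before it
    starts playing is at least that many segment durations behind live.
    """
    return segment_seconds * buffered_segments + network_seconds


# Assumed values: 2-second segments, 3 segments buffered before playback.
print(estimate_hls_latency(2.0, 3))
```

With these assumed values the model gives 6 seconds, which falls in the 5-7 second range mentioned above. Shorter segments or a smaller buffer lower the figure, but it never drops below one segment duration.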

On the one hand, that is not much for someone watching a movie from a video hosting server. For video surveillance systems, however, such a delay can be critical.

If you are watching an office where employees look away from their monitors once an hour, a 5-second delay does not matter. But people began to complain that, for example, during a football broadcast "GOOOAL" had already been written in the chat while the goal was not yet in the video :). We already have a number of use cases where Ivideon is expected to practically replace Skype.

Can the delay in HLS be beaten? The answer sounds like an experienced rat catcher lecturing novice exterminators: "Rats cannot be wiped out, but their population can be reduced to a reasonable minimum." The same goes for HLS latency: it cannot be brought down to zero, but there are solutions on the market that reduce it significantly.

Fine slicing

Another drawback of the engine is that it transfers data as small files. What could be wrong with that?

Anyone who has copied a large number of small files from one medium to another has probably noticed that it goes much slower than copying one large file of the same total size. Disk access also becomes much more intensive, which affects the performance of the whole machine. Transferring video as small 10-second fragments therefore also adds to the engine's delay.

Let us briefly summarize the pros and cons of HLS.

Advantages of HLS:

- Works on any device. You can watch video on any modern device, be it a smartphone, tablet, laptop, or desktop PC. The main requirement is an up-to-date web browser that supports HTML5 and Media Source Extensions.

- Excellent image quality. Adaptive streaming changes the quality of the transmitted video dynamically according to the bandwidth of the Internet connection, while the algorithm tries to keep quality as high as possible.

- No need for complex configuration of user equipment.

Disadvantages:

- Limited support for the engine on some devices.

- High latency in image transmission.

- A large increase in overhead and difficulty of optimization because of the small files. Due to the nature of the container, the delay can never be less than the segment duration.

The disadvantages of HLS outweighed its merits for us and forced us to look for alternatives.

What is WebRTC


The WebRTC platform was developed by Google in 2011 to transfer streaming video and audio between browsers and mobile applications with minimal latency. It does so using the standard UDP protocol and special flow control algorithms. Today it is an open source project that Google actively supports and develops.

WebRTC is a set of technologies for peer-to-peer video and audio transmission. For example, users' browsers can send data to each other directly over WebRTC, without remote servers to store and process it. All processing is likewise done by the end users' browsers and mobile applications.

Developers of all popular browsers have appreciated the convenience and capabilities of this technology. Today WebRTC is supported in Mozilla Firefox, Opera, Google Chrome (and all Chromium-based browsers), as well as in mobile applications for Android and iOS.

For all its undoubted merits, WebRTC has several significant drawbacks.

Difficulties of choice

WebRTC is much more complex in terms of network interaction because it is built around P2P. It is hard to debug and test, and it can behave unpredictably. On top of that, we have to traverse NAT and firewalls and keep working in networks where UDP is blocked.
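A common way to handle NAT and blocked UDP in WebRTC is to give the peer a list of STUN and TURN servers, including TURN over TCP and TLS. The sketch below builds a dictionary shaped like the browser's RTCConfiguration. The host names and the function name are hypothetical, and nothing here says which servers or fallbacks the Ivideon service actually uses.

```python
import json


def build_rtc_configuration(turn_user, turn_password):
    """Build a dict shaped like the browser's RTCConfiguration object."""
    return {
        "iceServers": [
            # STUN lets a peer discover its public address behind NAT.
            {"urls": ["stun:stun.example.org:3478"]},
            # TURN relays media; the TCP and TLS variants help where UDP is blocked.
            {
                "urls": [
                    "turn:turn.example.org:3478?transport=udp",
                    "turn:turn.example.org:3478?transport=tcp",
                    "turns:turn.example.org:443?transport=tcp",
                ],
                "username": turn_user,
                "credential": turn_password,
            },
        ]
    }


# The server would send this JSON to the web client during session setup.
print(json.dumps(build_rtc_configuration("demo-user", "demo-secret"), indent=2))
```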

Google's WebRTC implementation is very difficult to use; there is even a whole company that offers building the SDK as a service. On top of that, Google's implementation was very hard to integrate with our system in a way that avoids transcoding all the video.

However, we had long wanted to give users truly "live" video and to minimize the lag between real events and the picture on the screen. We also wanted to make PTZ cameras, where delays are critical, more comfortable to use.

Since other approaches to fighting lag have limited functionality and perform much worse, we decided to use WebRTC.

What we did

Implementing WebRTC properly is not an easy task. Any miscalculation or inaccuracy can mean that video delays, instead of decreasing compared with other platforms, actually increase.

For WebRTC to work correctly, the stack for web video first has to be modernized. So that is what we did.

First, we implemented a WebRTC signaling server on top of WebSocket and deployed a WebRTC peer server in the cloud, built on the webrtc.org SDK. Its job is to distribute video streams to client WebRTC peers in H.264 + Opus / G.711 format without transcoding the video.

We chose WebSocket for signaling because it already has solid support in all popular web browsers. This reduces development overhead and, compared with AJAX, avoids spending time and resources on repeated TCP and TLS handshakes.

The point is that WebRTC itself does not define a signaling protocol, which is needed to set up, maintain, and tear down real-time video sessions between the source and client applications.

To implement signaling ourselves, we had to develop our own signaling server with support for several web protocols (WebSocket, WebRTC), plus secure real-time management of sessions and notifications, video control, and many other parameters.
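The core of a signaling server can be sketched as a relay that passes session descriptions and ICE candidates between peers. The example below is a simplified illustration, not our production server: the class name, the JSON message fields ("type", "to", "from"), and the peer ids are assumptions, and the WebSocket transport is replaced by plain callables so the sketch stays self-contained.

```python
import json

RELAYED_TYPES = {"offer", "answer", "candidate", "bye"}


class SignalingHub:
    """Relays signaling messages between connected peers by peer id."""

    def __init__(self):
        self._peers = {}  # peer id -> callable that sends text to that peer

    def connect(self, peer_id, send):
        self._peers[peer_id] = send

    def disconnect(self, peer_id):
        self._peers.pop(peer_id, None)

    def handle(self, sender_id, raw_text):
        """Validate one incoming message and forward it to its recipient."""
        message = json.loads(raw_text)
        if message.get("type") not in RELAYED_TYPES:
            raise ValueError(f"unsupported message type: {message.get('type')!r}")
        send = self._peers.get(message.get("to"))
        if send is None:
            raise KeyError(f"unknown recipient: {message.get('to')!r}")
        # Stamp the sender so the recipient knows whom to answer.
        message["from"] = sender_id
        send(json.dumps(message))
        if message["type"] == "bye":
            # A "bye" ends the session, so the sender is dropped from the hub.
            self.disconnect(sender_id)


# In a real server each `send` would write to that peer's WebSocket connection.
hub = SignalingHub()
viewer_inbox, camera_inbox = [], []
hub.connect("viewer", viewer_inbox.append)
hub.connect("camera-peer", camera_inbox.append)
hub.handle("viewer", json.dumps(
    {"type": "offer", "to": "camera-peer", "sdp": "<SDP offer text>"}
))
print(camera_inbox[0])
```

Because the hub only forwards text, the same logic works regardless of which side creates the offer.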

We worked around the limitations of P2P: the latency reduction comes not from P2P itself but from UDP and from flow control tuned for low delay. Both are built into WebRTC, since its main use case is peer-to-peer conversations in the browser.

In the mobile client, we built the player on the webrtc.org SDK, because only there is flow control implemented correctly, all the well-known Forward Error Correction (FEC) schemes are available, and packet retransmission works correctly with all browsers. It also matters that Google actively develops the webrtc.org SDK.
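For readers unfamiliar with FEC, the simplest form of the idea is XOR parity: the sender adds one redundant packet per group, and the receiver can rebuild any single lost packet without asking for a retransmission. The sketch below illustrates only that principle with made-up payloads; it is not the webrtc.org SDK code, and real schemes add headers, protection masks, and support for unequal packet lengths.

```python
def xor_parity(packets):
    """Return one parity packet: the byte-wise XOR of equal-length packets."""
    parity = bytearray(len(packets[0]))
    for packet in packets:
        for i, byte in enumerate(packet):
            parity[i] ^= byte
    return bytes(parity)


def recover_missing(received, parity):
    """Rebuild the single lost packet from the survivors and the parity packet."""
    return xor_parity(list(received) + [parity])


# Made-up payloads of equal length, standing in for media packets.
group = [b"fram", b"e-da", b"ta!!"]
parity = xor_parity(group)

# Suppose the second packet is lost in transit.
restored = recover_missing([group[0], group[2]], parity)
print(restored == group[1])
```

The price is extra bandwidth for the parity packets; the gain is that a lost packet does not cost a round trip, which matters when the delay budget is under a second.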

What did introducing WebRTC achieve?

For viewing live video from cameras, we added a new optimized WebRTC-based player to the personal account. It loads video quickly and completely eliminates the problem of delay accumulating as viewing time grows.

With WebRTC support implemented in the Ivideon cloud service, we can confidently say that our customers can now watch truly live video. The broadcast delay no longer exceeds one second! For comparison, the old HLS engine delivered video with a delay of 5-7 seconds. The difference is very noticeable, and users will see it as soon as they start using our video service.

As we expected, the new player has made PTZ control and voice communication with the camera more responsive.

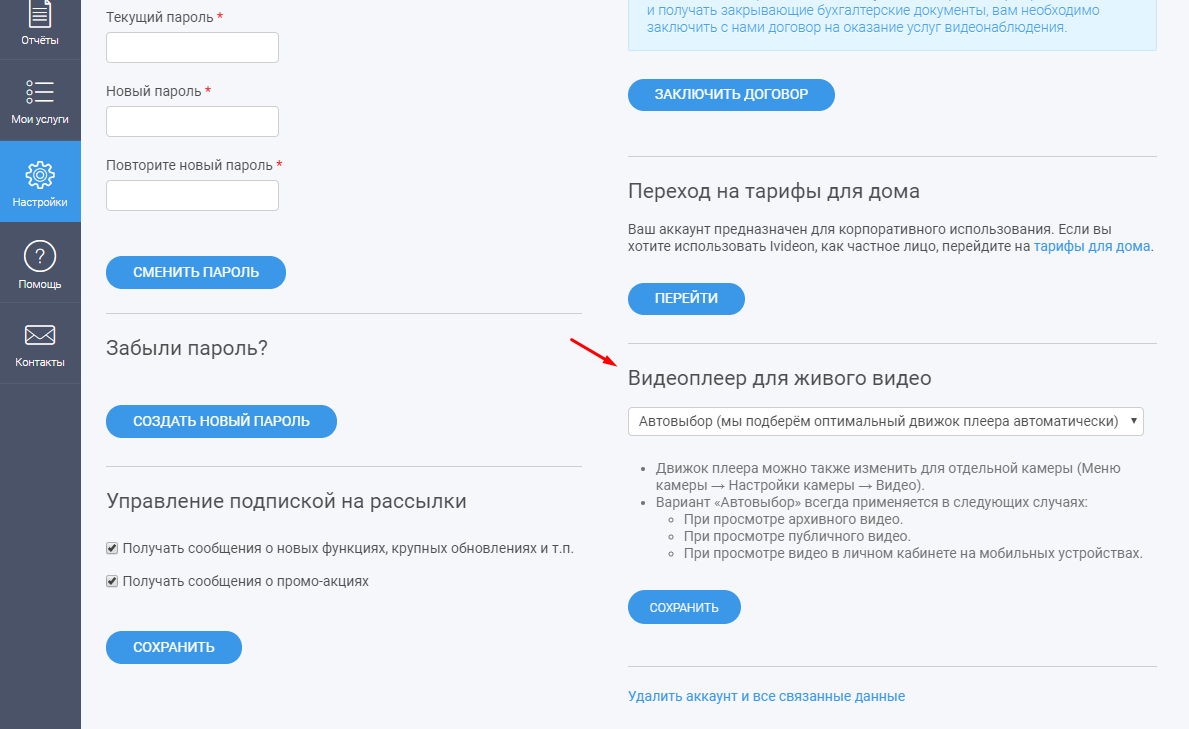

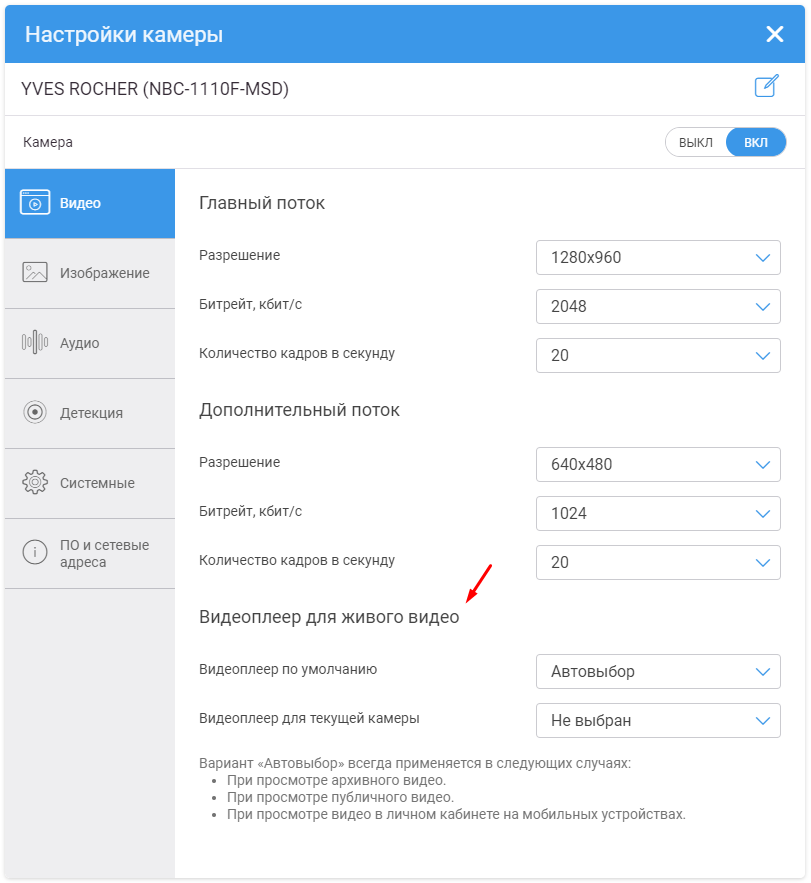

There is one caveat we want to point out. The new WebRTC player is still running in test mode, which is why we do not enable it for all customers by default. You can turn it on yourself by enabling the corresponding option in the camera settings (to do this, go to your personal account).

Features of WebRTC implementation in the Ivideon service

WebRTC is still an experimental technology. It is not yet correctly supported in all browsers and user devices, nor in all cameras.

That is exactly why we have not yet made the WebRTC player the default for all users.

For now, we recommend using WebRTC only in Google Chrome. The latest versions of Firefox and Safari also support the technology, but unfortunately it is still unstable there.

We have not yet implemented WebRTC support for browsers on mobile devices. If you log in from a mobile device and enable WebRTC, this mode will not work. However, WebRTC is available in our mobile applications for Android and iOS.

To wrap up the story of how WebRTC is implemented in our service, here are two more caveats.

First, the technology is aimed at broadcasting live video in real time. So if your channel does not have enough bandwidth for the video stream, you will notice dropped frames, but the video will still play in real time. (With HLS you would instead see the video freeze and the latency grow, while no frames are dropped.)

Secondly, since the technology is designed to work with live video in real time, we do not use it to work with archived video data.
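The trade-off in the first point, dropped frames versus growing latency, can be illustrated with a toy simulation. It is a deliberately simplified model with assumed numbers (30 frames produced and 20 delivered per tick), not a description of how either engine is implemented.

```python
from collections import deque


def simulate(produced_per_tick, delivered_per_tick, ticks, drop_stale):
    """Return (frames shown, frames dropped, final backlog in frames)."""
    queue = deque()
    shown = dropped = 0
    for tick in range(ticks):
        for _ in range(produced_per_tick):
            queue.append(tick)
        if drop_stale:
            # Real-time policy: keep only what the link can carry this tick.
            while len(queue) > delivered_per_tick:
                queue.popleft()
                dropped += 1
        for _ in range(min(delivered_per_tick, len(queue))):
            queue.popleft()
            shown += 1
    return shown, dropped, len(queue)


# The channel carries only 20 of every 30 frames produced.
print("buffer everything:", simulate(30, 20, 10, drop_stale=False))
print("drop stale frames:", simulate(30, 20, 10, drop_stale=True))
```

In the buffering run no frame is lost, but the backlog grows every tick, and that backlog is exactly the extra delay the viewer sees. In the dropping run frames are lost, but the backlog stays at zero, so the picture remains in real time.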

Other changes in the service

Flash no longer takes part in automatic engine selection. You can still use this player, but you have to select it manually in your account or camera settings. This is not a whim: according to our service statistics, there are almost no users left on Flash, and detecting whether the user's browser supports it costs about 2 seconds of precious time.

That is a brief summary of the changes that await you in our cloud video surveillance system and personal account. Stay with us and stay tuned!

Source: https://habr.com/ru/post/449326/
