Steal: who steals processor time from virtual machines

Hello! I want to tell in simple language about the mechanics of steal inside virtual machines and about some unobvious artifacts that we were able to find out during his research, which I had to dive into as a Mail.ru Cloud Solutions cloud platform. Platform powered by KVM.

CPU steal time is the time during which the virtual machine does not receive processor resources for its execution. This time is counted only in guest operating systems in virtualization environments. The reasons where these very allocated resources go, as in life, are very vague. But we decided to figure it out, even set up a whole series of experiments. Not that we now know everything about steal, but now we’ll tell you something interesting.

1. What is steal

So, steal is a metric that indicates a lack of CPU time for the processes inside the virtual machine. As described in the KVM core patch , steal is the time during which the hypervisor executes other processes on the host OS, although it put the virtual machine process in the queue for execution. That is, steal is considered as the difference between the time when the process is ready to be executed and the time when the processor is allocated CPU time.

')

The metric steal of the virtual machine core is obtained from the hypervisor. At the same time, the hypervisor does not specify exactly what other processes it performs, just “while busy, I can’t give you time”. On KVM, support for steal counting has been added in patches . There are two key points:

- The virtual machine learns about steal from the hypervisor. That is, from the point of view of losses, for processes on the virtual machine itself, this is an indirect measurement, which can be subject to various distortions.

- The hypervisor does not share with the virtual machine information about how he is busy with others - the main thing is that he does not devote time to it. Because of this, the virtual machine itself cannot reveal the distortions in the steal index, which could be assessed by the nature of the competing processes.

2. What affects steal

2.1. Calculation steal

In fact, steal is considered to be about the same as the normal processor utilization time. There is not a lot of information about how recycling is considered. Probably because the majority considers this question obvious. But here, too, there are pitfalls. To familiarize yourself with this process, you can read the article by Brendann Gregg : you will learn about a bunch of nuances in the calculation of recycling and about situations where this calculation will be wrong for the following reasons:

- Overheating of the processor, at which cycles are skipped.

- Turn on / off turbobust, as a result of which the processor clock frequency changes.

- The change in the duration of a time slot that occurs when using processor power-saving technologies, such as SpeedStep.

- The problem of calculating the average: assessment of disposal for one minute at 80% can hide a short burst of 100%.

- Cyclic locking (spin lock) causes the processor to be utilized, but the user process does not see progress in its execution. As a result, the calculated processor utilization will be one hundred percent, although physically the process will not consume processor time.

I did not find an article describing a similar calculation for steal (if you know, share in the comments). But, judging by the source code, the calculation mechanism is the same as for disposal. Just one more counter is added to the kernel, directly for the KVM process (virtual machine process), which counts the duration of the KVM process in the waiting state of the processor time. The counter takes information about the processor from its specification and looks at whether all its ticks are utilized by the virtual process. If all, then we believe that the processor was engaged only in the process of the virtual machine. Otherwise, we inform that the processor was doing something else, steal appeared.

The steal counting process is subject to the same problems as the normal recycling count. Not to say that such problems often appear, but they look discouraging.

2.2. KVM Virtualization Types

Generally speaking, there are three types of virtualization, and all of them are supported by KVM. The type of steal may depend on the type of virtualization.

Broadcast . In this case, the operation of the operating system of the virtual machine with the physical devices of the hypervisor occurs approximately like this:

- The guest operating system sends a command to its guest device.

- The guest device driver accepts the command, issues a request for the device's BIOS, and sends it to the hypervisor.

- The hypervisor process translates the command to the command for the physical device, making it, among other things, more secure.

- A physical device driver accepts a modified command and sends it to the physical device itself.

- The results of executing the commands go back along the same path.

The advantage of translation is that it allows you to emulate any device and does not require special training of the operating system kernel. But for this you have to pay, first of all, with speed.

Hardware virtualization . In this case, the device at the hardware level understands commands from the operating system. This is the fastest and best way. But, unfortunately, it is not supported by all physical devices, hypervisors and guest operating systems. Currently, the main devices that support hardware virtualization are processors.

Paravirtualization (paravirtualization) . The most common version of device virtualization on KVM and generally the most common virtualization mode for guest operating systems. Its peculiarity is that work with some hypervisor subsystems (for example, with a network or disk stack) or allocation of memory pages occurs using the hypervisor API, without translating low-level commands. The disadvantage of this virtualization method is the need to modify the kernel of the guest operating system so that it can interact with the hypervisor using this API. But usually this is solved by installing special drivers on the guest operating system. In KVM, this API is called the virtio API .

With paravirtualization, compared with translation, the path to the physical device is significantly reduced by sending commands directly from the virtual machine to the hypervisor process on the host. This allows you to speed up the execution of all instructions inside the virtual machine. In KVM, the virtio API is responsible for this, which only works for certain devices, such as a network adapter or disk adapter. That is why virtio-drivers are installed inside virtual machines.

The reverse side of such acceleration - not all processes that run inside the virtual machine remain inside it. This creates some special effects that can lead to appearance on steal. A detailed study of this issue is recommended to start with An API for virtual I / O: virtio .

2.3. "Fair" sheduling

The virtual machine on the hypervisor is, in fact, a normal process that obeys the laws of scheduling (allocation of resources between processes) in the Linux kernel, so let's take a closer look at it.

Linux uses the so-called CFS, Completely Fair Scheduler, starting from the 2.6.23 kernel, which became the default dispatcher. To deal with this algorithm, you can read the Linux Kernel Architecture or source code. The essence of CFS is the allocation of processor time between processes depending on the duration of their execution. The more CPU time the process requires, the less this time it gets. This guarantees the “honest” execution of all processes - so that one process does not occupy all processors all the time, and other processes can also be executed.

Sometimes this paradigm leads to interesting artifacts. Long-time Linux users will surely remember the fading of a regular text editor on the desktop while running demanding compiler-type applications. This happened because the non-resource-intensive tasks of desktop applications competed with tasks that actively consume resources, such as the compiler. CFS thinks it's not fair, therefore it periodically stops the text editor and allows the processor to process the compiler tasks. This was corrected using the sched_autogroup mechanism, but many other features of the allocation of processor time between tasks remained. Actually, this story is not about how bad things are in CFS, but trying to draw attention to the fact that “fair” allocation of CPU time is not the most trivial task.

Another important point in the scheduler is preemption. This is necessary in order to expel the snickering process from the processor and let others work. The exile process is called context switching, the switching context of the processor. At the same time, the whole context of the task is preserved: the state of the stack, registers, etc., after which the process is sent to wait, and another takes its place. This is an expensive operation for the OS, and it is rarely used, but in fact there is nothing wrong with it. Frequent context switching can talk about a problem in the OS, but usually it goes on continuously and does not indicate anything.

Such a long story is needed to explain one fact: the more processor resources are trying to consume the process in an honest Linux sheduler, the faster it will be stopped, so that other processes can also work. Is this right or not? This is a difficult question, which is solved in different ways for different loads. In Windows, until recently, the scheduler focused on priority processing of desktop applications, which could cause background processes to hang. There were five different classes of sheduler on Sun Solaris. When we started virtualization, we added the sixth, Fair share scheduler , because the previous five worked with Solaris virtualization inadequate. A detailed study of this issue is recommended to start with books like Solaris Internals: Solaris 10 and OpenSolaris Kernel Architecture or Understanding the Linux Kernel .

2.4. How to monitor steal?

Monitoring steal inside a virtual machine, like any other processor metric, is simple: you can use any means of removing processor metrics. The main thing is that the virtual is on Linux. Windows for some reason does not provide such information to its users. :(

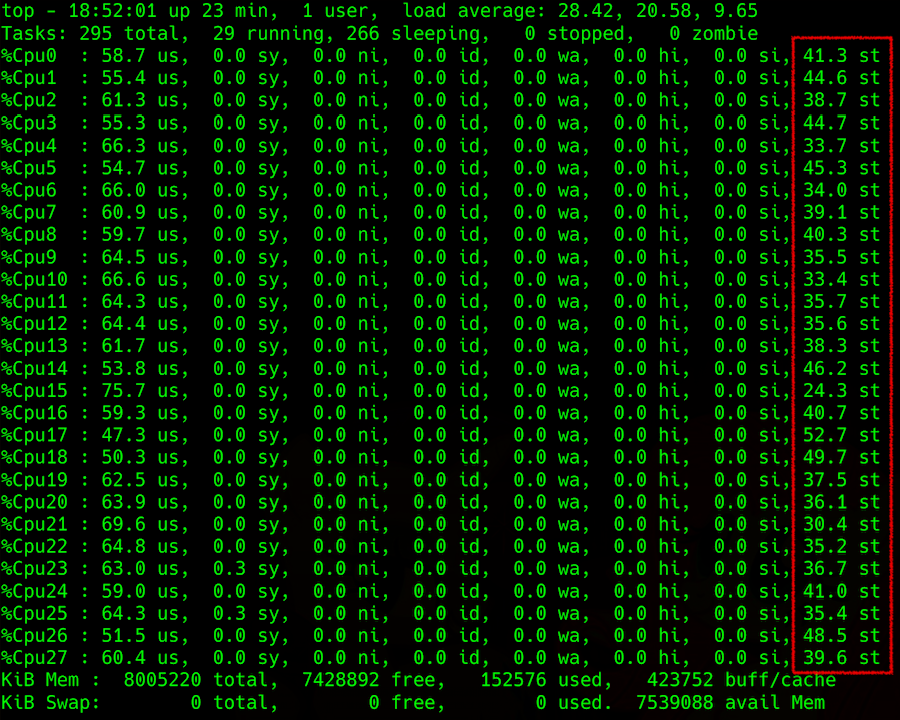

Top output: processor load detail, in the rightmost column — steal

The difficulty arises when trying to get this information from the hypervisor. You can try to predict steal on the host machine, for example, by the parameter Load Average (LA) - the average value of the number of processes waiting in the queue for execution. The method of calculating this parameter is not easy, but in general, if the LA processor normalized by the number of threads is greater than 1, this indicates that the server with Linux is overloaded with something.

What are all these processes waiting for? The obvious answer is the cpu. But the answer is not entirely correct, because sometimes the processor is free, and LA goes off scale. Remember how NFS falls off and how LA grows . About the same can be with the disk, and with other input / output devices. But in fact, processes can expect the end of any blocking, both physical, associated with an I / O device, and logical, such as a mutex. This includes locking at the level of hardware (the same response from the disk), or logic (the so-called locking primitives, which includes a bunch of entities, mutex adaptive and spin, semaphores, condition variables, rw locks, ipc locks ...).

Another feature of LA is that it is considered as an average over the operating system. For example, 100 processes compete for one file, and then LA = 50. Such a high value would seem to indicate that the OS is bad. But for another crookedly written code, this can be a normal state, despite the fact that it is only bad for him, and other processes in the OS do not suffer.

Because of this averaging (and not less than in a minute), determining something by the LA indicator is not the most rewarding occupation, with very uncertain results in specific cases. If you try to figure it out, you will find that articles on Wikipedia and other available resources describe only the simplest cases, without a deep explanation of the process. All interested are sent, again, here, to Brendann Gregg - hereinafter links. Who is lazy in English - translation of his popular article about LA .

3. Special effects

Now we’ll focus on the main steal cases that we encountered. I'll tell you how they follow from the above and how they relate to the indicators on the hypervisor.

Recycling . The simplest and most frequent: the hypervisor is reutilized. Indeed, there are a lot of running virtual machines, a large CPU consumption inside them, a lot of competition, LA utilization is more than 1 (in normalization by processor threads). Inside all virtualok everything slows down. Steal transmitted from the hypervisor is also growing, it is necessary to redistribute the load or turn off someone. In general, everything is logical and understandable.

Paravirtualization against lonely instances . There is only one virtual machine on the hypervisor, it consumes a small part of it, but it gives a lot of I / O load, for example, a disk. And from somewhere a small steal appears in it, up to 10% (as shown by several experiments carried out).

The case is interesting. Steal appears here just because of the blocking at the level of para-virtualized drivers. An interrupt is created inside the virtual machine, processed by the driver and goes to the hypervisor. Because of the interrupt processing on the hypervisor for a virtual machine, it looks like a sent request, it is ready for execution and is waiting for the processor, but it is not given processor time. Virtual thinks that this time is stolen.

This happens at the moment the buffer is sent, it goes into the hypervisor's kernel space, and we start to wait for it. Although, from the point of view of the virtual, he should immediately return. Consequently, according to the steal calculation algorithm, this time is considered stolen. Most likely, in this situation there may be other mechanisms (for example, processing some more sys calls), but they should not differ much.

Sheduler against highly loaded virtual locks . When one virtual machine suffers from steal more than others, it is connected just with a sheduler. The more the process loads the processor, the sooner the scheduler will drive it out, so that the others can work as well. If the virtual user consumes a little, she almost does not see the steal: her process honestly sat and waited, we need to give him more time. If the virtual machine makes the maximum load on all its cores, it is more often expelled from the processor and try not to give much time.

Even worse, when the processes inside the virtual team are trying to get more processor, because they do not cope with data processing. Then the operating system on the hypervisor, due to fair optimization, will give less and less CPU time. This process occurs like an avalanche, and steal jumps up to the skies, although the rest of the virtual girls can barely notice it. And the more cores, the worse the machine got under the distribution. In short, high-loaded virtual machines with multiple cores suffer the most.

Low LA, but there is steal . If LA is approximately 0.7 (that is, the hypervisor seems to be underloaded), but steal is observed inside the individual virtual locks:

- Already described above option with paravirtualization. A virtual player can get metrics pointing to steal, although the hypervisor is fine. According to the results of our experiments, this option steal does not exceed 10% and should not have a significant impact on the performance of applications inside the virtual machine.

- The LA parameter is considered invalid. More precisely, at each particular moment it is considered true, but if averaged over one minute, it is underestimated. For example, if one virtual machine for a third of a hypervisor consumes all its processors for exactly half a minute, then LA per minute on the hypervisor will be 0.15; four such virtual machines working at the same time will give 0.6. And the fact that half a minute on each of them was a wild steal under 25% in terms of LA is no longer a pull out.

- Again, because of the sheduler, who decided that someone eats too much, and let this someone wait. For the time being, I am switching the context, working on interruptions and doing other important system things. As a result, some virtuals do not see any problems, while others experience a serious performance degradation.

4. Other distortions

There are a million reasons for the distortion of the fair return of processor time on the virtual machine. For example, hypertreaming and NUMA make it difficult to calculate. They finally confuse the choice of the kernel for the execution of the process, because the scheduler uses coefficients - weights, which, when switching context, make the calculation even more difficult.

There are distortions due to technologies like a turbo-bus or, conversely, energy-saving mode, which, when calculating recycling, can artificially increase or decrease the frequency or even time slot on the server. Turning on the turbobust reduces the performance of one processor thread due to an increase in the performance of another. At this moment, information about the actual frequency of the processor is not transmitted to the virtual machine, and she believes that someone tyrit her time (for example, she requested 2 GHz, but received half as much).

In general, the causes of distortion can be many. In a particular system, you can find something else. It is better to start with the books for which I have given the links above, and the removal of statistics from the hypervisor with utilities like perf, sysdig, systemtap, of which there are dozens .

5. Conclusions

- Some amount of steal may occur due to paravirtualization, and it can be considered normal. On the Internet, they write that this value can be 5-10%. It depends on the applications inside the virtual machine and on the load it gives on its physical devices. It is important to pay attention to how applications feel inside the virtualok.

- The ratio of the load on the hypervisor and steal inside the virtual is not always uniquely interrelated, both steal estimates may be erroneous in specific situations under different loads.

- Sheduler is bad for processes that ask a lot. He tries to give less to those who ask for more. Big virtuals are evil.

- A small steal can be the norm without para-virtualization (taking into account the load inside the virtual machine, the peculiarities of the load of the neighbors, the distribution of the load among the threads and other factors).

- If you want to figure out steal in a particular system, you have to explore various options, collect metrics, carefully analyze them and think through how to evenly distribute the load. Deviations from any cases are possible, which must be confirmed experimentally or viewed in the kernel debager.

Source: https://habr.com/ru/post/449316/

All Articles