OK, Google: how do you pass a captcha?

Hello. My name is Ibadov Ilkin, and I am a student at Ural Federal University.

In this article, I want to talk about my experience with automatically solving Google's reCAPTCHA. I should warn the reader in advance that, at the time of writing, the prototype does not work as effectively as the title may suggest. However, the result shows that the approach is capable of solving the problem.

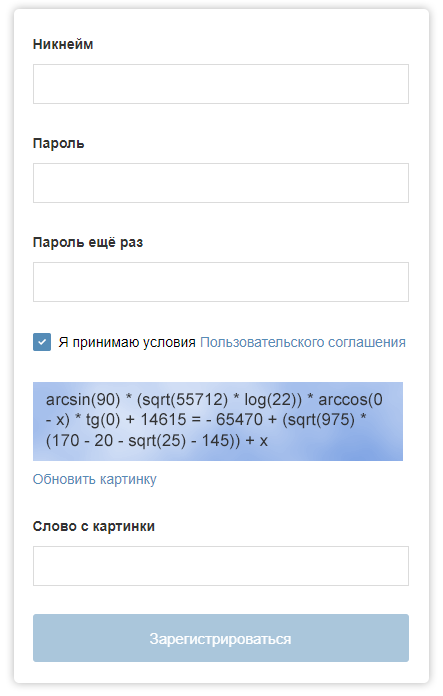

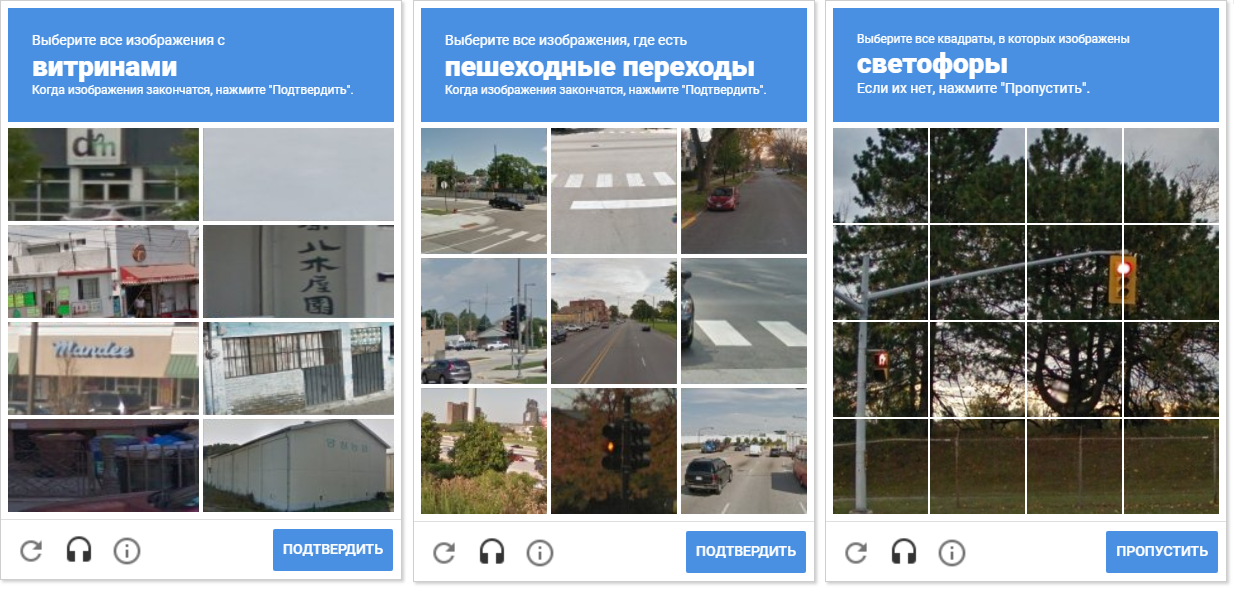

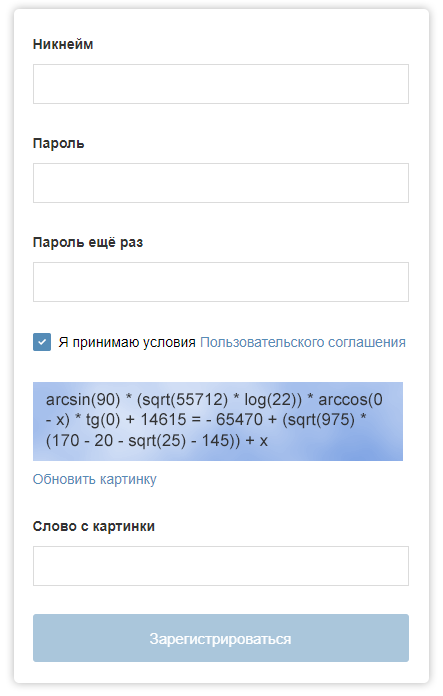

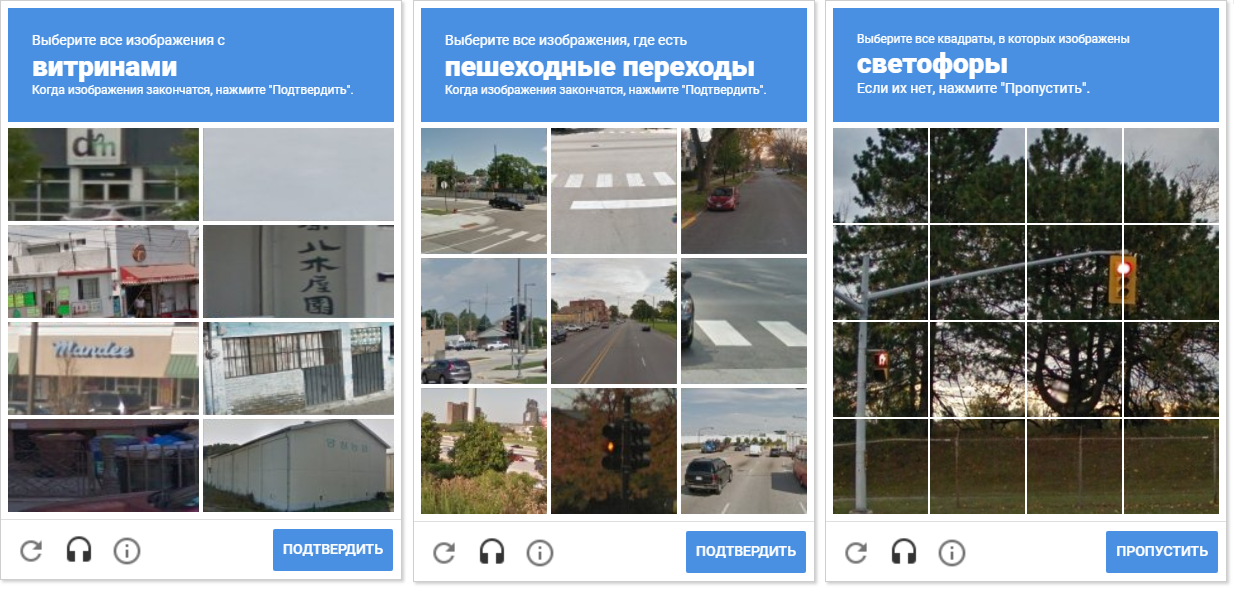

Probably everyone has come across a captcha at some point: enter the text from a picture, solve a simple expression or a complex equation, select cars, fire hydrants, pedestrian crossings... Protecting resources from automated systems is necessary and plays a significant role in security: a captcha protects against DDoS attacks, automated registrations and postings, and scraping, and it hinders spam and password guessing.

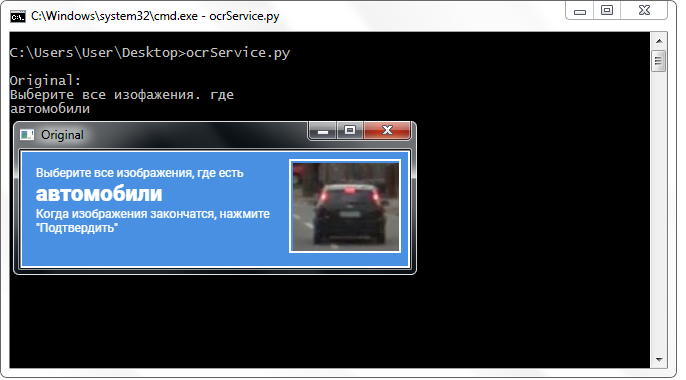

The registration form on Habr could have a captcha like this.

As machine learning develops, the effectiveness of captchas may be at risk. In this article, I describe the key parts of a program that can solve the manual image selection task in Google reCAPTCHA (to my relief, not always).

To pass a captcha, the program has to solve several tasks: determine the class the captcha asks for, detect and classify objects, detect the captcha cells, and imitate human actions while solving (cursor movement and clicks).

Objects in an image are found with pre-trained neural networks, which can be downloaded to a computer and used to recognize objects in images or video. But detecting objects is not enough to solve a captcha: the program also has to locate the cells and work out which of them to select (or whether to select none at all). Computer vision tools handle this part; in this work, that is the well-known OpenCV library.

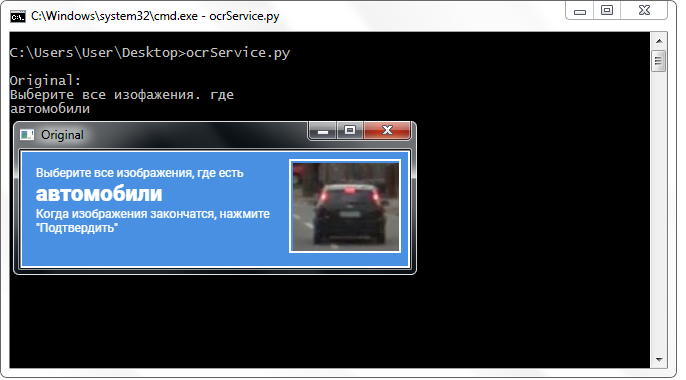

To find objects in an image, we first need the image itself. I use the PyAutoGUI module to take a screenshot of a region of the screen large enough for object detection. The rest of the screen holds windows for debugging and for monitoring what the program is doing.

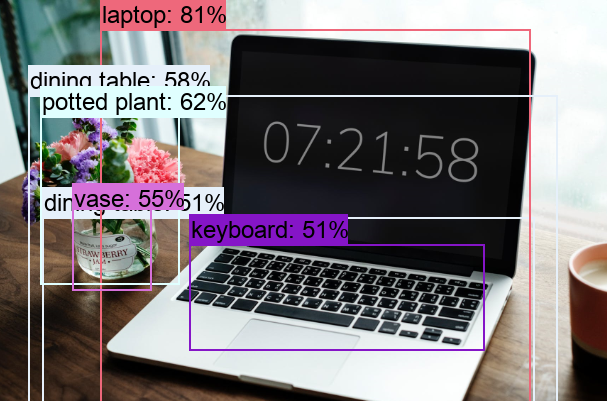

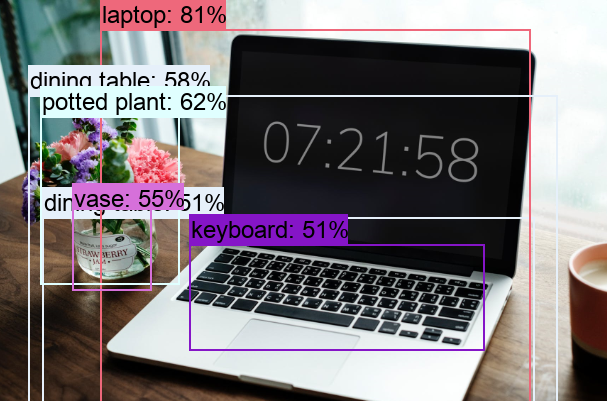


If you doubt your humanity, such an outcome is possible.

If the article and the application interest readers, I will gladly continue the implementation and testing and describe them in more detail.

The first step is detecting classes that the current network does not include, which would significantly improve the application's effectiveness. Right now there is an urgent need to recognize at least pedestrian crossings, shop windows and chimneys; I will describe how to retrain the model for them. During development, I made a small list of the most common classes:

Object detection quality can also be improved by using several models at once: this may reduce performance but increase accuracy.

Another way to improve detection quality is to change the image fed to the neural network. In the video you can see that when no objects are detected, I repeatedly shift the image by an arbitrary amount (within 10 pixels horizontally and vertically), and this often reveals objects that were not detected before.

Enlarging the image from a small square to a larger one (up to 300 x 300 pixels) also leads to the detection of previously undetected objects.

On the left, no objects are found in the original square with a side of 100 pixels. On the right, the bus is found after the square is enlarged to 300 x 300 pixels.

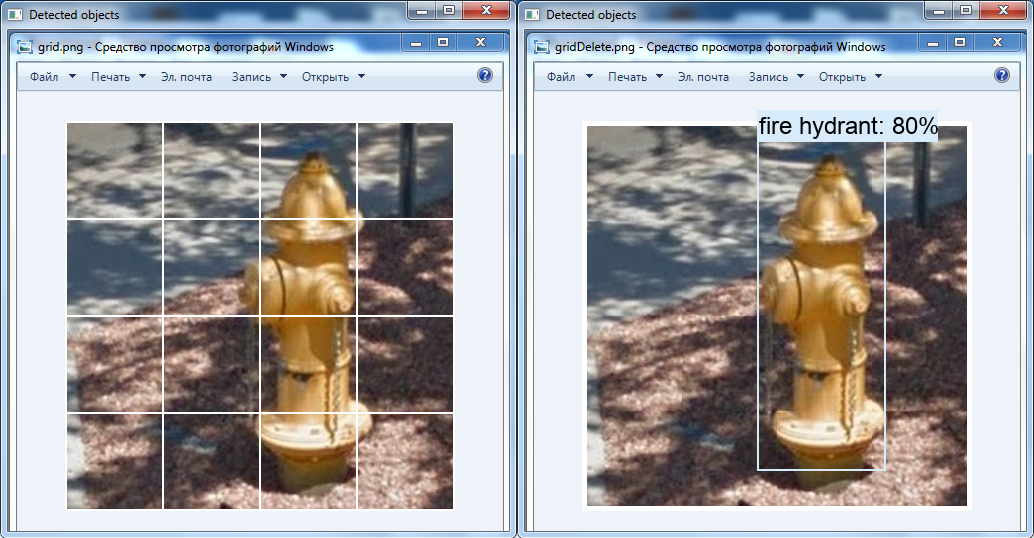

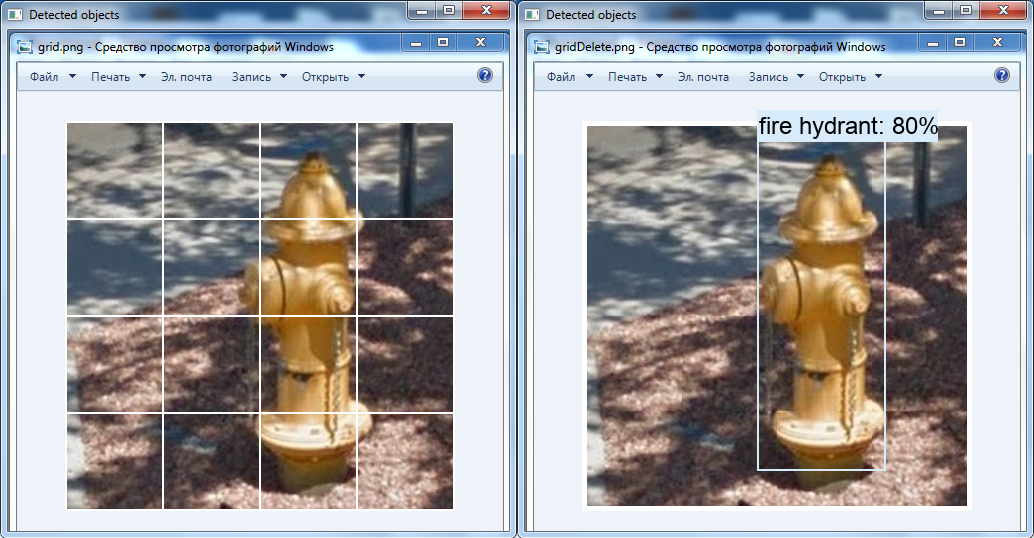

Another interesting transformation is removing the white grid over the image with OpenCV: it is possible that the fire hydrant in the video was not detected because of the grid (this class is present in the neural network).

On the left is the original image; on the right is the version edited in a graphics editor, with the grid removed and the cells moved together.

With this article I wanted to show that a captcha is probably not the best protection against bots, and that new means of protection against automated systems may well be needed soon.

The prototype, even in its incomplete state, demonstrates that with the required classes in the neural network model and suitable image transformations, it is possible to automate a process that should not be automatable.

I would also like to draw Google's attention to the fact that, besides the circumvention method described in this article, there is another one in which the audio challenge is transcribed. In my opinion, it is now necessary to improve the quality of anti-robot software and algorithms.

From the content of this article it may seem that I dislike Google, and reCAPTCHA in particular, but that is far from true; if there is a follow-up, I will explain why.

Developed and demonstrated to raise the level of education and to improve methods of ensuring information security.

Thanks for your attention.


Object Detection

Detecting and classifying objects is the neural network's job. The library that lets us work with neural networks is TensorFlow (developed by Google). Many pre-trained models are available today, trained on different data, so they return different detection results: some models find objects better than others.

In this work I use the ssd_mobilenet_v1_coco model. It is trained on the COCO dataset, which covers 90 different classes (from people and cars to a toothbrush and a comb). Other models trained on the same data but with different parameters are now available. This model offers a good balance of speed and accuracy, which matters on a desktop computer. The source reports a processing time of 30 milliseconds for a single 300 x 300 pixel frame on an Nvidia GeForce GTX TITAN X.

The neural network returns a set of arrays:

- the classes of the detected objects (their identifiers);

- the scores of the detected objects (in percent);

- the coordinates of the detected objects ("boxes").

The indices in these arrays correspond to one another: the third element of the class array corresponds to the third element of the "boxes" array and the third element of the scores array.

The selected model allows you to detect objects from 90 classes in real time.
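The index correspondence between the three arrays can be illustrated with a minimal sketch in plain Python. The function name `select_detections`, the sample values and the `min_score` threshold are assumptions made for illustration, and the scores here are fractions between 0 and 1 rather than percentages.

```python
def select_detections(classes, scores, boxes, wanted_class, min_score=0.5):
    """Return (score, box) pairs for detections of one class above a score threshold."""
    selected = []
    # zip walks the three arrays in lockstep, so each step refers to one detected object.
    for class_id, score, box in zip(classes, scores, boxes):
        if class_id == wanted_class and score >= min_score:
            selected.append((score, box))
    return selected


# Hypothetical detector output; boxes are (ymin, xmin, ymax, xmax) in relative coordinates.
classes = [3, 10, 3]
scores = [0.91, 0.78, 0.32]
boxes = [(0.10, 0.10, 0.40, 0.45), (0.05, 0.60, 0.50, 0.70), (0.55, 0.20, 0.90, 0.60)]

cars = select_detections(classes, scores, boxes, wanted_class=3)
print(cars)
```

The third detection has the wanted class but a low score, so it is dropped; only the first one remains.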

Cell Detection

OpenCV lets us work with entities called "contours". They can be found with the findContours() function from the OpenCV library. The function takes a binary image as input, which can be obtained with the threshold function:

```python
import cv2

# Pixels brighter than 254 become white (255); everything else becomes black
_retval, binImage = cv2.threshold(image, 254, 255, cv2.THRESH_BINARY)
contours = cv2.findContours(binImage, cv2.RETR_LIST, cv2.CHAIN_APPROX_SIMPLE)[0]  # [0] holds the contours in OpenCV 4.x
```

Setting the threshold parameters to extreme values also removes various kinds of noise. To further reduce the number of unwanted small elements and noise, you can apply morphological transformations: erosion (shrinking) and dilation (expanding). These functions are also part of OpenCV. After the transformations, the contours are approximated and only those with exactly four vertices are kept.

The first window shows the result of thresholding. The second shows an example of a morphological transformation. In the third window, the cells and the captcha header are already selected: the program highlights them in color.

Even after all the transformations, contours that are not cells still end up in the final array of cells. To weed out this noise, I filter contours by their length (perimeter) and area.

Experiments showed that the contours of interest have values in the range from 360 to 900 units. This range was chosen on a screen with a 15.6-inch diagonal and a resolution of 1366 x 768 pixels. The values could be derived from the size of the user's screen, but the prototype has no such adjustment yet.
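One possible way to tie the range to the screen size is sketched below. It assumes that the 360 to 900 range refers to the contour perimeter and that it scales linearly with screen width; neither is confirmed by the prototype, and all function names are hypothetical.

```python
REFERENCE_WIDTH = 1366        # screen width the range was tuned on (from the article)
REFERENCE_RANGE = (360, 900)  # accepted contour values at that width (from the article)


def scaled_range(screen_width):
    """Scale the accepted range linearly with screen width (an assumption, not the author's method)."""
    factor = screen_width / REFERENCE_WIDTH
    low, high = REFERENCE_RANGE
    return low * factor, high * factor


def rectangle_perimeter(width, height):
    return 2 * (width + height)


def keep_cell_sized(rectangles, screen_width=REFERENCE_WIDTH):
    """Keep (width, height) rectangles whose perimeter falls inside the scaled range."""
    low, high = scaled_range(screen_width)
    return [r for r in rectangles if low <= rectangle_perimeter(*r) <= high]


# Hypothetical candidates: a speck of noise, two cell-sized squares and the whole grid.
candidates = [(12, 8), (100, 100), (130, 130), (400, 380)]
print(keep_cell_sized(candidates))
```

On the reference screen the filter keeps the two cell-sized squares and drops both the noise and the outer frame.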

The main advantage of this approach to cell detection is that it does not matter what the grid looks like or how many cells the captcha page shows: 8, 9 or 16.

The image shows different captcha grids. Note that the spacing between cells varies. Morphological erosion makes it possible to separate the cells from one another.

An additional advantage of working with contours is that OpenCV lets us find their centers (we need them as the coordinates for cursor movement and mouse clicks).
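With OpenCV, a contour's center is commonly derived from its image moments. As a library-free illustration of the same idea, the sketch below computes the area centroid of a simple polygon with the shoelace formula. The function `polygon_centroid` and the sample cell are hypothetical and not taken from the prototype.

```python
def polygon_centroid(vertices):
    """Area centroid of a simple polygon given as a list of (x, y) vertices."""
    twice_area = 0.0
    cx = 0.0
    cy = 0.0
    n = len(vertices)
    for i in range(n):
        x0, y0 = vertices[i]
        x1, y1 = vertices[(i + 1) % n]  # wrap around to close the polygon
        cross = x0 * y1 - x1 * y0
        twice_area += cross
        cx += (x0 + x1) * cross
        cy += (y0 + y1) * cross
    if twice_area == 0:
        raise ValueError("degenerate polygon has no area centroid")
    return cx / (3 * twice_area), cy / (3 * twice_area)


# A hypothetical 120 x 120 pixel cell given by its four corners.
cell = [(100, 200), (220, 200), (220, 320), (100, 320)]
print(polygon_centroid(cell))
```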

Choosing cells to select

With an array of clean cell contours, free of noise contours, we can loop over each captcha cell (a "contour" in OpenCV terms) and check whether it intersects a "box" of a detected object returned by the neural network.

At first, I tested this by converting the detected "box" into a contour similar to a cell. That approach turned out to be wrong, because the case where the object lies entirely inside a cell is not counted as an intersection. Naturally, such cells were not selected in the captcha.

The problem was solved by redrawing the contour of each cell, filled with white, on a black canvas. A binary image of the object's box was obtained in the same way. How can we now tell whether a cell intersects the filled box? On each iteration over the array of cells, a conjunction (logical AND) is applied to the two binary images. The result is a new binary image in which only the overlapping areas are highlighted. If any such area exists, the cell and the object's box intersect. In code, the check can be done with the .any() method: it returns True if the array contains at least one nonzero element and False if there are none.

For the "logical AND" image in this case, .any() returns True, which establishes that the cell intersects the box of the detected object.
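The mask-based check can be sketched with NumPy alone. The sketch simplifies cells and object boxes to axis-aligned rectangles given as (x0, y0, x1, y1), and the 300 x 300 canvas size is an assumption; `filled_mask` and `intersects` are hypothetical names.

```python
import numpy as np


def filled_mask(shape, box):
    """Black canvas of the given (height, width) with the box (x0, y0, x1, y1) filled with ones."""
    mask = np.zeros(shape, dtype=np.uint8)
    x0, y0, x1, y1 = box
    mask[y0:y1, x0:x1] = 1
    return mask


def intersects(cell_box, object_box, shape=(300, 300)):
    """True if the filled cell and the filled object box share at least one pixel."""
    overlap = np.logical_and(filled_mask(shape, cell_box), filled_mask(shape, object_box))
    return bool(overlap.any())


cell = (0, 0, 100, 100)
print(intersects(cell, (20, 20, 60, 60)))      # object entirely inside the cell
print(intersects(cell, (150, 150, 250, 250)))  # object in another part of the image
```

Because both shapes are filled before they are combined, an object that lies entirely inside a cell still produces overlapping pixels, which is exactly the case the outline-based comparison missed.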

Control

Cursor control in Python is available through the win32api module (it later turned out that PyAutoGUI, already imported into the project, can do this too). Pressing and releasing the left mouse button and moving the cursor to given coordinates are handled by the corresponding win32api functions. In the prototype, however, they are wrapped in custom functions so that the cursor's movement can be observed visually. This hurts performance and was done solely for demonstration.

During development, the idea arose of selecting the cells in random order. It may have no practical value (for obvious reasons, Google does not publish comments on or descriptions of how the captcha works), but a cursor moving chaotically over the cells looks more fun.

The animation shows the result of "random.shuffle(boxesForSelect)".

Text recognition

To combine everything built so far into a single whole, one more link is needed: a block that recognizes the class the captcha asks for. We can already recognize and distinguish objects in the image and click on arbitrary captcha cells, but we do not know which cells to click. One way to solve this is to recognize the text in the captcha header. I first tried to implement text recognition with the Tesseract-OCR optical character recognition tool.

In recent versions, language packs can be installed directly in the installer window (previously this was done manually). After installing Tesseract-OCR and importing it into my project, I tried to recognize the text in the captcha header.

The result, unfortunately, did not impress me at all. I assumed that the header text is set in bold and in a consistent style for a reason, so I tried applying various transformations to the image: binarization, erosion, dilation, blurring, distortion and resizing. Unfortunately, this did not help: at best, only some of the letters of the class name were recognized, and when a result was satisfactory, applying the same transformations to other headers (with different text) gave poor results again.

Recognizing the header with Tesseract-OCR usually gave unsatisfactory results.

This does not mean that Tesseract-OCR recognizes text poorly in general: the tool copes much better with other images (not captcha headers).

I decided to use a third-party service that offers a free API (it requires registration, and the key is sent by email). The service has a limit of 500 recognitions per day, but I never ran into it during the entire development period. On the contrary: I submitted the original header image to the service (with no transformations at all) and was pleasantly surprised by the result.

The service returned the words with almost no errors (usually even those in small print). Moreover, they came back in a very convenient format: split into lines with newline characters. In every image I was only interested in the second line, so I accessed it directly. This was a relief, since the format freed me from having to prepare the string: I did not have to cut off the beginning or end of the text, trim, replace, use regular expressions or otherwise process the string to extract one word (and sometimes two!). A nice bonus!

```python
text = serviceResponse['ParsedResults'][0]['ParsedText']  # text field of the JSON response
lines = text.splitlines()  # split the recognized text into lines
print("Recognized " + lines[1])  # the second line holds the class name
```

The service almost never got the class name wrong, but I still decided to match on only part of the class name in case of an error. This is optional, but I had noticed that Tesseract-OCR sometimes misrecognized the end of a word, starting from the middle. This approach also prevents an application error when the class name is long or consists of two words (in that case the service returns 4 lines instead of 3, and the second line does not contain the full class name).

The third-party service recognizes the class name well without any transformations of the image.
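Matching on only the beginning of the class name can be sketched as follows. The helper `requested_stem`, the five-character stem length and the sample texts are assumptions for illustration; the real header wording may differ.

```python
def requested_stem(parsed_text, stem_length=5):
    """Return a lowercase prefix of the second line of the OCR text, or None if there is no such line."""
    lines = parsed_text.splitlines()
    if len(lines) < 2:
        return None
    # Keep only the beginning of the word so that a misread ending does not break the match.
    return lines[1].strip().lower()[:stem_length]


# Hypothetical recognized texts: a three-line header and a four-line header with a two-word class.
three_lines = "Select all images with\nBuses\nClick verify once there are none left"
four_lines = "Select all squares with\nTraffic\nlights\nIf there are none, click skip"

print(requested_stem(three_lines))
print(requested_stem(four_lines))
```

When a two-word class name is split across lines, the second line still starts with the same stem, so the lookup does not depend on how many lines the service returns.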

Putting it all together

Getting the text from the header is not enough. It has to be matched against the class identifiers of the model, because the class array returned by the neural network contains class identifiers, not names, as one might expect. When a model is trained, a file that maps class names to their identifiers is usually created (the "label map"). I decided to take a simpler route and specify the class identifiers by hand, since the captcha asks for classes in Russian anyway (this can be changed, by the way):

```python
if "" in query:
    classNum = 3  # identifier from the "label map"
elif "" in query:
    classNum = 10
elif "" in query:
    classNum = 11
...
```

Everything described above runs in the main program loop: the objects' boxes, the cells and their intersections are determined, and cursor movements and clicks are performed. When a header is detected, its text is recognized. If the neural network cannot detect the required class, the image is shifted by an arbitrary amount up to 5 times (which changes the input to the neural network), and if nothing is detected after that, the program clicks the "Skip / Verify" button (its position is detected the same way as the cells and the header).
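Returning to the class lookup: the chain of substring checks can also be written as a small table. In the sketch below the identifiers 3, 10 and 11 come from the article's code, and in the standard COCO label map they correspond to car, traffic light and fire hydrant. The English keyword stems and the function `class_id_for` are hypothetical stand-ins, not the strings used in the prototype.

```python
# Keyword stems are hypothetical English stand-ins; the identifiers come from the article's code.
STEM_TO_CLASS_ID = {
    "car": 3,
    "traffic": 10,
    "hydrant": 11,
}


def class_id_for(query):
    """Return the identifier of the first stem found in the query, or None if nothing matches."""
    text = query.lower()
    for stem, class_id in STEM_TO_CLASS_ID.items():
        if stem in text:
            return class_id
    return None


print(class_id_for("Traffic lights"))
print(class_id_for("crosswalks"))
```

Returning None for an unknown class makes the case of a class missing from the model explicit, so the main loop can decide what to do with it.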

If you often decide captcha, you could observe a picture when the selected cell disappears, and a new one appears slowly and slowly in its place. Since the prototype was programmed to instantly go to the next page after selecting all the cells, I decided to make 3 second pauses in order to eliminate pressing the “Next” button without detecting objects on the slowly appearing cell.

The article would not be complete without a description of the most important thing: the check mark that shows the captcha has been passed. I decided that simple template matching could handle this task. It is worth noting that template matching is far from the best way to detect objects. For example, I had to set the detection sensitivity to “0.01” so that the function would stop seeing check marks everywhere but would still see one when it was really there. I handled the empty checkbox that greets the user and from which the captcha begins in the same way (there were no problems with sensitivity there).

Result

The result of all these steps is an application, which I tested on “Toaster”:

I have to admit that the video was not shot on the first attempt, since I often ran into requests for classes that are not available in the model (for example, pedestrian crossings, stairs or shop windows).

“Google reCAPTCHA” returns a value to the site that indicates how likely it is that the visitor is a robot, and site administrators, in turn, can set a threshold on this value. It is possible that “Toaster” had a relatively low threshold for passing the captcha. This would explain why the program passed the captcha rather easily even though it made two mistakes: it missed the traffic light on the first page and the fire hydrant on the fourth page of the captcha.

In addition to “Toaster”, experiments were conducted on the official reCAPTCHA demonstration page. They showed that after multiple erroneous detections (and missed detections) the captcha becomes extremely difficult even for a person: new classes are requested (such as tractors and palm trees), cells without objects (almost uniform in color) appear in the samples, and the number of pages to get through increases.

This was especially noticeable when I tried clicking on random cells whenever objects were not detected (because the class was missing from the model). So it is safe to say that random clicks will not solve the problem. To get out of such a “blockade” by the examiner, I reconnected to the Internet and cleared the browser data, because it was impossible to pass such a test: it was almost endless!

If you doubt your humanity, such an outcome is possible.

Development

If the article and the application are of interest to readers, I will be happy to continue the implementation and testing and to describe them in more detail.

The main goal is to detect classes that are not part of the current network, which would significantly improve the efficiency of the application. Right now there is an urgent need to recognize at least classes such as pedestrian crossings, shop windows and chimneys; I will describe how to retrain the model. During development, I made a short list of the most common classes:

- pedestrian crossings;

- fire hydrants;

- shop windows;

- chimneys;

- cars;

- buses;

- traffic lights;

- bicycles;

- vehicles;

- stairs;

- marks.

Improvements in the quality of object detection can also be achieved by using several models at the same time: this can degrade performance but increase accuracy.

Another way to improve the quality of object detection is to change the image fed to the neural network: in the video you can see that if no objects are detected, I repeatedly shift the image by an arbitrary amount (within 10 pixels horizontally and vertically), and this often reveals objects that were not detected before.
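The retry-with-shift idea can be sketched as follows. This is a minimal sketch under stated assumptions: `grab(region)` (returns an image of a screen region) and `detect(image)` (returns a list of detections) are hypothetical callables, and the shift is modeled as moving the capture region by up to 10 pixels, for at most 5 retries as in the main loop.

```python
import random


def detect_with_shifts(grab, detect, region, max_attempts=5, max_shift=10, rng=random):
    """Run detection on the region, retrying on randomly shifted copies.

    Returns (detections, number_of_shifts_used).
    """
    x, y, w, h = region
    for attempt in range(max_attempts + 1):  # attempt 0 is the unshifted region
        if attempt == 0:
            dx = dy = 0
        else:
            dx = rng.randint(-max_shift, max_shift)
            dy = rng.randint(-max_shift, max_shift)
        # Keep the shifted region on screen (no negative coordinates).
        shifted = (max(0, x + dx), max(0, y + dy), w, h)
        detections = detect(grab(shifted))
        if detections:
            return detections, attempt
    return [], max_attempts
```

If the function returns an empty list, the caller can fall back to pressing the skip button, as described for the main loop.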

Enlarging the image from a small square to a large one (up to 300 x 300 pixels) also helps to detect objects that were previously missed.

No objects were found on the left: the original square with a side of 100 pixels. On the right, the bus is found: an enlarged square up to 300 x 300 pixels.

Another interesting transformation is the removal of the white grid over the image by means of “OpenCV”: it is possible that the fire hydrant in the video was not detected for this reason (this class is present in the neural network).

On the left is the original image, and on the right is the one modified in a graphics editor: the grid is removed and the cells are moved towards each other.
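The same transformation could be done in code by cutting out the cells and stitching them back together without the gaps. The sketch below is only an illustration: it assumes a regular grid with a known number of rows and columns and a uniform gap width between cells (all hypothetical parameters), and it uses NumPy slicing, which works directly on images loaded with OpenCV.

```python
import numpy as np


def remove_grid(image, rows, cols, gap):
    """Cut out the cells of a regular grid and stitch them without the gaps."""
    height, width = image.shape[:2]
    cell_h = (height - gap * (rows - 1)) // rows
    cell_w = (width - gap * (cols - 1)) // cols
    stitched_rows = []
    for r in range(rows):
        top = r * (cell_h + gap)
        cells = []
        for c in range(cols):
            left = c * (cell_w + gap)
            cells.append(image[top:top + cell_h, left:left + cell_w])
        stitched_rows.append(np.hstack(cells))
    return np.vstack(stitched_rows)


# Synthetic demo: a 3 x 3 grid of black 10 px cells separated by 2 px white lines.
grid = np.full((34, 34), 255, dtype=np.uint8)
for r in range(3):
    for c in range(3):
        grid[r * 12:r * 12 + 10, c * 12:c * 12 + 10] = 0
clean = remove_grid(grid, rows=3, cols=3, gap=2)
```

In a real captcha the gap width and grid size would first have to be measured, for example from the cell positions found during cell detection.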

Conclusion

With this article I wanted to tell you that captcha is probably not the best protection against bots, and it is quite possible that soon there will be a need for new means of protection against automated systems.

The developed prototype, even in its incomplete state, demonstrates that when the required classes are present in the neural network model and transformations are applied to the images, it is possible to automate a process that should not be automated.

I would also like to draw Google’s attention to the fact that, in addition to the captcha circumvention method described in this article, there is another one in which the audio sample is transcribed. In my opinion, it is time to take measures to improve the quality of software products and algorithms that protect against robots.

From the content and essence of the material it may seem that I do not like “Google” and “reCAPTCHA” in particular, but this is far from the case, and if there is a follow-up, I will explain why.

The prototype was developed and demonstrated for educational purposes and to improve methods aimed at ensuring information security.

Thank you for your attention.

Source: https://habr.com/ru/post/449236/
