About the bias of artificial intelligence

tl; dr:

- Machine learning is looking for patterns in data. But artificial intelligence can be "biased" - that is, to find the wrong patterns. For example, a skin cancer detection system from a photograph may pay particular attention to images taken at a doctor’s office. Machine learning is not able to understand : its algorithms only reveal patterns in numbers, and if the data are not representative, so will the result of their processing. And to catch such bugs can be difficult because of the mechanics of machine learning.

- The most obvious and frightening problem area is human diversity. There are many reasons why data about people may lose their objectivity even at the collection stage. But one should not think that this problem concerns only people: exactly the same difficulties arise when trying to detect a flood in a warehouse or a failed gas turbine. Some systems may have prejudices about color, others will be biased towards Siemens sensors.

- Such problems are not new to machine learning, and are peculiar not only to him. Wrong assumptions are made in any complex structures, and to understand why this or that decision was made is always difficult. It is necessary to deal with this in a complex way: to create tools and processes for testing - and to form users so that they do not blindly follow the recommendations of the AI. Machine learning really does some things much better than us - but dogs, for example, are much more effective than people in detecting drugs, which is not a reason to involve them as witnesses and to make sentences based on their testimony. And dogs, by the way, are much smarter than any machine learning system.

Machine learning today is one of the most important fundamental technological trends. This is one of the main ways that technology will change the world around us in the next decade. Some aspects of these changes are worrying. For example, the potential impact of machine learning on the labor market, or its use for unethical purposes (for example, authoritarian regimes). There is another problem that this post is devoted to: the bias of artificial intelligence .

This is not an easy story.

AI from Google can find cats. This news from 2012 was something special then.

What is “AI bias”?

Raw data is both an oxymoron and a bad idea; data need to be well and carefully prepared. —Geoffrey Boker

Somewhere before 2013, in order to make a system that, say, recognizes cats in photos, you had to describe logical steps. How to find corners on the image, recognize eyes, analyze textures for the presence of fur, count paws, and so on. Then collect all the components - and find that it all does not really work. Approximately like a mechanical horse - theoretically it can be done, but in practice it is too complicated to describe. At the exit you have hundreds (or even thousands) of handwritten rules. And not a single working model.

With the advent of machine learning, we no longer use the “manual” rules for recognizing an object. Instead, we take a thousand samples of "that", X, a thousand samples of "another", Y, and force the computer to build a model based on their statistical analysis. Then we give this model some sample data, and it determines with some accuracy whether it fits one of the sets. Machine learning generates a model based on data, and not with the help of the person who writes it. The results are impressive, especially in the field of image and pattern recognition, and that is why the entire industry is now moving to machine learning (ML).

But not everything is so simple. In the real world, your thousands of examples of X or Y also contain A, B, J, L, O, R, and even L. They may be unevenly distributed, and some of them may occur so often that the system will pay more attention to them than on objects that interest you.

What does this mean in practice? My favorite example is when image recognition systems look at a grassy hill and say, "sheep . " It is clear why: most of the photographs of the examples of the "sheep" are made in the meadows where they live, and in these images the grass takes up much more space than little white fluffy trees, and it is the grass of the system that is considered the most important.

There are more serious examples. From a recent one, one project for detecting skin cancer in photographs. It turned out that dermatologists often photograph a ruler along with skin cancer manifestations in order to fix the size of the lesions. On examples of photos of healthy skin there are no lines. For an AI system, such rulers (more precisely, pixels, which we define as a “ruler”) became one of the differences between sets of examples, and sometimes more important than a small skin rash. So the system created for the recognition of skin cancer, sometimes instead recognized the ruler.

The key point here is that the system does not have a semantic understanding of what it is looking at. We look at a set of pixels and see a sheep, skin or rulers in them, and the system is just a numeric string. She does not see three-dimensional space, does not see any objects, nor textures, nor sheep. She simply sees patterns in the data.

The difficulty of diagnosing such problems is that the neural network (the model generated by your machine learning system) consists of thousands of thousands of nodes. There is no easy way to look at a model and see how it makes a decision. Having such a method would mean that the process is simple enough to describe all the rules manually, without using machine learning. People worry that machine learning has become a kind of “black box”. (I will explain a little later why this comparison is still a bust.)

This, in general terms, is the problem of bias in artificial intelligence or machine learning: a system for finding patterns in the data may find incorrect patterns, but you may not notice it. This is a fundamental characteristic of technology, and this is obvious to everyone who works with it in scientific circles and in large technology companies. But its consequences are complex, and our possible solutions to these effects are, too.

Let's talk first about the consequences.

The AI may implicitly make a choice in favor of certain categories of people based on a large number of imperceptible signals.

AI bias scenarios

The most obvious and frightening that this problem can manifest itself when it comes to human diversity. Recently there was a rumor that Amazon tried to build a machine learning system for the initial screening of candidates for employment. Since there are more men among Amazon workers, the examples of “successful hiring” are also more often male, and there were more men in the list of resumes proposed by the system. Amazon noticed this and did not release the system in production.

The most important thing in this example is that the system, according to rumors, favored male candidates, despite the fact that the gender was not indicated in the resume. The system saw other patterns in the examples of “successful recruitment”: for example, women can use special words to describe achievements, or have particular hobbies. Of course, the system did not know what “hockey” is, who such “people” are, or what “success” is, it simply carried out a statistical analysis of the text. But the patterns that she saw would most likely have remained unnoticed by a person, and some of them (for example, the fact that people of different sexes describe success in different ways) would probably be difficult for us to see, even looking at them.

Further worse. A machine learning system that finds cancer on pale skin very well may work worse with dark skin, or vice versa. Not necessarily because of bias, but because you probably need to build a separate model for a different skin color, choosing different characteristics. Machine learning systems are not interchangeable even in such a narrow area as image recognition. You need to tune the system, sometimes simply by trial and error, to notice well the features in the data of interest to you, until you reach the desired accuracy. But you may not notice that the system in 98% of cases is accurate when working with one group and only 91% (even if it is more accurate than a human analysis) on the other.

So far I have mainly used examples related to people and their characteristics. The discussion around this problem is mainly focused on this topic. But it is important to understand that bias towards people is only part of the problem. We will use machine learning for a variety of things, and the sampling error will be relevant for all of them. On the other hand, if you work with people, data bias may not be related to them.

To understand this, let’s go back to the skin cancer example and consider three hypothetical possibilities for system failure.

- Non-uniform distribution of people: an unbalanced number of photographs of skin of different tones, which leads to false-positive or false-negative results associated with pigmentation.

- The data on which the system is trained contain a frequently occurring and non-uniformly distributed characteristic that is not related to humans and does not have diagnostic value: a ruler on photos of skin cancer manifestations or grass on photos of sheep. In this case, the result will be different if on the image the system finds the pixels of something that the human eye defines as a “ruler”.

- The data contain a third-party characteristic that a person cannot see, even if he searches for it.

What does it mean? We know a priori that data can represent different groups of people in different ways, and at a minimum we can schedule a search for such exceptions. In other words, there are a lot of social reasons to assume that the data on groups of people already contain some prejudice. If we look at the photo with the ruler, we will see this ruler - we just ignored it before, knowing that it does not matter, and forgetting that the system does not know anything.

But what if all your photos of unhealthy skin were taken in an office where incandescent bulbs are used, and healthy ones with fluorescent light? What if, having finished taking off healthy skin, before shooting unhealthy, you updated the operating system on your phone, and Apple or Google slightly changed the noise reduction algorithm? A person does not notice this, no matter how much he looks for such features. And then the machine use system will immediately see and use it. She doesn't know anything.

So far we have been talking about false correlations, but it may happen that the data are accurate and the results are correct, but you do not want to use them for ethical, legal or managerial reasons. In some jurisdictions, for example, women cannot be given a discount on insurance, although women may be safer to drive a car. We can easily imagine a system that, when analyzing historical data, assigns a lower risk coefficient to female names. Ok, let's remove the names from the selection. But remember the example with Amazon: the system can determine the gender by other factors (although it doesn’t know what the floor is and what the machine is), and you won’t notice this until the controller backdating analyzes the tariffs you offer and doesn’t charge you are fine

Finally, it is often implied that we will use such systems only for projects that involve people and social interactions. This is not true. If you are making gas turbines, you probably want to apply machine learning to telemetry transmitted by tens or hundreds of sensors on your product (audio, video, temperature, and any other sensors generate data that can be very easily adapted to create a machine learning model ). Hypothetically, you can say: “Here are the data on a thousand turbines that were out of order, obtained before their breakdown, but data from a thousand turbines that did not break. Build a model to tell the difference between them. ” Well, now imagine that Siemens sensors are 75% bad turbines, and only 12% are good (there is no connection with failures). The system will build a model to find turbines with Siemens sensors. Oops!

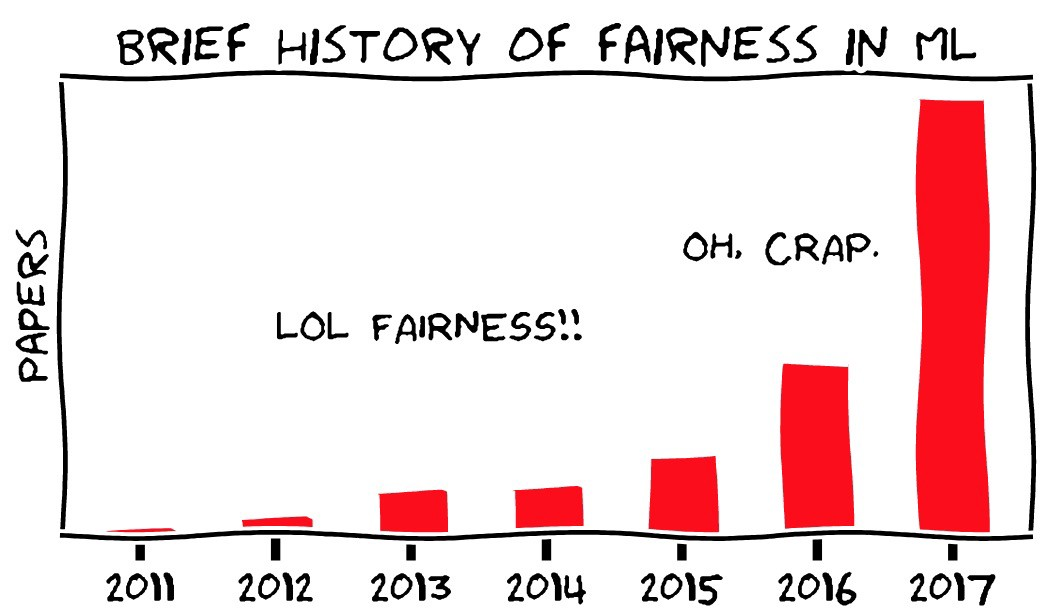

Picture - Moritz Hardt, UC Berkeley

AI bias control

What can we do about it? You can approach the issue from three sides:

- Methodological rigor in the collection and management of data for training the system.

- Technical tools for analyzing and diagnosing model behavior.

- Training, education and caution when introducing machine learning into products.

In Moliere’s The Bourgeois in the Nobility, there is a joke: one man was told that literature was divided into prose and poetry, and he discovered with admiration that he had spoken prose all his life without knowing it. Probably, statistics somehow feel today: without even noticing, they devoted their careers to artificial intelligence and sampling error. To look for a sampling error and worry about it is not a new problem, we just need to systematically approach its solution. As mentioned above, in some cases it is actually easier to do by studying the problems associated with data about people. We assume a priori that we may have prejudices regarding different groups of people, but it’s hard to even imagine a prejudice about Siemens sensors.

The new thing in all of this, of course, is that people are no longer engaged in statistical analysis directly. It is carried out by machines that create large complex models that are difficult to understand. The issue of transparency is one of the main aspects of the bias problem. We are afraid that the system is not just biased, but that there is no way to detect its bias, and that machine learning is different from other forms of automation, which are supposed to consist of clear logical steps that can be verified.

There are two problems here. We may still be able to conduct some kind of audit of machine learning systems. And auditing any other system is actually not at all easier.

First, one of the directions of modern research in the field of machine learning is the search for methods on how to identify the important functionality of machine learning systems. At the same time, machine learning (in its current state) is a completely new field of science, which is rapidly changing, so you should not think that impossible things today cannot soon become quite real. The OpenAI project is an interesting example.

Secondly, the idea that you can test and understand the decision-making process in existing systems or organizations is good in theory, but so-so in practice. Understanding how decisions are made in a large organization is not easy at all. Even if there exists a formal decision-making process, it does not reflect how people actually interact, and they themselves often do not have a logical systematic approach to making their decisions. As my colleague Vijay Pande said , people are black boxes too .

Take a thousand people in several overlapping companies and institutions, and the problem will become even more difficult. We know after the fact that the Space Shuttle was destined to fall apart when returning, and some people inside NASA had information that gave them reason to think that something bad could happen, but the system did not know it in general . NASA even just went through a similar audit, losing the previous shuttle, and yet it lost another one - for a very similar reason. It is easy to say that organizations and people follow clear logical rules that can be tested, understood and changed - but experience proves the opposite. This is the “ deception of the State Planning Committee ”.

I often compare machine learning with databases, especially with relational ones - a new fundamental technology that has changed the capabilities of computer science and the world around it, which has become a part of everything that we constantly use without realizing this. Databases also have problems, and they have similar properties: the system can be built on incorrect assumptions or on bad data, but it will be difficult to notice, and people using the system will do what she says to them without asking questions. There are a lot of old jokes about tax officials who once wrote your name wrong, and convincing them to correct the error is much more difficult than actually changing the name. You can think about this in different ways, but it is not clear how best: how about a technical problem in SQL, or about an error in the release of Oracle, or how a bureaucratic institution fails? How difficult is it to find an error in the process that led to the fact that the system does not have such features as correcting typos? Could you understand this before people started complaining?

Even more simply, this problem is illustrated by stories when drivers, because of outdated data in the navigator, move into rivers. OK, maps must be constantly updated. But how much TomTom is to blame for the fact that your car blows into the sea?

I say this to the fact that yes - the bias of machine learning will create problems. But these problems will be similar to those we have encountered in the past, and they can be noticed and solved (or not) approximately as well as we have managed in the past. Therefore, a scenario in which AI bias will cause damage is unlikely to happen to leading researchers working in a large organization. Most likely, some insignificant technological contractor or software vendor will write something on his knee, using open-source components, libraries and tools that are not clear to him. And the hapless client buys the phrase “artificial intelligence” in the product description and, without asking any questions, will distribute it to his low-paid workers, telling them to do what the AI says. This is exactly what happened with the databases. This is not an artificial intelligence problem, or even a software problem. This is a human factor.

Conclusion

Machine learning can do everything you can teach a dog - but you can never be sure what exactly you taught this dog.

It often seems to me that the term “artificial intelligence” only makes it difficult to enter conversations like this. This term creates the false impression that we actually created it - this intelligence. What we are on the way to HAL9000 or Skynet - to something that actually understands . But no. These are just cars, and it is much more correct to compare them, say, with a washing machine. It is much better for a person to do laundry, but if you put dishes in it instead of laundry, she will ... wash it. The dishes will even be clean. But it will not be what you expected, and it will not happen because the system has some preconceptions about the dishes. A washing machine does not know what dishes are, or what clothes are - this is just an example of automation, conceptually no different from how processes were automated before.

Whatever it is about - machines, airplanes or databases - these systems will be very powerful and very limited at the same time. They will depend entirely on how people use these systems, whether they have good or bad intentions and how they understand their work.

Therefore, to say that “artificial intelligence is mathematics, therefore it cannot have prejudices” is absolutely not true. But it is just as wrong to say that machine learning is "subjective in nature." Machine learning finds patterns in the data, and what patterns it finds depends on the data, and the data depends on us. Like what we do with them. Machine learning really does some things much better than us - but dogs, for example, are much more effective than people in detecting drugs, which is not a reason to involve them as witnesses and to make sentences based on their testimony. And dogs, by the way, are much smarter than any machine learning system.

Translation: Diana Letskaya .

Editing: Alexey Ivanov .

Community: @PonchikNews .

')

Source: https://habr.com/ru/post/449224/

All Articles