Getting started with FFmpeg in Visual Studio

Hello! First, some background: I am developing a license plate recognition program for a cheap, low-power processor such as the Intel Atom Z8350. We have achieved quite good results recognizing Russian plates in still images (up to 97%) at good speed, without using neural networks. Only one small thing remains: working with an IP camera (Fig. 1).

Fig. 1. Intel Atom Z83II computer and ATIS IP camera

FFmpeg is a set of libraries for building video applications and general-purpose utilities. It takes care of the hard work of video processing for you: decoding, encoding, multiplexing and demultiplexing.


Task: a Full HD IP camera transmits a standard H.264 stream over RTSP. The decoded frame is 1920x1080 pixels, and the frame rate is 25 frames per second. We need to receive the decoded frames in RAM and save every 25th frame to disk.
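To get a feel for the amount of data behind these numbers, here is a back-of-the-envelope sketch. It assumes the decoder outputs YUV 4:2:0 (common for H.264 cameras, but not confirmed for this one), that the frame dimensions are even, and that frames are then converted to 24-bit RGB. The function names are made up for illustration.

```cpp
#include <cstdint>

// Bytes in one tightly packed RGB24 frame (3 bytes per pixel).
constexpr std::uint64_t rgb24FrameBytes(std::uint64_t width, std::uint64_t height) {
    return width * height * 3;
}

// Bytes in one YUV 4:2:0 frame (assumed decoder output): a full-resolution
// luma plane plus two chroma planes at quarter resolution, 1.5 bytes per pixel.
constexpr std::uint64_t yuv420FrameBytes(std::uint64_t width, std::uint64_t height) {
    return width * height * 3 / 2;
}

// Bytes produced per second at the given frame rate.
constexpr std::uint64_t bytesPerSecond(std::uint64_t frameBytes, std::uint64_t fps) {
    return frameBytes * fps;
}
```

For the camera in the task, `rgb24FrameBytes(1920, 1080)` gives 6,220,800 bytes (about 6.2 MB) per frame, and `bytesPerSecond(rgb24FrameBytes(1920, 1080), 25)` gives 155,520,000 bytes, roughly 155 MB of RGB data per second if every frame is converted.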

In this example, we will decode frames in software. The goal is to learn how to use FFmpeg and later compare the results with those obtained using hardware decoding. As you will see, FFmpeg is easy!

Installing FFmpeg: many people suggest building FFmpeg yourself for your hardware. I suggest using the Zeranoe builds instead, which greatly simplifies the task. Importantly, the Zeranoe builds include DXVA2 support, which will come in handy later for hardware decoding.

Go to https://ffmpeg.zeranoe.com/builds/ and download two archives, shared and dev, after choosing the 32-bit or 64-bit version. The dev archive contains the import libraries (.lib) and the include files. The shared archive contains the required .dll files, which must be copied to the folder with your future program.

So, create a C:\ffmpeg folder and copy the files from the dev archive into it.

Connecting FFmpeg to Visual Studio 2017: create a new project and open its properties (Project > Properties). Under C/C++, select "Additional Include Directories" and set the value to "C:\ffmpeg\dev\include;". Then go to Linker > Additional Library Directories and set the value to "C:\ffmpeg\dev\lib;". That's it: FFmpeg is connected to our project.

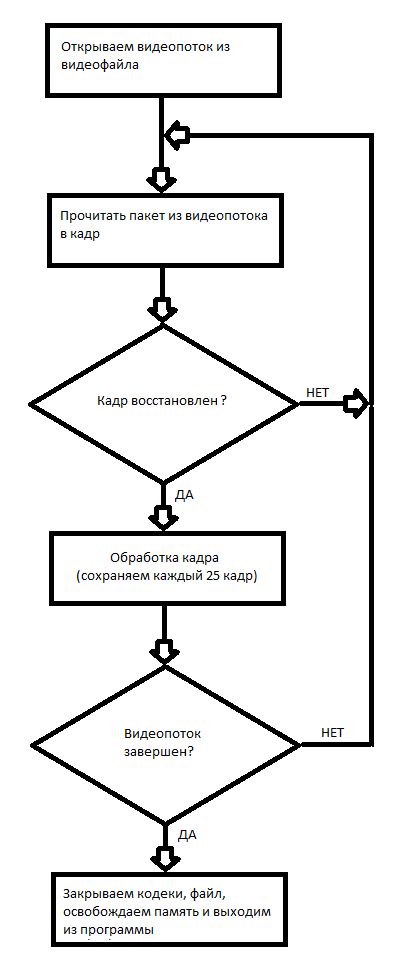

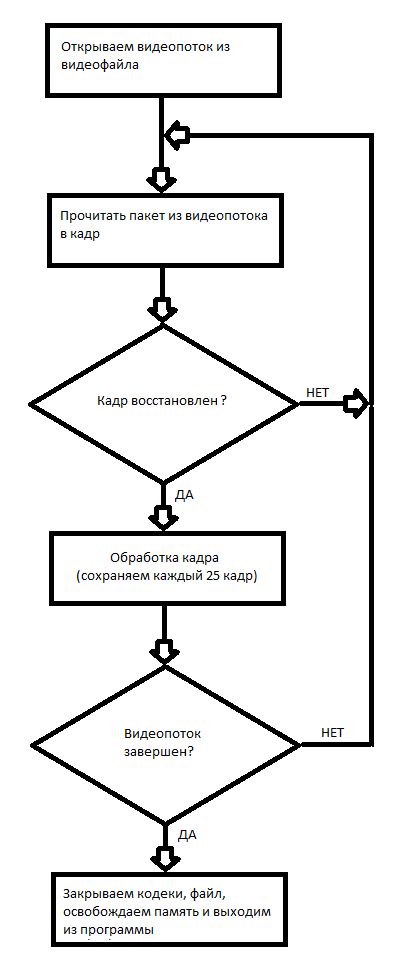

The first project with FFmpeg: software video decoding and saving every 25th frame to disk. The principle of working with a video file in FFmpeg is shown in the flowchart in Fig. 2.

Fig. 2. Block diagram of working with a video file.

Here is the C++ project code.

```cpp
// Based on the tutorial at http://dranger.com/ffmpeg/tutorial01.html
#include "pch.h"

extern "C"
{
#include <libavcodec/avcodec.h>
#include <libavformat/avformat.h>
#include <libswscale/swscale.h>
#include <libavformat/avio.h>
#include <libavutil/pixdesc.h>
#include <libavutil/hwcontext.h>
#include <libavutil/opt.h>
#include <libavutil/avassert.h>
#include <libavutil/imgutils.h>
#include <libavutil/motion_vector.h>
#include <libavutil/frame.h>
}

#include <stdio.h>
#include <stdlib.h>
#include <string.h>

// Link against the FFmpeg import libraries from the dev archive.
#pragma comment(lib, "avcodec.lib")
#pragma comment(lib, "avformat.lib")
#pragma comment(lib, "swscale.lib")
#pragma comment(lib, "avdevice.lib")
#pragma comment(lib, "avutil.lib")
#pragma comment(lib, "avfilter.lib")
#pragma comment(lib, "postproc.lib")
#pragma comment(lib, "swresample.lib")

// Silence MSVC warning C4996 (deprecated functions).
#define _CRT_SECURE_NO_WARNINGS
#pragma warning(disable : 4996)

// compatibility with newer API
#if LIBAVCODEC_VERSION_INT < AV_VERSION_INT(55,28,1)
#define av_frame_alloc avcodec_alloc_frame
#define av_frame_free avcodec_free_frame
#endif

// Saves an RGB24 frame to disk as frame<iFrame>.ppm.
void SaveFrame(AVFrame *pFrame, int width, int height, int iFrame)
{
    FILE *pFile;
    char szFilename[32];
    int y;

    // Open the file
    sprintf(szFilename, "frame%d.ppm", iFrame);
    pFile = fopen(szFilename, "wb");
    if (pFile == NULL)
        return;

    // Write the header
    fprintf(pFile, "P6\n%d %d\n255\n", width, height);

    // Write the pixel data row by row (rows in memory may be padded)
    for (y = 0; y < height; y++)
        fwrite(pFrame->data[0] + y * pFrame->linesize[0], 1, width * 3, pFile);

    // Close the file
    fclose(pFile);
}

int main(int argc, char *argv[])
{
    AVFormatContext *pFormatCtx = NULL;
    int i, videoStream;
    AVCodecContext *pCodecCtxOrig = NULL;
    AVCodecContext *pCodecCtx = NULL;
    AVCodec *pCodec = NULL;
    AVFrame *pFrame = NULL;
    AVFrame *pFrameRGB = NULL;
    AVPacket packet;
    int frameFinished;
    int numBytes;
    uint8_t *buffer = NULL;
    struct SwsContext *sws_ctx = NULL;

    if (argc < 2) {
        printf("Please provide a movie file\n");
        return -1;
    }

    // Register all formats and codecs
    av_register_all();

    // Open the video file or stream
    if (avformat_open_input(&pFormatCtx, argv[1], NULL, NULL) != 0)
        return -1; // Could not open the input

    // Retrieve stream information
    if (avformat_find_stream_info(pFormatCtx, NULL) < 0)
        return -1; // Could not find stream information

    // Dump information about the input: duration, bitrate, streams
    av_dump_format(pFormatCtx, 0, argv[1], 0);

    // Find the first video stream
    videoStream = -1;
    for (i = 0; i < (int)pFormatCtx->nb_streams; i++)
        if (pFormatCtx->streams[i]->codec->codec_type == AVMEDIA_TYPE_VIDEO) {
            videoStream = i;
            break;
        }
    if (videoStream == -1)
        return -1; // Did not find a video stream

    // Get a pointer to the codec context of the video stream
    pCodecCtxOrig = pFormatCtx->streams[videoStream]->codec;

    // Find the decoder for the video stream
    pCodec = avcodec_find_decoder(pCodecCtxOrig->codec_id);
    if (pCodec == NULL) {
        fprintf(stderr, "Unsupported codec!\n");
        return -1; // Codec not found
    }

    // Copy the codec context
    pCodecCtx = avcodec_alloc_context3(pCodec);
    if (avcodec_copy_context(pCodecCtx, pCodecCtxOrig) != 0) {
        fprintf(stderr, "Couldn't copy codec context\n");
        return -1; // Error copying the codec context
    }

    // Open the codec
    if (avcodec_open2(pCodecCtx, pCodec, NULL) < 0)
        return -1; // Could not open the codec

    // Allocate the frame for decoded video
    pFrame = av_frame_alloc();
    if (pFrame == NULL)
        return -1;

    // Allocate the frame for the RGB image
    pFrameRGB = av_frame_alloc();
    if (pFrameRGB == NULL)
        return -1;

    // Determine the required buffer size and allocate the buffer
    numBytes = avpicture_get_size(AV_PIX_FMT_RGB24, pCodecCtx->width,
                                  pCodecCtx->height);
    buffer = (uint8_t *)av_malloc(numBytes * sizeof(uint8_t));

    // Attach the buffer to the image planes of pFrameRGB
    avpicture_fill((AVPicture *)pFrameRGB, buffer, AV_PIX_FMT_RGB24,
                   pCodecCtx->width, pCodecCtx->height);

    // Initialize the SWS context for conversion to RGB
    sws_ctx = sws_getContext(pCodecCtx->width, pCodecCtx->height,
                             pCodecCtx->pix_fmt,
                             pCodecCtx->width, pCodecCtx->height,
                             AV_PIX_FMT_RGB24, SWS_BILINEAR,
                             NULL, NULL, NULL);

    // Read packets and save every 25th decoded frame to disk
    i = 0;
    while (av_read_frame(pFormatCtx, &packet) >= 0) {
        // Is this a packet from the video stream?
        if (packet.stream_index == videoStream) {
            // Decode the video frame
            avcodec_decode_video2(pCodecCtx, pFrame, &frameFinished, &packet);

            // Did we get a complete video frame?
            if (frameFinished) {
                // Convert the image from its native format to RGB
                sws_scale(sws_ctx, (uint8_t const * const *)pFrame->data,
                          pFrame->linesize, 0, pCodecCtx->height,
                          pFrameRGB->data, pFrameRGB->linesize);

                // Save the frame to disk
                if (++i % 25 == 0)
                    SaveFrame(pFrameRGB, pCodecCtx->width, pCodecCtx->height, i);
            }
        }

        // Free the packet that was allocated by av_read_frame
        av_free_packet(&packet);
    }

    // Free everything
    av_free(buffer);
    av_frame_free(&pFrameRGB);
    av_frame_free(&pFrame);
    avcodec_close(pCodecCtx);
    avcodec_close(pCodecCtxOrig);
    avformat_close_input(&pFormatCtx);

    return 0;
}
```

Since my IP camera has the address 192.168.1.168, the program is called like this:

```
decode.exe rtsp://192.168.1.168
```

This example can also decode video files: just pass the path to the file instead of the camera address.

So, in this example we learned how to decode video in software and save the resulting frames to disk. Frames are saved in .ppm format. On Windows, you can open these files with IrfanView 64 or GIMP.
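For readers unfamiliar with the format: a binary PPM file is a short text header followed by raw RGB bytes. The sketch below writes one using only the C++ standard library, so the saving step can be tried without FFmpeg. The function name `writePpm` and its `stride` parameter (bytes per row in memory, playing the role of `linesize[0]` in the project code) are illustrative and not part of the original project.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <cstdio>

// Writes a 24-bit RGB image as a binary PPM (P6) file.
// Rows in memory may be padded: `stride` is the distance in bytes between
// the starts of consecutive rows and must be at least width * 3.
// Returns true if the whole file was written successfully.
bool writePpm(const char* path, const std::uint8_t* pixels,
              int width, int height, int stride) {
    // Caller errors: missing buffer, empty image, or rows shorter than the image.
    assert(pixels != nullptr && width > 0 && height > 0 && stride >= width * 3);

    std::FILE* file = std::fopen(path, "wb");  // binary mode matters on Windows
    if (file == nullptr) return false;

    // Header: magic number, width, height, maximum channel value.
    bool ok = std::fprintf(file, "P6\n%d %d\n255\n", width, height) > 0;

    // Copy only the visible part of each row, skipping any padding.
    const std::size_t rowBytes = static_cast<std::size_t>(width) * 3;
    for (int y = 0; ok && y < height; ++y) {
        const std::uint8_t* row = pixels + static_cast<std::size_t>(y) * stride;
        ok = std::fwrite(row, 1, rowBytes, file) == rowBytes;
    }

    // Always close the file, even after a failed write.
    return (std::fclose(file) == 0) && ok;
}
```

Calling `writePpm("test.ppm", data, 2, 2, 8)` on a 2x2 image whose rows are padded to 8 bytes produces an 11-byte header followed by 12 bytes of pixel data. Writing row by row rather than in one `fwrite` call is what makes padded buffers safe to save.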

Conclusion: software decoding of a Full HD H.264 RTSP stream occupies up to two cores of the Intel Atom Z8350. Moreover, packets are lost periodically, so some frames are decoded incorrectly. This method is better suited to decoding recorded video files, where real-time operation is not required.

In the next article I will explain how to decode an RTSP stream in hardware.

Archive with the project

Working program

Links to materials on FFmpeg:

1. An FFmpeg tutorial (slightly out of date).

2. Various useful information on FFmpeg.

3. Information on the use of various libraries provided by FFmpeg.

Source: https://habr.com/ru/post/449198/
