Operating Systems: Three Easy Pieces. Part 4: Scheduler Introduction (Translation)

Introduction to operating systems

Hi, Habr! I want to bring to your attention a series of articles-translations of one interesting in my opinion literature - OSTEP. This material takes a rather in-depth look at the work of unix-like operating systems, namely, working with processes, various schedulers, memory, and other similar components that make up a modern OS. The original of all materials you can see here . Please note that the translation was made unprofessionally (fairly freely), but I hope I saved the general meaning.

Laboratory work on this subject can be found here:

Other parts:

')

- Part 1: Intro

- Part 2: Abstraction: the process

- Part 3: Introduction to the Process API

- Part 4: Scheduler Introduction

And you can also look to me on the channel in the telegram =)

Scheduler Introduction

The crux of the problem: How to develop a policy planner

How should basic scheduler frameworks be developed? What should be the key assumptions? What metrics are important? What are the basic techniques used in early computing systems?

Workload assumptions

Before discussing possible policies, let's start with a few simplifying digressions about the processes running in the system, which are collectively called workload . Defining the workload as a critical part of building a policy and the more you know about the workload, the better the quality policy you can write.

We make the following assumptions about the processes running on the system, sometimes also called jobs . Practically all these assumptions are not realistic, but are necessary for the development of thought.

- Each task is running the same amount of time.

- All tasks are set simultaneously

- The task is working until its completion,

- All tasks use only CPU,

- The time of each task is known.

Scheduler Metrics

In addition to some load assumptions, some other tool for comparing different scheduling policies is needed: scheduler metrics. A metric is just some measure of something. There are a number of metrics that can be used to compare planners.

For example, we will use a metric called turnaround time. The task turnaround time is defined as the difference between the task completion time and the task arrival time in the system.

Tturnaround = Tcompletion − Tarrival

Since we assumed that all tasks arrived at the same time, Ta = 0 and thus Tt = Tc. This value will naturally change when we change the above assumptions.

Another metric is fairness . Productivity and honesty are often opposing characteristics in planning. For example, the scheduler can optimize performance, but at the cost of waiting for other tasks to run, thus honesty is reduced.

FIRST IN FIRST OUT (FIFO)

The most basic algorithm that we can implement is called FIFO or first come (in), first served (out) . This algorithm has several advantages: it is very simple to implement and it fits all our assumptions, doing the job quite well.

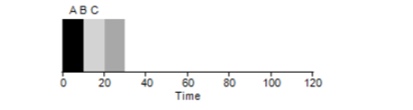

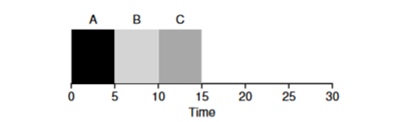

Consider a simple example. Suppose 3 tasks were set at the same time. But suppose that task A came a bit earlier than all the others, so the list of execution will be earlier than the others, just like B with respect to B. Suppose that each of them will be executed for 10 seconds. What would be the average time to complete these tasks?

By counting the values - 10 + 20 + 30 and dividing by 3, we get the average program execution time equal to 20 seconds.

Now we will try to change our assumptions. In particular, assumption 1 and thus we will no longer assume that each task is executed the same amount of time. How will the FIFO show itself this time?

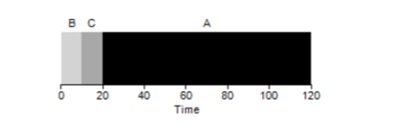

As it turns out, the different execution times of the tasks extremely negatively affect the productivity of the FIFO algorithm. Suppose that task A is executed 100 seconds, while B and C will still be 10 each.

As can be seen from the figure, the average time for the system is (100 + 110 + 120) / 3 = 110. This effect is called the convoy effect , when some short-term consumers of any resource will stand in line after a heavy consumer. It’s like a line at a grocery store when you’re in front of you with a full cart. The best solution is to try to change the cashier or relax and breathe deeply.

Shortest job first

Is it possible to somehow solve a similar situation with heavy processes? Of course. Another type of scheduling is called Shortest Job First (SJF). Its algorithm is also quite primitive - as the name implies, the shortest tasks will be launched first one by one.

In this example, the result of running the same processes will be an improvement in the average turnover time of programs and it will be 50 instead of 110 , which is almost 2 times better.

Thus, for the given assumption that all tasks arrive at the same time, the SJF algorithm seems to be the most optimal algorithm. However, our assumptions still do not seem realistic. This time we change Assumption 2 and this time imagine that tasks can be at any time, and not all at the same time. What problems can it cause?

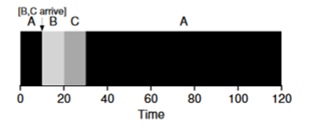

Imagine that task A (100s) arrives at the very first and starts to be executed. At time t = 10, tasks B, C arrive, each of which will take 10 seconds. Thus, the average execution time is (100+ (110-10) + (120-10)) \ 3 = 103. What could the planner do to improve the situation?

Shortest Time-to-Completion First (STCF)

In order to improve the situation, we omit assumption 3 that the program is up and running until completion. In addition, we will need hardware support and, as you might guess, we will use a timer to interrupt a running task and switch contexts . Thus, the scheduler can do something at the moment of receipt of tasks B, C - stop the execution of task A and put tasks B and C into processing and after they finish continue the process A. Such a scheduler is called STCF or Preemptive Job First .

The result of this scheduler will be the following result: ((120-0) + (20-10) + (30-10)) / 3 = 50. Thus, such a scheduler becomes even more optimal for our tasks.

Metric Response Time (Response Time)

Thus, if we know the running time of the tasks and the fact that these tasks use only the CPU, STCF will be the best solution. And once in early times, these algorithms worked and pretty well. However, now the user spends most of the time behind the terminal and expects productive interactive interaction from it. Thus, a new metric was born - the response time.

The response time is calculated as follows:

Tresponse = Tfirstrun − Tarrival

Thus, for the previous example, the response time will be: A = 0, B = 0, B = 10 (abg = 3.33).

And the STCF algorithm turns out to be not so good in a situation when 3 tasks arrive at the same time - it will have to wait until the small tasks are completely completed. Thus, the algorithm is good for the turnover time metric, but bad for the interactivity metric. Imagine that sitting at the terminal in an attempt to print characters in the editor you would have to wait more than 10 seconds, because some other task takes the processor. This is not very nice.

So we face another problem - how can we build a scheduler that is sensitive to response time?

Round robin

To solve this problem, a round robin (RR) algorithm was developed. The basic idea is quite simple: instead of starting tasks to complete, we will run the task for a certain period of time (called a time slice) and then switch to another task from the queue. The algorithm repeats its work until all tasks are completed. At the same time, the program must be a multiple of the time after which the timer will interrupt the process. For example, if a timer interrupts the process every x = 10ms, then the size of the process execution window should be a multiple of 10 and be 10.20 or x * 10.

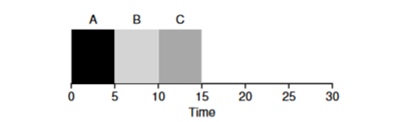

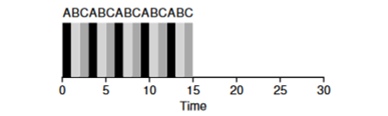

Consider an example: ABC tasks arrive simultaneously in the system and each of them wants to work for 5 seconds. The SJF algorithm will perform each task to the end, before running another. In contrast, the RR algorithm with the launch window = 1c will go through the tasks as follows (Fig. 4.3):

(SJF Again (Bad for Response Time)

(Round Robin (Good For Response Time)

The average response time for the algorithm RR (0 + 1 + 2) / 3 = 1, whereas for SJF (0 + 5 + 10) / 3 = 5.

It is logical to assume that the time window is a very important parameter for RR, the smaller it is, the higher the response time. However, it cannot be made too small, since the time for context switching will also play its part in overall performance. Thus, the timing of the execution window is set by the OS architect and depends on the tasks that it plans to perform. Context switching is not the only service operation that spends time - the running program still has many things to operate, for example, different caches and with each switch it is necessary to save and restore this environment, which can also take a lot of time.

RR is a great scheduler if it were only a response time metric. But how will the task time metric behave with this algorithm? Consider the example above when the operating time is A, B, B = 5s and arrive at the same time. Task A will end at 13, B at 14, C at 15 s and the average turnaround time will be 14 s. Thus, RR is the worst algorithm for the turnover metric.

More generally, any RR-type algorithm is fair, it divides the work on the CPU equally between all processes. And thus, these metrics are constantly in conflict with each other.

Thereby, we have several opposed algorithms and there are still a few assumptions - that the task time is known and that the task only uses the CPU.

Mixing with I / O

First of all, we remove assumption 4 that the process only uses the CPU, naturally this is not the case, and processes can also apply to other equipment.

When a process requests an I / O operation, the process goes to the blocked state, waiting for the I / O to complete. If I / O is sent to the hard disk, such an operation can take up to several ms or longer, and the processor will be idle at this moment. At this time, the scheduler may take the processor by any other process. The next decision that the scheduler has to make is when the process completes its I / O. When this happens, an interrupt will occur and the OS will transfer the process that caused the I / O to the ready state.

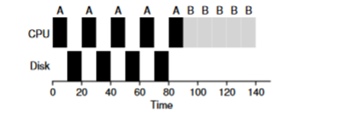

Consider an example of several tasks. Each of them needs 50ms of CPU time. However, the first one will go to I / O every 10ms (which will also be executed by 10ms). Process B simply uses a 50ms processor without I / O.

In this example, we will use the STCF scheduler. How will the scheduler behave if you run a process like A on it? He will proceed as follows - first, process A will fully complete, and then process B.

The traditional approach to solving this problem is to interpret every 10 ms subtask of process A as a separate task. Thus, when starting with the STJF algorithm, the choice between the 50-ms task and the 10-ms task is obvious. Then when subtask A is complete, process B and I / O will start. After completing the I / O, it will be decided to restart the 10-ms process A instead of the process B. Thus, it is possible to implement an overlap when the CPU is used by another process while the first waits for I / O. And as a result, the system is better utilized - at the moment when interactive processes are waiting for I / O, other processes can run on the processor.

The oracle is no more

Now let's try to get rid of the assumption that the time of the task is known. This is generally the worst and unrealistic assumption from the entire list. In fact, in average statistical OSs, the OS itself usually knows very little about the execution time of tasks, so how can we build a scheduler without knowing how long the task will be executed? Perhaps we could use some RR principles to solve this problem?

Total

We reviewed the basic ideas of scheduling tasks and reviewed 2 families of planners. The first one starts the shortest task at the beginning and thus increases the turnover time, the second one is torn between all tasks equally, increasing the response time. Both algorithms are bad where the algorithms of the other family are good. We also looked at how parallel use of CPU and I / O can improve performance, but never solved the problem with OS clairvoyance. And in the next lesson we will look at a planner who looks to the near past and tries to predict the future. And it is called multi-level feedback queue.

Source: https://habr.com/ru/post/449026/

All Articles