Swiss json processing knife

How to work effectively with json in R?

It is a continuation of previous publications .

Formulation of the problem

As a rule, the main source of data in json format will be the REST API. The use of json in addition to platform independence and ease of human data perception allows the exchange of unstructured data systems with a complex tree structure.

In API construction tasks, this is very convenient. It is easy to provide versioning of communication protocols, it is easy to provide flexibility of information exchange. At the same time, the complexity of the data structure (levels of nesting can be 5, 6, 10 or even more) does not scare, since it is not that difficult to write a flexible parser for every single record for a single record.

The tasks of data processing also include obtaining data from external sources, including in json format. R has a good set of packages, in particular jsonlite , designed to convert json to R objects ( list or, data.frame , if the data structure allows).

However, in practice, two classes of tasks often arise when the use of jsonlite and others like it becomes extremely inefficient. Tasks look like this:

- processing a large amount of data (unit of measurement - gigabytes) obtained during the work of various information systems;

- combining a large number of variable structure responses received during a batch of parameterized REST API requests into a uniform rectangular representation (

data.frame).

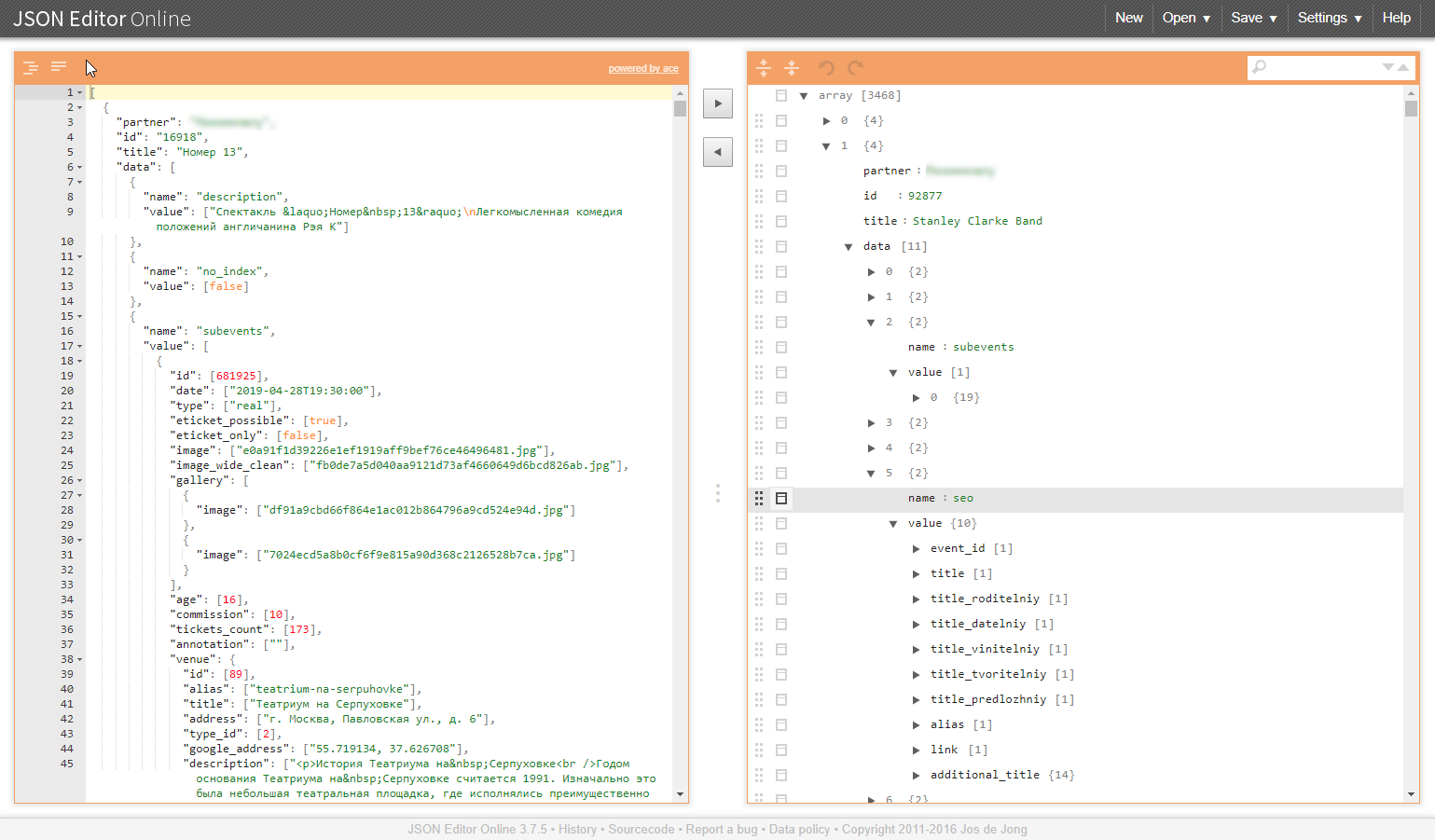

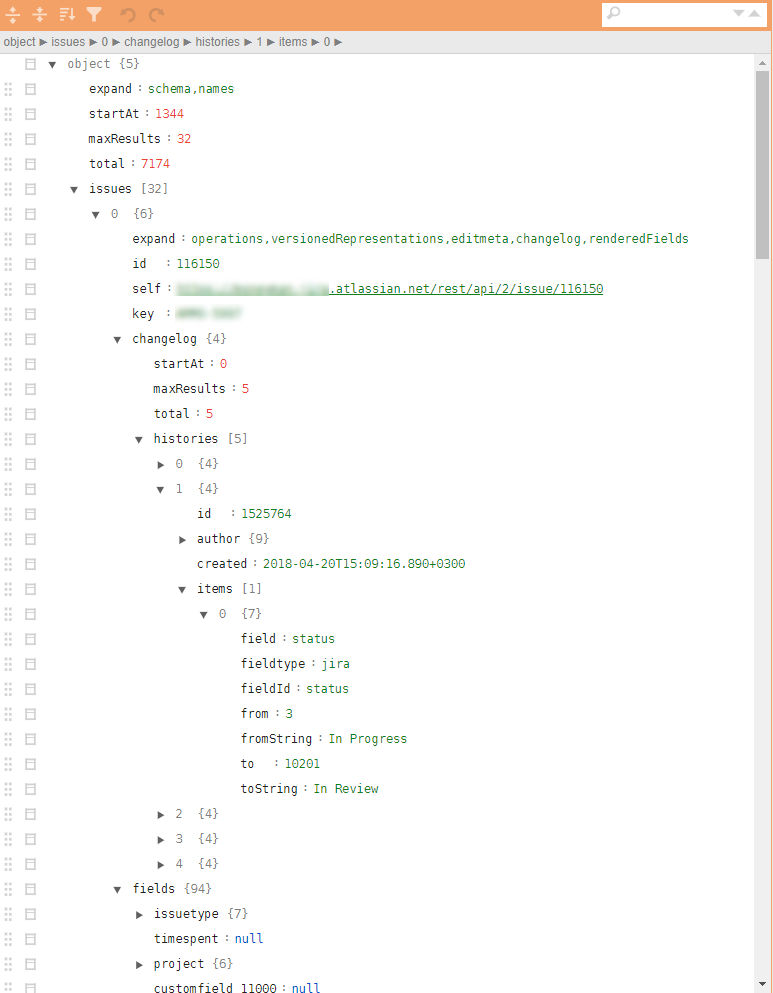

An example of such a structure in the illustrations:

Why are these classes of problems problematic?

Large amount of data

As a rule, downloads from information systems in json format are an indivisible block of data. To parse it correctly, you need to read it all and run through its entire volume.

Induced problems:

- a necessary amount of RAM and computational resources are required;

- The speed of parsing is highly dependent on the quality of the libraries used, and even if resources are sufficient, the conversion time can be tens or even hundreds of minutes;

- in the event of a parsing failure on output, no result is obtained, and hope that everything will always go smoothly, there is no reason, rather the opposite;

- It will be very successful if the parsed data can be converted to

data.frame.

Merging tree structures

Similar tasks arise, for example, when it is necessary to collect directories required by a business process for work on a packet of requests through an API. Additionally, reference books imply unification and readiness for embedding into an analytical pipeline and potential unloading into a database. And this again leads to the need to transform such summary data into data.frame .

Induced problems:

- tree structures themselves will not turn into a flat one. json parsers will turn the input data into a set of nested lists, which then manually must be long and painfully deployed;

- freedom in the attributes of the output data (the missing ones may not be issued) results in the appearance of

NULLobjects that are relevant in the lists but cannot “fit” in thedata.frame, which further complicates the postprocessing and complicates even the basic merging of individual strings indata.frame(doesn't matter,rbindlist,bind_rows, "map_dfr 'orrbind).

JQ - way out

In particularly difficult situations, the use of very convenient approaches of the jsonlite package "convert everything to R objects" for the reasons given above gives a serious failure. Well, if the end of the treatment can be reached. Worse, if in the middle you have to open your arms and give up.

An alternative to this approach is to use the json preprocessor, which operates directly with data in the json format. jq library and jqr wrapper Practice shows that it is not only little used, but very few people have heard of it at all and are very vain.

Advantages of the jq library.

- the library can be used in R, in Python and on the command line;

- all transformations are performed at the json level, without transformation into representations of R / Python objects;

- processing can be divided into atomic operations and use the principle of chains (pipe);

- cycles for processing object vectors are hidden inside the parser, the syntax of the maximum iteration is simplified;

- the ability to carry out all procedures for the unification of the structure of json, the deployment and retrieval of the necessary elements in order to form the json format converted in a batch manner into the

data.frameusingjsonlite; - multiple reduction of R code responsible for processing json data;

- tremendous processing speed, depending on the size and complexity of the data structure, the gain can be 1-3 orders of magnitude;

- much smaller requirements for RAM.

The processing code shrinks to fit the screen and may look something like this:

cont <- httr::content(r3, as = "text", encoding = "UTF-8") m <- cont %>% # jqr::jq('del(.[].movie.rating, .[].movie.genres, .[].movie.trailers)') %>% jqr::jq('del(.[].movie.countries, .[].movie.images)') %>% # jqr::jq('del(.[].schedules[].hall, .[].schedules[].language, .[].schedules[].subtitle)') %>% # jqr::jq('del(.[].cinema.location, .[].cinema.photo, .[].cinema.phones)') %>% jqr::jq('del(.[].cinema.goodies, .[].cinema.subway_stations)') # m2 <- m %>% jqr::jq('[.[] | {date, movie, schedule: .schedules[], cinema}]') df <- fromJSON(m2) %>% as_tibble() jq is very elegant and fast! Those to whom it is relevant: download, install, understand. We accelerate processing, we simplify life for ourselves and our colleagues.

Previous publication - “How to start using R in Enterprise. An example of a practical approach .

')

Source: https://habr.com/ru/post/448950/

All Articles