Python Testing with pytest. Using pytest with other tools, CHAPTER 7

Typically, pytest is not used on its own, but in a testing environment with other tools. This chapter discusses other tools that are often used in conjunction with pytest for effective and efficient testing. Although this is by no means an exhaustive list, the tools discussed here will give you a taste of the power of mixing pytest with other tools.

The examples in this book are written using Python 3.6 and pytest 3.2. pytest 3.2 supports Python 2.6, 2.7 and Python 3.3+.

The source code for the Tasks project, as well as for all the tests shown in this book, is available via the link on the book's web page at pragprog.com. You do not need to download the source code to understand the test code; the test code is presented in a convenient form in the examples. But to follow along with the project tasks, or to adapt the test examples to your own project (your hands are untied!), you should go to the book's web page and download the code. The same page also has a link for errata and a discussion forum.

Here is the list of articles in this series.

- Introduction

- Chapter 1: Getting Started with pytest

- Chapter 2: Writing Test Functions

- Chapter 3: Pytest Fixtures

- Chapter 4: Builtin Fixtures

- Chapter 5: Plugins

- Chapter 6: Configuration

- [Chapter 7: Using pytest with other tools](https://habr.com/ru/en/post/448798/) (this article)

pdb: Debugging Test Failures

The pdb module is a Python debugger in the standard library. You use --pdb to start the pytest debug session at the point of failure. Let's take a look at pdb in action in the context of the Tasks project.

In “Parametrizing Fixtures” on page 64, we left the Tasks project with a few failures:

    $ cd /path/to/code/ch3/c/tasks_proj
    $ pytest --tb=no -q
    .........................................FF.FFFF
    FFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFFF.FFF...........
    42 failed, 54 passed in 4.74 seconds

Before we look at how pdb can help us debug this test, let's take a look at the available pytest options to speed up the debugging of test failures, which we first examined in the “Using Options” section on page 9:

- --tb=[auto/long/short/line/native/no]: Controls the traceback style.

- -v / --verbose: Displays all test names, passing or failing.

- -l / --showlocals: Displays local variables alongside the stack trace.

- --lf / --last-failed: Runs only the tests that failed last time.

- -x / --exitfirst: Stops the test session at the first failure.

- --pdb: Starts an interactive debugging session at the point of failure.

Installing MongoDB

As mentioned in Chapter 3, “Pytest Fixtures,” on page 49, MongoDB and pymongo are required to run the MongoDB tests.

I tested with the Community Server edition found at https://www.mongodb.com/download-center. pymongo is installed with pip: pip install pymongo. However, this is the last example in the book that uses MongoDB. To try out the debugger without MongoDB, you can run the pytest commands from code/ch2/, since that directory also contains several failing tests.

We just ran the tests from code/ch3/c to confirm that some of them fail. We did not see tracebacks or test names, because --tb=no turns off tracebacks and we did not turn on --verbose. Let's rerun the failures (no more than three of them) with verbose output:

    $ pytest --tb=no --verbose --lf --maxfail=3
    ============================= test session starts =============================
    collected 96 items / 52 deselected
    run-last-failure: rerun previous 44 failures
    tests/func/test_add.py::test_add_returns_valid_id[mongo] ERROR [ 2%]
    tests/func/test_add.py::test_added_task_has_id_set[mongo] ERROR [ 4%]
    tests/func/test_add.py::test_add_increases_count[mongo] ERROR [ 6%]
    =================== 52 deselected, 3 error in 0.72 seconds ====================

Now we know which tests fail. Let's look at just one of them by using -x, turning the traceback back on by dropping --tb=no, and showing local variables with -l:

    $ pytest -v --lf -l -x
    ===================== test session starts ======================
    run-last-failure: rerun last 42 failures
    collected 96 items
    tests/func/test_add.py::test_add_returns_valid_id[mongo] FAILED
    =========================== FAILURES ===========================
    _______________ test_add_returns_valid_id[mongo] _______________
    tasks_db = None
        def test_add_returns_valid_id(tasks_db):
            """tasks.add(<valid task>) should return an integer."""
            # GIVEN an initialized tasks db
            # WHEN a new task is added
            # THEN returned task_id is of type int
            new_task = Task('do something')
            task_id = tasks.add(new_task)
    >       assert isinstance(task_id, int)
    E       AssertionError: assert False
    E        + where False = isinstance(ObjectId('59783baf8204177f24cb1b68'), int)
    new_task   = Task(summary='do something', owner=None, done=False, id=None)
    task_id    = ObjectId('59783baf8204177f24cb1b68')
    tasks_db   = None
    tests/func/test_add.py:16: AssertionError
    !!!!!!!!!!!! Interrupted: stopping after 1 failures !!!!!!!!!!!!
    ===================== 54 tests deselected ======================
    =========== 1 failed, 54 deselected in 2.47 seconds ============

Quite often this is enough to understand why the test failed. In this particular case, it is pretty clear that task_id is not an integer; it is an ObjectId instance. ObjectId is the type that MongoDB uses for object identifiers in a database. My intention with the tasksdb_pymongo.py layer was to hide implementation details of MongoDB from the rest of the system. Clearly, in this case it did not work.
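The failing assertion can be reproduced without MongoDB. The sketch below uses FakeObjectId, a hypothetical stand-in invented for this illustration (it is not the real ObjectId class), to show why an identifier object that merely prints as a hex string does not pass an isinstance(..., int) check.

```python
class FakeObjectId:
    """Hypothetical stand-in for MongoDB's ObjectId, for illustration only."""

    def __init__(self, hex_string):
        self.hex_string = hex_string

    def __repr__(self):
        # Mimic how the identifier is displayed in the traceback above.
        return f"ObjectId('{self.hex_string}')"


# The database layer hands back an identifier object rather than an int.
task_id = FakeObjectId("59783baf8204177f24cb1b68")

# This is the same check the test makes, and the reason it fails.
is_int = isinstance(task_id, int)
print(repr(task_id), is_int)
```

Any fix would have to happen inside the database layer, so that callers only ever see integers; how best to do that is left open here.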

However, we want to see how to use pdb with pytest, so let's pretend it is unclear why this test failed. We can have pytest start a debugging session and drop us right at the point of failure with --pdb:

    $ pytest -v --lf -x --pdb
    ===================== test session starts ======================
    run-last-failure: rerun last 42 failures
    collected 96 items
    tests/func/test_add.py::test_add_returns_valid_id[mongo] FAILED
    >>>>>>>>>>>>>>>>>>>>>>>>>> traceback >>>>>>>>>>>>>>>>>>>>>>>>>>>
    tasks_db = None
        def test_add_returns_valid_id(tasks_db):
            """tasks.add(<valid task>) should return an integer."""
            # GIVEN an initialized tasks db
            # WHEN a new task is added
            # THEN returned task_id is of type int
            new_task = Task('do something')
            task_id = tasks.add(new_task)
    >       assert isinstance(task_id, int)
    E       AssertionError: assert False
    E        + where False = isinstance(ObjectId('59783bf48204177f2a786893'), int)
    tests/func/test_add.py:16: AssertionError
    >>>>>>>>>>>>>>>>>>>>>>>>> entering PDB >>>>>>>>>>>>>>>>>>>>>>>>>
    > /path/to/code/ch3/c/tasks_proj/tests/func/test_add.py(16)
    > test_add_returns_valid_id()
    -> assert isinstance(task_id, int)
    (Pdb)

Now that we are at the (Pdb) prompt, we have access to all the interactive debugging features of pdb. When looking at failures, I regularly use these commands:

- p/print expr: Prints the value of expr.

- pp expr: Pretty-prints the value of expr.

- l/list: Lists the point of failure and five lines of code above and below.

- l/list begin,end: Lists specific line numbers.

- a/args: Prints the arguments of the current function with their values.

- u/up: Moves up one level in the stack trace.

- d/down: Moves down one level in the stack trace.

- q/quit: Quits the debugging session.

Other navigation commands, such as step and next, are not very useful, as we sit directly in the assert statement. You can also just enter the variable names and get the values.

You can use p/print expr, much like -l/--showlocals, to view the values of variables in the function:

    (Pdb) p new_task
    Task(summary='do something', owner=None, done=False, id=None)
    (Pdb) p task_id
    ObjectId('59783bf48204177f2a786893')
    (Pdb)

Now you can exit the debugger and continue testing.

    (Pdb) q
    !!!!!!!!!!!! Interrupted: stopping after 1 failures !!!!!!!!!!!!
    ===================== 54 tests deselected ======================
    ========== 1 failed, 54 deselected in 123.40 seconds ===========

If we had not used -x, pytest would have opened pdb again at the next failing test. More information about using the pdb module is available in the Python documentation.
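The idea behind --pdb, handing the traceback of a failure to the debugger, can be tried with the standard library alone. The following is a rough sketch, not pytest's actual implementation: check_task_id and debug_failure are hypothetical names, and the pdb commands are fed from a string instead of a live prompt so the example runs unattended. In interactive use, pdb.post_mortem(tb) would serve the same purpose.

```python
import io
import pdb
import sys


def check_task_id():
    """Hypothetical stand-in for the failing test body."""
    task_id = "59783bf48204177f2a786893"  # not an int, so the assert fails
    assert isinstance(task_id, int)


def debug_failure(func, commands):
    """Run func; on an AssertionError, replay pdb commands at the failing frame."""
    try:
        func()
    except AssertionError:
        tb = sys.exc_info()[2]
        output = io.StringIO()
        debugger = pdb.Pdb(
            stdin=io.StringIO(commands),  # scripted input instead of a live prompt
            stdout=output,
            nosigint=True,
            readrc=False,  # ignore any local .pdbrc file
        )
        debugger.reset()
        # interaction() is what pdb.post_mortem() calls internally;
        # it is not part of pdb's documented API.
        debugger.interaction(None, tb)
        return output.getvalue()
    return ""


# Print the local variable, then quit, as in the session shown above.
transcript = debug_failure(check_task_id, "p task_id\nq\n")
print(transcript)
```

The transcript contains the same kind of exchange as the session above: a (Pdb) prompt followed by the value of task_id.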

Coverage.py: Determining the amount of code under test

Code coverage is a measure of what percentage of the code under test is exercised by a test suite. When you run the tests for the Tasks project, each test executes some of the Tasks functionality, but not all of it.

Code coverage tools are great for letting you know which parts of the system are completely missing from the tests.
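To make the idea of line coverage concrete, here is a toy sketch built on sys.settrace. It is not how coverage.py is implemented and ignores many details a real tool handles; classify, executable_lines, executed_lines, and coverage_percent are hypothetical names chosen for this illustration.

```python
import dis
import sys


def classify(n):
    if n < 0:
        return "negative"
    return "non-negative"


def executable_lines(func):
    """Line numbers that carry bytecode for func (a rough proxy for statements)."""
    starts = dis.findlinestarts(func.__code__)
    return {line for _, line in starts if line is not None}


def executed_lines(func, *calls):
    """Call func once per argument tuple and record which of its lines run."""
    code = func.__code__
    seen = set()

    def tracer(frame, event, arg):
        if frame.f_code is not code:
            return None  # do not trace other functions
        if event in ("call", "line"):
            seen.add(frame.f_lineno)
        return tracer

    previous = sys.gettrace()
    sys.settrace(tracer)
    try:
        for args in calls:
            func(*args)
    finally:
        sys.settrace(previous)
    return seen


def coverage_percent(func, *calls):
    """Percentage of func's executable lines hit by the given calls."""
    possible = executable_lines(func)
    hit = possible & executed_lines(func, *calls)
    return 100.0 * len(hit) / len(possible)


# One call exercises only one branch; two calls exercise both.
one_branch = coverage_percent(classify, (5,))
both_branches = coverage_percent(classify, (5,), (-5,))
print(one_branch, both_branches)
```

A single call with a positive number leaves the "negative" branch unexecuted, so the percentage only reaches 100 once a negative input is added as well.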

Coverage.py is the preferred Python tool for measuring code coverage.

You will use it to check the Tasks project code using pytest.

To use coverage.py, you need to install it. It also wouldn't hurt to install a plugin called pytest-cov, which lets you call coverage.py from pytest with some additional pytest options. Since coverage is one of the dependencies of pytest-cov, it is enough to install pytest-cov, and it will pull in coverage.py:

    $ pip install pytest-cov
    Collecting pytest-cov
      Using cached pytest_cov-2.5.1-py2.py3-none-any.whl
    Collecting coverage>=3.7.1 (from pytest-cov)
      Using cached coverage-4.4.1-cp36-cp36m-macosx_10_10_x86 ...
    Installing collected packages: coverage, pytest-cov
    Successfully installed coverage-4.4.1 pytest-cov-2.5.1

Let's run a coverage report on version 2 of Tasks. If you still have the first version of the Tasks project installed, uninstall it and install version 2:

    $ pip uninstall tasks
    Uninstalling tasks-0.1.0:
      /path/to/venv/bin/tasks
      /path/to/venv/lib/python3.6/site-packages/tasks.egg-link
    Proceed (y/n)? y
      Successfully uninstalled tasks-0.1.0
    $ cd /path/to/code/ch7/tasks_proj_v2
    $ pip install -e .
    Obtaining file:///path/to/code/ch7/tasks_proj_v2
    ...
    Installing collected packages: tasks
      Running setup.py develop for tasks
    Successfully installed tasks
    $ pip list
    ...
    tasks (0.1.1, /path/to/code/ch7/tasks_proj_v2/src)
    ...

Now that the next version of Tasks is installed, you can run a baseline coverage report:

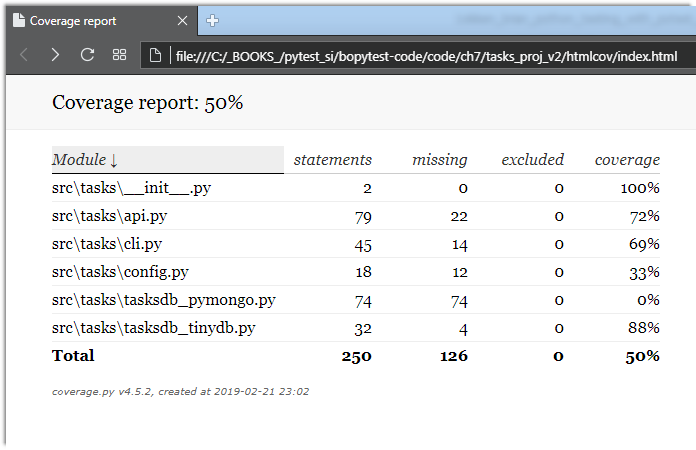

    $ cd /path/to/code/ch7/tasks_proj_v2
    $ pytest --cov=src
    ===================== test session starts ======================
    plugins: mock-1.6.2, cov-2.5.1
    collected 62 items
    tests/func/test_add.py ...
    tests/func/test_add_variety.py ............................
    tests/func/test_add_variety2.py ............
    tests/func/test_api_exceptions.py .........
    tests/func/test_unique_id.py .
    tests/unit/test_cli.py .....
    tests/unit/test_task.py ....
    ---------- coverage: platform darwin, python 3.6.2-final-0 -----------
    Name                           Stmts   Miss  Cover
    --------------------------------------------------
    src\tasks\__init__.py              2      0   100%
    src\tasks\api.py                  79     22    72%
    src\tasks\cli.py                  45     14    69%
    src\tasks\config.py               18     12    33%
    src\tasks\tasksdb_pymongo.py      74     74     0%
    src\tasks\tasksdb_tinydb.py       32      4    88%
    --------------------------------------------------
    TOTAL                            250    126    50%
    ================== 62 passed in 0.47 seconds ===================

Since the current directory is tasks_proj_v2 and the source code under test is in src, adding the option --cov=src limits the coverage report to that directory.

As you can see, some files have quite low coverage, and one even has 0%. These are useful reminders: tasksdb_pymongo.py is at 0% because we turned off testing for MongoDB in this version. Some of the others are pretty low. The project will definitely need tests for all of these areas before it is ready for prime time.
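The Cover column in the report follows directly from Stmts and Miss. The short check below recomputes it from the numbers in the table; the round-half-up rule in cover_percent is an assumption made for this sketch, and coverage.py's own rounding may differ in edge cases.

```python
import math

# (Stmts, Miss) per file, copied from the coverage report above.
report = {
    "__init__.py": (2, 0),
    "api.py": (79, 22),
    "cli.py": (45, 14),
    "config.py": (18, 12),
    "tasksdb_pymongo.py": (74, 74),
    "tasksdb_tinydb.py": (32, 4),
}


def cover_percent(stmts, miss):
    """Share of statements that ran, rounded half up (assumed rounding rule)."""
    return math.floor(100 * (stmts - miss) / stmts + 0.5)


per_file = {name: cover_percent(stmts, miss) for name, (stmts, miss) in report.items()}

# The TOTAL row is computed from the summed columns, not by averaging percentages.
total_stmts = sum(stmts for stmts, _ in report.values())
total_miss = sum(miss for _, miss in report.values())
total_cover = cover_percent(total_stmts, total_miss)

for name, percent in per_file.items():
    print(f"{name:<22}{percent:>4}%")
print(f"{'TOTAL':<22}{total_cover:>4}%")
```

Because the total is weighted by statement count, the 74 untested statements in tasksdb_pymongo.py pull the overall figure down to 50% even though most other files score higher.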

I expected a couple of files to have a higher coverage percentage: api.py and tasksdb_tinydb.py. Let's look at tasksdb_tinydb.py and see what's missing. I think the best way to do this is to use the HTML reports.

If you run coverage.py again with the --cov-report=html option, an HTML report is generated:

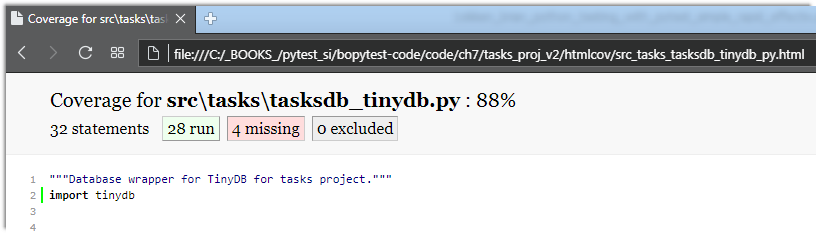

    $ pytest --cov=src --cov-report=html
    ===================== test session starts ======================
    plugins: mock-1.6.2, cov-2.5.1
    collected 62 items
    tests/func/test_add.py ...
    tests/func/test_add_variety.py ............................
    tests/func/test_add_variety2.py ............
    tests/func/test_api_exceptions.py .........
    tests/func/test_unique_id.py .
    tests/unit/test_cli.py .....
    tests/unit/test_task.py ....
    ---------- coverage: platform darwin, python 3.6.2-final-0 -----------
    Coverage HTML written to dir htmlcov
    ================== 62 passed in 0.45 seconds ===================

You can then open htmlcov/index.html in a browser, which shows the output in the following screen:

Clicking on tasksdb_tinydb.py will display a report for one file. The top of the report shows the percentage of covered lines, plus how many lines are covered and how many are not, as shown in the following screen:

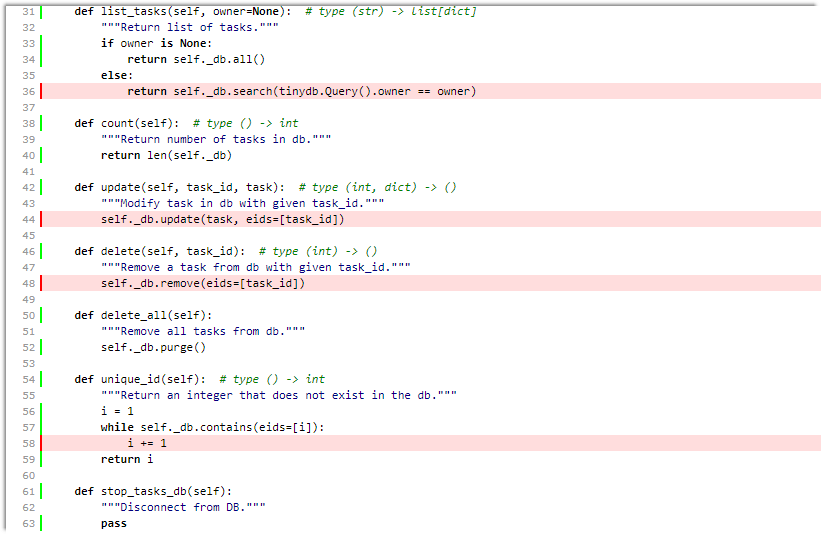

Scrolling down, you can see the missing lines, as shown in the following screen:

Even though this screen is not the complete page for this file, it is enough to tell us that:

- We do not test list_tasks() with an owner set.

- We do not test update() or delete().

- Perhaps we are not testing unique_id() thoroughly enough.

Great. We can put those on our testing to-do list, along with testing the configuration system.

Although code coverage tools are extremely useful, striving for 100% coverage can be dangerous. When you see code that is not tested, it may mean that a test is needed. But it can also mean that there are some functions of the system that are not needed and can be deleted. Like all software development tools, code coverage analysis does not replace thinking.

More information can be found in the coverage.py and pytest-cov documentation.


Source: https://habr.com/ru/post/448798/
