Python Testing with pytest. Chapter 2, Writing Test Functions


The examples in this book are written using Python 3.6 and pytest 3.2. pytest 3.2 supports Python 2.6, 2.7 and Python 3.3+.

The source code for the Tasks project, as well as for all of the tests shown in this book, is available through a link on the book's web page at pragprog.com. You don't need to download the source code to understand the test code; the test code is presented in usable form in the examples. But to follow along with the project's tasks, or to adapt the test examples to test your own project (more power to you!), you should go to the book's web page and download the code. The book's web page also has a link for submitting errata and a discussion forum.


In the previous chapter, you got pytest running. You saw how to run it against files and directories and how many of the options work. In this chapter, you'll learn how to write test functions in the context of testing a Python package. If you're using pytest to test something other than a Python package, most of this chapter still applies.

We're going to write tests for the Tasks package. Before we do that, I'll talk about the structure of a distributable Python package and the tests for it, and how to get the tests to see the package under test. Then I'll show you how to use assert in tests, how tests handle unexpected exceptions, and how to test for expected exceptions.

Eventually, we'll have a lot of tests, so you'll learn how to organize tests into classes, modules, and directories. Then I'll show you how to use markers to mark which tests you want to run, and discuss how builtin markers can help you skip tests and mark tests as expected to fail. Finally, I'll cover parametrizing tests, which allows tests to be called with different data.

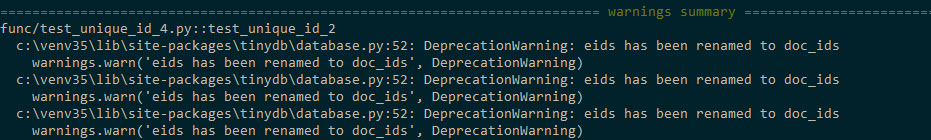

Translator's note: If you are using Python 3.5 or 3.6, you may get error messages when you run the tests in Chapter 2.

This problem is fixed by editing ...\code\tasks_proj\src\tasks\tasksdb_tinydb.py and reinstalling the tasks package:

```
$ cd /path/to/code
$ pip install ./tasks_proj/
```

You need to rename the named parameters eids to doc_ids and eid to doc_id in the module ...\code\tasks_proj\src\tasks\tasksdb_tinydb.py.

For an explanation, see #83783.

Package testing

To learn how to write test functions for a Python package, we'll use the sample Tasks project, as described in The Tasks Project, on page xii. Tasks is a Python package that includes a command-line tool of the same name, tasks.

Appendix 4, “Packaging and Distributing Python Projects,” on page 175 explains how to distribute your projects locally within a small team or globally through PyPI, so I won't go into detail on how to do that here; however, let's take a quick look at what's in the Tasks project and how the different files fit into the story of testing this project.

The following is the file structure of the Tasks project:

```
tasks_proj/
├── CHANGELOG.rst
├── LICENSE
├── MANIFEST.in
├── README.rst
├── setup.py
├── src
│   └── tasks
│       ├── __init__.py
│       ├── api.py
│       ├── cli.py
│       ├── config.py
│       ├── tasksdb_pymongo.py
│       └── tasksdb_tinydb.py
└── tests
    ├── conftest.py
    ├── pytest.ini
    ├── func
    │   ├── __init__.py
    │   ├── test_add.py
    │   └── ...
    └── unit
        ├── __init__.py
        ├── test_task.py
        └── ...
```

I included the complete listing of the project (with the exception of the full list of test files) to show how the tests fit in with the rest of the project, and to point out a few files that are of key importance to testing, namely conftest.py, pytest.ini, the various __init__.py files, and setup.py.

All of the tests are kept in tests, separate from the package source files in src. This isn't a requirement of pytest, but it's a best practice.

All of the top-level files, CHANGELOG.rst, LICENSE, README.rst, MANIFEST.in, and setup.py, are discussed in more detail in Appendix 4, “Packaging and Distributing Python Projects,” on page 175. setup.py matters for testing, though: it is needed to build a distribution from the package and to install the package locally so that it is available for import.

Functional and unit tests are divided into their own directories. This is an arbitrary decision and not required. However, organizing test files into multiple directories makes it easy to run a subset of tests. I like to separate the functional and unit tests, because functional tests should break only if we intentionally change the functionality of the system, while the unit tests can break during refactoring or implementation changes.

The project contains two types of __init__.py files: those found under the src/ directory and those found under tests/. The src/tasks/__init__.py file tells Python that the directory is a package. It also acts as the main interface to the package when someone uses import tasks. It contains code to import specific functions from api.py so that cli.py and our test files can access package functionality like tasks.add() instead of having to write tasks.api.add(). The tests/func/__init__.py and tests/unit/__init__.py files are empty. They tell pytest to go up one directory to look for the root of the test directory and to look for the pytest.ini file.
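To make the re-export idea concrete, here is a minimal, self-contained sketch. It builds a throwaway package in a temporary directory; the package name tasks_demo and its one-function api.py are hypothetical stand-ins, not the real Tasks code. After from .api import add runs in __init__.py, tasks_demo.add and tasks_demo.api.add name the same function object.

```python
import importlib
import sys
import tempfile
from pathlib import Path

# Hypothetical contents for a tiny package shaped like src/tasks/.
API_SOURCE = '''
def add(task):
    """Pretend to store a task and return its new id."""
    return 1
'''

INIT_SOURCE = '''
"""Package interface: re-export selected functions from api.py."""
from .api import add  # callers can now write tasks_demo.add(...)
'''

with tempfile.TemporaryDirectory() as root:
    package_dir = Path(root) / 'tasks_demo'
    package_dir.mkdir()
    (package_dir / 'api.py').write_text(API_SOURCE)
    (package_dir / '__init__.py').write_text(INIT_SOURCE)

    sys.path.insert(0, root)          # make the temp dir importable
    importlib.invalidate_caches()     # notice the freshly written files
    try:
        tasks_demo = importlib.import_module('tasks_demo')
        # The short and the long spelling refer to the same function object.
        same_function = tasks_demo.add is tasks_demo.api.add
        new_id = tasks_demo.add(('buy milk', 'brian'))
    finally:
        sys.path.remove(root)

print(same_function, new_id)
```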

The pytest.ini file is optional. It contains project-wide pytest configuration. You should have at most one of these in your project. It can contain directives that change the behavior of pytest, such as a list of options that will always be used. You'll learn all about pytest.ini in Chapter 6, “Configuration,” on page 113.

The conftest.py file is also optional. pytest treats it as a “local plugin,” and it can contain hook functions and fixtures. Hook functions are a way to insert code into part of the pytest execution process to alter how pytest works. Fixtures are setup and teardown functions that run before and after test functions, and they can be used to represent resources and data used by the tests. (Fixtures are discussed in Chapter 3, “pytest Fixtures,” on page 49 and Chapter 4, “Builtin Fixtures,” on page 71, and hook functions are discussed in Chapter 5, “Plugins,” on page 95.) Hook functions and fixtures that are used by tests in multiple subdirectories should be contained in tests/conftest.py. You can have multiple conftest.py files; for example, you can have one in tests and one in each subdirectory under tests.
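Here is a small sketch of a shared fixture and a hook function living in tests/conftest.py, built in a temporary directory and run in-process with pytest.main(). The fixture name sample_owner, the test file names, and the header text are made up for illustration. Neither test file imports the fixture; pytest finds it in the conftest.py above them. pytest_report_header is a real pytest hook that adds lines to the session header.

```python
import tempfile
from pathlib import Path

import pytest

# Hypothetical tests/conftest.py: one hook function and one fixture.
CONFTEST = '''
import pytest


def pytest_report_header(config):
    """Hook: add a line to the header pytest prints at startup."""
    return "demo: header line added by tests/conftest.py"


@pytest.fixture
def sample_owner():
    """Fixture shared by every test under tests/."""
    return "okken"
'''

# Two test files in different subdirectories use the fixture by name only.
UNIT_TEST = '''
def test_owner_is_set(sample_owner):
    assert sample_owner == "okken"
'''

FUNC_TEST = '''
def test_owner_is_lowercase(sample_owner):
    assert sample_owner.islower()
'''

with tempfile.TemporaryDirectory() as root:
    tests_dir = Path(root) / 'tests'
    (tests_dir / 'unit').mkdir(parents=True)
    (tests_dir / 'func').mkdir()
    (tests_dir / 'conftest.py').write_text(CONFTEST)
    # Distinct basenames: without __init__.py files, duplicate names would clash.
    (tests_dir / 'unit' / 'test_unit_owner.py').write_text(UNIT_TEST)
    (tests_dir / 'func' / 'test_func_owner.py').write_text(FUNC_TEST)
    exit_code = pytest.main([str(tests_dir), '-p', 'no:cacheprovider'])

print('exit code:', int(exit_code))
```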

If you haven't already done so, you can download a copy of the source code for this project from the book's website. Alternatively, you can work on your own project with a similar structure.

Here is test_task.py:

ch2/tasks_proj/tests/unit/test_task.py

```python
# -*- coding: utf-8 -*-
"""Test the Task data type."""
from tasks import Task


def test_asdict():
    """_asdict() should return a dictionary."""
    t_task = Task('do something', 'okken', True, 21)
    t_dict = t_task._asdict()
    expected = {'summary': 'do something',
                'owner': 'okken',
                'done': True,
                'id': 21}
    assert t_dict == expected


def test_replace():
    """_replace() should change the fields passed in."""
    t_before = Task('finish book', 'brian', False)
    t_after = t_before._replace(id=10, done=True)
    t_expected = Task('finish book', 'brian', True, 10)
    assert t_after == t_expected


def test_defaults():
    """Using no parameters should invoke defaults."""
    t1 = Task()
    t2 = Task(None, None, False, None)
    assert t1 == t2


def test_member_access():
    """Check .field functionality of namedtuple."""
    t = Task('buy milk', 'brian')
    assert t.summary == 'buy milk'
    assert t.owner == 'brian'
    assert (t.done, t.id) == (False, None)
```

The test_task.py file has this import statement:

```python
from tasks import Task
```

The best way to allow the tests to import tasks, or to import something from tasks, is to install tasks locally using pip. This is possible because there's a setup.py file present for pip to use.

Install tasks either by running pip install . or pip install -e . from the tasks_proj directory, or by running pip install -e tasks_proj from one directory up:

```
$ cd /path/to/code
$ pip install ./tasks_proj/
$ pip install --no-cache-dir ./tasks_proj/
Processing ./tasks_proj
Collecting click (from tasks==0.1.0)
  Downloading click-6.7-py2.py3-none-any.whl (71kB)
  ...
Collecting tinydb (from tasks==0.1.0)
  Downloading tinydb-3.4.0.tar.gz
Collecting six (from tasks==0.1.0)
  Downloading six-1.10.0-py2.py3-none-any.whl
Installing collected packages: click, tinydb, six, tasks
  Running setup.py install for tinydb ... done
  Running setup.py install for tasks ... done
Successfully installed click-6.7 six-1.10.0 tasks-0.1.0 tinydb-3.4.0
```

If you only want to run tests against tasks, this command is fine. If you want to be able to modify the source code while tasks is installed, you need to install it with the -e option (for “editable”):

```
$ pip install -e ./tasks_proj/
Obtaining file:///path/to/code/tasks_proj
Requirement already satisfied: click in /path/to/venv/lib/python3.6/site-packages (from tasks==0.1.0)
Requirement already satisfied: tinydb in /path/to/venv/lib/python3.6/site-packages (from tasks==0.1.0)
Requirement already satisfied: six in /path/to/venv/lib/python3.6/site-packages (from tasks==0.1.0)
Installing collected packages: tasks
  Found existing installation: tasks 0.1.0
    Uninstalling tasks-0.1.0:
      Successfully uninstalled tasks-0.1.0
  Running setup.py develop for tasks
Successfully installed tasks
```

Now let's try running the tests:

```
$ cd /path/to/code/ch2/tasks_proj/tests/unit
$ pytest test_task.py
===================== test session starts ======================
collected 4 items

test_task.py ....

=================== 4 passed in 0.01 seconds ===================
```

The import worked! The rest of our tests can now safely use import tasks. Now let's write some tests.
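The tests in test_task.py only rely on Task being a namedtuple with four fields and default values. If you want to experiment with that behavior without installing the package, here is a minimal stand-in. The field names come from the tests above; the defaults (None, None, False, None) are inferred from test_defaults(), and the real definition in the tasks package may differ in other ways.

```python
from collections import namedtuple

# Stand-in for tasks.Task (an assumption, not the package's own code).
Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])
# Give every field a default, matching what test_defaults() expects.
Task.__new__.__defaults__ = (None, None, False, None)


def test_defaults():
    assert Task() == Task(None, None, False, None)


def test_replace():
    t_after = Task('finish book', 'brian', False)._replace(id=10, done=True)
    assert t_after == Task('finish book', 'brian', True, 10)


def test_asdict():
    # _asdict() returns an OrderedDict on older Pythons and a dict on 3.8+;
    # either one compares equal to a plain dict.
    assert Task('do something', 'okken', True, 21)._asdict() == {
        'summary': 'do something', 'owner': 'okken', 'done': True, 'id': 21}


if __name__ == '__main__':
    test_defaults()
    test_replace()
    test_asdict()
    print('all Task checks passed')
```

Saved in a test_*.py file, pytest collects the three functions; run directly, the same checks execute without pytest.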

Using assert statements

When you write test functions, the normal Python assert statement is your primary tool to communicate test failure. The simplicity of this within pytest is brilliant. It's what drives a lot of developers to use pytest over other frameworks.

If you've used any other testing framework, you've probably seen various assert helper functions. For example, the following is a list of a few of the assert forms and assert helper functions:

| pytest | unittest |
|---|---|
| assert something | assertTrue(something) |
| assert a == b | assertEqual(a, b) |
| assert a <= b | assertLessEqual(a, b) |
| ... | ... |

With pytest, you can use assert <expression> with any expression. If the expression would evaluate to False when converted to a bool, the test fails.
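Because only the truthiness of the expression matters, anything that works in an if statement works after assert. This small sketch (plain Python, no pytest needed) shows which values make an assert pass or fail; check() is a hypothetical helper for the demonstration.

```python
def check(value):
    """Return True if `assert value` passes, False if it raises AssertionError.

    Note: running Python with -O strips assert statements entirely,
    so this helper only behaves as described without -O.
    """
    try:
        assert value
    except AssertionError:
        return False
    return True


print(check([1, 2]))        # True: a non-empty list is truthy
print(check([]))            # False: an empty list is falsy
print(check(0))             # False: zero is falsy
print(check('a' in 'abc'))  # True: the comparison evaluates to True
print(check(None))          # False: None is falsy
```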

pytest includes a feature called assert rewriting that intercepts assert calls and replaces them with something that can tell you more about why your assertions failed. Let's see how helpful this rewriting is by looking at a few assertion failures:

ch2/tasks_proj/tests/unit/test_task_fail.py

""" the Task type .""" from tasks import Task def test_task_equality(): """ .""" t1 = Task('sit there', 'brian') t2 = Task('do something', 'okken') assert t1 == t2 def test_dict_equality(): """ , dicts, .""" t1_dict = Task('make sandwich', 'okken')._asdict() t2_dict = Task('make sandwich', 'okkem')._asdict() assert t1_dict == t2_dict All these tests fail, but the information in the trace is interesting:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\unit>pytest test_task_fail.py
============================= test session starts =============================
collected 2 items

test_task_fail.py FF

================================== FAILURES ===================================
_____________________________ test_task_equality ______________________________

    def test_task_equality():
        """Different tasks should not be equal."""
        t1 = Task('sit there', 'brian')
        t2 = Task('do something', 'okken')
>       assert t1 == t2
E       AssertionError: assert Task(summary=...alse, id=None) == Task(summary='...alse, id=None)
E         At index 0 diff: 'sit there' != 'do something'
E         Use -v to get the full diff

test_task_fail.py:9: AssertionError
_____________________________ test_dict_equality ______________________________

    def test_dict_equality():
        """Different tasks compared as dicts should not be equal."""
        t1_dict = Task('make sandwich', 'okken')._asdict()
        t2_dict = Task('make sandwich', 'okkem')._asdict()
>       assert t1_dict == t2_dict
E       AssertionError: assert OrderedDict([...('id', None)]) == OrderedDict([(...('id', None)])
E         Omitting 3 identical items, use -vv to show
E         Differing items:
E         {'owner': 'okken'} != {'owner': 'okkem'}
E         Use -v to get the full diff

test_task_fail.py:16: AssertionError
========================== 2 failed in 0.30 seconds ===========================
```

Wow. That's a lot of information. For each failing test, the exact line of failure is shown with a > pointing to it. The E lines show you extra information about the assert failure to help you figure out what went wrong.

I intentionally put two mismatches in test_task_equality(), but only the first was shown in the previous output. Let's try it again with the -v flag, as suggested in the error message:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\unit>pytest -v test_task_fail.py
============================= test session starts =============================
collected 2 items

test_task_fail.py::test_task_equality FAILED
test_task_fail.py::test_dict_equality FAILED

================================== FAILURES ===================================
_____________________________ test_task_equality ______________________________

    def test_task_equality():
        """Different tasks should not be equal."""
        t1 = Task('sit there', 'brian')
        t2 = Task('do something', 'okken')
>       assert t1 == t2
E       AssertionError: assert Task(summary=...alse, id=None) == Task(summary='...alse, id=None)
E         At index 0 diff: 'sit there' != 'do something'
E         Full diff:
E         - Task(summary='sit there', owner='brian', done=False, id=None)
E         ? ^^^ ^^^ ^^^^
E         + Task(summary='do something', owner='okken', done=False, id=None)
E         ? +++ ^^^ ^^^ ^^^^

test_task_fail.py:9: AssertionError
_____________________________ test_dict_equality ______________________________

    def test_dict_equality():
        """Different tasks compared as dicts should not be equal."""
        t1_dict = Task('make sandwich', 'okken')._asdict()
        t2_dict = Task('make sandwich', 'okkem')._asdict()
>       assert t1_dict == t2_dict
E       AssertionError: assert OrderedDict([...('id', None)]) == OrderedDict([(...('id', None)])
E         Omitting 3 identical items, use -vv to show
E         Differing items:
E         {'owner': 'okken'} != {'owner': 'okkem'}
E         Full diff:
E         {'summary': 'make sandwich',
E         - 'owner': 'okken',
E         ? ^...
E
E         ...Full output truncated (5 lines hidden), use '-vv' to show

test_task_fail.py:16: AssertionError
========================== 2 failed in 0.28 seconds ===========================
```

Well, I think that's pretty cool! Not only did pytest find both differences, it also showed us exactly where they are.
This example used only an equality assert; many more varieties of assert statements, with impressive traceback debug information, can be found on the pytest.org website.
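To see a few of those other varieties yourself, here is a sketch that writes four deliberately failing tests to a temporary file and runs them in-process with pytest.main(). The test names and values are made up; the interesting part is the E lines pytest prints for each kind of comparison (strings, lists, sets, and membership). The exact wording of those explanations varies between pytest versions.

```python
import tempfile
from pathlib import Path

import pytest

# Four intentionally failing asserts, one per kind of comparison.
DEMO_TESTS = '''
def test_strings():
    assert 'sit there' == 'sit here'


def test_lists():
    assert [1, 2, 3] == [1, 2, 4]


def test_sets():
    assert {'a', 'b'} == {'a', 'c'}


def test_membership():
    assert 'okkem' in ['okken', 'brian']
'''

with tempfile.TemporaryDirectory() as tmp:
    test_file = Path(tmp) / 'test_assert_variety.py'
    test_file.write_text(DEMO_TESTS)
    # Exit code 1 means "some tests failed", which is what we want here.
    exit_code = pytest.main([str(test_file), '-p', 'no:cacheprovider'])

print('exit code:', int(exit_code))
```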

Expecting Exceptions

Exceptions may be raised in a few places in the Tasks API. Let's take a quick look at the functions found in tasks/api.py:

```python
def add(task):  # type: (Task) -> int
    ...
def get(task_id):  # type: (int) -> Task
    ...
def list_tasks(owner=None):  # type: (str|None) -> list of Task
    ...
def count():  # type: (None) -> int
    ...
def update(task_id, task):  # type: (int, Task) -> None
    ...
def delete(task_id):  # type: (int) -> None
    ...
def delete_all():  # type: () -> None
    ...
def unique_id():  # type: () -> int
    ...
def start_tasks_db(db_path, db_type):  # type: (str, str) -> None
    ...
def stop_tasks_db():  # type: () -> None
    ...
```

There's an agreement between the CLI code in cli.py and the API code in api.py as to which types will be passed to the API functions. API calls are a place where I'd expect exceptions to be raised if the type is wrong. To make sure these functions raise exceptions when they're called incorrectly, let's use the wrong type in a test function to intentionally cause TypeError exceptions, and use with pytest.raises(<expected exception>), like this:

ch2/tasks_proj/tests/func/test_api_exceptions.py

""" - API.""" import pytest import tasks def test_add_raises(): """add() param.""" with pytest.raises(TypeError): tasks.add(task='not a Task object') In test_add_raises() , with pytest.raises(TypeError) : the operator reports that everything that is in the next block of code should throw a TypeError exception. If an exception is not raised, the test fails. If the test causes another exception, it fails.

We just checked for the type of exception in test_add_raises(). You can also check the parameters of the exception. For start_tasks_db(db_path, db_type), not only does db_type need to be a string, it really has to be either 'tiny' or 'mongo'. You can check that the exception message is correct by adding excinfo:

ch2/tasks_proj/tests/func/test_api_exceptions.py

```python
def test_start_tasks_db_raises():
    """Make sure an unsupported db raises an exception."""
    with pytest.raises(ValueError) as excinfo:
        tasks.start_tasks_db('some/great/path', 'mysql')
    exception_msg = excinfo.value.args[0]
    assert exception_msg == "db_type must be a 'tiny' or 'mongo'"
```

This allows us to look at the exception more closely. The variable name you put after as (excinfo in this case) is filled with information about the exception and is of type ExceptionInfo.

In our case, we want to make sure the first (and only) argument of the exception matches a string.
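Here is a runnable sketch of the same check against a hypothetical start_tasks_db() stand-in that raises the ValueError described above. Besides indexing excinfo.value.args, pytest.raises() also accepts a match= argument, which applies re.search() to the string form of the exception (check that your pytest version supports it); re.escape() keeps any special characters in the expected message from being treated as regex syntax.

```python
import re

import pytest


def start_tasks_db(db_path, db_type):
    """Hypothetical stand-in that mimics the validation described above."""
    if db_type not in ('tiny', 'mongo'):
        raise ValueError("db_type must be a 'tiny' or 'mongo'")


def test_start_tasks_db_raises():
    with pytest.raises(ValueError) as excinfo:
        start_tasks_db('some/great/path', 'mysql')
    # excinfo.value is the exception instance; excinfo.type is its class.
    assert excinfo.value.args[0] == "db_type must be a 'tiny' or 'mongo'"
    assert excinfo.type is ValueError


def test_start_tasks_db_raises_match():
    # match= runs re.search() against str(exception); escape literal text.
    expected = "db_type must be a 'tiny' or 'mongo'"
    with pytest.raises(ValueError, match=re.escape(expected)):
        start_tasks_db('some/great/path', 'mysql')


test_start_tasks_db_raises()
test_start_tasks_db_raises_match()
print('both checks passed')
```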

Marking Test Functions

pytest provides a cool mechanism to let you put markers on test functions. A test can have more than one marker, and a marker can be on multiple tests.

Markers will make sense to you after you see them in action. Let's say we want to run a subset of our tests as a quick “smoke test” to get a sense of whether or not there is some major break in the system. Smoke tests are, by convention, not all-inclusive, thorough test suites, but a select subset that can be run quickly and give a developer a decent idea of the health of all parts of the system.

To add a smoke test suite to the Tasks project, we can add @pytest.mark.smoke to some of the tests. Let's add it to a couple of tests in test_api_exceptions.py (note that the markers smoke and get aren't built into pytest; I just made them up):

ch2/tasks_proj/tests/func/test_api_exceptions.py

```python
@pytest.mark.smoke
def test_list_raises():
    """list() should raise an exception with wrong type param."""
    with pytest.raises(TypeError):
        tasks.list_tasks(owner=123)


@pytest.mark.get
@pytest.mark.smoke
def test_get_raises():
    """get() should raise an exception with wrong type param."""
    with pytest.raises(TypeError):
        tasks.get(task_id='123')
```

Now let's run just the tests that are marked, using -m marker_name:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests>cd func
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v -m "smoke" test_api_exceptions.py
============================= test session starts =============================
collected 7 items

test_api_exceptions.py::test_list_raises PASSED
test_api_exceptions.py::test_get_raises PASSED

============================= 5 tests deselected ==============================
=================== 2 passed, 5 deselected in 0.18 seconds ====================
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v -m "get" test_api_exceptions.py
============================= test session starts =============================
collected 7 items

test_api_exceptions.py::test_get_raises PASSED

============================= 6 tests deselected ==============================
=================== 1 passed, 6 deselected in 0.13 seconds ====================
```

Remember that -v is short for --verbose and lets us see the names of the tests that are run. Using -m 'smoke' runs both tests marked with @pytest.mark.smoke.

Using -m 'get' runs the one test marked with @pytest.mark.get. Pretty straightforward.

It gets better. The expression after -m can use and, or, and not to combine multiple markers:

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v -m "smoke and get" test_api_exceptions.py ============================= test session starts ============================= collected 7 items test_api_exceptions.py::test_get_raises PASSED ============================= 6 tests deselected ============================== =================== 1 passed, 6 deselected in 0.13 seconds ==================== We conducted this test only with smoke and get markers. We can use and not :

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v -m "smoke and not get" test_api_exceptions.py ============================= test session starts ============================= collected 7 items test_api_exceptions.py::test_list_raises PASSED ============================= 6 tests deselected ============================== =================== 1 passed, 6 deselected in 0.13 seconds ==================== The addition of -m 'smoke and not get' chose a test that was marked using @pytest.mark.smoke , but not @pytest.mark.get .

Filling Out the Smoke Test

The previous tests don't seem like a reasonable smoke test suite yet. We haven't actually touched the database or added any tasks. Surely a smoke test would do that.

Let's add a couple of tests that look at adding a task, and use one of them as part of our smoke test suite:

ch2/tasks_proj/tests/func/test_add.py

""" API tasks.add ().""" import pytest import tasks from tasks import Task def test_add_returns_valid_id(): """tasks.add(valid task) .""" # GIVEN an initialized tasks db # WHEN a new task is added # THEN returned task_id is of type int new_task = Task('do something') task_id = tasks.add(new_task) assert isinstance(task_id, int) @pytest.mark.smoke def test_added_task_has_id_set(): """, task_id tasks.add().""" # GIVEN an initialized tasks db # AND a new task is added new_task = Task('sit in chair', owner='me', done=True) task_id = tasks.add(new_task) # WHEN task is retrieved task_from_db = tasks.get(task_id) # THEN task_id matches id field assert task_from_db.id == task_id Both of these tests have a GIVEN comment on the initialized database of tasks, but there is no initialized database in the test. We can define fixture to initialize the database before the test and clean it after the test:

ch2/tasks_proj/tests/func/test_add.py

```python
@pytest.fixture(autouse=True)
def initialized_tasks_db(tmpdir):
    """Connect to db before testing, disconnect after."""
    # Setup : start db
    tasks.start_tasks_db(str(tmpdir), 'tiny')

    yield  # this is where the testing happens

    # Teardown : stop db
    tasks.stop_tasks_db()
```

The tmpdir fixture used in this example is a builtin fixture. You'll learn all about builtin fixtures in Chapter 4, “Builtin Fixtures,” on page 71, and you'll learn about writing your own fixtures and how they work in Chapter 3, “pytest Fixtures,” on page 49, including the autouse parameter used here.

autouse as used in our test indicates that all tests in this file will use the fixture. The code before the yield runs before each test; the code after the yield runs after the test. The yield can return data to the test if desired. You'll look at all of that and more in later chapters, but here we need some way to set up the database for testing, so I couldn't wait any longer to show you a fixture. (pytest also supports old-fashioned setup and teardown functions, like those used in unittest and nose, but they are not nearly as fun. However, if you're curious, they are described in Appendix 5, “xUnit Fixtures,” on page 183.)
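Conceptually, pytest drives a yield fixture like a generator: run it up to the yield, run the test, then resume it so the teardown code executes. The sketch below is a simplified model of that control flow in plain Python, not pytest's actual implementation; the fixture and test bodies are hypothetical and only record events.

```python
events = []


def initialized_tasks_db():
    """Hypothetical fixture body: setup, yield a value, teardown."""
    events.append('setup: start db')
    yield 'db handle'               # value handed to the test
    events.append('teardown: stop db')


def run_test_with_fixture(fixture, test):
    """Simplified model of how a yield fixture wraps one test."""
    gen = fixture()
    value = next(gen)               # run the code before the yield
    try:
        test(value)                 # the test runs while the fixture is paused
    finally:
        next(gen, None)             # resume after the yield: teardown runs


def passing_test(db):
    events.append('test got ' + db)


def failing_test(db):
    events.append('failing test')
    raise AssertionError('boom')


run_test_with_fixture(initialized_tasks_db, passing_test)
try:
    run_test_with_fixture(initialized_tasks_db, failing_test)
except AssertionError:
    pass                            # teardown still ran, thanks to finally

print(events)
```

The try/finally is the important design point: teardown runs even when the test body fails, which is also how pytest treats code after a fixture's yield.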

Let's set the fixture discussion aside for now, go to the top of the project, and run our smoke test suite:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>cd ..
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests>cd ..
(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest -v -m "smoke"
============================= test session starts =============================
collected 56 items

tests/func/test_add.py::test_added_task_has_id_set PASSED
tests/func/test_api_exceptions.py::test_list_raises PASSED
tests/func/test_api_exceptions.py::test_get_raises PASSED

============================= 53 tests deselected =============================
=================== 3 passed, 53 deselected in 0.49 seconds ===================
```

This shows that marked tests from different files can all run together.

Skipping Tests

While the markers discussed in Marking Test Functions, on page 31, were names of your own choosing, pytest includes a few helpful builtin markers: skip, skipif, and xfail. I'll discuss skip and skipif in this section, and xfail in the next.

The skip and skipif markers enable you to skip tests you don't want to run. For example, let's say we weren't sure how tasks.unique_id() was supposed to work. Does each call to it return a different number? Or is it just a number that doesn't already exist in the database?

First, let's write a test (note that the initialized_tasks_db fixture is in this file too; it's just not shown here):

ch2/tasks_proj/tests/func/test_unique_id_1.py

```python
"""Test tasks.unique_id()."""
import pytest
import tasks


def test_unique_id():
    """Calling unique_id() twice should return different numbers."""
    id_1 = tasks.unique_id()
    id_2 = tasks.unique_id()
    assert id_1 != id_2
```

Let's run it:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest test_unique_id_1.py
============================= test session starts =============================
collected 1 item

test_unique_id_1.py F

================================== FAILURES ===================================
_______________________________ test_unique_id ________________________________

    def test_unique_id():
        """Calling unique_id() twice should return different numbers."""
        id_1 = tasks.unique_id()
        id_2 = tasks.unique_id()
>       assert id_1 != id_2
E       assert 1 != 1

test_unique_id_1.py:11: AssertionError
========================== 1 failed in 0.30 seconds ===========================
```

Hmm. Maybe we got that wrong. After looking at the API a bit more, we see that the docstring says """Return an integer that does not exist in the db.""", that is, just an integer that isn't already in the database. We could simply change the test. But instead, let's mark the first test to be skipped for now:

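ch2/tasks_proj/tests/func/test_unique_id_2.py

```python
import pytest
import tasks
from tasks import Task


@pytest.mark.skip(reason='misunderstood the API')
def test_unique_id_1():
    """Calling unique_id() twice should return different numbers."""
    id_1 = tasks.unique_id()
    id_2 = tasks.unique_id()
    assert id_1 != id_2


def test_unique_id_2():
    """unique_id() should return an unused id."""
    ids = []
    ids.append(tasks.add(Task('one')))
    ids.append(tasks.add(Task('two')))
    ids.append(tasks.add(Task('three')))
    # grab a unique id
    uid = tasks.unique_id()
    # make sure it isn't in the list of existing ids
    assert uid not in ids
```

Marking a test to be skipped is as simple as adding @pytest.mark.skip() just above the test function.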

Let's run it again:

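```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_unique_id_2.py
============================= test session starts =============================
collected 2 items

test_unique_id_2.py::test_unique_id_1 SKIPPED
test_unique_id_2.py::test_unique_id_2 PASSED

===================== 1 passed, 1 skipped in 0.19 seconds =====================
```

Now, let's say that for some reason we decide the first test should be valid as well, and we intend to make that work in version 0.2.0 of the package. We can leave the test in place and use skipif instead: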

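ch2/tasks_proj/tests/func/test_unique_id_3.py

```python
import pytest
import tasks


@pytest.mark.skipif(tasks.__version__ < '0.2.0',
                    reason='not supported until version 0.2.0')
def test_unique_id_1():
    """Calling unique_id() twice should return different numbers."""
    id_1 = tasks.unique_id()
    id_2 = tasks.unique_id()
    assert id_1 != id_2
```

The expression we pass into skipif() can be any valid Python expression. In this case, we're checking the package version. We included reasons in both skip and skipif. A reason isn't required in skip, but skipif requires one when its condition is a boolean, as it is here. I like to include a reason for every skip, skipif, or xfail. Here's the output of the changed code: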

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest test_unique_id_3.py
============================= test session starts =============================
collected 2 items

test_unique_id_3.py s.

===================== 1 passed, 1 skipped in 0.20 seconds =====================
```

The s. shows that one test was skipped and one test passed. We can see which one with -v:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_unique_id_3.py
============================= test session starts =============================
collected 2 items

test_unique_id_3.py::test_unique_id_1 SKIPPED
test_unique_id_3.py::test_unique_id_2 PASSED

===================== 1 passed, 1 skipped in 0.19 seconds =====================
```

But we still don't know why. We can see the reasons with -rs:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -rs test_unique_id_3.py
============================= test session starts =============================
collected 2 items

test_unique_id_3.py s.
=========================== short test summary info ===========================
SKIP [1] func\test_unique_id_3.py:8: not supported until version 0.2.0

===================== 1 passed, 1 skipped in 0.22 seconds =====================
```

The -r chars option has this help text:

```
$ pytest --help
...
  -r chars    show extra test summary info as specified by chars
              (f)ailed, (E)error, (s)skipped, (x)failed, (X)passed,
              (p)passed, (P)passed with output, (a)all except pP.
...
```

It's not only helpful for understanding test skips; you can use it for other test outcomes as well.
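One caveat about the skipif condition in test_unique_id_3.py: tasks.__version__ < '0.2.0' compares strings character by character, which works for these particular versions but misorders something like '0.10.0'. Here is a sketch of a numeric comparison, assuming plain dotted-integer version strings; version_tuple() is a hypothetical helper, not part of pytest or tasks.

```python
def version_tuple(version):
    """Convert '0.10.0' to (0, 10, 0) so comparisons are numeric.

    Assumes plain dotted integers; a tag such as '0.2.0rc1' would raise
    ValueError here.
    """
    return tuple(int(part) for part in version.split('.'))


# Strings compare character by character: at the third character, '1' < '2'.
print('0.10.0' < '0.2.0')                                  # True (misleading)
print(version_tuple('0.10.0') < version_tuple('0.2.0'))    # False
print(version_tuple('0.1.0') < (0, 2, 0))                  # True
```

In the test file, the condition could then read version_tuple(tasks.__version__) < (0, 2, 0). If the packaging library is installed, packaging.version.Version also handles pre-release tags.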

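Marking Tests as Expecting to Fail

With the skip and skipif markers, a test isn't even attempted if it's skipped. With the xfail marker, we're telling pytest to run a test function, but that we expect it to fail. Let's modify our unique_id() test again to use xfail: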

ch2/tasks_proj/tests/func/test_unique_id_4.py

```python
import pytest
import tasks


@pytest.mark.xfail(tasks.__version__ < '0.2.0',
                   reason='not supported until version 0.2.0')
def test_unique_id_1():
    """Calling unique_id() twice should return different numbers."""
    id_1 = tasks.unique_id()
    id_2 = tasks.unique_id()
    assert id_1 != id_2


@pytest.mark.xfail()
def test_unique_id_is_a_duck():
    """Demonstrate xfail."""
    uid = tasks.unique_id()
    assert uid == 'a duck'


@pytest.mark.xfail()
def test_unique_id_not_a_duck():
    """Demonstrate xpass."""
    uid = tasks.unique_id()
    assert uid != 'a duck'
```

The first test is the same as before, but with xfail. The next two tests are marked xfail and differ only by == vs. !=, so one of them is bound to pass.

Running this shows:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest test_unique_id_4.py
============================= test session starts =============================
collected 4 items

test_unique_id_4.py xxX.

=============== 1 passed, 2 xfailed, 1 xpassed in 0.36 seconds ================
```

The lowercase x stands for XFAIL, which means “expected to fail.” The capital X stands for XPASS, which means “expected to fail but passed.”

--verbose lists longer descriptions:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_unique_id_4.py
============================= test session starts =============================
collected 4 items

test_unique_id_4.py::test_unique_id_1 xfail
test_unique_id_4.py::test_unique_id_is_a_duck xfail
test_unique_id_4.py::test_unique_id_not_a_duck XPASS
test_unique_id_4.py::test_unique_id_2 PASSED

=============== 1 passed, 2 xfailed, 1 xpassed in 0.36 seconds ================
```

You can configure pytest to report tests that pass but were marked with xfail as FAIL. This is done in a pytest.ini file:

```
[pytest]
xfail_strict=true
```

I'll discuss pytest.ini in more detail in Chapter 6, “Configuration,” on page 113.
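Here is a self-contained sketch of the difference xfail_strict makes. It runs the same three hypothetical tests (one xfail test that fails, one xfail test that passes, and one ordinary test) twice with pytest.main(): once with no configuration file and once next to a pytest.ini containing xfail_strict=true. Outcomes is a made-up helper plugin that counts results using the categories pytest's terminal reporter uses (passed, failed, xfailed, xpassed).

```python
import tempfile
from pathlib import Path

import pytest

XFAIL_TESTS = '''
import pytest


@pytest.mark.xfail()
def test_is_a_duck():
    assert 42 == 'a duck'      # fails, as expected -> XFAIL


@pytest.mark.xfail()
def test_not_a_duck():
    assert 42 != 'a duck'      # passes unexpectedly -> XPASS


def test_plain():
    assert True
'''


class Outcomes:
    """Hypothetical helper plugin: count results by reporter category."""

    def __init__(self):
        self.counts = {}

    def pytest_terminal_summary(self, terminalreporter):
        self.counts = {key: len(reports)
                       for key, reports in terminalreporter.stats.items() if key}


def run(module_name, strict):
    with tempfile.TemporaryDirectory() as tmp:
        if strict:
            Path(tmp, 'pytest.ini').write_text('[pytest]\nxfail_strict=true\n')
        # Different module names keep the two runs from clashing on import.
        Path(tmp, module_name + '.py').write_text(XFAIL_TESTS)
        outcomes = Outcomes()
        exit_code = pytest.main([tmp, '-p', 'no:cacheprovider'], plugins=[outcomes])
        return int(exit_code), outcomes.counts


default_result = run('test_xfail_default', strict=False)
strict_result = run('test_xfail_strict', strict=True)
print('default:', default_result)
print('strict: ', strict_result)
```

Without the setting, the unexpected pass shows up as xpassed and the session still succeeds; with xfail_strict=true, that same test is reported as failed and the session exit code becomes nonzero.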

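Running a Subset of Tests

I've talked about putting markers on tests and running tests based on markers. You can run a subset of tests in several other ways. You can run all of the tests, or you can select a single directory, file, class within a file, or an individual test in a file or class. You haven't seen test classes used yet, so you'll look at one in this section. You can also use an expression to match test names. Let's take a look at these.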

A Single Directory

To run all the tests from one directory, use the directory as a parameter to pytest:

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest tests\func --tb=no
============================= test session starts =============================
collected 50 items

tests\func\test_add.py ..
tests\func\test_add_variety.py ................................
tests\func\test_api_exceptions.py .......
tests\func\test_unique_id_1.py F
tests\func\test_unique_id_2.py s.
tests\func\test_unique_id_3.py s.
tests\func\test_unique_id_4.py xxX.

==== 1 failed, 44 passed, 2 skipped, 2 xfailed, 1 xpassed in 1.75 seconds =====
```

An important trick to learn is that using -v gives you the syntax for how to run a specific directory, class, and test.

```
(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest -v tests\func --tb=no
============================= test session starts =============================
...
collected 50 items

tests\func\test_add.py::test_add_returns_valid_id PASSED
tests\func\test_add.py::test_added_task_has_id_set PASSED
tests\func\test_add_variety.py::test_add_1 PASSED
tests\func\test_add_variety.py::test_add_2[task0] PASSED
tests\func\test_add_variety.py::test_add_2[task1] PASSED
tests\func\test_add_variety.py::test_add_2[task2] PASSED
tests\func\test_add_variety.py::test_add_2[task3] PASSED
tests\func\test_add_variety.py::test_add_3[sleep-None-False] PASSED
...
tests\func\test_unique_id_2.py::test_unique_id_1 SKIPPED
tests\func\test_unique_id_2.py::test_unique_id_2 PASSED
...
tests\func\test_unique_id_4.py::test_unique_id_1 xfail
tests\func\test_unique_id_4.py::test_unique_id_is_a_duck xfail
tests\func\test_unique_id_4.py::test_unique_id_not_a_duck XPASS
tests\func\test_unique_id_4.py::test_unique_id_2 PASSED

==== 1 failed, 44 passed, 2 skipped, 2 xfailed, 1 xpassed in 2.05 seconds =====
```

You'll see this syntax used in the next few examples.

A Single Test File/Module

To run a file full of tests, list the file with its relative path as a parameter to pytest:

```
$ cd /path/to/code/ch2/tasks_proj
$ pytest tests/func/test_add.py
=========================== test session starts ===========================
collected 2 items

tests/func/test_add.py ..

======================== 2 passed in 0.05 seconds =========================
```

We've been doing this for a while.

To run a single test function, add :: and the test function name:

$ cd /path/to/code/ch2/tasks_proj
$ pytest -v tests/func/test_add.py::test_add_returns_valid_id
=========================== test session starts ===========================
collected 3 items

tests/func/test_add.py::test_add_returns_valid_id PASSED

======================== 1 passed in 0.02 seconds =========================

Use -v so you can see which function was run.

Test Class

Test classes are a way to group tests that make sense to be grouped together. Here's an example:

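ch2/tasks_proj/tests/func/test_api_exceptions.py

import pytest
import tasks


class TestUpdate():
    """Test expected exceptions with tasks.update()."""

    def test_bad_id(self):
        """A non-int id should raise an exception."""
        with pytest.raises(TypeError):
            tasks.update(task_id={'dict instead': 1},
                         task=tasks.Task())

    def test_bad_task(self):
        """A non-Task task should raise an exception."""
        with pytest.raises(TypeError):
            tasks.update(task_id=1, task='not a task')

Since these are two related tests that both exercise the update() function, it makes sense to group them in a class. To run just this class, do what we did with functions: add :: and then the class name to the file parameter: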

(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest -v tests/func/test_api_exceptions.py::TestUpdate
============================= test session starts =============================
collected 2 items

tests\func\test_api_exceptions.py::TestUpdate::test_bad_id PASSED
tests\func\test_api_exceptions.py::TestUpdate::test_bad_task PASSED

========================== 2 passed in 0.12 seconds ===========================

A Single Test Method of a Test Class

If you don't want to run the whole test class, just one method, add another :: and the method name:

$ cd /path/to/code/ch2/tasks_proj
$ pytest -v tests/func/test_api_exceptions.py::TestUpdate::test_bad_id
===================== test session starts ======================
collected 1 item

tests/func/test_api_exceptions.py::TestUpdate::test_bad_id PASSED

=================== 1 passed in 0.03 seconds ===================
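The TestUpdate class can also be tried without the Tasks package. Below is a minimal sketch that assumes hypothetical stand-ins for Task and update(); these are not the real Tasks implementations, they only raise TypeError for the argument types these tests expect to be rejected.

```python
from collections import namedtuple

import pytest

# Hypothetical stand-ins for the Tasks API, just enough for this sketch.
Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'],
                  defaults=[None, None, False, None])


def update(task_id, task):
    """Stand-in for tasks.update(): raise TypeError on bad argument types."""
    if not isinstance(task_id, int):
        raise TypeError('task_id must be an int')
    if not isinstance(task, Task):
        raise TypeError('task must be a Task')


class TestUpdate:
    """Test expected exceptions with update()."""

    def test_bad_id(self):
        """A non-int id should raise an exception."""
        with pytest.raises(TypeError):
            update(task_id={'dict instead': 1}, task=Task())

    def test_bad_task(self):
        """A non-Task task should raise an exception."""
        with pytest.raises(TypeError):
            update(task_id=1, task='not a task')
```

Saved as a test_*.py file, the same :: syntax used in the runs above selects either the whole class or a single method.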

Grouping Syntax Shown by Verbose Listing

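Remember that you don't have to memorize the syntax for running a subset of tests by directory, file, function, class, and method. The format is the same as the test listing you get when you run pytest -v.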

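The -k option lets you pass in an expression, and pytest runs the tests whose names contain the substrings given in that expression. You can use and, or, and not in the expression to build more complex filters. For example, here is how to run all the functions that have _raises in their name: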

(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest -v -k _raises
============================= test session starts =============================
collected 56 items

tests/func/test_api_exceptions.py::test_add_raises PASSED
tests/func/test_api_exceptions.py::test_list_raises PASSED
tests/func/test_api_exceptions.py::test_get_raises PASSED
tests/func/test_api_exceptions.py::test_delete_raises PASSED
tests/func/test_api_exceptions.py::test_start_tasks_db_raises PASSED

============================= 51 tests deselected =============================
=================== 5 passed, 51 deselected in 0.54 seconds ===================

We can use and and not to exclude test_delete_raises() from the session:

(venv33) ...\bopytest-code\code\ch2\tasks_proj>pytest -v -k "_raises and not delete"
============================= test session starts =============================
collected 56 items

tests/func/test_api_exceptions.py::test_add_raises PASSED
tests/func/test_api_exceptions.py::test_list_raises PASSED
tests/func/test_api_exceptions.py::test_get_raises PASSED
tests/func/test_api_exceptions.py::test_start_tasks_db_raises PASSED

============================= 52 tests deselected =============================
=================== 4 passed, 52 deselected in 0.44 seconds ===================

In this section, you learned how to run specific test files, directories, classes, and functions, and how to use -k expressions to run specific sets of tests. In the next section, you'll learn how one test function can turn into many test cases by running it multiple times with different data.
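The same -k filtering can be checked in-process. A minimal sketch, assuming a recent pytest release and a throwaway module whose test names loosely mirror the ones above but are made up; it only collects, so nothing is actually run.

```python
import pathlib
import tempfile
import textwrap

import pytest

# Throwaway project; the test names are hypothetical.
PROJECT = pathlib.Path(tempfile.mkdtemp(prefix="kdemo_")).resolve()
(PROJECT / "pytest.ini").write_text("[pytest]\n")
(PROJECT / "test_k_demo.py").write_text(textwrap.dedent("""
    def test_add_raises():
        pass

    def test_list_raises():
        pass

    def test_delete_raises():
        pass

    def test_add_returns_valid_id():
        pass
"""))


class RecordSelected:
    """Minimal plugin: remember the node IDs left after -k deselection."""

    def __init__(self):
        self.ids = []

    def pytest_collection_finish(self, session):
        self.ids = [item.nodeid for item in session.items]


def selected(*args):
    """Collect the throwaway project with extra arguments; return selected IDs."""
    plugin = RecordSelected()
    pytest.main([str(PROJECT), *args, "--collect-only", "-q", "-p", "no:cacheprovider"],
                plugins=[plugin])
    return plugin.ids


# Each -k expression is a single argv element, so no shell quoting is involved.
raises_ids = selected("-k", "_raises")
filtered_ids = selected("-k", "_raises and not delete")
print(raises_ids)
print(filtered_ids)
```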

Parametrized Testing

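Sending some values through a function and checking that the output is correct is a common pattern in software testing. However, calling a function once, with one set of values and one correctness check, is rarely enough to test most functions thoroughly. Parametrized testing is a way to send multiple sets of data through the same test and have pytest report whether any of those sets failed.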
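To make the reporting difference concrete, here is a minimal sketch with made-up data (it assumes a recent pytest release). The same four cases are checked once with a loop inside a single test and once with @pytest.mark.parametrize; the third case is deliberately wrong, and a small plugin records the outcome of every test that pytest reports.

```python
import pathlib
import tempfile
import textwrap

import pytest

# Throwaway module (made-up data); the ("c", "x") case is wrong on purpose.
PROJECT = pathlib.Path(tempfile.mkdtemp()).resolve()
(PROJECT / "pytest.ini").write_text("[pytest]\n")
(PROJECT / "test_param_demo.py").write_text(textwrap.dedent("""
    import pytest

    CASES = [("a", "A"), ("b", "B"), ("c", "x"), ("d", "D")]

    def test_upper_loop():
        for value, expected in CASES:
            assert value.upper() == expected

    @pytest.mark.parametrize("value, expected", CASES)
    def test_upper_param(value, expected):
        assert value.upper() == expected
"""))


class RecordOutcomes:
    """Minimal plugin: map each test's node ID to the outcome of its call phase."""

    def __init__(self):
        self.outcomes = {}

    def pytest_runtest_logreport(self, report):
        if report.when == "call":
            self.outcomes[report.nodeid] = report.outcome


recorder = RecordOutcomes()
pytest.main([str(PROJECT), "-q", "-p", "no:cacheprovider"], plugins=[recorder])
for node_id, outcome in recorder.outcomes.items():
    print(outcome, node_id)
```

The loop version shows up as one failed test and never checks the fourth case, while the parametrized version reports four separate results, only one of which fails.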

To understand the problem that parametrized testing solves, let's take a simple test for add():

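ch2/tasks_proj/tests/func/test_add_variety.py

"""Test the tasks.add() API function."""

import pytest
import tasks
from tasks import Task


def test_add_1():
    """tasks.get() using id returned from add() works."""
    task = Task('breathe', 'BRIAN', True)
    task_id = tasks.add(task)
    t_from_db = tasks.get(task_id)
    # everything but the id should be the same
    assert equivalent(t_from_db, task)


def equivalent(t1, t2):
    """Check two tasks for equivalence."""
    # Compare everything but the id field
    return ((t1.summary == t2.summary) and
            (t1.owner == t2.owner) and
            (t1.done == t2.done))


@pytest.fixture(autouse=True)
def initialized_tasks_db(tmpdir):
    """Connect to db before testing, disconnect after."""
    tasks.start_tasks_db(str(tmpdir), 'tiny')
    yield
    tasks.stop_tasks_db()

When a Task object is created, its id field is set to None. After the task is added to the database and retrieved, the id field is set. That's why we can't simply use == to check that the task was added and retrieved correctly. The equivalent() helper function compares every field except id. The autouse fixture is included to make sure the database is accessible. Let's make sure the test passes: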

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_1
============================= test session starts =============================
collected 1 item

test_add_variety.py::test_add_1 PASSED

========================== 1 passed in 0.69 seconds ===========================

The test looks reasonable. However, it only tests one example task. What if we want to test lots of variations of a task? No problem. We can use @pytest.mark.parametrize(argnames, argvalues) to pass lots of data through the same test, like this:

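ch2/tasks_proj/tests/func/test_add_variety.py

@pytest.mark.parametrize('task',
                         [Task('sleep', done=True),
                          Task('wake', 'brian'),
                          Task('breathe', 'BRIAN', True),
                          Task('exercise', 'BrIaN', False)])
def test_add_2(task):
    """Demonstrate parametrize with one parameter."""
    task_id = tasks.add(task)
    t_from_db = tasks.get(task_id)
    assert equivalent(t_from_db, task)

The first argument to parametrize() is a string with a comma-separated list of names, in our case just 'task'. The second argument is a list of values, here a list of Task objects. pytest runs this test once for each task and reports each run as a separate test: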

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_2
============================= test session starts =============================
collected 4 items

test_add_variety.py::test_add_2[task0] PASSED
test_add_variety.py::test_add_2[task1] PASSED
test_add_variety.py::test_add_2[task2] PASSED
test_add_variety.py::test_add_2[task3] PASSED

========================== 4 passed in 0.69 seconds ===========================

This use of parametrize() works for our purposes. However, let's pass the tasks in as tuples to see how multiple test parameters work:

ch2/tasks_proj/tests/func/test_add_variety.py

@pytest.mark.parametrize('summary, owner, done',
                         [('sleep', None, False),
                          ('wake', 'brian', False),
                          ('breathe', 'BRIAN', True),
                          ('eat eggs', 'BrIaN', False),
                          ])
def test_add_3(summary, owner, done):
    """Demonstrate parametrize with multiple parameters."""
    task = Task(summary, owner, done)
    task_id = tasks.add(task)
    t_from_db = tasks.get(task_id)
    assert equivalent(t_from_db, task)

When you use types that pytest can easily convert into strings, the test identifier uses the parameter values in the report, which makes it readable:

(venv35) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_3
============================= test session starts =============================
platform win32 -- Python 3.5.2, pytest-3.5.1, py-1.5.3, pluggy-0.6.0 --
cachedir: ..\.pytest_cache
rootdir: ...\bopytest-code\code\ch2\tasks_proj\tests, inifile: pytest.ini
collected 4 items

test_add_variety.py::test_add_3[sleep-None-False] PASSED [ 25%]
test_add_variety.py::test_add_3[wake-brian-False] PASSED [ 50%]
test_add_variety.py::test_add_3[breathe-BRIAN-True] PASSED [ 75%]
test_add_variety.py::test_add_3[eat eggs-BrIaN-False] PASSED [100%]

========================== 4 passed in 0.37 seconds ===========================

You can use that whole test identifier, called a node in pytest terminology, to rerun the test if you want:

(venv35) c:\BOOK\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_3[sleep-None-False]
============================= test session starts =============================
test_add_variety.py::test_add_3[sleep-None-False] PASSED [100%]

========================== 1 passed in 0.22 seconds ===========================

Be sure to use quotes if there are spaces in the identifier:

(venv35) c:\BOOK\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v "test_add_variety.py::test_add_3[eat eggs-BrIaN-False]"
============================= test session starts =============================
collected 1 item

test_add_variety.py::test_add_3[eat eggs-BrIaN-False] PASSED [100%]

========================== 1 passed in 0.56 seconds ===========================

Now let's go back to the list-of-tasks version, but move the task list into a variable outside the function:

ch2/tasks_proj/tests/func/test_add_variety.py

tasks_to_try = (Task('sleep', done=True),
                Task('wake', 'brian'),
                Task('wake', 'brian'),
                Task('breathe', 'BRIAN', True),
                Task('exercise', 'BrIaN', False))


@pytest.mark.parametrize('task', tasks_to_try)
def test_add_4(task):
    """Slightly different take."""
    task_id = tasks.add(task)
    t_from_db = tasks.get(task_id)
    assert equivalent(t_from_db, task)

It's convenient, and the code looks nice. But the output is hard to interpret:

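(venv35) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_4
============================= test session starts =============================
collected 5 items

test_add_variety.py::test_add_4[task0] PASSED [ 20%]
test_add_variety.py::test_add_4[task1] PASSED [ 40%]
test_add_variety.py::test_add_4[task2] PASSED [ 60%]
test_add_variety.py::test_add_4[task3] PASSED [ 80%]
test_add_variety.py::test_add_4[task4] PASSED [100%]

========================== 5 passed in 0.34 seconds ===========================

The multiple-parameter version is easy to read, but so is the list of Task objects. As a compromise, we can use the optional ids parameter of parametrize() to create our own identifier for each task data set. The ids parameter must be a list of strings with the same length as the number of data sets. Because we assigned our data set to a variable, tasks_to_try, we can use it to generate the ids: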

ch2/tasks_proj/tests/func/test_add_variety.py

task_ids = ['Task({},{},{})'.format(t.summary, t.owner, t.done)
            for t in tasks_to_try]


@pytest.mark.parametrize('task', tasks_to_try, ids=task_ids)
def test_add_5(task):
    """Demonstrate ids."""
    task_id = tasks.add(task)
    t_from_db = tasks.get(task_id)
    assert equivalent(t_from_db, task)

Let's run that and see how it looks:

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::test_add_5
============================= test session starts =============================
collected 5 items

test_add_variety.py::test_add_5[Task(sleep,None,True)] PASSED
test_add_variety.py::test_add_5[Task(wake,brian,False)0] PASSED
test_add_variety.py::test_add_5[Task(wake,brian,False)1] PASSED
test_add_variety.py::test_add_5[Task(breathe,BRIAN,True)] PASSED
test_add_variety.py::test_add_5[Task(exercise,BrIaN,False)] PASSED

========================== 5 passed in 0.45 seconds ===========================

These custom identifiers can also be used to run individual tests:

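(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v "test_add_variety.py::test_add_5[Task(exercise,BrIaN,False)]"
============================= test session starts =============================
collected 1 item

test_add_variety.py::test_add_5[Task(exercise,BrIaN,False)] PASSED

========================== 1 passed in 0.21 seconds ===========================

We definitely need the quotes for these identifiers; otherwise, the brackets and parentheses will confuse the shell. You can apply parametrize() to classes as well. When you do that, the same data sets are sent to every test method in the class: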

ch2/tasks_proj/tests/func/test_add_variety.py

@pytest.mark.parametrize('task', tasks_to_try, ids=task_ids)
class TestAdd():
    """Demonstrate parametrize and test classes."""

    def test_equivalent(self, task):
        """Similar test, just within a class."""
        task_id = tasks.add(task)
        t_from_db = tasks.get(task_id)
        assert equivalent(t_from_db, task)

    def test_valid_id(self, task):
        """We can use the same data for multiple tests."""
        task_id = tasks.add(task)
        t_from_db = tasks.get(task_id)
        assert t_from_db.id == task_id

Here it is in action:

(venv33) ...\bopytest-code\code\ch2\tasks_proj\tests\func>pytest -v test_add_variety.py::TestAdd
============================= test session starts =============================
collected 10 items

test_add_variety.py::TestAdd::test_equivalent[Task(sleep,None,True)] PASSED
test_add_variety.py::TestAdd::test_equivalent[Task(wake,brian,False)0] PASSED
test_add_variety.py::TestAdd::test_equivalent[Task(wake,brian,False)1] PASSED
test_add_variety.py::TestAdd::test_equivalent[Task(breathe,BRIAN,True)] PASSED
test_add_variety.py::TestAdd::test_equivalent[Task(exercise,BrIaN,False)] PASSED
test_add_variety.py::TestAdd::test_valid_id[Task(sleep,None,True)] PASSED
test_add_variety.py::TestAdd::test_valid_id[Task(wake,brian,False)0] PASSED
test_add_variety.py::TestAdd::test_valid_id[Task(wake,brian,False)1] PASSED
test_add_variety.py::TestAdd::test_valid_id[Task(breathe,BRIAN,True)] PASSED
test_add_variety.py::TestAdd::test_valid_id[Task(exercise,BrIaN,False)] PASSED

========================== 10 passed in 1.16 seconds ==========================

You can also give each parameter its own id right alongside its value when passing a list to the @pytest.mark.parametrize() decorator. You do this with the pytest.param(<value>, id="something") syntax:

In action:

(venv35) ...\bopytest-code\code\ch2\tasks_proj\tests\func $ pytest -v test_add_variety.py::test_add_6
======================================== test session starts =========================================
collected 3 items

test_add_variety.py::test_add_6[just summary] PASSED [ 33%]
test_add_variety.py::test_add_6[summary\owner] PASSED [ 66%]
test_add_variety.py::test_add_6[summary\owner\done] PASSED [100%]

================================ 3 passed, 6 warnings in 0.35 seconds ================================

This is useful when the id cannot be derived from the parameter value.
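Besides a list of strings, ids= also accepts a function that pytest calls once per parameter value and whose returned string becomes the id. The sketch below (made-up module and names, assuming a recent pytest release) collects the automatically generated ids for a multi-parameter test alongside ids produced by such a function. It then selects one node whose id contains a space; because the node ID is passed to pytest.main() as a single list element, no shell quoting is needed.

```python
import pathlib
import tempfile
import textwrap

import pytest

# Throwaway module (made-up names): automatic ids from several parameters,
# and ids produced by a function passed as ids=.
PROJECT = pathlib.Path(tempfile.mkdtemp()).resolve()
(PROJECT / "pytest.ini").write_text("[pytest]\n")
(PROJECT / "test_ids_demo.py").write_text(textwrap.dedent("""
    from collections import namedtuple

    import pytest

    Task = namedtuple("Task", ["summary", "owner", "done"])
    TASKS = [Task("sleep", None, False), Task("eat eggs", "BrIaN", False)]

    @pytest.mark.parametrize("summary, owner, done", [tuple(t) for t in TASKS])
    def test_auto(summary, owner, done):
        pass

    def task_id(task):
        # Called once per value; the returned string becomes the id.
        return "Task({},{},{})".format(*task)

    @pytest.mark.parametrize("task", TASKS, ids=task_id)
    def test_callable_ids(task):
        pass
"""))


class RecordSelected:
    """Minimal plugin: remember the node IDs pytest selected."""

    def __init__(self):
        self.ids = []

    def pytest_collection_finish(self, session):
        self.ids = [item.nodeid for item in session.items]


def selected(*args):
    """Collect (without running) and return the selected node IDs."""
    plugin = RecordSelected()
    pytest.main([*args, "--collect-only", "-q", "-p", "no:cacheprovider"],
                plugins=[plugin])
    return plugin.ids


all_ids = selected(str(PROJECT))
# One argv element per node ID, so the space inside the brackets is harmless.
one = selected(str(PROJECT / "test_ids_demo.py") + "::test_auto[eat eggs-BrIaN-False]")
print(all_ids)
print(one)
```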

Exercises

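- Download the project for this chapter, tasks_proj, from the book's web page and make sure you can install it locally with pip install /path/to/tasks_proj.
- Explore the tests directory.
- Run pytest with a single file.
- Run pytest against a single directory, such as tasks_proj/tests/func. Use pytest to run tests individually as well as a whole directory at a time. There are some failing tests there. Do you understand why they fail?
- Add xfail or skip markers to the failing tests until you can run pytest from the tests directory with no arguments and no failures.
- We don't have any tests for tasks.count() yet, among other functions. Pick an untested API function and think about which test cases are needed to make sure it works correctly.
- What happens if you try to add a task with the id already set? There are some missing exception tests in test_api_exceptions.py. See if you can fill in the missing exceptions. (It's okay to look at api.py for this exercise.)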

What's next

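In the next chapter, you'll learn about pytest fixtures. Fixtures are functions that pytest runs before (and sometimes after) your test functions, and they are the usual home for setup/teardown code. You've already seen one in this chapter: initialized_tasks_db.

Fixtures are one of pytest's most powerful features.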

Source: https://habr.com/ru/post/448788/
