Python Testing with pytest. Getting started with pytest, Chapter 1

I found Python Testing with pytest to be an extremely useful introductory guide to the pytest testing framework. It is already paying dividends for me at my company.

Chris Shaver

VP of Product, Uprising Technology

The examples in this book are written using Python 3.6 and pytest 3.2. pytest 3.2 supports Python 2.6, 2.7 and Python 3.3+.

The source code for the Tasks project, as well as for all the tests shown in this book, is available through a link on the book's web page at pragprog.com. You do not need to download the source code to understand the test code; the test code is presented in usable form in the examples. But to follow along with the Tasks project, or to adapt the test examples to your own project (more power to you!), you should go to the book's web page and download the code. The same web page also has a link for reporting errata and a discussion forum.


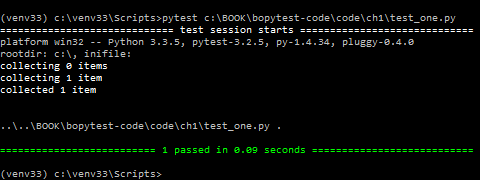

This is a test:

ch1/test_one.py

    def test_passing():
        assert (1, 2, 3) == (1, 2, 3)

Here is what it looks like when you run it:

    $ cd /path/to/code/ch1
    $ pytest test_one.py
    ===================== test session starts ======================
    collected 1 items

    test_one.py .

    =================== 1 passed in 0.01 seconds ===================

A dot after test_one.py means that one test was run and it passed. If you need more information, you can use -v or --verbose:

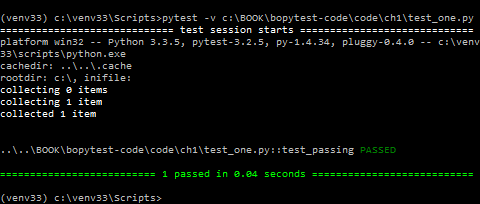

    $ pytest -v test_one.py
    ===================== test session starts ======================
    collected 1 items

    test_one.py::test_passing PASSED

    =================== 1 passed in 0.01 seconds ===================

If you have a color terminal, PASSED and the bottom line are green. Nice!

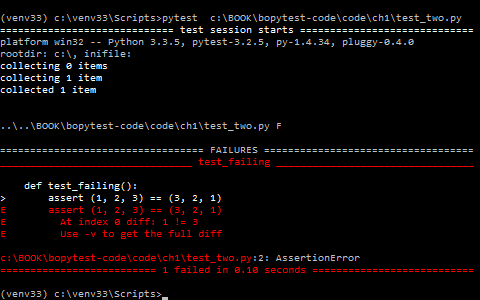

This is a failing test:

ch1/test_two.py

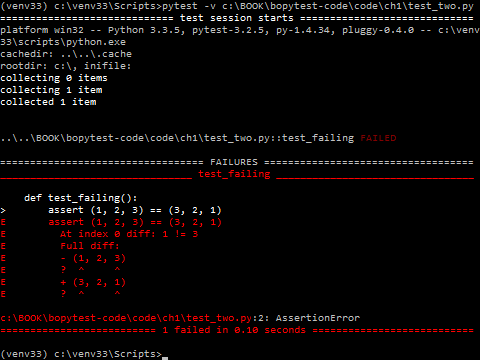

    def test_failing():
        assert (1, 2, 3) == (3, 2, 1)

The way pytest shows test failures is one of the many reasons developers love pytest. Let's watch this one fail:

    $ pytest test_two.py
    ===================== test session starts ======================
    collected 1 items

    test_two.py F

    =========================== FAILURES ===========================
    _________________________ test_failing _________________________

        def test_failing():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_two.py:2: AssertionError
    =================== 1 failed in 0.04 seconds ===================

Cool! The failing test, test_failing, gets its own section to show us why it failed.

pytest also reports exactly where the first mismatch is: index 0.

Much of this message is red, which makes it really stand out (if you have a color terminal).

That is already a lot of information, but there is a hint line that says Use -v to get the full diff.

Let's do that and use -v:

    $ pytest -v test_two.py
    ===================== test session starts ======================
    collected 1 items

    test_two.py::test_failing FAILED

    =========================== FAILURES ===========================
    _________________________ test_failing _________________________

        def test_failing():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Full diff:
    E         - (1, 2, 3)
    E         ?  ^     ^
    E         + (3, 2, 1)
    E         ?  ^     ^

    test_two.py:2: AssertionError
    =================== 1 failed in 0.04 seconds ===================

Wow!

pytest adds carets (^) to show us exactly which characters differ.

If you're already impressed with how easy it is to write, read, and run tests with pytest, and how easy it is to read the output to see where a failure happened, well, you haven't seen anything yet. There is a lot more where that came from. Stick around and let me show you why I think pytest is absolutely the best test framework available.

In the remainder of this chapter, you will install pytest, look at the different ways to run it, and try out some of the most frequently used command-line options. In later chapters, you will learn how to write test functions that make the most of pytest, how to pull setup code into setup and teardown sections called fixtures, and how to use fixtures and plugins to really supercharge your software testing.

But first, I have to apologize. I'm sorry that the test assert (1, 2, 3) == (3, 2, 1) is so boring. I can hear the snoring. No one would write a test like that in real life. Software tests are code that checks other software, and you are not always sure that software will work. But (1, 2, 3) == (1, 2, 3) will always work. That is why we will not use overly silly tests like this in the rest of the book. We will look at tests for a real software project. We will use an example project called Tasks that needs some test code. Hopefully it is simple enough to be easy to understand, but not so simple as to be boring.

Another useful application of software tests is to test your assumptions about how the software under test works, which can include testing your understanding of third-party modules and packages, and even built-in Python data structures.

The Tasks project uses a Task structure based on the namedtuple factory function, which is part of the standard library. The Task structure is used as a data structure for passing information between the user interface and the API.

In the rest of this chapter, I will use Task to demonstrate running pytest and using some commonly used command line parameters.

Here is Task:

    from collections import namedtuple

    Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])

The namedtuple() factory function has been around since Python 2.6, but I still find that many Python developers don't know how cool it is. At the very least, using Task for the test examples will be more interesting than (1, 2, 3) == (1, 2, 3) or (1, 2) == 3.
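To make the appeal of namedtuple a little more concrete, here is a minimal sketch. The sample values ('buy milk', 'brian', and so on) are invented for illustration; the point is only that fields can be read by name or by position, and that instances are immutable like any other tuple.

```python
from collections import namedtuple

Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])

# Sample values are invented for this illustration.
task = Task('buy milk', 'brian', False, 1)

# Fields are readable by name or by position.
by_name = task.owner
by_index = task[1]

# A namedtuple is still a tuple, so it unpacks like one.
summary, owner, done, task_id = task

# Instances are immutable: assigning to a field raises AttributeError.
try:
    task.done = True
    mutated = True
except AttributeError:
    mutated = False
```

Because a Task cannot be changed in place, producing a modified copy is done with _replace(), which the chapter exercises later.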

Before moving on to the examples, let's take a step back and talk about where to get pytest and how to install it.

Getting pytest

The home of pytest is https://docs.pytest.org. That is the official documentation. pytest itself is distributed through PyPI (the Python Package Index) at https://pypi.python.org/pypi/pytest.

As with other Python packages distributed through PyPI, use pip to install pytest into the virtual environment you use for testing:

    $ pip3 install -U virtualenv
    $ python3 -m virtualenv venv
    $ source venv/bin/activate
    $ pip install pytest

If you are not familiar with virtualenv or pip, I have you covered. See Appendix 1, “Virtual Environments,” on page 155 and Appendix 2, “pip,” on page 159.

What about Windows, Python 2, and venv?

The virtualenv and pip example should work on many POSIX systems, such as Linux and macOS, as well as on many versions of Python, including Python 2.7.9 and later.

The source venv/bin/activate line will not work on Windows; use venv\Scripts\activate.bat instead.

Do this:

    C:\> pip3 install -U virtualenv
    C:\> python3 -m virtualenv venv
    C:\> venv\Scripts\activate.bat
    (venv) C:\> pip install pytest

For Python 3.6 and higher, you can use venv instead of virtualenv, and you do not have to install it first because it is included with Python 3.6 and higher. However, I have heard that some platforms still behave better with virtualenv.

Running pytest

    $ pytest --help
    usage: pytest [options] [file_or_dir] [file_or_dir] [...]
    ...

Without arguments, pytest looks in your current directory and all subdirectories for test files and runs the test code it finds. If you give pytest a file name, a directory name, or a list of those, it looks there instead of the current directory. Each directory listed on the command line is searched recursively for test code.

For example, let's create a subdirectory called tasks and start with this test file:

ch1/tasks/test_three.py

    """Test the Task data type."""
    from collections import namedtuple

    Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])
    Task.__new__.__defaults__ = (None, None, False, None)


    def test_defaults():
        """Using no parameters should invoke defaults."""
        t1 = Task()
        t2 = Task(None, None, False, None)
        assert t1 == t2


    def test_member_access():
        """Check .field functionality of namedtuple."""
        t = Task('buy milk', 'brian')
        assert t.summary == 'buy milk'
        assert t.owner == 'brian'
        assert (t.done, t.id) == (False, None)

You can use __new__.__defaults__ to create Task objects without specifying all the fields. The test_defaults() test is there to demonstrate and check how the defaults work.

The test_member_access() test demonstrates how to access members by name and not by index, which is one of the main reasons to use namedtuples.
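As a side note, on Python 3.7 and later namedtuple() accepts a defaults argument that gives the same result as assigning to __new__.__defaults__. The examples in this book target Python 3.6, where that argument is not available, so treat the following only as a sketch of the alternative:

```python
from collections import namedtuple

# Requires Python 3.7+. Defaults are applied to the rightmost fields,
# so a four-item tuple covers all four fields here.
Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'],
                  defaults=(None, None, False, None))

t1 = Task()                     # every field takes its default
t2 = Task('buy milk', 'brian')  # done and id take their defaults
```

Either spelling lets the tests build Task objects without listing every field.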

Let's add a couple more tests to the second file to demonstrate the _asdict() and _replace() functions

ch1 / tasks / test_four.py

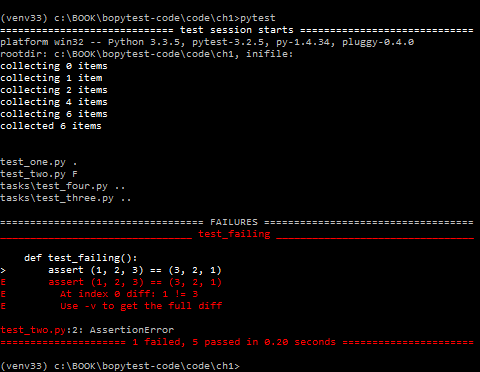

""" Task.""" from collections import namedtuple Task = namedtuple('Task', ['summary', 'owner', 'done', 'id']) Task.__new__.__defaults__ = (None, None, False, None) def test_asdict(): """_asdict() .""" t_task = Task('do something', 'okken', True, 21) t_dict = t_task._asdict() expected = {'summary': 'do something', 'owner': 'okken', 'done': True, 'id': 21} assert t_dict == expected def test_replace(): """ fields.""" t_before = Task('finish book', 'brian', False) t_after = t_before._replace(id=10, done=True) t_expected = Task('finish book', 'brian', True, 10) assert t_after == t_expected To run pytest you have the ability to specify files and directories. If you do not specify any files or directories, pytest will look for tests in the current working directory and subdirectories. It searches for files starting with test_ or ending with _test. If you run pytest from the ch1 directory, without commands, you will run tests for four files:

$ cd /path/to/code/ch1 $ pytest ===================== test session starts ====================== collected 6 items test_one.py . test_two.py F tasks/test_four.py .. tasks/test_three.py .. =========================== FAILURES =========================== _________________________ test_failing _________________________ def test_failing(): > assert (1, 2, 3) == (3, 2, 1) E assert (1, 2, 3) == (3, 2, 1) E At index 0 diff: 1 != 3 E Use -v to get the full diff test_two.py:2: AssertionError ============== 1 failed, 5 passed in 0.08 seconds ==============

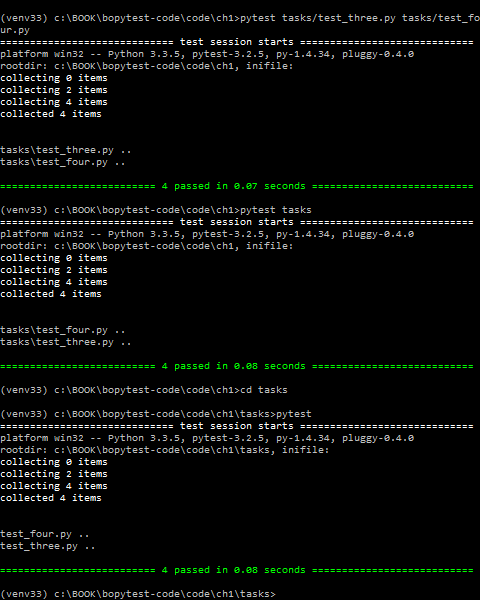

To run only our new Task tests, you can give pytest all the file names you want to run, give it the directory, or call pytest from the directory where the tests are located:

    $ pytest tasks/test_three.py tasks/test_four.py
    ===================== test session starts ======================
    collected 4 items

    tasks/test_three.py ..
    tasks/test_four.py ..

    =================== 4 passed in 0.02 seconds ===================

    $ pytest tasks
    ===================== test session starts ======================
    collected 4 items

    tasks/test_four.py ..
    tasks/test_three.py ..

    =================== 4 passed in 0.03 seconds ===================

    $ cd /path/to/code/ch1/tasks
    $ pytest
    ===================== test session starts ======================
    collected 4 items

    test_four.py ..
    test_three.py ..

    =================== 4 passed in 0.02 seconds ===================

The part of pytest execution where pytest goes off and finds which tests to run is called test discovery. pytest was able to find all the tests we wanted to run because we named them according to the pytest naming conventions.

Below is a brief overview of the naming conventions that let pytest discover your test code:

- Test files should be named test_<something>.py or <something>_test.py.

- Test methods and functions should be named test_<something>.

- Test classes should be named Test<Something>.

Since our test files and functions start with test_, we are fine. There are ways to change these discovery rules if you have a bunch of tests that are named differently.

I will cover this in Chapter 6, “Configuration,” on page 113.
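The naming rules above can be modelled in a few lines. This is only a rough sketch of the conventions as stated in this chapter, not pytest's actual collection code; the helper names (looks_like_test_file and so on) and the sample file name tasks_test.py are hypothetical.

```python
from fnmatch import fnmatchcase

# File name patterns from the conventions listed above.
FILE_PATTERNS = ('test_*.py', '*_test.py')


def looks_like_test_file(filename):
    """Return True if filename matches one of the file name patterns."""
    return any(fnmatchcase(filename, pattern) for pattern in FILE_PATTERNS)


def looks_like_test_function(name):
    """Return True for function or method names of the form test_<something>."""
    return name.startswith('test_')


def looks_like_test_class(name):
    """Return True for class names of the form Test<Something>."""
    return name.startswith('Test')


candidates = ['test_three.py', 'tasks_test.py', 'tasks.py', 'conftest.py']
discovered = [name for name in candidates if looks_like_test_file(name)]
```

With the sample list, only test_three.py and tasks_test.py are selected; a helper module such as tasks.py is ignored.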

Let's take a closer look at the result of running only one file:

    $ cd /path/to/code/ch1/tasks
    $ pytest test_three.py
    ================= test session starts ==================
    platform darwin -- Python 3.6.2, pytest-3.2.1, py-1.4.34, pluggy-0.4.0
    rootdir: /path/to/code/ch1/tasks, inifile:
    collected 2 items

    test_three.py ..

    =============== 2 passed in 0.01 seconds ===============

The output tells us quite a bit.

===== test session starts ====

pytest provides a nice delimiter for the start of the test session. A session is one invocation of pytest, including all of the tests run, possibly over several directories. This definition of a session becomes important when I talk about session scope in relation to pytest fixtures in Specifying Fixture Scope, on page 56.

platform darwin -- Python 3.6.2, pytest-3.2.1, py-1.4.34, pluggy-0.4.0

platform darwin is what I get on my Mac. On a Windows PC, the platform is different. Next come the versions of Python and pytest, followed by the packages pytest depends on. Both py and pluggy are packages developed by the pytest team to help with the implementation of pytest.

rootdir: /path/to/code/ch1/tasks, inifile:

rootdir is the topmost common directory of all the directories being searched for test code. The inifile (blank here) lists the configuration file being used. Configuration files can be pytest.ini, tox.ini, or setup.cfg. You can find more information about configuration files in Chapter 6, “Configuration,” on page 113.

collected 2 items

These are the two test functions in the file.

test_three.py ..

test_three.py shows the file being tested. There is one line for each test file. The two dots mean that the tests passed: one dot for each test function or method. Dots are used only for passing tests. Failures, errors, skips, xfails, and xpasses are denoted with F, E, s, x, and X, respectively. If you want to see more than dots for passing tests, use the -v or --verbose option.

== 2 passed in 0.01 seconds ==

This line reports the number of passed tests and the time spent on the entire test session. If there are non-passing tests, the number in each category is also listed here.

The outcome of a test is the primary way the person running a test or reviewing the results can understand what happened during the run. In pytest, test functions can have several different outcomes, not just pass or fail. Here are the possible outcomes of a test function:

- PASSED (.): The test ran successfully.

- FAILED (F): The test did not run successfully (or XPASS + strict).

- SKIPPED (s): The test was skipped. You can tell pytest to skip a test with the @pytest.mark.skip() or @pytest.mark.skipif() decorators, discussed in Skipping Tests, on page 34.

- xfail (x): The test was not supposed to pass, was run, and failed. You can tell pytest that a test is expected to fail with the @pytest.mark.xfail() decorator, discussed in Marking Tests as Expecting to Fail, on page 37.

- XPASS (X): The test was not supposed to pass, was run, and passed.

- ERROR (E): An exception occurred outside the test function, either in a fixture, discussed in Chapter 3, pytest Fixtures, on page 49, or in a hook function, discussed in Chapter 5, Plugins, on page 95.

Running Only One Test

Perhaps the first thing you will want to do once you have started writing tests is to run just one. Specify the file directly and append ::test_name:

    $ cd /path/to/code/ch1
    $ pytest -v tasks/test_four.py::test_asdict
    =================== test session starts ===================
    collected 3 items

    tasks/test_four.py::test_asdict PASSED

    ================ 1 passed in 0.01 seconds =================

Now let's look at some of the options.

Using Options

We have already used the verbose option, -v or --verbose, a couple of times, but there are many more options worth knowing about. We are not going to use all of them in this book, only some. You can see the full list with pytest --help.

Below are a few options that are quite useful when working with pytest. This is not a complete list, but these options are enough for the beginning.

    $ pytest --help
    usage: pytest [options] [file_or_dir] [file_or_dir] [...]
    ... subset of the list ...
    positional arguments:
      file_or_dir

    general:
      -k EXPRESSION         only run tests which match the given substring
                            expression. An expression is a python evaluatable
                            expression where all names are substring-matched
                            against test names and their parent classes.
                            Example: -k 'test_method or test_other' matches all
                            test functions and classes whose name contains
                            'test_method' or 'test_other', while -k 'not
                            test_method' matches those that don't contain
                            'test_method' in their names. Additionally keywords
                            are matched to classes and functions containing
                            extra names in their 'extra_keyword_matches' set, as
                            well as functions which have names assigned directly
                            to them.
      -m MARKEXPR           only run tests matching given mark expression.
                            example: -m 'mark1 and not mark2'.
      --markers             show markers (builtin, plugin and per-project ones).
      -x, --exitfirst       exit instantly on first error or failed test.
      --maxfail=num         exit after first num failures or errors.
      ...
      --capture=method      per-test capturing method: one of fd|sys|no.
      -s                    shortcut for --capture=no.
      ...
      --lf, --last-failed   rerun only the tests that failed at the last run (or
                            all if none failed)
      --ff, --failed-first  run all tests but run the last failures first. This
                            may re-order tests and thus lead to repeated fixture
                            setup/teardown
      ...

    reporting:
      -v, --verbose         increase verbosity.
      -q, --quiet           decrease verbosity.
      --verbosity=VERBOSE   set verbosity
      ...
      -l, --showlocals      show locals in tracebacks (disabled by default).
      --tb=style            traceback print mode (auto/long/short/line/native/no).
      ...
      --durations=N         show N slowest setup/test durations (N=0 for all).
      ...

    collection:
      --collect-only        only collect tests, don't execute them.
      ...

    test session debugging and configuration:
      --basetemp=dir        base temporary directory for this test run.(warning:
                            this directory is removed if it exists)
      --version             display pytest lib version and import information.
      -h, --help            show help message and configuration info

--collect-only

The --collect-only option shows which tests will be run with the given options and configuration. It is convenient to show this option first so that its output can serve as a reference for the other examples. If you start in the ch1 directory, you should see all the test functions you have looked at so far in this chapter:

    $ cd /path/to/code/ch1
    $ pytest --collect-only
    =================== test session starts ===================
    collected 6 items
    <Module 'test_one.py'>
      <Function 'test_passing'>
    <Module 'test_two.py'>
      <Function 'test_failing'>
    <Module 'tasks/test_four.py'>
      <Function 'test_asdict'>
      <Function 'test_replace'>
    <Module 'tasks/test_three.py'>
      <Function 'test_defaults'>
      <Function 'test_member_access'>

    ============== no tests ran in 0.03 seconds ===============

The --collect-only option is useful for checking, before you run anything, that other options which select tests are picking the right ones. We will use it again with -k to show how that works.

-k EXPRESSION

The -k option lets you use an expression to select which test functions to run.

It is a very powerful option! It can be used as a shortcut to run a single test if its name is unique, or to run a set of tests that share a prefix or suffix in their names. Suppose you want to run the tests test_asdict() and test_defaults(). You can check the filter with --collect-only:

    $ cd /path/to/code/ch1
    $ pytest -k "asdict or defaults" --collect-only
    =================== test session starts ===================
    collected 6 items
    <Module 'tasks/test_four.py'>
      <Function 'test_asdict'>
    <Module 'tasks/test_three.py'>
      <Function 'test_defaults'>

    =================== 4 tests deselected ====================
    ============== 4 deselected in 0.03 seconds ===============

Yep. That looks like what we want. Now you can run them by removing --collect-only:

    $ pytest -k "asdict or defaults"
    =================== test session starts ===================
    collected 6 items

    tasks/test_four.py .
    tasks/test_three.py .

    =================== 4 tests deselected ====================
    ========= 2 passed, 4 deselected in 0.03 seconds ==========

Hmm. Just dots. So they passed. But were they the right tests? One way to find out is to use -v or --verbose:

    $ pytest -v -k "asdict or defaults"
    =================== test session starts ===================
    collected 6 items

    tasks/test_four.py::test_asdict PASSED
    tasks/test_three.py::test_defaults PASSED

    =================== 4 tests deselected ====================
    ========= 2 passed, 4 deselected in 0.02 seconds ==========

Yep. Those were the right tests.
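The help text describes a -k expression as a Python expression in which every name is substring-matched against test names. The following toy model shows that idea in isolation. It is a simplified sketch, not pytest's implementation: the names SubstringMatcher and selected_by_k are hypothetical, it only looks at the function name (the real option also considers parent classes and extra keywords), and it uses eval(), which should never be fed untrusted input.

```python
class SubstringMatcher:
    """Mapping-like object: looking up a word asks 'is it in the test name?'."""

    def __init__(self, test_name):
        self._test_name = test_name

    def __getitem__(self, word):
        return word in self._test_name


def selected_by_k(expression, test_name):
    """Evaluate a -k style expression against a single test name."""
    # Every bare name in the expression is resolved through SubstringMatcher;
    # 'and', 'or', and 'not' keep their normal Python meaning.
    return bool(eval(expression, {'__builtins__': {}},
                     SubstringMatcher(test_name)))


test_names = ['test_passing', 'test_failing', 'test_asdict',
              'test_replace', 'test_defaults', 'test_member_access']
selected = [name for name in test_names
            if selected_by_k('asdict or defaults', name)]
```

For the six tests in this chapter, the expression "asdict or defaults" keeps test_asdict and test_defaults and deselects the other four, which matches the counts in the output above.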

-m MARKEXPR

Markers are one of the best ways to mark a subset of your test functions so that they can be run together. As an example, one way to run test_replace() and test_member_access(), even though they are in separate files, is to mark them. You can use any marker name. Say you want to use run_these_please. Mark the tests with the @pytest.mark.run_these_please decorator, like this:

    import pytest

    ...
    @pytest.mark.run_these_please
    def test_member_access():
    ...

Then do the same for test_replace(). You can then run all the tests with the same marker using pytest -m run_these_please:

    $ cd /path/to/code/ch1/tasks
    $ pytest -v -m run_these_please
    ================== test session starts ===================
    collected 4 items

    test_four.py::test_replace PASSED
    test_three.py::test_member_access PASSED

    =================== 2 tests deselected ===================
    ========= 2 passed, 2 deselected in 0.02 seconds =========

A marker expression does not have to be a single marker. You can use -m "mark1 and mark2" for tests with both markers, -m "mark1 and not mark2" for tests that have mark1 but not mark2, -m "mark1 or mark2" for tests with either, and so on. I will discuss markers in more detail in Marking Test Functions, on page 31.

-x, --exitfirst

pytest's normal behavior is to run every test it finds. If a test function hits a failing assert or an exception, execution of that test stops there and the test fails. pytest then runs the next test. Most of the time, this is what you want. However, especially when debugging a problem, stopping the entire test session as soon as a test fails is the right thing to do. That is what the -x option does. Let's try it on the six tests we have so far:

    $ cd /path/to/code/ch1
    $ pytest -x
    ====================== test session starts ====================
    collected 6 items

    test_one.py .
    test_two.py F

    ============================ FAILURES =========================
    __________________________ test_failing _______________________

        def test_failing():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_two.py:2: AssertionError
    =============== 1 failed, 1 passed in 0.38 seconds ============

Near the top of the output you can see that all six tests (or “items”) were collected, and on the bottom line you can see that one test failed and one passed; pytest also displays an “Interrupted” line to let us know that it stopped. Without -x, all six tests would have run. Let's run it again without -x. We will also use --tb=no to turn off the stack trace, since you have already seen it and do not need to see it again:

    $ cd /path/to/code/ch1
    $ pytest --tb=no
    =================== test session starts ===================
    collected 6 items

    test_one.py .
    test_two.py F
    tasks/test_four.py ..
    tasks/test_three.py ..

    =========== 1 failed, 5 passed in 0.09 seconds ============

This shows that without -x, pytest notes the failure in test_two.py and carries on with the remaining tests.

--maxfail=num

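The -x option stops after the first test failure. If you want to allow some failures, but not too many, use the --maxfail option to specify how many failures are acceptable. It is hard to demonstrate this with only one failing test in our project so far, but let's look anyway. Since there is only one failing test, with --maxfail=2 all of the tests should run, and --maxfail=1 should behave just like -x:

    $ cd /path/to/code/ch1
    $ pytest --maxfail=2 --tb=no
    =================== test session starts ===================
    collected 6 items

    test_one.py .
    test_two.py F
    tasks/test_four.py ..
    tasks/test_three.py ..

    =========== 1 failed, 5 passed in 0.08 seconds ============

    $ pytest --maxfail=1 --tb=no
    =================== test session starts ===================
    collected 6 items

    test_one.py .
    test_two.py F

    !!!!!!!!! Interrupted: stopping after 1 failures !!!!!!!!!!
    =========== 1 failed, 1 passed in 0.19 seconds ============

Again, we used --tb=no to turn off the traceback.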

-s and --capture=method

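The -s flag lets print statements, or really any output that would normally go to stdout, actually reach stdout while the tests are running. It is a shortcut for --capture=no. This makes sense once you know that output is normally captured for every test. For failing tests, the captured output is reported after the test runs, on the assumption that it will help you understand what went wrong. The -s or --capture=no option turns output capture off. When developing tests, I find it useful to add a few print() statements so that I can watch the flow of the test.

Another option that may save you from adding print statements to your code is -l/--showlocals, which prints the local variables of a test if the test fails.

The other capture methods are --capture=fd and --capture=sys. The --capture=sys option replaces sys.stdout/stderr with in-memory files. The --capture=fd option points file descriptors 1 and 2 to a temporary file.

I am including the descriptions of sys and fd for completeness. To be honest, I have never needed or used either of them. I use -s often, and to describe fully how -s works, I had to touch on the capture methods.

We do not have any print statements in our tests yet, so a demonstration would be pointless. However, I encourage you to play with this a bit so that you see it in action.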
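To get a feel for what capturing at the sys.stdout level means, here is a small standard-library sketch. It is not pytest's capture machinery: contextlib.redirect_stdout merely stands in for it, and the function name noisy_test is made up for the example.

```python
import contextlib
import io


def noisy_test():
    """A passing test that prints while it runs."""
    print('setting up')
    assert 1 + 1 == 2
    print('done')


# Swap sys.stdout for an in-memory file while the test runs, roughly the
# idea behind --capture=sys. Nothing appears on the terminal.
buffer = io.StringIO()
with contextlib.redirect_stdout(buffer):
    noisy_test()

captured = buffer.getvalue()
```

With capture turned off (-s), the two lines would instead appear on the terminal as the test runs.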

--lf, --last-failed

When one or more tests fail, a convenient way to run only the failing tests helps with debugging. Just use --lf and you are ready to debug:

This is especially handy if you have been using a --tb option that hides some information and you want to re-run the failures with a different traceback option.

    $ cd /path/to/code/ch1
    $ pytest --lf
    =================== test session starts ===================
    run-last-failure: rerun last 1 failures
    collected 6 items

    test_two.py F

    ======================== FAILURES =========================
    ______________________ test_failing _______________________

        def test_failing():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Use -v to get the full diff

    test_two.py:2: AssertionError
    =================== 5 tests deselected ====================
    ========= 1 failed, 5 deselected in 0.08 seconds ==========

--ff, --failed-first

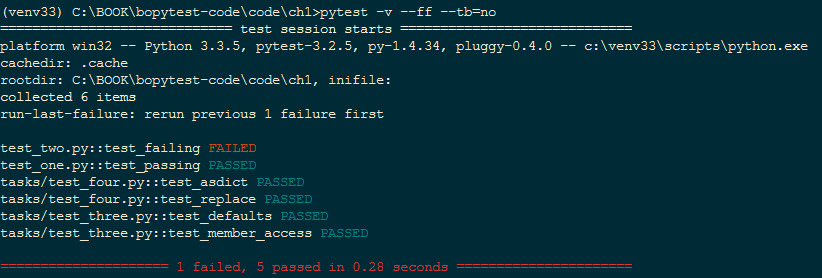

The --ff/--failed-first option does the same as --last-failed, and then runs the rest of the tests that passed last time:

    $ cd /path/to/code/ch1
    $ pytest --ff --tb=no
    =================== test session starts ===================
    run-last-failure: rerun last 1 failures first
    collected 6 items

    test_two.py F
    test_one.py .
    tasks/test_four.py ..
    tasks/test_three.py ..

    =========== 1 failed, 5 passed in 0.09 seconds ============

Normally, test_failing() from test_two.py runs after test_one.py. However, because test_failing() failed last time, --ff causes it to run first.

-v, --verbose

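The -v/--verbose option reports more information than you get without it. The most obvious difference is that each test gets its own line, and the test name and outcome are spelled out instead of being shown as just a dot.

We have used it quite a bit already, but let's run it once more together with --ff and --tb=no:

    $ cd /path/to/code/ch1
    $ pytest -v --ff --tb=no
    =================== test session starts ===================
    run-last-failure: rerun last 1 failures first
    collected 6 items

    test_two.py::test_failing FAILED
    test_one.py::test_passing PASSED
    tasks/test_four.py::test_asdict PASSED
    tasks/test_four.py::test_replace PASSED
    tasks/test_three.py::test_defaults PASSED
    tasks/test_three.py::test_member_access PASSED

    =========== 1 failed, 5 passed in 0.07 seconds ============

On a color terminal, you would see FAILED in red and PASSED in green.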

-q, --quiet

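The -q/--quiet option is the opposite of -v/--verbose; it reduces the amount of information reported. I like to use it together with --tb=line, which reports only the failing line of any failing test.

Let's try -q on its own:

    $ cd /path/to/code/ch1
    $ pytest -q
    .F....
    ======================== FAILURES =========================
    ______________________ test_failing _______________________

        def test_failing():
    >       assert (1, 2, 3) == (3, 2, 1)
    E       assert (1, 2, 3) == (3, 2, 1)
    E         At index 0 diff: 1 != 3
    E         Full diff:
    E         - (1, 2, 3)
    E         ?  ^     ^
    E         + (3, 2, 1)
    E         ?  ^     ^

    test_two.py:2: AssertionError
    1 failed, 5 passed in 0.08 seconds

The -q option makes the output quite terse, but it is usually enough. We will use -q often in the rest of the book (along with --tb=no) to limit the output to what we are specifically trying to understand in the examples.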

-l, --showlocals

With the -l/--showlocals option, local variables and their values are displayed along with the tracebacks of failing tests.

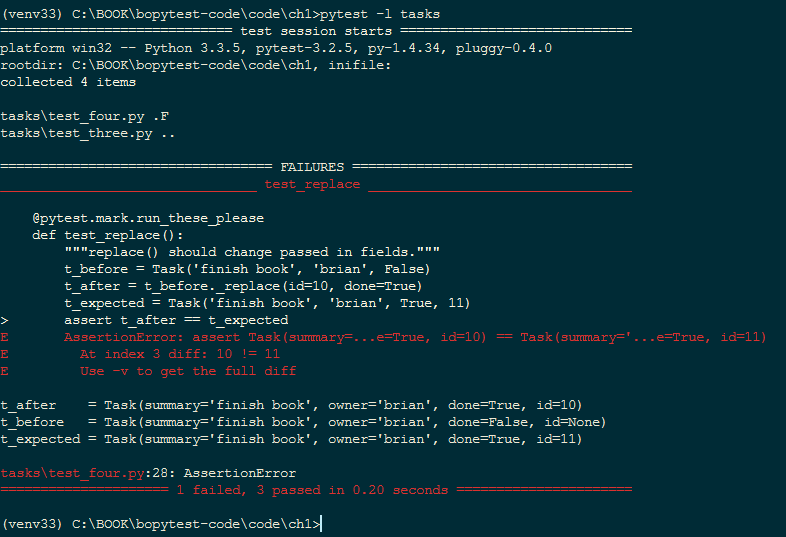

So far we do not have any failing tests that use local variables. If I take the test_replace() test and change

    t_expected = Task('finish book', 'brian', True, 10)

to

    t_expected = Task('finish book', 'brian', True, 11)

the 10 and the 11 should cause a failure. Any change to the expected value would do. It is enough to demonstrate the -l/--showlocals command-line option:

    $ cd /path/to/code/ch1
    $ pytest -l tasks
    =================== test session starts ===================
    collected 4 items

    tasks/test_four.py .F
    tasks/test_three.py ..

    ======================== FAILURES =========================
    ______________________ test_replace _______________________

        @pytest.mark.run_these_please
        def test_replace():
            """replace() should change passed in fields."""
            t_before = Task('finish book', 'brian', False)
            t_after = t_before._replace(id=10, done=True)
            t_expected = Task('finish book', 'brian', True, 11)
    >       assert t_after == t_expected
    E       AssertionError: assert Task(summary=...e=True, id=10) == Task(summary='...e=True, id=11)
    E         At index 3 diff: 10 != 11
    E         Use -v to get the full diff

    t_after    = Task(summary='finish book', owner='brian', done=True, id=10)
    t_before   = Task(summary='finish book', owner='brian', done=False, id=None)
    t_expected = Task(summary='finish book', owner='brian', done=True, id=11)

    tasks\test_four.py:28: AssertionError
    =========== 1 failed, 3 passed in 0.08 seconds ============

The local variables t_after, t_before, and t_expected are shown after the code snippet, with the values they held at the time of the failed assert.
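For the curious, the values that a local-variable report like this relies on are available from the traceback of the failing frame in plain Python. The sketch below is only an illustration of that idea using the standard library, not a description of how pytest implements -l; the function name check_replace is hypothetical.

```python
import sys
from collections import namedtuple

Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])


def check_replace():
    """Same steps as the modified test_replace(), failing on purpose."""
    t_before = Task('finish book', 'brian', False, None)
    t_after = t_before._replace(id=10, done=True)
    t_expected = Task('finish book', 'brian', True, 11)
    assert t_after == t_expected


try:
    check_replace()
except AssertionError:
    # Walk to the innermost traceback entry: the frame where the assert failed.
    tb = sys.exc_info()[2]
    while tb.tb_next is not None:
        tb = tb.tb_next
    failing_locals = dict(tb.tb_frame.f_locals)
```

failing_locals then holds t_before, t_after, and t_expected with the values they had when the assert failed.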

--tb=style

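The --tb=style option changes how tracebacks for failures are printed. When a test fails, pytest lists the failures along with what is called a traceback, which shows you the exact line where the failure occurred. Tracebacks are helpful most of the time, but there may be times when they get in the way. That is where the --tb=style option comes in. The styles I find useful are short, line, and no. short prints only the assert line and the E evaluated line, with no context; line keeps each failure to a single line; no removes the traceback entirely.

Let's keep the modification to test_replace() that makes it fail, and run it with different traceback styles. --tb=no removes the traceback entirely:

    $ cd /path/to/code/ch1
    $ pytest --tb=no tasks
    =================== test session starts ===================
    collected 4 items

    tasks/test_four.py .F
    tasks/test_three.py ..

    =========== 1 failed, 3 passed in 0.04 seconds ============

--tb=line is in many cases enough to tell what is wrong. If you have a ton of failing tests, this option can help reveal a pattern in the failures:

    $ pytest --tb=line tasks
    =================== test session starts ===================
    collected 4 items

    tasks/test_four.py .F
    tasks/test_three.py ..

    ======================== FAILURES =========================
    /path/to/code/ch1/tasks/test_four.py:20:
    AssertionError: assert Task(summary=...e=True, id=10) == Task(
    summary='...e=True, id=11)
    =========== 1 failed, 3 passed in 0.05 seconds ============

The next step up in verbose tracebacks is --tb=short:

    $ pytest --tb=short tasks
    =================== test session starts ===================
    collected 4 items

    tasks/test_four.py .F
    tasks/test_three.py ..

    ======================== FAILURES =========================
    ______________________ test_replace _______________________
    tasks/test_four.py:20: in test_replace
        assert t_after == t_expected
    E   AssertionError: assert Task(summary=...e=True, id=10) == Task(
    summary='...e=True, id=11)
    E     At index 3 diff: 10 != 11
    E     Use -v to get the full diff
    =========== 1 failed, 3 passed in 0.04 seconds ============

That is definitely enough to tell you what is going on.

There are three remaining traceback styles that we have not covered. pytest --tb=long shows the most exhaustive, informative traceback possible. pytest --tb=auto shows the long version for the first and last tracebacks if you have multiple failures; this is the default behavior. pytest --tb=native shows the standard library traceback without any extra information.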

--durations=N

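The --durations=N option is very helpful when you are trying to speed up your test suite. It does not change how your tests are run; it reports the N slowest tests/setups/teardowns after the tests have run. If you pass --durations=0, it reports everything, ordered from slowest to fastest.

None of our tests are slow, so I will add a time.sleep(0.1) to one of them. Guess which one:

    $ cd /path/to/code/ch1
    $ pytest --durations=3 tasks
    ================= test session starts =================
    collected 4 items

    tasks/test_four.py ..
    tasks/test_three.py ..

    ============== slowest 3 test durations ===============
    0.10s call     tasks/test_four.py::test_replace
    0.00s setup    tasks/test_three.py::test_defaults
    0.00s teardown tasks/test_three.py::test_member_access
    ============== 4 passed in 0.13 seconds

The slow test with the extra sleep shows up right away with the label call, followed by setup and teardown entries. Every test essentially has three phases: call, setup, and teardown. Setup and teardown are also called fixtures, and they give you a place to add code that gets data or the system under test into a known state before the test runs, and to clean up afterwards if necessary. I cover fixtures in depth in Chapter 3, pytest Fixtures, on page 49.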
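The modified test itself is not shown, only the report, which points at test_replace. A plausible version looks like the sketch below; where exactly the sleep sits inside the test is an assumption, and the timing code at the end is only a rough stand-in for the call-phase measurement, not what pytest does internally.

```python
import time
from collections import namedtuple

Task = namedtuple('Task', ['summary', 'owner', 'done', 'id'])
Task.__new__.__defaults__ = (None, None, False, None)


def test_replace():
    """replace() should change passed in fields."""
    time.sleep(0.1)  # artificial delay so the test stands out in --durations
    t_before = Task('finish book', 'brian', False)
    t_after = t_before._replace(id=10, done=True)
    t_expected = Task('finish book', 'brian', True, 10)
    assert t_after == t_expected


# Time the call phase by hand, outside of pytest.
start = time.perf_counter()
test_replace()
elapsed = time.perf_counter() - start
```

The elapsed time is dominated by the sleep, which is why the call phase of test_replace tops the durations report.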

--version

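The --version option shows the version of pytest and the directory where it is installed:

    $ pytest --version
    This is pytest version 3.0.7, imported from
    /path/to/venv/lib/python3.5/site-packages/pytest.py

Since we installed pytest into a virtual environment, pytest lives in the site-packages directory of that virtual environment.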

-h, --help

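The -h/--help option is quite helpful, even after you are used to pytest. Not only does it show how to use stock pytest, it also expands as you install plugins to show the options and configuration variables they add.

The -h option shows:

- usage: pytest [options] [file_or_dir] [file_or_dir] [...]

- Command-line options with a short description, including options added by plugins

- A list of options available to ini-style configuration files, which I discuss in Chapter 6, Configuration, on page 113

- A list of environment variables that can affect pytest behavior (also discussed in Chapter 6, Configuration, on page 113)

- A reminder that pytest --markers can be used to see the available markers, discussed in Chapter 2, Writing Test Functions, on page 23

- A reminder that pytest --fixtures can be used to see the available fixtures, discussed in Chapter 3, pytest Fixtures, on page 49

The last piece of information in the help text is this note:

(shown according to specified file_or_dir or current dir if not specified)

This note is important because the options, markers, and fixtures can change depending on which directory or test file you run. Along the path to the specified file or directory, pytest may find conftest.py files, which can include hook functions that create new options, as well as fixture definitions and marker definitions.

The ability to customize pytest behavior in conftest.py files and test files allows behavior that is local to a project, or even to a subset of a project's tests. You will learn about conftest.py and ini files such as pytest.ini in Chapter 6, “Configuration,” on page 113.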

Exercises

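Create a new virtual environment using python -m virtualenv or python -m venv. Even if you know you do not need virtual environments for the project you are working on, humor me and learn enough about them to create one for trying things out in this book. I resisted using them for a very long time, and now I always use them. Read Appendix 1, Virtual Environments, on page 155 if you have any difficulty.

Practice activating and deactivating your virtual environment a few times:

- $ source venv/bin/activate

- $ deactivate

On Windows:

- C:\Users\okken\sandbox>venv\scripts\activate.bat

- C:\Users\okken\sandbox>deactivate

Install pytest in your new virtual environment. See Appendix 2, pip, on page 159 if you have any trouble. Even if you thought you already had pytest installed, you need to install it into the virtual environment you just created.

Create a few test files. You can use the ones from this chapter or make up your own. Practice running pytest against these files.

Change the assert statements. Do not just use assert something == something_else; try things like: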

- assert 1 in [2, 3, 4]

- assert a < b

- assert 'fizz' not in 'fizzbuzz'

What's next

In this chapter, we looked at where to get pytest and the various ways to start it. However, we did not discuss what is included in the test functions. In the next chapter, we will look at writing test functions, parameterizing them so that they are called with different data, and grouping tests into classes, modules, and packages.


Source: https://habr.com/ru/post/448782/
