Benchmark results of Kubernetes network plugins (CNI) over a 10 Gbps network (updated: April 2019)

This is an update of my previous benchmark, now run on Kubernetes 1.14 with the CNI versions current as of April 2019.

First, I want to thank the Cilium team: they helped me review and fix the metrics-monitoring scripts.

What has changed since November 2018

Here is what has changed (if you're interested):

Flannel remains the fastest and simplest CNI, but it still does not support network policies or encryption.

Romana is no longer supported, so we removed it from the benchmark.

WeaveNet now supports network policies for Ingress and Egress! But its performance has declined.

Calico still requires manual tuning of the maximum packet size (MTU) for best performance. Calico offers two options for installing the CNI that let you do without a separate etcd datastore:

- storing state in the Kubernetes API as the datastore (clusters of fewer than 50 nodes);

- storing state in the Kubernetes API as the datastore, with a Typha proxy to offload the Kubernetes API (clusters of more than 50 nodes).
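The two options above boil down to a node-count threshold. A minimal Python sketch, assuming the ~50-node boundary from the text (the text does not say which side exactly 50 nodes falls on, so the sketch treats 50 as "small"); the dictionary keys are hypothetical labels, not Calico settings:

```python
# Minimal sketch of the Calico datastore choice described above.
# The ~50-node threshold comes from the article; the dictionary keys are
# hypothetical labels only, not real Calico configuration options.


def calico_datastore_plan(node_count: int) -> dict:
    """Return how Calico state would be stored for a cluster of `node_count` nodes."""
    if node_count < 1:
        raise ValueError("node_count must be at least 1")
    return {
        # Both options keep Calico state in the Kubernetes API (no separate etcd).
        "datastore": "kubernetes",
        # Larger clusters add the Typha proxy to offload the Kubernetes API server.
        "typha_proxy": node_count > 50,
    }


if __name__ == "__main__":
    for nodes in (3, 50, 200):
        print(nodes, calico_datastore_plan(nodes))
```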

Calico has announced support for application-layer policy on top of Istio for application security.

Cilium now supports encryption! Cilium provides encryption with IPsec tunnels, offering an alternative to WeaveNet's encrypted network. However, WeaveNet is faster than Cilium when encryption is enabled.

Cilium is now easier to deploy, thanks to the built-in etcd operator.

The Cilium team has worked to slim down its CNI, reducing memory consumption and CPU cost, but competitors are still lighter.

Benchmark context

The benchmark runs on three bare-metal (non-virtualized) Supermicro servers connected through a Supermicro 10 Gbps switch. The servers are connected directly to the switch with passive DAC SFP+ cables and are configured in the same VLAN with jumbo frames (MTU 9000).

Kubernetes 1.14.0 is installed on Ubuntu 18.04 LTS with Docker 18.09.2 (the default Docker version in this release).

To improve reproducibility, we always set up the master on the first node, run the server side of the benchmark on the second node, and the client side on the third. To enforce this placement, we use nodeSelector in the Kubernetes deployments.
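A minimal sketch of that placement, written as a Python script that emits Deployment manifests as JSON. It assumes nodes are selected by the standard `kubernetes.io/hostname` label; the node names (`node2`, `node3`), the image `example/iperf3:latest` and the `SERVER_IP` placeholder are hypothetical, not taken from the benchmark scripts:

```python
import json

# Well-known label that the kubelet sets on every node.
HOSTNAME_LABEL = "kubernetes.io/hostname"


def pinned_deployment(name: str, image: str, node: str, args: list) -> dict:
    """Build a one-replica Deployment whose pod can only be scheduled on `node`."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name},
        "spec": {
            "replicas": 1,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    # nodeSelector restricts scheduling to nodes carrying this label value.
                    "nodeSelector": {HOSTNAME_LABEL: node},
                    "containers": [{"name": name, "image": image, "args": args}],
                },
            },
        },
    }


if __name__ == "__main__":
    # Hypothetical image and node names: the master is node1, so the server goes
    # to node2 and the client to node3. SERVER_IP is a placeholder.
    manifests = {
        "apiVersion": "v1",
        "kind": "List",
        "items": [
            pinned_deployment("bench-server", "example/iperf3:latest", "node2", ["-s"]),
            pinned_deployment("bench-client", "example/iperf3:latest", "node3",
                              ["-c", "SERVER_IP"]),
        ],
    }
    print(json.dumps(manifests, indent=2))
```

The output can be piped to `kubectl apply -f -`. Matching on `kubernetes.io/hostname` avoids adding custom node labels, at the cost of hard-coding node names.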

The benchmark results will be described on the following scale:

CNI choice for benchmark

This benchmark covers only the CNIs listed in the section of the official Kubernetes documentation on creating a single-master cluster with kubeadm. Of the 9 CNIs listed, we take only 6, excluding those that are difficult to install and/or do not work out of the box when following the documentation (Romana, Contiv-VPP and JuniperContrail/TungstenFabric).

We will compare the following CNIs:

- Calico v3.6

- Canal v3.6 (in fact, this is Flannel for networking + Calico as a firewall)

- Cilium 1.4.2

- Flannel 0.11.0

- Kube-router 0.2.5

- WeaveNet 2.5.1

Installation

The easier a CNI is to install, the better our first impression. All CNIs in this benchmark are very easy to install (one or two commands).

As mentioned, the servers and the switch are configured with jumbo frames enabled (MTU 9000). We would like the CNI to determine the MTU automatically from the adapters' settings. However, only Cilium and Flannel managed this. The other CNIs have open GitHub issues requesting automatic MTU discovery, but for now we configure it manually by editing the ConfigMap for Calico, Canal and Kube-router, or by passing an environment variable for WeaveNet.
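To make the manual MTU setting concrete: a tunnelling CNI has to subtract its encapsulation header from the host MTU. A minimal Python sketch, assuming typical IPv4 overheads (20 bytes for IP-in-IP, 50 for VXLAN) and assuming the Calico 3.x `calico-config` ConfigMap exposes the pod MTU as `veth_mtu`; check both against the manifests you actually deploy:

```python
import json

# Typical IPv4 encapsulation overheads in bytes. These are assumptions for
# illustration; check which encapsulation your CNI is actually configured to use.
ENCAP_OVERHEAD = {
    "none": 0,    # plain routing, no tunnel header
    "ipip": 20,   # one extra outer IPv4 header
    "vxlan": 50,  # outer Ethernet (14) + IPv4 (20) + UDP (8) + VXLAN (8)
}


def pod_mtu(host_mtu: int, encapsulation: str) -> int:
    """MTU for pod interfaces so that encapsulated packets still fit the host MTU."""
    mtu = host_mtu - ENCAP_OVERHEAD[encapsulation]
    if mtu <= 0:
        raise ValueError("host MTU too small for this encapsulation")
    return mtu


def calico_mtu_patch(host_mtu: int, encapsulation: str = "ipip") -> dict:
    """Merge-patch body for the calico-config ConfigMap.

    The `veth_mtu` key is assumed from the Calico 3.x manifests; verify it
    against the manifest you actually deployed.
    """
    return {"data": {"veth_mtu": str(pod_mtu(host_mtu, encapsulation))}}


if __name__ == "__main__":
    print(json.dumps(calico_mtu_patch(9000)))  # {"data": {"veth_mtu": "8980"}}
```

Assuming the script is saved as `calico_mtu.py` (a hypothetical name), it could be applied with `kubectl -n kube-system patch configmap calico-config --type merge -p "$(python3 calico_mtu.py)"`. The calico-node pods would then likely need a restart to regenerate the CNI configuration, and existing pods may keep the old MTU until they are recreated.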

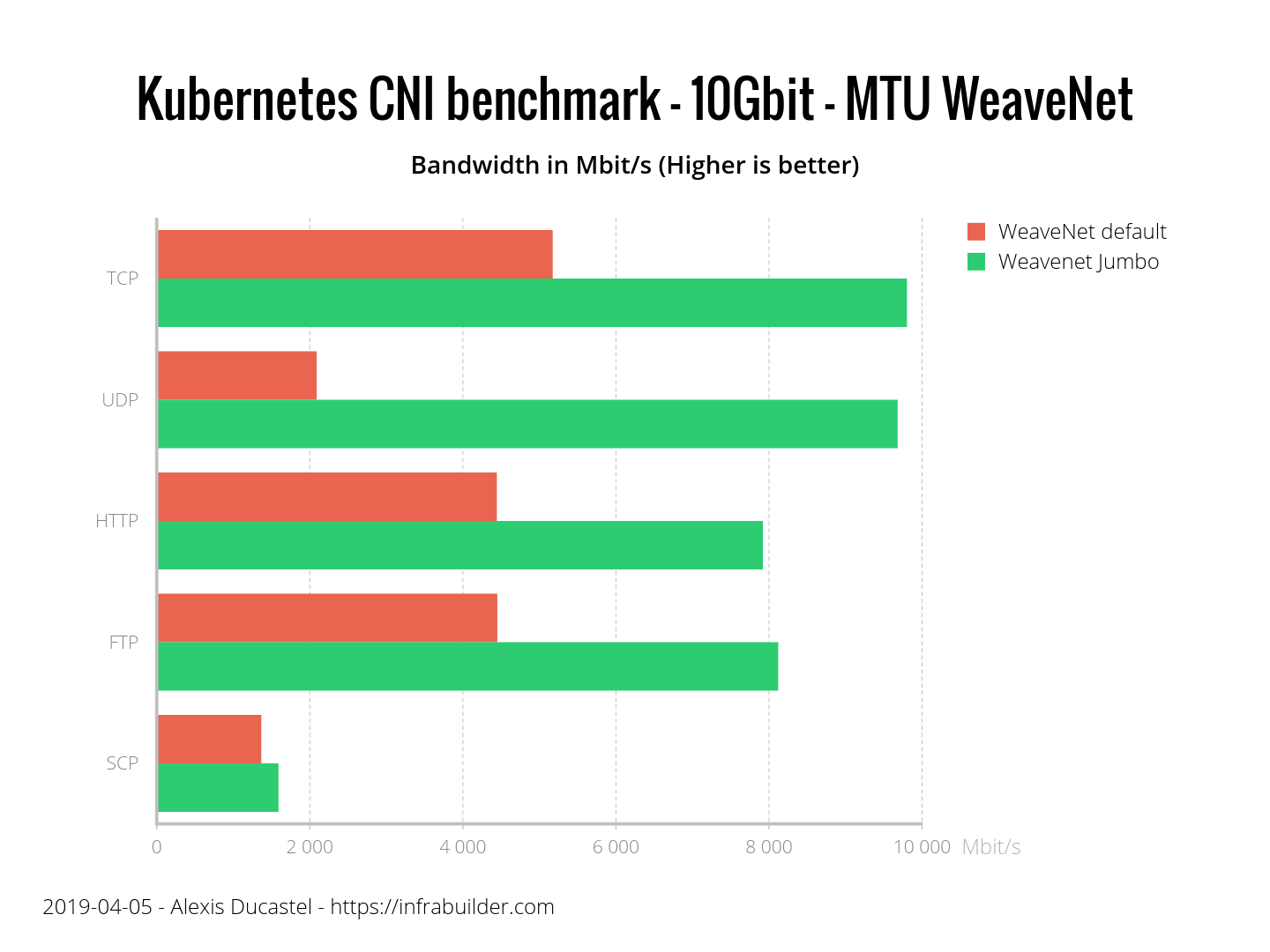

What is the problem with an incorrect MTU? This diagram shows the difference in WeaveNet throughput between the default MTU and jumbo frames enabled:

How the MTU parameter affects bandwidth
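Part of the reason MTU matters is simple arithmetic. The sketch below, assuming standard Ethernet framing overhead (38 bytes per frame including preamble and inter-frame gap) and 40 bytes of IPv4+TCP headers without options, computes the full-size frame rate and the ideal TCP goodput on a 10 Gbps link for a 1500-byte and a 9000-byte MTU:

```python
# Bytes that every Ethernet frame costs on the wire besides the IP packet (MTU).
ETH_HEADER = 14
ETH_FCS = 4
PREAMBLE_SFD = 8
INTERFRAME_GAP = 12
WIRE_OVERHEAD = ETH_HEADER + ETH_FCS + PREAMBLE_SFD + INTERFRAME_GAP  # 38 bytes

# IPv4 (20) + TCP (20) headers, assuming no IP or TCP options.
TCP_IP_HEADERS = 40


def line_rate_stats(mtu: int, link_bps: float = 10e9) -> tuple:
    """Return (full-size frames per second, ideal TCP goodput in bit/s) at line rate."""
    frame_bits = (mtu + WIRE_OVERHEAD) * 8
    frames_per_s = link_bps / frame_bits
    goodput_bps = frames_per_s * (mtu - TCP_IP_HEADERS) * 8
    return frames_per_s, goodput_bps


if __name__ == "__main__":
    for mtu in (1500, 9000):
        fps, goodput = line_rate_stats(mtu)
        print(f"MTU {mtu}: {fps:,.0f} frames/s, TCP goodput <= {goodput / 1e9:.2f} Gbit/s")
```

It prints about 812,744 frames/s and 9.49 Gbit/s for MTU 1500 versus 138,305 frames/s and 9.91 Gbit/s for MTU 9000. Header bytes alone cost less than 5%, but the smaller MTU means roughly 5.9 times as many packets per second to encapsulate, filter and route, so per-packet processing is a plausible (not measured here) explanation for most of the gap.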

Now that we have seen how important the MTU is for performance, let's see which of our CNIs determine it automatically:

Automatic MTU detection by each CNI

The graph shows that you need to configure the MTU for Calico, Canal, Kube-router and WeaveNet for optimal performance. Cilium and Flannel determined the MTU correctly on their own, without any configuration.

Security

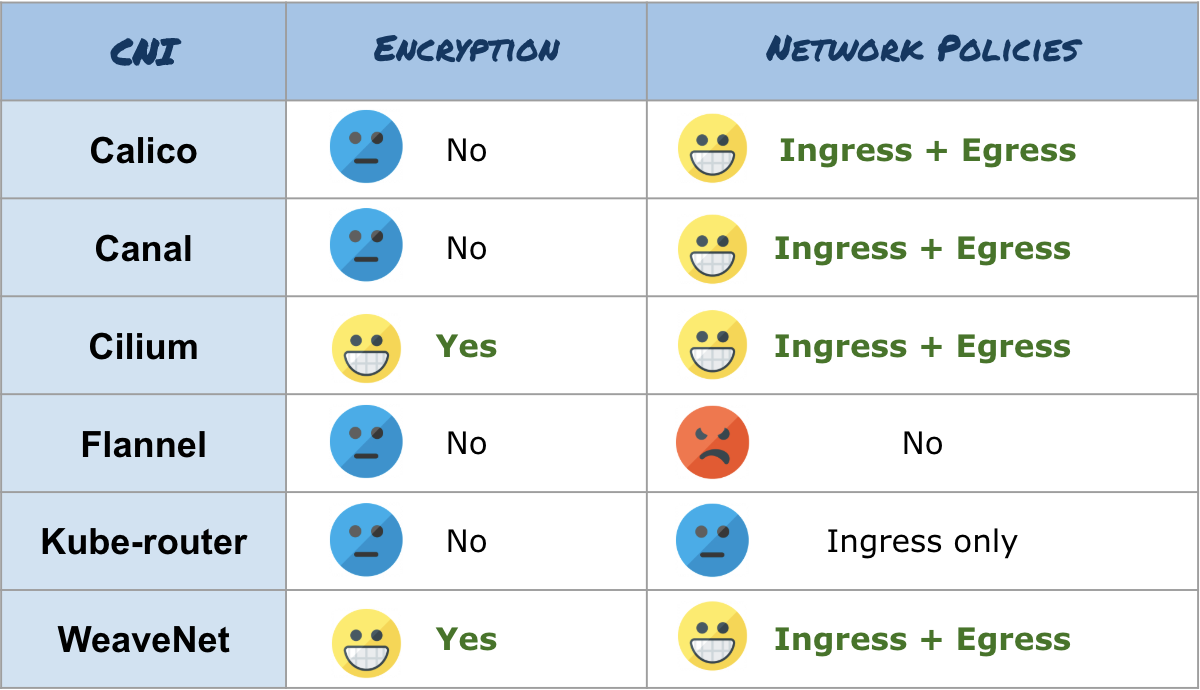

We compare the security of the CNIs in two respects: the ability to encrypt transmitted data, and the implementation of Kubernetes network policies (verified in real tests, not taken from the documentation).

Only two CNIs encrypt data: Cilium and WeaveNet. WeaveNet encryption is enabled by setting an encryption password as an environment variable of the CNI. The WeaveNet documentation makes this sound complicated, but it is actually simple. Cilium encryption is configured with commands, by creating Kubernetes secrets and modifying the DaemonSet (slightly more complicated than WeaveNet, but Cilium has step-by-step instructions).
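A minimal sketch of the WeaveNet side in Python: it merges `WEAVE_MTU` and `WEAVE_PASSWORD` into one container of a DaemonSet manifest loaded as a dict. The two variable names are the ones Weave Net documents; the container name `weave`, the Secret `weave-passwd` and the MTU value are assumptions to adapt to your own manifest:

```python
def set_container_env(daemonset: dict, container: str, env: list) -> dict:
    """Add or replace environment variables on one container of a DaemonSet (in place)."""
    for c in daemonset["spec"]["template"]["spec"]["containers"]:
        if c["name"] == container:
            merged = {e["name"]: e for e in c.get("env", [])}
            for e in env:
                merged[e["name"]] = e  # new entries replace existing ones by name
            c["env"] = list(merged.values())
            return daemonset
    raise KeyError(f"container {container!r} not found")


WEAVE_ENV = [
    # Placeholder value: derive it from your host MTU minus Weave's overhead.
    {"name": "WEAVE_MTU", "value": "8900"},
    # Read the encryption password from a Secret instead of hard-coding it.
    {"name": "WEAVE_PASSWORD",
     "valueFrom": {"secretKeyRef": {"name": "weave-passwd", "key": "weave-passwd"}}},
]


if __name__ == "__main__":
    # Trimmed-down, hypothetical stand-in for the weave-net DaemonSet manifest.
    ds = {"spec": {"template": {"spec": {"containers": [
        {"name": "weave", "env": [{"name": "EXAMPLE_VAR", "value": "1"}]},
        {"name": "weave-npc"},
    ]}}}}
    set_container_env(ds, "weave", WEAVE_ENV)
    print([e["name"] for e in ds["spec"]["template"]["spec"]["containers"][0]["env"]])
```

Keeping the password in a Secret (created with something like `kubectl create secret -n kube-system generic weave-passwd --from-file=./weave-passwd`) avoids writing it into the manifest in plain text.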

As for network policy, Calico, Canal, Cilium and WeaveNet implement it successfully: with them you can configure both Ingress and Egress rules. Kube-router supports only Ingress rules, and Flannel has no network policies at all.
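To illustrate what "Ingress and Egress rules" means in practice, here is a sketch of a standard `networking.k8s.io/v1` NetworkPolicy, built as a Python dict and printed as JSON. The labels `app=web`, `app=frontend`, `app=db` and the ports are hypothetical:

```python
import json


def web_policy(namespace: str = "default") -> dict:
    """NetworkPolicy for pods labeled app=web with both Ingress and Egress rules."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "web-policy", "namespace": namespace},
        "spec": {
            "podSelector": {"matchLabels": {"app": "web"}},
            # Listing both types means traffic not matched below is denied both ways.
            "policyTypes": ["Ingress", "Egress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": "frontend"}}}],
                "ports": [{"protocol": "TCP", "port": 80}],
            }],
            "egress": [{
                "to": [{"podSelector": {"matchLabels": {"app": "db"}}}],
                "ports": [{"protocol": "TCP", "port": 5432}],
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(web_policy(), indent=2))
```

Once `Egress` is listed, any egress traffic not allowed by a rule is blocked, including DNS lookups, so a real policy usually needs an extra rule for port 53. On a CNI that only implements Ingress rules (Kube-router in this benchmark) the egress half would not be enforced, and Flannel would enforce neither.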

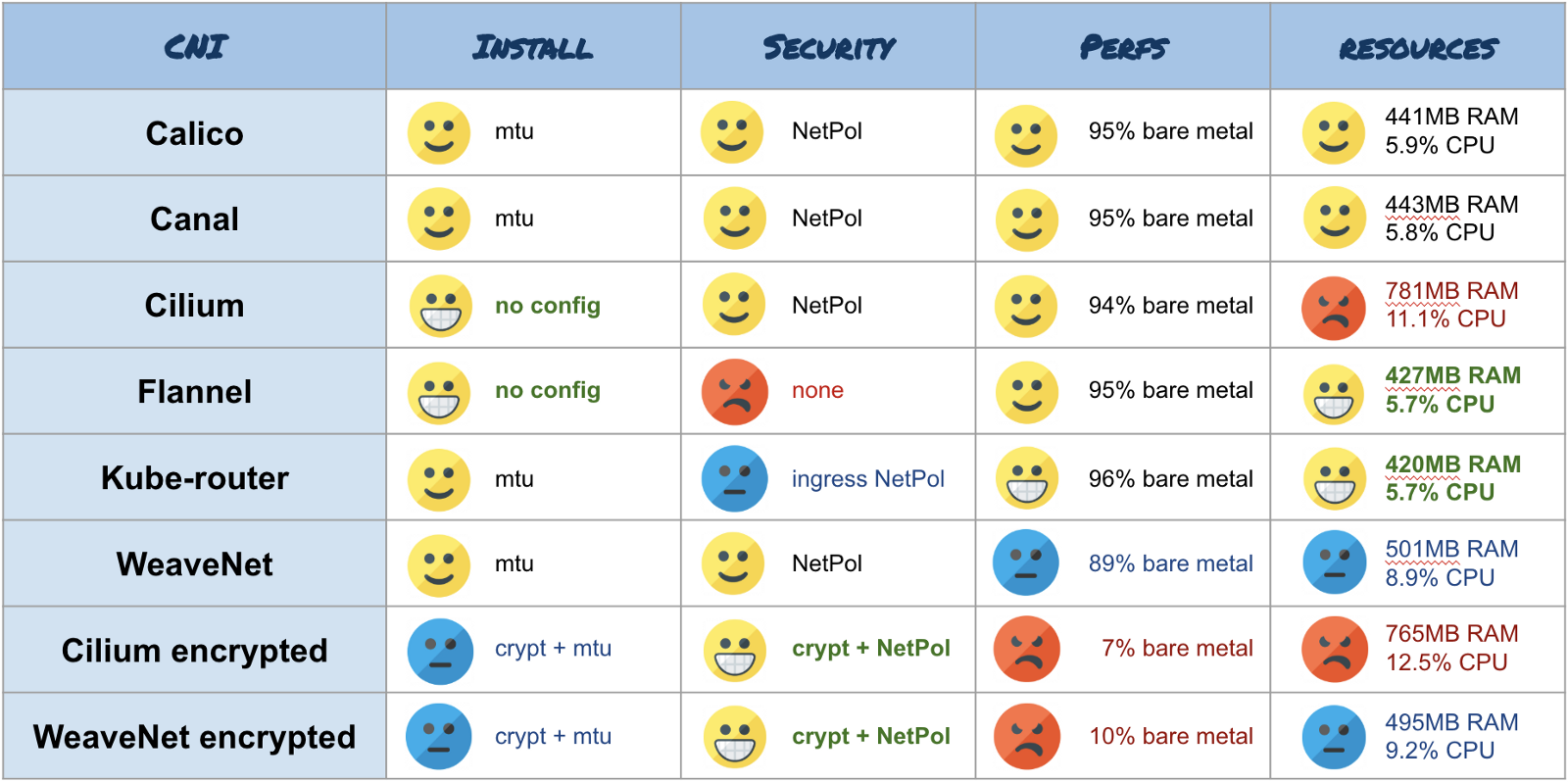

Here are the general results:

Security benchmark results

Performance

This benchmark reports the average throughput over at least three runs of each test. We test TCP and UDP performance (with iperf3), real applications such as HTTP (with Nginx and curl) or FTP (with vsftpd and curl), and finally applications that use SCP-based encryption (with the OpenSSH client and server).
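The averaging step can be sketched in Python, assuming each iperf3 run was saved with `iperf3 -J` and assuming the iperf3 3.x JSON layout (TCP totals under `end.sum_received`, UDP totals under `end.sum`). The file names in the usage line are hypothetical:

```python
import json
import statistics


def iperf3_bps(report: dict) -> float:
    """Throughput in bit/s from one `iperf3 -J` JSON report.

    Field names are assumed from iperf3 3.x output: TCP runs carry a
    receiver-side total in end.sum_received, UDP runs a single end.sum.
    """
    end = report["end"]
    if "sum_received" in end:
        return end["sum_received"]["bits_per_second"]
    return end["sum"]["bits_per_second"]


def average_gbps(reports: list, min_runs: int = 3) -> float:
    """Mean throughput in Gbit/s, refusing to average fewer than `min_runs` runs."""
    if len(reports) < min_runs:
        raise ValueError(f"need at least {min_runs} runs, got {len(reports)}")
    return statistics.mean(iperf3_bps(r) for r in reports) / 1e9


def load_reports(paths: list) -> list:
    """Load saved `iperf3 -J` outputs, one JSON file per run."""
    reports = []
    for path in paths:
        with open(path) as f:
            reports.append(json.load(f))
    return reports
```

For example, `average_gbps(load_reports(["run1.json", "run2.json", "run3.json"]))` returns the mean throughput in Gbit/s and raises an error if fewer than three runs are supplied.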

For every test, we also ran the benchmark on bare metal (green line) to compare the CNIs with native network performance. Here we use the same scale, but with colors:

- Yellow = very good

- Orange = good

- Blue = so-so

- Red = bad
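The comparisons in this section are relative gaps, either to bare metal ("10% slower than bare metal") or between two CNIs ("40% ahead"). A small Python sketch of both calculations; the thresholds behind the color scale are not stated in the text, so the sketch stops at the percentages, and the numbers in the example are hypothetical:

```python
def percent_below(result: float, reference: float) -> float:
    """How many percent `result` falls below `reference` (negative if it is above)."""
    if reference <= 0:
        raise ValueError("reference must be positive")
    return (reference - result) / reference * 100


def percent_ahead(a: float, b: float) -> float:
    """How many percent `a` is ahead of `b`, measured relative to `b`."""
    if b <= 0:
        raise ValueError("b must be positive")
    return (a - b) / b * 100


if __name__ == "__main__":
    # Hypothetical throughputs in Gbit/s, not the benchmark's measurements.
    bare_metal, cni = 9.8, 8.8
    print(f"{percent_below(cni, bare_metal):.1f}% below bare metal")
    print(f"{percent_ahead(2.8, 2.0):.0f}% ahead")
```

Note that "ahead" is measured against the slower result here; measuring against the faster one would give a smaller number for the same pair.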

We leave out incorrectly configured CNIs and show only results for CNIs with the correct MTU. (Note: Cilium calculates the MTU incorrectly when encryption is enabled, so in version 1.4 you have to reduce the MTU to 8900 manually. In the next version, 1.5, this is done automatically.)

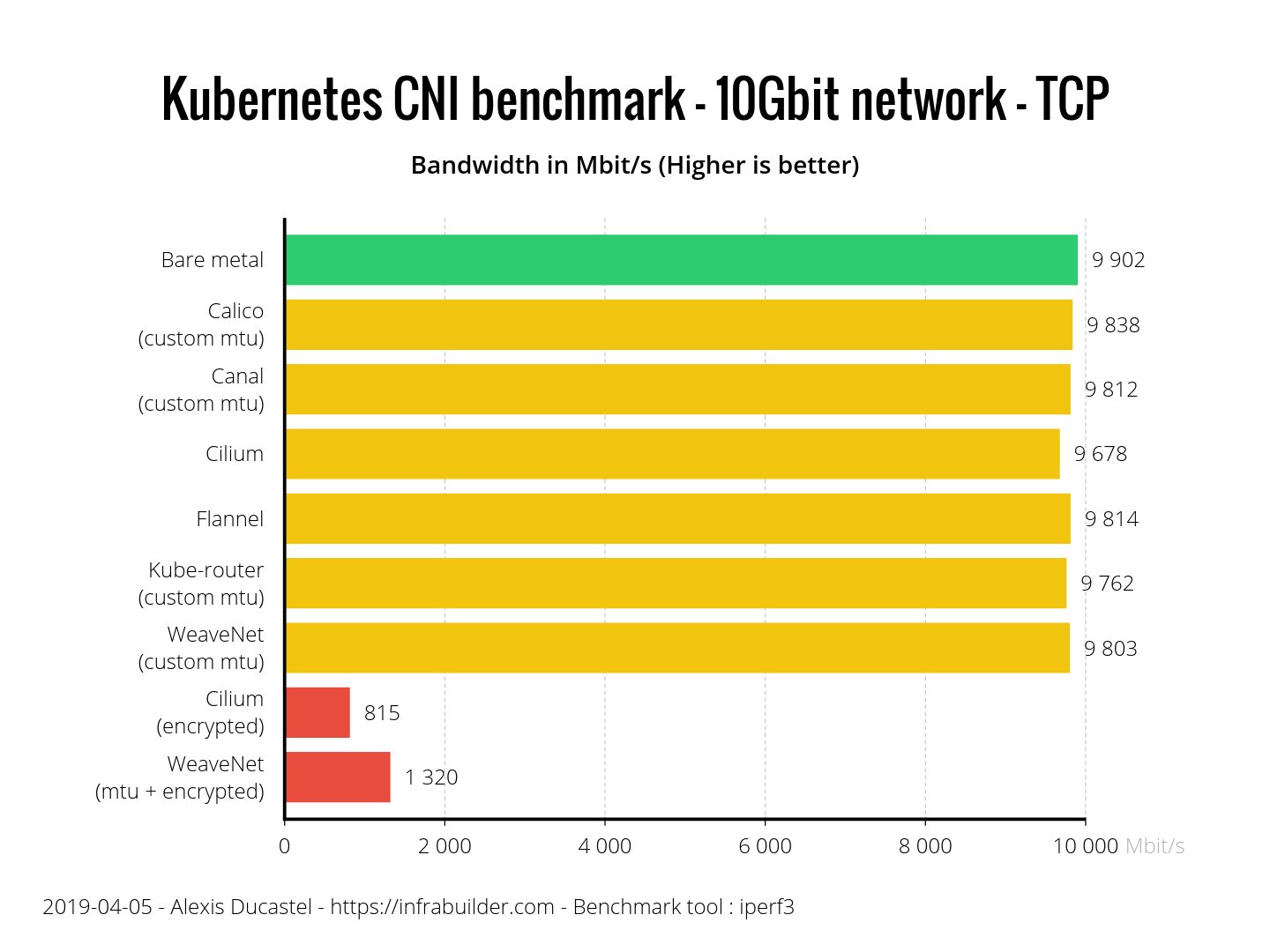

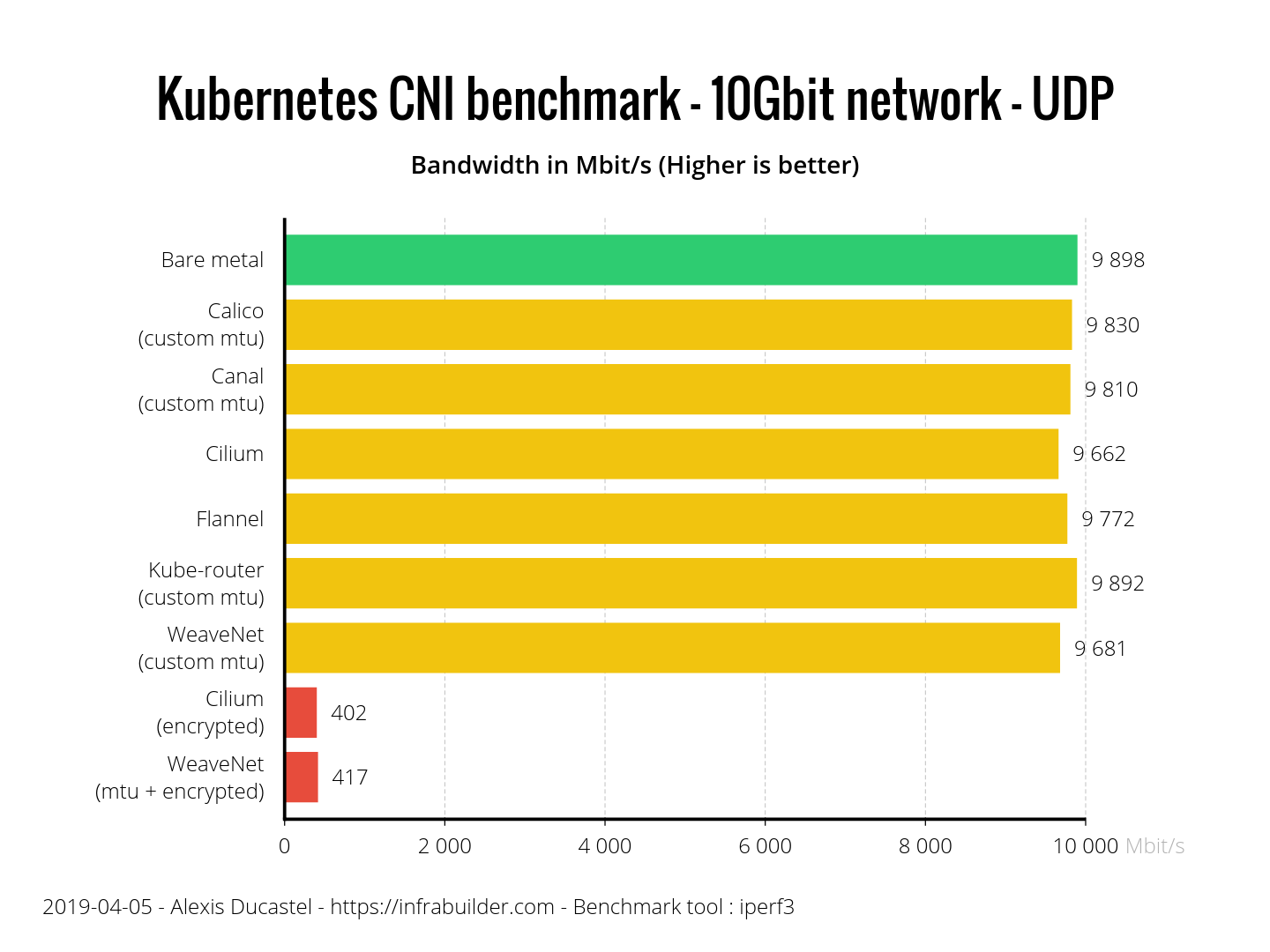

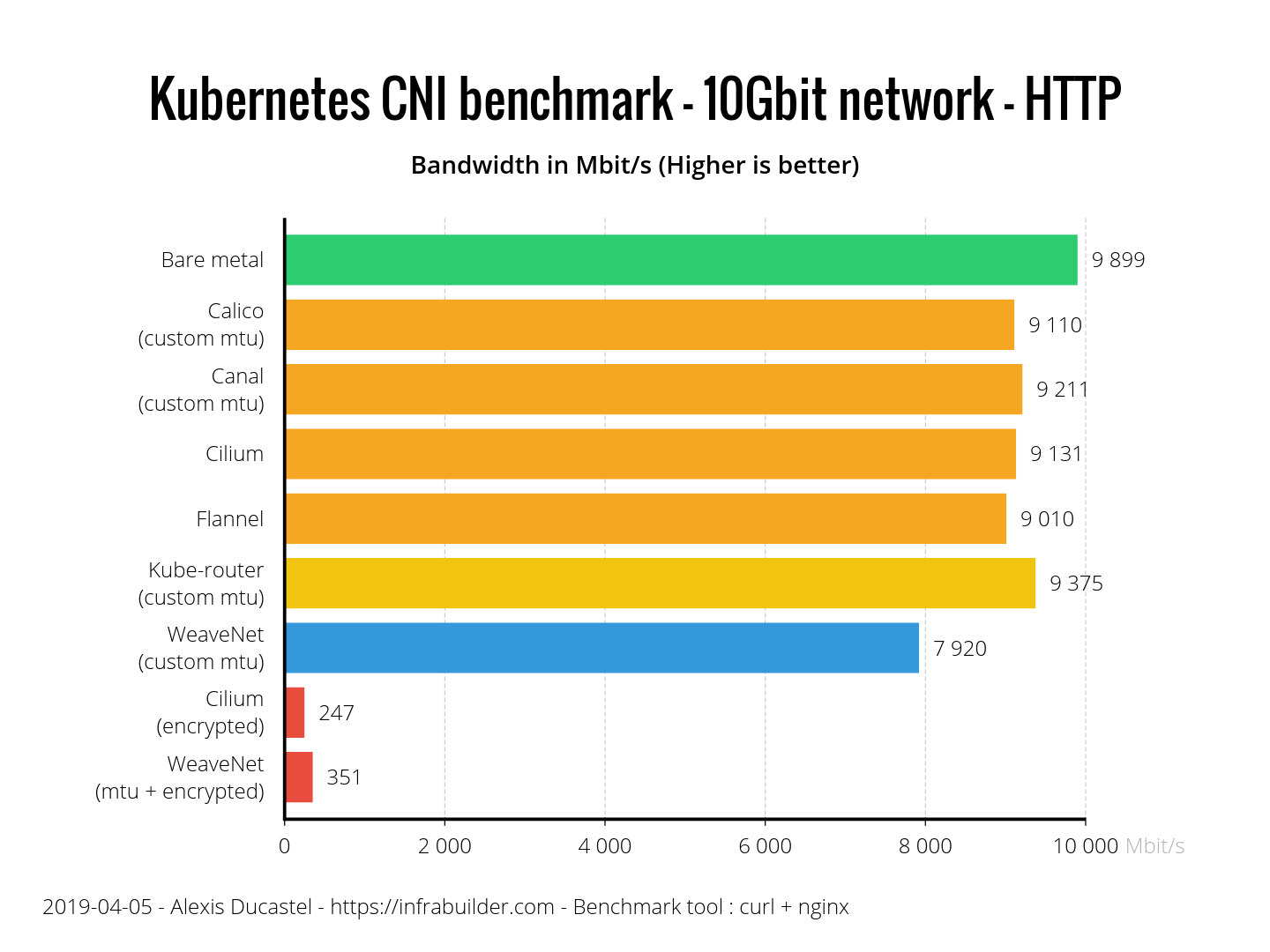

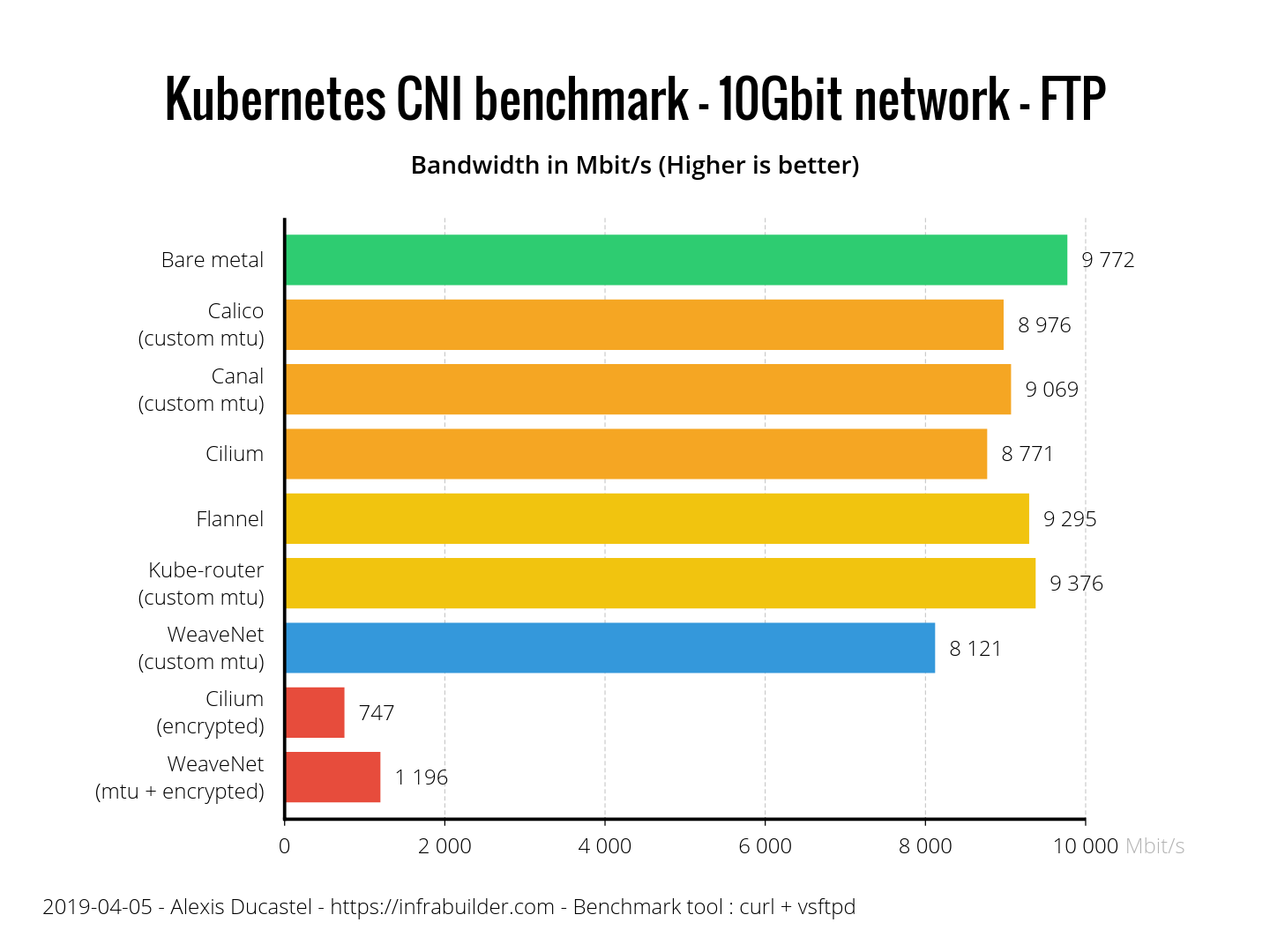

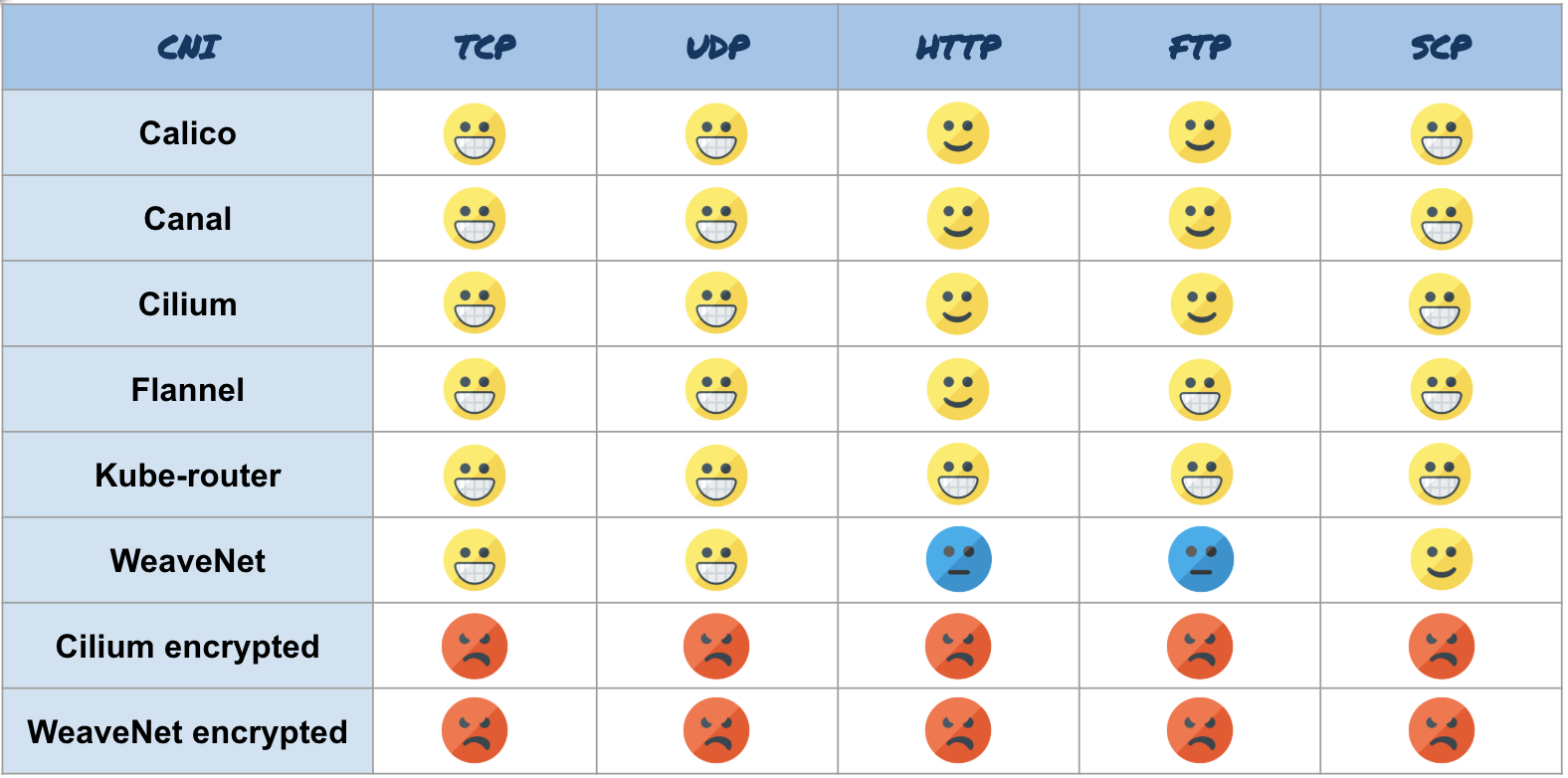

Here are the results:

All CNIs performed well on the TCP benchmark. CNIs with encryption lag far behind, because encryption is expensive.

On the UDP benchmark, too, all CNIs do well. The CNIs with encryption showed almost identical results. Cilium is slightly behind its competitors, but only 2.3% below bare metal, so the result is not bad. Remember that only Cilium and Flannel determined the MTU correctly on their own, and these are their results without any additional configuration.

What about a real application? As you can see, HTTP performance is slightly lower overall than TCP. Even though HTTP runs over TCP, in the TCP benchmark we configured iperf3 to avoid TCP slow start, which does affect the HTTP benchmark. Overall, the CNIs did a pretty good job here. Kube-router has a clear advantage, while WeaveNet did poorly: about 20% worse than bare metal. Cilium and WeaveNet with encryption look very weak.

With FTP, another TCP-based protocol, the results vary. Flannel and Kube-router cope well, while Calico, Canal and Cilium fall a little behind, running about 10% slower than bare metal. WeaveNet trails bare metal by as much as 17%, but WeaveNet with encryption is 40% ahead of encrypted Cilium.

SCP shows right away how much SSH encryption costs. Almost all CNIs do well, and WeaveNet lags behind again. Cilium and WeaveNet with encryption are, as expected, the worst of all because of double encryption (SSH + CNI).

Here is a summary table with the results:

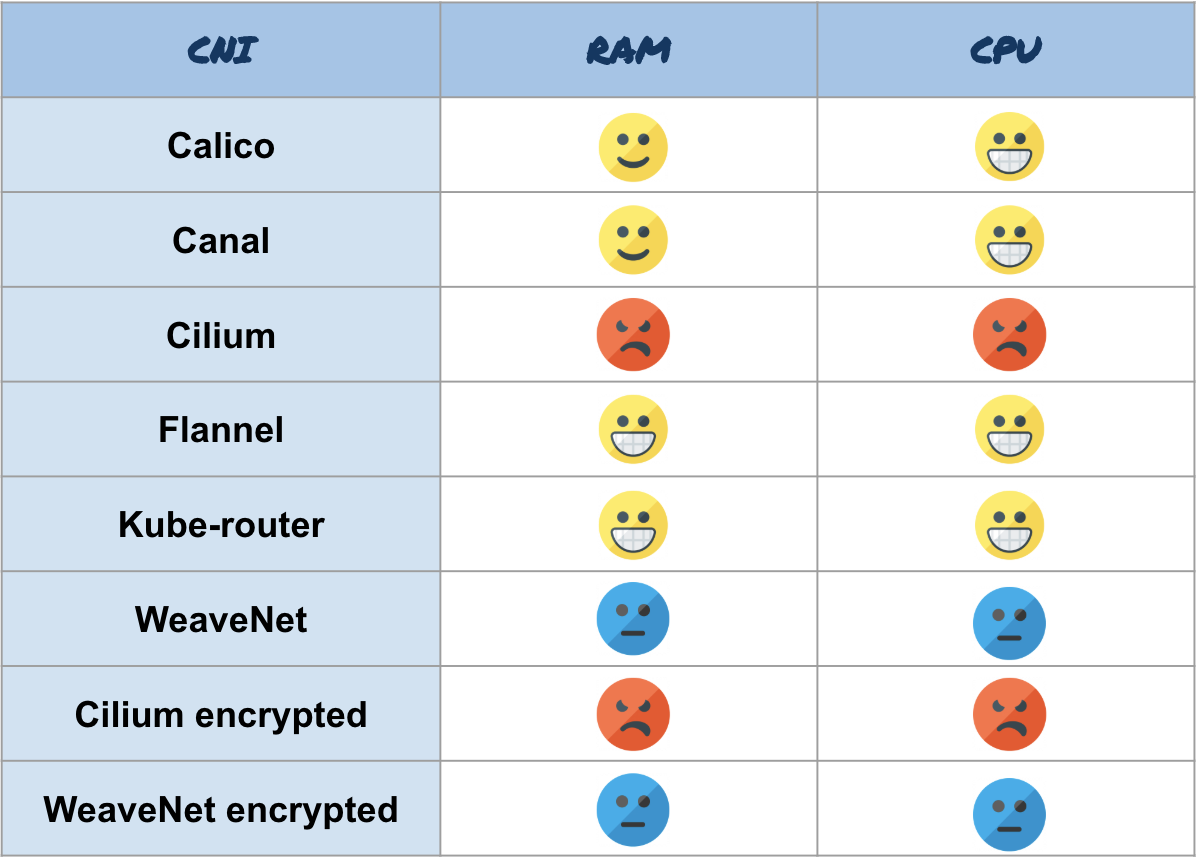

Resource consumption

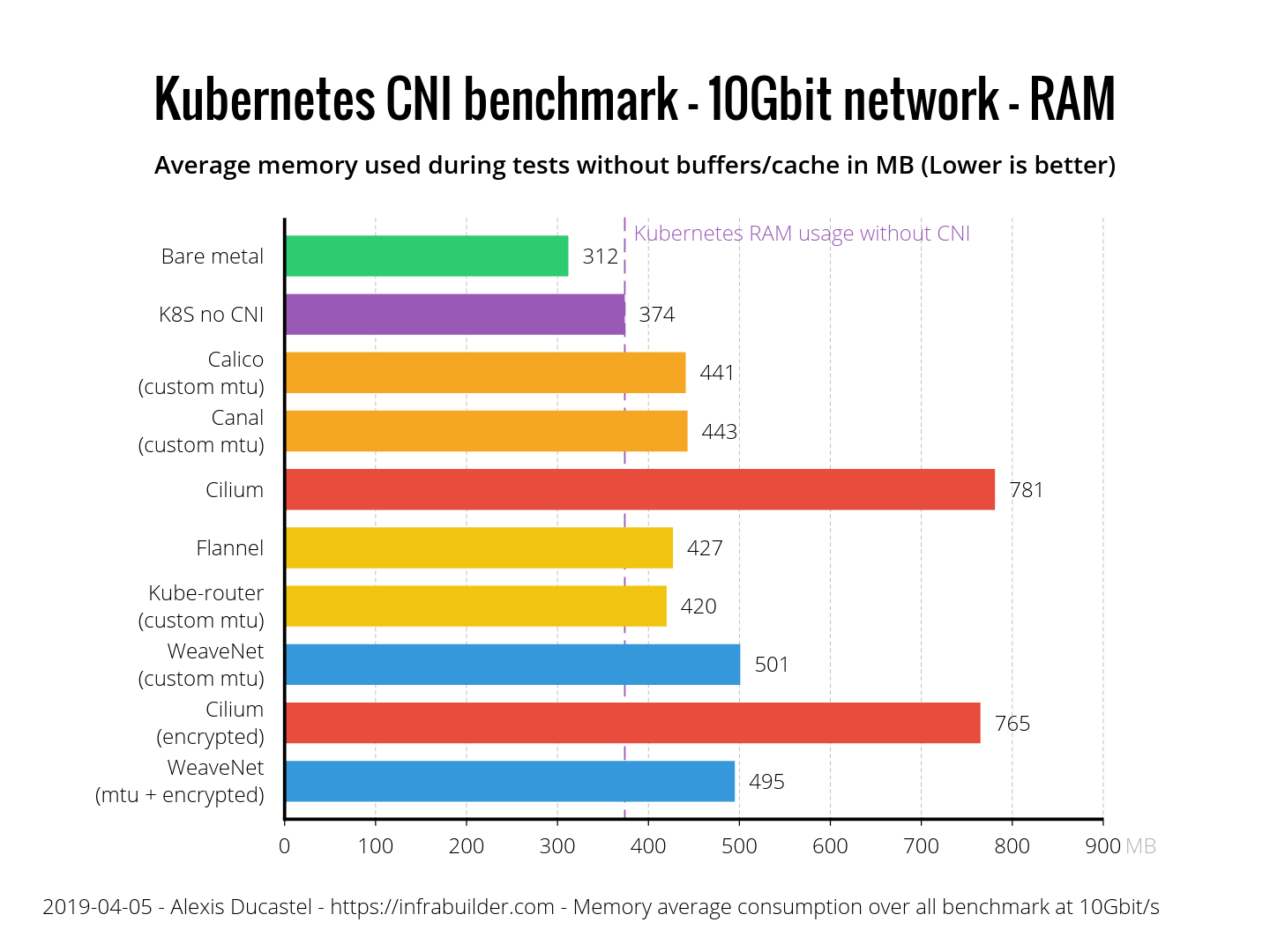

Now let's compare how the CNIs consume resources under heavy load (during a 10 Gbit/s TCP transfer). In the performance tests we compared the CNIs with bare metal (green line). For resource consumption, we show bare Kubernetes without a CNI (purple line) and see how many extra resources each CNI consumes.

Let's start with memory. Here is the average RAM usage of the nodes (excluding buffers and cache), in MB, during the transfer.

Flannel and Kube-router showed an excellent result: only 50 MB. Calico and Canal use 70 MB. WeaveNet clearly consumes more than the others at 130 MB, and Cilium uses as much as 400 MB.

Now let's check CPU consumption. Note: the chart shows values not in percent but in per mille (‰), so 38‰ for bare metal means 3.8%. Here are the results:

Calico, Canal, Flannel and Kube-router use the CPU very efficiently: only 2% more than Kubernetes without a CNI. WeaveNet lags far behind with an extra 5%, followed by Cilium at 7%.
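Two small conversions are involved in reading these charts: per mille to percent, and subtracting the bare-Kubernetes baseline to get the CNI's own cost. A Python sketch, with hypothetical readings rather than the measured values:

```python
def permille_to_percent(value: float) -> float:
    """Convert a per-mille reading (as on the CPU chart) to percent: 38 -> 3.8."""
    return value / 10


def extra_over_baseline(with_cni: float, without_cni: float) -> float:
    """Extra resource use attributable to the CNI, in the same unit as the inputs."""
    return with_cni - without_cni


if __name__ == "__main__":
    # Hypothetical readings for illustration only, not the measured values.
    baseline_cpu_permille, cni_cpu_permille = 40, 60
    extra = permille_to_percent(extra_over_baseline(cni_cpu_permille, baseline_cpu_permille))
    print(f"CNI adds {extra:.1f} percentage points of CPU")  # 2.0
```

The result is in percentage points of total CPU, which is presumably how the 2%, 5% and 7% figures above should be read; memory works the same way in MB.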

Here is a summary of resource consumption:

Results

Table with all the results:

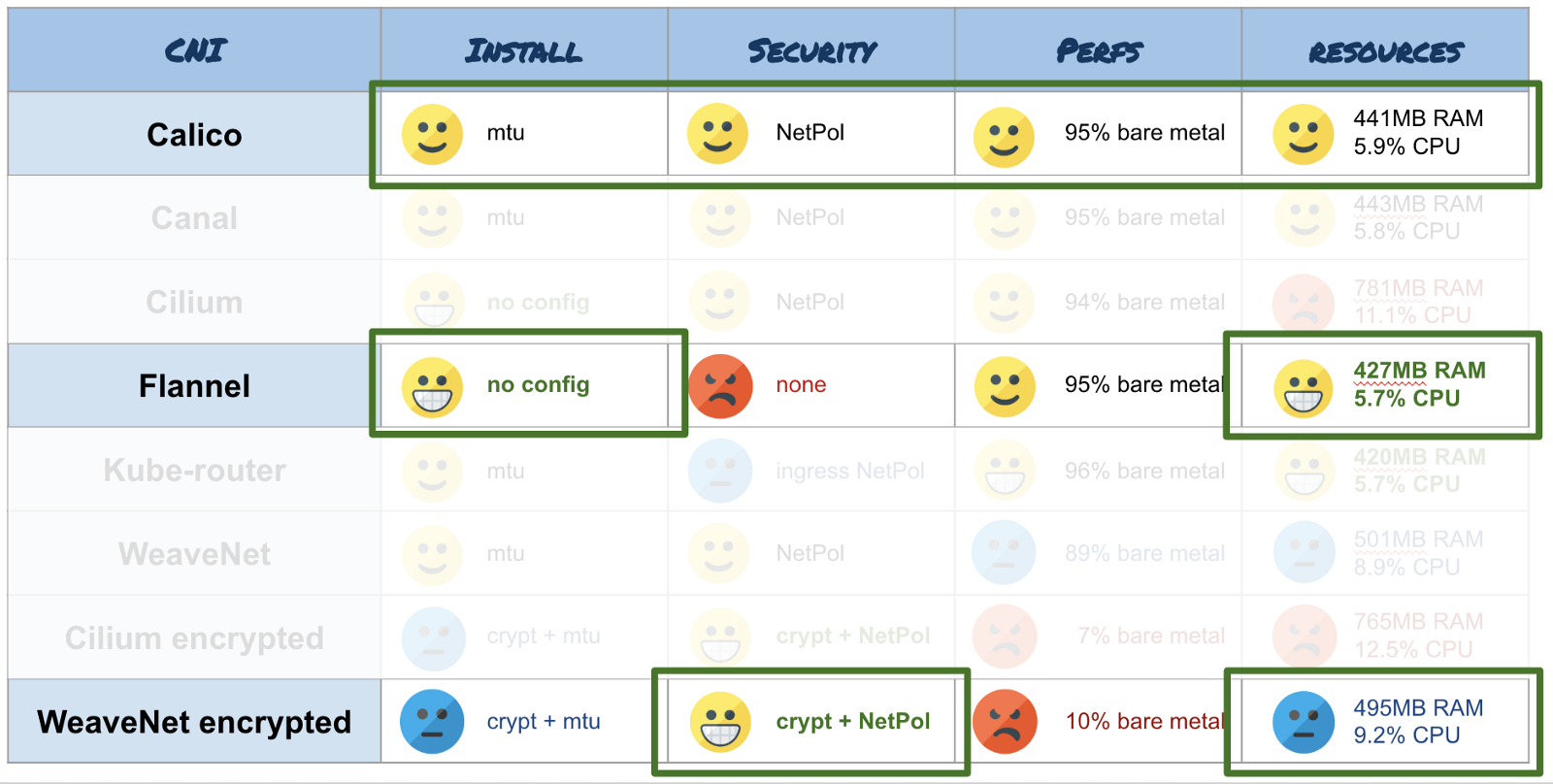

Conclusion

In this last part, I give my subjective opinion on the results. Keep in mind that this benchmark only tests the throughput of a single connection on a very small cluster (3 nodes). It does not apply to large clusters (>50 nodes) or to parallel connections.

I suggest the following CNIs depending on the scenario:

- If the nodes in your cluster have few resources (a few GB of RAM, a few cores) and you do not need security features, choose Flannel. It is one of the most economical CNIs, and it is compatible with a variety of architectures (amd64, arm, arm64, etc.). In addition, it is one of the two CNIs (the other is Cilium) that can determine the MTU automatically, so you do not have to configure anything. Kube-router is also suitable, but it is less standard and you will need to configure the MTU manually.

- If you need to encrypt your network for security, choose WeaveNet. Do not forget to set the MTU if you are using jumbo frames, and enable encryption by specifying the password through an environment variable. But you will have to give up on performance: that is the price of encryption.

- For general use, I recommend Calico. This CNI is widely used in various Kubernetes deployment tools (Kops, Kubespray, Rancher, etc.). As with WeaveNet, do not forget to configure the MTU in the ConfigMap if you use jumbo frames. It is a versatile tool that is efficient in terms of resource consumption, performance and security.

Finally, I advise you to keep an eye on Cilium. This CNI has a very active team that works hard on its product (features, resource savings, performance, security, multi-cluster distribution...), and they have very interesting plans.
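The recommendations above can be condensed into a small decision function. This is only a Python sketch of the author's rules of thumb, with hypothetical parameter names; Cilium is left out because the advice is to follow it rather than to pick it by default:

```python
def suggest_cni(need_encryption: bool, low_resources: bool,
                need_network_policy: bool) -> str:
    """Encode the rules of thumb from the conclusion (a sketch, not a policy)."""
    if need_encryption:
        # Set WEAVE_MTU for jumbo frames and the password via an environment variable.
        return "WeaveNet"
    if low_resources and not need_network_policy:
        # Economical, multi-arch, and detects the MTU automatically.
        # Kube-router is the alternative here, with manual MTU configuration.
        return "Flannel"
    # General-purpose choice; configure the MTU in the ConfigMap for jumbo frames.
    return "Calico"


if __name__ == "__main__":
    print(suggest_cni(need_encryption=False, low_resources=True, need_network_policy=False))
```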

Visual scheme for selecting CNI


Source: https://habr.com/ru/post/448688/
