Evolving, or building a base for a robot cart on the Arduino platform, with sensors and video streamed to a computer via a smartphone

For the respected readers of GeekTimes, here is the next (fourth) long-awaited article on what happens if you mix an Arduino, an ESP8266 and Wi-Fi, season them with an Android smartphone and sprinkle a Java application on top.

We are going to talk about the robot cart from the article before last, which it was high time to make at least a little smarter.

If you are interested, welcome under the cut.

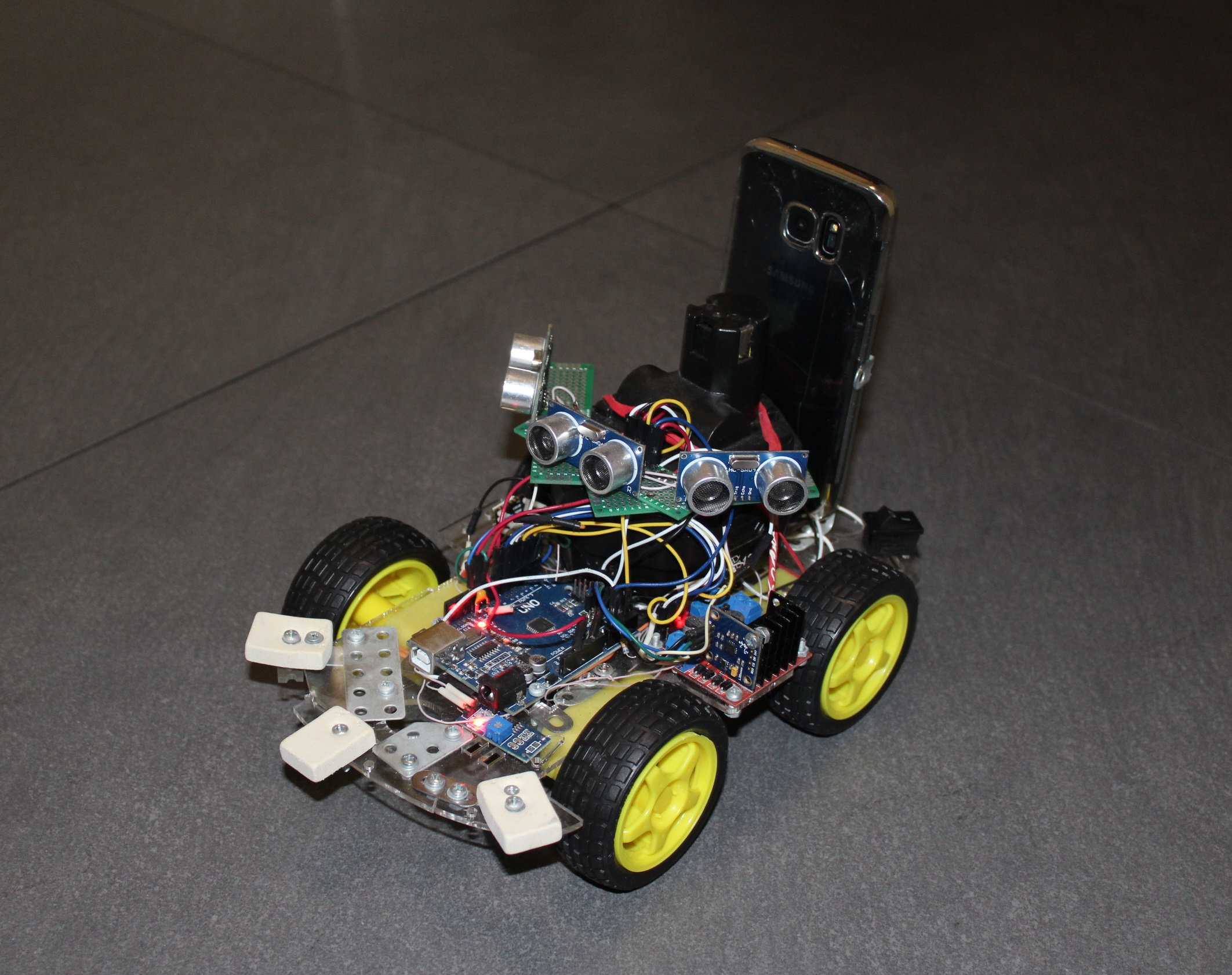

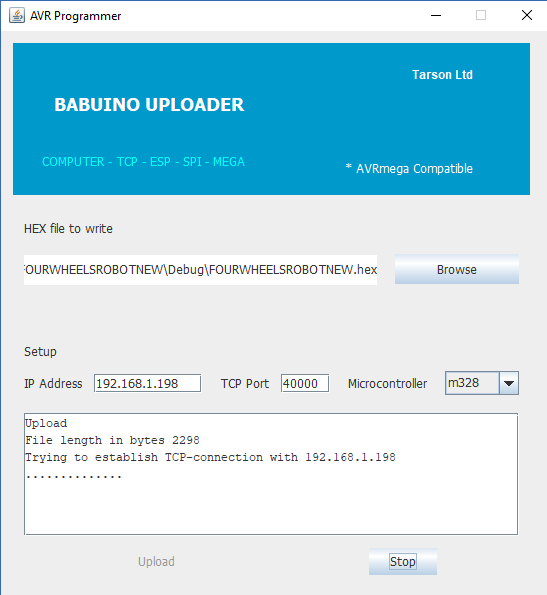

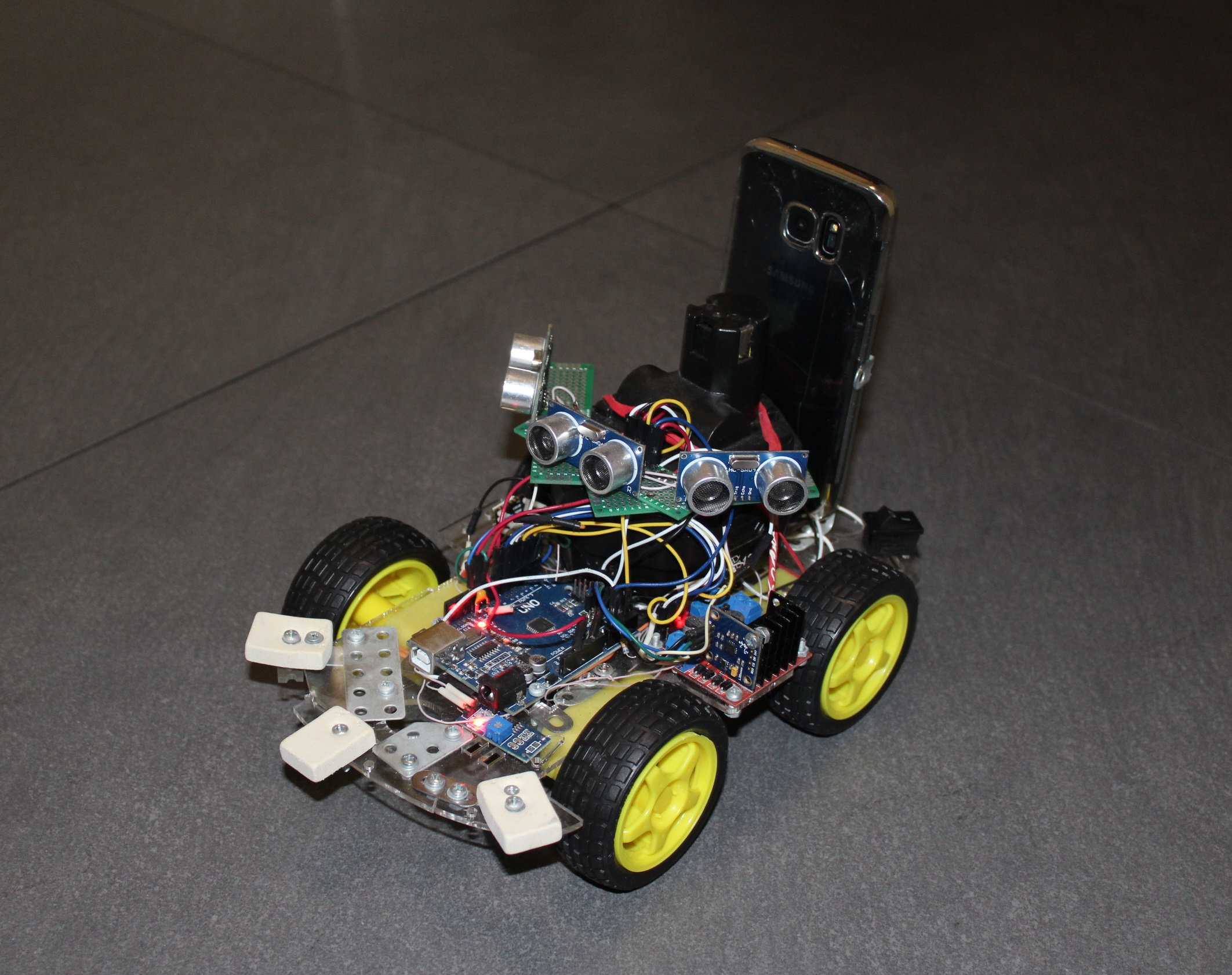

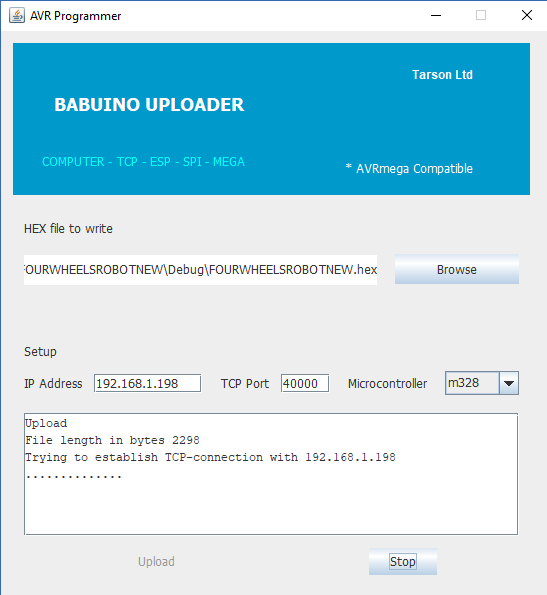

If you don't feel like reading the old articles, here is the short version: to control an ordinary four-wheeled cart on the Arduino platform, I attached to it a wireless UART bridge that I had developed around the well-known ESP8266 module. For convenience (and this was in fact the main goal), I also used the same ESP to write a programmer for the Arduino, which lets you flash it remotely.

That is, the cart trundles about somewhere far away (but within your Wi-Fi network), sends data and receives commands, and if necessary the program in the AVR microcontroller can be replaced on command. To go with it, I wrote a Java program for the PC; running it, you could enjoy the controls and receive primitive telemetry in the form of the distance traveled (a reed switch and a magnet on the wheel).

Then, in the next article, I successfully experimented with controlling the cart from a smartphone: buttons, tilts and even voice. But once the cart drove off into the next room, not even voice could bring it back (unlike the cat). It drove around in there, bumped into walls and furniture, got tangled in wires, and sent back nothing but the distance traveled.

So the thought immediately arose of giving the future Terminator some sense organs. One of the simplest options is a sonar.

The algorithm is embarrassingly simple: on one signal edge we trigger the sensor and at the same time start any counter in the microcontroller. The HC-SR04 starts firing ultrasound into the distance. The response signal from the sensor on the other wire marks the end of the measurement, and the time interval between trigger and response is proportional to the measured distance. At that moment we stop the counter and see how much it has counted.
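The proportionality between echo time and distance can be written out explicitly. The sketch below is only an illustration in Java (the cart's firmware runs on the AVR and is not shown here); the class name and the speed of sound of about 343 m/s at room temperature are assumptions.

```java
/** Illustrative only: converts an HC-SR04 echo time to a distance. */
final class SonarMath {
    // Assumed speed of sound in air at about 20 °C, in centimeters per microsecond.
    static final double SOUND_CM_PER_US = 0.0343;

    /** The pulse travels to the obstacle and back, so the distance is half the path. */
    static double echoMicrosToCm(long echoMicros) {
        return echoMicros * SOUND_CM_PER_US / 2.0;
    }

    public static void main(String[] args) {
        // An echo time of 1000 microseconds corresponds to roughly 17 cm.
        System.out.printf("%.2f cm%n", echoMicrosToCm(1000));
    }
}
```

With these numbers, the two-meter range mentioned in the article corresponds to an echo time of a little under 12 milliseconds.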

The accuracy is about a centimeter and the range about two meters. The sensor does not like fluffy and woolly surfaces (a cat, for example), where any echo sinks without a trace.

The field of view of the HC-SR04 is narrow, so to know what is going on ahead across at least ninety degrees, it is advisable to do one of the following:

- mount the sensor on a hobby servo and point it in different directions;
- fit several sensors.

Initially I implemented the first option, putting the sonar on a cheap SG90 servo, and the cart turned into a rover. Taking even three measurements took a long time, so the cart mostly stood still rotating the servo, then cautiously moved forward, but not very far (what if an obstacle suddenly appeared from the side?), and again spent several seconds probing the space in front of it. Sound, after all, is not light.

So, without further ado, I put three sonars on it at once. The cart took on a chthonic, spider-like look, stopped dithering in front of obstacles and began to steer around them on the move. But in the end its brains were only enough to avoid getting stuck in a friendly environment. It was time to move on, toward autonomy and progress. And here, as even a nematode worm will tell you, you cannot do without a variety of senses.

At this point enthusiasts usually start bolting all sorts of new sensors onto their creations: gyroscopes, accelerometers, magnetometers and even FIRE sensors (everything that nameless Chinese manufacturers turn out by the million for Arduino). I nearly went down this slippery slope too, but thought better of it in time. Here is why. In the long run, the robot cart was supposed to gain sight in the form of a camera and also understand what it sees. But the AVR microcontroller on the Arduino board will say “goodbye” at the stage of receiving video, never mind processing it. And then my eye fell on a middle-aged Galaxy S7 smartphone, already battered by life.

Such computing power: eight cores, 4 gigabytes of memory, two cameras, network access. What else do you need to turn a monkey into a human?

All it takes is a small mount that holds the smartphone on the cart and lets you put it on and take it off easily.

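Then I went to the Android developer site to find out what else an ordinary smartphone can offer us. Quite a lot, as it turned out. In theory, you can get access to the following sensors:

- TYPE_ACCELEROMETER
- TYPE_AMBIENT_TEMPERATURE
- TYPE_GAME_ROTATION_VECTOR
- TYPE_GEOMAGNETIC_ROTATION_VECTOR
- TYPE_GRAVITY
- TYPE_GYROSCOPE
- TYPE_GYROSCOPE_UNCALIBRATED
- TYPE_HEART_BEAT
- TYPE_HEART_RATE
- TYPE_LIGHT
- TYPE_LINEAR_ACCELERATION
- TYPE_LOW_LATENCY_OFFBODY_DETECT
- TYPE_MAGNETIC_FIELD
- TYPE_MAGNETIC_FIELD_UNCALIBRATED
- TYPE_MOTION_DETECT
- TYPE_ORIENTATION
- TYPE_POSE_6DOF
- TYPE_PRESSURE
- TYPE_PROXIMITY
- TYPE_RELATIVE_HUMIDITY
- TYPE_ROTATION_VECTOR
- TYPE_SIGNIFICANT_MOTION
- TYPE_STATIONARY_DETECT
- TYPE_STEP_COUNTER
- TYPE_STEP_DETECTOR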

As they say, what isn't there! And indeed, on the specific Galaxy S7 quite a lot turned out not to be there. A humidity sensor, for example. Or an ambient temperature sensor (although I realize that, sitting inside the case, it would show the temperature of the smartphone itself). But pressure and light sensors were present. Not to mention the accelerometers and gyroscopes, with which you can easily determine your orientation in space.

As a result, the decision matured: let the smartphone receive and process all the top-level information, that is, video and all these various sensors. The Arduino platform will be responsible for the unconscious, so to speak: everything that already works and needs no rework, all those motors, sonars, reed switches and so on.

Since debugging a program directly on the smartphone is difficult, even with USB debugging, I decided to have all the data sent to an ordinary personal computer and processed there. Later, once there is a working version, we will move the brains back onto the cart. You have to start small, and besides, it is simply interesting to watch video streamed from a mad cart.

Sensor data can be sent as a simple text line through a primitive client-server pair; no problems there at all. With video, however, difficulties arose immediately. What I needed was to stream real-time video from the smartphone camera into an application window on the computer. That is for now. In the future, not only I would look at this picture in the window, but also some pattern recognition system. OpenCV for Java, for example. Or maybe even a neural network in the cloud :D. I don't know; that stage is still very far off. But I would like to see the world through the robot's “eye”.

Everyone knows the numerous “mobile camera” apps in Google's store, where you pick up the video stream from the smartphone camera by opening a browser at the right IP address on your computer. So at first I assumed that implementing the broadcast from my Galaxy would be easy (which was no small mistake) and that the first thing to check was how to receive it on the computer, bearing in mind that Java is the only language I can write in.

As it turned out, video playback in Java is, to put it mildly, not in great shape. Long ago, in 1997, the creators of Java themselves released the Java Media Framework, a library that makes it easier to develop programs working with audio and video. But somewhere after 2003 they gave up on it, and some 15 years have passed since. After some experiments I managed to play one file in a window, I don't remember which (AVI, I think), but it looked pretty shabby. Files with other extensions refused to play at all; at best there was only the audio track.

On the Internet I found two more alternative projects for working with video: Xuggler and Caprica VLCj. The first was attractive in its capabilities but had also died long ago, while the second turned out to be quite alive and interesting in its very idea. The guys simply bolted the well-known and popular VLC media player onto Java. That is, Caprica does not use home-grown codecs but relies on what the professionals have already built. With it you can play any file. A wise decision, but the key requirement is that this very VLC player is already installed on your computer. Well, who doesn't have it? The only caveat is that the player and Java must have the same bitness. I, for example, later discovered to my surprise that I still had 32-bit VLC on my computer, as opposed to 64-bit Java. And half a day of my life was wasted.
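To avoid losing half a day to the same mismatch, the bitness of the running JVM can be checked up front and compared by hand with the installed VLC. This is a minimal sketch, not part of the original project; the class name is hypothetical, and the `sun.arch.data.model` property is specific to HotSpot-based JVMs, so the code falls back to `os.arch` when it is absent.

```java
/** Illustrative helper: reports whether the running JVM is 32-bit or 64-bit. */
final class JvmBitness {
    /** Returns "32", "64" or "unknown" from the two system property values. */
    static String classify(String dataModel, String osArch) {
        // HotSpot-based JVMs report the data model directly.
        if ("32".equals(dataModel) || "64".equals(dataModel)) {
            return dataModel;
        }
        // Fallback: guess from the architecture name (a heuristic, not a guarantee).
        String arch = osArch == null ? "" : osArch.toLowerCase();
        if (arch.contains("64")) {
            return "64";
        }
        if (arch.equals("x86") || arch.equals("i386") || arch.equals("arm")) {
            return "32";
        }
        return "unknown";
    }

    public static void main(String[] args) {
        String bits = classify(System.getProperty("sun.arch.data.model"),
                               System.getProperty("os.arch"));
        System.out.println("JVM bitness: " + bits);
    }
}
```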

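On their site, the Caprica developers promise users a great deal: all file formats, running in several windows of a Java application, playing YouTube videos, capturing a live video stream and so on. But harsh reality put everything in its place. No, about files they did not lie: everything plays. But YouTube videos no longer do. At first I could not understand why, but then I saw a message in the log saying that a certain Lua script could not be launched, and immediately recalled the note that this feature works by “screen-scraping” the web page and that a fixed script arrives only with a new version of VLC. In short, YouTube has apparently changed the structure of its web page and I have to wait for a new release. On the other hand, what I need is a “live” video broadcast, not playback of a file from a site. So even if the Lua script had worked, it would not have helped me much.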

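The promised streaming, however, I did not find at all, although the list of features included:

- Network streaming server (e.g. a network radio station);
- Network streaming client;

Maybe this is a wish list, or maybe there is a commercial version; it is hard to say.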

But files, I repeat, play without any complaints. For example, you can create an indecency like this:

Installing the package itself is not difficult and is even described in detail, for example, here. True, I somehow set the environment variables clumsily, and now my VLC starts with a ten-second delay the first time, but then it keeps what it needs in the cache and starts without pauses for the rest of the session.

Then you add the necessary dependencies to your Java project, and you can start riveting media players into Java windows.

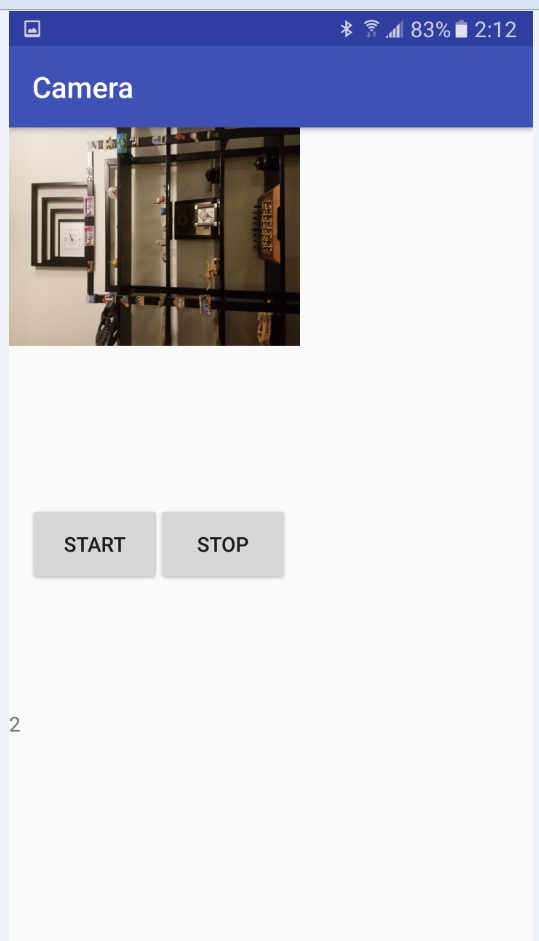

Having thus outlined a rough work plan on the personal computer side, I returned to the source of the video data, my Android smartphone.

But here another rather big disappointment awaited me. Having gone through quite a few websites and even the online Android manual, I came to the conclusion that real-time streaming is not possible. As with Caprica, you can only read files that have already been written. That is, the camera is turned on, MediaRecorder starts recording, and at some point you have to stop it. Only after stopping do we get access to the data (and can transfer it).

I found confirmation of my conclusions in one ancient article. There were, admittedly, hints that someone had once managed to fool Android so that it thought it was writing to a file while actually being handed a buffer. But in any case, as established earlier, I would not be able to catch such a video stream on the PC side in a Java application.

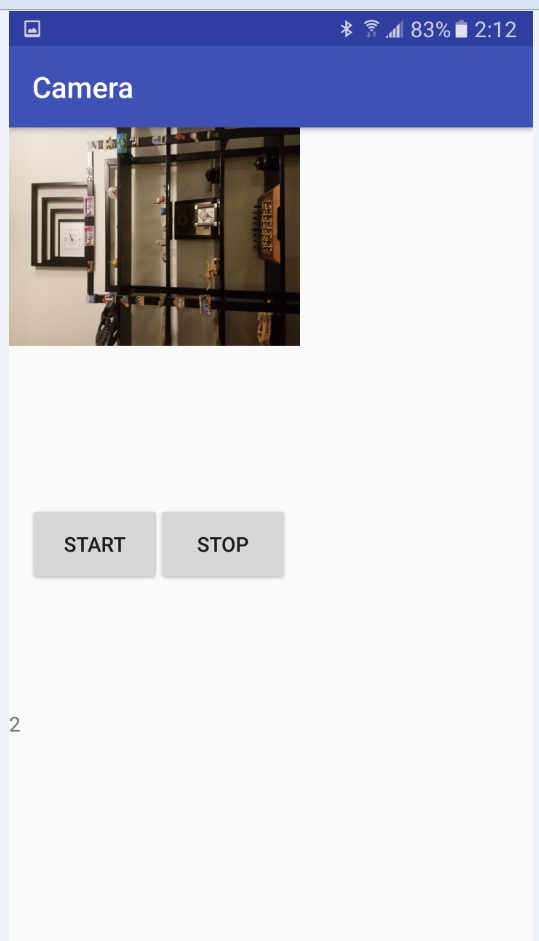

So a simple, crude, temporary (I want to emphasize this) decision was made: to send the video stream from the camera in two-second slices. Not a Mars rover, of course, but it already makes a perfectly decent moon rover.

Having settled on this, I began to master the Camera and MediaRecorder classes. There are plenty of code examples online for starting the camera and recording video files, but for some reason none of them worked for me, neither on the emulator nor on a real device. The reason turned out to be permissions. The code samples had been written back when Android was still free and the programmer could do whatever he wanted, as long as he listed all the necessary permissions in the manifest. My current version of the OS did not allow this. The user first had to grant permission to write files and to use the camera right after the application launched. This cost me an extra Activity.

After that things went smoothly and the following working code appeared. The second class, Camera_Activity, is responsible for working with the camera and recording video files. The Http_server class does the sending (the name is wrong, of course, but that is how it happened historically). The code is simple, with explanations throughout.

The whole thing is on GitHub. Link

Once started, with the camera on, the program works as follows:

- record two seconds of video to the first file;
- record two seconds of video to the second file while sending the first file over TCP/IP via the local Wi-Fi to the computer;
- record the first file again while sending the second;
- and so on.

The cycle repeats until the “stop” button is pressed or the smartphone battery dies. In principle, the button presses can be replaced with commands from the computer, also over TCP; that is not difficult.

At first the video buffer consisted, just in case, of three 3GP files (record the first while sending the third, record the second while sending the first, record the third while sending the second), but it then turned out that two files are quite enough (recording and sending do not get in each other's way).
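The alternation between the two files can be expressed as a small schedule: in every two-second cycle one file is recorded while the file from the previous cycle is sent. The sketch below shows only that bookkeeping, not the camera or network code; the class and file names are hypothetical.

```java
/** Illustrative two-file ("ping-pong") schedule for recording and sending. */
final class PingPong {
    // Hypothetical file names; the original project's names are not shown in the article.
    static final String[] FILES = {"chunk0.3gp", "chunk1.3gp"};

    /** File that is being recorded during the given cycle (cycles start at 0). */
    static String recordTarget(int cycle) {
        return FILES[cycle % 2];
    }

    /** File that is being sent during the given cycle, or null in the very first cycle. */
    static String sendTarget(int cycle) {
        return cycle == 0 ? null : FILES[(cycle - 1) % 2];
    }

    public static void main(String[] args) {
        for (int cycle = 0; cycle < 4; cycle++) {
            System.out.println("cycle " + cycle
                    + ": record " + recordTarget(cycle)
                    + ", send " + sendTarget(cycle));
        }
    }
}
```

The scheme holds only as long as sending a file takes less than the two seconds needed to record the next one; otherwise the recorder would overwrite a file that is still being sent.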

At a camera resolution of 640 by 480 the files come out at around 200-300 kB each, which my router handles fine. I didn't bother with sound, but everything there seems simple: you set the required audio encoder, bitrate, number of channels and the like.

A little later, once the video transmission was debugged, I added code for sending data from the smartphone's sensors. Everything is sent trivially as a single line, but I could not send it through the same socket as the video. Apparently the classes for sending strings (PrintWriter) and binary data (BufferedOutputStream) use different streams but share one output, where they happily corrupt each other's data. As a result the video starts to glitch and break up. Besides, a video file is sent every two seconds, and for the sensors that interval is too long. So I decided to put them on different sockets so that they would not interfere with each other. That is the reason for the new class Http_server_Sensors.
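One plausible mechanism behind this corruption can be reproduced without any network. This is an assumption, since the failing code is not shown in the article: when a PrintWriter and a BufferedOutputStream wrap the same underlying stream, each keeps its own buffer, so bytes reach the output in flush order rather than in write order. In the sketch a ByteArrayOutputStream stands in for the socket's output stream, and the class name is hypothetical.

```java
import java.io.BufferedOutputStream;
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.PrintWriter;
import java.io.UncheckedIOException;
import java.nio.charset.StandardCharsets;

/** Illustrative demo: two independently buffered wrappers around one output stream. */
final class SharedStreamDemo {
    static String interleave() {
        ByteArrayOutputStream sink = new ByteArrayOutputStream(); // stands in for the socket output
        PrintWriter text = new PrintWriter(sink);                 // no auto-flush, own buffer
        BufferedOutputStream binary = new BufferedOutputStream(sink); // separate buffer
        try {
            text.print("sensors;");                                    // written first, only buffered
            binary.write("VIDEO".getBytes(StandardCharsets.US_ASCII)); // written second
            binary.flush();                                            // reaches the sink first
            text.flush();                                              // reaches the sink second
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return new String(sink.toByteArray(), StandardCharsets.US_ASCII);
    }

    public static void main(String[] args) {
        System.out.println(interleave()); // prints "VIDEOsensors;"
    }
}
```

With real video files, which are larger than the buffers, partial flushes happen at arbitrary points, so text can land in the middle of a file. Separate sockets, as chosen in the article, avoid the problem entirely.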

So, the sending side is sorted; now back to the receiving host.

As we saw in the very first example, playing video files in a Java application with the VLC player is no longer a problem. The main thing is to get those files.

The following demo program takes care of that.

Its essence is simple. A TCP client starts and waits for the server on the smartphone to be ready. Once the first file is received, it immediately starts playing, and file number two is awaited in parallel. Then we wait for either the reception of the second file to finish or the playback of the first to end. The best case, of course, is when a file downloads faster than it plays. If the first file has finished playing and the second has not yet arrived, everything waits and we show a black screen. Otherwise we quickly start playing the second file and simultaneously download the first one again.
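The switching rule described above boils down to a decision over two flags. The sketch below is one reading of that rule, not the author's code: it assumes the next file starts only when the current one has finished playing, and all names are hypothetical.

```java
/** Illustrative decision logic for switching between received video files. */
final class PlaybackSwitch {
    enum Action { KEEP_PLAYING, PLAY_NEXT, SHOW_BLACK }

    /**
     * @param currentFinished true if the file on screen has played to the end
     * @param nextReceived    true if the following file has been fully downloaded
     */
    static Action decide(boolean currentFinished, boolean nextReceived) {
        if (!currentFinished) {
            return Action.KEEP_PLAYING;      // nothing to switch yet
        }
        // The current file is over: play the next one if it is here, else show a black screen.
        return nextReceived ? Action.PLAY_NEXT : Action.SHOW_BLACK;
    }

    public static void main(String[] args) {
        System.out.println(decide(true, false)); // prints "SHOW_BLACK"
    }
}
```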

I had a vague hope that the pause when switching between files would be shorter than the response time of the human eye, but it did not come true. This VLC is unhurried, what can I say.

As a result we get a rather nasty, interrupted video (this is apparently how the first trilobite saw the world), with the focus constantly readjusting. And bear in mind that the video is also delayed by two seconds. In short, I do not recommend this for production. But in my fishless waters even a trilobite counts as a fish, as they say...

Summing up the above, we can say with certainty that:

Transferring video in chunks is essentially unworkable and can be useful only when the video segments are long enough and you do not need a split-second reaction to what is happening.

Sending video over TCP/IP is also a wrong idea, whatever certain individuals on Habr may say about the data transfer speed of this protocol (supposedly even faster than UDP). Of course, modern wireless local networks are good enough to sustain the continuous handshakes between TCP servers and clients, and TCP itself seems to have been improved for long transfers, but all the same, stutters keep appearing between the video pieces being played.

But at least some thoughts for the future emerged.

Of course, there are more questions than answers here. Will Java, with its level of abstraction when working with the network and images, be fast enough on the receiving side? Will I manage, at the level accessible to me on Android, to take 30 decent frames per second? Will they have to be compressed before sending to reduce the bitrate? And will Java then be fast enough for packing and unpacking? And if, all of a sudden, something does work out, will it be possible to take the next step and bolt the Java OpenCV computer vision system onto this? Streaming video from floor level is always interesting to watch, of course, but we must not forget the highest goal: a robot cart with the intelligence of an ant!

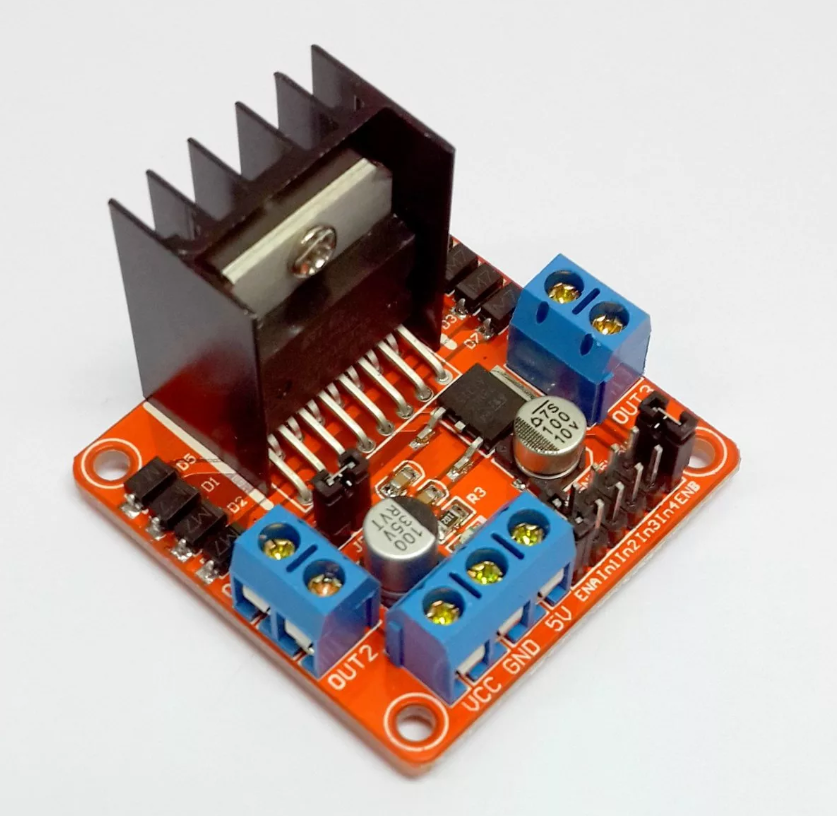

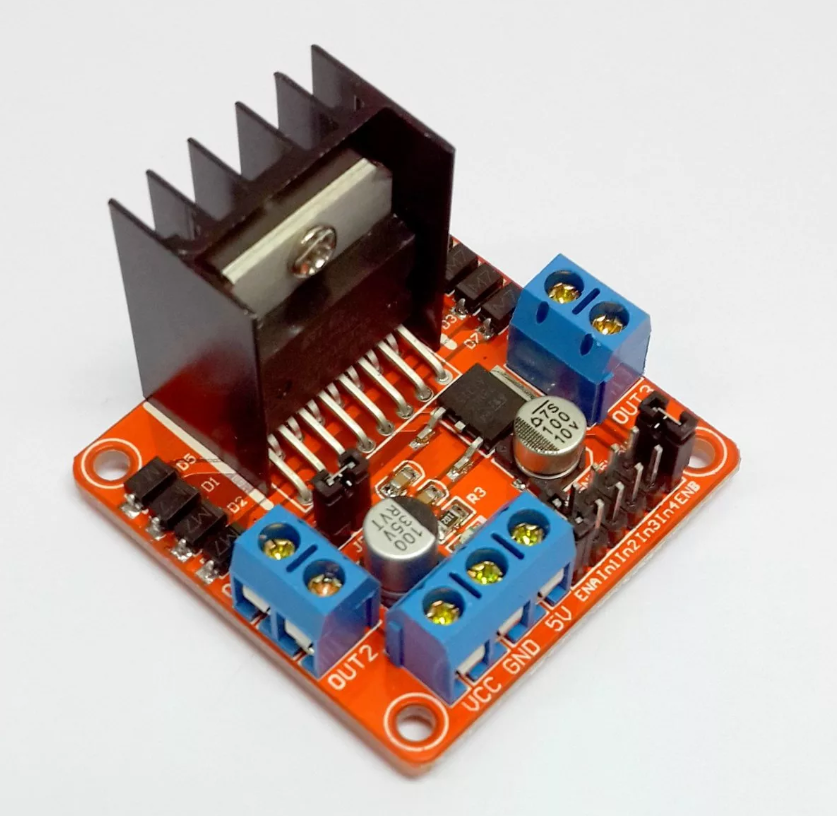

But for now, working with what we have, let us return to the current cart. The old program from the previous article for the AVR microcontroller on the Arduino platform has hardly changed; only a mode branch was added: autonomous driving or driving under operator control. The data that the cart (the spinal cord) sends over Wi-Fi is the same: the distance traveled. A little later I also added transmission of the temperature of a critical component, the motor driver. All of this is sent and received first via UART, and then, on reaching the network, via UDP. I am not giving the code; the complete analysis is in the article before last.

With two motors the driver still more or less copes, but with four it overheats after a while to the point of not working. At first I tried to use the simplest analog temperature sensors based on Zener diodes, such as the LM335, but nothing came of it. My board had no voltage reference, and there is no point in chasing millivolts on a sagging battery. Speaking of the battery: when I got tired of constantly removing and inserting 14500 lithium cells for recharging, I simply took the spare battery from a cordless screwdriver, and the cart began to run for an hour and a half non-stop, and also acquired a menacing look and weight (yes, the “eyes” are mounted on this battery). So, to measure the temperature, I pressed into service a faulty gyroscope module based on the L3G4200D. Fortunately, it also measures temperature and transmits it over the I2C bus.

The smartphone, sitting behind the battery and above the sonars, transmits the video stream, accelerometer readings (from which you can estimate the roll and pitch of the cart when it is standing still), roll and pitch in degrees as already computed by the smartphone itself (a very convenient option), linear acceleration in the direction of travel, and illumination. In general, of course, you can transmit anything your smartphone can measure, from air pressure to the direction of north.
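For a cart at rest the accelerometer measures only gravity, which is why roll and pitch can be estimated from its three readings. The sketch below uses one common convention for the angles; the axis assignment, signs and names are assumptions and may differ from what the smartphone itself reports.

```java
/** Illustrative tilt estimate from raw accelerometer readings of a device at rest. */
final class TiltMath {
    /** Pitch in degrees: rotation that tips the x axis out of the horizontal plane. */
    static double pitchDegrees(double ax, double ay, double az) {
        return Math.toDegrees(Math.atan2(-ax, Math.sqrt(ay * ay + az * az)));
    }

    /** Roll in degrees: rotation about the x axis, from the y and z components. */
    static double rollDegrees(double ay, double az) {
        return Math.toDegrees(Math.atan2(ay, az));
    }

    public static void main(String[] args) {
        // Device lying flat: gravity (about 9.81 m/s^2) falls entirely on the z axis.
        System.out.println(pitchDegrees(0.0, 0.0, 9.81) + " " + rollDegrees(0.0, 9.81));
    }
}
```

While the cart accelerates or brakes, the readings include more than gravity and this estimate is no longer valid, which matches the article's remark that it works when the cart is standing.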

As a result, the Java application took on the following form:

The funny thing is that it really does resemble the control panel of the Soviet lunar rover, except that my TV screen is on the side, while theirs is in the center.

After switching on, first connect to the cart and to the smartphone on it, select manual control or full autonomy, and off you go! Until the driver temperature box turns yellow, and then red. Graphics!
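The yellow and red states of the temperature box amount to two thresholds. The sketch below shows that mapping only; the threshold values of 50 °C and 70 °C and all names are hypothetical, since the article does not state the real limits.

```java
/** Illustrative mapping from motor driver temperature to a warning level. */
final class DriverTemperature {
    enum Level { NORMAL, YELLOW, RED }

    // Hypothetical thresholds in degrees Celsius; the article does not give the real ones.
    static final double YELLOW_FROM_C = 50.0;
    static final double RED_FROM_C = 70.0;

    static Level levelFor(double celsius) {
        if (celsius >= RED_FROM_C) {
            return Level.RED;
        }
        if (celsius >= YELLOW_FROM_C) {
            return Level.YELLOW;
        }
        return Level.NORMAL;
    }

    public static void main(String[] args) {
        System.out.println(levelFor(55.0)); // prints "YELLOW"
    }
}
```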

This is how it looks live.

I am not listing the program here because, as usual, building the windows takes up 95% of the code. It can be found here.

In the future, the brain (the smartphone) should learn to send normal video (not the kind it sends now) to the computer, where it should be properly recognized (for now the goal is to build a map of the room at floor level and determine the cart's location on it). And then, in the bright communist future, I would like to port it all back to the smartphone so that it no longer needs a computer.

If anything at all comes of it, I will be sure to post about it. Thanks for your attention.

But the files are played, I repeat, without any complaints. For example, you can create, here is such indecency

The installation of the package itself is not difficult and is even described in detail, for example, here . True, I somehow clumsily prescribed environment variables and now my VLC is started for the first time with a delay of ten seconds, but then it keeps what is needed in the cache and later in the current session it starts without pauses.

Then in your JAVA project you prescribe the necessary dependencies and you can start to

Having thus designated an approximate work plan on the side of a personal computer, I returned to the source of the video data, my ANDROID smartphone.

But here I had another rather big disappointment. Having gone through quite a few websites and even an online ANDROID manual, I found that it is not possible to stream in real time. As in Caprica, you can only read already written files. That is, the camera turned on, MEDIA RECORDER started recording when you need to stop. And we can get access to the data (and their transfer) only after stopping.

I found confirmation of my conclusions in one ancient article . There really were hints that once someone was able to deceive Android, so that he thought he was writing to a file, and in fact he slipped a buffer. But in any case, as it was already clarified earlier, I could not catch such a video stream on the PC side in the JAVA application.

Therefore, it was made a simple, oak, temporary (I want to emphasize) the decision - to send a video stream from the camera in slices of two seconds. Not a rover, of course, but the moon rover is already working out completely.

Having settled on this decision, I began to master the Camera and MediaRecorder classes. There are quite a few code examples online for starting the camera and recording video files, but for some reason none of them worked for me, neither on the emulator nor on a real device. The reason turned out to be permissions. The code samples were written in the days when Android was still free and the programmer could do anything he wanted as long as he listed all the necessary permissions in the manifest. But my current OS version did not allow this. First the user has to grant permission to write files and to turn on the camera, right after the application is launched. This cost me an extra Activity.

Class MainActivity.java

    import android.Manifest;
    import android.content.Intent;
    import android.content.pm.PackageManager;
    import android.hardware.Camera;
    import android.media.MediaRecorder;
    import android.os.AsyncTask;
    import android.os.Bundle;
    import android.support.v4.app.ActivityCompat;
    import android.support.v4.content.ContextCompat;
    import android.support.v7.app.AppCompatActivity;
    import android.widget.Toast;

    import java.io.File;

    public class MainActivity extends AppCompatActivity {

        Camera camera;
        MediaRecorder mediaRecorder;
        public static MainActivity m;
        public static boolean Camera_granted;
        File videoFile;

        @Override
        protected void onCreate(Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);
            setContentView(R.layout.activity_main);

            // Ask for authorisation if either permission is missing
            if ((ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED)
                    || (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED)) {
                ActivityCompat.requestPermissions(MainActivity.this,
                        new String[]{Manifest.permission.CAMERA, Manifest.permission.WRITE_EXTERNAL_STORAGE}, 50);
            }

            // Notify the user while both permissions are still missing
            if ((ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.CAMERA) != PackageManager.PERMISSION_GRANTED)
                    && (ContextCompat.checkSelfPermission(MainActivity.this, Manifest.permission.WRITE_EXTERNAL_STORAGE) != PackageManager.PERMISSION_GRANTED)) {
                Toast.makeText(MainActivity.this, "", Toast.LENGTH_SHORT).show();
            }

            m = this;
            MyTask mt = new MyTask();
            mt.execute();
        }
    }

    class MyTask extends AsyncTask<Void, Void, Void> {

        @Override
        protected void onPreExecute() {
            super.onPreExecute();
        }

        @Override
        protected Void doInBackground(Void... params) {
            // Poll in the background until the user has granted both permissions
            boolean ready = false;
            while (!ready) {
                if ((ContextCompat.checkSelfPermission(MainActivity.m, Manifest.permission.CAMERA) == PackageManager.PERMISSION_GRANTED)
                        && (ContextCompat.checkSelfPermission(MainActivity.m, Manifest.permission.WRITE_EXTERNAL_STORAGE) == PackageManager.PERMISSION_GRANTED)) {
                    System.out.println(" ");
                    ready = true;
                }
            }
            return null;
        }

        @Override
        protected void onPostExecute(Void result) {
            Toast.makeText(MainActivity.m, "", Toast.LENGTH_SHORT).show();
            // Permissions are in place: move on to the camera screen
            Intent intent = new Intent(MainActivity.m, CameraActivity.class);
            MainActivity.m.startActivity(intent);
        }
    }

After this, things went smoothly and the following working code appeared. The second class, Camera_Activity, is responsible for working with the camera and recording video files. The Http_server class does the forwarding (the name is wrong, of course, but it came about historically). The code is simple, with explanations wherever they are needed.

The whole thing is on GitHub. Link

Camera_Activity

    import android.content.Context;
    import android.hardware.Camera;
    import android.hardware.Sensor;
    import android.hardware.SensorEvent;
    import android.hardware.SensorEventListener;
    import android.hardware.SensorManager;
    import android.media.MediaRecorder;
    import android.os.AsyncTask;
    import android.os.Bundle;
    import android.os.Environment;
    import android.support.annotation.Nullable;
    import android.support.v7.app.AppCompatActivity;
    import android.view.SurfaceHolder;
    import android.view.SurfaceView;
    import android.view.View;
    import android.widget.Button;
    import android.widget.TextView;

    import java.io.BufferedInputStream;
    import java.io.BufferedOutputStream;
    import java.io.File;
    import java.io.FileInputStream;
    import java.io.IOException;
    import java.io.OutputStreamWriter;
    import java.io.PrintWriter;
    import java.net.ServerSocket;
    import java.net.Socket;

    import static android.hardware.Camera.getNumberOfCameras;

    /**
     * Created by m on 01.02.2019.
     */
    public class CameraActivity extends AppCompatActivity implements SensorEventListener {

        SurfaceView surfaceView;
        TextView mTextView;
        Button mStart;
        Button mStop;
        Camera camera;
        MediaRecorder mediaRecorder;
        public static ServerSocket ss;   // video socket
        public static ServerSocket ss2;  // sensor socket
        public static MainActivity m;
        public static volatile boolean stopCamera = true;
        public static int count = 1;
        public static File videoFile1;
        public static File videoFile2;
        public static File videoFile3;
        public static volatile byte[] data;
        public SensorManager mSensorManager;
        public Sensor mAxeleration, mLight, mRotation, mHumidity, mPressure, mTemperature;
        public int ax;
        public int ay;
        public int az;
        public double light;
        public int x;
        public int y;
        public int z;
        public double hum;
        public double press;
        public double tempr;
        public static String Sensors;

        @Override
        protected void onCreate(@Nullable Bundle savedInstanceState) {
            super.onCreate(savedInstanceState);

            // Subscribe to every sensor whose readings are sent to the PC
            mSensorManager = (SensorManager) getSystemService(Context.SENSOR_SERVICE);
            mAxeleration = mSensorManager.getDefaultSensor(Sensor.TYPE_ACCELEROMETER);
            mSensorManager.registerListener(this, mAxeleration, SensorManager.SENSOR_DELAY_NORMAL);
            mLight = mSensorManager.getDefaultSensor(Sensor.TYPE_LIGHT);
            mSensorManager.registerListener(this, mLight, SensorManager.SENSOR_DELAY_NORMAL);
            mRotation = mSensorManager.getDefaultSensor(Sensor.TYPE_ORIENTATION);
            mSensorManager.registerListener(this, mRotation, SensorManager.SENSOR_DELAY_NORMAL);
            // Despite its name, this field holds the linear acceleration sensor
            mHumidity = mSensorManager.getDefaultSensor(Sensor.TYPE_LINEAR_ACCELERATION);
            mSensorManager.registerListener(this, mHumidity, SensorManager.SENSOR_DELAY_NORMAL);
            mPressure = mSensorManager.getDefaultSensor(Sensor.TYPE_PRESSURE);
            mSensorManager.registerListener(this, mPressure, SensorManager.SENSOR_DELAY_NORMAL);
            mTemperature = mSensorManager.getDefaultSensor(Sensor.TYPE_AMBIENT_TEMPERATURE);
            mSensorManager.registerListener(this, mTemperature, SensorManager.SENSOR_DELAY_NORMAL);

            setContentView(R.layout.camera);

            videoFile1 = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM), "test1.3gp");
            videoFile2 = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM), "test2.3gp");
            videoFile3 = new File(Environment.getExternalStoragePublicDirectory(Environment.DIRECTORY_DCIM), "test3.3gp");

            // "touch" the files
            try {
                videoFile1.createNewFile();
                videoFile2.createNewFile();
                videoFile3.createNewFile();
            } catch (IOException e) {
            }

            mStart = (Button) findViewById(R.id.btnStartRecord);
            mStop = (Button) findViewById(R.id.btnStopRecord);
            surfaceView = (SurfaceView) findViewById(R.id.surfaceView);
            mTextView = (TextView) findViewById(R.id.textView);

            SurfaceHolder holder = surfaceView.getHolder();
            holder.addCallback(new SurfaceHolder.Callback() {
                @Override
                public void surfaceCreated(SurfaceHolder holder) {
                    try {
                        camera.setPreviewDisplay(holder);
                        camera.startPreview();
                    } catch (Exception e) {
                        e.printStackTrace();
                    }
                }

                @Override
                public void surfaceChanged(SurfaceHolder holder, int format, int width, int height) {
                }

                @Override
                public void surfaceDestroyed(SurfaceHolder holder) {
                }
            });

            mStart.setOnClickListener(new View.OnClickListener() {
                public void onClick(View v) {
                    System.out.println(" ");
                    // Start the record/send loop and open the server sockets
                    WriteVideo WV = new WriteVideo();
                    WV.start();
                    mStart.setClickable(false);
                    mStop.setClickable(true);
                    Http_server.File_is_sent = true;
                    new ServerCreation().execute();
                }
            });

            mStop.setOnClickListener(new View.OnClickListener() {
                public void onClick(View v) {
                    stopCamera = false; // ends the loops in WriteVideo and HTTP_Server_Calling2
                    mTextView.setText(" ");
                    releaseMediaRecorder();
                    releaseCamera();
                    mStart.setClickable(true);
                    mStop.setClickable(false);
                    System.out.println(" ");
                    Http_server.File_is_sent = true;
                }
            });
        }

        @Override
        public void onAccuracyChanged(Sensor sensor, int accuracy) {
        }

        @Override
        public void onSensorChanged(SensorEvent event) {
            switch (event.sensor.getType()) {
                case Sensor.TYPE_ACCELEROMETER:
                    ax = (int) (event.values[0] * 9);
                    az = (int) (event.values[2] * 9);
                    break;
                case Sensor.TYPE_LIGHT:
                    light = event.values[0];
                    break;
                case Sensor.TYPE_ORIENTATION:
                    x = (int) event.values[0];
                    y = (int) event.values[1] + 90;
                    z = (int) event.values[2];
                    break;
                case Sensor.TYPE_LINEAR_ACCELERATION:
                    hum = event.values[2];
                    int k = (int) (hum * 100);
                    hum = -(double) k;
                    break;
                case Sensor.TYPE_PRESSURE:
                    // hPa -> mmHg, truncated to two decimal places
                    press = event.values[0] * 760 / 10.1325;
                    int i = (int) press;
                    press = (double) i / 100;
                    break;
                case Sensor.TYPE_AMBIENT_TEMPERATURE:
                    tempr = event.values[0];
                    System.out.println(tempr);
                    break;
            }
            // One text line with all readings; Http_server_Sensors sends it as is
            Sensors = " tangaz_1 " + az + " kren_1 " + ax + " tangaz_2 " + y + " kren_2 " + z
                    + " forvard_accel " + hum + " light " + light + " ";
        }

        @Override
        protected void onResume() {
            super.onResume();
            releaseCamera();
            releaseMediaRecorder();
            int t = getNumberOfCameras();
            mTextView.setText("" + t);
            if (camera == null) {
                camera = Camera.open();
            }
        }

        @Override
        protected void onPause() {
            super.onPause();
        }

        @Override
        protected void onStop() {
            super.onStop();
            releaseCamera();
            releaseMediaRecorder();
        }

        private boolean prepareVideoRecorder() {
            if (mediaRecorder == null) {
                mediaRecorder = new MediaRecorder();
            }
            camera.unlock();
            mediaRecorder.setCamera(camera);
            mediaRecorder.setVideoSource(MediaRecorder.VideoSource.CAMERA);
            // Choose the output file for the current slice
            switch (count) {
                case 1:
                    mediaRecorder.setOutputFile(videoFile1.getAbsolutePath());
                    break;
                case 2:
                    mediaRecorder.setOutputFile(videoFile2.getAbsolutePath());
                    break;
                case 3:
                    mediaRecorder.setOutputFile(videoFile3.getAbsolutePath());
                    break;
            }
            mediaRecorder.setPreviewDisplay(surfaceView.getHolder().getSurface());
            mediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.THREE_GPP);
            //mediaRecorder.setOutputFormat(MediaRecorder.OutputFormat.MPEG_4);
            mediaRecorder.setVideoSize(640, 480);
            mediaRecorder.setOrientationHint(90);
            mediaRecorder.setVideoFrameRate(30);
            mediaRecorder.setVideoEncoder(MediaRecorder.VideoEncoder.H263); // constant value 1, as in the original call
            try {
                mediaRecorder.prepare();
            } catch (Exception e) {
                e.printStackTrace();
                releaseMediaRecorder();
                return false;
            }
            return true;
        }

        private void releaseMediaRecorder() {
            if (mediaRecorder != null) {
                mediaRecorder.release();
                mediaRecorder = null;
            }
        }

        public void releaseCamera() {
            if (camera != null) {
                camera.release();
                camera = null;
            }
        }

        private class WriteVideo extends Thread {
            public void run() {
                stopCamera = true;
                do {
                    if (camera == null) {
                        camera = Camera.open(Camera.CameraInfo.CAMERA_FACING_BACK);
                    }
                    System.out.println("");
                    if (prepareVideoRecorder()) {
                        mediaRecorder.start();
                    } else {
                        releaseMediaRecorder();
                    }
                    try {
                        Thread.sleep(2000); // length of one video slice
                    } catch (Exception e) {
                    }
                    // Switch to the other file (two files in rotation)
                    count++;
                    if (count == 3) {
                        count = 1;
                    }
                    if (mediaRecorder != null) {
                        releaseMediaRecorder();
                    }
                    System.out.println("");
                    // Pick the file that has just been recorded
                    File f = null;
                    switch (count) {
                        case 1:
                            f = videoFile2;
                            break;
                        case 2:
                            f = videoFile1;
                            break;
                        case 3:
                            f = videoFile2;
                            break;
                    }
                    // Load the finished slice into memory for Http_server
                    try {
                        BufferedInputStream bis = new BufferedInputStream(new FileInputStream(f));
                        data = new byte[bis.available()];
                        bis.read(data);
                        bis.close();
                    } catch (Exception e) {
                        System.out.println(e);
                    }
                    // Accept the next client only after the previous slice has gone out
                    if (Http_server.File_is_sent) {
                        System.out.println(" ");
                        new HTTP_Server_Calling().execute();
                        Http_server.File_is_sent = false;
                    }
                } while (stopCamera);
                if (mediaRecorder != null) {
                    System.out.println("");
                }
            }
        }
    }

    class ServerCreation extends AsyncTask<Void, Void, Void> {

        @Override
        protected void onPreExecute() {
            super.onPreExecute();
        }

        @Override
        protected Void doInBackground(Void... params) {
            try {
                CameraActivity.ss = new ServerSocket(40001);  // video port
                System.out.println(" ");
                new Http_server(CameraActivity.ss.accept());
                CameraActivity.ss2 = new ServerSocket(40002); // sensor port
            } catch (Exception e) {
                System.out.println(e);
                System.out.println(" ");
            }
            new HTTP_Server_Calling2().start();
            return null;
        }

        @Override
        protected void onPostExecute(Void result) {
        }
    }

    class HTTP_Server_Calling extends AsyncTask<Void, Void, Void> {

        @Override
        protected void onPreExecute() {
            super.onPreExecute();
        }

        @Override
        protected Void doInBackground(Void... params) {
            try {
                new Http_server(CameraActivity.ss.accept());
            } catch (Exception e) {
                System.out.println(e);
            }
            return null;
        }

        @Override
        protected void onPostExecute(Void result) {
        }
    }

    // Serves the sensor line to each new client on the second socket
    class HTTP_Server_Calling2 extends Thread {
        public void run() {
            while (CameraActivity.stopCamera) {
                try {
                    Thread.sleep(500);
                    new Http_server_Sensors(CameraActivity.ss2.accept());
                } catch (Exception e) {
                    System.out.println(e);
                }
            }
        }
    }

    // Sends the current video slice to one client and closes the connection
    class Http_server extends Thread {

        public Socket socket;
        public static volatile boolean File_is_sent = true;

        Http_server(Socket s) {
            System.out.println(" ");
            socket = s;
            setPriority(MAX_PRIORITY);
            start();
        }

        public void run() {
            try {
                System.out.println(" ");
                BufferedOutputStream bos = new BufferedOutputStream((socket.getOutputStream()));
                bos.write(CameraActivity.data);
                bos.flush();
                bos.close();
                socket.close();
            } catch (Exception e) {
                System.out.println(e);
            }
            File_is_sent = true;
        }
    }

    // Http_server_Sensors.java (a public class needs its own file)
    public class Http_server_Sensors extends Thread {

        public Socket socket;
        PrintWriter pw;

        Http_server_Sensors(Socket s) {
            socket = s;
            setPriority(MAX_PRIORITY);
            start();
        }

        public void run() {
            try {
                pw = new PrintWriter(new OutputStreamWriter(socket.getOutputStream()), true);
                System.out.println(CameraActivity.Sensors);
                pw.println(CameraActivity.Sensors);
                pw.flush();
                pw.close();
                socket.close();
            } catch (Exception e) {
                System.out.println(e);
            }
        }
    }

Once the program has started and the camera is on, it works as follows:

- we write video for two seconds to the first file,
- we write video for two seconds to the second file and meanwhile send the first file over TCP/IP via the local Wi-Fi to the computer,
- we write the first file again and meanwhile send the second,
- and so on.

The cycle repeats until the “stop” button is pressed or the smartphone battery dies. In principle, the button presses could be replaced by commands from the computer, also over TCP; that is not difficult.

At first the video buffer, just in case, consisted of three 3GP files (we write the first and send the third, write the second and send the first, write the third and send the second), but then it turned out that two files are quite enough (recording one and sending the other do not interfere with each other).
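The rotation described above can be sketched in plain Java, independent of Android. This is only an illustration: the class `ChunkRotation` and its method names are hypothetical and do not appear in the project. It shows which file is being recorded and which one is ready to send on each cycle, for two or three files.

```java
/** Sketch of the file rotation: which slot records and which slot is sent on each cycle. */
final class ChunkRotation {
    private final int slots;    // number of chunk files in the ring (2 in the final version)
    private int recording = 0;  // index of the slot being recorded right now
    private long completed = 0; // how many chunks have been fully recorded so far

    ChunkRotation(int slots) {
        if (slots < 2) {
            throw new IllegalArgumentException("need at least two slots");
        }
        this.slots = slots;
    }

    /** Slot that the recorder writes during the current cycle. */
    int recordingSlot() {
        return recording;
    }

    /** Slot that was finished on the previous cycle and may be sent now. */
    int sendingSlot() {
        return (recording + slots - 1) % slots;
    }

    /** False during the very first cycle, when nothing has been recorded yet. */
    boolean hasChunkToSend() {
        return completed > 0;
    }

    /** Call once the chunk duration (two seconds in the article) has elapsed. */
    void advance() {
        recording = (recording + 1) % slots;
        completed++;
    }

    public static void main(String[] args) {
        ChunkRotation rotation = new ChunkRotation(2);
        for (int cycle = 0; cycle < 4; cycle++) {
            String send = rotation.hasChunkToSend()
                    ? "send file " + (rotation.sendingSlot() + 1)
                    : "nothing to send yet";
            System.out.println("cycle " + cycle + ": record file "
                    + (rotation.recordingSlot() + 1) + ", " + send);
            rotation.advance();
        }
    }
}
```

With three slots the same formula reproduces the earlier scheme (record the first and send the third, and so on), which is why dropping to two files needs no other change.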

At a camera resolution of 640 by 480 the files come out at roughly 200-300 kB, which is fine for my router. I didn't bother with sound, but everything there seems easy: you set the required audio encoder, bitrate, number of channels and the like.
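To put those file sizes in perspective, here is a small worked calculation of the average bitrate. It assumes decimal kilobytes (1 kB = 1000 bytes) and the two-second chunk length used in the article; the class name `ChunkBitrate` is made up for the example.

```java
/** Worked arithmetic: average bitrate of one video chunk. */
final class ChunkBitrate {

    /** Average bitrate in kilobits per second for a chunk of the given size and duration. */
    static double kilobitsPerSecond(double chunkKilobytes, double chunkSeconds) {
        return chunkKilobytes * 8.0 / chunkSeconds;
    }

    public static void main(String[] args) {
        // 200 kB and 300 kB are the file sizes quoted in the article
        System.out.println("200 kB / 2 s = " + kilobitsPerSecond(200, 2) + " kbit/s");
        System.out.println("300 kB / 2 s = " + kilobitsPerSecond(300, 2) + " kbit/s");
    }
}
```

That is roughly 0.8 to 1.2 Mbit/s on average, a small load for a home Wi-Fi network.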

A little later, once the video transmission was debugged, I added code for transmitting data from the smartphone's sensors. Everything is sent trivially as a single line of text, but I could not send it through the same socket as the video. Apparently the PrintWriter used for the string and the BufferedOutputStream used for the binary data are different streams that share one output buffer, where they successfully corrupt each other. As a result the video starts to glitch and fall apart. In addition, a video file is sent only every two seconds, and for the sensors this interval is too long. Therefore it was decided to put them on different sockets so that they do not interfere with each other. This is the reason for the new class Http_server_Sensors.
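On the receiving side, the sensor line can be split back into named values. The sketch below assumes the line keeps the layout built in `CameraActivity` (alternating name and number separated by spaces, for example `tangaz_1`, `kren_1`, `forvard_accel`, `light`); the class `SensorLineParser` itself is hypothetical and is not part of the project.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Sketch: turns " name value name value ... " into a map of readings. */
final class SensorLineParser {

    static Map<String, Double> parse(String line) {
        Map<String, Double> values = new LinkedHashMap<>();
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return values;
        }
        String[] tokens = trimmed.split("\\s+");
        // Tokens come in (name, number) pairs; a trailing unpaired token is ignored
        for (int i = 0; i + 1 < tokens.length; i += 2) {
            try {
                values.put(tokens[i], Double.parseDouble(tokens[i + 1]));
            } catch (NumberFormatException e) {
                // Skip a pair whose value is not a number
            }
        }
        return values;
    }

    public static void main(String[] args) {
        String line = " tangaz_1 12 kren_1 -3 tangaz_2 95 kren_2 1 forvard_accel -4.0 light 120.5 ";
        System.out.println(parse(line));
    }
}
```

Because the names travel with the values, the PC side does not depend on the order of the fields, only on the pairing.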

So, the sending side is organized; now back to the host.

As we already saw from the very first example, playing video files in a Java application using the VLC player is not a problem. The main thing is to get these files.

The next demo program is responsible for this.

Video player

    import java.awt.BorderLayout;
    import java.awt.event.ActionEvent;
    import java.awt.event.ActionListener;
    import java.awt.event.WindowAdapter;
    import java.awt.event.WindowEvent;
    import java.io.*;
    import java.net.Socket;

    import javax.swing.JButton;
    import javax.swing.JFrame;
    import javax.swing.JOptionPane;
    import javax.swing.JPanel;
    import javax.swing.SwingUtilities;

    import uk.co.caprica.vlcj.component.EmbeddedMediaPlayerComponent;
    import uk.co.caprica.vlcj.discovery.NativeDiscovery;
    import uk.co.caprica.vlcj.player.MediaPlayer;
    import uk.co.caprica.vlcj.player.MediaPlayerEventAdapter;

    public class VideoPlayer {

        public final JFrame frame;
        public static EmbeddedMediaPlayerComponent mediaPlayerComponent;
        public final JButton pauseButton;
        public final JButton rewindButton;
        public final JButton skipButton;
        public static String mr1, mr2;
        // The flags below are polled from other threads, hence volatile
        public static volatile boolean playing_finished = false;
        public static volatile boolean File_1_play_starting = false;
        public static volatile boolean File_1_play_finished = false;
        public static volatile boolean File_2_play_starting = false;
        public static volatile boolean File_2_play_finished = false;

        // 192.168.1.128
        public static void main(final String[] args) {
            new NativeDiscovery().discover();
            mr1 = "D:\\test1.3gp";
            mr2 = "D:\\test2.3gp";
            SwingUtilities.invokeLater(new Runnable() {
                @Override
                public void run() {
                    VideoPlayer vp = new VideoPlayer();
                    vp.mediaPlayerComponent.getMediaPlayer().setPlaySubItems(true);
                    VideoPlayer.playing_finished = false;
                    new Control().start(); // start the receive/play loop
                    try {
                        Thread.sleep(100);
                    } catch (Exception e) {
                    }
                }
            });
        }

        public VideoPlayer() {
            frame = new JFrame("My First Media Player");
            frame.setBounds(100, 100, 600, 400);
            frame.setDefaultCloseOperation(JFrame.DO_NOTHING_ON_CLOSE);
            frame.addWindowListener(new WindowAdapter() {
                @Override
                public void windowClosing(WindowEvent e) {
                    System.out.println(e);
                    mediaPlayerComponent.release();
                    System.exit(0);
                }
            });

            JPanel contentPane = new JPanel();
            contentPane.setLayout(new BorderLayout());
            mediaPlayerComponent = new EmbeddedMediaPlayerComponent();
            contentPane.add(mediaPlayerComponent, BorderLayout.CENTER);
            frame.setContentPane(contentPane);

            JPanel controlsPane = new JPanel();
            pauseButton = new JButton("Pause");
            controlsPane.add(pauseButton);
            rewindButton = new JButton("Rewind");
            controlsPane.add(rewindButton);
            skipButton = new JButton("Skip");
            controlsPane.add(skipButton);
            contentPane.add(controlsPane, BorderLayout.SOUTH);

            pauseButton.addActionListener(new ActionListener() {
                @Override
                public void actionPerformed(ActionEvent e) {
                    mediaPlayerComponent.getMediaPlayer().pause();
                }
            });
            rewindButton.addActionListener(new ActionListener() {
                @Override
                public void actionPerformed(ActionEvent e) {
                    mediaPlayerComponent.getMediaPlayer().skip(-10000);
                }
            });
            skipButton.addActionListener(new ActionListener() {
                @Override
                public void actionPerformed(ActionEvent e) {
                    mediaPlayerComponent.getMediaPlayer().skip(10000);
                }
            });

            mediaPlayerComponent.getMediaPlayer().addMediaPlayerEventListener(new MediaPlayerEventAdapter() {
                @Override
                public void playing(MediaPlayer mediaPlayer) {
                    SwingUtilities.invokeLater(new Runnable() {
                        @Override
                        public void run() {
                            frame.setTitle(String.format(
                                    "My First Media Player - %s",
                                    mediaPlayerComponent.getMediaPlayer()
                            ));
                        }
                    });
                }

                @Override
                public void finished(MediaPlayer mediaPlayer) {
                    SwingUtilities.invokeLater(new Runnable() {
                        @Override
                        public void run() {
                            // Signals PlayFile that the current chunk has ended
                            playing_finished = true;
                            System.out.println("finished " + playing_finished);
                        }
                    });
                }

                @Override
                public void error(MediaPlayer mediaPlayer) {
                    SwingUtilities.invokeLater(new Runnable() {
                        @Override
                        public void run() {
                            JOptionPane.showMessageDialog(
                                    frame,
                                    "Failed to play media",
                                    "Error",
                                    JOptionPane.ERROR_MESSAGE
                            );
                            closeWindow();
                        }
                    });
                }
            });
            frame.setVisible(true);
        }

        public void start(String mrl) {
            mediaPlayerComponent.getMediaPlayer().setPlaySubItems(true);
            mediaPlayerComponent.getMediaPlayer().prepareMedia(mrl);
            mediaPlayerComponent.getMediaPlayer().playMedia(mrl);
        }

        public void closeWindow() {
            frame.dispatchEvent(new WindowEvent(frame, WindowEvent.WINDOW_CLOSING));
        }
    }

    // Plays one of the two chunk files and blocks until playback has finished
    class PlayFile {

        public static void run(int number) {
            if (number == 1) {
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().prepareMedia(VideoPlayer.mr1);
                System.out.println(" 1");
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().start();
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().playMedia(VideoPlayer.mr1);
                VideoPlayer.File_1_play_starting = true;
                VideoPlayer.File_1_play_finished = false;
                while (!VideoPlayer.playing_finished) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().stop();
                VideoPlayer.playing_finished = false;
                VideoPlayer.File_1_play_starting = false;
                VideoPlayer.File_1_play_finished = true;
            }
            try {
                Thread.sleep(10);
            } catch (Exception e) {
            }
            if (number == 2) {
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().prepareMedia(VideoPlayer.mr2);
                System.out.println(" 2");
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().start();
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().playMedia(VideoPlayer.mr2);
                VideoPlayer.File_2_play_starting = true;
                VideoPlayer.File_2_play_finished = false;
                while (!VideoPlayer.playing_finished) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
                VideoPlayer.mediaPlayerComponent.getMediaPlayer().stop();
                VideoPlayer.playing_finished = false;
                VideoPlayer.File_2_play_starting = false;
                VideoPlayer.File_2_play_finished = true;
            }
            try {
                Thread.sleep(10);
            } catch (Exception e) {
            }
        }
    }

    // Control.java (a public class needs its own file)
    public class Control extends Thread {

        public static boolean P_for_play_1 = false;
        public static boolean P_for_play_2 = false;

        public void run() {
            // Fetch the first file before anything is played
            new Http_client(1).start();
            while (!Http_client.File1_reception_complete) {
                try {
                    Thread.sleep(1);
                } catch (Exception e) {
                }
            }
            while (true) {
                // Play file 1 while file 2 is being received
                new Http_client(2).start();
                PlayFile.run(1);
                while (!VideoPlayer.File_1_play_finished) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
                while (!Http_client.File2_reception_complete) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
                // Play file 2 while file 1 is being received
                new Http_client(1).start();
                PlayFile.run(2);
                while (!VideoPlayer.File_2_play_finished) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
                while (!Http_client.File1_reception_complete) {
                    try {
                        Thread.sleep(1);
                    } catch (Exception e) {
                    }
                }
            }
        }
    }

    // Http_client.java (a public class needs its own file)
    public class Http_client extends Thread {

        // Polled from the Control thread, hence volatile
        public static volatile boolean File1_starts_writing = false;
        public static volatile boolean File1_reception_complete = false;
        public static volatile boolean File2_starts_writing = false;
        public static volatile boolean File2_reception_complete = false;
        public int Number;
        public BufferedOutputStream bos;

        Http_client(int Number) {
            this.Number = Number;
        }

        public void run() {
            try {
                Socket socket = new Socket("192.168.1.128", 40001);
                BufferedInputStream bis = new BufferedInputStream(socket.getInputStream());
                if (Number == 1) {
                    File1_starts_writing = true;
                    File1_reception_complete = false;
                    System.out.println(" 1, 2");
                    bos = new BufferedOutputStream(new FileOutputStream(VideoPlayer.mr1));
                    byte[] buffer = new byte[32768];
                    while (true) {
                        int readBytesCount = bis.read(buffer);
                        if (readBytesCount == -1) {
                            // The server closed the connection: the file is complete
                            break;
                        }
                        if (readBytesCount > 0) {
                            bos.write(buffer, 0, readBytesCount);
                        }
                    }
                    System.out.println(" ");
                    System.out.println(" ");
                    bos.flush();
                    bos.close();
                    File1_starts_writing = false;
                    File1_reception_complete = true;
                }
                if (Number == 2) {
                    File2_starts_writing = true;
                    File2_reception_complete = false;
                    System.out.println(" 2, 1");
                    bos = new BufferedOutputStream(new FileOutputStream(VideoPlayer.mr2));
                    byte[] buffer = new byte[32768];
                    while (true) {
                        int readBytesCount = bis.read(buffer);
                        if (readBytesCount == -1) {
                            // The server closed the connection: the file is complete
                            break;
                        }
                        if (readBytesCount > 0) {
                            bos.write(buffer, 0, readBytesCount);
                        }
                    }
                    System.out.println(" ");
                    System.out.println(" ");
                    bos.flush();
                    bos.close();
                    File2_starts_writing = false;
                    File2_reception_complete = true;
                }
                socket.close();
                bis.close();
            } catch (Exception e) {
                System.out.println(e);
                System.out.println("gfgfg");
            }
            try {
                Thread.sleep(10);
            } catch (Exception e) {
            }
        }
    }

Its essence is simple. A TCP client is started and waits for the server on the smartphone to be ready. As soon as the first file has been received, it starts playing, and in parallel file number two is awaited. Next we wait for either the end of reception of the second file or the end of playback of the first. Best of all, of course,

I had a vague hope that the pause when switching between the files being played would be shorter than the reaction time of the human eye, but it did not come true. Unhurried, what can I say, this VLC.
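For reference, the transfer half of this scheme, where the sender marks the end of a chunk simply by closing the connection, can be tried in isolation on one machine. The sketch below is a loopback experiment under stated assumptions: the class `ChunkTransferDemo` and its methods are invented for the example, it uses an ephemeral port on the loopback interface instead of the phone's address and port 40001, and it keeps the chunk in memory rather than writing a 3GP file.

```java
import java.io.ByteArrayOutputStream;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetAddress;
import java.net.ServerSocket;
import java.net.Socket;

/** Loopback sketch: one chunk per TCP connection, end of stream marks end of chunk. */
final class ChunkTransferDemo {

    /** Client side: reads until the peer closes the connection. */
    static byte[] receiveChunk(String host, int port) throws IOException {
        try (Socket socket = new Socket(host, port);
             InputStream in = socket.getInputStream()) {
            ByteArrayOutputStream chunk = new ByteArrayOutputStream();
            byte[] buffer = new byte[32768];
            int n;
            while ((n = in.read(buffer)) != -1) {
                chunk.write(buffer, 0, n);
            }
            return chunk.toByteArray();
        }
    }

    /** Server side: accepts one client, writes the whole chunk, then closes. */
    static Thread serveOnce(ServerSocket server, byte[] chunk) {
        Thread sender = new Thread(() -> {
            try (Socket client = server.accept();
                 OutputStream out = client.getOutputStream()) {
                out.write(chunk);
                out.flush();
            } catch (IOException e) {
                e.printStackTrace();
            }
        });
        sender.start();
        return sender;
    }

    /** Sends a chunk over the loopback interface and returns what arrived. */
    static byte[] roundTrip(byte[] chunk) {
        try (ServerSocket server = new ServerSocket(0, 1, InetAddress.getLoopbackAddress())) {
            Thread sender = serveOnce(server, chunk);
            byte[] received = receiveChunk(
                    server.getInetAddress().getHostAddress(), server.getLocalPort());
            sender.join();
            return received;
        } catch (IOException | InterruptedException e) {
            throw new IllegalStateException(e);
        }
    }

    public static void main(String[] args) {
        byte[] chunk = new byte[250_000]; // about the size of one two-second file
        System.out.println("received " + roundTrip(chunk).length + " bytes");
    }
}
```

The design keeps the protocol trivial (no length header), at the price of a new TCP connection for every chunk.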

As a result we get rather nasty, intermittent video (this is apparently how the first trilobite saw the world), in which the focus is constantly being readjusted. And bear in mind that the video is also delayed by two seconds. In short, I do not recommend this for production. But when there are no fish, even a trilobite will do, as they say...

Summarizing the above, we can say with certainty that:

Transferring video in chunks is essentially unworkable and can be useful only when each chunk is long enough and you do not need a split-second reaction to what is happening.

Transmitting video over TCP/IP is also a bad idea, whatever some individuals on Habr may say about the data transfer rate of this protocol (supposedly even faster than UDP). Of course, modern wireless local networks are good enough to sustain the continuous handshakes between TCP servers and clients, and TCP itself seems to have been improved for long transfers, but all the same, stutters appear now and then between the played video pieces.

But at least for the future the following thoughts appeared:

- send the picture frame by frame (not video, but photos) via UDP, and the control information via TCP,

- send the photo frames entirely via UDP, with the synchronization signals in the same channel.
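One possible shape for the frame-over-UDP idea is sketched below. It is purely hypothetical and not something the project implements: the class `FramePacketizer`, the 8-byte header layout (frame id, chunk index, chunk count) and the payload size are all assumptions made for illustration. Each returned byte array would become the payload of one `java.net.DatagramPacket`; the receiver collects payloads and rebuilds the frame, dropping it if any piece is lost.

```java
import java.nio.ByteBuffer;
import java.util.ArrayList;
import java.util.List;

/** Sketch: split one encoded frame into datagram payloads and put it back together. */
final class FramePacketizer {

    static final int HEADER_BYTES = 8; // frameId (int) + chunk index (short) + chunk count (short)

    static List<byte[]> split(int frameId, byte[] frame, int maxPayload) {
        if (maxPayload <= 0) {
            throw new IllegalArgumentException("maxPayload must be positive");
        }
        int count = Math.max(1, (frame.length + maxPayload - 1) / maxPayload);
        if (count > Short.MAX_VALUE) {
            throw new IllegalArgumentException("frame too large for this header");
        }
        List<byte[]> packets = new ArrayList<>(count);
        for (int i = 0; i < count; i++) {
            int from = i * maxPayload;
            int len = Math.min(maxPayload, frame.length - from);
            ByteBuffer buf = ByteBuffer.allocate(HEADER_BYTES + len);
            buf.putInt(frameId).putShort((short) i).putShort((short) count);
            buf.put(frame, from, len);
            packets.add(buf.array());
        }
        return packets;
    }

    /** Rebuilds the frame from packets in any order; returns null if a piece is missing. */
    static byte[] join(List<byte[]> packets) {
        if (packets.isEmpty()) {
            return null;
        }
        ByteBuffer first = ByteBuffer.wrap(packets.get(0));
        int frameId = first.getInt();
        first.getShort();              // index of the first packet, not needed here
        int count = first.getShort();
        byte[][] parts = new byte[count][];
        int total = 0;
        for (byte[] packet : packets) {
            ByteBuffer buf = ByteBuffer.wrap(packet);
            if (buf.getInt() != frameId) {
                continue;              // belongs to another frame
            }
            int index = buf.getShort();
            buf.getShort();            // chunk count, already known
            if (index < 0 || index >= count || parts[index] != null) {
                continue;              // out of range or duplicate
            }
            parts[index] = new byte[buf.remaining()];
            buf.get(parts[index]);
            total += parts[index].length;
        }
        ByteBuffer out = ByteBuffer.allocate(total);
        for (byte[] part : parts) {
            if (part == null) {
                return null;           // a datagram was lost: drop the whole frame
            }
            out.put(part);
        }
        return out.array();
    }
}
```

Dropping an incomplete frame instead of waiting for a retransmission is the main behavioural difference from the TCP variant: a lost packet costs one picture, not a stall.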

Of course, there are more questions than answers here. Will Java, with its level of abstraction over networking and images, be fast enough on the receiving side? Is it possible to take 30 decent shots per second at the level of Android accessible to me? Will they have to be compressed before sending to reduce the bitrate? And will Java then be fast enough for packing and unpacking? And if, all of a sudden, it works out, will it be possible to take the next step and bolt the OpenCV computer vision system onto the Java side? Streaming video from floor level is always interesting to watch, of course, but we should not forget about the highest goal: a robot cart with the intelligence of an ant!
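The compression question can be given a rough scale with a back-of-the-envelope calculation. It assumes uncompressed 640 by 480 frames at 12 bits per pixel (a 4:2:0 YUV layout such as NV21) and 30 frames per second; these figures are illustrative assumptions, not measurements from the project, and the class name `RawVideoBandwidth` is made up.

```java
/** Worked arithmetic: bandwidth needed for uncompressed frames. */
final class RawVideoBandwidth {

    /** Bytes per second for raw frames of the given size, pixel depth and frame rate. */
    static long bytesPerSecond(int width, int height, int bitsPerPixel, int fps) {
        long bytesPerFrame = (long) width * height * bitsPerPixel / 8;
        return bytesPerFrame * fps;
    }

    public static void main(String[] args) {
        long raw = bytesPerSecond(640, 480, 12, 30);
        System.out.println("raw stream: " + raw + " bytes/s");
        System.out.println("which is about " + raw * 8 / 1_000_000 + " Mbit/s");
    }
}
```

Under these assumptions the raw stream is about 13.8 MB per second, against the 100 to 150 kB per second of the 3GP chunks, so some form of per-frame compression looks unavoidable.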

But for now, having what we have, let us return to the current cart. The old program from the previous article for the AVR microcontroller on the Arduino platform has hardly changed; only a branch selection was added: autonomous driving or driving under the operator's control. The data that the cart (the spinal cord) transmits over Wi-Fi is the same: the distance traveled. A little later I also added transmission of the temperature of a critical element, the motor driver. All this is first sent and received via UART, and then, on reaching the network, via UDP. I do not give the code here; the complete breakdown is in the article before last.

With two motors the driver copes more or less, but with four it overheats after a while until it stops working. At first I tried the simplest analog temperature sensors based on Zener diodes, such as the LM335, but nothing came of it. There was no voltage reference on my board, and there is no point in trying to catch millivolts on a sagging battery. By the way, about the battery: when I got tired of constantly removing and inserting 14500 lithium cells for recharging, I simply took a spare screwdriver battery, and the cart began to drive continuously for an hour and a half, and also acquired a menacing look and weight (yes, the “eyes” are mounted on this battery). Therefore, to measure the temperature I adapted a faulty gyro/accelerometer module based on the L3G4200D. Fortunately, it also measured temperature and transmitted it over the I2C bus.

The smartphone, sitting behind the battery and above the sonars, transmits the video stream, the accelerometer readings (from which you can estimate the roll and pitch of the cart when it is standing still), roll and pitch in degrees already computed by the smartphone itself (a very convenient option), linear acceleration in the direction of travel, and illumination. In general, of course, you can transmit everything your smartphone can measure, from air pressure to the direction of north.

As a result, the application on JAVA acquired the following form:

The funny thing is that it really looks like a control panel of the Soviet lunar rover, only I have a TV screen on the side, and they have it in the center.

When it is switched on, we first connect to the cart and to the smartphone on it, select manual control or full autonomy, and off we go! Until the driver temperature window turns yellow and then red. Graphics!

This is how it looks live.

I don't cite the program here because, as usual, building the windows takes up 95% of the code. It can be found here.

In the future, the brain (the smartphone) should learn to transmit normal (not like now) video to the computer, where it should be properly recognized (for now the goal is to build a map of the room at floor level and determine the cart's position in it). And then, under full communism, I would like to port all this back to the smartphone so that it does not need a computer.

If at least something works out, I will be sure to post it. Thanks for your attention.

Source: https://habr.com/ru/post/448516/
